Installing the latest Docker-based Kubernetes v1.25.4 on Ubuntu 20.04

Base environment

| Hostname | Spec | Role | OS version | IP |
|---|---|---|---|---|
| master | 4 cores, 4 GB RAM | master | Ubuntu 20.04 | 10.0.0.61 |
| node | 4 cores, 4 GB RAM | worker | Ubuntu 20.04 | 10.0.0.62 |

System initialization

Note: run these steps on every host in the cluster.

Configure passwordless SSH trust

$ ssh-keygen

$ ssh-copy-id 10.0.0.61

$ ssh-copy-id 10.0.0.62

Configure local hostname resolution

$ cat << EOF >>/etc/hosts
10.0.0.61 master
10.0.0.62 node
EOF

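Set kernel parameters

$ cat >> /etc/sysctl.conf <<EOF
vm.swappiness = 0
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF

# Load the br_netfilter module (the net.bridge.* keys only exist once it is loaded)

$ modprobe br_netfilter

# Apply the settings

$ sysctl -p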
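
One gap worth noting: `modprobe br_netfilter` only loads the module for the current boot, so the `net.bridge.*` settings may fail to apply after a reboot. A minimal sketch of persisting the module through systemd's `modules-load.d` mechanism follows. The file name `k8s.conf` and the helper `persist_module` are illustrative choices, not part of the original steps.

```shell
#!/bin/sh
# persist_module MODULE [DIR]: record MODULE in a modules-load.d file so that
# systemd-modules-load loads it at boot. DIR defaults to /etc/modules-load.d;
# the file name k8s.conf is an arbitrary choice for this sketch.
persist_module() {
    module=$1
    dir=${2:-/etc/modules-load.d}
    conf="$dir/k8s.conf"
    mkdir -p "$dir"
    # Append only if the module is not already listed (keeps the call idempotent).
    if ! grep -qx "$module" "$conf" 2>/dev/null; then
        echo "$module" >> "$conf"
    fi
}

# Example (as root): persist_module br_netfilter
```

Calling the helper twice leaves a single entry in the file, so it is safe to rerun on every host.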

Disable swap

$ swapoff -a

$ sed -i '/swap/s/^/#/' /etc/fstab && cat /etc/fstab && free -h

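The swap pitfall

On CentOS the steps above are enough, but on Ubuntu they may not be: after a reboot the swap partition can come back on. By default the kubelet refuses to start while swap is enabled, so the cluster will not come up until swap is off.

Workaround

Edit /etc/fstab (sudo vim /etc/fstab) and find a line like this:

/dev/disk/by-uuid/5fea4562-481e-4bdc-9373-0a55b3420cc0 none swap sw 0 0

Change it to:

/dev/disk/by-uuid/5fea4562-481e-4bdc-9373-0a55b3420cc0 none swap sw,noauto 0 0

The same change as a one-liner:

$ sed -ri '/swap/s/^(.*swap sw)( .*)$/\1,noauto\2/' /etc/fstab

Adding noauto after sw keeps the swap partition from being activated automatically at boot.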
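
Because the `sed` expression edits `/etc/fstab` in place, it can be worth previewing the result first. The sketch below wraps the same expression in a hypothetical helper, `add_noauto`, that reads an fstab-style file and prints the modified text without changing anything. Note that the pattern expects single spaces between fields, so entries separated by tabs would not match.

```shell
#!/bin/sh
# add_noauto FILE: print FILE with ",noauto" appended to the "sw" option of
# every swap entry. Same expression as above, minus -i, so FILE is untouched.
# Assumes GNU sed (for -r), as shipped with Ubuntu.
add_noauto() {
    sed -r '/swap/s/^(.*swap sw)( .*)$/\1,noauto\2/' "$1"
}

# Example: compare the preview with the current file before editing in place.
# add_noauto /etc/fstab | diff /etc/fstab -
```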

Comment out the following line by adding # at the start:

/swap.img none swap sw 0 0

Reboot and verify that swap stays off.
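
For the check after the reboot, the kernel lists active swap devices in `/proc/swaps`: a header line followed by one line per device. A small sketch, where the function name `swap_is_off` is only illustrative:

```shell
#!/bin/sh
# swap_is_off [FILE]: succeed when FILE (default /proc/swaps) lists no swap
# devices, i.e. it contains nothing beyond the header line.
swap_is_off() {
    file=${1:-/proc/swaps}
    # Count the lines after the header; each one is an active swap device.
    count=$(tail -n +2 "$file" | wc -l)
    [ "$count" -eq 0 ]
}

# Example:
# if swap_is_off; then echo "swap is off"; else echo "swap is still on"; fi
```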

Install Docker

Note: run these steps on every host in the cluster.

$ apt update

$ apt -y install docker.io

$ cat > /etc/docker/daemon.json <<EOF
{
  "registry-mirrors": [
    "https://docker.mirrors.ustc.edu.cn",
    "https://hub-mirror.c.163.com",
    "https://reg-mirror.qiniu.com",
    "https://registry.docker-cn.com"
  ],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF

$ systemctl restart docker.service

$ docker info

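Install the required Kubernetes packages

Note: run these steps on every host in the cluster.

apt-get update && apt-get install -y apt-transport-https

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list
deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
EOF

apt-get update

# List the available versions

apt-cache madison kubelet kubeadm kubectl

# Install

apt-get install -y kubelet kubeadm kubectl

# Hold the packages at the installed version

apt-mark hold kubelet kubeadm kubectl

# Release the hold (only when you intend to upgrade; skip this during installation)

apt-mark unhold kubelet kubeadm kubectl

Note: in testing, these commands had to be copied and pasted together; otherwise apt reported that the three Kubernetes packages could not be found.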
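
Since `apt-get install` without a version pulls whatever is newest in the repository, the result may not match the v1.25.4 used in this article. A sketch of pinning all three packages to one version follows. The version string `1.25.4-00` is an assumption about how this repository names the package and should be confirmed against the `apt-cache madison` output; `k8s_pkgs` is a hypothetical helper.

```shell
#!/bin/sh
# k8s_pkgs VERSION: print the three package specs pinned to VERSION,
# in the name=version form that apt-get accepts.
k8s_pkgs() {
    echo "kubelet=$1 kubeadm=$1 kubectl=$1"
}

# Example (as root); the version string is assumed, check apt-cache madison:
# apt-get install -y $(k8s_pkgs 1.25.4-00) && apt-mark hold kubelet kubeadm kubectl
```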

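Install cri-dockerd

Note: run these steps on every host in the cluster.

Kubernetes removed dockershim in v1.24, and Docker Engine does not implement the CRI on its own, so the two can no longer be integrated directly.

To bridge the gap, Mirantis and Docker jointly created the cri-dockerd project, a shim that exposes Docker Engine through the CRI so that Kubernetes can keep driving Docker.

Project page: https://github.com/Mirantis/cri-dockerd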

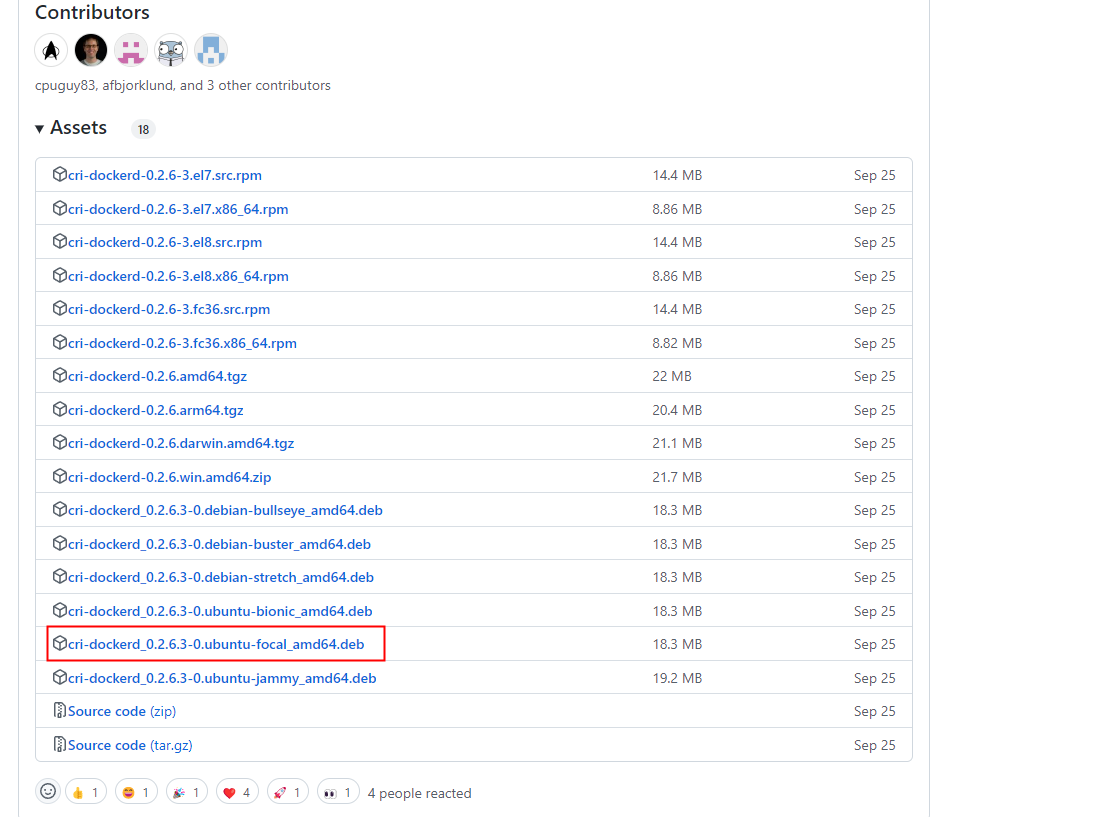

The cri-dockerd project publishes prebuilt packages.

For Ubuntu there are three builds: bionic, focal, and jammy.

Each codename corresponds to an Ubuntu release:

- 22.04: jammy

- 20.04: focal

- 18.04: bionic

- 16.04: xenial

- 14.04: trusty

This setup runs 20.04, so pick focal.

$ wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.2.6/cri-dockerd_0.2.6.3-0.ubuntu-focal_amd64.deb

# Install

$ dpkg -i ./cri-dockerd_0.2.6.3-0.ubuntu-focal_amd64.deb

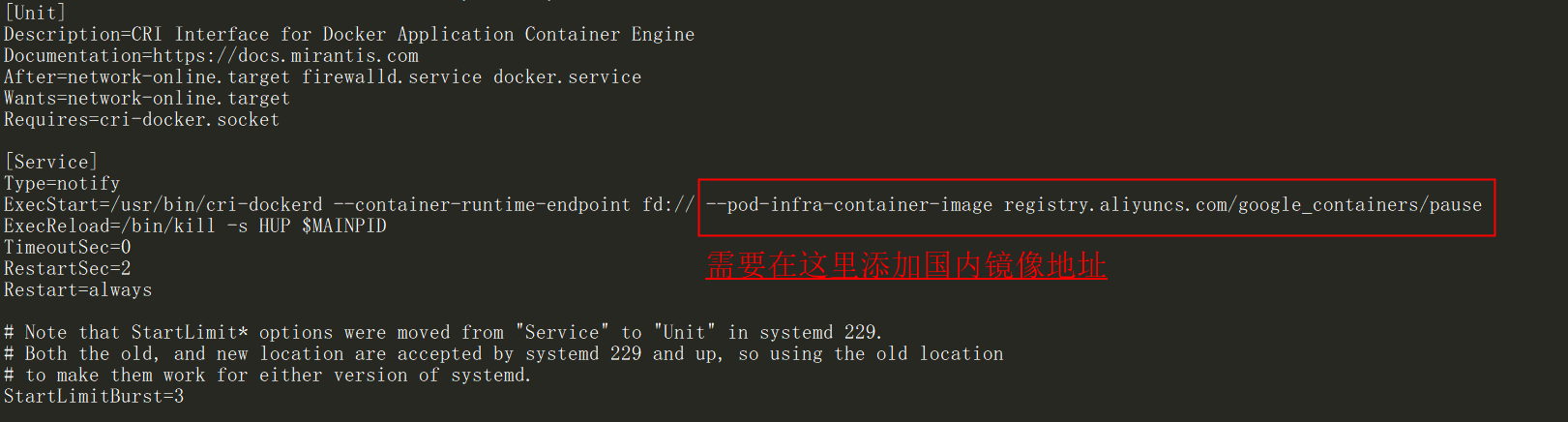
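
Rather than looking the codename up by hand, it can be read from `/etc/os-release`, which on Ubuntu defines `VERSION_CODENAME`. A minimal sketch (the helper name `ubuntu_codename` is made up for illustration):

```shell
#!/bin/sh
# ubuntu_codename [FILE]: print VERSION_CODENAME from an os-release file
# (default /etc/os-release), e.g. "focal" on Ubuntu 20.04.
ubuntu_codename() {
    # Source the file in a subshell so its variables do not leak into the caller.
    ( . "${1:-/etc/os-release}" && echo "$VERSION_CODENAME" )
}

# Example: build the package file name used in the download step.
# echo "cri-dockerd_0.2.6.3-0.ubuntu-$(ubuntu_codename)_amd64.deb"
```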

Configure cri-dockerd

From inside mainland China, cri-dockerd cannot pull the images it needs from k8s.gcr.io, so pods fail to start. Point cri-dockerd at a domestic mirror instead.

Edit the unit file to set the mirror for the pause image:

$ sed -ri 's@^(.*fd://).*$@\1 --pod-infra-container-image registry.aliyuncs.com/google_containers/pause@' /usr/lib/systemd/system/cri-docker.service

# Restart

$ systemctl daemon-reload && systemctl restart cri-docker
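
To confirm the edit took effect, the unit file can be inspected for the flag. A small sketch, with `pause_image` as a hypothetical helper name; it assumes the flag sits on the `ExecStart=` line, which is where the `sed` command above puts it.

```shell
#!/bin/sh
# pause_image [FILE]: print the value passed to --pod-infra-container-image in
# a cri-docker unit file, or fail (non-zero exit) if the flag is absent.
pause_image() {
    file=${1:-/usr/lib/systemd/system/cri-docker.service}
    # sed prints only the captured image name; grep turns "no output" into failure.
    sed -n 's/^ExecStart=.*--pod-infra-container-image[ =]\([^ ]*\).*$/\1/p' "$file" | grep .
}

# Example:
# pause_image || echo "flag missing: rerun the sed command above"
```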

Initialize on the master node

Run this on one master node only; worker nodes do not need it.

# Unlike v1.23 and earlier, the --cri-socket flag is required here to select the container runtime.

$ kubeadm init --control-plane-endpoint="master" \
--kubernetes-version=v1.25.0 \
--pod-network-cidr=10.244.0.0/16 \
--service-cidr=10.96.0.0/12 \
--token-ttl=0 \
--cri-socket unix:///run/cri-dockerd.sock \
--image-repository registry.aliyuncs.com/google_containers \
--upload-certs

Output like the following means initialization succeeded:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join master:6443 --token fryk5b.yzjas1k5chmtufii \

--discovery-token-ca-cert-hash sha256:170c7c2b6dcea2a078d7c203915c8cefdeffe1ac2f10140ed7df71f6c8d92760 \

--control-plane --certificate-key ab5f8a8f97b780ca6e13a74692cceaaf10b254c32d454c44de0a46b08cea0fc3

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join master:6443 --token fryk5b.yzjas1k5chmtufii \

--discovery-token-ca-cert-hash sha256:170c7c2b6dcea2a078d7c203915c8cefdeffe1ac2f10140ed7df71f6c8d92760

As the output instructs, create the config directory and copy the config file:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Join the worker node

Run on the worker node:

kubeadm join master:6443 --token fryk5b.yzjas1k5chmtufii \
--discovery-token-ca-cert-hash sha256:170c7c2b6dcea2a078d7c203915c8cefdeffe1ac2f10140ed7df71f6c8d92760 \
--cri-socket unix:///run/cri-dockerd.sock

Note: --cri-socket unix:///run/cri-dockerd.sock must be added to select the container runtime.
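
If the join command from the `kubeadm init` output is lost, `kubeadm token create --print-join-command` on the master prints a fresh one, but without the `--cri-socket` flag. The sketch below appends it; `with_cri_socket` is a hypothetical helper, and the socket path is the one used throughout this article.

```shell
#!/bin/sh
# with_cri_socket CMD...: print the given join command with the cri-dockerd
# socket appended, unless a --cri-socket flag is already present.
with_cri_socket() {
    cmd="$*"
    case "$cmd" in
        *--cri-socket*) echo "$cmd" ;;
        *) echo "$cmd --cri-socket unix:///run/cri-dockerd.sock" ;;
    esac
}

# Example (on the master, as root):
# with_cri_socket $(kubeadm token create --print-join-command)
```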

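Once the join finishes, check the cluster from the master. The nodes stay NotReady until a pod network is installed, which the Calico step further down takes care of.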

$ kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true","reason":""}

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane 11m v1.25.4

node NotReady <none> 10m v1.25.4

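Join additional master nodes

This is a single-master cluster, so no further masters need to join. If you do need them, proceed as follows.

On each additional master node, join the first master.

Use the other join command printed when the first master was initialized, the one that carries --control-plane and the certificate key.

That is this part, with --cri-socket added for the same reason as on the worker:

kubeadm join master:6443 --token fryk5b.yzjas1k5chmtufii \
--discovery-token-ca-cert-hash sha256:170c7c2b6dcea2a078d7c203915c8cefdeffe1ac2f10140ed7df71f6c8d92760 \
--control-plane --certificate-key ab5f8a8f97b780ca6e13a74692cceaaf10b254c32d454c44de0a46b08cea0fc3 \
--cri-socket unix:///run/cri-dockerd.sock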

Output like this means the node has joined:

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster

Again as the output instructs, create the directory and copy the file:

$ mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

Install the Calico pod network

Note: run this on one master node only.

Download the manifest

The manifest is available online at https://docs.projectcalico.org/manifests/calico.yaml

$ wget https://docs.projectcalico.org/manifests/calico.yaml

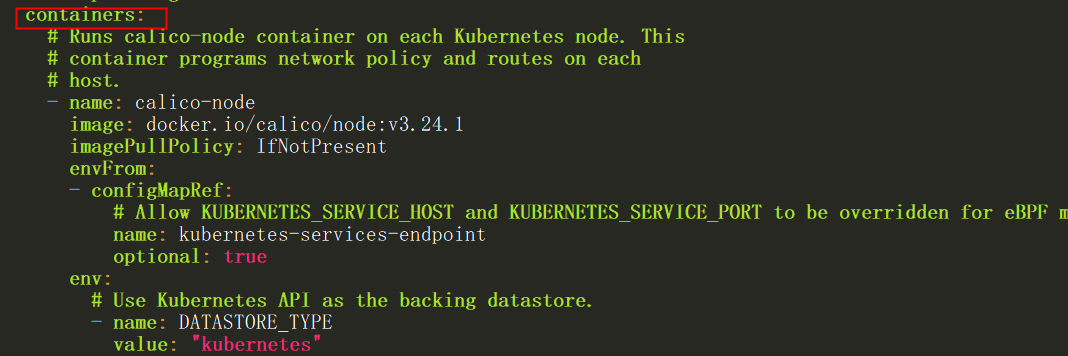

Edit the manifest

Search for DaemonSet

and locate the containers section below it.

If the host has more than one network interface, name the one Calico should use:

- name: CLUSTER_TYPE
  value: "k8s,bgp"
# Auto-detect the BGP IP address.
- name: IP
  value: "autodetect"
# Enable IPIP
- name: CALICO_IPV4POOL_IPIP
  value: "Always"
# Specify the interface here by adding the next two lines
- name: IP_AUTODETECTION_METHOD
  value: "interface=ens33"
# Enable or Disable VXLAN on the default IP pool.
- name: CALICO_IPV4POOL_VXLAN
  value: "Never"
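
The interface name `ens33` is specific to the author's machines. One way to find the right name is to look up which interface carries the node's cluster IP. A sketch that parses `ip -o -4 addr show` output follows; `iface_for_ip` is an illustrative name.

```shell
#!/bin/sh
# iface_for_ip IP: read `ip -o -4 addr show` output on stdin and print the
# name of the interface whose address is IP (any prefix length).
iface_for_ip() {
    # Field 2 is the interface name, field 4 is ADDRESS/PREFIX.
    awk -v ip="$1" '{ split($4, a, "/"); if (a[1] == ip) print $2 }'
}

# Example (on the master node from the table at the top):
# ip -o -4 addr show | iface_for_ip 10.0.0.61
```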

Change the CIDR to the pod network range used at initialization.

The corresponding settings are:

--pod-network-cidr=10.244.0.0/16 (kubeadm flag)

podSubnet: 10.244.0.0/16 (kubeadm config file)

# The default IPv4 pool to create on startup if none exists. Pod IPs will be
# chosen from this range. Changing this value after installation will have
# no effect. This should fall within `--cluster-cidr`.
#
# These two lines are commented out in the original file; remove the # and change 192.168.0.0/16 to 10.244.0.0/16
- name: CALICO_IPV4POOL_CIDR
  value: "10.244.0.0/16"
# Disable file logging so `kubectl logs` works.
- name: CALICO_DISABLE_FILE_LOGGING
  value: "true"
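
The same edit can be scripted. The sketch below assumes the manifest contains the two commented lines `# - name: CALICO_IPV4POOL_CIDR` and `#   value: "192.168.0.0/16"` in exactly that form, which may differ between Calico releases, so check the result before applying it. `set_pool_cidr` is a hypothetical helper that prints the edited manifest rather than modifying the file.

```shell
#!/bin/sh
# set_pool_cidr FILE CIDR: print FILE with the CALICO_IPV4POOL_CIDR entry
# uncommented and its value set to CIDR. Assumes the commented-out layout
# described above; FILE itself is not modified.
set_pool_cidr() {
    sed -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
        -e "s|#   value: \"192.168.0.0/16\"|  value: \"$2\"|" "$1"
}

# Example:
# set_pool_cidr calico.yaml 10.244.0.0/16 > calico-edited.yaml
```

Writing to a second file keeps the downloaded manifest intact for comparison with `diff`.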

Install Calico

$ kubectl apply -f calico.yaml

Check the nodes

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane 21m v1.25.4

node Ready <none> 20m v1.25.4

Test that cluster networking works

$ kubectl run busybox --image busybox:1.28 --restart=Never --rm -it busybox -- sh

/ # nslookup kubernetes.default.svc.cluster.local

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes.default.svc.cluster.local

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

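10.96.0.10 is the ClusterIP of CoreDNS, which shows that CoreDNS is set up correctly.

Names of in-cluster Services are resolved through CoreDNS.

Note:

Use busybox 1.28 specifically rather than the latest image; with the latest image, nslookup fails to resolve the DNS names and IPs.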

This article comes from cnblogs (博客园), author: 厚礼蝎. Please cite the original link when reposting: https://www.cnblogs.com/guangdelw/p/16967841.html
