import os

# Must be set before TensorFlow is imported to take effect; '2' hides INFO and WARNING logs so only errors are printed
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import tensorflow as tf
from tensorflow.keras import datasets

(x, y), (x_test, y_test) = datasets.mnist.load_data()
# x: [60k, 28, 28], x_test: [10k, 28, 28]
# y: [60k], y_test: [10k]

x = tf.convert_to_tensor(x, dtype=tf.float32) / 255  # scale pixel values from 0~255 down to 0~1
y = tf.convert_to_tensor(y, dtype=tf.int32)
x_test = tf.convert_to_tensor(x_test, dtype=tf.float32) / 255  # scale pixel values from 0~255 down to 0~1
y_test = tf.convert_to_tensor(y_test, dtype=tf.int32)

# print(x.shape,y.shape,x.dtype,y.dtype,x_test.shape,y_test.shape,x_test.dtype,y_test.dtype)

# print(tf.reduce_min(x),tf.reduce_max(x),tf.reduce_min(x_test),tf.reduce_max(x_test))

# print(tf.reduce_min(y),tf.reduce_max(y),tf.reduce_min(y_test),tf.reduce_max(y_test))

train_db = tf.data.Dataset.from_tensor_slices((x, y)).batch(100)  # 100 of the 60k training images per batch
test_db = tf.data.Dataset.from_tensor_slices((x_test, y_test)).batch(100)  # 100 of the 10k test images per batch

train_iter = iter(train_db)  # iterator over the training batches
sample = next(train_iter)
print('batch:', sample[0].shape, sample[1].shape)

# [b, 784] => [b, 256] => [b, 128] => [b, 10]
# Each layer has a weight of shape [dim_in, dim_out] and a bias of shape [dim_out]
# truncated_normal defaults to mean 0 and standard deviation 1;
# a smaller stddev of 0.1 helps keep the gradients from exploding
w1 = tf.Variable(tf.random.truncated_normal([784, 256], stddev=0.1))
b1 = tf.Variable(tf.zeros([256]))
w2 = tf.Variable(tf.random.truncated_normal([256, 128], stddev=0.1))
b2 = tf.Variable(tf.zeros([128]))
w3 = tf.Variable(tf.random.truncated_normal([128, 10], stddev=0.1))
b3 = tf.Variable(tf.zeros([10]))

# h1 = x @ w1 + b1, where x is one batch of 100 images of size 28*28

for epoch in range(50):  # iterate over the whole dataset 50 times
    for step, (x, y) in enumerate(train_db):  # one batch per step; x: [100, 28, 28], y: [100]
        x = tf.reshape(x, [-1, 28*28])  # flatten: [b, 28, 28] => [b, 28*28]

        with tf.GradientTape() as tape:  # by default the tape only tracks tf.Variable objects
            h1 = x @ w1 + b1  # [b, 784] @ [784, 256] + [256] => [b, 256]
            h1 = tf.nn.relu(h1)  # add non-linearity
            h2 = h1 @ w2 + b2  # [b, 256] @ [256, 128] + [128] => [b, 128]
            h2 = tf.nn.relu(h2)
            out = h2 @ w3 + b3  # [b, 128] @ [128, 10] + [10] => [b, 10], end of the forward pass

            # compute loss
            # out: [b, 10]
            # y: [b] => [b, 10]
            y_onehot = tf.one_hot(y, depth=10)  # one-hot encode y to length-10 vectors so it can be subtracted from out of shape [b, 10]
            # mse = mean((y_onehot - out)^2)
            loss = tf.square(y_onehot - out)
            # mean: scalar
            loss = tf.reduce_mean(loss)  # mean over all b*10 elements, i.e. the average error of the batch

        # compute gradients
        grads = tape.gradient(loss, [w1, b1, w2, b2, w3, b3])  # derivatives of loss with respect to w1, b1, w2, b2, w3, b3
        # print(grads)

        # Gradient descent step: w1 = w1 - lr * w1_grad
        # w1 = w1 - lr * grads[0]  # subtracting from a tf.Variable yields a plain tf.Tensor, so update in place instead
        # b1 = b1 - lr * grads[1]
        # w2 = w2 - lr * grads[2]
        # b2 = b2 - lr * grads[3]
        # w3 = w3 - lr * grads[4]
        # b3 = b3 - lr * grads[5]
        lr = 1e-3  # 0.001
        w1.assign_sub(lr * grads[0])
        b1.assign_sub(lr * grads[1])
        w2.assign_sub(lr * grads[2])
        b2.assign_sub(lr * grads[3])
        w3.assign_sub(lr * grads[4])
        b3.assign_sub(lr * grads[5])
        # print(isinstance(b3, tf.Variable))
        # print(isinstance(b3, tf.Tensor))

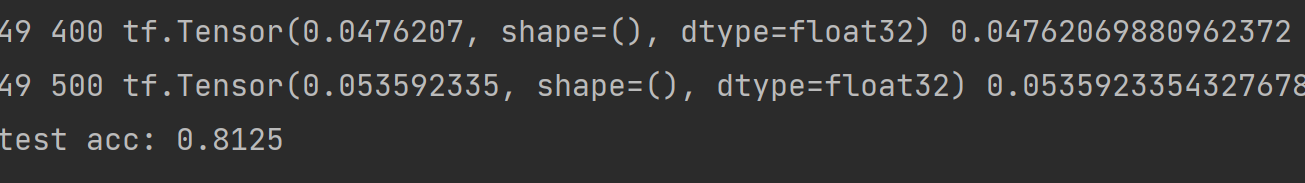

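        if step % 100 == 0:  # log once every 100 batches
            print(epoch, step, loss, float(loss))

    # test: evaluate on the test set at the end of each epoch
    total_correct, total_num = 0, 0  # running counts of correct predictions and of test samples
    for step, (x, y) in enumerate(test_db):
        x = tf.reshape(x, [-1, 28*28])
        h1 = tf.nn.relu(x @ w1 + b1)
        h2 = tf.nn.relu(h1 @ w2 + b2)
        out = h2 @ w3 + b3
        # out: [b, 10], unbounded real values
        # prob: [b, 10], probabilities in the range 0~1
        prob = tf.nn.softmax(out, axis=1)  # map out to probabilities in the range 0~1
        pred = tf.argmax(prob, axis=1)  # index of the largest probability in each row (int64)
        pred = tf.cast(pred, dtype=tf.int32)
        # y: [b]
        # print(pred.dtype, y.dtype)
        correct = tf.cast(tf.equal(pred, y), dtype=tf.int32)  # tf.equal returns booleans; cast them to int32 0/1
        # [b], int32
        correct = tf.reduce_sum(correct)  # number of correct predictions in this batch
        total_correct += int(correct)  # convert the tensor to a Python int and accumulate
        total_num += x.shape[0]  # number of test samples seen so far
    acc = total_correct / total_num
    print("test acc:", acc)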

# Test accuracy after training on the dataset for 50 epochs
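The test loop above counts correct predictions batch by batch. A minimal sketch of that counting step on a hand-made batch follows; the `logits` and `labels` values are made up for illustration and are not MNIST outputs. It also checks that taking `argmax` of the raw logits gives the same predictions here, since softmax preserves the ordering within each row.

```python
import tensorflow as tf

# Hypothetical logits for a batch of 4 samples and 3 classes
logits = tf.constant([[2.0, 1.0, 0.1],
                      [0.5, 2.5, 0.3],
                      [0.2, 0.1, 3.0],
                      [1.5, 0.2, 0.1]])
labels = tf.constant([0, 1, 1, 0], dtype=tf.int32)  # made-up ground truth

prob = tf.nn.softmax(logits, axis=1)  # each row sums to 1
pred_from_prob = tf.cast(tf.argmax(prob, axis=1), tf.int32)
pred_from_logits = tf.cast(tf.argmax(logits, axis=1), tf.int32)

# tf.equal yields booleans; cast to int32 so they can be summed
correct = int(tf.reduce_sum(tf.cast(tf.equal(pred_from_prob, labels), tf.int32)))
accuracy = correct / labels.shape[0]

print("predictions:", pred_from_prob.numpy())
print("correct:", correct, "accuracy:", accuracy)
```

In this toy batch the third sample is misclassified, so three of the four predictions match the labels.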

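The commented-out update `w1 = w1 - lr * grads[0]` is avoided in the script because it rebinds the name to a plain tensor. The sketch below uses a small hypothetical variable `w` rather than the real weights to illustrate the difference, assuming eager execution: a `tf.GradientTape` automatically watches trainable `tf.Variable` objects only, so the gradient with respect to the rebound tensor comes back as `None`, while `assign_sub` updates the variable in place.

```python
import tensorflow as tf

lr = 0.1
w = tf.Variable(tf.ones([2, 2]))  # hypothetical toy weight, not the MNIST weights

with tf.GradientTape() as tape:
    loss = tf.reduce_sum(w * w)
grad = tape.gradient(loss, w)  # d(sum(w^2))/dw = 2 * w

# Out-of-place update: the result is a plain tensor, not a tf.Variable
rebound = w - lr * grad
with tf.GradientTape() as tape:
    loss = tf.reduce_sum(rebound * rebound)
grad_rebound = tape.gradient(loss, rebound)  # the tape never watched this tensor

# In-place update: w stays a tf.Variable and keeps being tracked
w.assign_sub(lr * grad)

print(isinstance(rebound, tf.Variable), grad_rebound)
print(isinstance(w, tf.Variable), w.numpy())
```

An alternative would be to call `tape.watch` on the tensor explicitly, but keeping the weights as variables and updating them in place is the approach the script takes.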
