Connections from Kafka clients (producers, consumers, other brokers, tools) to Kafka brokers can be authenticated using SSL or SASL. Kafka supports the following SASL mechanisms:

- SASL/GSSAPI (Kerberos) - starting at version 0.9.0.0

- SASL/PLAIN - starting at version 0.10.0.0

- SASL/SCRAM-SHA-256 and SASL/SCRAM-SHA-512 - starting at version 0.10.2.0

- SASL/OAUTHBEARER - starting at version 2.0
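On the client side, each mechanism is selected with the `sasl.mechanism` setting and backed by a JAAS login module. The small sketch below records that mapping using the login module class names given in the Kafka documentation; the class `SaslMechanisms` and its method are hypothetical helpers for illustration only.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper: maps Kafka sasl.mechanism values to their JAAS login module classes. */
public class SaslMechanisms {
    // Insertion order mirrors the list of mechanisms above.
    static final Map<String, String> LOGIN_MODULES = new LinkedHashMap<>();

    static {
        LOGIN_MODULES.put("GSSAPI", "com.sun.security.auth.module.Krb5LoginModule");
        LOGIN_MODULES.put("PLAIN", "org.apache.kafka.common.security.plain.PlainLoginModule");
        // Both SCRAM variants share one login module; the mechanism name selects the hash.
        LOGIN_MODULES.put("SCRAM-SHA-256", "org.apache.kafka.common.security.scram.ScramLoginModule");
        LOGIN_MODULES.put("SCRAM-SHA-512", "org.apache.kafka.common.security.scram.ScramLoginModule");
        LOGIN_MODULES.put("OAUTHBEARER", "org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule");
    }

    /** Returns the login module for a mechanism, or throws if the mechanism is not in the list. */
    static String loginModuleFor(String mechanism) {
        String module = LOGIN_MODULES.get(mechanism);
        if (module == null) {
            throw new IllegalArgumentException("Unknown SASL mechanism: " + mechanism);
        }
        return module;
    }

    public static void main(String[] args) {
        for (Map.Entry<String, String> e : LOGIN_MODULES.entrySet()) {
            System.out.println(e.getKey() + " -> " + e.getValue());
        }
    }
}
```

For this post only the `PLAIN` row matters: it is the module referenced in every JAAS file below.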

This post focuses on SASL/PLAIN.

0. Prerequisites

Install Kafka: kafka_2.12-2.4.0 (download)

Install ZooKeeper: zookeeper-3.6.3 (download)

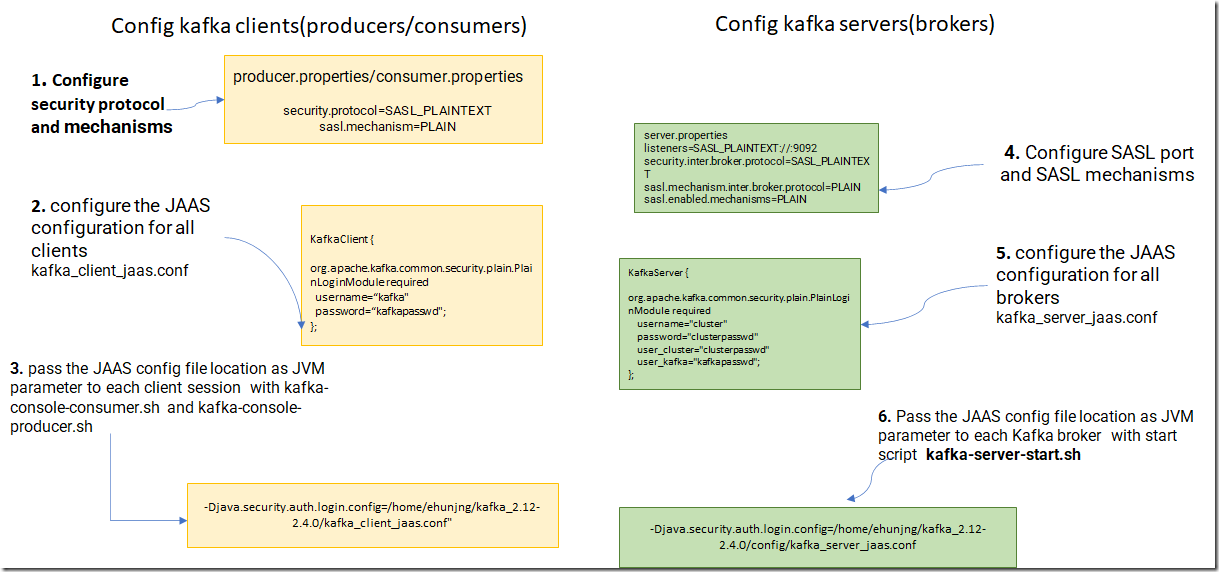

1. Kafka SASL/PLAIN configuration steps
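A minimal sketch of the broker side, following the SASL/PLAIN section of the Kafka 2.4 documentation: `server.properties` needs a SASL_PLAINTEXT listener with PLAIN enabled, and `kafka_server_jaas.conf` needs a `KafkaServer` section. The class `BrokerSaslPlainConfig` is hypothetical and only prints the settings. The kafka/kafkapasswd account is an assumption taken from the credentials that kafka_client_jaas.conf has to match in the verification step, and `localhost:9092` is taken from the console commands used there.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper that prints broker-side SASL/PLAIN settings (per the Kafka 2.4 docs). */
public class BrokerSaslPlainConfig {
    /** server.properties entries for a single SASL_PLAINTEXT listener using PLAIN. */
    static Map<String, String> serverProperties(String hostPort) {
        Map<String, String> p = new LinkedHashMap<>();
        p.put("listeners", "SASL_PLAINTEXT://" + hostPort);
        p.put("security.inter.broker.protocol", "SASL_PLAINTEXT");
        p.put("sasl.mechanism.inter.broker.protocol", "PLAIN");
        p.put("sasl.enabled.mechanisms", "PLAIN");
        return p;
    }

    /**
     * KafkaServer section of kafka_server_jaas.conf (assumed account).
     * username/password: used by this broker for inter-broker connections.
     * user_<name>: accounts that clients may log in with.
     */
    static String kafkaServerJaas(String user, String password) {
        return "KafkaServer {\n"
             + "    org.apache.kafka.common.security.plain.PlainLoginModule required\n"
             + "    username=\"" + user + "\"\n"
             + "    password=\"" + password + "\"\n"
             + "    user_" + user + "=\"" + password + "\";\n"
             + "};\n";
    }

    public static void main(String[] args) {
        serverProperties("localhost:9092").forEach((k, v) -> System.out.println(k + "=" + v));
        System.out.println();
        System.out.print(kafkaServerJaas("kafka", "kafkapasswd"));
    }
}
```

According to the Kafka documentation, the broker JVM must also be given the JAAS file, e.g. `-Djava.security.auth.login.config=.../kafka_server_jaas.conf`; `kafka-run-class.sh` passes the contents of the `KAFKA_OPTS` environment variable to the JVM, so exporting it there before `kafka-server-start.sh` is one way to do that.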

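2. Enable SASL authentication for ZooKeeper (optional)

If you also want basic identity authentication on connections to ZooKeeper, configure it as follows.

- 1) Enable SASL authentication in ZooKeeper by adding the following to zoo.cfg: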

## add SASL support for zookeeper
authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider
requireClientAuthScheme=sasl
jaasLoginRenew=3600000

- 2) Add zk_server_jaas.conf to define the ZooKeeper accounts:

:~/zookeeper/apache-zookeeper-3.6.3-bin/conf$ cat zk_server_jaas.conf
Server {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="cluster"
    password="clusterpasswd"
    user_alice="passwdofzookeeperserver";
};

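Here username/password are the credentials used between the ZooKeeper cluster nodes, and the alice account is for ZooKeeper clients.

The matching account must also be added to kafka_server_jaas.conf, because Kafka, acting as a ZooKeeper client, has to present credentials:

Client {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="alice"
    password="passwdofzookeeperserver";
};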
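The rule in this step is that the Client section's username/password must match a `user_<name>` entry in ZooKeeper's Server section. The sketch below checks that with the JDK's own JAAS file parser, `com.sun.security.auth.login.ConfigFile`, which only reads the file and does not load the login module class, so no Kafka jars are needed. For brevity both sections are written into one temporary file; in the real setup Server lives in zk_server_jaas.conf and Client in kafka_server_jaas.conf. The class `JaasCheck` and its methods are hypothetical.

```java
import com.sun.security.auth.login.ConfigFile;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Map;
import javax.security.auth.login.AppConfigurationEntry;

/** Hypothetical check: does the Client section's password match the Server's user_<name> entry? */
public class JaasCheck {
    // Server section from zk_server_jaas.conf plus Client section from kafka_server_jaas.conf.
    static final String JAAS =
          "Server {\n"
        + "  org.apache.kafka.common.security.plain.PlainLoginModule required\n"
        + "  username=\"cluster\" password=\"clusterpasswd\" user_alice=\"passwdofzookeeperserver\";\n"
        + "};\n"
        + "Client {\n"
        + "  org.apache.kafka.common.security.plain.PlainLoginModule required\n"
        + "  username=\"alice\" password=\"passwdofzookeeperserver\";\n"
        + "};\n";

    /** Writes content to a temporary .conf file and returns its path. */
    static Path writeTemp(String content) {
        try {
            Path tmp = Files.createTempFile("jaas", ".conf");
            Files.write(tmp, content.getBytes(StandardCharsets.UTF_8));
            return tmp;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Returns the options of the first login module in the given JAAS section. */
    static Map<String, ?> options(Path jaasFile, String section) {
        AppConfigurationEntry[] entries =
            new ConfigFile(jaasFile.toUri()).getAppConfigurationEntry(section);
        if (entries == null || entries.length == 0) {
            throw new IllegalStateException("Missing JAAS section: " + section);
        }
        return entries[0].getOptions();
    }

    /** True if Client's password equals Server's user_<Client username> entry. */
    static boolean clientMatchesServer(Path jaasFile) {
        Map<String, ?> server = options(jaasFile, "Server");
        Map<String, ?> client = options(jaasFile, "Client");
        Object expected = server.get("user_" + client.get("username"));
        return expected != null && expected.equals(client.get("password"));
    }

    public static void main(String[] args) {
        Path tmp = writeTemp(JAAS);
        System.out.println("Client credentials accepted by Server section: " + clientMatchesServer(tmp));
        tmp.toFile().delete();
    }
}
```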

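- 3) Add the Kafka jars that ZooKeeper depends on

zk_server_jaas.conf references PlainLoginModule, which lives in kafka-clients, so ZooKeeper needs these jars on its classpath. Create a directory zk_sasl_dependency and copy the following jars into it from the Kafka libs directory (kafka_2.12-2.4.0/libs):

zk_sasl_dependency$ ls
kafka-clients-2.4.0.jar lz4-java-1.6.0.jar slf4j-api-1.7.28.jar slf4j-log4j12-1.7.28.jar snappy-java-1.1.7.3.jar

- 4) Modify zkEnv.sh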

Add the following to zkEnv.sh:

for i in /home/ehunjng/zk_sasl_dependency/*.jar;
do
    CLASSPATH="$i:$CLASSPATH"
done

SERVER_JVMFLAGS=" -Djava.security.auth.login.config=/home/ehunjng/zookeeper/apache-zookeeper-3.6.3-bin/conf/zk_server_jaas.conf "
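If one of the copied jars is missing, ZooKeeper typically fails when it tries to load PlainLoginModule. A quick pre-flight check, sketched under the assumption that the jar names are exactly those in the `ls` output above; `ZkSaslDependencyCheck` is a hypothetical helper, and the default directory is the one used in zkEnv.sh.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical pre-flight check for the zk_sasl_dependency directory. */
public class ZkSaslDependencyCheck {
    // Jar names copied from the `ls` output above (Kafka 2.4.0 distribution).
    static final List<String> REQUIRED = Arrays.asList(
        "kafka-clients-2.4.0.jar",
        "lz4-java-1.6.0.jar",
        "slf4j-api-1.7.28.jar",
        "slf4j-log4j12-1.7.28.jar",
        "snappy-java-1.1.7.3.jar");

    /** Returns the required jars that are not regular files inside dir. */
    static List<String> missingJars(File dir) {
        List<String> missing = new ArrayList<>();
        for (String jar : REQUIRED) {
            if (!new File(dir, jar).isFile()) {
                missing.add(jar);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Default path is the one used in zkEnv.sh; pass another directory as args[0].
        File dir = new File(args.length > 0 ? args[0] : "/home/ehunjng/zk_sasl_dependency");
        List<String> missing = missingJars(dir);
        System.out.println(missing.isEmpty() ? "All jars present" : "Missing: " + missing);
    }
}
```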

3. Verify the configuration

- 1) Start ZooKeeper

ehunjng@CN-00005131:~$ /home/ehunjng/zookeeper/apache-zookeeper-3.6.3-bin/bin/zkServer.sh start
/usr/bin/java
ZooKeeper JMX enabled by default
Using config: /home/ehunjng/zookeeper/apache-zookeeper-3.6.3-bin/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED

- 2) Start Kafka. If ZooKeeper authentication is enabled, the ZooKeeper username and password configured above are used at this point; otherwise they are not needed.

cd /home/ehunjng/kafka_2.12-2.4.0
./bin/kafka-server-start.sh config/server.properties

Kafka's log level can be changed by editing kafka_2.12-2.4.0/config/log4j.properties.

If the Kafka broker is configured with the correct ZooKeeper password, the ZooKeeper log shows:

cd zookeeper/apache-zookeeper-3.6.3-bin/logs
cat zookeeper-ehunjng-server-CN-00005131.out
2021-10-25 17:54:40,340 [myid:] - INFO [NIOWorkerThread-5:SaslServerCallbackHandler@119] - Successfully authenticated client: authenticationID=alice; authorizationID=alice.
2021-10-25 17:54:40,353 [myid:] - INFO [NIOWorkerThread-5:SaslServerCallbackHandler@135] - Setting authorizedID: alice
2021-10-25 17:54:40,353 [myid:] - INFO [NIOWorkerThread-5:ZooKeeperServer@1680] - adding SASL authorization for authorizationID: alice

If the Kafka broker is configured with a wrong ZooKeeper password, Kafka fails at startup and the console shows this error log:

[2021-10-25 18:07:45,068] ERROR Fatal error during KafkaServer startup. Prepare to shutdown (kafka.server.KafkaServer)
org.apache.zookeeper.KeeperException$AuthFailedException: KeeperErrorCode = AuthFailed for /consumers
    at org.apache.zookeeper.KeeperException.create(KeeperException.java:130)
    at org.apache.zookeeper.KeeperException.create(KeeperException.java:54)
    at kafka.zookeeper.AsyncResponse.maybeThrow(ZooKeeperClient.scala:561)
    at kafka.zk.KafkaZkClient.createRecursive(KafkaZkClient.scala:1640)
    at kafka.zk.KafkaZkClient.makeSurePersistentPathExists(KafkaZkClient.scala:1562)
    at kafka.zk.KafkaZkClient.$anonfun$createTopLevelPaths$1(KafkaZkClient.scala:1554)
    at kafka.zk.KafkaZkClient.$anonfun$createTopLevelPaths$1$adapted(KafkaZkClient.scala:1554)
    at scala.collection.immutable.List.foreach(List.scala:392)
    at kafka.zk.KafkaZkClient.createTopLevelPaths(KafkaZkClient.scala:1554)
    at kafka.server.KafkaServer.initZkClient(KafkaServer.scala:400)
    at kafka.server.KafkaServer.startup(KafkaServer.scala:207)
    at kafka.server.KafkaServerStartable.startup(KafkaServerStartable.scala:44)
    at kafka.Kafka$.main(Kafka.scala:84)
    at kafka.Kafka.main(Kafka.scala)
[2021-10-25 18:07:45,094] INFO shutting down (kafka.server.KafkaServer)
[2021-10-25 18:07:45,104] INFO [ZooKeeperClient Kafka server] Closing. (kafka.zookeeper.ZooKeeperClient)
[2021-10-25 18:07:45,105] DEBUG Shutting down task scheduler. (kafka.utils.KafkaScheduler)
[2021-10-25 18:07:45,106] DEBUG Close called on already closed client (org.apache.zookeeper.ZooKeeper)
[2021-10-25 18:07:45,116] INFO [ZooKeeperClient Kafka server] Closed. (kafka.zookeeper.ZooKeeperClient)
[2021-10-25 18:07:45,123] INFO shut down completed (kafka.server.KafkaServer)

[2021-10-25 18:07:45,125] ERROR Exiting Kafka. (kafka.server.KafkaServerStartable)

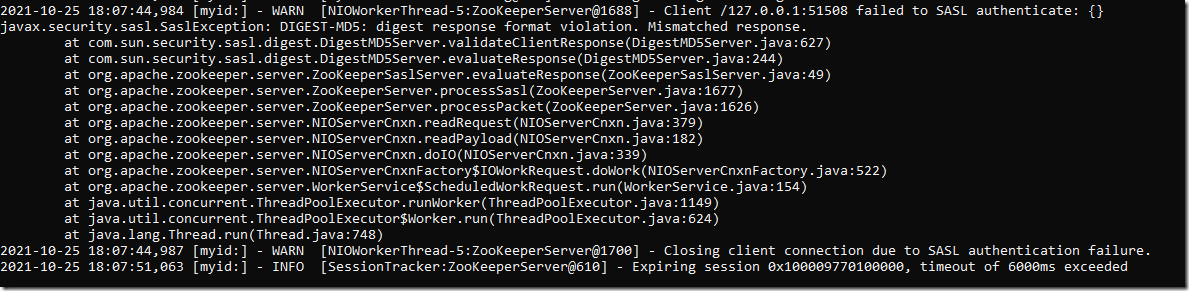

[2021-10-25 18:07:45,130] INFO shutting down (kafka.server.KafkaServer)同时,zookeeper的log也有以下错误提醒:
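The two outcomes above can be told apart by a single log line each. A hypothetical helper that scans log lines for them; the matched strings are copied from the ZooKeeper success line and the Kafka `AuthFailedException` shown above, and other ZooKeeper/Kafka versions may word them differently.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical scanner for the SASL success/failure lines shown above. */
public class SaslLogScanner {
    // ZooKeeper INFO line logged by SaslServerCallbackHandler on successful authentication.
    private static final Pattern AUTH_OK =
        Pattern.compile("Successfully authenticated client: authenticationID=([^;]+);");

    /** Returns the authentication IDs of all clients ZooKeeper accepted. */
    static List<String> authenticatedIds(List<String> zkLogLines) {
        List<String> ids = new ArrayList<>();
        for (String line : zkLogLines) {
            Matcher m = AUTH_OK.matcher(line);
            if (m.find()) {
                ids.add(m.group(1));
            }
        }
        return ids;
    }

    /** True if the Kafka startup log contains the AuthFailed error from ZooKeeper. */
    static boolean kafkaAuthFailed(List<String> kafkaLogLines) {
        for (String line : kafkaLogLines) {
            if (line.contains("KeeperErrorCode = AuthFailed")) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        // Sample lines copied from the logs above.
        List<String> zkLog = Arrays.asList(
            "2021-10-25 17:54:40,340 [myid:] - INFO [NIOWorkerThread-5:SaslServerCallbackHandler@119]"
            + " - Successfully authenticated client: authenticationID=alice; authorizationID=alice.");
        List<String> kafkaLog = Arrays.asList(
            "org.apache.zookeeper.KeeperException$AuthFailedException: KeeperErrorCode = AuthFailed for /consumers");
        System.out.println("Authenticated IDs: " + authenticatedIds(zkLog));
        System.out.println("Kafka AuthFailed: " + kafkaAuthFailed(kafkaLog));
    }
}
```

Against the real files, the lists would come from something like `java.nio.file.Files.readAllLines(...)` on the ZooKeeper `.out` file and the Kafka console output.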

- 3)验证kafka consumers/producers 到kafka broker的连接

从控制台验证:

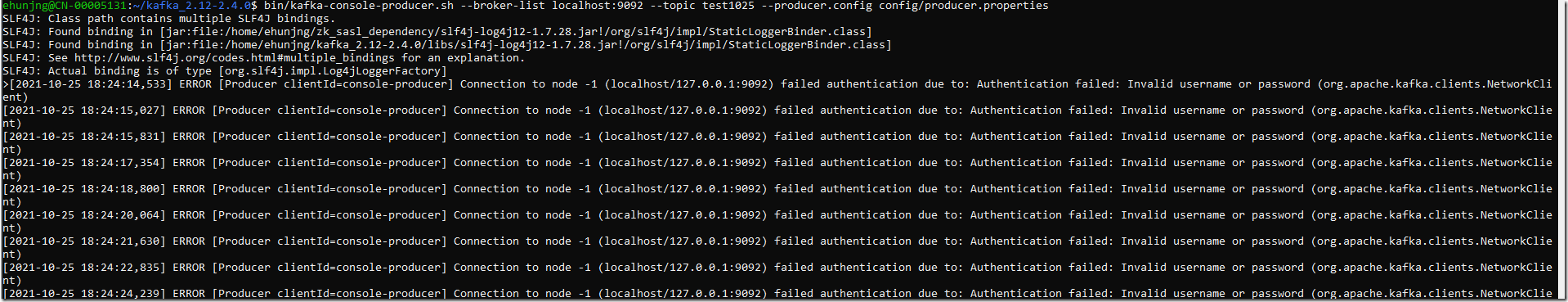

1) 当kafka_client_jaas.conf中的用户名密码不为username=“kafka"和password=“kafkapasswd";

cat kafka_client_jaas.conf
KafkaClient {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="kfktest"
    password="kfktest";
};

Starting the producer and consumer then reports an error.

2) When the username and password in kafka_client_jaas.conf are username="kafka" and password="kafkapasswd", matching kafka_server_jaas.conf:

① Start the producer and consumer and observe the communication between them.

@CN-00005131:~/kafka_2.12-2.4.0$ bin/kafka-console-producer.sh --broker-list localhost:9092 --topic test1025 --producer.config config/producer.properties

SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/home/ehunjng/zk_sasl_dependency/slf4j-log4j12-1.7.28.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/home/ehunjng/kafka_2.12-2.4.0/libs/slf4j-log4j12-1.7.28.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
>test1025-1
>test1025-2
>test1025-3
>

ehunjng@CN-00005131:~/kafka_2.12-2.4.0$ /home/ehunjng/kafka_2.12-2.4.0/bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic test1025 --from-beginning --consumer.config config/consumer.properties

test1025-1

test1025-2

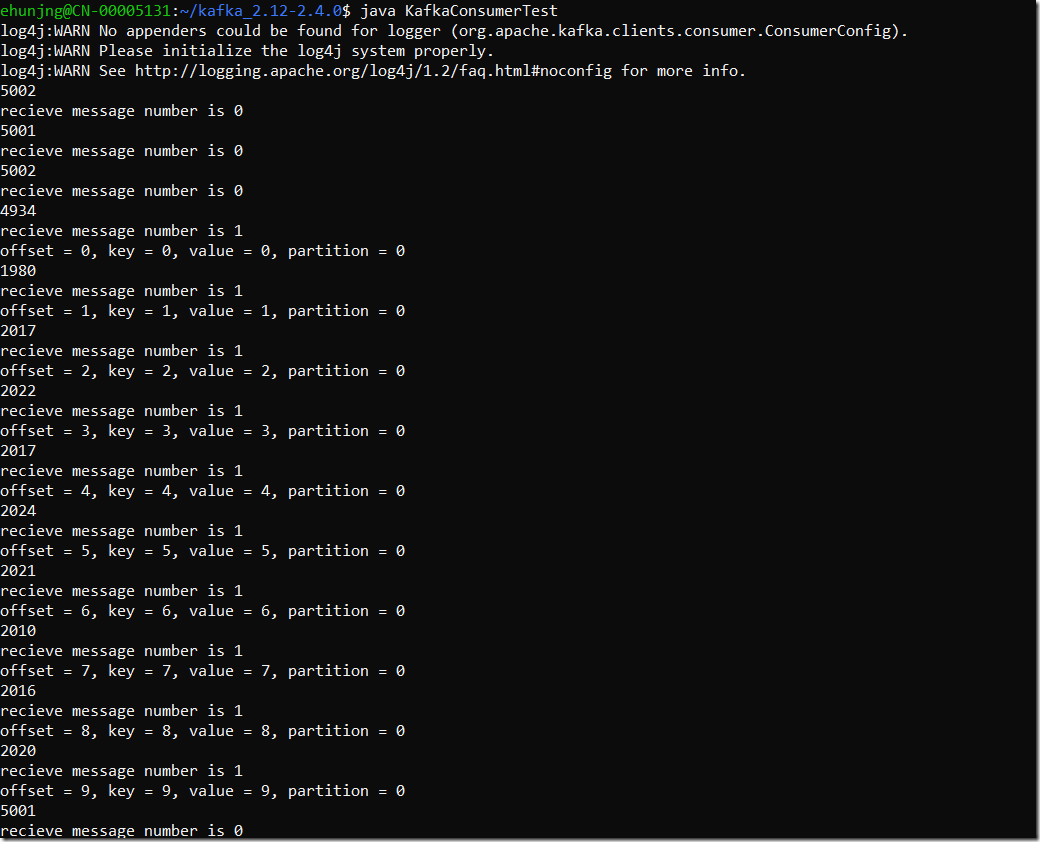

test1025-3从代码验证:

① Consumer code:

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;

import java.time.Duration;
import java.util.Collections;
import java.util.Properties;

public class KafkaConsumerTest {
    public static void main(String[] args) throws Exception {
        testConsumer();
    }

    public static void testConsumer() throws Exception {
        // Point JAAS at the file whose KafkaClient section holds the SASL/PLAIN credentials
        System.setProperty("java.security.auth.login.config", "/home/ehunjng/kafka_2.12-2.4.0/kafka_client_jaas.conf");
        Properties props = new Properties();
        props.put("bootstrap.servers", "127.0.0.1:9092");
        props.put("enable.auto.commit", "true");
        props.put("auto.commit.interval.ms", "1000");
        props.put("group.id", "kafka_test_group");
        props.put("session.timeout.ms", "6000");
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        // SASL authentication over an unencrypted connection, using the PLAIN mechanism
        props.put("security.protocol", "SASL_PLAINTEXT");
        props.put("sasl.mechanism", "PLAIN");
        KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);
        consumer.subscribe(Collections.singletonList("kafkatest0715"));
        while (true) {
            long startTime = System.currentTimeMillis();
            // poll(long) is deprecated since Kafka 2.0; use the Duration overload
            ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(5000));
            System.out.println(System.currentTimeMillis() - startTime);
            System.out.println("received message number is " + records.count());
            for (ConsumerRecord<String, String> record : records) {
                System.out.printf("offset = %d, key = %s, value = %s, partition = %d %n",
                        record.offset(), record.key(), record.value(), record.partition());
            }
        }
    }
}

② Producer code:

import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.Producer;
import org.apache.kafka.clients.producer.ProducerRecord;

import java.util.Properties;

public class KafkaProducerTest {
    public static void main(String[] args) throws Exception {
        testProduct();
    }

    public static void testProduct() throws Exception {
        // Same JAAS file as the consumer: the KafkaClient section supplies the credentials
        System.setProperty("java.security.auth.login.config", "/home/ehunjng/kafka_2.12-2.4.0/kafka_client_jaas.conf");
        Properties props = new Properties();
        props.put("bootstrap.servers", "127.0.0.1:9092");
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("security.protocol", "SASL_PLAINTEXT");
        props.put("sasl.mechanism", "PLAIN");
        // try-with-resources closes the producer when done
        try (Producer<String, String> producer = new KafkaProducer<>(props)) {
            long startTime = System.currentTimeMillis();
            for (int i = 0; i < 10; i++) {
                // get() blocks until the broker acknowledges the record
                producer.send(new ProducerRecord<>("kafkatest0715", Integer.toString(i), Integer.toString(i))).get();
                System.out.println("Send message key = " + i + ", value = " + i);
                Thread.sleep(2000);
            }
            System.out.println(System.currentTimeMillis() - startTime);
        }
    }
}
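Both programs load credentials by setting `java.security.auth.login.config` to kafka_client_jaas.conf. Since Kafka 0.10.2 a client can instead carry the same JAAS entry inline in the `sasl.jaas.config` property, which avoids the JVM-wide system property. A minimal sketch that only builds the `Properties` (no Kafka jars needed); `SaslClientProps` is a hypothetical helper, and it assumes the username and password contain no double quotes.

```java
import java.util.Properties;

/** Hypothetical helper: SASL/PLAIN client settings with the JAAS entry inline. */
public class SaslClientProps {
    static Properties saslPlainProps(String bootstrap, String username, String password) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrap);
        props.put("security.protocol", "SASL_PLAINTEXT");
        props.put("sasl.mechanism", "PLAIN");
        // Same content as the KafkaClient section of kafka_client_jaas.conf;
        // the trailing ';' is required. Assumes no '"' in username/password.
        props.put("sasl.jaas.config", String.format(
            "org.apache.kafka.common.security.plain.PlainLoginModule required username=\"%s\" password=\"%s\";",
            username, password));
        return props;
    }

    public static void main(String[] args) {
        Properties props = saslPlainProps("127.0.0.1:9092", "kafka", "kafkapasswd");
        props.forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```

To use it, add the serializer/deserializer and group settings from the programs above to the returned `Properties` and drop the `System.setProperty(...)` call.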

③ Set the necessary classpath:

export CLASSPATH="/usr/lib/jvm/java-1.8.0-openjdk-amd64/lib:/usr/lib/jvm/java-1.8.0-openjdk-amd64/jre/lib:/home/ehunjng/kafka_2.12-2.4.0:/home/ehunjng/kafka_2.12-2.4.0/libs:/home/ehunjng/zk_sasl_dependency/kafka-clients-2.4.0.jar:/home/ehunjng/zk_sasl_dependency/slf4j-api-1.7.28.jar:/home/ehunjng/zk_sasl_dependency/slf4j-log4j12-1.7.28.jar:/home/ehunjng/zk_sasl_dependency/snappy-java-1.1.7.3.jar:/home/ehunjng/zk_sasl_dependency/lz4-java-1.6.0.jar:/home/ehunjng/kafka_2.12-2.4.0/libs/log4j-1.2.17.jar"
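A Java classpath directory entry such as `.../kafka_2.12-2.4.0/libs` only exposes loose `.class` files and resources, not the jars inside it, which is why the jars are listed one by one (a `libs/*` wildcard entry is the usual alternative). A typo in any entry only shows up later as a `ClassNotFoundException`, so a small hypothetical check like the one below can list entries that do not exist on disk. It treats a trailing `*` as a wildcard and checks the parent directory instead.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: reports CLASSPATH entries that do not exist on disk. */
public class ClasspathCheck {
    static List<String> missingEntries(String classpath) {
        List<String> missing = new ArrayList<>();
        for (String entry : classpath.split(File.pathSeparator)) {
            if (entry.isEmpty()) {
                continue;
            }
            // "dir/*" is a jar wildcard: check that dir itself exists.
            String path = entry.endsWith("*") ? entry.substring(0, entry.length() - 1) : entry;
            if (!new File(path).exists()) {
                missing.add(entry);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        String cp = System.getenv("CLASSPATH");
        if (cp == null) {
            System.out.println("CLASSPATH is not set");
            return;
        }
        List<String> missing = missingEntries(cp);
        System.out.println(missing.isEmpty() ? "All CLASSPATH entries exist" : "Missing: " + missing);
    }
}
```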

④ Compile the code

javac -cp $CLASSPATH KafkaConsumerTest.java KafkaProducerTest.java

⑤ Start the consumer

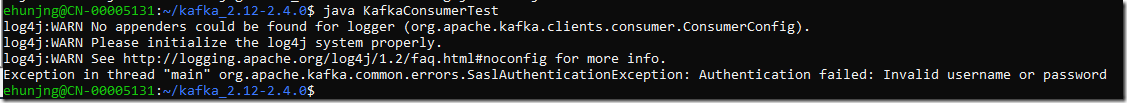

java KafkaConsumerTest

⑥ Start the producer

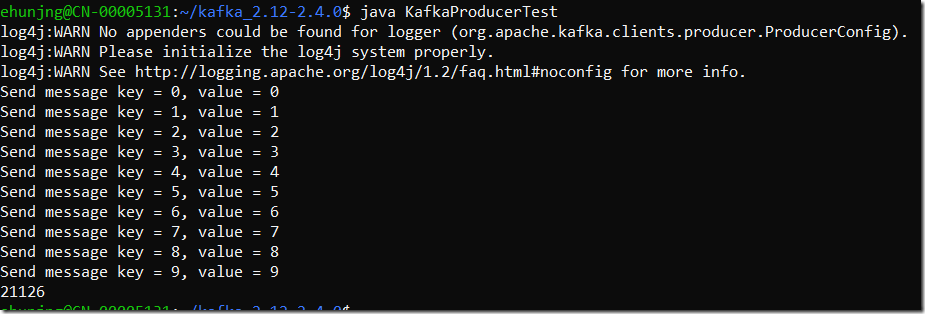

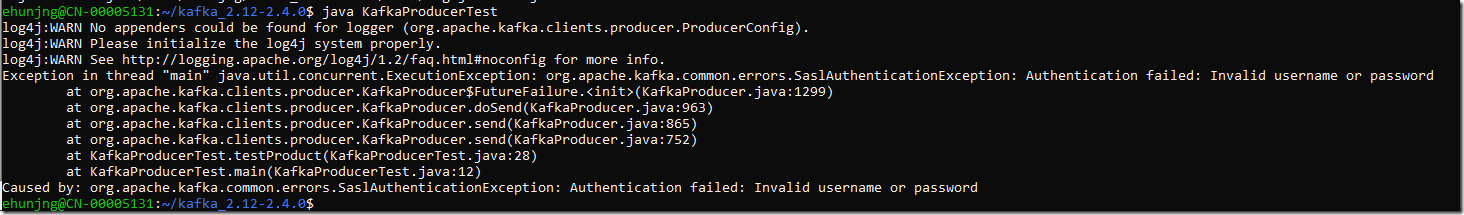

java KafkaProducerTest

With the correct password in kafka_client_jaas.conf, the result is as follows:

With a wrong password in kafka_client_jaas.conf, the result is as follows:

References:

Kafka 2.4 guide:

https://kafka.apache.org/24/documentation.html#security_sasl

https://kafka.apache.org/24/documentation.html#security_sasl_plain_production

https://www.cnblogs.com/ilovena/p/10123516.html

https://en.wikipedia.org/wiki/Simple_Authentication_and_Security_Layer

浙公网安备 33010602011771号
