https://github.com/HuajianUP/Photo-SLAM

0 安装依赖

sudo apt install libeigen3-dev libboost-all-dev libjsoncpp-dev libopengl-dev mesa-utils libglfw3-dev libglm-dev

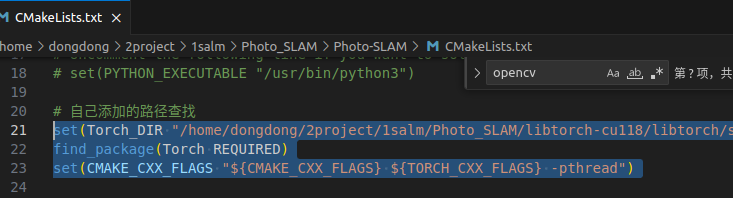

支持的 LibTorch 版本最高为 2.1.2。如果你没有在 CMake 的系统搜索路径中安装 LibTorch,则需要添加其他选项来build.sh帮助 CMake 找到 LibTorch。详情请参阅在build.sh之前添加一行:find_package(Torch REQUIRED)CMakeLists.txt

2选1安装,这里使用c++版本的。

[选项 1]

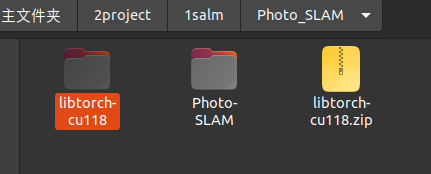

您可以下载 libtorch,例如cu118,然后将其解压到文件夹中./the_path_to_where_you_extracted_LibTorch。

# In a Terminal wget https://download.pytorch.org/libtorch/cu118/libtorch-cxx11-abi-shared-with-deps-2.0.1%2Bcu118.zip -O libtorch-cu118.zip unzip libtorch-cu118.zip -d ./the_path_to_where_you_extracted_LibTorch rm libtorch-cu118.zip

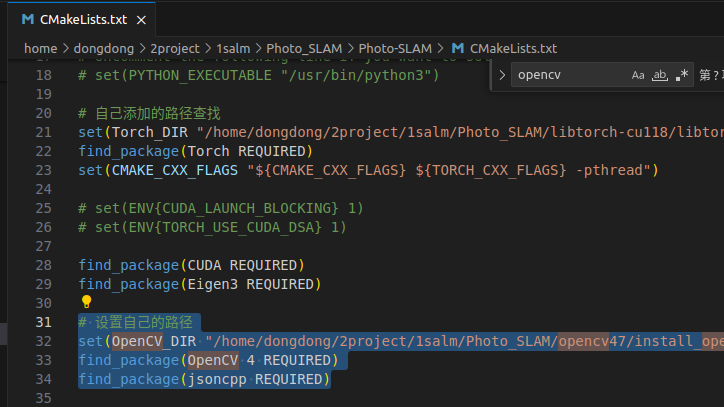

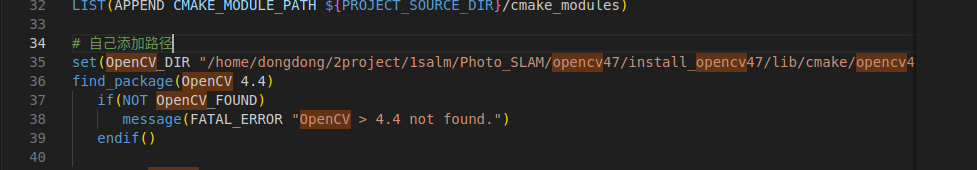

cmake设置路径

# In CMakeLists.txt set(Torch_DIR ./the_path_to_where_you_extracted_LibTorch/libtorch/share/cmake/Torch)

自己的

set(Torch_DIR "/home/dongdong/2project/1salm/Photo_SLAM/libtorch-cu118/libtorch/share/cmake/Torch")

[选项 2]Conda。

如果你使用 Conda 来管理你的 Python 包,并且安装了兼容的 Pytorch,你可以设置

# [For Jatson Orin] To install Pytorch in Jatson developer kit, you can run the below commands # export TORCH_INSTALL=https://developer.download.nvidia.com/compute/redist/jp/v511/pytorch/torch-2.0.0+nv23.05-cp38-cp38-linux_aarch64.whl # pip install --no-cache $TORCH_INSTALL

cmake设置路径

set(Torch_DIR /the_path_to_conda/python3.x/site-packages/torch/share/cmake/Torch)

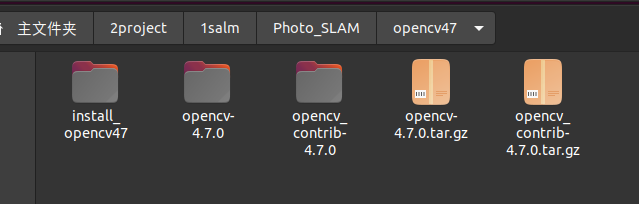

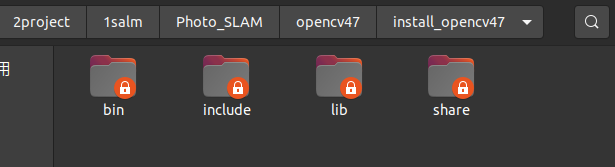

3 将 OpenCV 与 opencv_contrib 和 CUDA 结合使用

以 4.7.0 版本为例,查看OpenCV realeases和opencv_contrib,会找到OpenCV 4.7.0和对应的opencv_contrib 4.7.0,下载到同一目录(例如~/opencv)并解压,

https://github.com/opencv/opencv/archive/refs/tags/4.7.0.tar.gz

https://github.com/opencv/opencv_contrib/archive/refs/tags/4.7.0.tar.gz

注意修改安装路径

DCMAKE_INSTALL_PREFIX

修改扩展库路径

DOPENCV_EXTRA_MODULES_PATH

cmake -DCMAKE_BUILD_TYPE=RELEASE \ -DWITH_CUDA=ON \ -DWITH_CUDNN=ON \ -DOPENCV_DNN_CUDA=ON \ -DWITH_NVCUVID=ON \ -DCUDA_TOOLKIT_ROOT_DIR=/usr/local/cuda-11.8 \ -DOPENCV_EXTRA_MODULES_PATH="/home/dongdong/2project/1salm/Photo_SLAM/opencv47/opencv_contrib-4.7.0/modules" \ -DCMAKE_INSTALL_PREFIX="/home/dongdong/2project/1salm/Photo_SLAM/opencv47/install_opencv47" \ -DBUILD_TIFF=ON \ -DBUILD_ZLIB=ON \ -DBUILD_JASPER=ON \ -DBUILD_CCALIB=ON \ -DBUILD_JPEG=ON \ -DWITH_FFMPEG=ON \ ..

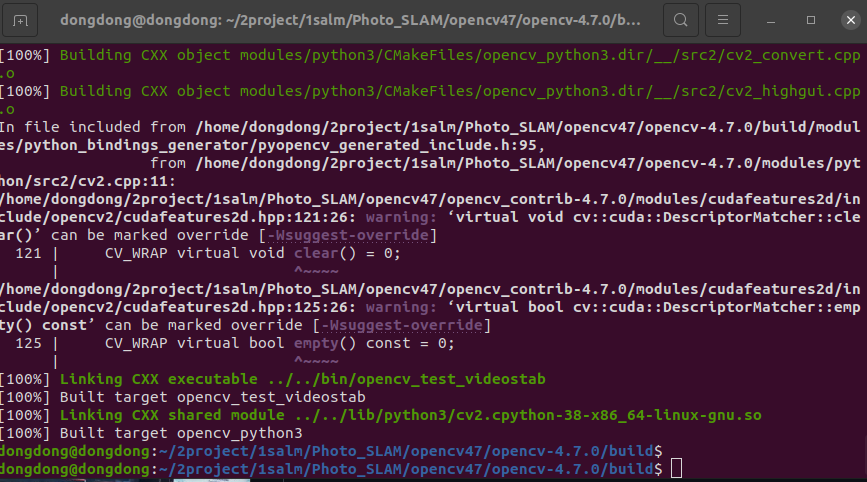

编译

sudo make -j30

安装

sudo make install

设置路径

set(OpenCV_DIR /your_preferred_path/lib/cmake/opencv4)

自己的路径# 设置自己的路径 set(OpenCV_DIR "/home/dongdong/2project/1salm/Photo_SLAM/opencv47/install_opencv47/lib/cmake/opencv4")

修改1

修改2

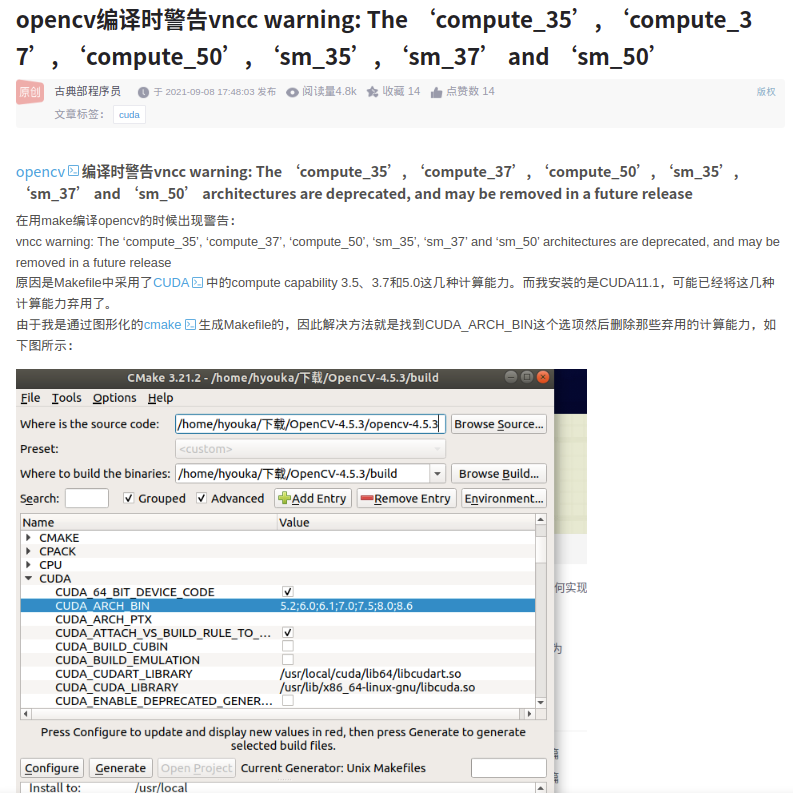

报错问题1 忽略不管

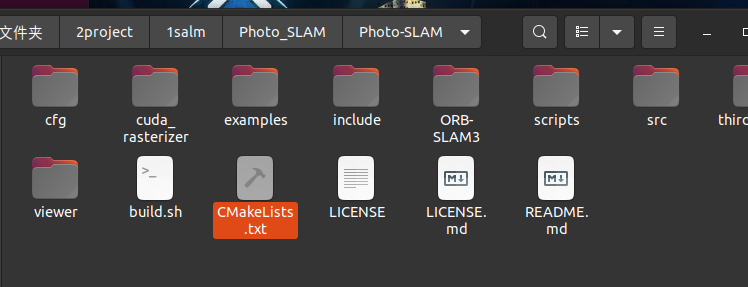

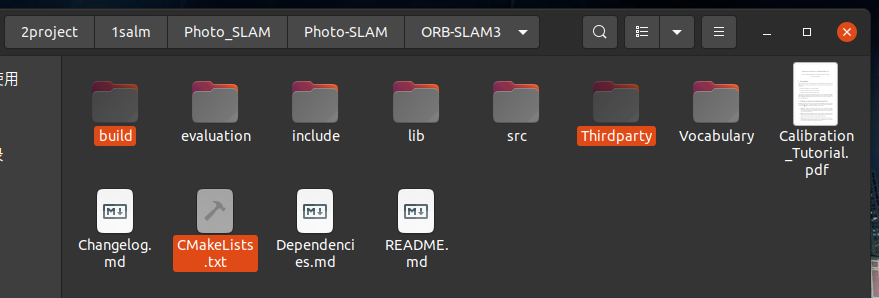

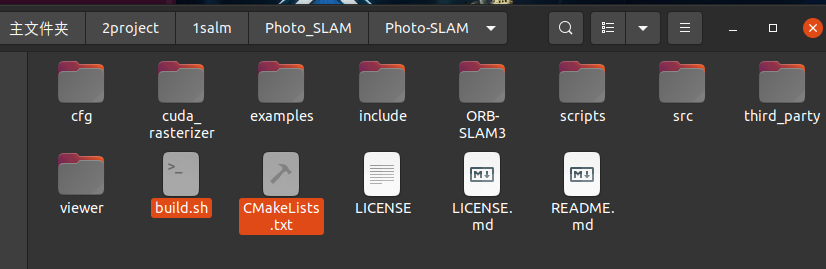

安装工程Photo-SLAM

git clone https://github.com/HuajianUP/Photo-SLAM.git cd Photo-SLAM/ chmod +x ./build.sh ./build.sh

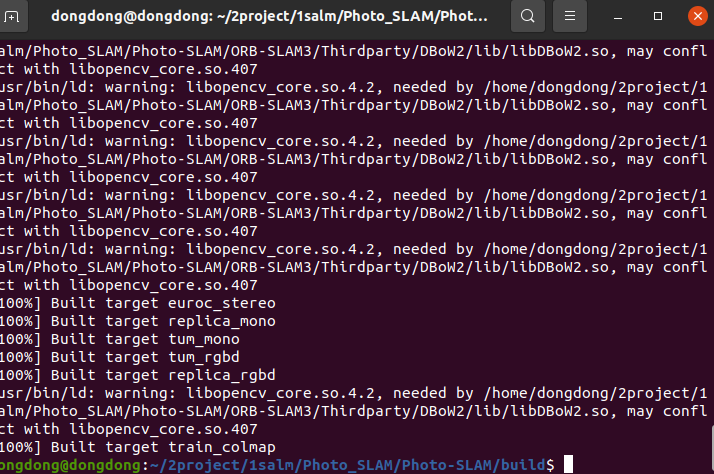

编译过程 脚本自动执行,也可以跟着脚本手动执行,看看 哪一步除了问题。

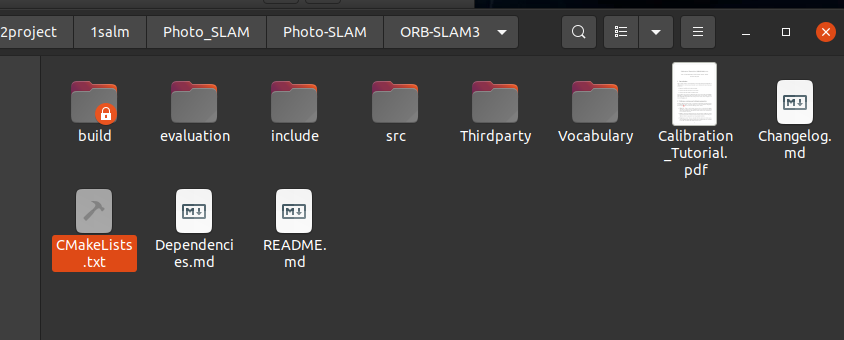

先编译orb slam3的库和工程

然后编译photo工程

编译成功

如果需要删除某个文件夹

sudo rm -r build

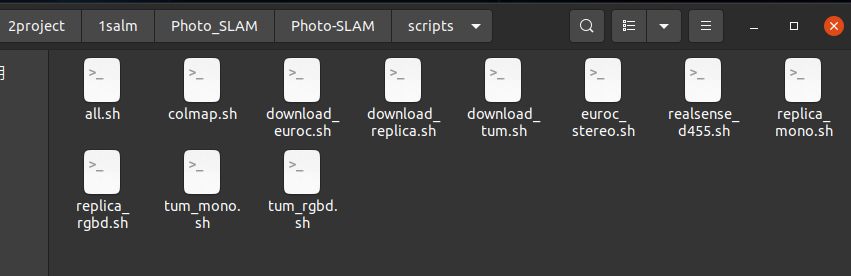

测试脚本

执行权限

sudo chmod +x ./*.sh

../bin/tum_mono \

../ORB-SLAM3/Vocabulary/ORBvoc.txt \

../cfg/ORB_SLAM3/Monocular/TUM/tum_freiburg1_desk.yaml \ 相机内参 需要改

../cfg/gaussian_mapper/Monocular/TUM/tum_mono.yaml \ 高斯训练参数 不用改

/home/rapidlab/dataset/VSLAM/TUM/rgbd_dataset_freiburg1_desk \ 数据地址

../results/tum_mono_$i/rgbd_dataset_freiburg1_desk \ 结果保存位置

no_viewer 是否可视化

自己改造的文件

/**

* This file is part of Photo-SLAM

*

* Copyright (C) 2023-2024 Longwei Li and Hui Cheng, Sun Yat-sen University.

* Copyright (C) 2023-2024 Huajian Huang and Sai-Kit Yeung, Hong Kong University of Science and Technology.

*

* Photo-SLAM is free software: you can redistribute it and/or modify it under the terms of the GNU General Public

* License as published by the Free Software Foundation, either version 3 of the License, or

* (at your option) any later version.

*

* Photo-SLAM is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even

* the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

* GNU General Public License for more details.

*

* You should have received a copy of the GNU General Public License along with Photo-SLAM.

* If not, see <http://www.gnu.org/licenses/>.

*/

#include <torch/torch.h>

#include <iostream>

#include <algorithm>

#include <fstream>

#include <chrono>

#include <ctime>

#include <sstream>

#include <thread>

#include <filesystem>

#include <memory>

#include <opencv2/core/core.hpp>

#include "ORB-SLAM3/include/System.h"

#include "include/gaussian_mapper.h"

#include "viewer/imgui_viewer.h"

void LoadImages(const std::string &strFile, std::vector<std::string> &vstrImageFilenames,

std::vector<double> &vTimestamps);

void LoadImages_DJI(const std::string &strFile, std::vector<std::string> &vstrImageFilenames,

std::vector<double> &vTimestamps);

void saveTrackingTime(std::vector<float> &vTimesTrack, const std::string &strSavePath);

void saveGpuPeakMemoryUsage(std::filesystem::path pathSave);

int main(int argc, char **argv)

{

if (argc != 6 && argc != 7)

{

std::cerr << std::endl

<< "Usage: " << argv[0]

<< " path_to_vocabulary" /*1*/

<< " path_to_ORB_SLAM3_settings" /*2*/

<< " path_to_gaussian_mapping_settings" /*3*/

<< " path_to_sequence" /*4*/

<< " path_to_trajectory_output_directory/" /*5*/

<< " (optional)no_viewer" /*6*/

<< std::endl;

return 1;

}

bool use_viewer = true;

if (argc == 7)

use_viewer = (std::string(argv[6]) == "no_viewer" ? false : true);

std::string output_directory = std::string(argv[5]);

if (output_directory.back() != '/')

output_directory += "/";

std::filesystem::path output_dir(output_directory);

// Retrieve paths to images

std::vector<std::string> vstrImageFilenamesRGB;

std::vector<double> vTimestamps;

//std::string strFile = std::string(argv[4]) + "/rgb.txt";

std::string strFile = std::string(argv[4])+"/slam_config/gnss.txt";

std::cout << "strFile: " << strFile << std::endl;

LoadImages_DJI(strFile, vstrImageFilenamesRGB, vTimestamps);

// Check consistency in the number of images and depthmaps

int nImages = vstrImageFilenamesRGB.size();

if (vstrImageFilenamesRGB.empty())

{

std::cerr << std::endl << "No images found in provided path." << std::endl;

return 1;

}

// Device

torch::DeviceType device_type;

if (torch::cuda::is_available())

{

std::cout << "CUDA available! Training on GPU." << std::endl;

device_type = torch::kCUDA;

}

else

{

std::cout << "Training on CPU." << std::endl;

device_type = torch::kCPU;

}

// Create SLAM system. It initializes all system threads and gets ready to process frames.

std::shared_ptr<ORB_SLAM3::System> pSLAM =

std::make_shared<ORB_SLAM3::System>(

argv[1], argv[2], ORB_SLAM3::System::MONOCULAR);

float imageScale = pSLAM->GetImageScale();

// Create GaussianMapper

std::filesystem::path gaussian_cfg_path(argv[3]);

std::shared_ptr<GaussianMapper> pGausMapper =

std::make_shared<GaussianMapper>(

pSLAM, gaussian_cfg_path, output_dir, 0, device_type);

std::thread training_thd(&GaussianMapper::run, pGausMapper.get());

// Create Gaussian Viewer

std::thread viewer_thd;

std::shared_ptr<ImGuiViewer> pViewer;

if (use_viewer)

{

pViewer = std::make_shared<ImGuiViewer>(pSLAM, pGausMapper);

viewer_thd = std::thread(&ImGuiViewer::run, pViewer.get());

}

// Vector for tracking time statistics

std::vector<float> vTimesTrack;

vTimesTrack.resize(nImages);

std::cout << std::endl << "-------" << std::endl;

std::cout << "Start processing sequence ..." << std::endl;

std::cout << "Images in the sequence: " << nImages << std::endl << std::endl;

// Main loop

cv::Mat im;

for (int ni = 0; ni < nImages; ni++)

{

if (pSLAM->isShutDown())

break;

// Read image and depthmap from file

std::string image_name = std::string(argv[4]) + "/images/" + vstrImageFilenamesRGB[ni];

std::cout<<"image_name "<< image_name << std::endl;

if (std::filesystem::exists(image_name)) {

std::cout << "文件存在!\n";

} else {

std::cout << "文件不存在!\n";

continue;

}

im = cv::imread(image_name, cv::IMREAD_UNCHANGED); //,cv::IMREAD_UNCHANGED);

cv::cvtColor(im, im, CV_BGR2RGB);

double tframe = vTimestamps[ni];

if (im.empty())

{

std::cerr << std::endl << "Failed to load image at: "

<< std::string(argv[4]) << "/" << vstrImageFilenamesRGB[ni] << std::endl;

return 1;

}

if (imageScale != 1.f)

{

int width = im.cols * imageScale;

int height = im.rows * imageScale;

cv::resize(im, im, cv::Size(width, height));

}

std::chrono::steady_clock::time_point t1 = std::chrono::steady_clock::now();

// Pass the image to the SLAM system

pSLAM->TrackMonocular(im, tframe, std::vector<ORB_SLAM3::IMU::Point>(), vstrImageFilenamesRGB[ni]);

std::chrono::steady_clock::time_point t2 = std::chrono::steady_clock::now();

double ttrack = std::chrono::duration_cast<std::chrono::duration<double>>(t2 - t1).count();

vTimesTrack[ni] = ttrack;

// Wait to load the next frame

double T = 0;

if (ni < nImages - 1)

T = vTimestamps[ni + 1] - tframe;

else if (ni > 0)

T = tframe - vTimestamps[ni - 1];

if (ttrack < T)

usleep((T - ttrack) * 1e6);

}

// Stop all threads

pSLAM->Shutdown();

training_thd.join();

if (use_viewer)

viewer_thd.join();

// GPU peak usage

saveGpuPeakMemoryUsage(output_dir / "GpuPeakUsageMB.txt");

// Tracking time statistics

saveTrackingTime(vTimesTrack, (output_dir / "TrackingTime.txt").string());

// Save camera trajectory

pSLAM->SaveTrajectoryTUM((output_dir / "CameraTrajectory_TUM.txt").string());

pSLAM->SaveKeyFrameTrajectoryTUM((output_dir / "KeyFrameTrajectory_TUM.txt").string());

pSLAM->SaveTrajectoryEuRoC((output_dir / "CameraTrajectory_EuRoC.txt").string());

pSLAM->SaveKeyFrameTrajectoryEuRoC((output_dir / "KeyFrameTrajectory_EuRoC.txt").string());

// pSLAM->SaveTrajectoryKITTI((output_dir / "CameraTrajectory_KITTI.txt").string());

return 0;

}

double time_stamp = 0;

void LoadImages_DJI(const std::string &strFile, std::vector<std::string> &vstrImageFilenames,

std::vector<double> &vTimestamps)

{

std::ifstream file(strFile); // 打开txt文件

if (!file) {

std::cerr << "无法打开文件!" << std::endl;

}

std::string line;

while (std::getline(file, line)) { // 逐行读取文件

std::istringstream ss(line); // 使用 stringstream 解析每一行

std::string image_name;

vTimestamps.push_back(time_stamp);

time_stamp=time_stamp+2;// 秒一张

ss >> image_name; // 提取第一列图像名称

vstrImageFilenames.push_back(image_name);

std::cout<< "time_stamp " << time_stamp << " 名字 " << image_name << std::endl;

}

}

void LoadImages(const std::string &strFile, std::vector<std::string> &vstrImageFilenames,

std::vector<double> &vTimestamps)

{

ifstream f;

f.open(strFile.c_str());

// skip first three lines

string s0;

std::getline(f,s0);

std::getline(f,s0);

std::getline(f,s0);

while(!f.eof())

{

std::string s;

std::getline(f,s);

if(!s.empty())

{

std::stringstream ss;

ss << s;

double t;

std::string sRGB;

ss >> t;

vTimestamps.push_back(t);

ss >> sRGB;

vstrImageFilenames.push_back(sRGB);

}

}

}

void saveTrackingTime(std::vector<float> &vTimesTrack, const std::string &strSavePath)

{

std::ofstream out;

out.open(strSavePath.c_str());

std::size_t nImages = vTimesTrack.size();

float totaltime = 0;

for (int ni = 0; ni < nImages; ni++)

{

out << std::fixed << std::setprecision(4)

<< vTimesTrack[ni] << std::endl;

totaltime += vTimesTrack[ni];

}

// std::sort(vTimesTrack.begin(), vTimesTrack.end());

// out << "-------" << std::endl;

// out << std::fixed << std::setprecision(4)

// << "median tracking time: " << vTimesTrack[nImages / 2] << std::endl;

// out << std::fixed << std::setprecision(4)

// << "mean tracking time: " << totaltime / nImages << std::endl;

out.close();

}

void saveGpuPeakMemoryUsage(std::filesystem::path pathSave)

{

namespace c10Alloc = c10::cuda::CUDACachingAllocator;

c10Alloc::DeviceStats mem_stats = c10Alloc::getDeviceStats(0);

c10Alloc::Stat reserved_bytes = mem_stats.reserved_bytes[static_cast<int>(c10Alloc::StatType::AGGREGATE)];

float max_reserved_MB = reserved_bytes.peak / (1024.0 * 1024.0);

c10Alloc::Stat alloc_bytes = mem_stats.allocated_bytes[static_cast<int>(c10Alloc::StatType::AGGREGATE)];

float max_alloc_MB = alloc_bytes.peak / (1024.0 * 1024.0);

std::ofstream out(pathSave);

out << "Peak reserved (MB): " << max_reserved_MB << std::endl;

out << "Peak allocated (MB): " << max_alloc_MB << std::endl;

out.close();

}

浙公网安备 33010602011771号

浙公网安备 33010602011771号