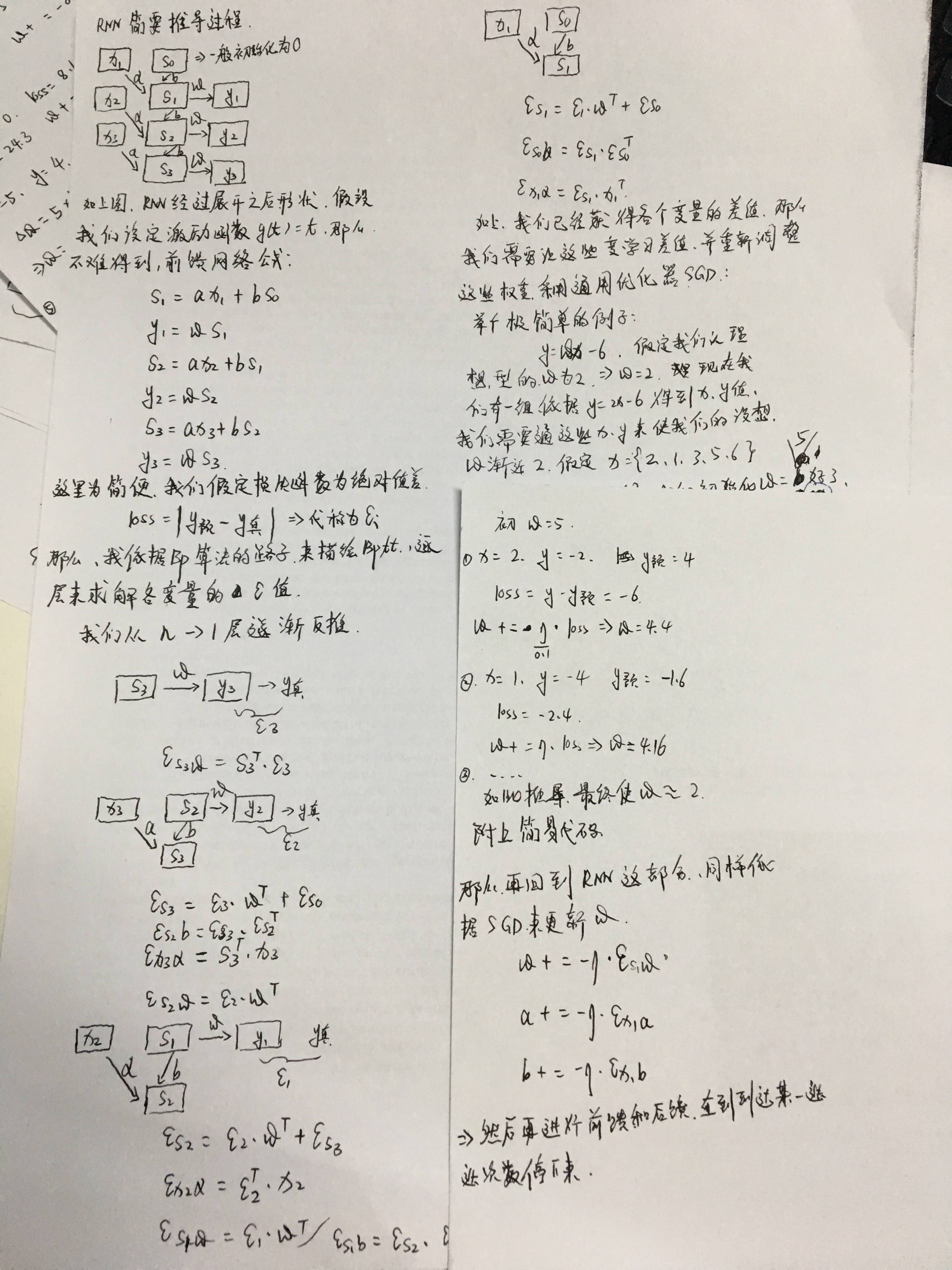

A simple derivation of the RNN formulas (BPTT, backpropagation through time)
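For readers who cannot view the image, here is a sketch of the standard BPTT equations for a vanilla RNN. The notation is an assumption and may differ from the figure above: input weights $U$, recurrent weights $W$, output weights $V$, biases $b$ and $c$, tanh hidden units, and a softmax output with cross-entropy loss summed over time steps $t = 1, \dots, T$.

```latex
\begin{align*}
h_t &= \tanh(U x_t + W h_{t-1} + b), \qquad
o_t = V h_t + c, \qquad
\hat{y}_t = \operatorname{softmax}(o_t), \qquad
L = \sum_{t=1}^{T} L_t \\
\frac{\partial L}{\partial o_t} &= \hat{y}_t - y_t \\
\delta_t \equiv \frac{\partial L}{\partial h_t}
  &= V^{\top}(\hat{y}_t - y_t)
   + W^{\top} \operatorname{diag}\!\left(1 - h_{t+1}^{2}\right) \delta_{t+1},
   \qquad \delta_{T+1} = 0 \\
\frac{\partial L}{\partial V} &= \sum_{t=1}^{T} (\hat{y}_t - y_t)\, h_t^{\top} \\
\frac{\partial L}{\partial W} &= \sum_{t=1}^{T}
   \operatorname{diag}\!\left(1 - h_t^{2}\right) \delta_t\, h_{t-1}^{\top} \\
\frac{\partial L}{\partial U} &= \sum_{t=1}^{T}
   \operatorname{diag}\!\left(1 - h_t^{2}\right) \delta_t\, x_t^{\top}
\end{align*}
```

The recursion for $\delta_t$ runs backward from $t = T$ to $t = 1$, which is where the name "backpropagation through time" comes from.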

Below is a simple piece of code that fits y = 2x - b (with b fixed at 6, so only the weight w is learned).

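```python
import numpy as np

x = np.asarray([2, 1, 3, 5, 6])
y = np.zeros((1, 5))
learning_rate = 0.1
w = 5


def func(x):
    # Ground-truth function to fit: y = 2x - 6.
    return 2 * x - 6


# Build the targets; func must be defined before this loop runs.
for i in range(len(x)):
    y[0][i] = func(x[i])


def forward(w, x):
    # Model prediction; the bias 6 is fixed and only w is learned.
    return w * x - 6


def backward(w, x, y):
    pred_y = forward(w, x)
    error = y - pred_y
    # Step by learning_rate * error. The exact squared-error gradient would
    # also carry a factor x; omitting it still converges here because every
    # x is positive and learning_rate * x stays below 1.
    w += learning_rate * error
    return w


def train(w):
    for epoch in range(5):
        for i in range(len(x)):
            print('w = {} ,pred_y = {} ,y ={}'.format(w, forward(w, x[i]), y[0][i]))
            w = backward(w, x[i], y[0][i])


if __name__ == '__main__':
    train(w)
```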

Output of a run (the exact float digits depend on the Python version):

```
w = 5 ,pred_y = 4 ,y =-2.0
w = 4.4 ,pred_y = -1.6 ,y =-4.0
w = 4.16 ,pred_y = 6.48 ,y =0.0
w = 3.512 ,pred_y = 11.56 ,y =4.0
w = 2.756 ,pred_y = 10.536 ,y =6.0
w = 2.3024 ,pred_y = -1.3952 ,y =-2.0
w = 2.24192 ,pred_y = -3.75808 ,y =-4.0
w = 2.217728 ,pred_y = 0.653184 ,y =0.0
w = 2.1524096 ,pred_y = 4.762048 ,y =4.0
w = 2.0762048 ,pred_y = 6.4572288 ,y =6.0
w = 2.03048192 ,pred_y = -1.93903616 ,y =-2.0
w = 2.024385536 ,pred_y = -3.975614464 ,y =-4.0
w = 2.0219469824 ,pred_y = 0.0658409472 ,y =0.0
w = 2.01536288768 ,pred_y = 4.0768144384 ,y =4.0
w = 2.00768144384 ,pred_y = 6.04608866304 ,y =6.0
w = 2.00307257754 ,pred_y = -1.99385484493 ,y =-2.0
w = 2.00245806203 ,pred_y = -3.99754193797 ,y =-4.0
w = 2.00221225583 ,pred_y = 0.00663676747776 ,y =0.0
w = 2.00154857908 ,pred_y = 4.00774289539 ,y =4.0
w = 2.00077428954 ,pred_y = 6.00464573723 ,y =6.0
w = 2.00030971582 ,pred_y = -1.99938056837 ,y =-2.0
w = 2.00024777265 ,pred_y = -3.99975222735 ,y =-4.0
w = 2.00022299539 ,pred_y = 0.000668986161758 ,y =0.0
w = 2.00015609677 ,pred_y = 4.00078048386 ,y =4.0
w = 2.00007804839 ,pred_y = 6.00046829031 ,y =6.0
```
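The update in the listing steps by `learning_rate * error` and leaves out the factor `x` that the gradient of a squared-error loss would carry. As a point of comparison, here is a minimal sketch of the same fit with the full gradient. The function name `fit_weight`, the loss `0.5 * error**2`, the smaller learning rate `0.01`, and the epoch count are assumptions chosen for illustration, not part of the original code.

```python
import numpy as np


def fit_weight(x, y, b=6.0, w=5.0, learning_rate=0.01, epochs=50):
    """Per-sample gradient descent on 0.5 * (y - (w*x - b))**2 (hypothetical helper)."""
    for _ in range(epochs):
        for xi, yi in zip(x, y):
            error = yi - (w * xi - b)
            # d/dw of 0.5 * error**2 is -error * xi, so step in the opposite direction.
            w += learning_rate * error * xi
    return w


x = np.array([2.0, 1.0, 3.0, 5.0, 6.0])
y = 2 * x - 6  # same targets as in the listing above
w_fit = fit_weight(x, y)
print(w_fit)
```

With the factor `x` included, each step multiplies the distance `w - 2` by `1 - learning_rate * x**2`, so the learning rate has to be small enough that this stays between -1 and 1 for the largest `x`. At `learning_rate = 0.1` and `x = 6` the factor would be -2.6 and the fit would diverge, which is why this sketch uses 0.01.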

Programming is a joy; I enjoy the fun that code brings me!
