Prometheus, node-exporter, cAdvisor, Grafana and Alertmanager: installation and service discovery


This setup runs everything in Docker.

Preparation

Pull the images

docker pull google/cadvisor
docker pull prom/prometheus
docker pull grafana/grafana
docker pull prom/alertmanager

Create the persistent data directories

mkdir -p /home/prometheus/{config,data,rules}

chmod -R 777 /home/prometheus/data

mkdir /home/grafana

mkdir -p /home/alertmanager/data

chmod -R 777 /home/alertmanager/data

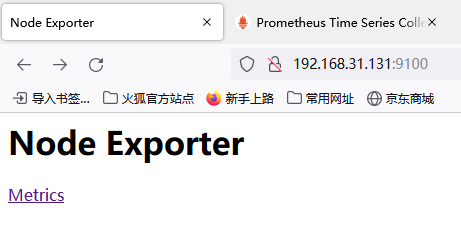

Start node-exporter (host and hardware metrics)

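docker run -d -p 9100:9100 \
  -v /proc:/host/proc:ro \
  -v /sys:/host/sys:ro \
  -v /:/rootfs:ro \
  --name=node-exporter \
  prom/node-exporter \
  --path.procfs=/host/proc \
  --path.sysfs=/host/sys \
  --path.rootfs=/rootfs

The --path.* flags point node-exporter at the host's /proc, /sys and root filesystem mounted above. Without them the mounts are unused, and node-exporter reads the container's own /proc, /sys and root filesystem.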

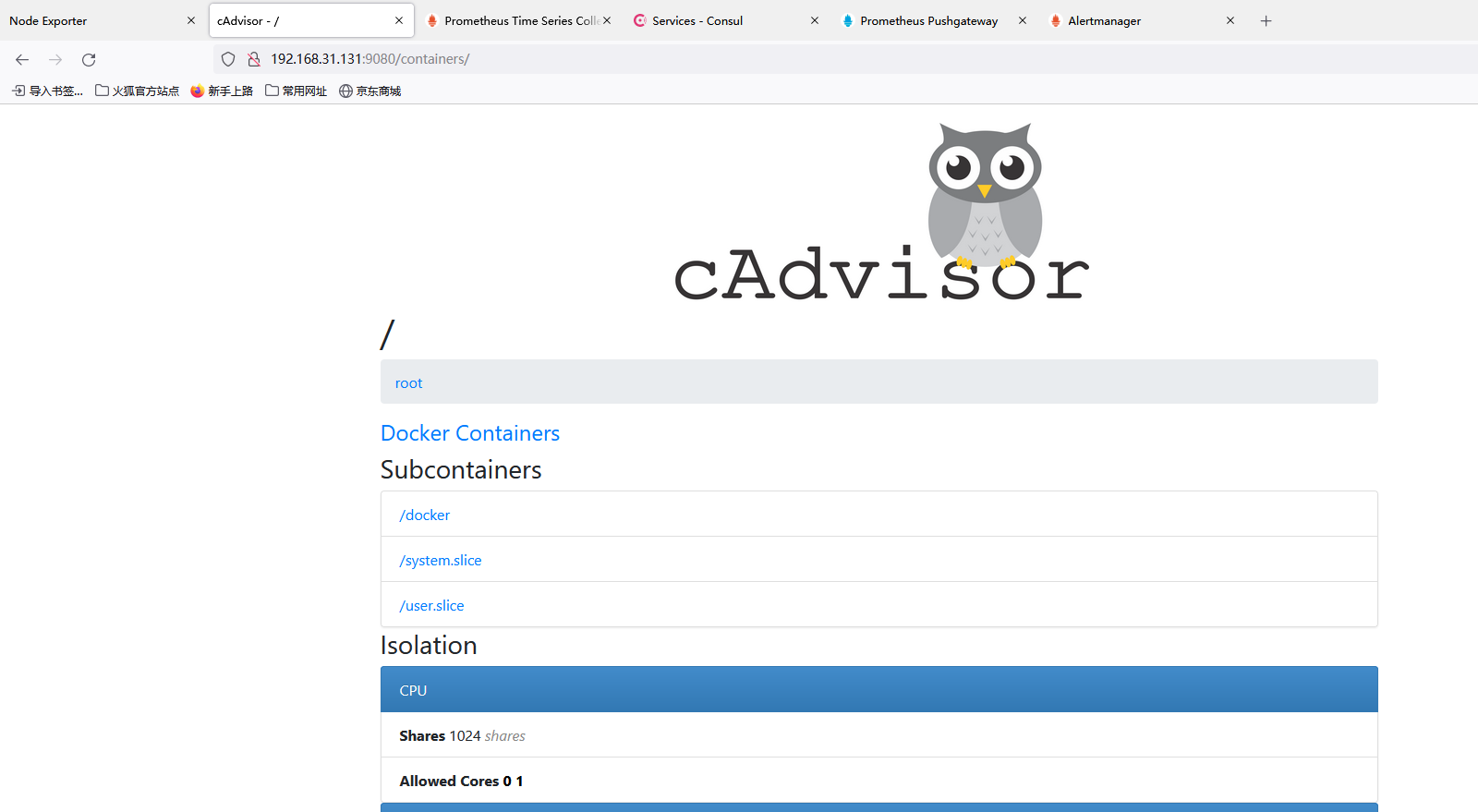

Start cAdvisor (container metrics)

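docker run \
  -v /:/rootfs:ro \
  -v /var/run:/var/run:rw \
  -v /sys:/sys:ro \
  -v /var/lib/docker/:/var/lib/docker:ro \
  -p 9080:8080 \
  --detach=true \
  --name=cadvisor \
  google/cadvisor

Here --detach=true runs the container in the background (the same as -d). cAdvisor listens on port 8080 inside the container, which is published as port 9080 on the host.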

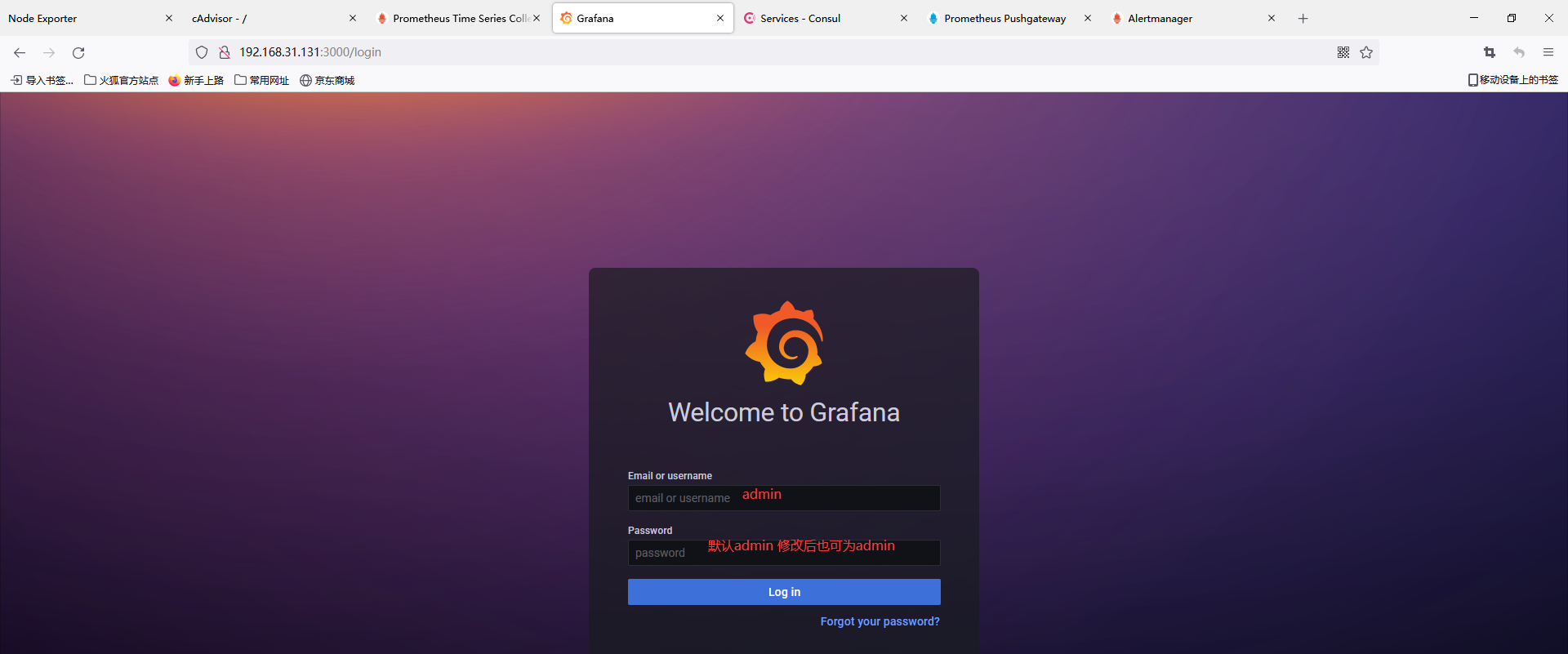

Start Grafana

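docker run -d -p 3000:3000 \
  --user=root \
  --name=grafana \
  -v /home/grafana:/var/lib/grafana \
  grafana/grafana

--user=root runs Grafana as root. This lets it write to /home/grafana, which is owned by root on the host. The image's default non-root user cannot write there.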
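A container counts as "started" before the service inside it is actually listening. A small bash helper can poll each endpoint until it answers. The sketch below assumes `curl` is installed on the host; the function name `wait_http` and its retry defaults are hypothetical.

```shell
#!/usr/bin/env bash
# Poll an HTTP endpoint until it answers with a status below 400, or give up.
# Usage: wait_http URL [RETRIES] [DELAY_SECONDS]   (hypothetical helper)
wait_http() {
  local url=$1 retries=${2:-30} delay=${3:-2} i
  for ((i = 1; i <= retries; i++)); do
    # -f: fail on HTTP >= 400; -sS: silent except errors; discard the body
    if curl -fsS -o /dev/null --max-time 5 "$url"; then
      echo "up: $url"
      return 0
    fi
    sleep "$delay"
  done
  echo "not reachable after $retries attempts: $url" >&2
  return 1
}
```

With the ports published above, you would call `wait_http http://localhost:9100/metrics` (node-exporter), `wait_http http://localhost:9080/healthz` (cAdvisor) and `wait_http http://localhost:3000/api/health` (Grafana).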

Start Prometheus

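Get a prometheus.yml to start from (or simply create a new one):

docker run -d -p 9090:9090 --name prometheus prom/prometheus
docker cp prometheus:/etc/prometheus/prometheus.yml /home/prometheus/
docker rm -f prometheus

Then start Prometheus with the host directory mounted:

docker run -d -p 9090:9090 \
  --name prometheus \
  -v /home/prometheus:/etc/prometheus \
  -v /home/prometheus/prometheus.yml:/etc/prometheus/prometheus.yml \
  prom/prometheus \
  --web.enable-lifecycle \
  --config.file="/etc/prometheus/prometheus.yml" \
  --storage.tsdb.path="/etc/prometheus/data"

- --web.enable-lifecycle: enables hot reloading of the configuration with curl -X POST http://localhost:9090/-/reload. Any arguments after the image name replace the image's default flags, so --config.file must also be given explicitly. Otherwise Prometheus fails to start.
- --config.file: path of the configuration file.
- --storage.tsdb.path="/etc/prometheus/data": where the data is stored. On the host this is /home/prometheus/data, which was made writable earlier with chmod 777.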
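If you reload a broken prometheus.yml, the reload fails: Prometheus stays on its previous configuration and reports an error. The bash sketch below validates the file first with `promtool`, which ships in the prom/prometheus image, and calls /-/reload only if the check passes. The container name and URL default to the values used above; `prom_reload` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Validate the config inside the container, then hot-reload it.
# Usage: prom_reload [CONTAINER] [BASE_URL]   (hypothetical helper)
prom_reload() {
  local container=${1:-prometheus} url=${2:-http://localhost:9090}
  # "promtool check config" also checks the files listed under rule_files
  if ! docker exec "$container" promtool check config /etc/prometheus/prometheus.yml; then
    echo "config check failed, not reloading" >&2
    return 1
  fi
  # Requires --web.enable-lifecycle; -f makes curl fail if the reload is rejected
  curl -fsS -X POST "$url/-/reload"
}
```

Because it runs the same check, this can replace the plain `curl -X POST .../-/reload` used after each edit later on.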

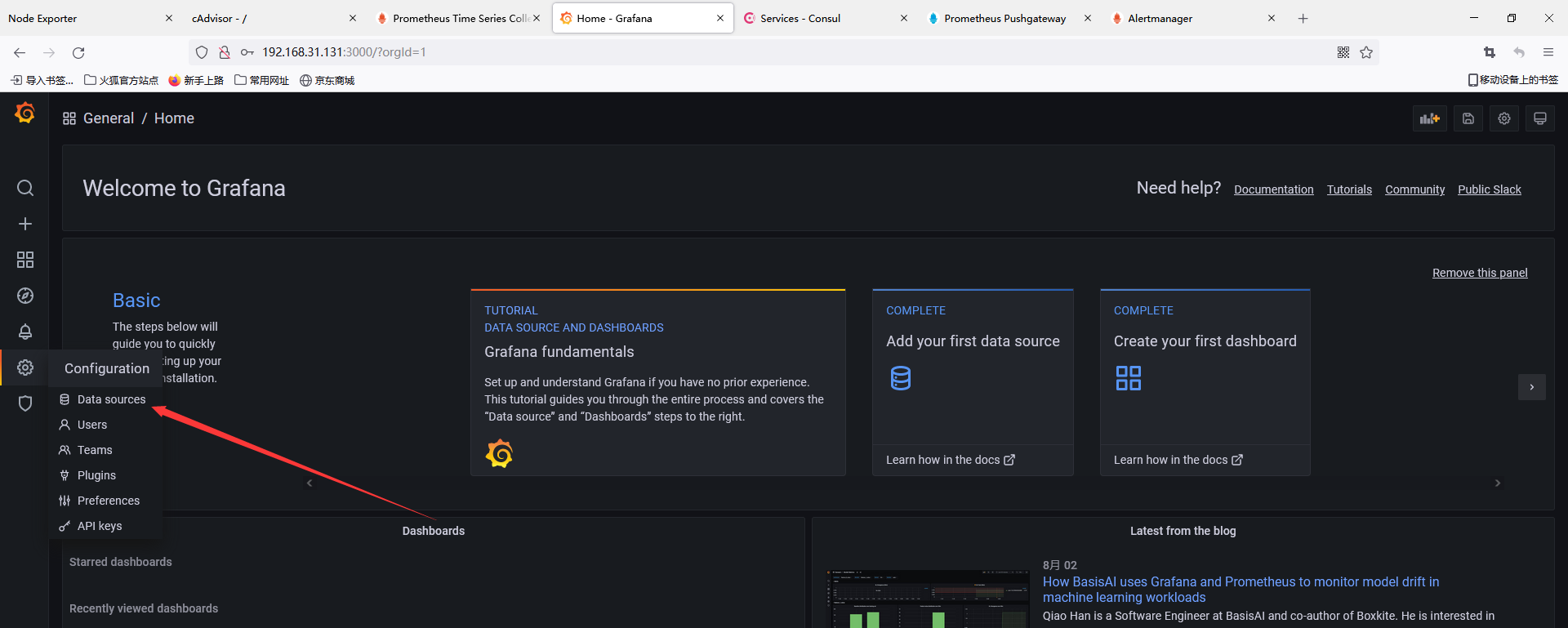

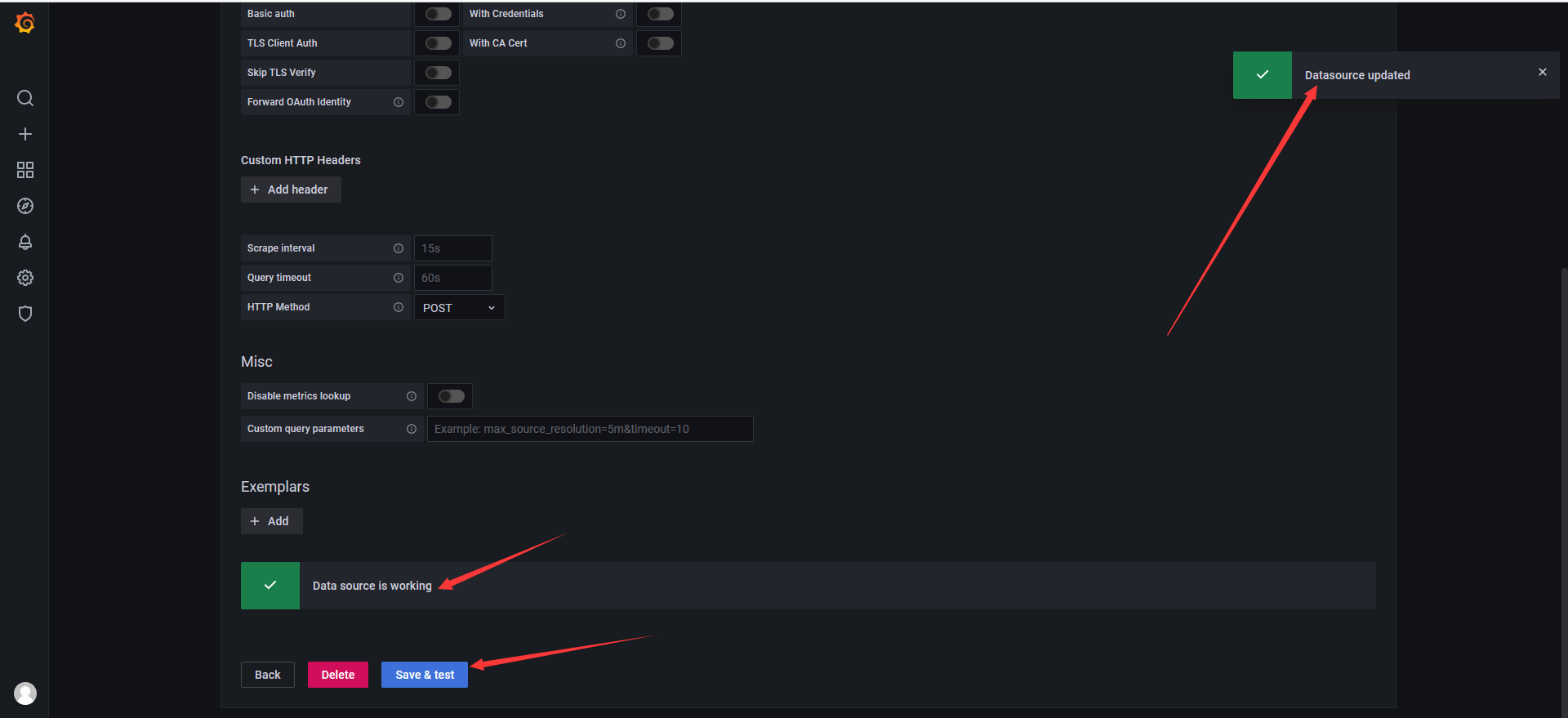

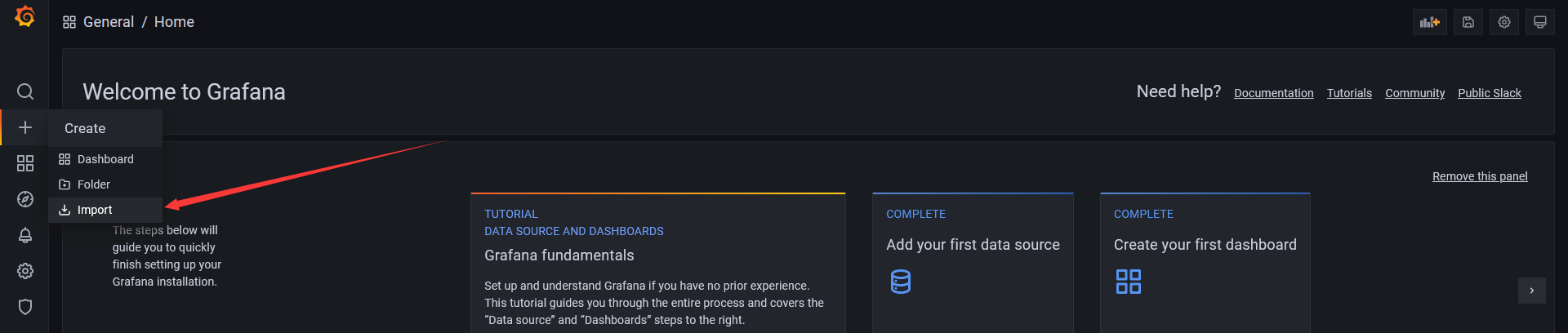

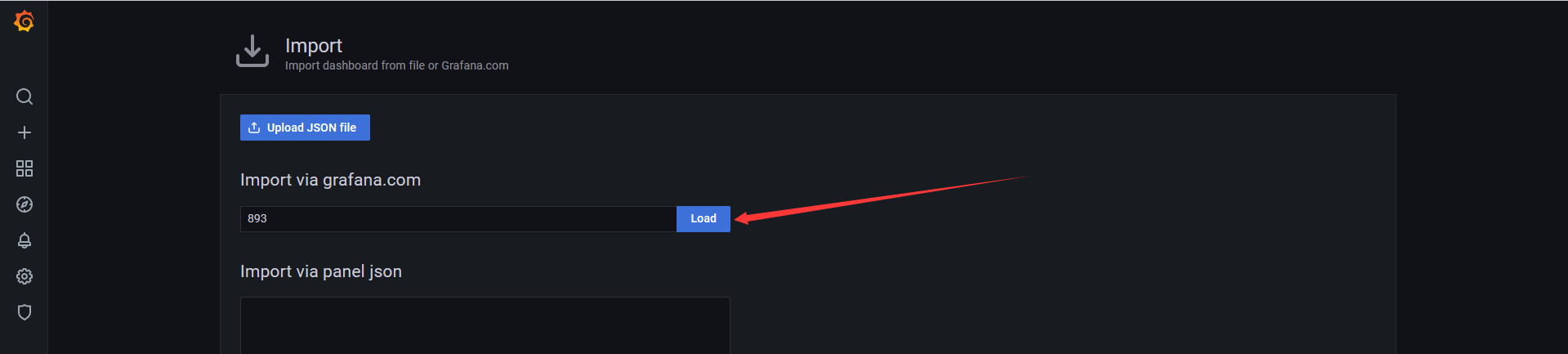

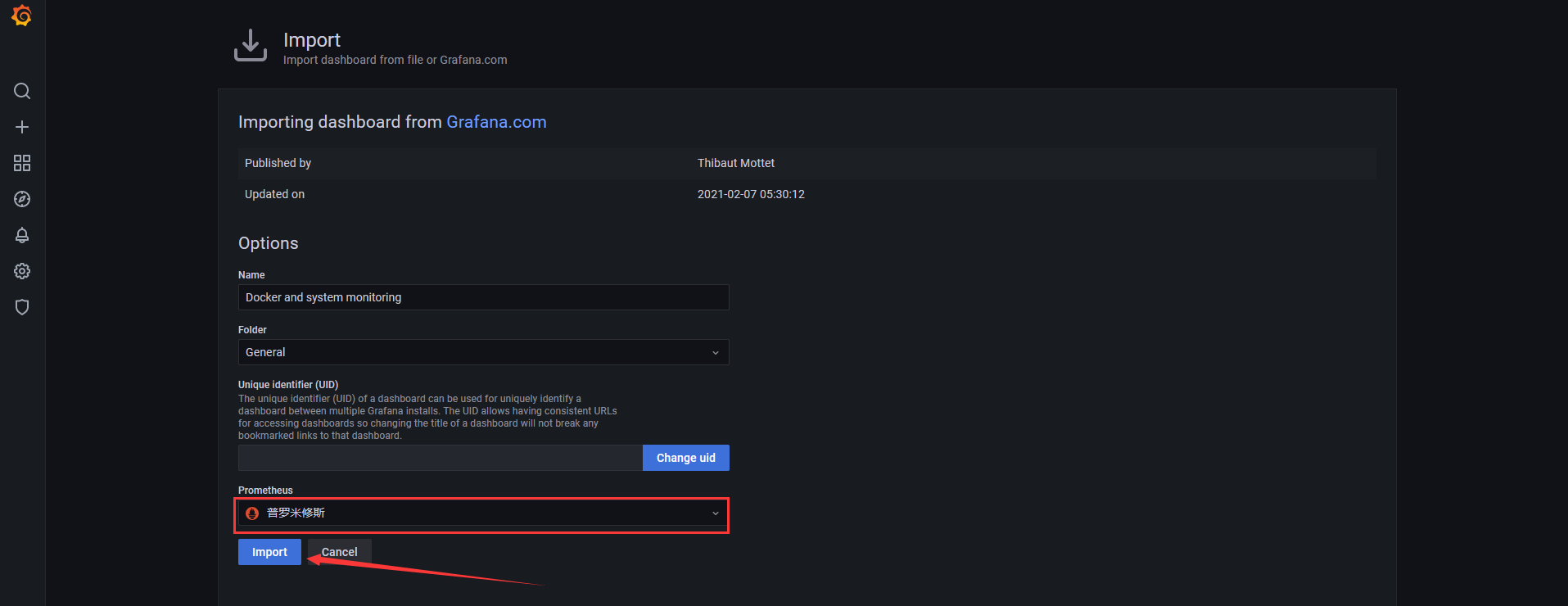

Configure Prometheus to scrape Grafana

[root@localhost prometheus]# vim prometheus.yml

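global:                     # global settings; individual jobs can override them
  scrape_interval: 15s      # scrape every target every 15 s (collection frequency)
  evaluation_interval: 15s  # evaluate rules every 15 s, e.g. a "filesystem usage > 75%" alert is checked every 15 s

alerting:                   # Alertmanager connection
  alertmanagers:
    - static_configs:       # static targets
      # - targets: ['192.168.31.131:9093']  # Alertmanager address (not enabled yet)

rule_files:                 # alert rules
  # - /etc/prometheus/rules/*.rules  # rule file path (not enabled yet)

scrape_configs:             # scrape configuration
  - job_name: 'grafana'     # static discovery; job name, must be unique
    scrape_interval: 5s     # overrides the global scrape interval
    static_configs:         # static targets
      - targets: ['192.168.31.131:3000']  # metrics path defaults to /metrics
        labels:             # extra labels
          instance: grafana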

curl -X POST http://localhost:9090/-/reload  # hot-reload the Prometheus configuration

Start the Pushgateway

docker run -d \
  --name pushgateway \
  -p 9091:9091 \
  prom/pushgateway

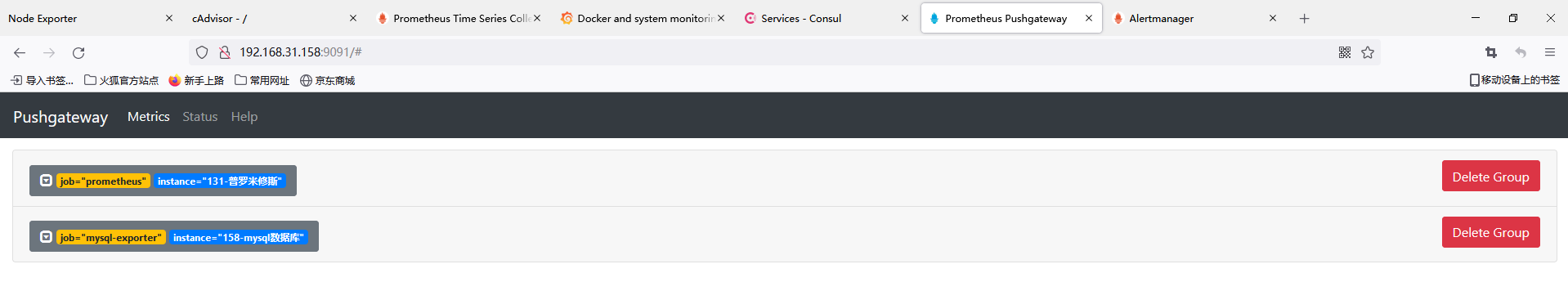

Push exporter metrics to the Pushgateway

curl http://localhost:9090/metrics | curl --data-binary @- http://192.168.31.158:9091/metrics/job/prometheus/instance/131-普罗米修斯
curl http://192.168.31.158:9104/metrics | curl --data-binary @- http://192.168.31.158:9091/metrics/job/mysql/instance/158-MYSQL

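Note: metrics pushed to the Pushgateway are not listed as targets of their own in the Prometheus web UI. Only the Pushgateway itself appears there. To see the pushed metrics, query them with PromQL.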

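To delete a pushed group, send DELETE to the Pushgateway (port 9091, not the exporter's 9104). Use the same grouping key (job and instance) as the push:

curl -X DELETE http://192.168.31.158:9091/metrics/job/mysql/instance/158-MYSQL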
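The Pushgateway also accepts hand-written samples in the text exposition format, not just a relayed /metrics page. The bash sketch below pushes one gauge and then deletes the group again. The function names and the sample metric are hypothetical; the gateway address is whatever your Pushgateway uses (192.168.31.158:9091 above).

```shell
#!/usr/bin/env bash
# Push one gauge sample to a Pushgateway group.
# Usage: push_metric GATEWAY JOB INSTANCE METRIC VALUE   (hypothetical helper)
push_metric() {
  local gw=$1 job=$2 instance=$3 metric=$4 value=$5
  # Text exposition format; every line, including the last, must end with \n
  printf '# TYPE %s gauge\n%s %s\n' "$metric" "$metric" "$value" |
    curl -fsS --data-binary @- "$gw/metrics/job/$job/instance/$instance"
}

# Delete the group identified by exactly this grouping key (job AND instance).
# Usage: delete_group GATEWAY JOB INSTANCE   (hypothetical helper)
delete_group() {
  curl -fsS -X DELETE "$1/metrics/job/$2/instance/$3"
}
```

For example: `push_metric http://192.168.31.158:9091 mysql 158-MYSQL demo_metric 42`, followed by `delete_group http://192.168.31.158:9091 mysql 158-MYSQL`.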

Configure Prometheus to scrape the Pushgateway

[root@localhost prometheus]# vim prometheus.yml

scrape_configs:             # scrape configuration
  - job_name: 'grafana'     # static discovery; job name, must be unique
    scrape_interval: 5s     # overrides the global scrape interval
    static_configs:         # static targets
      - targets: ['192.168.31.131:3000']  # metrics path defaults to /metrics
        labels:             # extra labels
          instance: grafana

  - job_name: pushgateway   # metrics relayed through the Pushgateway
    static_configs:
      - targets: ['192.168.31.158:9091']
        labels:
          instance: pushgateway

curl -X POST http://localhost:9090/-/reload  # hot-reload the Prometheus configuration

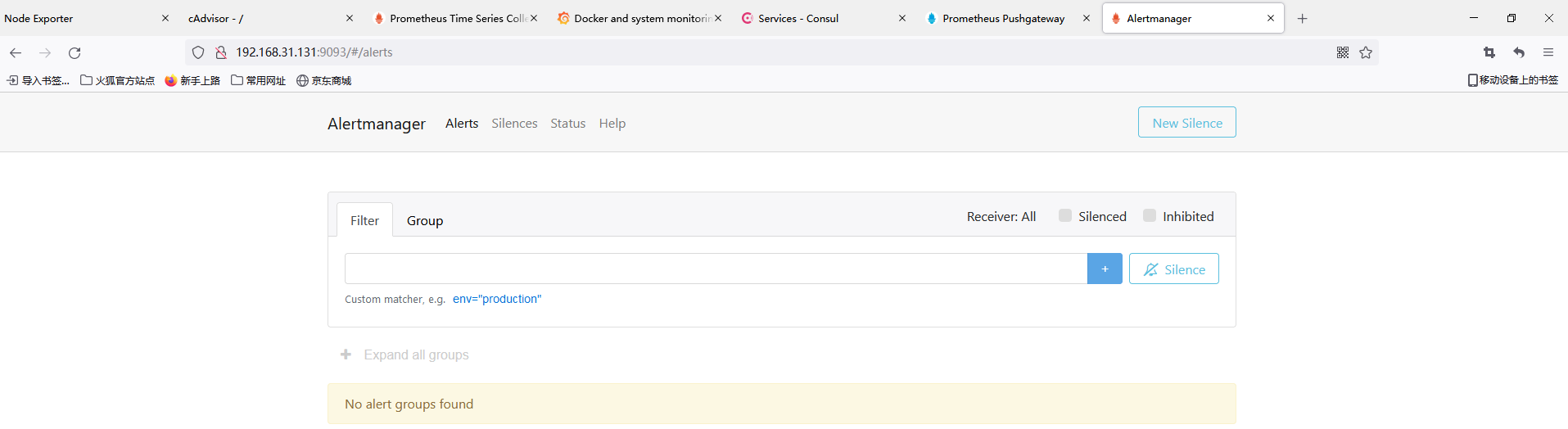

Start Alertmanager

Copy the default alertmanager.yml out of a throwaway container, then run Alertmanager with the host directory mounted:

docker run --name alertmanager -d -p 9093:9093 prom/alertmanager
docker cp alertmanager:/etc/alertmanager/alertmanager.yml /home/alertmanager/
docker rm -f alertmanager
docker run -d --name alertmanager -p 9093:9093 \
  -v /home/alertmanager:/etc/alertmanager \
  prom/alertmanager \
  --storage.path="/etc/alertmanager/data" \
  --config.file="/etc/alertmanager/alertmanager.yml"

- --storage.path: data directory.
- --config.file: path of the configuration file.
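You can confirm that Alertmanager accepts alerts before any real rule fires by posting a synthetic alert to its v2 API. `POST /api/v2/alerts` takes a JSON array of alerts. In this minimal bash sketch the alert name, labels and the `send_test_alert` name are all made up for the test.

```shell
#!/usr/bin/env bash
# Post one synthetic alert to Alertmanager's v2 API.
# Usage: send_test_alert [BASE_URL] [ALERTNAME]   (hypothetical helper)
send_test_alert() {
  local url=${1:-http://localhost:9093} name=${2:-ManualTestAlert} body
  # startsAt/endsAt are omitted; Alertmanager fills them in
  body=$(printf '[{"labels":{"alertname":"%s","severity":"page"},"annotations":{"summary":"manual test alert"}}]' "$name")
  curl -fsS -X POST -H 'Content-Type: application/json' -d "$body" "$url/api/v2/alerts"
}
```

After `send_test_alert http://192.168.31.131:9093`, the alert should be listed in the Alertmanager UI on port 9093.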

Connect Prometheus to Alertmanager

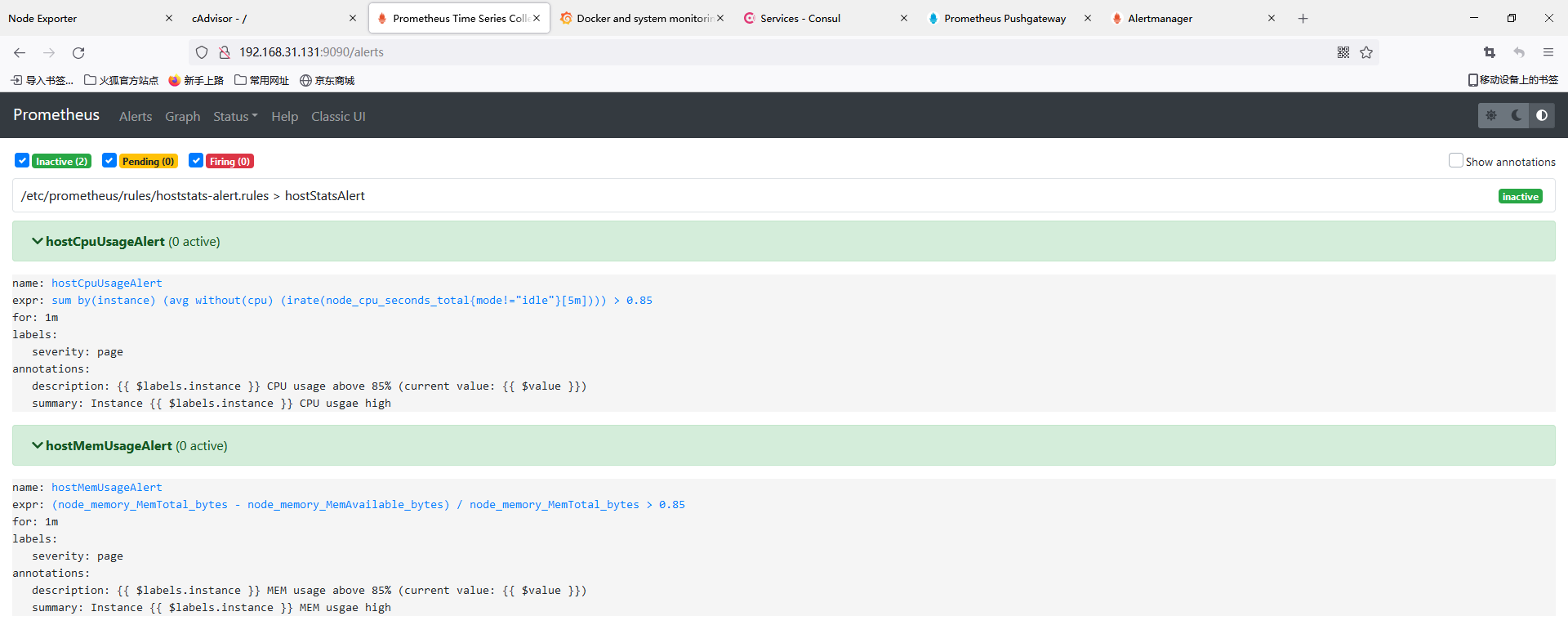

[root@localhost rules]# cat hoststats-alert.rules

groups:
  - name: hostStatsAlert
    rules:
      - alert: hostCpuUsageAlert
        expr: sum(avg without (cpu)(irate(node_cpu_seconds_total{mode!="idle"}[5m]))) by (instance) > 0.85
        for: 1m
        labels:
          severity: page
        annotations:
          summary: "Instance {{ $labels.instance }} CPU usage high"
          description: "{{ $labels.instance }} CPU usage above 85% (current value: {{ $value }})"
      - alert: hostMemUsageAlert
        expr: (node_memory_MemTotal_bytes - node_memory_MemAvailable_bytes)/node_memory_MemTotal_bytes > 0.85
        for: 1m
        labels:
          severity: page
        annotations:
          summary: "Instance {{ $labels.instance }} MEM usage high"
          description: "{{ $labels.instance }} MEM usage above 85% (current value: {{ $value }})"
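Written as formulas, the two rules compute the following per instance $i$, with $N_i$ CPUs $c$ and CPU modes $m$. This is a reading of the PromQL above, not something Prometheus outputs:

$$
\mathrm{cpu}_i = \frac{1}{N_i}\sum_{c=1}^{N_i}\ \sum_{m \neq \mathrm{idle}} \operatorname{irate}\!\left(\texttt{node\_cpu\_seconds\_total}\{i, c, m\}\right) > 0.85
$$

$$
\mathrm{mem}_i = \frac{\mathrm{MemTotal}_i - \mathrm{MemAvailable}_i}{\mathrm{MemTotal}_i} > 0.85
$$

Worked example: take a 4-CPU host whose per-CPU non-idle rates are 0.95, 0.90, 0.80 and 0.85. Then $\mathrm{cpu}_i = 3.50 / 4 = 0.875 > 0.85$, so the alert becomes pending and fires once the condition has held for `for: 1m`. Because irate uses only the last two samples in the 5m window, the value reacts quickly to short spikes.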

[root@localhost prometheus]# vim prometheus.yml

global:                     # global settings; individual jobs can override them
  scrape_interval: 15s      # scrape every target every 15 s (collection frequency)
  evaluation_interval: 15s  # evaluate rules every 15 s, e.g. a "filesystem usage > 75%" alert is checked every 15 s

alerting:                   # Alertmanager connection
  alertmanagers:
    - static_configs:       # static targets
        - targets: ['192.168.31.131:9093']  # Alertmanager address

rule_files:                 # alert rules
  - /etc/prometheus/rules/*.rules  # rule file path

curl -X POST http://localhost:9090/-/reload  # hot-reload the Prometheus configuration

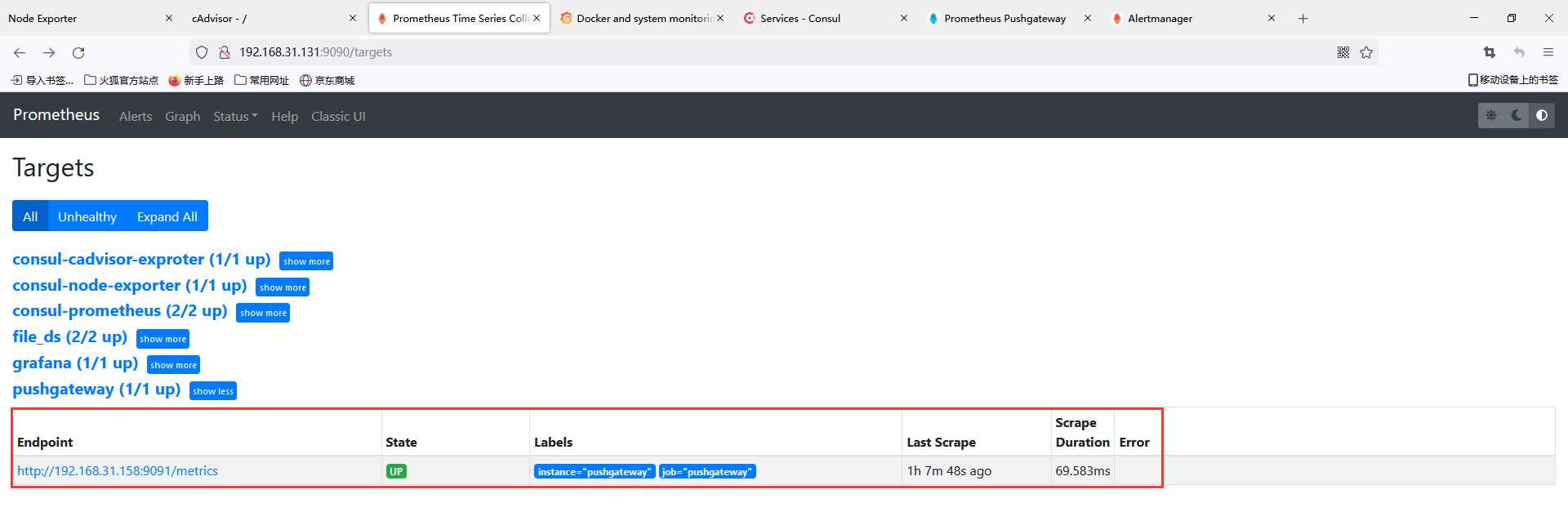

Prometheus service discovery

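Prometheus can get its scrape targets from static configuration or through service discovery, and it supports many service-discovery mechanisms. The main ones are:

- static_configs: static configuration
- kubernetes_sd_configs: Kubernetes-based service discovery
- consul_sd_configs: Consul service discovery
- dns_sd_configs: DNS service discovery
- file_sd_configs: file-based service discovery

How the most common ones behave:

- Static configuration (static_configs): every new target that needs monitoring has to be added to the configuration by hand.
- Consul discovery (consul_sd_configs): Prometheus keeps watching Consul. Whenever the registered services change, it automatically picks up all targets registered in Consul.
- Kubernetes discovery (kubernetes_sd_configs): Prometheus talks to the Kubernetes API and dynamically discovers every monitorable target deployed in Kubernetes.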

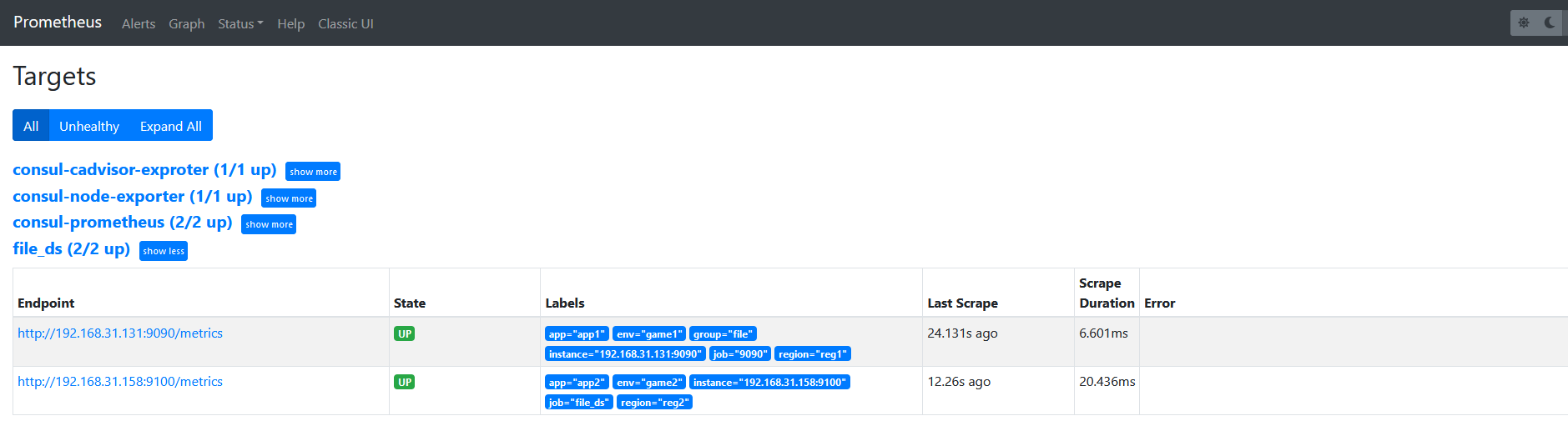

Configure file-based discovery

[root@localhost config]# cat /home/prometheus/config/target.yml

- targets: ['192.168.31.131:9090']  # Prometheus itself (monitors Prometheus)
  labels:
    app: 'app1'
    env: 'game1'
    region: 'reg1'
- targets: ['192.168.31.158:9100']  # node-exporter on another server (monitors that host)
  labels:
    app: 'app2'
    env: 'game2'
    region: 'reg2'
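Prometheus picks up changes to these files by itself, so adding a host only requires editing the file. The bash sketch below appends one target group and swaps the file in atomically (a temp file in the same directory, then `mv`), so Prometheus never reads a half-written file. The function name and its arguments are hypothetical; the labels mirror the example above.

```shell
#!/usr/bin/env bash
# Append one target group to a file_sd YAML file and replace it atomically.
# Usage: add_file_sd_target FILE HOST:PORT APP ENV REGION   (hypothetical helper)
add_file_sd_target() {
  local file=$1 target=$2 app=$3 env=$4 region=$5 tmp
  # Hidden temp name: does not match the *.yml glob Prometheus watches
  tmp=$(mktemp "$(dirname "$file")/.target.XXXXXX") || return 1
  if [ -f "$file" ]; then
    cat "$file" > "$tmp"
  fi
  cat >> "$tmp" <<EOF
- targets: ['$target']
  labels:
    app: '$app'
    env: '$env'
    region: '$region'
EOF
  # mktemp creates mode 0600, but the Prometheus container runs as "nobody"
  chmod 644 "$tmp"
  mv "$tmp" "$file"
}
```

For example: `add_file_sd_target /home/prometheus/config/target.yml 192.168.31.158:9100 app2 game2 reg2`.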

[root@localhost prometheus]# cat /home/prometheus/prometheus.yml

global:                     # global settings; individual jobs can override them
  scrape_interval: 15s      # scrape every target every 15 s (collection frequency)
  evaluation_interval: 15s  # evaluate rules every 15 s, e.g. a "filesystem usage > 75%" alert is checked every 15 s

alerting:                   # Alertmanager connection
  alertmanagers:
    - static_configs:       # static targets
        - targets: ['192.168.31.131:9093']  # Alertmanager address

rule_files:                 # alert rules
  - /etc/prometheus/rules/*.rules  # rule file path

scrape_configs:             # scrape configuration
  - job_name: 'grafana'     # static discovery; job name, must be unique
    scrape_interval: 5s     # overrides the global scrape interval
    static_configs:
      - targets: ['192.168.31.131:3000']  # metrics path defaults to /metrics
        labels:
          instance: grafana

  - job_name: pushgateway   # metrics relayed through the Pushgateway
    static_configs:
      - targets: ['192.168.31.158:9091']
        labels:
          instance: pushgateway

  - job_name: 'file_ds'     # file-based discovery; job name, must be unique
    file_sd_configs:
      - files: ['/etc/prometheus/config/*.yml']  # every .yml file in the config directory
        refresh_interval: 5s  # also re-read the files every 5 s

curl -X POST http://localhost:9090/-/reload  # hot-reload the Prometheus configuration
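After the reload, the new targets should appear on the Status → Targets page. The same information is available from the /api/v1/targets HTTP API. The bash sketch below avoids jq and just counts active targets by health (up, down, unknown). The function name is hypothetical, and its grep-based JSON handling is meant only for a quick check.

```shell
#!/usr/bin/env bash
# Count active scrape targets by health using Prometheus's HTTP API.
# Usage: target_health [BASE_URL]   (hypothetical helper)
target_health() {
  local url=${1:-http://localhost:9090}
  curl -fsS "$url/api/v1/targets?state=active" |
    grep -o '"health":"[a-z]*"' |          # one line per target
    sed 's/"health":"\([a-z]*\)"/\1/' |    # keep just up/down/unknown
    sort | uniq -c
}
```

A nonzero "down" count means at least one target (for example a node-exporter listed in target.yml) is not answering on its /metrics endpoint.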

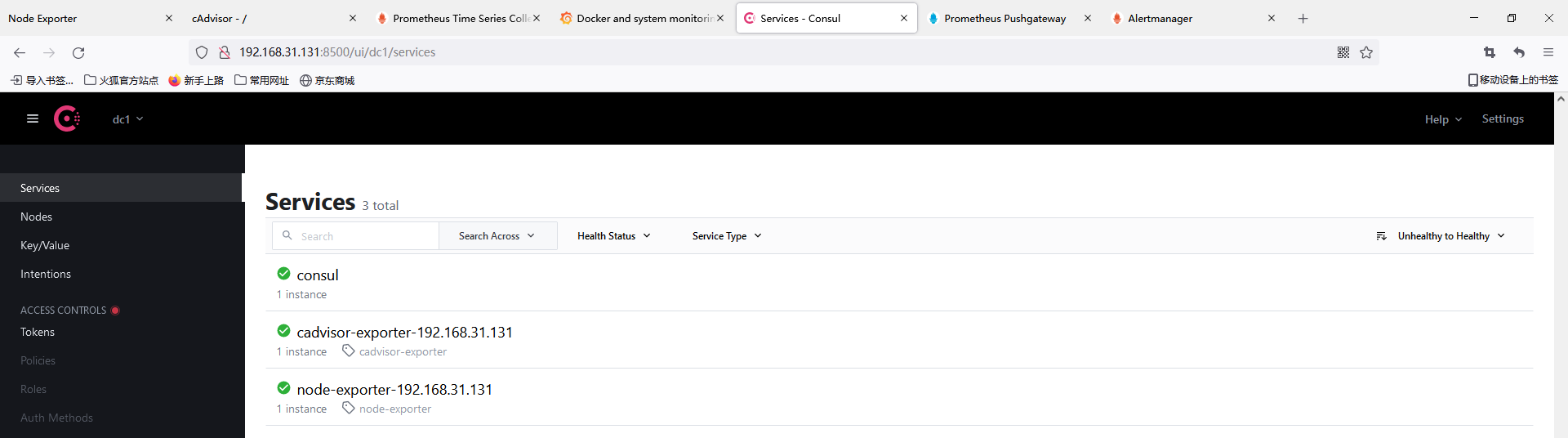

Configure Consul-based service discovery

Install Consul

wget https://releases.hashicorp.com/consul/1.6.1/consul_1.6.1_linux_amd64.zip
unzip consul_1.6.1_linux_amd64.zip
./consul agent -dev

or run it in Docker:

docker run --name consul -d -p 8500:8500 consul

Deregister a service

curl -X PUT http://192.168.31.131:8500/v1/agent/service/deregister/node-exporter

Here node-exporter is the service ID, i.e. the "ID": "node-exporter" value used when the service was registered.

Register a service

#vim /home/prometheus/config/consul-1.json

{
  "ID": "node-exporter",
  "Name": "node-exporter-192.168.31.131",
  "Tags": ["node-exporter"],
  "Address": "192.168.31.131",
  "Port": 9100,
  "Meta": {
    "app": "spring-boot",
    "team": "appgroup",
    "project": "bigdata"
  },
  "EnableTagOverride": false,
  "Check": {
    "HTTP": "http://192.168.31.131:9100/metrics",
    "Interval": "10s"
  },
  "Weights": {
    "Passing": 10,
    "Warning": 1
  }
}

Register (or update) the service:

curl --request PUT --data @/home/prometheus/config/consul-1.json "http://192.168.31.131:8500/v1/agent/service/register?replace-existing-checks=1"

$ vim /home/prometheus/config/consul-2.json

{
  "ID": "cadvisor-exporter",
  "Name": "cadvisor-exporter-192.168.31.131",
  "Tags": ["cadvisor-exporter"],
  "Address": "192.168.31.131",
  "Port": 9080,
  "Meta": {
    "app": "docker",
    "team": "cloudgroup",
    "project": "docker-service"
  },
  "EnableTagOverride": false,
  "Check": {
    "HTTP": "http://192.168.31.131:9080/metrics",
    "Interval": "10s"
  },
  "Weights": {
    "Passing": 10,
    "Warning": 1
  }
}

Register the service:

curl --request PUT --data @/home/prometheus/config/consul-2.json "http://192.168.31.131:8500/v1/agent/service/register?replace-existing-checks=1"
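With more than a couple of exporters, registering JSON files one at a time gets tedious. The bash sketch below registers every consul-*.json in a directory through the same /v1/agent/service/register endpoint, and adds the matching deregister call. The function names are hypothetical; the defaults are this article's path and Consul address.

```shell
#!/usr/bin/env bash
# Register (or update) every consul-*.json service definition in a directory.
# Usage: register_services [DIR] [CONSUL_URL]   (hypothetical helper)
register_services() {
  local dir=${1:-/home/prometheus/config} consul=${2:-http://192.168.31.131:8500} f rc=0
  for f in "$dir"/consul-*.json; do
    [ -e "$f" ] || continue   # no match: the glob stays literal, skip it
    if curl -fsS --request PUT --data @"$f" \
        "$consul/v1/agent/service/register?replace-existing-checks=1"; then
      echo "registered: $f"
    else
      echo "failed: $f" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Remove one service by its ID ("ID" in the JSON definition).
# Usage: deregister_service SERVICE_ID [CONSUL_URL]   (hypothetical helper)
deregister_service() {
  curl -fsS --request PUT "${2:-http://192.168.31.131:8500}/v1/agent/service/deregister/$1"
}
```

`register_services` returns nonzero if any file failed, so it can be chained with `&&` in a provisioning script.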

Update prometheus.yml

[root@localhost prometheus]# vim /home/prometheus/prometheus.yml

  - job_name: 'file_ds'     # file-based discovery; job name, must be unique
    file_sd_configs:
      - files: ['/etc/prometheus/config/*.yml']  # target files
        refresh_interval: 5s  # also re-read the files every 5 s

  # Consul service discovery

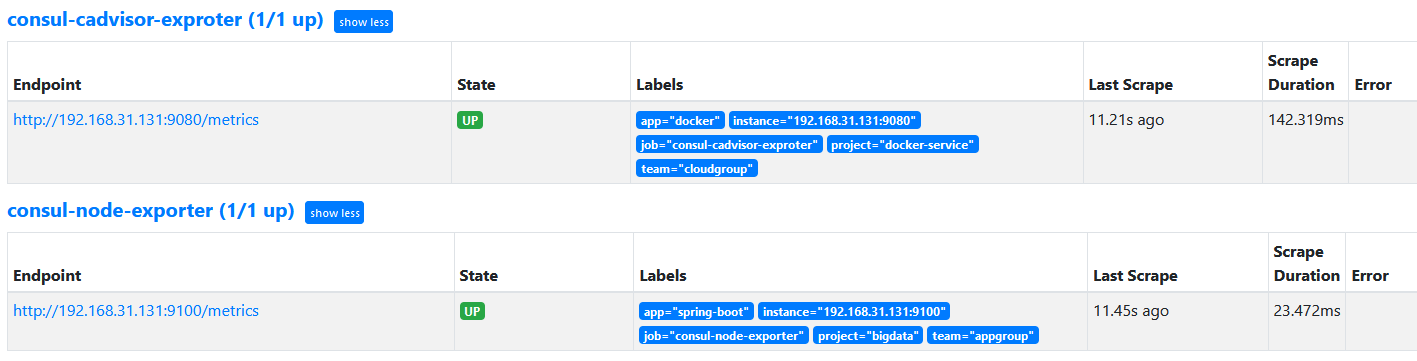

  - job_name: 'consul-node-exporter'
    consul_sd_configs:                 # Consul-based discovery
      - server: '192.168.31.131:8500'  # Consul address
        services: []                   # empty list: all services
    relabel_configs:
      - source_labels: [__meta_consul_tags]  # note: two leading underscores
        regex: .*node-exporter.*       # tags containing "node-exporter"
        action: keep                   # drop targets whose tags do not match regex
      - regex: __meta_consul_service_metadata_(.+)  # each Consul service Meta key...
        action: labelmap               # ...becomes a target label (app, team, project)

  - job_name: 'consul-cadvisor-exporter'
    consul_sd_configs:
      - server: '192.168.31.131:8500'
        services: []
    relabel_configs:
      - source_labels: [__meta_consul_tags]
        regex: .*cadvisor-exporter.*
        action: keep
      - regex: __meta_consul_service_metadata_(.+)
        action: labelmap

curl -X POST http://localhost:9090/-/reload  # hot-reload the Prometheus configuration
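Here is how the keep rule decides. Prometheus exposes a service's Consul tags as a single string: the tags are joined with "," and a "," is added at both ends (e.g. `,node-exporter,`). Relabel regexes must match that whole value. The bash sketch below only mimics this check. It shows why `.*node-exporter.*` also keeps a tag such as `node-exporter-old`, while `.*,node-exporter,.*` matches only the exact tag. Note that bash's `=~` is not the RE2 engine Prometheus uses, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Illustration only: mimic relabel "action: keep" on __meta_consul_tags.
# Build the label value the way Prometheus does: ",tag1,tag2,"
consul_tags_label() {
  local IFS=,
  echo ",$*,"
}

# Succeeds if a target with these tags would be kept.
# Usage: relabel_keep REGEX TAG...   (hypothetical helper)
relabel_keep() {
  # Prometheus anchors relabel regexes; bash =~ does not, so add ^( )$ here
  local re="^(${1})\$"
  shift
  [[ $(consul_tags_label "$@") =~ $re ]]
}
```

For example, `relabel_keep '.*node-exporter.*' node-exporter-old` succeeds, but `relabel_keep '.*,node-exporter,.*' node-exporter-old` fails. The second regex is therefore the stricter choice when other tags merely share a prefix.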

[root@localhost prometheus]# cat prometheus.yml

global:                     # global settings; individual jobs can override them
  scrape_interval: 15s      # scrape every target every 15 s (collection frequency)
  evaluation_interval: 15s  # evaluate rules every 15 s, e.g. a "filesystem usage > 75%" alert is checked every 15 s

alerting:                   # Alertmanager connection
  alertmanagers:
    - static_configs:       # static targets
        - targets: ['192.168.31.131:9093']  # Alertmanager address

rule_files:                 # alert rules
  - /etc/prometheus/rules/*.rules  # rule file path

scrape_configs:             # scrape configuration
  - job_name: 'grafana'     # static discovery; job name, must be unique
    scrape_interval: 5s     # overrides the global scrape interval
    static_configs:
      - targets: ['192.168.31.131:3000']  # metrics path defaults to /metrics
        labels:
          instance: grafana

  - job_name: pushgateway   # metrics relayed through the Pushgateway
    static_configs:
      - targets: ['192.168.31.158:9091']
        labels:
          instance: pushgateway

  - job_name: 'file_ds'     # file-based discovery
    file_sd_configs:
      - files: ['/etc/prometheus/config/*.yml']  # target files
        refresh_interval: 5s  # also re-read the files every 5 s

  - job_name: 'consul-prometheus'  # Consul service discovery
    consul_sd_configs:
      - server: '192.168.31.131:8500'
        services: []
    relabel_configs:
      - source_labels: [__meta_consul_tags]
        regex: .*test.*
        action: keep

  - job_name: 'consul-node-exporter'
    consul_sd_configs:
      - server: '192.168.31.131:8500'
        services: []
    relabel_configs:
      - source_labels: [__meta_consul_tags]
        regex: .*node-exporter.*
        action: keep
      - regex: __meta_consul_service_metadata_(.+)
        action: labelmap

  - job_name: 'consul-cadvisor-exporter'
    consul_sd_configs:
      - server: '192.168.31.131:8500'
        services: []
    relabel_configs:
      - source_labels: [__meta_consul_tags]
        regex: .*cadvisor-exporter.*
        action: keep
      - regex: __meta_consul_service_metadata_(.+)
        action: labelmap

  - job_name: 'redis-exporter'
    consul_sd_configs:
      - server: '192.168.31.131:8500'
        services: []
    relabel_configs:
      - source_labels: [__meta_consul_tags]
        regex: .*redis-exporter.*
        action: keep
      - regex: __meta_consul_service_metadata_(.+)
        action: labelmap


Zhejiang Public Security Network Filing No. 33010602011771
