Offline Kubernetes Deployment with Kubespray

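In the previous article, we showed how to deploy a Kubernetes cluster with kubespray from a mainland-China network. This article goes a step further and shows how to deploy Kubernetes with kubespray in an offline environment.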

1. Host preparation

| Hostname | IP | CPU / Memory | OS |
| --- | --- | --- | --- |
| console | 192.168.0.32 | 4 cores / 8 GB | CentOS 7.9 |
| master | 192.168.0.7 | 4 cores / 16 GB | CentOS 7.9 |
| node | 192.168.0.151 | 4 cores / 16 GB | CentOS 7.9 |
| files | 192.168.0.119 | 4 cores / 8 GB | CentOS 7.9 |

2. Prepare the offline environment

2.1 Download the project code

```shell
wget https://github.com/kubernetes-sigs/kubespray/archive/refs/tags/v2.20.0.tar.gz
# Extract the archive, rename the directory and enter it
tar -xzf v2.20.0.tar.gz
mv kubespray-2.20.0 kubespray
cd kubespray
```

2.2 Generate the offline resource lists

```shell
# Pull the kubespray image
docker pull quay.m.daocloud.io/kubespray/kubespray:v2.20.0
# Why pull this image: running kubespray's Ansible playbooks directly requires
# many environment dependencies, so a container is used instead.
# Start the kubespray container (from the kubespray directory)
docker run --rm -it -v "$(pwd)":/kubespray quay.m.daocloud.io/kubespray/kubespray:v2.20.0 bash
# Enter the offline directory and run the script that generates files.list and images.list
cd contrib/offline && bash generate_list.sh
# The lists are written to the temp directory
ls -l temp/
total 16
-rw-r--r-- 1 root root 1769 Jul 17 07:04 files.list
-rw-r--r-- 1 root root 2501 Jul 17 07:04 files.list.template
-rw-r--r-- 1 root root 2286 Jul 17 07:04 images.list
-rw-r--r-- 1 root root 3065 Jul 17 07:04 images.list.template
```

Many of the URLs in the generated files.list and images.list may be blocked or unreachable from mainland China, so both lists need to be rewritten to use mirrors.

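```shell
# Run from contrib/offline. Run each command only once:
# re-running the first one would add the prefix a second time.
# files.list: prefix every URL with files.m.daocloud.io
sed -i "s#https://#https://files.m.daocloud.io/#g" temp/files.list
# images.list: switch each registry to its DaoCloud mirror
sed -i "s@quay.io@quay.m.daocloud.io@g" temp/images.list
sed -i "s@docker.io@docker.m.daocloud.io@g" temp/images.list
sed -i "s@registry.k8s.io@k8s.m.daocloud.io@g" temp/images.list
sed -i "s@ghcr.io@ghcr.m.daocloud.io@g" temp/images.list
```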
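If you expect to regenerate the lists more than once, it may help to make the rewrites anchored and re-runnable. The sketch below is an alternative to the sed commands above, not what the author ran; `mirror_files` and `mirror_images` are hypothetical helper names. The files rewrite adds the prefix only when it is not already present, and the image rewrite replaces only the leading registry host.

```shell
# Hypothetical helpers: anchored, re-runnable versions of the sed rewrites.

# Prefix https:// URLs with the DaoCloud file proxy exactly once:
# an already-prefixed URL matches the optional group and is left unchanged.
mirror_files() {
  sed -E 's#^https://(files\.m\.daocloud\.io/)?#https://files.m.daocloud.io/#'
}

# Replace only the leading registry host of each image reference,
# so nothing later in the name or tag can be rewritten by accident.
mirror_images() {
  sed -E \
    -e 's#^quay\.io/#quay.m.daocloud.io/#' \
    -e 's#^docker\.io/#docker.m.daocloud.io/#' \
    -e 's#^registry\.k8s\.io/#k8s.m.daocloud.io/#' \
    -e 's#^ghcr\.io/#ghcr.m.daocloud.io/#'
}
```

Usage from contrib/offline, writing through a temporary file: `mirror_files < temp/files.list > temp/files.list.new && mv temp/files.list.new temp/files.list`, and the same pattern with `mirror_images` for images.list.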

The complete rewritten files.list:

```text
https://files.m.daocloud.io/storage.googleapis.com/kubernetes-release/release/v1.24.6/bin/linux/amd64/kubelet
https://files.m.daocloud.io/storage.googleapis.com/kubernetes-release/release/v1.24.6/bin/linux/amd64/kubectl
https://files.m.daocloud.io/storage.googleapis.com/kubernetes-release/release/v1.24.6/bin/linux/amd64/kubeadm
https://files.m.daocloud.io/github.com/etcd-io/etcd/releases/download/v3.5.4/etcd-v3.5.4-linux-amd64.tar.gz
https://files.m.daocloud.io/github.com/containernetworking/plugins/releases/download/v1.1.1/cni-plugins-linux-amd64-v1.1.1.tgz
https://files.m.daocloud.io/github.com/projectcalico/calico/releases/download/v3.23.3/calicoctl-linux-amd64
https://files.m.daocloud.io/github.com/projectcalico/calicoctl/releases/download/v3.23.3/calicoctl-linux-amd64
https://files.m.daocloud.io/github.com/projectcalico/calico/archive/v3.23.3.tar.gz
https://files.m.daocloud.io/github.com/kubernetes-sigs/cri-tools/releases/download/v1.24.0/crictl-v1.24.0-linux-amd64.tar.gz
https://files.m.daocloud.io/get.helm.sh/helm-v3.9.4-linux-amd64.tar.gz
https://files.m.daocloud.io/github.com/opencontainers/runc/releases/download/v1.1.4/runc.amd64
https://files.m.daocloud.io/github.com/containers/crun/releases/download/1.4.5/crun-1.4.5-linux-amd64
https://files.m.daocloud.io/github.com/containers/youki/releases/download/v0.0.1/youki_v0_0_1_linux.tar.gz
https://files.m.daocloud.io/github.com/kata-containers/kata-containers/releases/download/2.4.1/kata-static-2.4.1-x86_64.tar.xz
https://files.m.daocloud.io/storage.googleapis.com/gvisor/releases/release/20210921/x86_64/runsc
https://files.m.daocloud.io/storage.googleapis.com/gvisor/releases/release/20210921/x86_64/containerd-shim-runsc-v1
https://files.m.daocloud.io/github.com/containerd/nerdctl/releases/download/v0.22.2/nerdctl-0.22.2-linux-amd64.tar.gz
https://files.m.daocloud.io/github.com/kubernetes-sigs/krew/releases/download/v0.4.3/krew-linux_amd64.tar.gz
https://files.m.daocloud.io/github.com/containerd/containerd/releases/download/v1.6.8/containerd-1.6.8-linux-amd64.tar.gz
https://files.m.daocloud.io/github.com/Mirantis/cri-dockerd/releases/download/v0.2.2/cri-dockerd-0.2.2.amd64.tgz
```

The complete rewritten images.list:

```text
docker.m.daocloud.io/mirantis/k8s-netchecker-server:v1.2.2
docker.m.daocloud.io/mirantis/k8s-netchecker-agent:v1.2.2
quay.m.daocloud.io/coreos/etcd:v3.5.4
quay.m.daocloud.io/cilium/cilium:v1.12.1
quay.m.daocloud.io/cilium/cilium-init:2019-04-05
quay.m.daocloud.io/cilium/operator:v1.12.1
ghcr.m.daocloud.io/k8snetworkplumbingwg/multus-cni:v3.8-amd64
docker.m.daocloud.io/flannelcni/flannel:v0.19.2-amd64
docker.m.daocloud.io/flannelcni/flannel-cni-plugin:v1.1.0-amd64
quay.m.daocloud.io/calico/node:v3.23.3
quay.m.daocloud.io/calico/cni:v3.23.3
quay.m.daocloud.io/calico/pod2daemon-flexvol:v3.23.3
quay.m.daocloud.io/calico/kube-controllers:v3.23.3
quay.m.daocloud.io/calico/typha:v3.23.3
quay.m.daocloud.io/calico/apiserver:v3.23.3
docker.m.daocloud.io/weaveworks/weave-kube:2.8.1
docker.m.daocloud.io/weaveworks/weave-npc:2.8.1
docker.m.daocloud.io/kubeovn/kube-ovn:v1.9.7
docker.m.daocloud.io/cloudnativelabs/kube-router:v1.5.1
k8s.m.daocloud.io/pause:3.6
ghcr.m.daocloud.io/kube-vip/kube-vip:v0.4.2
docker.m.daocloud.io/library/nginx:1.23.0-alpine
docker.m.daocloud.io/library/haproxy:2.6.1-alpine
k8s.m.daocloud.io/coredns/coredns:v1.8.6
k8s.m.daocloud.io/dns/k8s-dns-node-cache:1.21.1
k8s.m.daocloud.io/cpa/cluster-proportional-autoscaler-amd64:1.8.5
docker.m.daocloud.io/library/registry:2.8.1
k8s.m.daocloud.io/metrics-server/metrics-server:v0.6.1
k8s.m.daocloud.io/sig-storage/local-volume-provisioner:v2.4.0
quay.m.daocloud.io/external_storage/cephfs-provisioner:v2.1.0-k8s1.11
quay.m.daocloud.io/external_storage/rbd-provisioner:v2.1.1-k8s1.11
docker.m.daocloud.io/rancher/local-path-provisioner:v0.0.22
k8s.m.daocloud.io/ingress-nginx/controller:v1.3.1
docker.m.daocloud.io/amazon/aws-alb-ingress-controller:v1.1.9
quay.m.daocloud.io/jetstack/cert-manager-controller:v1.9.1
quay.m.daocloud.io/jetstack/cert-manager-cainjector:v1.9.1
quay.m.daocloud.io/jetstack/cert-manager-webhook:v1.9.1
k8s.m.daocloud.io/sig-storage/csi-attacher:v3.3.0
k8s.m.daocloud.io/sig-storage/csi-provisioner:v3.0.0
k8s.m.daocloud.io/sig-storage/csi-snapshotter:v5.0.0
k8s.m.daocloud.io/sig-storage/snapshot-controller:v4.2.1
k8s.m.daocloud.io/sig-storage/csi-resizer:v1.3.0
k8s.m.daocloud.io/sig-storage/csi-node-driver-registrar:v2.4.0
docker.m.daocloud.io/k8scloudprovider/cinder-csi-plugin:v1.22.0
docker.m.daocloud.io/amazon/aws-ebs-csi-driver:v0.5.0
docker.m.daocloud.io/kubernetesui/dashboard-amd64:v2.6.1
docker.m.daocloud.io/kubernetesui/metrics-scraper:v1.0.8
quay.m.daocloud.io/metallb/speaker:v0.12.1
quay.m.daocloud.io/metallb/controller:v0.12.1
k8s.m.daocloud.io/kube-apiserver:v1.24.6
k8s.m.daocloud.io/kube-controller-manager:v1.24.6
k8s.m.daocloud.io/kube-scheduler:v1.24.6
k8s.m.daocloud.io/kube-proxy:v1.24.6
```
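Before downloading anything, a quick sanity check can confirm that no entry still points at an upstream host. This is a minimal sketch; `unmirrored` is a hypothetical helper, and the set of mirror hosts it accepts is an assumption taken from the sed rewrites above.

```shell
# Hypothetical check: print entries that do not use one of the DaoCloud
# mirror hosts from the rewrites above. Blank lines are ignored.
unmirrored() {
  # -v prints lines matching neither pattern; -h drops file-name prefixes.
  # grep exits 1 when it prints nothing, which here means "all rewritten".
  grep -Ehv \
    -e '^$' \
    -e '^(https://files\.m\.daocloud\.io/|(quay|docker|k8s|ghcr)\.m\.daocloud\.io/)' \
    "$@" || true
}
```

Running `unmirrored temp/files.list temp/images.list` from contrib/offline should print nothing; any line it does print still needs a mirror rule.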

2.3 Offline binaries

Download the binaries needed for the deployment using the rewritten files.list:

```shell
# Download all static files into temp/files
wget -x -P temp/files -i temp/files.list
# Copy the files directory to /opt on 192.168.0.119
scp -r temp/files root@192.168.0.119:/opt
# On 192.168.0.119, enter /opt/files
cd /opt/files
# Rename the directory to keep the offline configuration later on short
mv files.m.daocloud.io k8s
```

Why the downloaded layout looks odd: `wget -x` recreates the full directory structure of each remote URL locally. For example, https://files.m.daocloud.io/storage.googleapis.com/kubernetes-release/release/v1.24.6/bin/linux/amd64/kubelet is saved as ./files.m.daocloud.io/storage.googleapis.com/kubernetes-release/release/v1.24.6/bin/linux/amd64/kubelet. Only the final kubelet is the binary; every component before it is a directory derived from the URL.
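The URL-to-path rule described above can be written down as a tiny function, which is handy for checking where a given file should end up on the file server. A minimal sketch; `local_path_for` is a hypothetical helper, and it assumes the exact wget, scp and mv steps shown above.

```shell
# Hypothetical helper: where a files.list URL lands on 192.168.0.119 after
# `wget -x -P temp/files`, `scp -r temp/files ...:/opt` and the rename.
local_path_for() {
  local path="${1#*://}"                     # drop the scheme ("https://")
  path="k8s/${path#files.m.daocloud.io/}"    # files.m.daocloud.io/ was renamed to k8s/
  printf '/opt/files/%s\n' "$path"
}
```

For example, `while read -r u; do local_path_for "$u"; done < temp/files.list` lists every path that should exist under /opt/files once the copy is done.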

Serve the downloaded files with the Python that ships with the server:

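```shell
# On 192.168.0.119
cd /opt/files && python -m SimpleHTTPServer 3200
```

CentOS 7's default `python` is 2.7, which provides `SimpleHTTPServer`; on a host with only Python 3, the equivalent is `python3 -m http.server 3200`.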

The server only needs to run temporarily in the foreground until the deployment is finished.

Other machines can now download kubelet with `wget http://192.168.0.119:3200/k8s/storage.googleapis.com/kubernetes-release/release/v1.24.6/bin/linux/amd64/kubelet`.
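Rather than testing a single file, every entry in files.list can be probed against the temporary server before the deployment starts. A minimal sketch; `FILES_REPO`, `internal_url_for` and `check_files_repo` are hypothetical names, and it assumes kubespray will request each file at the files_repo address followed by the original upstream path.

```shell
# Hypothetical check: is every files.list entry served by the HTTP server?
FILES_REPO="${FILES_REPO:-http://192.168.0.119:3200/k8s}"

# Turn a rewritten files.list URL into its address on the internal server.
internal_url_for() {
  printf '%s/%s\n' "$FILES_REPO" "${1#https://files.m.daocloud.io/}"
}

# HEAD-request each entry; print missing ones and fail if any are missing.
check_files_repo() {
  local list="$1" line url missing=0
  while IFS= read -r line || [ -n "$line" ]; do
    [ -z "$line" ] && continue
    url="$(internal_url_for "$line")"
    if ! curl -fsI -o /dev/null "$url"; then
      echo "MISSING: $url"
      missing=$((missing + 1))
    fi
  done < "$list"
  [ "$missing" -eq 0 ]
}
```

Run `check_files_repo temp/files.list` from any machine that can reach 192.168.0.119; Python's simple HTTP server answers HEAD requests, so no file is actually downloaded.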

2.4 Move the offline images into a private registry

Using the rewritten images.list, copy the required images into the private Harbor registry with skopeo.

On CentOS 7 with the EPEL repository available, skopeo can be installed directly with `yum -y install skopeo`.

Since I already have a Harbor registry, I use it here. If you don't have one, a plain Docker Registry is quicker and easier to set up.

The Harbor address is https://myharbor.com:45678, with username admin and password 123456.

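Log in to Harbor, enter the temp directory and copy the images. The target project (`k8s` here) must already exist in Harbor, because Harbor does not create projects on push.

```shell
# Log in to Harbor first
docker login myharbor.com:45678 -u admin -p '123456'
# Enter the temp directory that holds images.list
cd kubespray/contrib/offline/temp/
# Copy every image to Harbor with skopeo
for image in $(cat images.list); do skopeo copy docker://${image} docker://myharbor.com:45678/k8s/${image#*/}; done
```

A brief note on `${image#*/}` in the loop body: this shell parameter expansion removes the first `/` in the image name and everything before it. If image=quay.m.daocloud.io/calico/node:v3.23.3, then ${image#*/} is calico/node:v3.23.3.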
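The `for` loop above stops reporting nothing useful when one image fails midway. A variant that keeps going and lists failures is sketched below, using the same `${image#*/}` naming rule; `HARBOR_PREFIX`, `target_ref` and `sync_images` are hypothetical names, and `DRY_RUN=1` only prints the skopeo commands.

```shell
# Hypothetical variant of the loop above: same destination naming,
# but it continues on errors and reports the images that failed.
HARBOR_PREFIX="${HARBOR_PREFIX:-myharbor.com:45678/k8s}"

# Strip the source registry host (up to the first "/") and re-root under Harbor.
target_ref() {
  printf '%s/%s\n' "$HARBOR_PREFIX" "${1#*/}"
}

# Copy every image in the list; with DRY_RUN=1, only print the commands.
sync_images() {
  local list="$1" image failed=0
  while IFS= read -r image || [ -n "$image" ]; do
    [ -z "$image" ] && continue
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "skopeo copy docker://$image docker://$(target_ref "$image")"
    elif ! skopeo copy "docker://$image" "docker://$(target_ref "$image")" < /dev/null; then
      echo "FAILED: $image" >&2
      failed=1
    fi
  done < "$list"
  return "$failed"
}
```

Preview with `DRY_RUN=1 sync_images images.list`, then run `sync_images images.list`. If Harbor uses a self-signed certificate, skopeo's `--dest-tls-verify=false` option can be added to the copy command.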

3. Modify the offline configuration

3.1 Edit kubespray/inventory/mycluster/group_vars/all/offline.yml so that during deployment kubespray downloads binaries from the address exposed above and pulls images from the private Harbor registry:

```yaml
---
## Global Offline settings
### Private Container Image Registry
# registry_host: "myprivateregisry.com"
#files_repo: "https://files.m.daocloud.io"
files_repo: "http://192.168.0.119:3200/k8s"
### If using CentOS, RedHat, AlmaLinux or Fedora
# yum_repo: "http://myinternalyumrepo"
### If using Debian
# debian_repo: "http://myinternaldebianrepo"
### If using Ubuntu
# ubuntu_repo: "http://myinternalubunturepo"

## Container Registry overrides
registry_host: "myharbor.com:45678/k8s"
kube_image_repo: "{{ registry_host }}"
gcr_image_repo: "{{ registry_host }}"
github_image_repo: "{{ registry_host }}"
docker_image_repo: "{{ registry_host }}"
quay_image_repo: "{{ registry_host }}"
...
```
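As far as I know, in kubespray's sample offline.yml `files_repo` takes effect only through the commented-out `*_download_url` overrides further down the file (the part elided as `...` above). If your copy has that layout, a sketch like this uncomments them in one step. `uncomment_download_urls` is a hypothetical name, the pattern assumes lines of the form `# kubelet_download_url: "{{ files_repo }}/..."`, and `sed -i` here is GNU sed.

```shell
# Hypothetical helper: uncomment every "<name>_download_url" override that
# is built on {{ files_repo }}, leaving all other comments untouched.
uncomment_download_urls() {
  sed -i -E 's@^# *([a-z0-9_]+_download_url: "\{\{ files_repo \}\})@\1@' "$1"
}
```

Usage: `uncomment_download_urls inventory/mycluster/group_vars/all/offline.yml`, then review the result with `grep files_repo` before deploying.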

3.2 Edit kubespray/inventory/mycluster/group_vars/all/containerd.yml to configure the Harbor registry address for containerd:

```yaml
...
containerd_registries:
  "myharbor.com:45678":
    endpoint:
      - "https://myharbor.com:45678"
    auth:
      username: "admin"
      password: "123456"
    # If your Harbor certificate is self-signed, add the following to skip certificate verification
    #skip_verify: true
...
```

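3.3 Configure the deployment so that downloads are not relayed through the control node; each node fetches the resources it needs by itself.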

In kubespray/inventory/mycluster/group_vars/k8s_cluster/k8s-cluster.yml:

```yaml
...
download_run_once: false
download_localhost: false
download_force_cache: false
...
```

3.4 For the remaining settings, see my previous kubespray article; the settings above are only the ones specific to this offline deployment.

4. One-command deployment

Run the one-command deployment:

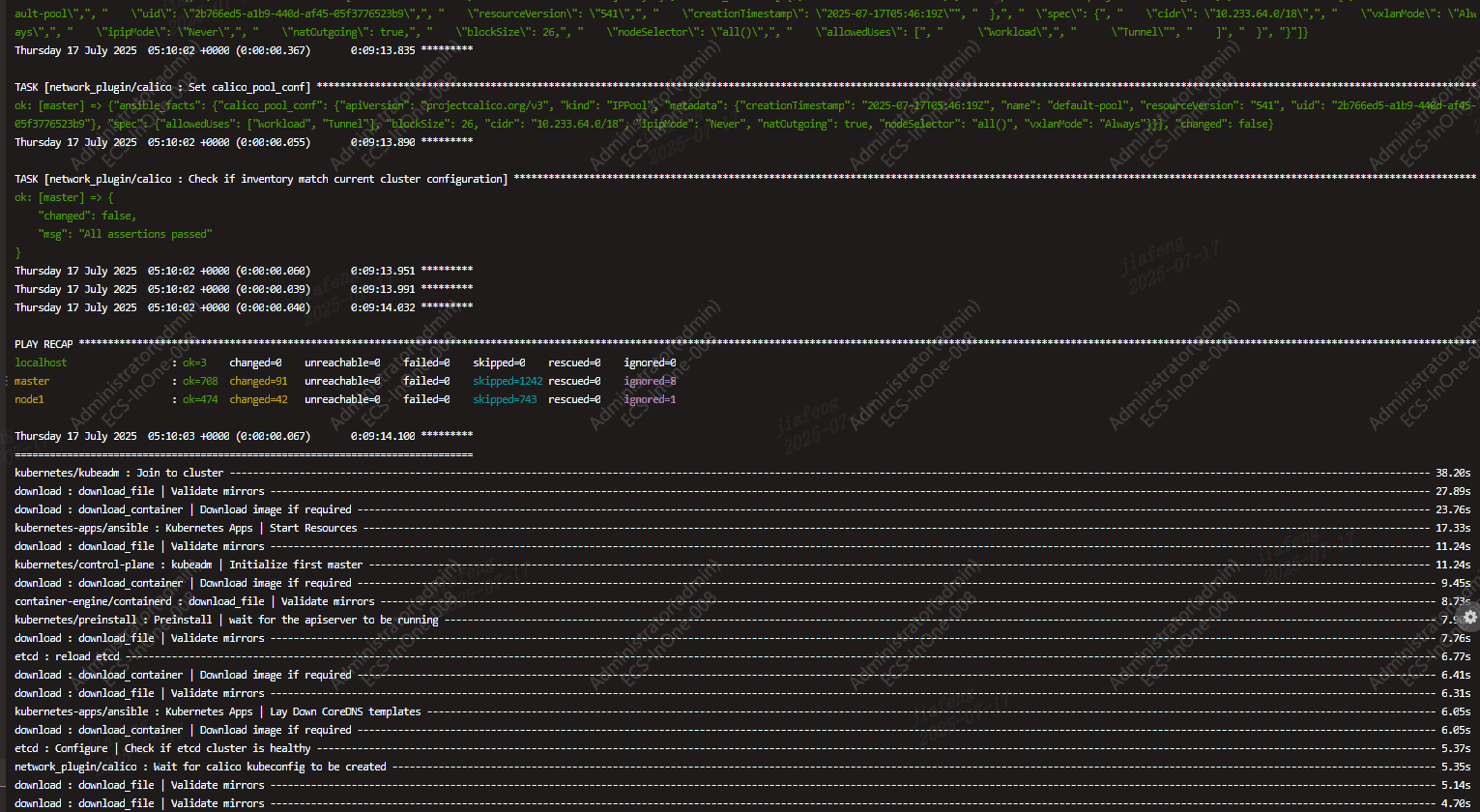

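```shell
# Start the kubespray container.
# The registry domain is not publicly resolvable, so --add-host injects it into the container's hosts file.
docker run --rm -it \
  --add-host=myharbor.com:192.168.0.30 \
  -v "$(pwd)":/kubespray \
  -v /root/.ssh:/root/.ssh \
  quay.m.daocloud.io/kubespray/kubespray:v2.20.0 \
  bash
# Note: every k8s node must also resolve the Harbor domain (configure it manually), otherwise image pulls fail.
# Then run the deployment playbook
ansible-playbook -i inventory/mycluster/inventory.ini --user=root -b -v cluster.yml
```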
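The manual hosts-file step on each node can be made repeatable. A minimal sketch, assuming Harbor lives at 192.168.0.30 as in the `--add-host` flag above; `ensure_host_entry` is a hypothetical helper that appends the mapping only when the name is not already listed, so running it twice is harmless.

```shell
# Hypothetical helper: add "<ip> <name>" to a hosts file unless some
# non-comment line already lists <name> as one of its host names.
ensure_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # awk exits 0 if the name is found in fields 2..NF of a non-comment line.
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

On each node, `ensure_host_entry 192.168.0.30 myharbor.com` does the job. Since Ansible is already available inside the kubespray container, the stock `lineinfile` module is another option: `ansible -i inventory/mycluster/inventory.ini all -b -m lineinfile -a "path=/etc/hosts line='192.168.0.30 myharbor.com'"`.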

5. Further optimization

The official kubespray image is quite large; if circumstances allow, consider slimming it down.

References:

https://www.magiccloudnet.com/kubespray

https://imroc.cc/kubernetes/deploy/kubespray/offline

浙公网安备 33010602011771号
