05 RDD练习:词频统计

一、词频统计:

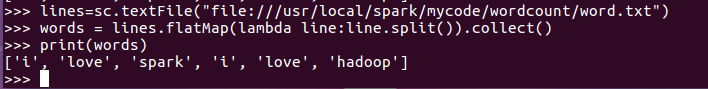

1.读文本文件生成RDD lines

2.将一行一行的文本分割成单词 words flatmap()

lines=sc.textFile("file:///usr/local/spark/mycode/wordcount/word.txt") words = lines.flatMap(lambda line:line.split()).collect() print(words)

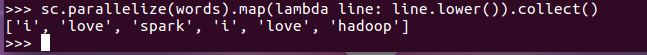

3.全部转换为小写 lower()

sc.parallelize(words).map(lambda line: line.lower()).collect()

4.去掉长度小于3的单词 filter()

words1=sc.parallelize(words) words1.collect() words1.filter(lambda word:len(word)>3).collect()

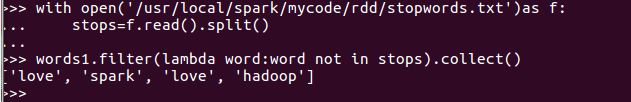

5.去掉停用词

with open('/usr/local/spark/mycode/rdd/stopwords.txt')as f: stops=f.read().split() words1.filter(lambda word:word not in stops).collect()

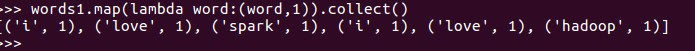

6.转换成键值对 map()

words1.map(lambda word:(word,1)).collect()

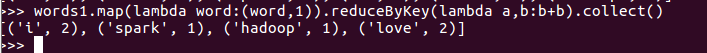

7.统计词频 reduceByKey()

words1.map(lambda word:(word,1)).reduceByKey(lambda a,b:b+b).collect()

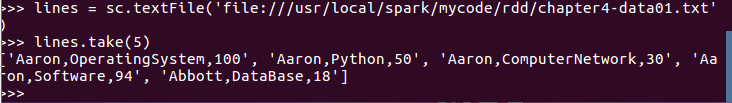

二、学生课程分数 groupByKey()

-- 按课程汇总全总学生和分数

1. 分解出字段 map()

lines = sc.textFile('file:///usr/local/spark/mycode/rdd/chapter4-data01.txt') lines.take(5)

2. 生成键值对 map()

lines.map(lambda line:line.split(',')).take(5)

3. 按键分组

lines.map(lambda line:line.split(',')).map(lambda line:(line[1],(line[0],line[2]))).take(5)

4. 输出汇总结果

groupByCoure=lines.map(lambda line:line.split(',')).map(lambda line:(line[1],(line[0],line[2]))).groupByKey() for i in groupByCoure.first()[1]: print(i)

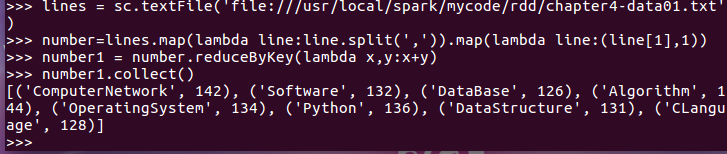

三、学生课程分数 reduceByKey()

-- 每门课程的选修人数

lines = sc.textFile('file:///usr/local/spark/mycode/rdd/chapter4-data01.txt') number=lines.map(lambda line:line.split(',')).map(lambda line:(line[1],1)) number1 = number.reduceByKey(lambda x,y:x+y) number1.collect()

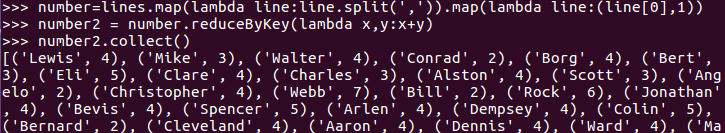

-- 每个学生的选修课程数

number=lines.map(lambda line:line.split(',')).map(lambda line:(line[0],1)) number2 = number.reduceByKey(lambda x,y:x+y) number2.collect()