Video course notes: installing Kubernetes from binaries (unfinished)

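- Kubernetes overview

- Kubernetes advantages

- Kubernetes quick start

- Logical architecture

- Common Kubernetes installation methods

- Preparation for installation

- Deploying the master node services

- Deploying the node services

- Verifying the Kubernetes cluster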

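Kubernetes overview

●Official site: https://kubernetes.io

●GitHub: https://github.com/kubernetes/kubernetes

●Origin: grew out of Google's experience with its internal Borg system; written in Go and donated to the CNCF as an open-source project

●Name: from the Greek word for helmsman/pilot; "K8S" stands for the 8 letters between K and S

●Role: an open-source container orchestration framework with a very rich ecosystem

●Why learn it: it solves a number of pain points of running bare Docker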

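Kubernetes advantages

●Automatic bin packing, horizontal scaling, self-healing

●Service discovery and load balancing

●Automated rollouts (rolling update by default) and rollbacks

Release strategies: blue-green release, gray release, rolling release, canary release

●Centralized configuration and secret management

●Storage orchestration

●Batch job execution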

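Kubernetes quick start

●Four pairs of basic concepts

●Pod / Pod controller

●Pod

●A Pod is the smallest logical unit (the atomic unit) that Kubernetes can run

●One Pod can run several containers, which share the UTS, NET, and IPC namespaces

●Think of a Pod as a pea pod, and each container inside it as one of the peas

●Running several containers in one Pod is also called the sidecar pattern

●Pod controller

●A Pod controller is a template for starting Pods; it makes sure that Pods started in Kubernetes always run as expected (replica count, lifecycle, health checks, ...)

●Kubernetes ships many Pod controllers; the commonly used ones are:

●Deployment

●DaemonSet

●ReplicaSet

●StatefulSet

●Job

●CronJob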

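●Name / Namespace

●Name

●Kubernetes uses "resources" to define every logical concept (feature), so every resource needs its own name

●A resource carries an API version (apiVersion), a kind (kind), metadata (metadata), a specification (spec), a status (status), and other configuration; the name is normally defined in the resource's metadata

●Namespace

●As projects, people, and cluster size grow, a way to isolate the resources inside Kubernetes is needed; that is what namespaces are for

●A namespace can be thought of as a virtual cluster group inside Kubernetes

●Resources in different namespaces may share a name; resources of the same kind in the same namespace may not

●Sensible use of namespaces lets cluster administrators categorize, manage, and browse the services delivered into Kubernetes

●The namespaces that exist by default are: default, kube-system, kube-public

●To query a specific resource, pass the namespace it lives in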

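●Label / Label selector

●Label

●Labels are a management mechanism characteristic of Kubernetes; they make it easy to categorize resource objects.

●One label can apply to many resources and one resource can carry many labels; the relationship is many-to-many.

●Giving a resource several labels allows it to be managed along several dimensions.

●Label format: key=value

●Similar to labels, there are also annotations (annotations)

●Label selector

●Once resources are labeled, a label selector can filter on specific labels

●There are currently two kinds of label selector: equality-based (equal, not equal) and set-based (in, not in, exists)

●Many resources support embedded label selector fields

●matchLabels

●matchExpressions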

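●Service / Ingress

●Service

●In Kubernetes every Pod is assigned its own IP address, but that address disappears when the Pod is destroyed

●Service is the core concept that solves this problem

●A Service can be seen as the external access point for a group of Pods that provide the same service

●Which Pods a Service applies to is defined by a label selector

●Ingress

●Ingress is the interface a Kubernetes cluster exposes to the outside at layer 7 (the application layer) of the OSI model

●A Service can only schedule layer-4 traffic, expressed as ip + port

●An Ingress can route traffic for different business domains and different URL paths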

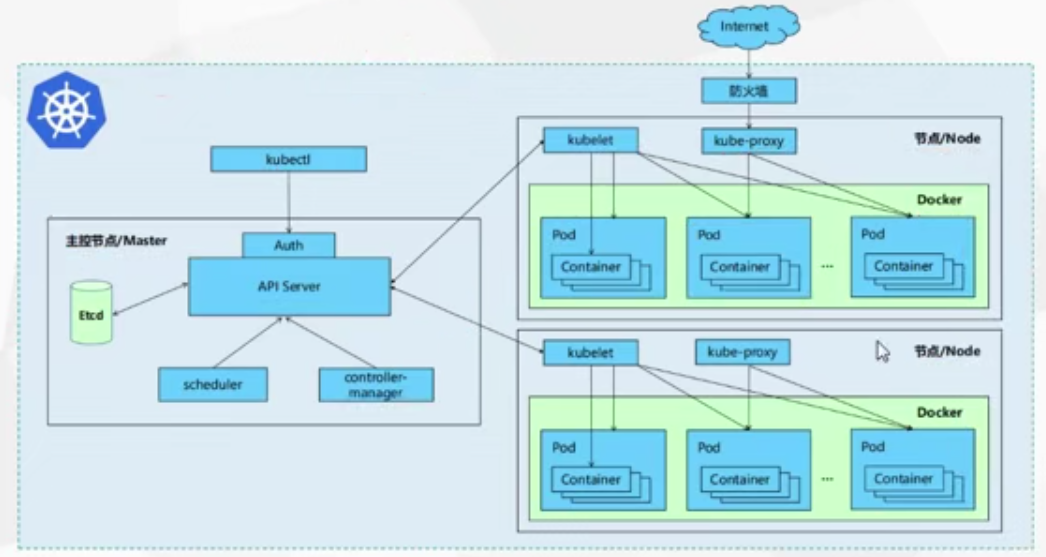

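Components

●Core components

Configuration store → etcd service (clustered; if the leader fails, a new one is elected)

●Master (control) nodes

kube-apiserver service: the central brain

●apiserver

●Provides the REST API for cluster management (including authentication and authorization, data validation, and cluster state changes)

●Handles data exchange between the other modules and acts as the communication hub

●Is the entry point for resource quota control

●Provides a complete cluster security mechanism

kube-controller-manager service

●controller-manager

●Consists of a set of controllers that watch the whole cluster through the apiserver and keep it in the desired state

●Node Controller: nodes

●Deployment Controller: Pod controllers

●Service Controller: services

●Volume Controller: storage volumes

●Endpoint Controller: endpoints

●Garbage Controller: garbage collection

●Namespace Controller: namespaces

●Job Controller: jobs

●ResourceQuota Controller: resource quotas

kube-scheduler service

●scheduler

●Its main job is to take Pods that are waiting to be scheduled and place them on suitable compute nodes

●Predicate (filtering) policies (predicates)

●Priority (scoring) policies (priorities)

●Compute (node) nodes

kubelet service

●kubelet

●Put simply, the kubelet periodically fetches the desired state of the Pods on its node (which containers to run, how many replicas, how networking and storage are configured, and so on) and calls the container platform's interfaces to reach that state

●Periodically reports the node's current state to the apiserver for use during scheduling

●Cleans up images and containers, so that images do not fill the disk and exited containers do not hold too many resources

kube-proxy service

●kube-proxy

●The network proxy that Kubernetes runs on every node; it is what carries the Service resources

●Establishes the relationship between the Pod network and the cluster network (clusterip → podip)

●Three common traffic scheduling modes

●Userspace (deprecated)

●iptables (described in the course as close to obsolete; it is still the default mode)

●IPVS (recommended)

●Creates, deletes, and updates scheduling rules, reports its own updates to the apiserver, and picks up rule changes made by other kube-proxy instances from the apiserver

●CLI client

●kubectl

Core add-ons

●CNI network plugin → flannel/calico

●Service discovery plugin → coredns

●Service exposure plugin → traefik

●GUI management plugin → Dashboard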

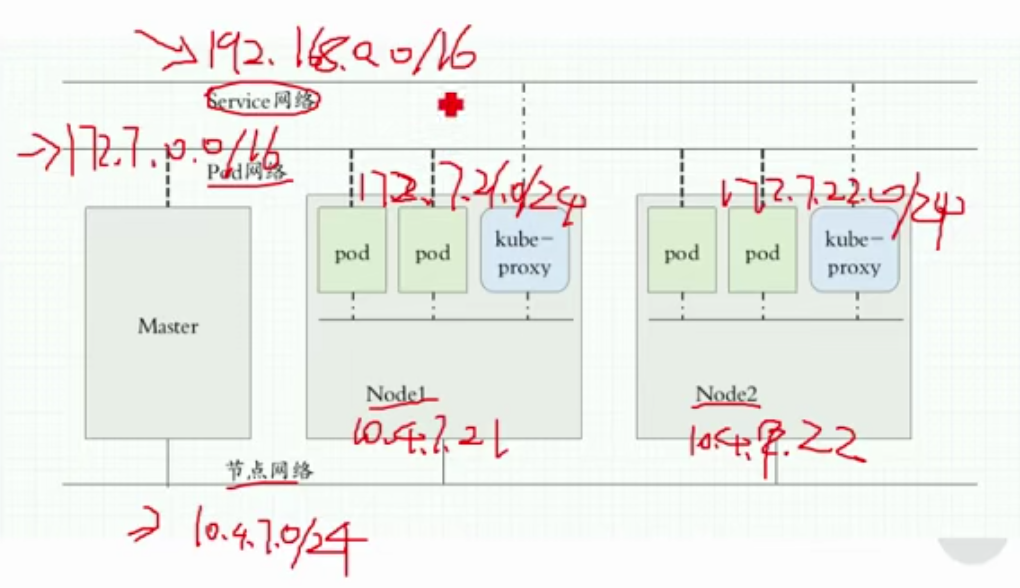

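The three Kubernetes networks

service ip

pod ip

node ip

Addressing convention for 10.4.7.0: 10 = private address space of the IDC; 4 = the data center site (for example Yizhuang Tongji, 21Vianet, or the Jiuxianqiao "big white building"); 7 = the business line or environment (isolated from the others with VLANs)

Logical architecture

Common Kubernetes installation methods:

●Minikube: a single-node miniature Kubernetes (for learning and previewing only)

●Binary installation (first choice for production; recommended for newcomers, because every component is set up by hand)

●kubeadm: the Kubernetes deployment tool, which runs the components inside Kubernetes itself (comparatively simple; recommended for experienced users)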

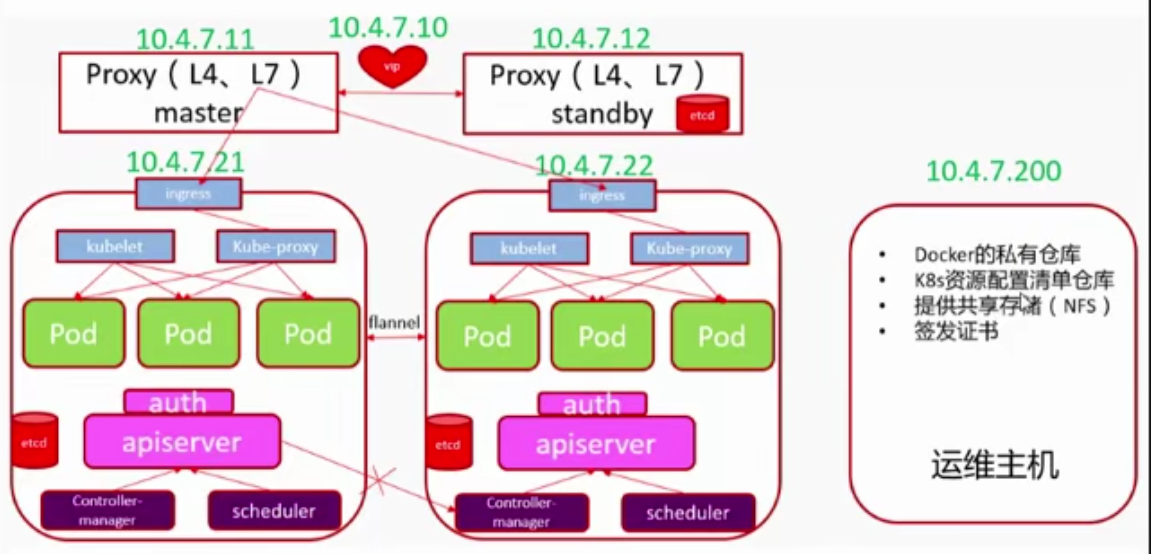

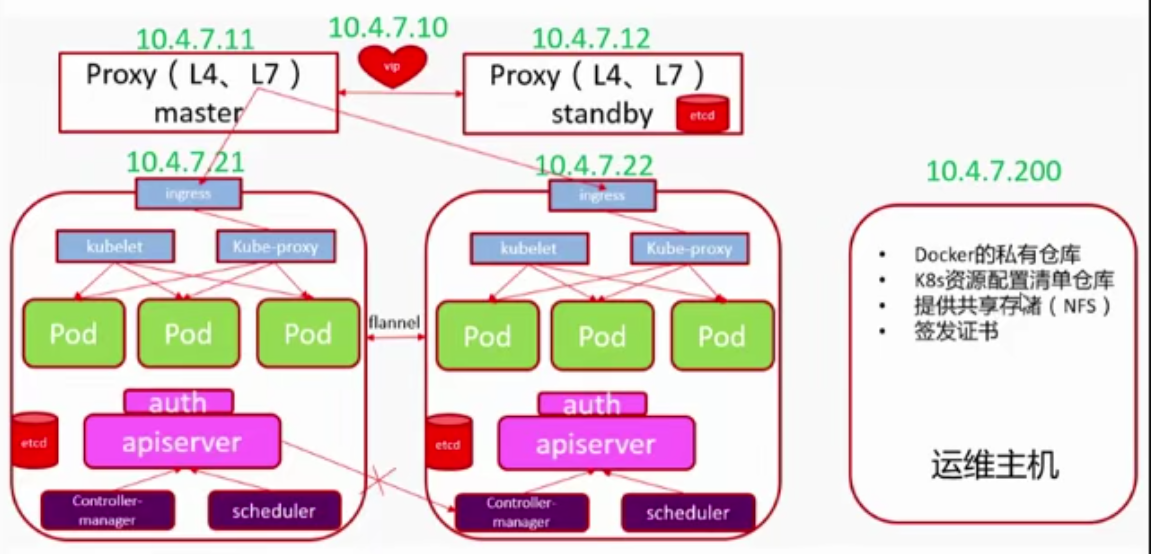

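Preparation for installation:

●Prepare five 2c/2g/50g virtual machines on the 10.4.7.0/24 network

●Preinstall CentOS 7.6 and apply basic tuning

●Install bind9 to run a self-hosted DNS service

●Prepare the environment for self-signed certificates

●Install Docker and deploy a private Harbor registry

Virtual Network Editor

VMnet8: subnet IP 10.4.7.0, subnet mask 255.255.255.0

NAT settings → gateway 10.4.7.254

On Windows, under Network Connections, set VMnet8 IPv4 to 10.4.7.1 with interface metric 10, so that the DNS server configured later is preferred through this adapter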

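Create the hosts

10.4.7.11 hostname: hdss7-11.host.com CPU: 2 cores memory: 2048 MB

10.4.7.12 hostname: hdss7-12.host.com

10.4.7.21 hostname: hdss7-21.host.com

10.4.7.22 hostname: hdss7-22.host.com

10.4.7.200 hostname: hdss7-200.host.com # the ops host; a black-on-white terminal theme helps it stand out

Hostnames

hdss7-11.host.com: "hdss" stands for the Huide Shangsha building. Naming rule: location plus the last two octets of the host's IP address

Keep hostnames unrelated to the workload: use only the location and IP, so the host can run a different service later. Avoid names like mysql01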
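The naming rule above can be sketched as a small shell helper. This is only an illustration: the function name `host_from_ip` is made up here, and it assumes the `hdss` prefix and the `host.com` zone used throughout these notes.

```shell
# Hypothetical helper: derive the hostname used in these notes from an IPv4 address.
# Assumes the "hdss" location prefix and the host.com zone.
host_from_ip() {
    # Split on dots and keep the third and fourth octets.
    echo "$1" | awk -F. '{ printf "hdss%s-%s.host.com\n", $3, $4 }'
}

for ip in 10.4.7.11 10.4.7.12 10.4.7.21 10.4.7.22 10.4.7.200; do
    printf '%-12s %s\n' "$ip" "$(host_from_ip "$ip")"
done
```

Because the name encodes only location and address, the same mapping keeps working when a host is reassigned to another workload.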

Disable SELinux and firewalld

[root@hdss7-11 ~]# getenforce

Disabled

## setenforce 0

[root@hdss7-11 ~]# uname -a

Linux hdss7-11.host.com 3.10.0-957.el7.x86_64 #1 SMP Thu Nov 8 23:39:32 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

## systemctl stop firewalld

Install epel-release

yum install epel-release

Install the required tools

# yum install wget net-tools telnet tree nmap sysstat lrzsz dos2unix bind-utils -y

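DNS service initialization

Rather than adding hosts entries to every container, use a self-hosted bind DNS server so that all containers follow the same DNS records

Install bind9 on HDSS7-11.host.com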

yum install bind -y

[root@hdss7-11 ~]# rpm -qa bind

bind-9.11.4-16.P2.el7_8.6.x86_64

Configure bind9

Note: the bind configuration format is strict; every required space and semicolon must be present

Main configuration file (only the changed lines are shown)

[root@hdss7-11 ~]# vi /etc/named.conf

options {

listen-on port 53 { 10.4.7.11; };

recursing-file "/var/named/data/named.recursing";

secroots-file "/var/named/data/named.secroots";

allow-query { any; };

forwarders { 10.4.7.254; };

recursion yes; ## recursive queries

dnssec-enable no; # can be turned off in a lab DNS setup to save resources

dnssec-validation no; # also turned off for now

Save and exit, then check the syntax

[root@hdss7-11 ~]# named-checkconf

No errors reported: OK

Zone configuration file

/etc/named.rfc1912.zones

## Host domain

zone "host.com" IN { ## the host domain is a made-up name used only between hosts; any name works, typically host.com or opi.com

type master;

file "host.com.zone";

allow-update { 10.4.7.11; };

};

## Business domain

zone "od.com" IN {

type master;

file "od.com.zone";

allow-update { 10.4.7.11; };

};

Configure the zone data files

- Host domain data file

/var/named/host.com.zone

$ORIGIN host.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.host.com. dnsadmin.host.com. (

2020061301 ; serial (10 digits: date YYYYMMDD plus a 2-digit revision; 01 = first revision)

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

NS dns.host.com.

$TTL 60 ; 1 minute

dns A 10.4.7.11

HDSS7-11 A 10.4.7.11

HDSS7-12 A 10.4.7.12

HDSS7-21 A 10.4.7.21

HDSS7-22 A 10.4.7.22

HDSS7-200 A 10.4.7.200

- Business domain data file

/var/named/od.com.zone

$ORIGIN od.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.od.com. dnsadmin.od.com. (

2020061301 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

NS dns.od.com.

$TTL 60 ; 1 minute

dns A 10.4.7.11

# Check the configuration with named-checkconf, then start named

systemctl start named

netstat -lntup |grep 53

[root@hdss7-11 ~]# netstat -lntup |grep 53

tcp 0 0 10.4.7.11:53 0.0.0.0:* LISTEN 7646/named

tcp 0 0 127.0.0.1:953 0.0.0.0:* LISTEN 7646/named

tcp6 0 0 ::1:953 :::* LISTEN 7646/named

udp 0 0 10.4.7.11:53 0.0.0.0:* 7646/named

[root@hdss7-11 ~]# dig -t A hdss7-21.host.com @10.4.7.11 +short

10.4.7.21

Point the hosts at the new DNS server

[root@hdss7-11 ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth0

DNS1=10.4.7.11

[root@hdss7-11 ~]# systemctl restart network

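On the other nodes:

[root@hdss7-12 ~]# vi /etc/resolv.conf # the search line is normally added automatically when the network service restarts

search host.com

Windows: set the VMnet8 IPv4 DNS server to 10.4.7.11 ## needed so that browsers on Windows can resolve the lab domains

Prepare the certificate signing environment

On the ops host HDSS7-200.host.com:

Install CFSSL

●Certificate signing tool: CFSSL R1.2, made up of three binaries: cfssl, cfssl-json, and cfssl-certinfo

On HDSS7-200.host.com: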

~]# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O /usr/bin/cfssl

~]# wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O /usr/bin/cfssl-json

~]# wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -O /usr/bin/cfssl-certinfo

~]# chmod +x /usr/bin/cfssl*

Create the JSON config file for the CA certificate signing request (CSR)

/opt/certs/ca-csr.json

{

"CN": "OldboyEdu",

"hosts": [

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

],

"ca": {

"expiry": "175200h"

}

}

CN: Common Name. Browsers use this field to check whether a site is legitimate; it is usually the domain name. Very important.

C: Country

ST: State or province

L: Locality (region or city)

O: Organization Name (company)

OU: Organization Unit Name (department)
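The `expiry` value in `ca-csr.json` is given in hours, which is hard to read at a glance. A minimal sketch of the arithmetic, assuming 365-day years (the helper name `expiry_years` is made up for this illustration):

```shell
# Convert a cfssl-style expiry such as "175200h" into whole years.
# Assumes 24-hour days and 365-day years; leap days are ignored.
expiry_years() {
    expiry_hours=${1%h}                 # strip the trailing "h"
    echo $(( expiry_hours / 24 / 365 ))
}

expiry_years 175200h    # 175200 / 24 = 7300 days; 7300 / 365 = 20 years
```

So the CA in these notes is valid for roughly 20 years, which avoids having to rotate the root certificate during the life of a lab cluster.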

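Generate the CA certificate and private key

[root@hdss7-200 certs]# cfssl gencert -initca ca-csr.json # on its own this only prints JSON to stdout, so it is not usable as is

[root@hdss7-200 certs]# cfssl gencert -initca ca-csr.json |cfssl-json -bare ca # writes the result to files: ca.pem, ca-key.pem, ca.csr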

Deploy the Docker environment

On HDSS7-200.host.com, HDSS7-21.host.com, and HDSS7-22.host.com:

Install

curl -fsSL https://get.docker.com | bash -s docker --mirror Aliyun

Configure

[root@hdss7-21 ~]# mkdir /etc/docker /data/docker -p

[root@hdss7-21 ~]# vi /etc/docker/daemon.json

{

"graph": "/data/docker",

"storage-driver": "overlay2",

"insecure-registries": ["registry.access.redhat.com","quay.io","harbor.od.com"],

"registry-mirrors": ["https://q2gr04ke.mirror.aliyuncs.com"],

"bip": "172.7.21.1/24",

"exec-opts": ["native.cgroupdriver=systemd"],

"live-restore": true

}

[root@hdss7-21 ~]# systemctl start docker

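Do the same on 22 and 200. Note the bip: 172.7.x.1/24, where x matches the host (22, 200). On recent Docker releases the "graph" key has been replaced by "data-root".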
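The per-host `bip` follows directly from the host address, so Pod IPs reveal which node a container runs on. A small sketch of that mapping; `bip_for_host` is a hypothetical name, and it assumes the third and fourth octets of the host IP become the second and third octets of the bridge subnet, as in the 172.7.21.1/24 example above.

```shell
# Hypothetical helper: compute the docker0 bridge address (bip) for a host.
# 10.4.7.21 -> 172.7.21.1/24, following the convention in these notes.
bip_for_host() {
    echo "$1" | awk -F. '{ printf "172.%s.%s.1/24\n", $3, $4 }'
}

for ip in 10.4.7.21 10.4.7.22 10.4.7.200; do
    printf '%-12s bip=%s\n' "$ip" "$(bip_for_host "$ip")"
done
```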

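Deploy the private Docker image registry Harbor

On HDSS7-200.host.com:

Download a binary package and extract it

Harbor's official GitHub repository:

https://github.com/goharbor/harbor (a vulnerability affecting versions up to 1.7.5 was reported in November 2019; use harbor-offline 1.8.x or later)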

src]# tar xf harbor-offline-installer-v1.8.5.tgz -C /opt/

opt]# mv harbor/ harbor-v1.8.5

opt]# ln -s /opt/harbor-v1.8.5/ /opt/harbor

Edit the configuration file (only the changed keys are shown)

vi /opt/harbor/harbor.yml

hostname: harbor.od.com
http:
  port: 180
harbor_admin_password: Harbor12345
data_volume: /data/harbor
log:
  level: info
  rotate_count: 50
  rotate_size: 200M
  location: /data/harbor/logs

mkdir -p /data/harbor/logs

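Install docker-compose

yum install docker-compose -y

Install Harbor

# Harbor itself is a set of containers orchestrated on a single host, so it depends on docker-compose

sh /opt/harbor/install.sh

Check that Harbor started (run from /opt/harbor)

docker-compose ps

Install and configure nginx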

yum install nginx -y

vi /etc/nginx/conf.d/harbor.od.com.conf

server {

listen 80;

server_name harbor.od.com;

client_max_body_size 1000m;

location / {

proxy_pass http://127.0.0.1:180;

}

}

nginx -t

systemctl start nginx

systemctl enable nginx

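Configure internal DNS resolution for Harbor

- Configure

On HDSS7-11:

~]# vi /var/named/od.com.zone

2020061302 ; serial

harbor A 10.4.7.200

Note: roll the serial forward by one. When bind zone files are edited by hand, every change needs serial + 1

~]# systemctl restart named

- Check

~]# dig -t A harbor.od.com +short

10.4.7.200

(-t selects the record type to query; +short prints only the answer)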
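Rolling the serial forward by hand is easy to get wrong. The sketch below shows one way to compute the next value for the 10-digit YYYYMMDD + revision format used in these zone files. The function name `next_serial` is made up, and the date is passed in explicitly so the result is reproducible; in practice it could come from `date +%Y%m%d`.

```shell
# Compute the next zone serial in YYYYMMDDNN form.
# $1 = current serial, $2 = today's date as YYYYMMDD.
# Limitation of this sketch: it does not handle more than 99 revisions in one day.
next_serial() {
    cur_serial=$1
    today=$2
    if [ "${cur_serial%??}" = "$today" ]; then
        # Same day: bump the two-digit revision.
        echo $(( cur_serial + 1 ))
    else
        # New day: start again at revision 01.
        echo "${today}01"
    fi
}

next_serial 2020061301 20200613   # second edit on the same day
next_serial 2020061302 20200614   # first edit on the next day
```

Either way the new serial is larger than the old one, which is the property bind relies on to notice that a zone has changed.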

Open in a browser:

http://harbor.od.com

Pull nginx and push it to Harbor

docker pull nginx:1.7.9 # the tag on the public registry has no "v" prefix

# equivalent to: docker pull docker.io/library/nginx:1.7.9

docker tag 84581e99d807 harbor.od.com/public/nginx:v1.7.9

docker login harbor.od.com

Username: admin

Password: 123456

docker push harbor.od.com/public/nginx:v1.7.9

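Deploy the master node services

Deploy the etcd cluster

Create the signing config file based on the root certificate (certificate profiles for client and server communication)

On HDSS7-200: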

cd /opt/certs

vi /opt/certs/ca-config.json

{

"signing": {

"default": {

"expiry": "175200h"

},

"profiles": {

"server": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"server auth"

]

},

"client": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"client auth"

]

},

"peer": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

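Certificate types

client certificate: used by clients, so that the server can authenticate the client; for example etcdctl, etcd proxy, fleetctl, the docker client

server certificate: used by servers, so that clients can verify the server's identity; for example the docker daemon, kube-apiserver

peer certificate: a two-way certificate (both ends present one), used for communication between etcd cluster members

Create the JSON config file for the certificate signing request (CSR)

On the ops host HDSS7-200.host.com: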

vi /opt/certs/etcd-peer-csr.json

{

"CN": "k8s-etcd",

"hosts": [

"10.4.7.11",

"10.4.7.12",

"10.4.7.21",

"10.4.7.22"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

Generate the etcd certificate and private key

[root@hdss7-200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer etcd-peer-csr.json |cfssl-json -bare etcd-peer

2020/06/14 09:31:57 [INFO] generate received request

2020/06/14 09:31:57 [INFO] received CSR

2020/06/14 09:31:57 [INFO] generating key: rsa-2048

2020/06/14 09:31:58 [INFO] encoded CSR

2020/06/14 09:31:58 [INFO] signed certificate with serial number 558323261183529745818213423343421240701155084378

2020/06/14 09:31:58 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

Check the generated certificate and private key

cd /opt/certs

[root@hdss7-200 certs]# ls -l |grep etcd

-rw-r--r-- 1 root root 1062 Jun 14 09:31 etcd-peer.csr

-rw-r--r-- 1 root root 363 Jun 14 09:02 etcd-peer-csr.json

-rw------- 1 root root 1679 Jun 14 09:31 etcd-peer-key.pem

-rw-r--r-- 1 root root 1428 Jun 14 09:31 etcd-peer.pem

Create the etcd user

On HDSS7-12.host.com:

useradd -s /sbin/nologin -M etcd

Download the software, extract it, and create a symlink

etcd downloads: https://github.com/etcd-io/etcd/tags (the course recommends the stable 3.1 series)

On HDSS7-12.host.com:

[root@hdss7-12 src]# wget https://github.com/etcd-io/etcd/releases/download/v3.1.20/etcd-v3.1.20-linux-amd64.tar.gz

[root@hdss7-12 src]# tar xfv etcd-v3.1.20-linux-amd64.tar.gz -C /opt/

[root@hdss7-12 opt]# mv etcd-v3.1.20-linux-amd64 etcd-v3.1.20

[root@hdss7-12 opt]# ln -s etcd-v3.1.20 etcd

[root@hdss7-12 opt]# ll

total 0

lrwxrwxrwx 1 root root 12 Jun 14 10:19 etcd -> etcd-v3.1.20

drwxr-xr-x 3 478493 89939 123 Oct 11 2018 etcd-v3.1.20

drwxr-xr-x 2 root root 45 Jun 14 10:03 src

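Create directories and copy the certificates and private key

- Create the directories:

mkdir -p /opt/etcd/certs /data/etcd /data/logs/etcd-server

- Copy the certificates

Copy ca.pem, etcd-peer-key.pem, and etcd-peer.pem, generated on the ops host, into /opt/etcd/certs. Make sure the private key file has mode 600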

[root@hdss7-12 certs]# ll

total 12

-rw-r--r-- 1 root root 1346 Jun 14 10:28 ca.pem

-rw------- 1 root root 1679 Jun 14 10:29 etcd-peer-key.pem

-rw-r--r-- 1 root root 1428 Jun 14 10:28 etcd-peer.pem

- Create the etcd startup script

On HDSS7-12.host.com:

/opt/etcd/etcd-server-startup.sh

#!/bin/sh

./etcd --name etcd-server-7-12 \

--data-dir /data/etcd/etcd-server \

--listen-peer-urls https://10.4.7.12:2380 \

--listen-client-urls https://10.4.7.12:2379,http://127.0.0.1:2379 \

--quota-backend-bytes 8000000000 \

--initial-advertise-peer-urls https://10.4.7.12:2380 \

--advertise-client-urls https://10.4.7.12:2379,http://127.0.0.1:2379 \

--initial-cluster etcd-server-7-12=https://10.4.7.12:2380,etcd-server-7-21=https://10.4.7.21:2380,etcd-server-7-22=https://10.4.7.22:2380 \

--ca-file ./certs/ca.pem \

--cert-file ./certs/etcd-peer.pem \

--key-file ./certs/etcd-peer-key.pem \

--client-cert-auth \

--trusted-ca-file ./certs/ca.pem \

--peer-ca-file ./certs/ca.pem \

--peer-cert-file ./certs/etcd-peer.pem \

--peer-key-file ./certs/etcd-peer-key.pem \

--peer-client-cert-auth \

--peer-trusted-ca-file ./certs/ca.pem \

--log-output stdout

Adjust permissions

[root@hdss7-12 etcd]# chmod +x etcd-server-startup.sh

[root@hdss7-21 certs]# useradd -s /sbin/nologin -M etcd

[root@hdss7-12 etcd]# chown -R etcd.etcd /opt/etcd-v3.1.20

[root@hdss7-12 opt]# chown -R etcd.etcd /data/etcd/

[root@hdss7-12 opt]# chown -R etcd.etcd /data/logs/etcd-server/

Install supervisor

yum install supervisor -y # supervisor manages background processes

systemctl start supervisord

systemctl enable supervisord

Create the etcd-server supervisor configuration

On HDSS7-12.host.com:

/etc/supervisord.d/etcd-server.ini

[program:etcd-server-7-12]

command=/opt/etcd/etcd-server-startup.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/etcd ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=etcd ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/etcd-server/etcd.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

Start and check

supervisorctl update # loads new or changed configuration files

etcd-server-7-12: added process group

[root@hdss7-12 etcd]# supervisorctl status # shows the status of all programs

etcd-server-7-12 RUNNING pid 22656, uptime 0:00:35

[root@hdss7-12 opt]# tail -fn 200 /data/logs/etcd-server/etcd.stdout.log

[root@hdss7-12 etcd]# netstat -luntp|grep etcd

tcp 0 0 10.4.7.12:2379 0.0.0.0:* LISTEN 22657/./etcd

tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 22657/./etcd

tcp 0 0 10.4.7.12:2380 0.0.0.0:* LISTEN 22657/./etcd

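The other two etcd nodes are set up the same way

HDSS7-21.host.com HDSS7-22.host.com

/opt/etcd/etcd-server-startup.sh ## change --name and every node-specific IP address (listen, advertise, and peer URLs)

/etc/supervisord.d/etcd-server.ini # change the host suffix in the program name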
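While `--name` and the listen/advertise URLs differ per node, the `--initial-cluster` value must be identical on all three. A sketch that builds it from the member IPs, assuming the `etcd-server-<3rd octet>-<4th octet>` naming and peer port 2380 used in the startup script (the function name `etcd_initial_cluster` is invented for this example):

```shell
# Build the --initial-cluster value from a list of member IPs.
# Naming and port follow the startup script in these notes.
etcd_initial_cluster() {
    cluster=""
    for member_ip in "$@"; do
        member_name="etcd-server-$(echo "$member_ip" | awk -F. '{ print $3 "-" $4 }')"
        # Join entries with commas, without a leading comma on the first one.
        cluster="${cluster:+$cluster,}${member_name}=https://${member_ip}:2380"
    done
    echo "$cluster"
}

etcd_initial_cluster 10.4.7.12 10.4.7.21 10.4.7.22
```

Generating the string once and pasting it into every node's script avoids the copy-and-edit mistakes that are easy to make when adapting the file by hand.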

Once all three etcd nodes are up, check the cluster state

## Can be run on any member

[root@hdss7-21 etcd]# ./etcdctl cluster-health

member 988139385f78284 is healthy: got healthy result from http://127.0.0.1:2379

member 5a0ef2a004fc4349 is healthy: got healthy result from http://127.0.0.1:2379

member f4a0cb0a765574a8 is healthy: got healthy result from http://127.0.0.1:2379

cluster is healthy

[root@hdss7-21 etcd]# ./etcd member list

2020-06-14 12:05:54.774522 E | etcdmain: error verifying flags, 'member' is not a valid flag. See 'etcd --help'.

[root@hdss7-21 etcd]# ./etcdctl member list

988139385f78284: name=etcd-server-7-22 peerURLs=https://10.4.7.22:2380 clientURLs=http://127.0.0.1:2379,https://10.4.7.12:2379 isLeader=false

5a0ef2a004fc4349: name=etcd-server-7-21 peerURLs=https://10.4.7.21:2380 clientURLs=http://127.0.0.1:2379,https://10.4.7.21:2379 isLeader=false

f4a0cb0a765574a8: name=etcd-server-7-12 peerURLs=https://10.4.7.12:2380 clientURLs=http://127.0.0.1:2379,https://10.4.7.12:2379 isLeader=true
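In the member list above, etcd-server-7-22 advertises `https://10.4.7.12:2379` as a client URL, which suggests that `--advertise-client-urls` was not changed when the startup script was copied to that node. A sketch of a check for this kind of mismatch; it only parses the text format shown above, and the function names are made up for the illustration.

```shell
# List members whose clientURLs do not contain the IP from their own peerURLs.
# Reads "etcdctl member list" (v2 API) output on stdin.
mismatched_members() {
    awk '{
        name = ""; peer = ""; client = ""
        for (i = 1; i <= NF; i++) {
            if ($i ~ /^name=/)            name   = substr($i, 6)
            else if ($i ~ /^peerURLs=/)   peer   = substr($i, 10)
            else if ($i ~ /^clientURLs=/) client = substr($i, 12)
        }
        ip = peer
        sub(/^https?:\/\//, "", ip)   # drop the scheme
        sub(/:.*/, "", ip)            # drop the port
        if (name != "" && index(client, "//" ip ":") == 0) print name
    }'
}

# Sample input copied from the output recorded in these notes.
sample_member_list() {
    cat <<'EOF'
988139385f78284: name=etcd-server-7-22 peerURLs=https://10.4.7.22:2380 clientURLs=http://127.0.0.1:2379,https://10.4.7.12:2379 isLeader=false
5a0ef2a004fc4349: name=etcd-server-7-21 peerURLs=https://10.4.7.21:2380 clientURLs=http://127.0.0.1:2379,https://10.4.7.21:2379 isLeader=false
f4a0cb0a765574a8: name=etcd-server-7-12 peerURLs=https://10.4.7.12:2380 clientURLs=http://127.0.0.1:2379,https://10.4.7.12:2379 isLeader=true
EOF
}

sample_member_list | mismatched_members
```

On a live cluster the same function could be fed with `./etcdctl member list | mismatched_members`.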

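Deploy the kube-apiserver cluster

Cluster plan

10.4.7.11 and 10.4.7.12 run nginx as a layer-4 load balancer, with keepalived providing the VIP 10.4.7.10 in front of the two kube-apiserver instances for high availability

The steps below use hdss7-21.host.com as the example

Download the software, extract it, and create a symlink

On hdss7-21.host.com:

/opt/src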

rz kubernetes-server-linux-amd64-v1.15.2.tar.gz

[root@hdss7-21 src]# tar xf kubernetes-server-linux-amd64-v1.15.2.tar.gz -C /opt/

[root@hdss7-21 opt]# mv kubernetes /opt/kubernetes-v.1.15.2

[root@hdss7-21 opt]# ln -s kubernetes-v.1.15.2/ /opt/kubernetes

[root@hdss7-21 opt]# ll

total 0

drwx--x--x 4 root root 28 Jun 13 16:15 containerd

lrwxrwxrwx 1 root root 13 Jun 14 11:16 etcd -> etcd-v3.1.20/

drwxr-xr-x 4 etcd etcd 166 Jun 14 12:00 etcd-v3.1.20

lrwxrwxrwx 1 root root 20 Jun 26 16:43 kubernetes -> kubernetes-v.1.15.2/

drwxr-xr-x 4 root root 79 Aug 5 2019 kubernetes-v.1.15.2

drwxr-xr-x 2 root root 97 Jun 26 16:35 src

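Issue the client certificate

On the ops host HDSS7-200.host.com:

Create the JSON config file for the certificate signing request (CSR) (this is the certificate the apiserver uses when talking to etcd)

# vi client-csr.json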

{

"CN": "k8s-node",

"hosts": [

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

Generate the certificate and private key

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client client-csr.json |cfssl-json -bare client

Check the generated certificate and private key

ls -l |grep client

-rw-r--r-- 1 root root 993 Jun 26 17:22 client.csr

-rw-r--r-- 1 root root 281 Jun 26 17:16 client-csr.json

-rw------- 1 root root 1679 Jun 26 17:22 client-key.pem

-rw-r--r-- 1 root root 1363 Jun 26 17:22 client.pem

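Issue the kube-apiserver certificate

On the ops host HDSS7-200.host.com:

Create the JSON config file for the certificate signing request (CSR) (the certificate the apiserver itself needs to start)

# vi apiserver-csr.json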

{

"CN": "k8s-apiserver",

"hosts": [

"127.0.0.1",

"192.168.0.1",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local",

"10.4.7.10",

"10.4.7.21",

"10.4.7.22",

"10.4.7.23"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

Generate the certificate and private key

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server apiserver-csr.json |cfssl-json -bare apiserver

Check the generated certificate and private key

ls -l |grep apiserver

-rw-r--r-- 1 root root 1249 Jun 26 17:38 apiserver.csr

-rw-r--r-- 1 root root 566 Jun 26 17:35 apiserver-csr.json

-rw------- 1 root root 1675 Jun 26 17:38 apiserver-key.pem

-rw-r--r-- 1 root root 1598 Jun 26 17:38 apiserver.pem

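Copy the certificates to each compute node and create the configuration

On HDSS7-21.host.com:

Copy the certificates and private keys; private key files must have mode 600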

cd /opt/kubernetes/server/bin

mkdir cert

[root@hdss7-21 cert]# ll

total 24

-rw------- 1 root root 1675 Jun 26 17:53 apiserver-key.pem

-rw-r--r-- 1 root root 1598 Jun 26 17:53 apiserver.pem

-rw------- 1 root root 1679 Jun 26 17:51 ca-key.pem

-rw-r--r-- 1 root root 1346 Jun 26 17:48 ca.pem

-rw------- 1 root root 1679 Jun 26 17:52 client-key.pem

-rw-r--r-- 1 root root 1363 Jun 26 17:52 client.pem

Create the configuration (Kubernetes audit logging)

[root@hdss7-21 conf]# vi audit.yaml

apiVersion: audit.k8s.io/v1beta1 # This is required.
kind: Policy
# Don't generate audit events for all requests in RequestReceived stage.
omitStages:
  - "RequestReceived"
rules:
  # Log pod changes at RequestResponse level
  - level: RequestResponse
    resources:
    - group: ""
      # Resource "pods" doesn't match requests to any subresource of pods,
      # which is consistent with the RBAC policy.
      resources: ["pods"]
  # Log "pods/log", "pods/status" at Metadata level
  - level: Metadata
    resources:
    - group: ""
      resources: ["pods/log", "pods/status"]
  # Don't log requests to a configmap called "controller-leader"
  - level: None
    resources:
    - group: ""
      resources: ["configmaps"]
      resourceNames: ["controller-leader"]
  # Don't log watch requests by the "system:kube-proxy" on endpoints or services
  - level: None
    users: ["system:kube-proxy"]
    verbs: ["watch"]
    resources:
    - group: "" # core API group
      resources: ["endpoints", "services"]
  # Don't log authenticated requests to certain non-resource URL paths.
  - level: None
    userGroups: ["system:authenticated"]
    nonResourceURLs:
    - "/api*" # Wildcard matching.
    - "/version"
  # Log the request body of configmap changes in kube-system.
  - level: Request
    resources:
    - group: "" # core API group
      resources: ["configmaps"]
    # This rule only applies to resources in the "kube-system" namespace.
    # The empty string "" can be used to select non-namespaced resources.
    namespaces: ["kube-system"]
  # Log configmap and secret changes in all other namespaces at the Metadata level.
  - level: Metadata
    resources:
    - group: "" # core API group
      resources: ["secrets", "configmaps"]
  # Log all other resources in core and extensions at the Request level.
  - level: Request
    resources:
    - group: "" # core API group
    - group: "extensions" # Version of group should NOT be included.
  # A catch-all rule to log all other requests at the Metadata level.
  - level: Metadata
    # Long-running requests like watches that fall under this rule will not
    # generate an audit event in RequestReceived.
    omitStages:
      - "RequestReceived"

Create the startup script

On HDSS7-21.host.com:

/opt/kubernetes/server/bin/kube-apiserver.sh

#!/bin/bash

./kube-apiserver \

--apiserver-count 2 \

--audit-log-path /data/logs/kubernetes/kube-apiserver/audit-log \

--audit-policy-file ./conf/audit.yaml \

--authorization-mode RBAC \

--client-ca-file ./cert/ca.pem \

--requestheader-client-ca-file ./cert/ca.pem \

--enable-admission-plugins NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota \

--etcd-cafile ./cert/ca.pem \

--etcd-certfile ./cert/client.pem \

--etcd-keyfile ./cert/client-key.pem \

--etcd-servers https://10.4.7.12:2379,https://10.4.7.21:2379,https://10.4.7.22:2379 \

--service-account-key-file ./cert/ca-key.pem \

--service-cluster-ip-range 192.168.0.0/16 \

--service-node-port-range 3000-29999 \

--target-ram-mb=1024 \

--kubelet-client-certificate ./cert/client.pem \

--kubelet-client-key ./cert/client-key.pem \

--log-dir /data/logs/kubernetes/kube-apiserver \

--tls-cert-file ./cert/apiserver.pem \

--tls-private-key-file ./cert/apiserver-key.pem \

--v 2
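The `192.168.0.1` entry in the `hosts` list of `apiserver-csr.json` is tied to `--service-cluster-ip-range 192.168.0.0/16`: the apiserver gives the built-in `kubernetes` Service the first address of the service range, so the serving certificate has to cover it. A sketch of that calculation for an arbitrary IPv4 CIDR (the function name `first_service_ip` is made up here):

```shell
# Print the first address after the network address of an IPv4 CIDR,
# e.g. 192.168.0.0/16 -> 192.168.0.1.
first_service_ip() {
    svc_ip=${1%/*}
    svc_bits=${1#*/}
    o1=$(echo "$svc_ip" | cut -d. -f1)
    o2=$(echo "$svc_ip" | cut -d. -f2)
    o3=$(echo "$svc_ip" | cut -d. -f3)
    o4=$(echo "$svc_ip" | cut -d. -f4)
    # Pack the octets into one integer, mask off the host bits, add one.
    addr=$(( (o1 << 24) | (o2 << 16) | (o3 << 8) | o4 ))
    mask=$(( (0xFFFFFFFF << (32 - svc_bits)) & 0xFFFFFFFF ))
    first=$(( (addr & mask) + 1 ))
    printf '%d.%d.%d.%d\n' \
        $(( (first >> 24) & 255 )) $(( (first >> 16) & 255 )) \
        $(( (first >> 8) & 255 ))  $(( first & 255 ))
}

first_service_ip 192.168.0.0/16
```

If the service range is ever changed, the certificate's `hosts` list needs the new first address as well, otherwise in-cluster clients that reach the apiserver through the `kubernetes` Service will fail TLS verification.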

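Adjust permissions and create directories

On HDSS7-21.host.com:

/opt/kubernetes/server/bin

chmod +x kube-apiserver.sh

mkdir -p /data/logs/kubernetes/kube-apiserver # the service fails to start if this directory does not exist

Create the supervisor configuration

Supervisor manages the apiserver process and brings it back after an abnormal exit

On HDSS7-21.host.com:

# vi /etc/supervisord.d/kube-apiserver.ini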

[program:kube-apiserver-7-21]

command=/opt/kubernetes/server/bin/kube-apiserver.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

supervisorctl update ## loads the new configuration and starts the program

kube-apiserver-7-21: added process group

Repeat on HDSS7-22.host.com

Check the status on both nodes

[root@hdss7-21 bin]# supervisorctl status

etcd-server-7-21 RUNNING pid 6491, uptime 8:13:37

kube-apiserver-7-21 RUNNING pid 7440, uptime 0:32:08

[root@hdss7-22 bin]# supervisorctl update

kube-apiserver-7-21: added process group

[root@hdss7-22 bin]# supervisorctl status

etcd-server-7-22 RUNNING pid 6515, uptime 8:39:19

kube-apiserver-7-21 RUNNING pid 7377, uptime 0:02:51

The apiserver runs as root

etcd runs as an unprivileged user

## Check the listening ports

[root@hdss7-22 bin]# netstat -lntup |grep kube-api

tcp 0 0 127.0.0.1:8080 0.0.0.0:* LISTEN 7378/./kube-apiserv

tcp6 0 0 :::6443 :::* LISTEN 7378/./kube-apiserv

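Configure the layer-4 reverse proxy

On HDSS7-11.host.com and HDSS7-12.host.com:

Install nginx

yum install nginx -y

nginx configuration

/etc/nginx/nginx.conf: append the layer-4 (stream) block at the end of the file, outside the http block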

stream {

upstream kube-apiserver {

server 10.4.7.21:6443 max_fails=3 fail_timeout=30s;

server 10.4.7.22:6443 max_fails=3 fail_timeout=30s;

}

server {

listen 7443;

proxy_connect_timeout 2s;

proxy_timeout 900s;

proxy_pass kube-apiserver;

}

}

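Install keepalived

yum install keepalived -y

- Port check script that triggers VIP failover

/etc/keepalived/check_port.sh

#!/bin/bash
# keepalived port check script
# Usage, in the keepalived configuration file:
# vrrp_script check_port {                          # define a vrrp_script check
#     script "/etc/keepalived/check_port.sh 6379"   # port to check
#     interval 2                                    # check interval in seconds
# }
CHK_PORT=$1
if [ -n "$CHK_PORT" ]; then
    # Count listening TCP sockets whose local address ends in :PORT
    # (the anchored pattern avoids matching e.g. 17443 when checking 7443)
    PORT_PROCESS=$(ss -lnt | grep -c ":${CHK_PORT}[[:space:]]")
    if [ "$PORT_PROCESS" -eq 0 ]; then
        echo "Port $CHK_PORT Is Not Used,End."
        exit 1
    fi
else
    echo "Check Port Cant Be Empty!"
    exit 1
fi

# chmod +x /etc/keepalived/check_port.sh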

keepalived configuration

keepalived master:

! Configuration File for keepalived

global_defs {

router_id 10.4.7.11

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 7443"

interval 2

weight -20

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 251

priority 100

advert_int 1

mcast_src_ip 10.4.7.11

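nopreempt ## non-preemptive: after nginx recovers, the VIP does not float back to the original master. In production a VIP must not bounce back and forth; a VIP move counts as a major incident. (keepalived only honors nopreempt when the instance's initial state is BACKUP)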

authentication {

auth_type PASS

auth_pass 11111111

}

track_script {

chk_nginx

}

virtual_ipaddress {

10.4.7.10

}

}

keepalived backup:

! Configuration File for keepalived

global_defs {

router_id 10.4.7.12

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 7443"

interval 2

weight -20

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 251

mcast_src_ip 10.4.7.12

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 11111111

}

track_script {

chk_nginx

}

virtual_ipaddress {

10.4.7.10

}

}

##systemctl start keepalived.service

##systemctl enable keepalived.service
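The failover in the two configurations above comes down to simple arithmetic: while `check_port.sh` fails, `weight -20` lowers that node's priority, and the node with the higher effective priority holds the VIP. A sketch of the calculation, covering only the negative-weight case used here (the function name is invented for this example):

```shell
# Effective VRRP priority for a node with a negative vrrp_script weight.
# $1 = configured priority, $2 = script weight (negative), $3 = check exit status.
effective_priority() {
    if [ "$3" -eq 0 ]; then
        echo "$1"               # check passed: priority unchanged
    else
        echo $(( $1 + $2 ))     # check failed: priority reduced by |weight|
    fi
}

echo "master, nginx up:   $(effective_priority 100 -20 0)"
echo "master, nginx down: $(effective_priority 100 -20 1)"
echo "backup, nginx up:   $(effective_priority 90 -20 0)"
```

With nginx down on 10.4.7.11 its priority drops to 80, below the backup's 90, so 10.4.7.12 takes over 10.4.7.10. A weight smaller in magnitude than the priority gap of 10 would never trigger a failover.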

Deploy controller-manager

Cluster plan:

| Hostname | Role | IP |

|---|---|---|

| HDSS7-21.host.com | controller-manager | 10.4.7.21 |

| HDSS7-22.host.com | controller-manager | 10.4.7.22 |

Note: HDSS7-21.host.com is used as the example; host 22 is set up the same way and can be done in parallel

Create the startup script

On HDSS7-21.host.com:

/opt/kubernetes/server/bin/kube-controller-manager.sh

#!/bin/sh

./kube-controller-manager \

--cluster-cidr 172.7.0.0/16 \

--leader-elect true \

--log-dir /data/logs/kubernetes/kube-controller-manager \

--master http://127.0.0.1:8080 \

--service-account-private-key-file ./cert/ca-key.pem \

--service-cluster-ip-range 192.168.0.0/16 \

--root-ca-file ./cert/ca.pem \

--v 2

Adjust file permissions and create the directory

On HDSS7-21.host.com:

chmod +x /opt/kubernetes/server/bin/kube-controller-manager.sh

mkdir -p /data/logs/kubernetes/kube-controller-manager

Create the supervisor configuration

On HDSS7-21.host.com:

/etc/supervisord.d/kube-controller-manager.ini

[program:kube-controller-manager-7-21]

command=/opt/kubernetes/server/bin/kube-controller-manager.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-controller-manager/controller.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

Start the service and check

On HDSS7-21.host.com:

[root@hdss7-21 ~]# supervisorctl update

kube-controller-manager-7-21: added process group

[root@hdss7-21 ~]# supervisorctl status

etcd-server-7-21 RUNNING pid 6491, uptime 11:32:58

kube-apiserver-7-21 RUNNING pid 7440, uptime 3:51:28

kube-controller-manager-7-21 RUNNING pid 7680, uptime 0:01:11

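Install the kube-controller-manager service on HDSS7-22.host.com in the same way, then start and check it

Deploy kube-scheduler

Cluster plan

| Hostname | Role | IP |

|---|---|---|

| HDSS7-21.host.com | kube-scheduler | 10.4.7.21 |

| HDSS7-22.host.com | kube-scheduler | 10.4.7.22 |

Note: HDSS7-21.host.com is used as the example; host 22 is set up the same way and can be done in parallel

Create the startup script

On HDSS7-21.host.com: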

/opt/kubernetes/server/bin/kube-scheduler.sh

#!/bin/sh

./kube-scheduler \

--leader-elect \

--log-dir /data/logs/kubernetes/kube-scheduler \

--master http://127.0.0.1:8080 \

--v 2

Adjust file permissions and create the directory

On HDSS7-21.host.com:

chmod +x /opt/kubernetes/server/bin/kube-scheduler.sh

mkdir -p /data/logs/kubernetes/kube-scheduler

Create the supervisor configuration

On HDSS7-21.host.com:

/etc/supervisord.d/kube-scheduler.ini

[program:kube-scheduler-7-21]

command=/opt/kubernetes/server/bin/kube-scheduler.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-scheduler/scheduler.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

Start the service and check it

On HDSS7-21.host.com:

[root@hdss7-21 ~]# supervisorctl update

kube-scheduler-7-21: added process group

[root@hdss7-21 ~]# supervisorctl status

etcd-server-7-21 RUNNING pid 6491, uptime 11:49:56

kube-apiserver-7-21 RUNNING pid 7440, uptime 4:08:26

kube-controller-manager-7-21 RUNNING pid 7680, uptime 0:18:09

kube-scheduler-7-21 RUNNING pid 7707, uptime 0:00:31

Check the cluster status

[root@hdss7-21 ~]# ln -s /opt/kubernetes/server/bin/kubectl /usr/bin/kubectl ## symlink kubectl onto the PATH; it is needed to query the cluster status

[root@hdss7-21 ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health": "true"}

etcd-2 Healthy {"health": "true"}

etcd-1 Healthy {"health": "true"}
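The health check above is easy to script. The following is a minimal sketch rather than part of the original notes: the function name `unhealthy_components` and the canned sample (including the invented failing line) are assumptions for illustration. It filters `kubectl get cs` output and prints every component whose STATUS column is not Healthy.

```shell
#!/bin/sh
# Print the name of every component whose STATUS column is not "Healthy".
# Reads `kubectl get cs` output on stdin; prints nothing when all are healthy.
unhealthy_components() {
    awk '$1 != "NAME" && NF > 0 && $2 != "Healthy" { print $1 }'
}

# Canned sample for demonstration; the controller-manager line is invented.
unhealthy_components <<'EOF'
NAME                 STATUS      MESSAGE              ERROR
scheduler            Healthy     ok
controller-manager   Unhealthy   (hypothetical failure message)
etcd-0               Healthy     {"health": "true"}
EOF
```

On a master node the same function would be fed live data with `kubectl get cs | unhealthy_components`.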

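Deploy the Node services

Deploy kubelet

Cluster plan

| Hostname | Role | IP |
|---|---|---|
| HDSS7-21.host.com | kubelet | 10.4.7.21 |
| HDSS7-22.host.com | kubelet | 10.4.7.22 |

Note: this guide uses HDSS7-21.host.com as the example; the other compute node is set up the same way.

Issue the kubelet certificate

On the ops host HDSS7-200.host.com:

Create the JSON configuration file for the certificate signing request (CSR)

# vi kubelet-csr.json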

{

"CN": "k8s-kubelet",

"hosts": [

"127.0.0.1",

"10.4.7.10",

"10.4.7.21",

"10.4.7.22",

"10.4.7.23",

"10.4.7.24",

"10.4.7.25",

"10.4.7.26",

"10.4.7.27",

"10.4.7.28"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}
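The `hosts` list in the CSR fixes which addresses the issued certificate covers, so it is worth confirming that a node's IP is listed before deploying kubelet there. The sketch below assumes a helper named `csr_has_host` (hypothetical, not from the notes) and uses a plain text match instead of a JSON parser, which is adequate for a flat list like the one above.

```shell
#!/bin/sh
# Return 0 if the given IP appears as a quoted entry in the CSR JSON file.
# Arguments: <csr json file> <ip address>
csr_has_host() {
    grep -Fq "\"$2\"" "$1"
}

# Demo against a shortened, throwaway copy of the CSR.
tmp=$(mktemp)
cat > "$tmp" <<'EOF'
{ "CN": "k8s-kubelet", "hosts": ["127.0.0.1", "10.4.7.21", "10.4.7.22"] }
EOF
for ip in 10.4.7.22 10.4.7.30; do
    if csr_has_host "$tmp" "$ip"; then
        echo "$ip: covered"
    else
        echo "$ip: not covered, re-issue the certificate before adding this node"
    fi
done
rm -f "$tmp"
```

Because the closing quote is part of the match, `10.4.7.2` does not falsely match `10.4.7.21`.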

Generate the kubelet certificate and private key

/opt/certs

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server kubelet-csr.json | cfssl-json -bare kubelet

2020/06/27 11:02:34 [INFO] generate received request

2020/06/27 11:02:34 [INFO] received CSR

2020/06/27 11:02:34 [INFO] generating key: rsa-2048

2020/06/27 11:02:34 [INFO] encoded CSR

2020/06/27 11:02:34 [INFO] signed certificate with serial number 71509884340440940923668995138673838436096221898

2020/06/27 11:02:34 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

Check the generated certificate and private key

/opt/certs

[root@hdss7-200 certs]# ls -l |grep kubelet

-rw-r--r-- 1 root root 1115 Jun 27 11:02 kubelet.csr

-rw-r--r-- 1 root root 452 Jun 27 10:59 kubelet-csr.json

-rw------- 1 root root 1679 Jun 27 11:02 kubelet-key.pem

-rw-r--r-- 1 root root 1468 Jun 27 11:02 kubelet.pem

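Copy the certificates to each compute node and create the configuration

On HDSS7-21.host.com (distribute the certificates to both 7-21 and 7-22):

Copy the certificate and private key; make sure the private key file has mode 600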

[root@hdss7-21 cert]# scp hdss7-200:/opt/certs/kubelet.pem .

[root@hdss7-21 cert]# scp hdss7-200:/opt/certs/kubelet-key.pem .

[root@hdss7-21 cert]# ll

total 32

-rw------- 1 root root 1675 Jun 26 17:53 apiserver-key.pem

-rw-r--r-- 1 root root 1598 Jun 26 17:53 apiserver.pem

-rw------- 1 root root 1679 Jun 26 17:51 ca-key.pem

-rw-r--r-- 1 root root 1346 Jun 26 17:48 ca.pem

-rw------- 1 root root 1679 Jun 26 17:52 client-key.pem

-rw-r--r-- 1 root root 1363 Jun 26 17:52 client.pem

-rw------- 1 root root 1679 Jun 27 11:17 kubelet-key.pem

-rw-r--r-- 1 root root 1468 Jun 27 11:17 kubelet.pem
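The mode-600 requirement on private keys can be checked mechanically. This is a small sketch under stated assumptions: `loose_keys` is a hypothetical helper name, keys are assumed to follow the `*-key.pem` naming seen in the listing, and `stat -c %a` is the GNU form found on CentOS.

```shell
#!/bin/sh
# Print every *-key.pem file in a directory whose mode is not 600,
# followed by its actual mode.
loose_keys() {
    for f in "$1"/*-key.pem; do
        [ -e "$f" ] || continue
        mode=$(stat -c %a "$f")
        [ "$mode" = "600" ] || echo "$f $mode"
    done
}

# Demo in a throwaway directory; on a node the argument would be
# /opt/kubernetes/server/bin/cert
demo=$(mktemp -d)
touch "$demo/kubelet-key.pem" "$demo/client-key.pem"
chmod 600 "$demo/kubelet-key.pem"
chmod 644 "$demo/client-key.pem"
loose_keys "$demo"    # reports only client-key.pem, with mode 644
rm -rf "$demo"
```

Any file it reports can be fixed with `chmod 600`.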

Create the configuration

set-cluster

Note: run this in the conf directory

/opt/kubernetes/server/bin/conf

conf]# kubectl config set-cluster myk8s \

--certificate-authority=/opt/kubernetes/server/bin/cert/ca.pem \

--embed-certs=true \

--server=https://10.4.7.10:7443 \

--kubeconfig=kubelet.kubeconfig

Cluster "myk8s" set.

set-credentials

Note: run this in the conf directory

/opt/kubernetes/server/bin/conf

conf]# kubectl config set-credentials k8s-node \

--client-certificate=/opt/kubernetes/server/bin/cert/client.pem \

--client-key=/opt/kubernetes/server/bin/cert/client-key.pem \

--embed-certs=true \

--kubeconfig=kubelet.kubeconfig

User "k8s-node" set.

set-context

Note: run this in the conf directory

/opt/kubernetes/server/bin/conf

conf]# kubectl config set-context myk8s-context \

--cluster=myk8s \

--user=k8s-node \

--kubeconfig=kubelet.kubeconfig

Context "myk8s-context" created.

use-context: switch the context to the k8s-node user

Note: run this in the conf directory

/opt/kubernetes/server/bin/conf

conf]# kubectl config use-context myk8s-context --kubeconfig=kubelet.kubeconfig

Switched to context "myk8s-context".
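The four `kubectl config` steps above follow a fixed pattern, so they can be wrapped in one function. The sketch below is an illustration, not the notes' own method: `run`, `build_kubeconfig`, `DRY_RUN`, `CERT_DIR` and `API_SERVER` are hypothetical names, and by default the commands are only printed so the script can be reviewed before anything is executed.

```shell
#!/bin/sh
# Paths and endpoint taken from the steps above.
CERT_DIR=/opt/kubernetes/server/bin/cert
API_SERVER=https://10.4.7.10:7443

# Print the command when DRY_RUN is 1 (the default here), otherwise run it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Arguments: <user> <client cert file> <client key file> <kubeconfig file>
build_kubeconfig() {
    user=$1 cert=$2 key=$3 file=$4
    run kubectl config set-cluster myk8s \
        --certificate-authority="$CERT_DIR/ca.pem" --embed-certs=true \
        --server="$API_SERVER" --kubeconfig="$file"
    run kubectl config set-credentials "$user" \
        --client-certificate="$CERT_DIR/$cert" --client-key="$CERT_DIR/$key" \
        --embed-certs=true --kubeconfig="$file"
    run kubectl config set-context myk8s-context \
        --cluster=myk8s --user="$user" --kubeconfig="$file"
    run kubectl config use-context myk8s-context --kubeconfig="$file"
}

# Prints the four commands used for the kubelet kubeconfig.
build_kubeconfig k8s-node client.pem client-key.pem kubelet.kubeconfig
```

Setting `DRY_RUN=0` in the conf directory would execute the commands instead of printing them.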

k8s-node.yaml: a role binding that grants the k8s-node user the permissions of a cluster compute node

Create the resource manifest

/opt/kubernetes/server/bin/conf/k8s-node.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: k8s-node
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: k8s-node

Create the cluster role binding

[root@hdss7-21 conf]# kubectl create -f k8s-node.yaml

clusterrolebinding.rbac.authorization.k8s.io/k8s-node created

Check

[root@hdss7-21 conf]# kubectl get clusterrolebinding k8s-node

NAME AGE

k8s-node 72s

On HDSS7-22.host.com, simply copy the file over; there is no need to repeat the set commands, because the kubeconfig has already been written to disk

[root@hdss7-22 ~]# cd /opt/kubernetes/server/bin/conf/

[root@hdss7-22 conf]# scp hdss7-21:/opt/kubernetes/server/bin/conf/kubelet.kubeconfig .

Prepare the pause base image

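On the ops host HDSS7-200.host.com:

Download the image, tag it, and push it to the harbor registry

# docker login harbor.od.com ## log in as admin / 123456

If the login fails, docker and harbor may not be running; start them first:

# systemctl start docker

# docker-compose up -d

# docker pull kubernetes/pause

# docker tag f9d5de079539 harbor.od.com/public/pause:latest

# docker push harbor.od.com/public/pause:latest

What pause does: its full name is the infrastructure container (also called infra), the base container of a pod.

kubelet must be told which image to use for it at startup. Before any workload container starts, the pause container initializes the network, IPC and UTS namespaces for it.

In Kubernetes the pause container mainly provides the following to each workload container:

●PID namespace: the applications in a pod can see each other's process IDs (when process namespace sharing is enabled for the pod).

●Network namespace: the containers in a pod share the same IP address and port range.

●IPC namespace: the containers in a pod can communicate through System V IPC or POSIX message queues.

●UTS namespace: the containers in a pod share one hostname.

●Volumes (shared storage): every container in a pod can access the volumes defined at the pod level.

Author: 程序员同行者. Link: https://www.jianshu.com/p/bff9cf543ca4. Source: Jianshu (简书). Copyright belongs to the author; contact the author for authorization before commercial reuse, and credit the source for non-commercial reuse.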

Create the kubelet startup script

On HDSS7-21.host.com (HDSS7-22 can be done in parallel):

/opt/kubernetes/server/bin/kubelet.sh

#!/bin/sh

./kubelet \

--anonymous-auth=false \

--cgroup-driver systemd \

--cluster-dns 192.168.0.2 \

--cluster-domain cluster.local \

--runtime-cgroups=/systemd/system.slice \

--kubelet-cgroups=/systemd/system.slice \

--fail-swap-on="false" \

--client-ca-file ./cert/ca.pem \

--tls-cert-file ./cert/kubelet.pem \

--tls-private-key-file ./cert/kubelet-key.pem \

--hostname-override hdss7-21.host.com \

--image-gc-high-threshold 20 \

--image-gc-low-threshold 10 \

--kubeconfig ./conf/kubelet.kubeconfig \

--log-dir /data/logs/kubernetes/kube-kubelet \

--pod-infra-container-image harbor.od.com/public/pause:latest \

--root-dir /data/kubelet

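Note: the kubelet startup script differs slightly on each host in the cluster (for example the --hostname-override value); remember to adjust it when deploying other nodes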
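One way to avoid hand-editing the script on every node is to rewrite the host-specific flag with `sed`. The following is a sketch that assumes `--hostname-override` is the only per-host value in the script; `render_for_host` is a hypothetical helper name.

```shell
#!/bin/sh
# Rewrite the --hostname-override value in a startup script read from stdin.
# Argument: the target node's hostname.
render_for_host() {
    sed -E "s/(--hostname-override[ =])[^ ]+/\1$1/"
}

# Demo on three lines of the script above.
printf '%s\n' \
    './kubelet \' \
    '--hostname-override hdss7-21.host.com \' \
    '--root-dir /data/kubelet' | render_for_host hdss7-22.host.com
```

The supervisor program name (for example `kube-kubelet-7-21`) is also host-specific and needs the same care in the `.ini` file.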

Check the configuration and permissions, and create the log directory

On HDSS7-21.host.com (do the same on 22):

[root@hdss7-21 conf]# pwd

/opt/kubernetes/server/bin/conf

# mkdir -p /data/logs/kubernetes/kube-kubelet /data/kubelet

# chmod +x /opt/kubernetes/server/bin/kubelet.sh

Create the supervisor configuration

On HDSS7-21.host.com:

/etc/supervisord.d/kube-kubelet.ini

[program:kube-kubelet-7-21]

command=/opt/kubernetes/server/bin/kubelet.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-kubelet/kubelet.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

Start the service and check the node status

[root@hdss7-21 ~]# supervisorctl update

kube-kubelet-7-21: added process group

[root@hdss7-21 conf]# supervisorctl status

etcd-server-7-21 RUNNING pid 6259, uptime 5:45:15

kube-apiserver-7-21 RUNNING pid 6261, uptime 5:45:15

kube-controller-manager-7-21 RUNNING pid 6257, uptime 5:45:15

kube-kubelet-7-21 RUNNING pid 18626, uptime 0:58:07

kube-scheduler-7-21 RUNNING pid 6258, uptime 5:45:15

## If the service fails to start, check the log for errors: tail -n 200 /data/logs/kubernetes/kube-kubelet/kubelet.stdout.log

Check the cluster status

[root@hdss7-21 cert]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

hdss7-21.host.com Ready <none> 78m v1.15.2

hdss7-22.host.com Ready <none> 2m38s v1.15.2

label node

[root@hdss7-21 cert]# kubectl label node hdss7-21.host.com node-role.kubernetes.io/master=

node/hdss7-21.host.com labeled

[root@hdss7-21 cert]# kubectl label node hdss7-21.host.com node-role.kubernetes.io/node=

node/hdss7-21.host.com labeled

[root@hdss7-21 cert]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

hdss7-21.host.com Ready master,node 105m v1.15.2

hdss7-22.host.com Ready <none> 29m v1.15.2

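supervisor usage

Other commands:

supervisorctl help: show help

supervisorctl update: load the new configuration after a config file has been changed

supervisorctl reload: restart supervisord along with all programs in the configuration

supervisorctl restart <service name>, for example: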

supervisorctl restart kube-kubelet-7-22
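When several programs are managed on one host, it helps to restart only those that are not running. This is a minimal sketch: `not_running` is a hypothetical helper name and the FATAL line in the sample is invented for the demonstration.

```shell
#!/bin/sh
# Print the names of programs whose state is not RUNNING.
# Reads `supervisorctl status` output on stdin.
not_running() {
    awk 'NF > 0 && $2 != "RUNNING" { print $1 }'
}

# Canned sample; the kube-kubelet line is a made-up failure.
not_running <<'EOF'
etcd-server-7-22      RUNNING   pid 6259, uptime 5:45:15
kube-apiserver-7-22   RUNNING   pid 6261, uptime 5:45:15
kube-kubelet-7-22     FATAL     Exited too quickly (process log may have details)
EOF

# On a real host the result could drive restarts, for example:
#   for p in $(supervisorctl status | not_running); do
#       supervisorctl restart "$p"
#   done
```

Checking the program's stdout log first is usually wiser than restarting blindly, since a FATAL state tends to repeat until the cause is fixed.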

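Deploy kube-proxy

Purpose: connects the pod network and the cluster network

Cluster plan

| Hostname | Role | IP |
|---|---|---|
| HDSS7-21.host.com | kube-proxy | 10.4.7.21 |
| HDSS7-22.host.com | kube-proxy | 10.4.7.22 |

Note: the steps use hdss7-21.host.com as the example; the other compute node is set up the same way and can be done in parallel

Issue the kube-proxy certificate

On the ops host HDSS7-200.host.com:

Create the JSON configuration file for the certificate signing request (CSR)

# vi kube-proxy-csr.json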

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

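The CN is set directly to system:kube-proxy, a user that Kubernetes already binds to a built-in role (system:node-proxier), so no separate ClusterRoleBinding has to be created

Generate the kube-proxy certificate and private key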

# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client kube-proxy-csr.json |cfssl-json -bare kube-proxy-client

2020/06/27 17:11:44 [INFO] generate received request

2020/06/27 17:11:44 [INFO] received CSR

2020/06/27 17:11:44 [INFO] generating key: rsa-2048

2020/06/27 17:11:44 [INFO] encoded CSR

2020/06/27 17:11:44 [INFO] signed certificate with serial number 65842469619446700066178412711509736733762516362

2020/06/27 17:11:44 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

Check the certificates

[root@hdss7-200 certs]# ls -l |grep kube-proxy

-rw-r--r-- 1 root root 1005 Jun 27 17:11 kube-proxy-client.csr

-rw------- 1 root root 1675 Jun 27 17:11 kube-proxy-client-key.pem

-rw-r--r-- 1 root root 1375 Jun 27 17:11 kube-proxy-client.pem

-rw-r--r-- 1 root root 267 Jun 27 17:09 kube-proxy-csr.json

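Copy the certificates to each compute node and create the configuration

On HDSS7-21.host.com:

Copy the certificate and private key; make sure the private key file has mode 600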

[root@hdss7-21 cert]# ls -l

total 40

-rw------- 1 root root 1675 Jun 26 17:53 apiserver-key.pem

-rw-r--r-- 1 root root 1598 Jun 26 17:53 apiserver.pem

-rw------- 1 root root 1679 Jun 26 17:51 ca-key.pem

-rw-r--r-- 1 root root 1346 Jun 26 17:48 ca.pem

-rw------- 1 root root 1679 Jun 26 17:52 client-key.pem

-rw-r--r-- 1 root root 1363 Jun 26 17:52 client.pem

-rw------- 1 root root 1679 Jun 27 11:17 kubelet-key.pem

-rw-r--r-- 1 root root 1468 Jun 27 11:17 kubelet.pem

-rw------- 1 root root 1675 Jun 27 17:28 kube-proxy-client-key.pem

-rw-r--r-- 1 root root 1375 Jun 27 17:28 kube-proxy-client.pem

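Create the configuration

A kubeconfig file represents a Kubernetes user: it carries the credentials with which a client (here kube-proxy) is delivered into K8s as that user

set-cluster

Note: run this in the conf directory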

conf]# kubectl config set-cluster myk8s \

--certificate-authority=/opt/kubernetes/server/bin/cert/ca.pem \

--embed-certs=true \

--server=https://10.4.7.10:7443 \

--kubeconfig=kube-proxy.kubeconfig

Cluster "myk8s" set.

set-credentials

Note: run this in the conf directory

conf]# kubectl config set-credentials kube-proxy \

--client-certificate=/opt/kubernetes/server/bin/cert/kube-proxy-client.pem \

--client-key=/opt/kubernetes/server/bin/cert/kube-proxy-client-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

User "kube-proxy" set.

set-context

Note: run this in the conf directory

conf]# kubectl config set-context myk8s-context \

--cluster=myk8s \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

Context "myk8s-context" created.

use-context

Note: run this in the conf directory

conf]# kubectl config use-context myk8s-context --kubeconfig=kube-proxy.kubeconfig

Switched to context "myk8s-context".

On HDSS7-22.host.com, copy the file directly

[root@hdss7-22 conf]# scp hdss7-21:/opt/kubernetes/server/bin/conf/kube-proxy.kubeconfig .

Create the kube-proxy startup script

On HDSS7-21.host.com:

Load the ipvs modules:

/root/ipvs.sh

#!/bin/bash
ipvs_mods_dir="/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs"
for i in $(ls $ipvs_mods_dir|grep -o "^[^.]*")
do
  /sbin/modinfo -F filename $i &>/dev/null
  if [ $? -eq 0 ];then
    /sbin/modprobe $i
  fi
done

Create the startup script

/opt/kubernetes/server/bin/kube-proxy.sh

#!/bin/sh

./kube-proxy \

--cluster-cidr 172.7.0.0/16 \

--hostname-override hdss7-21.host.com \

--proxy-mode=ipvs \

--ipvs-scheduler=nq \

--kubeconfig ./conf/kube-proxy.kubeconfig

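Note: the kube-proxy startup script differs slightly on each host in the cluster (for example the --hostname-override value); remember to adjust it when deploying other nodes

iptables mode does not let you choose a scheduling algorithm; it behaves like rr only

ipvs mode supports many scheduling algorithms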

[root@hdss7-21 ~]# lsmod |grep ip_vs

ip_vs_wrr 12697 0

ip_vs_wlc 12519 0

ip_vs_sh 12688 0

ip_vs_sed 12519 0

ip_vs_rr 12600 0

ip_vs_pe_sip 12740 0

nf_conntrack_sip 33860 1 ip_vs_pe_sip

ip_vs_nq 12516 0

ip_vs_lc 12516 0

ip_vs_lblcr 12922 0

ip_vs_lblc 12819 0

ip_vs_ftp 13079 0

ip_vs_dh 12688 0
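Since the startup script selects `--ipvs-scheduler=nq`, the matching kernel module (`ip_vs_nq`) should appear in the `lsmod` listing before kube-proxy starts. The check below is a sketch; `ipvs_scheduler_loaded` is a hypothetical helper name, and the mapping from scheduler name to module name `ip_vs_<name>` is inferred from the listing above.

```shell
#!/bin/sh
# Return 0 if the kernel module for the given IPVS scheduler appears in
# `lsmod` output read from stdin (scheduler "nq" -> module "ip_vs_nq").
ipvs_scheduler_loaded() {
    awk -v mod="ip_vs_$1" '$1 == mod { found = 1 } END { exit !found }'
}

# Demo on a shortened copy of the listing; "sed" is deliberately absent here.
sample='ip_vs_rr 12600 0
ip_vs_nq 12516 0'
for s in nq sed; do
    if printf '%s\n' "$sample" | ipvs_scheduler_loaded "$s"; then
        echo "$s: loaded"
    else
        echo "$s: missing, run /root/ipvs.sh first"
    fi
done
```

On a node the live form would be `lsmod | ipvs_scheduler_loaded nq`.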

Check the configuration and permissions, and create the log directory

On HDSS7-21.host.com:

/opt/kubernetes/server/bin/conf

[root@hdss7-21 conf]# ls -l |grep kube-proxy

-rw------- 1 root root 6215 Jun 27 17:59 kube-proxy.kubeconfig

[root@hdss7-21 conf]# chmod +x /opt/kubernetes/server/bin/kube-proxy.sh

[root@hdss7-21 conf]# mkdir -p /data/logs/kubernetes/kube-proxy

Create the supervisor configuration

On HDSS7-21.host.com:

/etc/supervisord.d/kube-proxy.ini

[program:kube-proxy-7-21]

command=/opt/kubernetes/server/bin/kube-proxy.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-proxy/proxy.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

Start the service and check it

On HDSS7-21.host.com:

[root@hdss7-21 conf]# supervisorctl update

kube-proxy-7-21: added process group

[root@hdss7-21 conf]# supervisorctl status

etcd-server-7-21 RUNNING pid 6259, uptime 8:10:51

kube-apiserver-7-21 RUNNING pid 6261, uptime 8:10:51

kube-controller-manager-7-21 RUNNING pid 62565, uptime 0:27:32

kube-kubelet-7-21 RUNNING pid 18626, uptime 3:23:43

kube-proxy-7-21 RUNNING pid 69323, uptime 0:00:50

kube-scheduler-7-21 RUNNING pid 62541, uptime 0:27:35

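Install ipvsadm to verify LVS; LVS is in effect embedded in k8s through kube-proxy

This is LVS in one-arm routing mode, which is impressive on its own and even more so when combined with k8s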

[root@hdss7-21 conf]# yum install ipvsadm -y

[root@hdss7-21 conf]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.0.1:443 nq

-> 10.4.7.21:6443 Masq 1 0 0

-> 10.4.7.22:6443 Masq 1 0 0

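## 1. The cluster IP is bound to the node IPs.

## 2. The cluster IP is reverse-proxied to port 6443 on the two compute nodes.

## 3. Once production workloads are delivered, cluster IPs should point at pod IPs.

## 4. kube-proxy is the component that maintains the links between these three networks: the node network, the cluster network and the pod network.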

[root@hdss7-21 conf]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 192.168.0.1 <none> 443/TCP 24h
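To confirm which backends a cluster IP forwards to, the `ipvsadm -Ln` listing shown earlier can be filtered per virtual service. This is a sketch; `real_servers` is a hypothetical helper name, and the sample is the output captured above.

```shell
#!/bin/sh
# List the real servers behind one virtual service.
# Reads `ipvsadm -Ln` output on stdin; the argument is the virtual
# address, for example 192.168.0.1:443.
real_servers() {
    awk -v vip="$1" '
        $1 == "TCP" || $1 == "UDP" { in_svc = ($2 == vip); next }
        in_svc && $1 == "->" && $2 != "RemoteAddress:Port" { print $2 }
    '
}

# Demo on the output captured above.
real_servers 192.168.0.1:443 <<'EOF'
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  192.168.0.1:443 nq
  -> 10.4.7.21:6443               Masq    1      0          0
  -> 10.4.7.22:6443               Masq    1      0          0
EOF
```

For the `kubernetes` service this should list port 6443 on both apiserver hosts, matching the CLUSTER-IP from `kubectl get svc`.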

Verify the kubernetes cluster

On any compute node, create a resource manifest

Here we use HDSS7-21.host.com

/root/nginx-ds.yaml

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: nginx-ds
spec:
  template:
    metadata:
      labels:
        app: nginx-ds
    spec:
      containers:
      - name: my-nginx
        image: harbor.od.com/public/nginx:v1.7.9
        ports:
        - containerPort: 80

kubectl create -f nginx-ds.yaml

daemonset.extensions/nginx-ds created

Check

[root@hdss7-21 ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

etcd-1 Healthy {"health": "true"}

controller-manager Healthy ok

etcd-2 Healthy {"health": "true"}

etcd-0 Healthy {"health": "true"}

[root@hdss7-21 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

hdss7-21.host.com Ready master,node 5h48m v1.15.2

hdss7-22.host.com Ready <none> 4h32m v1.15.2

[root@hdss7-21 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-ds-8r4w9 0/1 ImagePullBackOff 0 40m

nginx-ds-v4sn9 0/1 ImagePullBackOff 0 40m
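ImagePullBackOff generally means the kubelet could not pull the container image. The notes do not show how this was resolved, so the following is only a starting point for diagnosis: a sketch that extracts the image references from a manifest so each can be checked against the harbor registry. `manifest_images` is a hypothetical helper name.

```shell
#!/bin/sh
# Print the unique image references found in a manifest read from stdin.
# Handles both "image: x" and "- image: x" lines.
manifest_images() {
    awk '$1 == "image:" { print $2 }
         $1 == "-" && $2 == "image:" { print $3 }' | sort -u
}

# Demo on the container section of nginx-ds.yaml.
manifest_images <<'EOF'
      containers:
      - name: my-nginx
        image: harbor.od.com/public/nginx:v1.7.9
        ports:
        - containerPort: 80
EOF
```

Each printed reference can then be tried with `docker pull` on a node; if that fails, the image has probably not been pushed to the registry yet, or the node cannot reach or authenticate to it.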

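Study prerequisites

Resource requirements for this course:

This course builds a complete K8S ecosystem and delivers a set of dubbo (Java) microservices in practice. Step by step we will implement the following:

●Continuous integration

●Configuration center

●Monitoring system

●Log collection and analysis system

●Automated operations platform (ultimately an open-source PaaS platform built on K8S)

The resources needed for this course are therefore:

●2c/2g/50g x 3 + 4c/8g/50g x 2

●Keep the environment (IP plan and deployed services) consistent with the course

Ways to obtain the resources

●Add memory to a laptop (drawbacks: cannot stay online 24 hours a day, troubleshooting is costly)

●Build your own server or workstation if you can (drawbacks: power consumption, noise)

●Rent Alibaba Cloud instances (drawbacks: expensive, environment differs from the course)

●沃佳云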

