Simple Use of Google gRPC on macOS

1. 简述

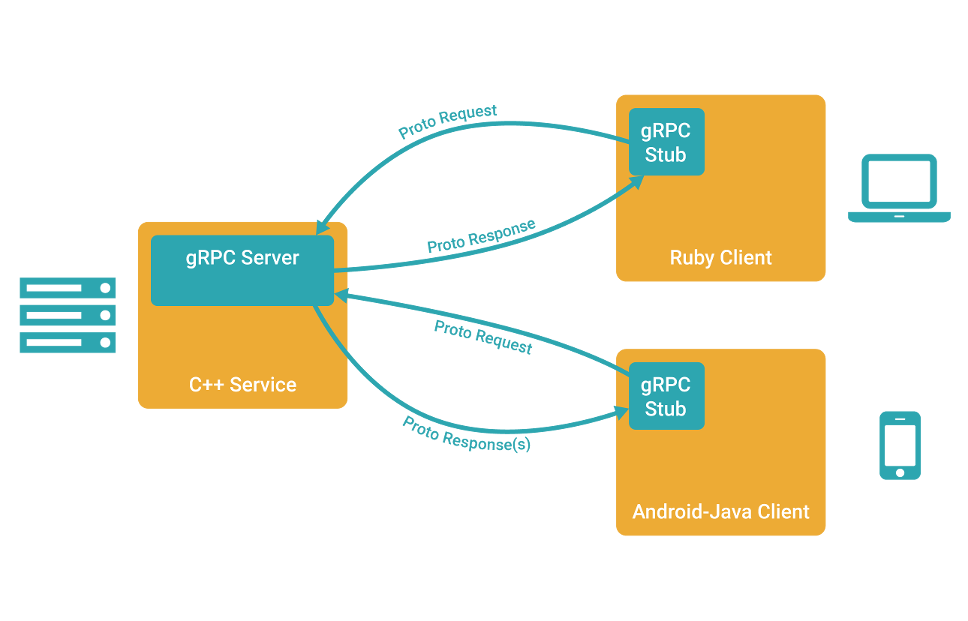

A high-performance, open-source universal RPC framework

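gRPC is a high-performance, open-source, general-purpose RPC framework designed with mobile and HTTP/2 in mind. At the time of writing it comes in C, Java, and Go versions: grpc, grpc-java, and grpc-go. The C version supports C, C++, Node.js, Python, Ruby, Objective-C, PHP, and C#.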

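gRPC is built on the HTTP/2 standard, which brings features such as bidirectional streaming, flow control, header compression, and multiplexing of many requests over a single TCP connection. These features help it perform better on mobile devices, where it uses less power and less data.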

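RPC (Remote Procedure Call) means, simply put, that one node requests a service provided by another node, so that applications can communicate with each other. It follows the client/server model: to the client, calling an interface exposed by the server looks just like calling a local function. It is typically used when a client needs fast access to data from a server interface, for example real-time speech recognition, translation, and speech synthesis.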

Below is a walkthrough of using gRPC with googleapis.

2. Adding gRPC with CocoaPods

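- Download the googleapis sources from the ios-docs-samples repository (GoogleCloudPlatform/ios-docs-samples on GitHub).

- In ios-docs-samples/speech-to-speech, find the google folder and the googleapis.podspec file.

- Copy both into your own project's root directory.

- Reference the local pod in your Podfile, e.g. `pod 'googleapis', :path => '.'` (this assumes the pod is named googleapis, matching the podspec's file name).

- cd into your project directory.

- Run `pod install --verbose` or `pod update --verbose`. This step is slow, and from mainland China it needs a proxy to reach Google's and GitHub's servers.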

3. Examples

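Only client code is shown; the server side is not covered here (feel free to explore it on your own). The snippets also rely on parts of the author's own project that are not shown: the shared instance `[ZDDogAPIService shared]`, which supplies the gRPC host `dogApis` and the HTTP URL `mGoogleUrl`, and the constants `kDeviceTokenKey`, `kAppId`, and `kUserId`.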

3.1 Speech recognition

```objc
// ZDASRService.h

#import <Foundation/Foundation.h>
#import <google/cloud/speech/v1p1beta1/CloudSpeech.pbobjc.h>

NS_ASSUME_NONNULL_BEGIN

typedef void (^SpeechRecognitionCompletionHandler)(BOOL done,
                                                   StreamingRecognizeResponse *_Nullable object,
                                                   NSError *_Nullable error);

@interface ZDASRService : NSObject

- (void)streamAudioData:(NSData *)audioData
           languageCode:(NSString *)languageCode
         withCompletion:(SpeechRecognitionCompletionHandler)completion;

- (void)stopStreaming;

- (BOOL)isStreaming;

@end

NS_ASSUME_NONNULL_END
```

```objc
// ZDASRService.m

#import "ZDASRService.h"
#import <GRPCClient/GRPCCall.h>
#import <RxLibrary/GRXBufferedPipe.h>
#import <ProtoRPC/ProtoRPC.h>
#import <google/cloud/speech/v1p1beta1/CloudSpeech.pbrpc.h>
// ZDDogAPIService, kDeviceTokenKey, kAppId and kUserId come from the author's
// own project (not shown); import the corresponding headers here.

@interface ZDASRService ()

@property (nonatomic, strong) Speech *client;
@property (nonatomic, strong) GRXBufferedPipe *writer;   // request stream
@property (nonatomic, strong) GRPCProtoCall *call;
@property (nonatomic, assign) BOOL streaming;

@end

@implementation ZDASRService

- (instancetype)init {
    self = [super init];
    if (self) {
        [self defaultSetting];
    }
    return self;
}

- (void)defaultSetting {
}

- (void)streamAudioData:(NSData *)audioData
           languageCode:(NSString *)languageCode
         withCompletion:(SpeechRecognitionCompletionHandler)completion {
    if (!_streaming) {
        // First call: open the bidirectional stream.
        _client = [[Speech alloc] initWithHost:[ZDDogAPIService shared].dogApis];
        _writer = [[GRXBufferedPipe alloc] init];
        _call = [_client RPCToStreamingRecognizeWithRequestsWriter:_writer
                                                      eventHandler:^(BOOL done, StreamingRecognizeResponse * _Nullable response, NSError * _Nullable error) {
            completion(done, response, error);
        }];

        // Request headers must be set before -start.
        NSUserDefaults *defaults = [NSUserDefaults standardUserDefaults];
        _call.requestHeaders[@"Authorization"] = [NSString stringWithFormat:@"Bearer %@", [defaults stringForKey:kDeviceTokenKey]];
        _call.requestHeaders[@"appId"] = kAppId;
        _call.requestHeaders[@"userId"] = [defaults stringForKey:kUserId];
        [_call start];
        _streaming = YES;

        // The first request on the stream carries only the configuration.
        RecognitionConfig *recognitionConfig = [RecognitionConfig message];
        recognitionConfig.encoding = RecognitionConfig_AudioEncoding_Linear16;  // 16-bit PCM
        recognitionConfig.sampleRateHertz = 16000;
        recognitionConfig.languageCode = languageCode;
        recognitionConfig.maxAlternatives = 30;
        recognitionConfig.enableAutomaticPunctuation = YES;
        recognitionConfig.audioChannelCount = 1;  // mono

        StreamingRecognitionConfig *streamingRecognitionConfig = [StreamingRecognitionConfig message];
        streamingRecognitionConfig.config = recognitionConfig;
        streamingRecognitionConfig.singleUtterance = NO;
        streamingRecognitionConfig.interimResults = YES;  // also deliver partial results

        StreamingRecognizeRequest *configRequest = [StreamingRecognizeRequest message];
        configRequest.streamingConfig = streamingRecognitionConfig;
        [_writer writeValue:configRequest];
    }

    // Every later request carries only audio bytes.
    StreamingRecognizeRequest *audioRequest = [StreamingRecognizeRequest message];
    audioRequest.audioContent = audioData;
    [_writer writeValue:audioRequest];
}

- (void)stopStreaming {
    if (!_streaming) {
        return;
    }
    StreamingRecognizeRequest *streamingRecognizeRequest = [StreamingRecognizeRequest message];
    streamingRecognizeRequest.audioContent = [NSData data];
    [_writer writeValue:streamingRecognizeRequest];
    // Close the request stream; the server then sends its final results.
    [_writer finishWithError:nil];
    _streaming = NO;
}

- (BOOL)isStreaming {
    return _streaming;
}

@end
```
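`streamAudioData:languageCode:withCompletion:` sends whatever bytes it receives as one `audioContent` message, and the `RecognitionConfig` above declares 16 kHz, 16-bit (LINEAR16), mono audio. If the audio arrives as one large buffer rather than from a live recorder, it can be cut into short frames first; Google's Speech-to-Text best-practices guide recommends about 100 ms per frame. A minimal sketch, assuming the buffer is already raw LINEAR16 PCM with no WAV header; `ZDSplitPCMIntoChunks` is a hypothetical helper, not part of the original project:

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: splits raw LINEAR16 PCM (2 bytes per sample, channels
// interleaved) into chunks of chunkMilliseconds each. Every chunk except
// possibly the last covers a whole number of sample frames.
static NSArray<NSData *> *ZDSplitPCMIntoChunks(NSData *pcm,
                                               NSUInteger sampleRateHertz,
                                               NSUInteger channelCount,
                                               NSUInteger chunkMilliseconds) {
    NSUInteger framesPerChunk = sampleRateHertz * chunkMilliseconds / 1000;
    NSUInteger bytesPerChunk = framesPerChunk * 2 * channelCount;  // 2 bytes per 16-bit sample
    NSMutableArray<NSData *> *chunks = [NSMutableArray array];
    if (bytesPerChunk == 0) {
        return chunks;  // degenerate parameters: nothing sensible to split
    }
    for (NSUInteger offset = 0; offset < pcm.length; offset += bytesPerChunk) {
        NSUInteger length = MIN(bytesPerChunk, pcm.length - offset);
        [chunks addObject:[pcm subdataWithRange:NSMakeRange(offset, length)]];
    }
    return chunks;
}
```

With the settings above, each 100 ms chunk is 16,000 × 0.1 samples × 2 bytes = 3,200 bytes. Each chunk would be passed to `streamAudioData:languageCode:withCompletion:` in order, followed by `stopStreaming` once the audio ends.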

3.2 Speech synthesis

```objc
// ZDTTSService.h

#import <Foundation/Foundation.h>
#import <google/cloud/texttospeech/v1beta1/CloudTts.pbobjc.h>

NS_ASSUME_NONNULL_BEGIN

// data: audio bytes on success; error: a description on failure, nil on success.
typedef void (^TTSRecognitionCompletionHandler)(NSData *_Nullable data, NSString *_Nullable error);

@interface ZDTTSService : NSObject

- (void)ttsRecognition:(NSString *)text
              language:(NSString *)language
     completionHandler:(TTSRecognitionCompletionHandler)handler;

@end

NS_ASSUME_NONNULL_END
```

```objc
// ZDTTSService.m

#import "ZDTTSService.h"
#import <google/cloud/texttospeech/v1beta1/CloudTts.pbrpc.h>
// ZDDogAPIService, kDeviceTokenKey and kAppId come from the author's own
// project (not shown); import the corresponding headers here.

@interface ZDTTSService ()

@property (nonatomic, strong) TextToSpeech *client;
@property (nonatomic, strong) GRPCProtoCall *call;
@property (nonatomic, assign) BOOL mGoogleTimeout;  // once YES, later requests use the dog RPC

@end

@implementation ZDTTSService

- (instancetype)init {
    self = [super init];
    if (self) {
        [self defaultSetting];
    }
    return self;
}

- (void)defaultSetting {
    self.mGoogleTimeout = NO;
}

- (void)ttsRecognition:(NSString *)text
              language:(NSString *)language
     completionHandler:(TTSRecognitionCompletionHandler)handler {
    // Languages that go to the dog RPC backend instead of the Google HTTP endpoint.
    NSArray<NSString *> *sIflyteks = @[@"zh-CN", @"zh-TW", @"zh-HK"];
    NSArray<NSString *> *sMicrosofts = @[@"ms-MY", @"bg-BG", @"sl-SI", @"he-IL"];

    if ([sIflyteks containsObject:language] || [sMicrosofts containsObject:language] || self.mGoogleTimeout) {
        [self ttsDogRecognition:text language:language completionHandler:handler];
    } else {
        [self ttsGoogleRecognition:text language:language completionHandler:handler];
    }
}

// Synthesis via the Google HTTP API
- (void)ttsGoogleRecognition:(NSString *)text
                    language:(NSString *)language
           completionHandler:(TTSRecognitionCompletionHandler)handler {
    // URLQueryAllowedCharacterSet still allows & = + ?, which would break the
    // query string, so remove them before encoding a parameter value.
    NSMutableCharacterSet *allowed = [[NSCharacterSet URLQueryAllowedCharacterSet] mutableCopy];
    [allowed removeCharactersInString:@"&=+?"];
    NSString *encodedText = [text stringByAddingPercentEncodingWithAllowedCharacters:allowed];

    NSMutableString *urlString = [NSMutableString stringWithString:[ZDDogAPIService shared].mGoogleUrl];
    [urlString appendString:@"&total=1"];
    [urlString appendString:@"&idx=0"];
    [urlString appendFormat:@"&textlen=%lu", (unsigned long)text.length];  // length of the unencoded text
    [urlString appendFormat:@"&q=%@", encodedText];
    [urlString appendFormat:@"&tl=%@", language];

    NSMutableURLRequest *request = [NSMutableURLRequest requestWithURL:[NSURL URLWithString:urlString]
                                                           cachePolicy:NSURLRequestUseProtocolCachePolicy
                                                       timeoutInterval:10.0];
    request.HTTPMethod = @"GET";

    // This completion handler runs on a background queue.
    NSURLSessionDataTask *dataTask = [[NSURLSession sharedSession] dataTaskWithRequest:request
        completionHandler:^(NSData *data, NSURLResponse *response, NSError *error) {
        if (error) {
            if ([error.domain isEqualToString:NSURLErrorDomain] && error.code == NSURLErrorTimedOut) {
                self.mGoogleTimeout = YES;  // fall back to the dog RPC from now on
            }
            handler(nil, error.localizedDescription);
            return;
        }
        NSInteger statusCode = ((NSHTTPURLResponse *)response).statusCode;
        if (statusCode == 200) {
            handler(data, nil);
        } else {
            // Report non-200 responses too; otherwise the caller never hears back.
            handler(nil, [NSString stringWithFormat:@"HTTP %ld", (long)statusCode]);
        }
    }];
    [dataTask resume];
}

// dog RPC
- (void)ttsDogRecognition:(NSString *)text
                 language:(NSString *)language
        completionHandler:(TTSRecognitionCompletionHandler)handler {
    _client = [[TextToSpeech alloc] initWithHost:[ZDDogAPIService shared].dogApis];

    SynthesisInput *input = [[SynthesisInput alloc] init];
    input.text = text;

    VoiceSelectionParams *params = [[VoiceSelectionParams alloc] init];
    params.languageCode = language;
    params.ssmlGender = SsmlVoiceGender_Female;

    AudioConfig *config = [[AudioConfig alloc] init];
    config.audioEncoding = AudioEncoding_Mp3;

    SynthesizeSpeechRequest *speechRequest = [[SynthesizeSpeechRequest alloc] init];
    speechRequest.audioConfig = config;
    speechRequest.input = input;
    speechRequest.voice = params;

    // Unary call: one request, one response.
    _call = [_client RPCToSynthesizeSpeechWithRequest:speechRequest
                                              handler:^(SynthesizeSpeechResponse * _Nullable response, NSError * _Nullable error) {
        handler(response.audioContent, error.localizedDescription);
    }];
    _call.requestHeaders[@"Authorization"] = [NSString stringWithFormat:@"Bearer %@", [[NSUserDefaults standardUserDefaults] stringForKey:kDeviceTokenKey]];
    _call.requestHeaders[@"appId"] = kAppId;
    [_call start];
}

@end
```
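Both synthesis paths hand the caller raw audio bytes: the RPC path explicitly requests `AudioEncoding_Mp3`, and the HTTP endpoint behind `mGoogleUrl` is assumed here to return MP3 as well. A minimal sketch of playing those bytes, assuming they are in a format AVFoundation can decode; `ZDMakeTTSPlayer` is a hypothetical helper, not part of the original project:

```objc
#import <Foundation/Foundation.h>
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: wraps the audio bytes returned by ZDTTSService in an
// AVAudioPlayer. Returns nil for empty or undecodable data.
static AVAudioPlayer *ZDMakeTTSPlayer(NSData *audioData) {
    if (audioData.length == 0) {
        return nil;  // nothing to play, e.g. the request failed
    }
    NSError *error = nil;
    AVAudioPlayer *player = [[AVAudioPlayer alloc] initWithData:audioData error:&error];
    if (player == nil) {
        NSLog(@"Cannot decode TTS audio: %@", error.localizedDescription);
        return nil;
    }
    [player prepareToPlay];  // preload buffers so -play starts quickly
    return player;
}
```

In the completion handler, a caller would keep the returned player in a strong property (playback ends when the player is deallocated) and call `play` on the main queue, since the NSURLSession path delivers its callback on a background queue.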

3.3 Translation

```objc
// ZDTranslateService.h

#import <Foundation/Foundation.h>
#import <google/cloud/translate/v3beta1/TranslationService.pbobjc.h>

NS_ASSUME_NONNULL_BEGIN

typedef void (^TranslationCompletionHandler)(TranslateTextResponse *_Nullable object, NSError *_Nullable error);

@interface ZDTranslateService : NSObject

- (void)translateText:(NSString *)text
            sourceLan:(NSString *)sourceLan
            targetLan:(NSString *)targetLan
    completionHandler:(TranslationCompletionHandler)handler;

@end

NS_ASSUME_NONNULL_END
```

```objc
// ZDTranslateService.m

#import "ZDTranslateService.h"
#import <google/cloud/translate/v3beta1/TranslationService.pbrpc.h>
// ZDDogAPIService, kDeviceTokenKey, kAppId and kUserId come from the author's
// own project (not shown); import the corresponding headers here.

@interface ZDTranslateService ()

@property (nonatomic, strong) TranslationService *client;
@property (nonatomic, strong) GRPCProtoCall *call;

@end

@implementation ZDTranslateService

- (instancetype)init {
    self = [super init];
    if (self) {
        [self defaultSetting];
    }
    return self;
}

- (void)defaultSetting {
}

- (void)translateText:(NSString *)text
            sourceLan:(NSString *)sourceLan
            targetLan:(NSString *)targetLan
    completionHandler:(TranslationCompletionHandler)handler {
    _client = [[TranslationService alloc] initWithHost:[ZDDogAPIService shared].dogApis];

    TranslateTextRequest *translateRequest = [[TranslateTextRequest alloc] init];
    translateRequest.contentsArray = [NSMutableArray arrayWithObject:text];  // strings to translate
    translateRequest.mimeType = @"text/plain";
    translateRequest.sourceLanguageCode = sourceLan;
    translateRequest.targetLanguageCode = targetLan;

    // Unary call; response.translationsArray holds one Translation per input string.
    _call = [_client RPCToTranslateTextWithRequest:translateRequest
                                           handler:^(TranslateTextResponse * _Nullable response, NSError * _Nullable error) {
        handler(response, error);
    }];

    // Request headers must be set before -start.
    NSUserDefaults *defaults = [NSUserDefaults standardUserDefaults];
    _call.requestHeaders[@"Authorization"] = [NSString stringWithFormat:@"Bearer %@", [defaults stringForKey:kDeviceTokenKey]];
    _call.requestHeaders[@"appId"] = kAppId;
    _call.requestHeaders[@"userId"] = [defaults stringForKey:kUserId];
    [_call start];
}

@end
```
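All three services attach the same metadata (`Authorization`, `appId`, and in two of them `userId`) before `[_call start]`, reading the token and user id from `NSUserDefaults`. One way to avoid repeating that, and to leave out values that are missing (for example before the user has logged in and no token is stored yet), is a small shared helper. A sketch under that assumption; `ZDMakeAuthHeaders` is hypothetical, and only the header names come from the code above:

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: builds the request metadata used by the ZD*Service
// classes. Any argument may be nil or empty; such entries are omitted.
static NSDictionary<NSString *, NSString *> *ZDMakeAuthHeaders(NSString *token,
                                                               NSString *appId,
                                                               NSString *userId) {
    NSMutableDictionary<NSString *, NSString *> *headers = [NSMutableDictionary dictionary];
    if (token.length > 0) {
        headers[@"Authorization"] = [NSString stringWithFormat:@"Bearer %@", token];
    }
    if (appId.length > 0) {
        headers[@"appId"] = appId;
    }
    if (userId.length > 0) {
        headers[@"userId"] = userId;
    }
    return [headers copy];  // immutable snapshot
}
```

Each service would then copy the returned entries into `_call.requestHeaders` one by one, passing `[[NSUserDefaults standardUserDefaults] stringForKey:kDeviceTokenKey]`, `kAppId`, and the stored user id, before calling `start`.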

浙公网安备 33010602011771号