Kubernetes Basics

https://www.kubernetes.org.cn/doc-16

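Accessing a Pod or Service from outside the cluster

Pod

In Kubernetes, the smallest unit that is created, scheduled, and managed is the pod, not the container.

A pod represents one running unit of work. In most cases a pod holds a single container. Containers in the same pod share one network namespace, and therefore one port space, so keeping one container per pod avoids port conflicts between containers.

Tightly coupled containers can be placed in the same pod, but they must not listen on the same port.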
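
The port rule for containers that share a pod can be checked mechanically. The following is a minimal sketch, not part of Kubernetes: `find_port_conflicts` is a hypothetical helper, the pod spec is an invented example, and only ports declared under `ports` are inspected.

```python
from collections import defaultdict


def find_port_conflicts(pod_spec):
    """Return {port: [container names]} for ports declared by more than one container."""
    owners = defaultdict(list)
    for container in pod_spec.get("containers", []):
        for port in container.get("ports", []):
            owners[port["containerPort"]].append(container["name"])
    return {port: names for port, names in owners.items() if len(names) > 1}


# Hypothetical pod spec: two containers that both declare port 80.
pod_spec = {
    "containers": [
        {"name": "webapp", "ports": [{"containerPort": 80}]},
        {"name": "sidecar", "ports": [{"containerPort": 80}, {"containerPort": 9100}]},
    ]
}

print(find_port_conflicts(pod_spec))
```

An empty result means no two containers in the pod declare the same port.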

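Kubernetes handles the communication between pods and the outside world.

Pods and Services are virtual concepts that exist only within the Kubernetes cluster.

Clients outside the cluster cannot reach them through a Pod's IP address or a Service's virtual IP address and port. The container application's port can, however, be mapped to a port on the physical host.

Method 1: set hostPort on the container to map the application's port to the physical host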

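apiVersion: v1
kind: Pod                     # kind selects what to create (Pod, Service, Deployment, ...)
metadata:
  name: webapp
  labels:
    app: webapp
spec:
  # A Pod has no replicas field; replica counts belong to a controller such as a ReplicationController.
  # Because hostPort is set, each additional copy of this pod has to run on a different node.
  containers:
  - name: webapp
    # With the :latest tag the default imagePullPolicy is Always, so the registry is checked on every start.
    # With any other tag the local image is used if present and pulled only when missing.
    image: docker.io/nginx:latest
    ports:
    - containerPort: 80       # container port
      hostPort: 8081          # host port; once set, a second copy cannot start on the same host (port conflict)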

To see which node the pod's container was created on, run:

kubectl get pods -o wide
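
The hostPort restriction can be pictured as a placement check: a node is eligible only while the requested host port is still free on it. The sketch below is a deliberately simplified model, not the real scheduler; `node_can_host` and `place` are hypothetical names, and the two node IPs are the ones used later in this article.

```python
def node_can_host(node_used_ports, pod_host_ports):
    """A node fits only if none of the pod's hostPorts is already taken on it."""
    return not (set(node_used_ports) & set(pod_host_ports))


def place(pod_host_ports, nodes):
    """Pick the first node with all requested hostPorts free, or None if no node fits."""
    for name, used in nodes.items():
        if node_can_host(used, pod_host_ports):
            used.update(pod_host_ports)
            return name
    return None


# Hypothetical two-node cluster; each value is the set of hostPorts already in use.
nodes = {"192.168.132.136": set(), "192.168.132.149": set()}

# Try to place three copies of a pod that requests hostPort 8081.
placements = [place({8081}, nodes) for _ in range(3)]
print(placements)
```

The third copy finds no eligible node, which corresponds to a pod that stays unscheduled in a real cluster.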

Method 2: set hostNetwork: true on the pod

apiVersion: v1
kind: ReplicationController
metadata:
  name: testnetwork
  labels:
    app: testnetwork
spec:
  replicas: 5                   # as in method 1, two replicas cannot run on the same host
  selector:
    app: testnetwork
  template:
    metadata:
      labels:
        app: testnetwork
    spec:
      hostNetwork: true         # use the host's network
      containers:
      - name: testnetwork
        image: docker.io/nginx:latest
        ports:
        - containerPort: 80     # no hostPort is given; with hostNetwork every container port in the pod is exposed directly on the host

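Service

A Service is how Kubernetes lets pods communicate with one another.

A Service tells the rest of the Kubernetes environment (including other pods and replication controllers) which service your application provides.

Pods can move around, and their IP addresses change when they do, but a Service's IP address and port stay the same. Other applications can locate the Service through Kubernetes service discovery.

A Service is an abstraction over the real application.

It presents the pods behind it as a single access point, so clients do not need to know how the backend pods run. This gives a simplified mechanism for service proxying and discovery.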
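
How a Service finds the pods behind it comes down to label matching: every pod whose labels contain the Service's selector becomes a backend. The following is a minimal sketch of that idea only; `matches` and `endpoints` are hypothetical helpers, and the pod IPs are invented for illustration.

```python
def matches(selector, labels):
    """A pod is selected when every key/value in the selector appears in its labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints(selector, pods, target_port):
    """List the ip:port backends a Service with this selector would forward to."""
    return [f"{pod['ip']}:{target_port}" for pod in pods if matches(selector, pod["labels"])]


# Hypothetical pods; the IP addresses are made up.
pods = [
    {"ip": "10.0.14.2", "labels": {"app": "testnetwork"}},
    {"ip": "10.0.52.3", "labels": {"app": "testnetwork"}},
    {"ip": "10.0.52.4", "labels": {"app": "webapp"}},
]

print(endpoints({"app": "testnetwork"}, pods, 80))
```

When a pod is rescheduled and gets a new IP, only this backend list changes; the Service's own address stays the same.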

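Port settings:

port: 80 is the port the Service exposes on its virtual IP

nodePort: 30001 is the port on the physical host

targetPort: 80 is the container port

Set nodePort to map the Service to a port on the physical host, and set the Service type to NodePort.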

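# cat service.yml
apiVersion: v1
kind: Service
metadata:
  name: testnetwork        # Service name
  labels:
    app: testnetwork
spec:
  type: NodePort
  ports:
  - port: 80
    targetPort: 80         # the port the container listens on (nginx listens on 80)
    nodePort: 30001        # must lie in the apiserver's node port range, 30000-32767 by default
  selector:
    app: testnetwork       # must match the app label set in the pod's metadata.labels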
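
The three port fields can be sanity-checked before the manifest is submitted. This is a minimal sketch under stated assumptions: `check_service_ports` is a hypothetical helper, it assumes the apiserver's default `--service-node-port-range` of 30000-32767, and it only handles numeric `targetPort` values.

```python
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)  # apiserver default: 30000-32767


def check_service_ports(port_spec, node_port_range=DEFAULT_NODE_PORT_RANGE):
    """Return a list of problems found in one entry of a NodePort Service's ports list."""
    problems = []
    for field in ("port", "targetPort", "nodePort"):
        value = port_spec.get(field)
        if not isinstance(value, int) or not 1 <= value <= 65535:
            problems.append(f"{field} must be an integer between 1 and 65535, got {value!r}")
    node_port = port_spec.get("nodePort")
    if isinstance(node_port, int) and node_port not in node_port_range:
        problems.append(
            f"nodePort {node_port} is outside {node_port_range.start}-{node_port_range.stop - 1}"
        )
    return problems


print(check_service_ports({"port": 80, "targetPort": 80, "nodePort": 30001}))
print(check_service_ports({"port": 8080, "targetPort": 8080, "nodePort": 8000}))
```

A nodePort such as 8000 is accepted only if the apiserver was started with a wider node port range.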

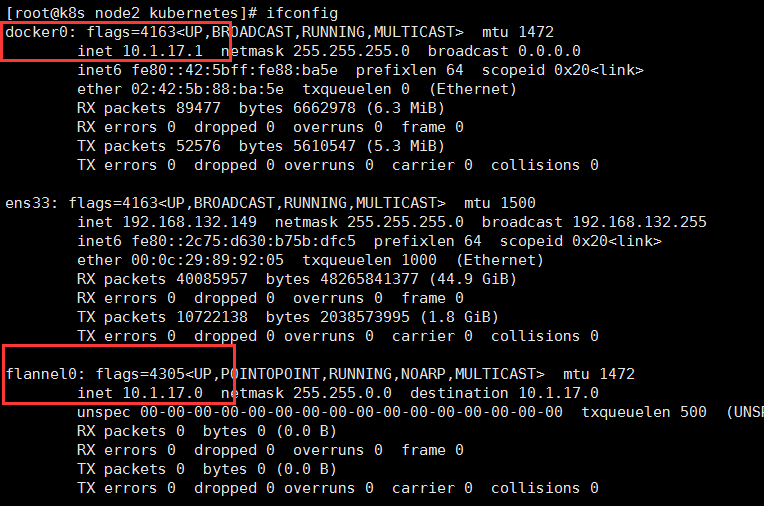

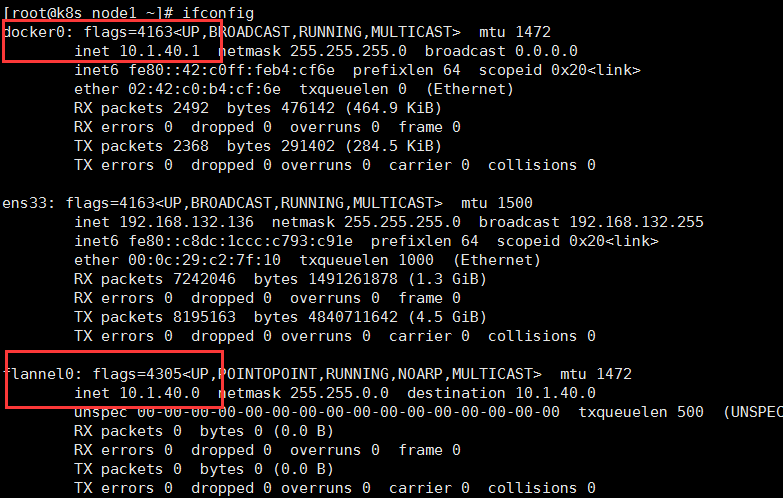

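Creating the Kubernetes network

To implement the Kubernetes network model, the cluster needs an overlay network that connects all of the nodes. Flannel is used here.

Flannel stores its configuration in etcd, which keeps the configuration consistent across Flannel instances.

Configuring the master amounts to configuring etcd on the master. The command is:

etcdctl mk /coreos.com/network/config '{"Network":"10.0.0.0/16"}'   # the /coreos.com/network/config key is the one configured in /etc/sysconfig/flanneld

For the etcd cluster and node configuration, see the article on installing a k8s cluster.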
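
To make the Network value concrete: flannel carves one subnet per node out of that range, using a /24 per node unless SubnetLen is set. The sketch below only illustrates the arithmetic with Python's ipaddress module. The assignment order shown is hypothetical, since flannel chooses a free subnet for each node itself.

```python
import ipaddress

# Network value taken from the etcd key; /24 per node is flannel's default SubnetLen.
network = ipaddress.ip_network("10.0.0.0/16")
per_node = list(network.subnets(new_prefix=24))

# The two node IPs used in this article; the pairing with subnets is illustrative only.
nodes = ["192.168.132.136", "192.168.132.149"]
leases = dict(zip(nodes, per_node))

print(f"{len(per_node)} node subnets available in {network}")
for node, subnet in leases.items():
    print(f"{node} -> {subnet}")
```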

Kubernetes cluster CA authentication

Creating a cluster without CA authentication

# vim /etc/kubernetes/apiserver
Remove SecurityContextDeny and ServiceAccount from KUBE_ADMISSION_CONTROL, then restart the service:
# systemctl restart kube-apiserver.service
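
The edit to KUBE_ADMISSION_CONTROL is a plain list operation on a comma-separated value. A minimal sketch follows; `drop_plugins` is a hypothetical helper, and the starting value is the plugin list shown in this article's apiserver configuration.

```python
def drop_plugins(admission_control, to_remove):
    """Remove the named plugins from a comma-separated admission-control value, keeping order."""
    kept = [p for p in admission_control.split(",") if p and p not in to_remove]
    return ",".join(kept)


current = "NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"
print(drop_plugins(current, {"SecurityContextDeny", "ServiceAccount"}))
```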

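Creating a cluster with CA authentication

On a trusted internal network, the Kubernetes components can connect to the master through the apiserver's insecure port. If the cluster is exposed externally, HTTPS should be enabled. The setup here uses mutual (two-way) authentication with certificates signed by a CA:

(1) Generate a certificate for kube-apiserver and sign it with the CA certificate.
(2) Configure the certificate-related startup parameters of the kube-apiserver process: the CA certificate (used to verify the signatures of client certificates), the server's own CA-signed certificate, and its private key.
(3) Generate a certificate for each client process that accesses the Kubernetes API server, also signed with the CA certificate, and add the CA certificate, the client's own certificate, and related parameters to that program's startup parameters.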
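
The roles of the three kinds of files in mutual TLS can be sketched with Python's standard ssl module. This is not how kube-apiserver is implemented; it only mirrors the same idea. `server_context` and `client_context` are hypothetical helpers, and the file paths are optional so the sketch runs without any certificates on disk.

```python
import ssl


def server_context(ca_file=None, cert_file=None, key_file=None):
    """TLS context for a server that rejects clients without a CA-signed certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.verify_mode = ssl.CERT_REQUIRED            # the client must present a certificate
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)  # CA used to check client certificates
    if cert_file and key_file:
        ctx.load_cert_chain(cert_file, key_file)   # the server's own certificate and key
    return ctx


def client_context(ca_file=None, cert_file=None, key_file=None):
    """TLS context for a client that verifies the server and presents its own certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)  # verifies the server certificate by default
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)  # CA used to check the server certificate
    if cert_file and key_file:
        ctx.load_cert_chain(cert_file, key_file)   # the client's own certificate and key
    return ctx


server = server_context()
client = client_context()
print(server.verify_mode == ssl.CERT_REQUIRED, client.verify_mode == ssl.CERT_REQUIRED)
```

Both sides load the same CA file, which is why a single ca.crt can be distributed to the master and to every client.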

Master configuration

Use OpenSSL on the master server to create the CA certificate and the private key files:

# openssl genrsa -out ca.key 2048
# openssl req -x509 -new -nodes -key ca.key -subj "/CN=192.168.132.148" -days 5000 -out ca.crt    # CN is the master IP
# openssl genrsa -out server.key 2048

Files generated: ca.crt, ca.key, server.key

Create the file master_ssl.cnf, which is used to generate an X.509 v3 certificate:

[req]
req_extensions = v3_req
distinguished_name = req_distinguished_name
[req_distinguished_name]
[ v3_req ]
basicConstraints = CA:FALSE
keyUsage = nonRepudiation, digitalSignature, keyEncipherment
subjectAltName = @alt_names
[alt_names]
DNS.1 = kubernetes
DNS.2 = kubernetes.default
DNS.3 = kubernetes.default.svc
DNS.4 = kubernetes.default.svc.cluster.local
DNS.5 = k8s_master          # master hostname
IP.1 = 10.254.0.1           # cluster IP of the master; obtain it with kubectl get service
IP.2 = 192.168.132.148      # master IP
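
The entries in [alt_names] follow from a few cluster settings, so they can be derived rather than typed by hand. The sketch below is illustrative only: `alt_names_section` is a hypothetical helper, and it relies on the kubernetes Service receiving the first usable address of the service range (10.254.0.0/16 in this article, hence 10.254.0.1).

```python
import ipaddress


def alt_names_section(hostname, master_ip, service_cidr, cluster_domain="cluster.local"):
    """Render an [alt_names] section from the master's hostname, IP, and the service range."""
    dns_names = [
        "kubernetes",
        "kubernetes.default",
        "kubernetes.default.svc",
        f"kubernetes.default.svc.{cluster_domain}",
        hostname,
    ]
    # First usable address of the service range, which the kubernetes Service receives.
    service_ip = next(iter(ipaddress.ip_network(service_cidr).hosts()))
    ips = [str(service_ip), master_ip]

    lines = ["[alt_names]"]
    lines += [f"DNS.{i} = {name}" for i, name in enumerate(dns_names, start=1)]
    lines += [f"IP.{i} = {ip}" for i, ip in enumerate(ips, start=1)]
    return "\n".join(lines)


section = alt_names_section("k8s_master", "192.168.132.148", "10.254.0.0/16")
print(section)
```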

Generate server.csr and server.crt from master_ssl.cnf:

openssl req -new -key server.key -subj "/CN=k8s_master" -config master_ssl.cnf -out server.csr    # CN is the master hostname

openssl x509 -req -in server.csr -CA ca.crt -CAkey ca.key -CAcreateserial -days 5000 -extensions v3_req -extfile master_ssl.cnf -out server.crt

The following files now exist; copy them to /etc/kubernetes/ssl/:

ca.crt ca.key ca.srl server.crt server.csr server.key

Configure the kube-apiserver startup parameters

[root@k8s_master kubernetes]# cat apiserver |grep -v '^#\|^$'
KUBE_API_ADDRESS="--advertise-address=192.168.132.148 --bind-address=192.168.132.148 --insecure-bind-address=192.168.132.148"
KUBELET_PORT="--kubelet-port=10250"
KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.132.148:2379,http://192.168.132.136:2379,http://192.168.132.149:2379"
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"
KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"
KUBE_API_ARGS="--client-ca-file=/etc/kubernetes/ssl/ca.crt --tls-private-key-file=/etc/kubernetes/ssl/server.key --tls-cert-file=/etc/kubernetes/ssl/server.crt"

Explanation of the flags:
--client-ca-file                           # CA root certificate file
--tls-cert-file                            # server certificate file
--tls-private-key-file                     # server private key file
--secure-port=6443                         # secure (HTTPS) port
--bind-address=192.168.132.148             # master IP address that the HTTPS (secure) port binds to
--advertise-address=192.168.132.148        # IP address on which the service is offered to others
--insecure-bind-address=192.168.132.148    # IP address that the insecure port binds to
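
Each variable in this file holds a fragment of the kube-apiserver command line. The sketch below shows how such a file can be read and flattened into an argument list. It assumes that the service's systemd unit loads the file as an environment file and expands the variables onto the command line; that unit file is not shown in this article, and `parse_sysconfig` and `flags` are hypothetical helpers.

```python
import shlex


def parse_sysconfig(text):
    """Parse KEY="value" lines into a dict, skipping comments and blank lines."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, raw = line.partition("=")
        # shlex.split removes the surrounding quotes; each value is one quoted string.
        settings[key] = shlex.split(raw)[0] if raw else ""
    return settings


def flags(settings, names):
    """Flatten the chosen variables into one argument list, in the order given."""
    args = []
    for name in names:
        args.extend(settings.get(name, "").split())
    return args


sample = '''
# excerpt of /etc/kubernetes/apiserver
KUBELET_PORT="--kubelet-port=10250"
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"
KUBE_API_ARGS="--client-ca-file=/etc/kubernetes/ssl/ca.crt --tls-cert-file=/etc/kubernetes/ssl/server.crt"
'''

settings = parse_sysconfig(sample)
print(flags(settings, ["KUBELET_PORT", "KUBE_SERVICE_ADDRESSES", "KUBE_API_ARGS"]))
```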

Configure the kube-controller-manager startup parameters

[root@k8s_master kubernetes]# cat controller-manager |grep -v '^#\|^$'
KUBE_CONTROLLER_MANAGER_ARGS="--address=127.0.0.1 --service-account-private-key-file=/etc/kubernetes/ssl/server.key --root-ca-file=/etc/kubernetes/ssl/ca.crt --kubeconfig=/etc/kubernetes/kubeconfig"
KUBELET_ADDRESSES="--machines= 192.168.132.136,192.168.132.149"

Configure the kube-scheduler startup parameters

[root@k8s_master kubernetes]# cat scheduler |grep -v '^#\|^$'
KUBE_SCHEDULER_ARGS="--address=127.0.0.1 --kubeconfig=/etc/kubernetes/kubeconfig"

Configure /etc/kubernetes/config

[root@k8s_master kubernetes]# cat config |grep -v '^#\|^$'
KUBE_LOGTOSTDERR="--logtostderr=true"
KUBE_LOG_LEVEL="--v=0"
KUBE_ALLOW_PRIV="--allow-privileged=true"
KUBE_MASTER="--master=https://192.168.132.148:6443"

Generate the cluster administrator certificate

# cd /etc/kubernetes/ssl/
# openssl genrsa -out cs_client.key 2048
# openssl req -new -key cs_client.key -subj "/CN=k8s_master" -out cs_client.csr
# openssl x509 -req -in cs_client.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out cs_client.crt -days 5000

Create /etc/kubernetes/kubeconfig

apiVersion: v1
kind: Config
users:
- name: controllermanager
  user:
    client-certificate: /etc/kubernetes/ssl/cs_client.crt
    client-key: /etc/kubernetes/ssl/cs_client.key
clusters:
- name: local
  cluster:
    certificate-authority: /etc/kubernetes/ssl/ca.crt
contexts:
- context:
    cluster: local
    user: controllermanager
  name: my-context
current-context: my-context
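
A kubeconfig ties three lists together by name: a context points at one user and one cluster, and current-context points at one context. The sketch below rebuilds the same structure as a Python dict and checks that those references resolve. `kubeconfig` and `check_references` are hypothetical helpers written for this illustration.

```python
import json


def kubeconfig(user_name, cert_path, key_path, ca_path, cluster="local", context="my-context"):
    """Build the kubeconfig structure shown above as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "users": [{"name": user_name,
                   "user": {"client-certificate": cert_path, "client-key": key_path}}],
        "clusters": [{"name": cluster, "cluster": {"certificate-authority": ca_path}}],
        "contexts": [{"name": context, "context": {"cluster": cluster, "user": user_name}}],
        "current-context": context,
    }


def check_references(config):
    """Every context must name a defined user and cluster; current-context must exist."""
    users = {u["name"] for u in config["users"]}
    clusters = {c["name"] for c in config["clusters"]}
    contexts = {c["name"] for c in config["contexts"]}
    resolved = all(c["context"]["user"] in users and c["context"]["cluster"] in clusters
                   for c in config["contexts"])
    return resolved and config["current-context"] in contexts


ssl_dir = "/etc/kubernetes/ssl"
master = kubeconfig("controllermanager", f"{ssl_dir}/cs_client.crt",
                    f"{ssl_dir}/cs_client.key", f"{ssl_dir}/ca.crt")

print(check_references(master))
print(json.dumps(master, indent=2))
```

A mismatch between the user name under users and the one under contexts is an easy typo to make when writing the file by hand, and this check would catch it.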

Restart the kube-apiserver, kube-controller-manager, and kube-scheduler services

systemctl restart kube-apiserver kube-controller-manager kube-scheduler

Node configuration

Copy kube-apiserver's ca.crt and ca.key to /etc/kubernetes/ssl on each node.

Generate kubelet_client.crt

When generating kubelet_client.crt, the -CA and -CAkey parameters take the apiserver's ca.crt and ca.key files.

When generating kubelet_client.csr, set "/CN" in the -subj parameter to the IP address of this node.

openssl genrsa -out kubelet_client.key 2048
openssl req -new -key kubelet_client.key -subj "/CN=192.168.132.136" -out kubelet_client.csr
openssl x509 -req -in kubelet_client.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out kubelet_client.crt -days 5000
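
Because only the CN differs from node to node, the three commands can be generated per node instead of edited by hand. The sketch below only builds and prints the command lines; it does not run openssl. `kubelet_cert_commands` is a hypothetical helper, and `shlex.join` requires Python 3.8 or later.

```python
import shlex


def kubelet_cert_commands(node_ip, days=5000):
    """Return the three openssl invocations as argument lists, with CN set to node_ip."""
    return [
        ["openssl", "genrsa", "-out", "kubelet_client.key", "2048"],
        ["openssl", "req", "-new", "-key", "kubelet_client.key",
         "-subj", f"/CN={node_ip}", "-out", "kubelet_client.csr"],
        ["openssl", "x509", "-req", "-in", "kubelet_client.csr",
         "-CA", "ca.crt", "-CAkey", "ca.key", "-CAcreateserial",
         "-out", "kubelet_client.crt", "-days", str(days)],
    ]


# The two node IPs used in this article.
for ip in ["192.168.132.136", "192.168.132.149"]:
    print(f"# on node {ip}")
    for argv in kubelet_cert_commands(ip):
        print(shlex.join(argv))
```

Each argument list could also be passed to `subprocess.run(argv, check=True)` from within /etc/kubernetes/ssl on the node.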

Create /etc/kubernetes/kubeconfig

apiVersion: v1
kind: Config
users:
- name: kubelet
  user:
    client-certificate: /etc/kubernetes/ssl/kubelet_client.crt
    client-key: /etc/kubernetes/ssl/kubelet_client.key
clusters:
- name: local
  cluster:
    certificate-authority: /etc/kubernetes/ssl/ca.crt
contexts:
- context:
    cluster: local
    user: kubelet
  name: my-context
current-context: my-context

Edit the Kubernetes configuration file /etc/kubernetes/config

[root@k8s_node1 kubernetes]# cat /etc/kubernetes/config|grep -v '^#\|^$'
KUBE_LOGTOSTDERR="--logtostderr=true"
KUBE_LOG_LEVEL="--v=0"
KUBE_ALLOW_PRIV="--allow-privileged=false"
KUBE_MASTER="--master=https://192.168.132.148:6443"

Edit /etc/kubernetes/kubelet

[root@k8s_node1 kubernetes]# cat /etc/kubernetes/kubelet|grep -v '^#\|^$'
KUBELET_ADDRESS="--address=192.168.132.136"
KUBELET_HOSTNAME="--hostname-override=192.168.132.136"
KUBELET_API_SERVER="--api-servers=https://192.168.132.148:6443"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"
KUBELET_ARGS="--cluster_dns=10.254.10.2 --cluster_domain=cluster.local --kubeconfig=/etc/kubernetes/kubeconfig"

Set the kube-proxy startup parameters

[root@k8s_node1 kubernetes]# cat /etc/kubernetes/proxy|grep -v '^#\|^$'
KUBE_PROXY_ARGS="--master=https://192.168.132.148:6443 --kubeconfig=/etc/kubernetes/kubeconfig"

Restart the kube-proxy and kubelet services

systemctl restart kubelet
systemctl restart kube-proxy

Verification

Delete the old data in etcd on the master

Run on the master:
etcdctl rm --recursive registry

Restart the services on the master

# systemctl daemon-reload
# systemctl restart etcd kube-apiserver kube-scheduler kube-controller-manager

Restart the services on the nodes

systemctl daemon-reload
systemctl restart docker kubelet kube-proxy

Verify the result

[root@k8s_master kubernetes]# curl https://192.168.132.148:6443/api/v1/nodes --cert /etc/kubernetes/ssl/cs_client.crt --key /etc/kubernetes/ssl/cs_client.key --cacert /etc/kubernetes/ssl/ca.crt
{ "kind": "NodeList", "apiVersion": "v1", "metadata": { "selfLink": "/api/v1/nodes", "resourceVersion": "291570" }, "items": [ { "metadata": { "name": "192.168.132.136", "selfLink": "/api/v1/nodes/192.168.132.136", "uid": "f740f219-2b4a-11e8-bc10-000c29c38a0c", "resourceVersion": "291569", "creationTimestamp": "2018-03-19T07:56:01Z", "labels": { "beta.kubernetes.io/arch": "amd64", "beta.kubernetes.io/os": "linux", "kubernetes.io/hostname": "192.168.132.136" }, "annotations": { "volumes.kubernetes.io/controller-managed-attach-detach": "true" } }, "spec": { "externalID": "192.168.132.136" }, "status": { "capacity": { "alpha.kubernetes.io/nvidia-gpu": "0", "cpu": "1", "memory": "999920Ki", "pods": "110" }, "allocatable": { "alpha.kubernetes.io/nvidia-gpu": "0", "cpu": "1", "memory": "999920Ki", "pods": "110" }, "conditions": [ { "type": "OutOfDisk", "status": "False", "lastHeartbeatTime": "2018-03-19T09:10:42Z", "lastTransitionTime": "2018-03-19T07:56:01Z", "reason": "KubeletHasSufficientDisk", "message": "kubelet has sufficient disk space available" }, { "type": "MemoryPressure", "status": "False", "lastHeartbeatTime": "2018-03-19T09:10:42Z", "lastTransitionTime": "2018-03-19T07:56:01Z", "reason": "KubeletHasSufficientMemory", "message": "kubelet has sufficient memory available" }, { "type": "DiskPressure", "status": "False", "lastHeartbeatTime": "2018-03-19T09:10:42Z", "lastTransitionTime": "2018-03-19T07:56:01Z", "reason": "KubeletHasNoDiskPressure", "message": "kubelet has no disk pressure" }, { "type": "Ready", "status": "True", "lastHeartbeatTime": "2018-03-19T09:10:42Z", "lastTransitionTime": "2018-03-19T07:56:01Z", "reason": "KubeletReady", "message": "kubelet is posting ready status" } ], "addresses": [ { "type": "LegacyHostIP", "address": "192.168.132.136" }, { 
"type": "InternalIP", "address": "192.168.132.136" }, { "type": "Hostname", "address": "192.168.132.136" } ], "daemonEndpoints": { "kubeletEndpoint": { "Port": 10250 } }, "nodeInfo": { "machineID": "b3c5e7f95f9b4da99bcf0744f38f5eee", "systemUUID": "87164D56-73D6-32A7-28F1-66EE23C27F10", "bootID": "29849630-efce-4aa4-b690-ff45726b17a2", "kernelVersion": "3.10.0-514.26.2.el7.x86_64", "osImage": "CentOS Linux 7 (Core)", "containerRuntimeVersion": "docker://1.12.6", "kubeletVersion": "v1.5.2", "kubeProxyVersion": "v1.5.2", "operatingSystem": "linux", "architecture": "amd64" }, "images": [ { "names": [ "index.tenxcloud.com/yexianwolail/gb-frontend@sha256:0be2894d43915ba057e5f892d7e0dac75dfa3765874dbc99ccd78ad26e6213de", "index.tenxcloud.com/yexianwolail/gb-frontend:v4" ], "sizeBytes": 512107183 }, { "names": [ "index.tenxcloud.com/google_containers/redis@sha256:f066bcf26497fbc55b9bf0769cb13a35c0afa2aa42e737cc46b7fb04b23a2f25", "index.tenxcloud.com/google_containers/redis:e2e" ], "sizeBytes": 418929769 }, { "names": [ "finance/centos6.8-base@sha256:1cb78ded75775ad289cf43c67141b39676bf3571cb20cd6a80e105c6497ae0e4", "finance/centos6.8-base:latest" ], "sizeBytes": 332467242 }, { "names": [ "registry.access.redhat.com/rhel7/pod-infrastructure@sha256:01f829e96ba98a7ef5481359de7ea684c2fe41f2d28963e6c87370334a5256c4", "registry.access.redhat.com/rhel7/pod-infrastructure:latest" ], "sizeBytes": 208587465 }, { "names": [ "docker.io/bestwu/kubernetes-dashboard-amd64@sha256:d820c9a0a0a7cd7d0c9d3630a2db0fc33d190db31f3e0797d4df9dc4a6a41c6b", "docker.io/bestwu/kubernetes-dashboard-amd64:v1.6.3" ], "sizeBytes": 138972432 }, { "names": [ "ubuntu@sha256:84c334414e2bfdcae99509a6add166bbb4fa4041dc3fa6af08046a66fed3005f", "ubuntu:latest" ], "sizeBytes": 119509665 }, { "names": [ "daocloud.io/megvii/kubernetes-dashboard-amd64@sha256:6e0218eeb940f0fc9ca68972bda2b9569921fed1ef793ba78e30900cc7ab9396", "daocloud.io/megvii/kubernetes-dashboard-amd64:v1.8.0" ], "sizeBytes": 119155776 }, { "names": 
[ "index.tenxcloud.com/yexianwolail/gb-redisslave@sha256:9f13d46b2e4cac290aa9757f83dff26e0fa06802f898abec43ca22076966440a", "index.tenxcloud.com/yexianwolail/gb-redisslave:v1" ], "sizeBytes": 109462535 }, { "names": [ "docker.io/nginx@sha256:004ac1d5e791e705f12a17c80d7bb1e8f7f01aa7dca7deee6e65a03465392072", "docker.io/nginx:latest" ], "sizeBytes": 108275923 }, { "names": [ "swarm@sha256:1a05498cfafa8ec767b0d87d11d3b4aeab54e9c99449fead2b3df82d2744d345", "swarm:latest" ], "sizeBytes": 15771951 }, { "names": [ "index.tenxcloud.com/google_containers/busybox@sha256:4bdd623e848417d96127e16037743f0cd8b528c026e9175e22a84f639eca58ff", "index.tenxcloud.com/google_containers/busybox:1.24" ], "sizeBytes": 1113554 } ] } }, { "metadata": { "name": "192.168.132.149", "selfLink": "/api/v1/nodes/192.168.132.149", "uid": "7321176a-2b49-11e8-bc10-000c29c38a0c", "resourceVersion": "291570", "creationTimestamp": "2018-03-19T07:45:09Z", "labels": { "beta.kubernetes.io/arch": "amd64", "beta.kubernetes.io/os": "linux", "kubernetes.io/hostname": "192.168.132.149" }, "annotations": { "volumes.kubernetes.io/controller-managed-attach-detach": "true" } }, "spec": { "externalID": "192.168.132.149" }, "status": { "capacity": { "alpha.kubernetes.io/nvidia-gpu": "0", "cpu": "1", "memory": "999920Ki", "pods": "110" }, "allocatable": { "alpha.kubernetes.io/nvidia-gpu": "0", "cpu": "1", "memory": "999920Ki", "pods": "110" }, "conditions": [ { "type": "OutOfDisk", "status": "False", "lastHeartbeatTime": "2018-03-19T09:10:44Z", "lastTransitionTime": "2018-03-19T07:45:09Z", "reason": "KubeletHasSufficientDisk", "message": "kubelet has sufficient disk space available" }, { "type": "MemoryPressure", "status": "False", "lastHeartbeatTime": "2018-03-19T09:10:44Z", "lastTransitionTime": "2018-03-19T07:45:09Z", "reason": "KubeletHasSufficientMemory", "message": "kubelet has sufficient memory available" }, { "type": "DiskPressure", "status": "False", "lastHeartbeatTime": "2018-03-19T09:10:44Z", 
"lastTransitionTime": "2018-03-19T07:45:09Z", "reason": "KubeletHasNoDiskPressure", "message": "kubelet has no disk pressure" }, { "type": "Ready", "status": "True", "lastHeartbeatTime": "2018-03-19T09:10:44Z", "lastTransitionTime": "2018-03-19T07:45:19Z", "reason": "KubeletReady", "message": "kubelet is posting ready status" } ], "addresses": [ { "type": "LegacyHostIP", "address": "192.168.132.149" }, { "type": "InternalIP", "address": "192.168.132.149" }, { "type": "Hostname", "address": "192.168.132.149" } ], "daemonEndpoints": { "kubeletEndpoint": { "Port": 10250 } }, "nodeInfo": { "machineID": "0e3f05a02b4046c2a29f626cd4af4e5f", "systemUUID": "0B514D56-1364-0109-7339-5D0772899205", "bootID": "320c4977-903b-4b46-a295-9cb601ae9e44", "kernelVersion": "3.10.0-514.26.2.el7.x86_64", "osImage": "CentOS Linux 7 (Core)", "containerRuntimeVersion": "docker://1.12.6", "kubeletVersion": "v1.5.2", "kubeProxyVersion": "v1.5.2", "operatingSystem": "linux", "architecture": "amd64" }, "images": [ { "names": [ "index.tenxcloud.com/yexianwolail/gb-frontend@sha256:0be2894d43915ba057e5f892d7e0dac75dfa3765874dbc99ccd78ad26e6213de", "index.tenxcloud.com/yexianwolail/gb-frontend:v4" ], "sizeBytes": 512107183 }, { "names": [ "finance/centos6.8-base@sha256:1cb78ded75775ad289cf43c67141b39676bf3571cb20cd6a80e105c6497ae0e4", "finance/centos6.8-base:latest" ], "sizeBytes": 332467242 }, { "names": [ "registry.access.redhat.com/rhel7/pod-infrastructure@sha256:01f829e96ba98a7ef5481359de7ea684c2fe41f2d28963e6c87370334a5256c4", "registry.access.redhat.com/rhel7/pod-infrastructure:latest" ], "sizeBytes": 208587465 }, { "names": [ "docker.io/bestwu/kubernetes-dashboard-amd64@sha256:d820c9a0a0a7cd7d0c9d3630a2db0fc33d190db31f3e0797d4df9dc4a6a41c6b", "docker.io/bestwu/kubernetes-dashboard-amd64:v1.6.3" ], "sizeBytes": 138972432 }, { "names": [ "ubuntu@sha256:84c334414e2bfdcae99509a6add166bbb4fa4041dc3fa6af08046a66fed3005f", "ubuntu:latest" ], "sizeBytes": 119509665 }, { "names": [ 
"index.tenxcloud.com/yexianwolail/gb-redisslave@sha256:9f13d46b2e4cac290aa9757f83dff26e0fa06802f898abec43ca22076966440a", "index.tenxcloud.com/yexianwolail/gb-redisslave:v1" ], "sizeBytes": 109462535 }, { "names": [ "docker.io/nginx@sha256:004ac1d5e791e705f12a17c80d7bb1e8f7f01aa7dca7deee6e65a03465392072", "docker.io/nginx:latest" ], "sizeBytes": 108275923 }, { "names": [ "index.tenxcloud.com/google_containers/skydns@sha256:a317df077c678e684790c22902ff4040393cc172abe245d4b478bac6dbdd8052", "index.tenxcloud.com/google_containers/skydns:2015-10-13-8c72f8c" ], "sizeBytes": 40547562 }, { "names": [ "index.tenxcloud.com/google_containers/etcd-amd64@sha256:94573e18def7a39c277a3b088b4d5332c3f68492bb73ac66f7f93c12cdd1356c", "index.tenxcloud.com/google_containers/etcd-amd64:2.2.1" ], "sizeBytes": 28192476 }, { "names": [ "index.tenxcloud.com/google_containers/kube2sky@sha256:edafbc690dd3c95ea321a83ace5dac4750095920f8a565cef37dd8438ee509c4", "index.tenxcloud.com/google_containers/kube2sky:1.14" ], "sizeBytes": 27804037 }, { "names": [ "swarm@sha256:1a05498cfafa8ec767b0d87d11d3b4aeab54e9c99449fead2b3df82d2744d345", "swarm:latest" ], "sizeBytes": 15771951 }, { "names": [ "index.tenxcloud.com/google_containers/exechealthz@sha256:512fad89cb2e76b24f12884bab4117a85733ebe033f18f8da34b617fbfa03708", "index.tenxcloud.com/google_containers/exechealthz:1.0" ], "sizeBytes": 7095869 }, { "names": [ "index.tenxcloud.com/google_containers/busybox@sha256:4bdd623e848417d96127e16037743f0cd8b528c026e9175e22a84f639eca58ff", "index.tenxcloud.com/google_containers/busybox:1.24" ], "sizeBytes": 1113554 } ] } } ] }
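
The response is easier to judge when reduced to one line per node. The sketch below extracts each node's Ready condition from a NodeList. `ready_nodes` is a hypothetical helper, and the embedded JSON is a trimmed stand-in that keeps only the fields the function reads, not the full response.

```python
import json


def ready_nodes(node_list):
    """Map each node name to True/False according to its Ready condition."""
    result = {}
    for item in node_list.get("items", []):
        conditions = item.get("status", {}).get("conditions", [])
        ready = any(c["type"] == "Ready" and c["status"] == "True" for c in conditions)
        result[item["metadata"]["name"]] = ready
    return result


# Trimmed stand-in for the curl response: same shape, most fields omitted.
response = json.loads("""
{
  "kind": "NodeList",
  "items": [
    {"metadata": {"name": "192.168.132.136"},
     "status": {"conditions": [{"type": "OutOfDisk", "status": "False"},
                               {"type": "Ready", "status": "True"}]}},
    {"metadata": {"name": "192.168.132.149"},
     "status": {"conditions": [{"type": "Ready", "status": "True"}]}}
  ]
}
""")

print(ready_nodes(response))
```

Saving the curl output to a file and loading it with `json.load` would let the same function run on the real response.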

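Running kubectl get nodes fails with the following error:

The connection to the server localhost:8080 was refused - did you specify the right host or port?

kubectl tries localhost:8080 by default, but the apiserver's insecure port is bound to 192.168.132.148 (see --insecure-bind-address), so pass the server address explicitly:

kubectl -s 192.168.132.148:8080 get nodes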

