Data Warehouse: Environment Preparation

Development Environment

Create the Project

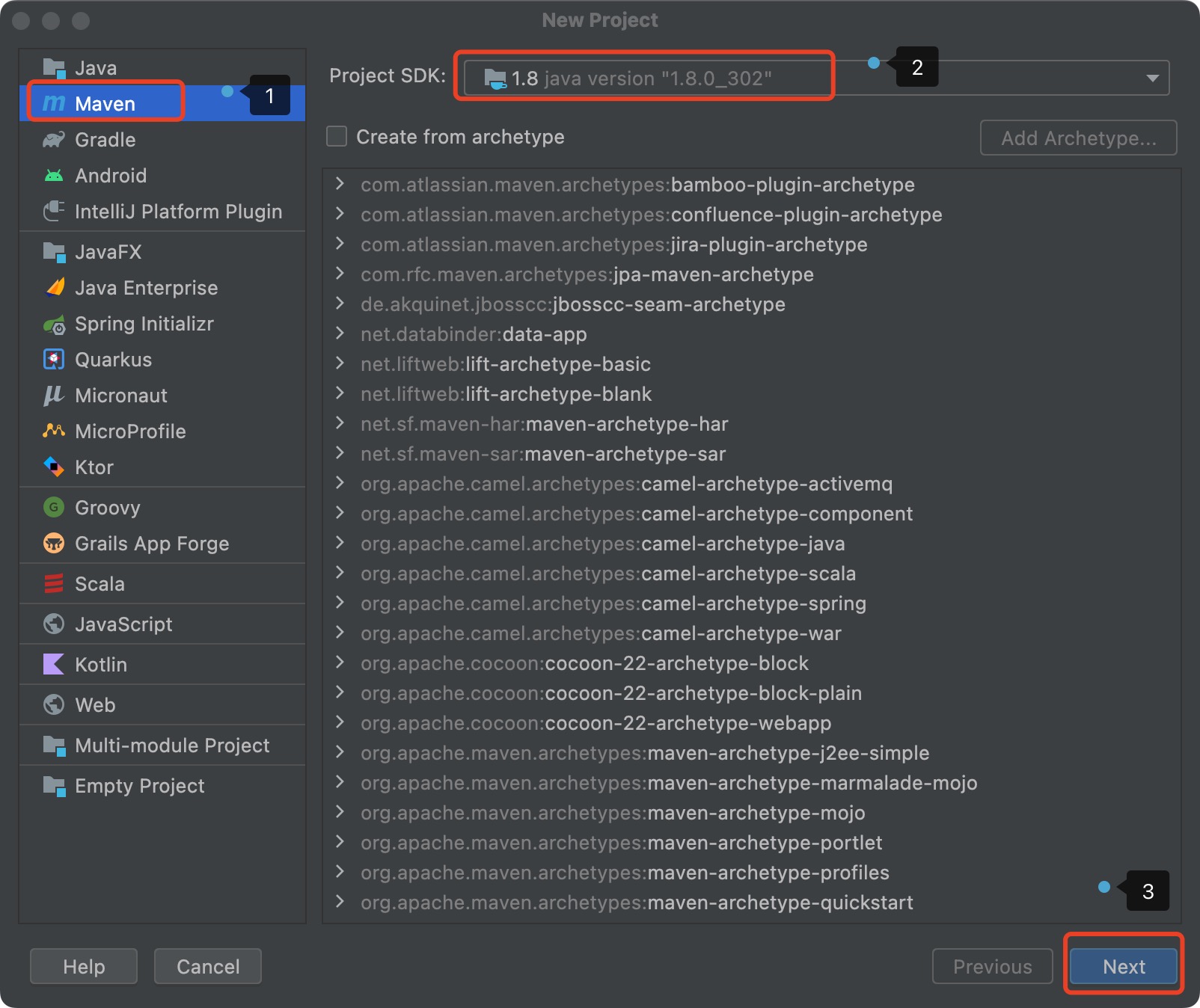

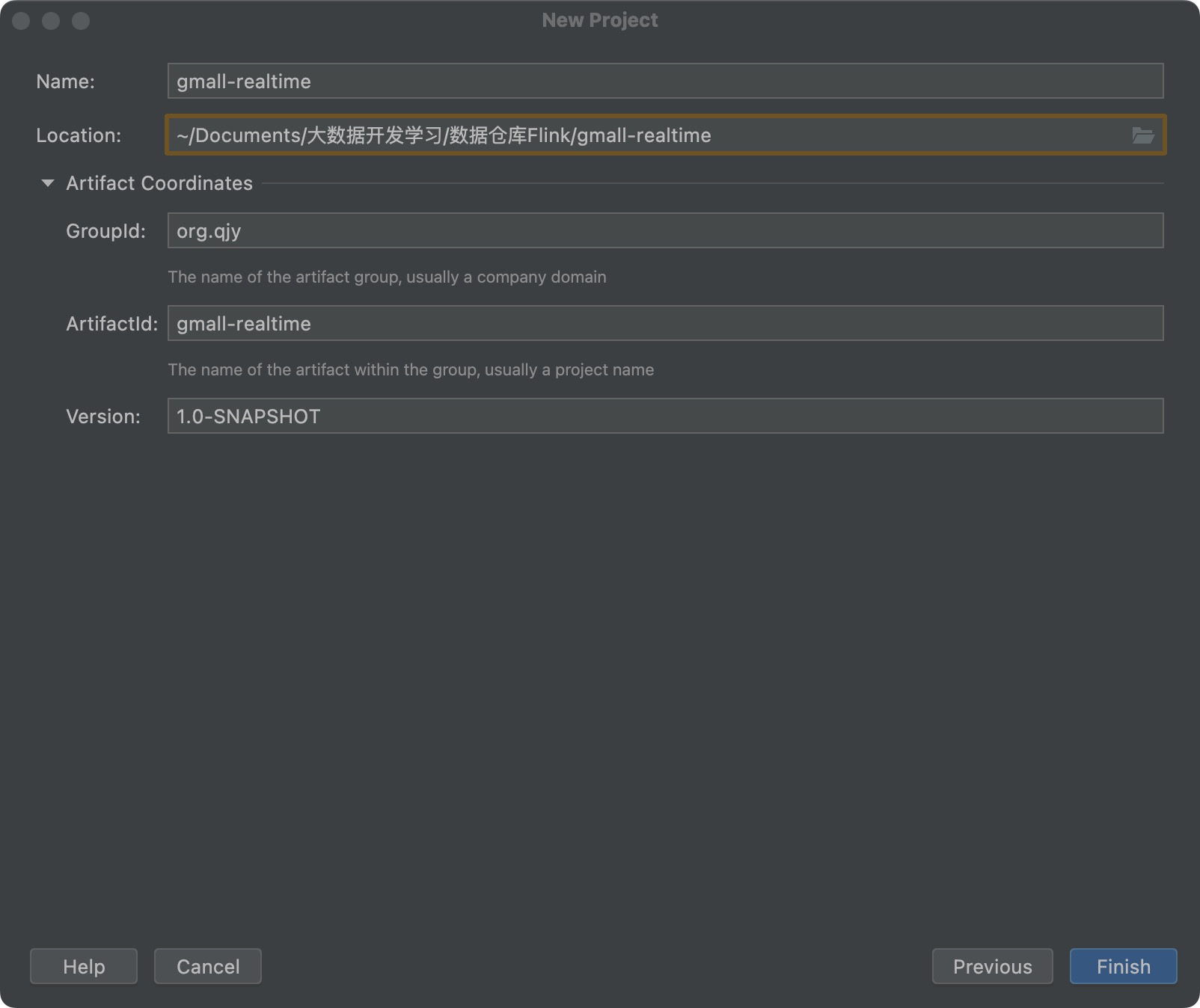

Create the Maven project gmall-realtime

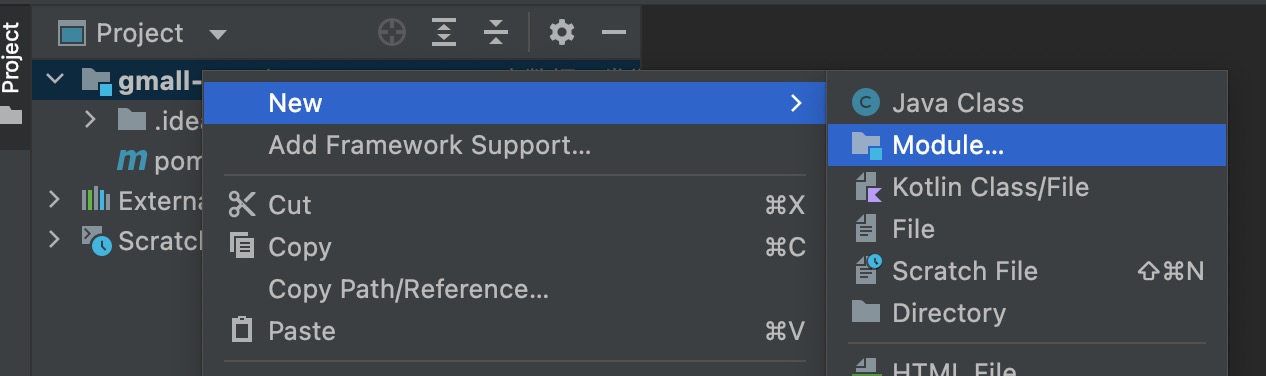

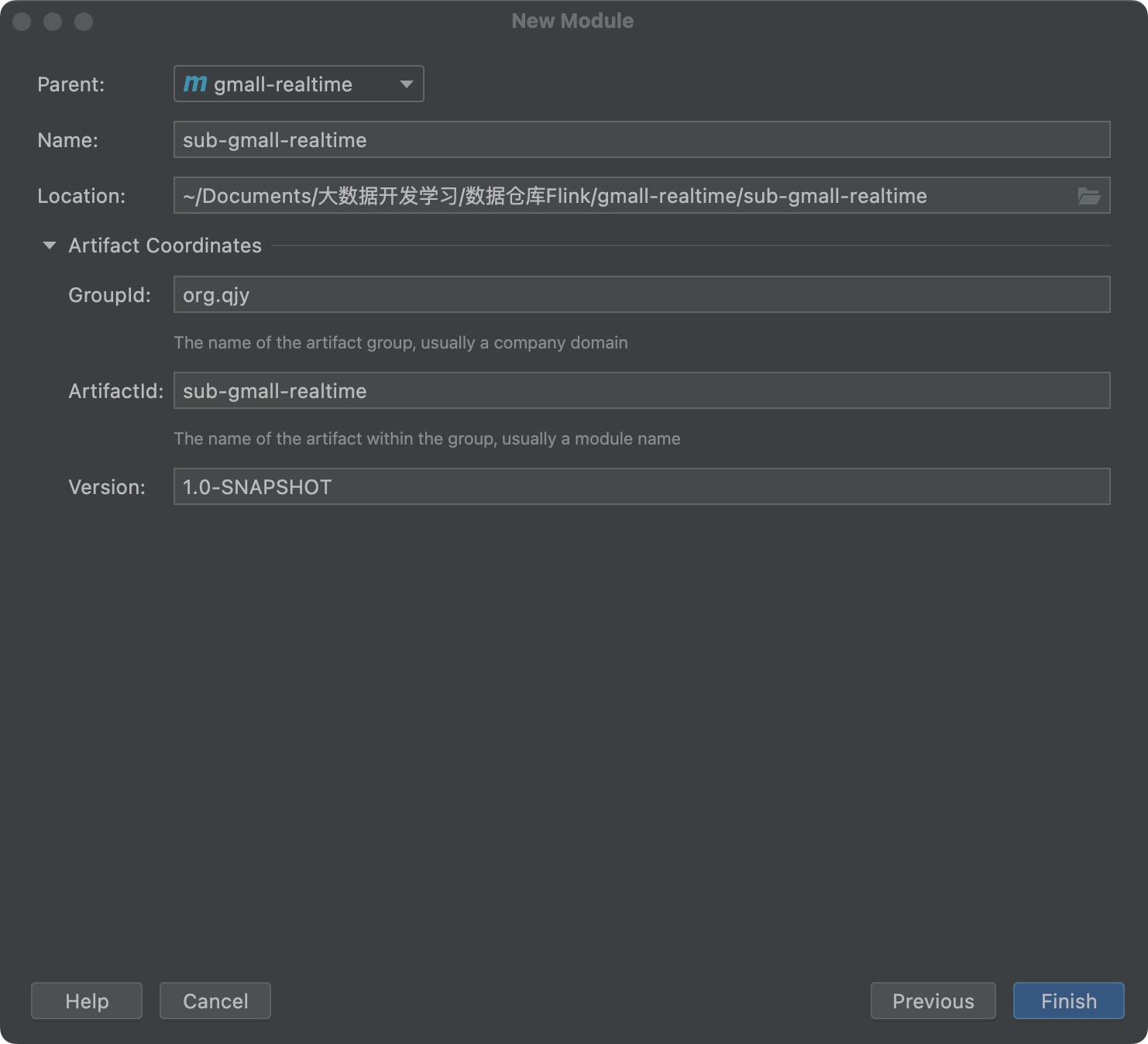

Delete the project's src directory and create the sub-gmall-realtime module.

Add the following dependencies to the parent pom file.

<properties>

<java.version>1.8</java.version>

<maven.compiler.source>${java.version}</maven.compiler.source>

<maven.compiler.target>${java.version}</maven.compiler.target>

<flink.version>1.13.0</flink.version>

<scala.version>2.12</scala.version>

<hadoop.version>3.1.3</hadoop.version>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_${scala.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka_${scala.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_${scala.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-json</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.68</version>

</dependency>

<!-- Required if checkpoints are stored on HDFS -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>${hadoop.version}</version>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<version>1.18.20</version>

</dependency>

<!-- Flink logs through slf4j, which is only a logging facade; log4j is used here as the concrete logging implementation -->

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-api</artifactId>

<version>1.7.25</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

<version>1.7.25</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-to-slf4j</artifactId>

<version>2.14.0</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>3.1.1</version>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

<configuration>

<artifactSet>

<excludes>

<exclude>com.google.code.findbugs:jsr305</exclude>

<exclude>org.slf4j:*</exclude>

<exclude>log4j:*</exclude>

<exclude>org.apache.hadoop:*</exclude>

</excludes>

</artifactSet>

<filters>

<filter>

<!-- Do not copy the signature files under META-INF into the shaded jar, to avoid SecurityExceptions caused by invalid signature files -->

<artifact>*:*</artifact>

<excludes>

<exclude>META-INF/*.SF</exclude>

<exclude>META-INF/*.DSA</exclude>

<exclude>META-INF/*.RSA</exclude>

</excludes>

</filter>

</filters>

<transformers combine.children="append">

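<!-- The factory registrations (META-INF/services) of connector and format dependencies would overwrite each other in the shaded jar; ServicesResourceTransformer merges them -->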

<transformer implementation="org.apache.maven.plugins.shade.resource.ServicesResourceTransformer"/>

</transformers>

</configuration>

</execution>

</executions>

</plugin>

</plugins>

</build>

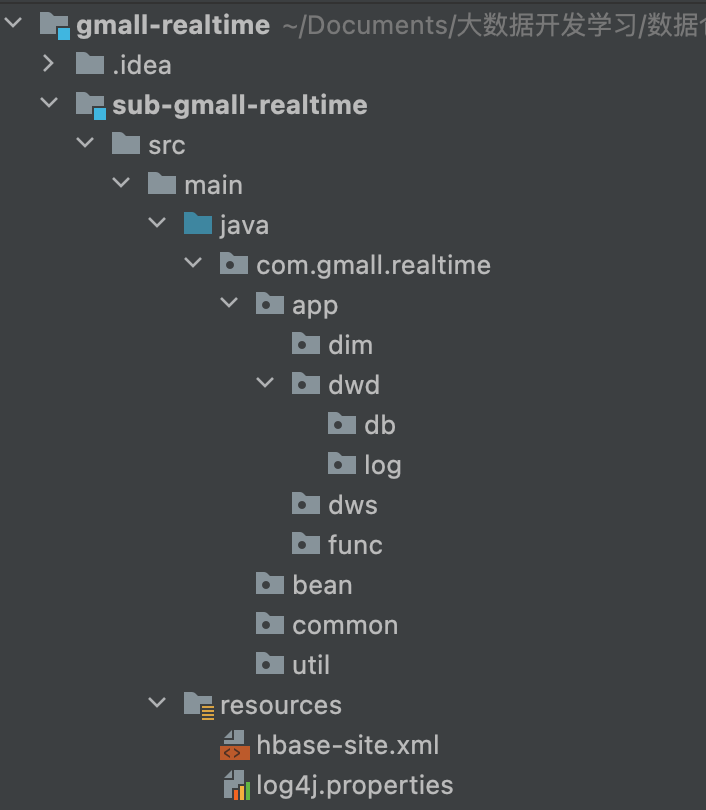

Create the relevant packages.

Create a log4j.properties file in the resources directory with the following content:

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.target=System.out

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=%d{yyyy-MM-dd HH:mm:ss} %10p (%c:%M) - %m%n

log4j.rootLogger=error,stdout

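Runtime Environment

Flink Environment Setup

Submitting a Flink job and executing its tasks involves three key components: the client (Client), the job manager (JobManager) and the task manager (TaskManager).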

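- The client receives our code and transforms it.

- The client submits the job to the JobManager, which schedules and manages it centrally, transforms it further, and then distributes the tasks to the TaskManagers.

- The TaskManagers process the data.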

Local Startup

Download the installation package

Upload and extract

Upload Flink and extract it to /opt/module.

# Upload the Flink archive from the host machine to /opt on master

(base) quanjunyi@Tys-MacBook-Pro ~ % docker cp flink-1.13.0-bin-scala_2.12.tgz cluster-master:/opt/

# Extract Flink on master

[root@cluster-master opt]# tar -zxvf flink-1.13.0-bin-scala_2.12.tgz -C /opt/module/

Start

# Enter the extracted directory

[root@cluster-master opt]# cd module/flink-1.13.0/

# Run the startup script

[root@cluster-master flink-1.13.0]# bin/start-cluster.sh

# Check the running processes

[root@cluster-master flink-1.13.0]# jps

1269 StandaloneSessionClusterEntrypoint

1941 TaskManagerRunner

2039 Jps

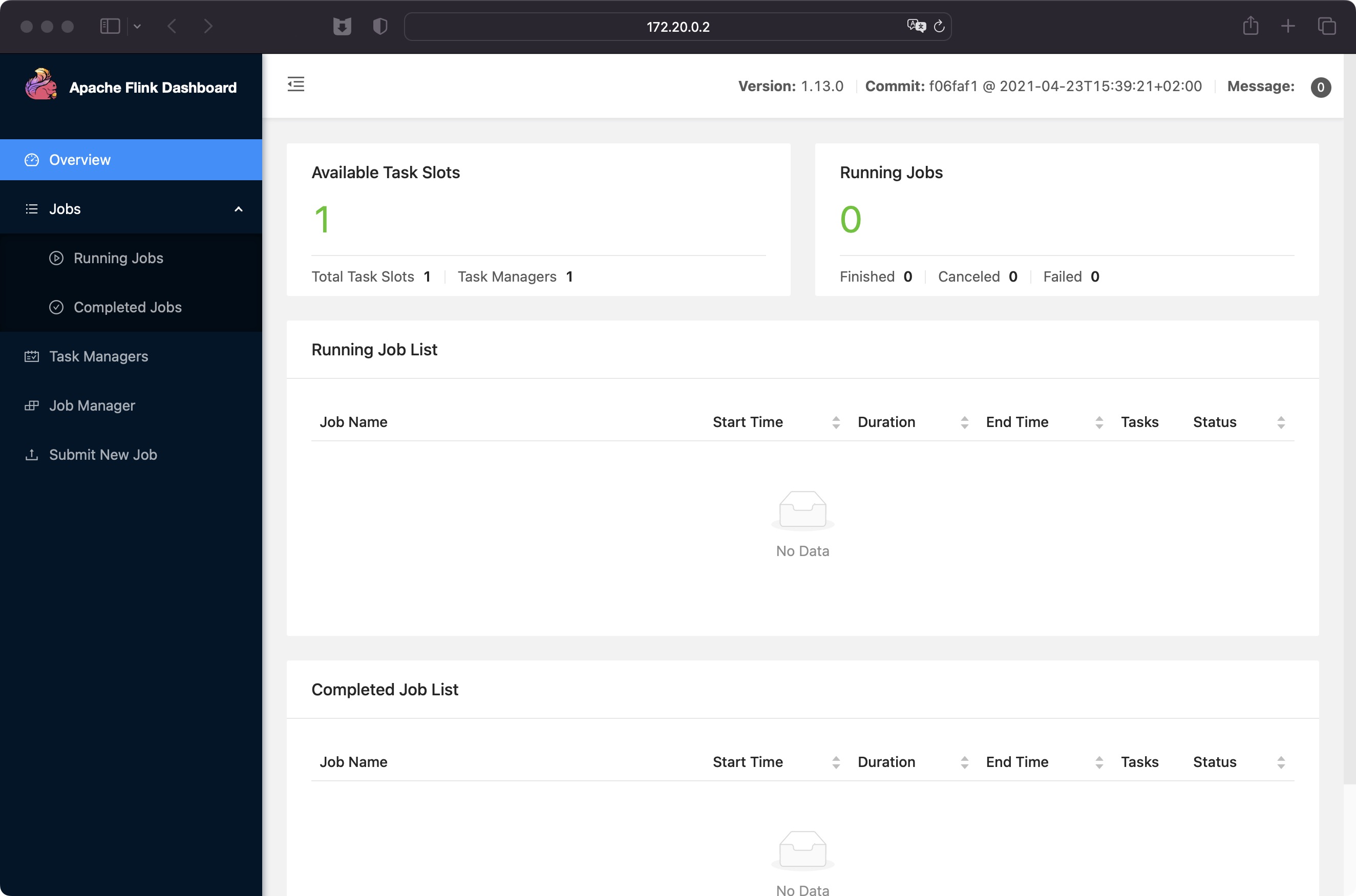

Access the Web UI

Once the cluster has started, open http://172.20.0.2:8081 to monitor and manage the Flink cluster and its jobs.

Stop the cluster

[root@cluster-master flink-1.13.0]# bin/stop-cluster.sh

Cluster Startup

Flink is a distributed data processing framework with a typical master-slave architecture: the master role corresponds to the JobManager and the slave role to the TaskManager.

| Node | cluster-master | cluster-slave1 | cluster-slave2 | cluster-slave3 |
|---|---|---|---|---|
| Role | JobManager | TaskManager | TaskManager | TaskManager |

Modify the cluster configuration

- Modify conf/flink-conf.yaml.

[root@cluster-master flink-1.13.0]# vim conf/flink-conf.yaml

# Designate the master node as the JobManager

jobmanager.rpc.address: 172.20.0.2

- Modify conf/workers and add the other (slave) nodes as TaskManagers of this Flink cluster.

[root@cluster-master flink-1.13.0]# vim conf/workers

172.20.0.3

172.20.0.4

172.20.0.5

Distribute the files

# Pack flink-1.13.0 into flink-dis.tar

[root@cluster-master opt]# tar -cvf /opt/module/flink-dis.tar /opt/module/flink-1.13.0/

[root@cluster-master opt]# vim flink-dis.yaml

---
- hosts: cluster
  tasks:
    - name: copy flink-dis.tar to slaves
      unarchive: src=/opt/module/flink-dis.tar dest=/

# flink-dis.tar is automatically extracted into /opt/module on the slave hosts

[root@cluster-master opt]# ansible-playbook flink-dis.yaml

Start the cluster

# Start the Flink cluster on the master node

[root@cluster-master flink-1.13.0]# bin/start-cluster.sh

# Check the processes on each node

[root@cluster-master flink-1.13.0]# jps

5608 Jps

5500 StandaloneSessionClusterEntrypoint

[root@cluster-slave1 /]# jps

1332 TaskManagerRunner

1397 Jps

[root@cluster-slave2 /]# jps

1344 TaskManagerRunner

1409 Jps

[root@cluster-slave3 module]# jps

1392 TaskManagerRunner

1457 Jps

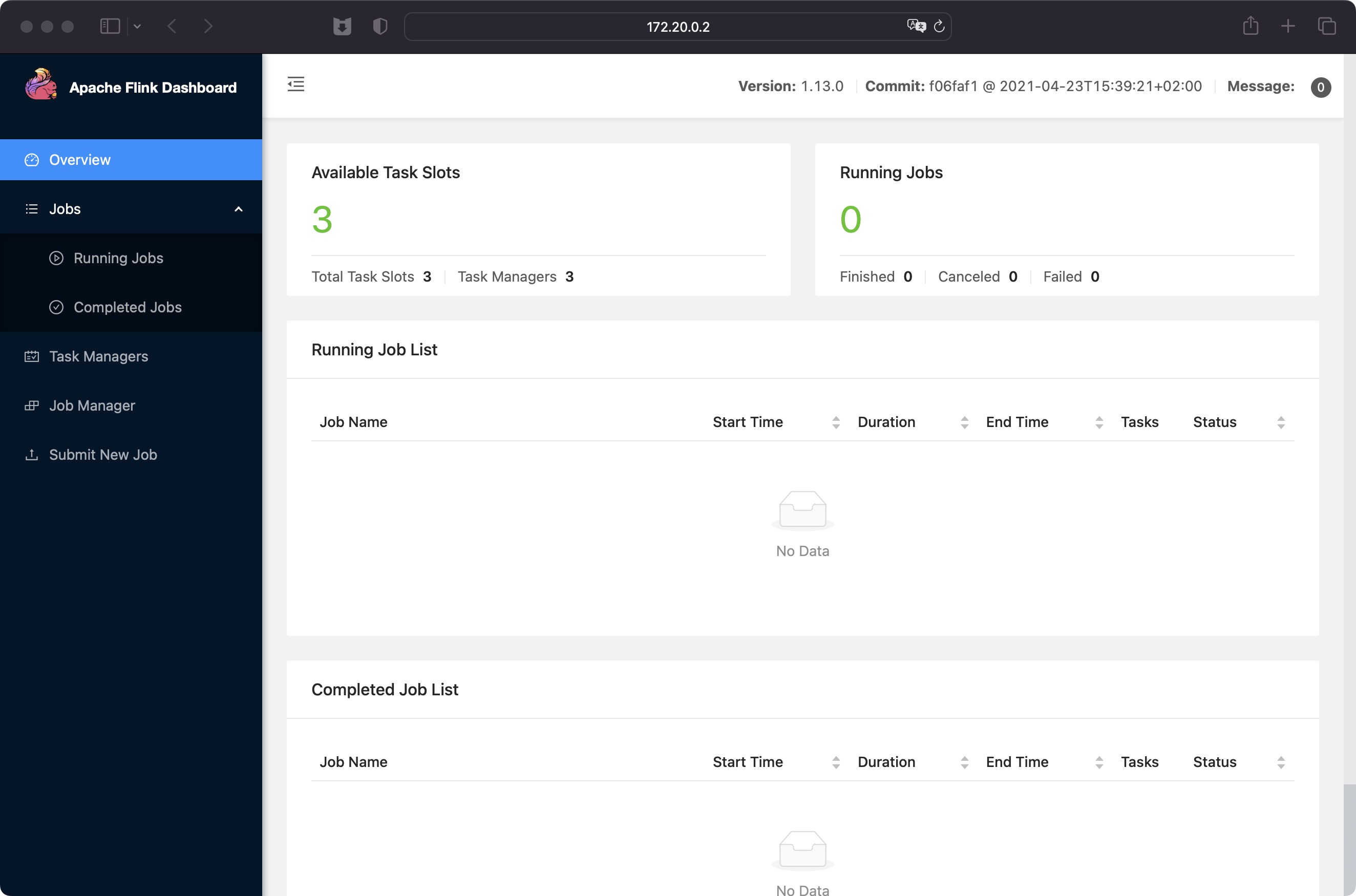

Access the Web UI

Once the cluster has started, open http://172.20.0.2:8081 to monitor and manage the Flink cluster and its jobs.
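To confirm from code rather than from the browser that all three TaskManagers have registered, the JobManager's REST API can be queried; the Web UI on port 8081 is served by the same REST endpoint. Below is a minimal Java 8 sketch, assuming the Flink REST API's /overview endpoint returns a JSON object with a taskmanagers field. The class FlinkClusterCheck and its methods are hypothetical, and the regex extraction stands in for a real JSON parser only to keep the example dependency-free.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: asks the JobManager's REST API how many TaskManagers are registered.
class FlinkClusterCheck {

    // Matches the "taskmanagers" field of the /overview JSON, e.g. "taskmanagers":3
    private static final Pattern TASKMANAGERS = Pattern.compile("\"taskmanagers\"\\s*:\\s*(\\d+)");

    // Returns the TaskManager count found in an /overview response body, or -1 if the field is absent.
    static int parseTaskManagerCount(String json) {
        Matcher m = TASKMANAGERS.matcher(json);
        return m.find() ? Integer.parseInt(m.group(1)) : -1;
    }

    // Plain HTTP GET with short timeouts; returns the response body as one string.
    static String httpGet(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
        conn.setConnectTimeout(3000);
        conn.setReadTimeout(3000);
        StringBuilder body = new StringBuilder();
        try (BufferedReader in = new BufferedReader(
                new InputStreamReader(conn.getInputStream(), StandardCharsets.UTF_8))) {
            String line;
            while ((line = in.readLine()) != null) {
                body.append(line);
            }
        } finally {
            conn.disconnect();
        }
        return body.toString();
    }

    public static void main(String[] args) {
        // Same host and port as the Web UI above (assumed to be the JobManager's REST address).
        String url = "http://172.20.0.2:8081/overview";
        try {
            int count = parseTaskManagerCount(httpGet(url));
            // conf/workers lists three hosts, so 3 is the value to expect in this setup.
            System.out.println("Registered TaskManagers: " + count);
        } catch (IOException e) {
            System.err.println("Could not reach " + url + ": " + e.getMessage());
        }
    }
}
```

For the layout in the table above it should print 3; a lower number usually means the TaskManager on one of the slaves did not start, which the jps output on that node would also show.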

HBase Environment Setup

Upload and extract

Upload HBase and extract it to /opt/module.

# Upload the HBase archive from the host machine to /opt on master

(base) quanjunyi@Tys-MacBook-Pro ~ % docker cp hbase-2.0.5-bin.tar.gz cluster-master:/opt/

# Extract HBase on master

[root@cluster-master opt]# tar -zxvf hbase-2.0.5-bin.tar.gz -C /opt/module/

Modify the configuration files

Edit conf/hbase-env.sh:

# Use the external ZooKeeper cluster instead of letting HBase manage its own
export HBASE_MANAGES_ZK=false

Edit conf/hbase-site.xml:

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://172.20.0.2:8020/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>172.20.0.2,172.20.0.3,172.20.0.4,172.20.0.5</value>

</property>

<property>

<name>hbase.master.info.port</name>

<value>60010</value>

</property>

</configuration>
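Before distributing the HBase directory to the slaves, it can help to double-check the values in hbase-site.xml, for example that hbase.rootdir points at the NameNode address and the quorum lists all four hosts. A stdlib-only Java sketch, assuming the standard configuration/property/name/value layout shown above; the class name SiteXmlReader is hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.Node;
import org.w3c.dom.NodeList;

// Hypothetical helper: reads Hadoop/HBase-style <configuration> files into an ordered map.
class SiteXmlReader {

    // Parses every <property><name>..</name><value>..</value></property> entry, keeping file order.
    static Map<String, String> parse(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            Map<String, String> props = new LinkedHashMap<>();
            NodeList properties = doc.getElementsByTagName("property");
            for (int i = 0; i < properties.getLength(); i++) {
                Element property = (Element) properties.item(i);
                Node name = property.getElementsByTagName("name").item(0);
                Node value = property.getElementsByTagName("value").item(0);
                if (name != null) {
                    // A <property> without <value> is recorded with an empty string.
                    props.put(name.getTextContent().trim(),
                              value == null ? "" : value.getTextContent().trim());
                }
            }
            return props;
        } catch (Exception e) {
            throw new IllegalArgumentException("Cannot parse configuration XML", e);
        }
    }

    public static void main(String[] args) throws Exception {
        // Usage: java SiteXmlReader /opt/module/hbase-2.0.5/conf/hbase-site.xml
        String xml = new String(Files.readAllBytes(Paths.get(args[0])), StandardCharsets.UTF_8);
        parse(xml).forEach((k, v) -> System.out.println(k + " = " + v));
    }
}
```

Running it against the file on master before the Ansible step is a cheap way to catch a typo in hbase.rootdir or the ZooKeeper quorum before it reaches every node.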

Edit conf/regionservers:

172.20.0.3

172.20.0.4

172.20.0.5

Distribute the files

# Pack hbase-2.0.5 into hbase-dis.tar

[root@cluster-master opt]# tar -cvf /opt/module/hbase-dis.tar /opt/module/hbase-2.0.5/

[root@cluster-master opt]# vim hbase-dis.yaml

---
- hosts: cluster
  tasks:
    - name: copy hbase-dis.tar to slaves
      unarchive: src=/opt/module/hbase-dis.tar dest=/

# hbase-dis.tar is automatically extracted into /opt/module on the slave hosts

[root@cluster-master opt]# ansible-playbook hbase-dis.yaml

Configure environment variables

# Add the HBase environment variables to ~/.bashrc on every host in the cluster

[root@cluster-master opt]# vim ~/.bashrc

# HBASE

export HBASE_HOME=/opt/module/hbase-2.0.5

export PATH=$PATH:$HBASE_HOME/bin

[root@cluster-master opt]# source ~/.bashrc

Start the services

# Start the ZooKeeper cluster

[root@cluster-master opt]# cd /home/qjy

[root@cluster-master qjy]# bin/zk.sh start

# Start the Hadoop cluster

[root@cluster-master qjy]# bin/hdp.sh start

# Start the HBase cluster

[root@cluster-master hbase-2.0.5]# bin/start-hbase.sh

# Stop the HBase cluster

[root@cluster-master hbase-2.0.5]# bin/stop-hbase.sh

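View the HBase web page

Once HBase has started, open the HBase master web UI at http://172.20.0.2:60010 (the port set by hbase.master.info.port above; if that property is omitted, HBase 2.x serves the UI on the default port 16010).

Integrate Phoenix

Upload and extract

Upload Phoenix and extract it to /opt/module.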

# Upload the Phoenix archive from the host machine to /opt on master

(base) quanjunyi@Tys-MacBook-Pro ~ % docker cp /Users/quanjunyi/Downloads/phoenix-hbase-2.2-5.1.3-bin.tar.gz cluster-master:/opt/

# Extract Phoenix on master

[root@cluster-master opt]# tar -zxvf phoenix-hbase-2.2-5.1.3-bin.tar.gz -C /opt/module/

Copy the Phoenix server jar into hbase-2.2.2/lib on every node (for the slave containers, via the host machine)

[root@cluster-master phoenix-hbase-2.2-5.1.3-bin]# cp /opt/module/phoenix-hbase-2.2-5.1.3-bin/phoenix-server-hbase-2.2-5.1.3.jar /opt/module/hbase-2.2.2/lib/

(base) quanjunyi@Tys-MacBook-Pro ~ % docker cp cluster-master:/opt/module/hbase-2.2.2/lib/phoenix-server-hbase-2.2-5.1.3.jar /Users/quanjunyi/Downloads/

(base) quanjunyi@Tys-MacBook-Pro ~ % docker cp /Users/quanjunyi/Downloads/phoenix-server-hbase-2.2-5.1.3.jar cluster-slave1:/opt/module/hbase-2.2.2/lib/

(base) quanjunyi@Tys-MacBook-Pro ~ % docker cp /Users/quanjunyi/Downloads/phoenix-server-hbase-2.2-5.1.3.jar cluster-slave2:/opt/module/hbase-2.2.2/lib/

(base) quanjunyi@Tys-MacBook-Pro ~ % docker cp /Users/quanjunyi/Downloads/phoenix-server-hbase-2.2-5.1.3.jar cluster-slave3:/opt/module/hbase-2.2.2/lib

Configure environment variables

# Add the Phoenix environment variables to ~/.bashrc on the master host

[root@cluster-master opt]# vim ~/.bashrc

# PHOENIX_HOME

export PHOENIX_HOME=/opt/module/phoenix-hbase-2.2-5.1.3-bin

export PHOENIX_CLASSPATH=$PHOENIX_HOME

export PATH=$PATH:$PHOENIX_HOME/bin

[root@cluster-master opt]# source ~/.bashrc

Modify the configuration files

On every host in the cluster, add the following to /opt/module/hbase-2.2.2/conf/hbase-site.xml:

<property>

<name>phoenix.schema.isNamespaceMappingEnabled</name>

<value>true</value>

</property>

<property>

<name>phoenix.schema.mapSystemTablesToNamespace</name>

<value>true</value>

</property>

On the master host, also add the following to /opt/module/phoenix-hbase-2.2-5.1.3-bin/bin/hbase-site.xml:

<property>

<name>phoenix.schema.isNamespaceMappingEnabled</name>

<value>true</value>

</property>

<property>

<name>phoenix.schema.mapSystemTablesToNamespace</name>

<value>true</value>

</property>

Restart HBase

[root@cluster-master hbase-2.0.5]# bin/stop-hbase.sh

[root@cluster-master hbase-2.0.5]# bin/start-hbase.sh

Connect to Phoenix

[root@cluster-master opt]# /opt/module/phoenix-hbase-2.2-5.1.3-bin/bin/sqlline.py cluster-master,cluster-slave1,cluster-slave2,cluster-slave3:2181

Phoenix in IDEA

Add the Phoenix thick-client dependency:

<dependency>

<groupId>org.apache.phoenix</groupId>

<artifactId>phoenix-spark</artifactId>

<version>5.0.0-HBase-2.0</version>

<exclusions>

<exclusion>

<groupId>org.glassfish</groupId>

<artifactId>javax.el</artifactId>

</exclusion>

</exclusions>

</dependency>

Create hbase-site.xml in the resources directory with the following content:

<?xml version="1.0" ?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl" ?>

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://172.20.0.2:8020/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>172.20.0.2,172.20.0.3,172.20.0.4,172.20.0.5</value>

</property>

<property>

<name>hbase.unsafe.stream.capability.enforce</name>

<value>false</value>

</property>

<property>

<name>hbase.wal.provider</name>

<value>filesystem</value>

</property>

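<!-- Note: to enable the mapping between HBase namespaces and Phoenix schemas, the program needs this configuration file; on the Linux servers, the same two properties below must also be added to the hbase-site.xml of both HBase and Phoenix -->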

<property>

<name>phoenix.schema.isNamespaceMappingEnabled</name>

<value>true</value>

</property>

<property>

<name>phoenix.schema.mapSystemTablesToNamespace</name>

<value>true</value>

</property>

</configuration>
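With this dependency and hbase-site.xml on the classpath, a program in IDEA can reach Phoenix over plain JDBC. A minimal sketch, assuming the Phoenix driver pulled in by the dependency above registers itself with DriverManager, and that the development machine can resolve cluster-master and the slave hostnames and reach ZooKeeper and the RegionServers (the thick client talks to them directly). The class name PhoenixConnectSketch is hypothetical. The two namespace-mapping flags are also passed as connection properties, mirroring the hbase-site.xml above, since the client and server settings are expected to agree.

```java
import java.sql.Connection;
import java.sql.DatabaseMetaData;
import java.sql.DriverManager;
import java.sql.SQLException;
import java.util.Properties;

// Hypothetical sketch: open a Phoenix thick-client JDBC connection from the IDE.
class PhoenixConnectSketch {

    // Builds a jdbc:phoenix URL from a comma-separated ZooKeeper quorum and its client port.
    static String jdbcUrl(String zkQuorum, int zkPort) {
        return "jdbc:phoenix:" + zkQuorum + ":" + zkPort;
    }

    // Namespace-mapping flags; these must match the server-side hbase-site.xml settings.
    static Properties namespaceMappingProps() {
        Properties props = new Properties();
        props.setProperty("phoenix.schema.isNamespaceMappingEnabled", "true");
        props.setProperty("phoenix.schema.mapSystemTablesToNamespace", "true");
        return props;
    }

    public static void main(String[] args) {
        // Same quorum string as the sqlline.py call above.
        String url = jdbcUrl("cluster-master,cluster-slave1,cluster-slave2,cluster-slave3", 2181);
        try (Connection conn = DriverManager.getConnection(url, namespaceMappingProps())) {
            DatabaseMetaData meta = conn.getMetaData();
            System.out.println("Connected to " + meta.getDatabaseProductName()
                    + " " + meta.getDatabaseProductVersion());
        } catch (SQLException e) {
            System.err.println("Phoenix connection failed: " + e.getMessage());
        }
    }
}
```

The URL has the same shape as the argument passed to sqlline.py, so if sqlline connects from master but this program does not, the difference is most likely network reachability or hostname resolution on the development machine.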

Redis Environment Setup

Install the C compiler toolchain

[root@cluster-master opt]# yum install centos-release-scl scl-utils-build

[root@cluster-master opt]# yum install -y devtoolset-8-toolchain

[root@cluster-master opt]# sudo scl enable devtoolset-8 bash

# Check the gcc version

[root@cluster-master opt]# gcc --version

gcc (GCC) 8.3.1 20190311 (Red Hat 8.3.1-3)

Copyright (C) 2018 Free Software Foundation, Inc.

This is free software; see the source for copying conditions. There is NO

warranty; not even for MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.

Upload and extract

# Upload the Redis archive from the host machine to /opt on master

(base) quanjunyi@Tys-MacBook-Pro ~ % docker cp redis-6.0.8.tar.gz cluster-master:/opt/

# Extract Redis on master

[root@cluster-master opt]# tar -zxvf redis-6.0.8.tar.gz -C /opt/module/

Run make in the redis-6.0.8 directory

[root@cluster-master redis-6.0.8]# make

# Skip make test and run make install directly

[root@cluster-master redis-6.0.8]# make install

Run in the background

Copy the default configuration file

[root@cluster-master redis-6.0.8]# cp /opt/module/redis-6.0.8/redis.conf ~/my_redis.conf

Modify the configuration file

# In my_redis.conf, change daemonize no to daemonize yes so that the server runs in the background

[root@cluster-master redis-6.0.8]# vim ~/my_redis.conf

Start and stop Redis

# Enter the installation prefix (make install puts the binaries in /usr/local/bin)

[root@cluster-master opt]# cd /usr/local/

# Start Redis in the background

[root@cluster-master local]# bin/redis-server ~/my_redis.conf

# Check the Redis process

[root@cluster-master local]# ps -ef | grep redis

root 56010 1 3 11:24 ? 00:00:01 /usr/bin/qemu-x86_64 bin/redis-server bin/redis-server /root/my_redis.conf

root 56018 44110 0 11:25 pts/1 00:00:00 /usr/bin/qemu-x86_64 /bin/grep grep --color=auto redis

# Connect to Redis with the client

[root@cluster-master local]# bin/redis-cli

127.0.0.1:6379>

# Verify the connection

127.0.0.1:6379> ping

PONG

# Shut down from inside the client

127.0.0.1:6379> shutdown

not connected>

# Or shut down directly from the shell

[root@cluster-master local]# bin/redis-cli shutdown

# Check that Redis has stopped

[root@cluster-master local]# ps -ef | grep redis

root 56027 44110 0 11:29 pts/1 00:00:00 /usr/bin/qemu-x86_64 /bin/grep grep --color=auto redis

Access from other nodes

[root@cluster-master redis-6.0.8]# vim ~/my_redis.conf

# Comment out the bind directive

# bind 127.0.0.1

# Turn off protected-mode

protected-mode no
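After restarting redis-server with the edited my_redis.conf, another node should be able to reach Redis on port 6379. Since redis-cli may not be installed on the slaves, the sketch below sends PING directly over a TCP socket using the RESP wire format, in which a command is an array of bulk strings. It is a minimal Java connectivity check, not a Redis client; the class RedisPing is hypothetical, and the address 172.20.0.2 assumes Redis runs on cluster-master as above.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: sends one Redis command over a raw socket using the RESP protocol.
class RedisPing {

    // Encodes a command as a RESP array of bulk strings, e.g. PING -> "*1\r\n$4\r\nPING\r\n".
    static String encode(String... parts) {
        StringBuilder sb = new StringBuilder();
        sb.append('*').append(parts.length).append("\r\n");
        for (String part : parts) {
            // Bulk-string lengths are byte counts, not character counts.
            int len = part.getBytes(StandardCharsets.UTF_8).length;
            sb.append('$').append(len).append("\r\n").append(part).append("\r\n");
        }
        return sb.toString();
    }

    // Sends the command and returns the first reply line without CR/LF, e.g. "+PONG".
    static String send(String host, int port, String... parts) throws IOException {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), 3000);
            socket.setSoTimeout(3000);
            OutputStream out = socket.getOutputStream();
            out.write(encode(parts).getBytes(StandardCharsets.UTF_8));
            out.flush();
            InputStream in = socket.getInputStream();
            StringBuilder reply = new StringBuilder();
            int b;
            // Status and error replies are single ASCII lines, so byte-to-char casting is safe here.
            while ((b = in.read()) != -1 && b != '\n') {
                if (b != '\r') {
                    reply.append((char) b);
                }
            }
            return reply.toString();
        }
    }

    public static void main(String[] args) {
        try {
            // Run from another node; "+PONG" means the bind/protected-mode changes took effect.
            System.out.println(send("172.20.0.2", 6379, "PING"));
        } catch (IOException e) {
            System.err.println("Redis not reachable: " + e.getMessage());
        }
    }
}
```

A "+PONG" reply confirms external access works. A reply starting with "-DENIED" typically means Redis is still running in protected mode, and a connection timeout usually means bind is still restricting it to 127.0.0.1.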

ClickHouse Environment Setup

Prerequisites

Raise the open-file and process limits on CentOS

# On every node in the cluster, append the following to /etc/security/limits.conf

[root@cluster-master local]# vim /etc/security/limits.conf

* soft nofile 65536

* hard nofile 65536

* soft nproc 131072

* hard nproc 131072

# On every node in the cluster, append the following to /etc/security/limits.d/20-nproc.conf

[root@cluster-master local]# vim /etc/security/limits.d/20-nproc.conf

* soft nofile 65536

* hard nofile 65536

* soft nproc 131072

* hard nproc 131072

Install the dependencies on all nodes

[root@cluster-master local]# yum install -y libtool

[root@cluster-master local]# yum install -y *unixODBC*

Disable SELinux on CentOS

# On every node in the cluster, set SELINUX=disabled in /etc/selinux/config

[root@cluster-master opt]# vim /etc/selinux/config

SELINUX=disabled

# However, the container has no config file under /etc/selinux/, so I checked the SELinux status directly; it is already Disabled, so no change is needed

[root@cluster-master opt]# getenforce

Disabled

# Reboot all hosts in the cluster

[root@cluster-master opt]# reboot

Single-node installation

Create the clickhouse directory and upload the rpm packages

# Create the directory /opt/module/clickhouse on every node in the cluster

[root@cluster-master module]# mkdir clickhouse

# Upload the four rpm packages to /opt/module/clickhouse on every node in the cluster

(base) quanjunyi@Tys-MacBook-Pro ~ % docker cp /Users/quanjunyi/Downloads/clickhouse-server-21.7.3.14-2.noarch.rpm cluster-master:/opt/module/clickhouse

(base) quanjunyi@Tys-MacBook-Pro ~ % docker cp /Users/quanjunyi/Downloads/clickhouse-common-static-dbg-21.7.3.14-2.x86_64.rpm cluster-master:/opt/module/clickhouse

(base) quanjunyi@Tys-MacBook-Pro ~ % docker cp /Users/quanjunyi/Downloads/clickhouse-common-static-21.7.3.14-2.x86_64.rpm cluster-master:/opt/module/clickhouse

(base) quanjunyi@Tys-MacBook-Pro ~ % docker cp /Users/quanjunyi/Downloads/clickhouse-client-21.7.3.14-2.noarch.rpm cluster-master:/opt/module/clickhouse

Install the four rpm files on every node in the cluster

[root@cluster-master clickhouse]# rpm -ivh *.rpm

# Check the installation

[root@cluster-master clickhouse]# rpm -qa|grep clickhouse

clickhouse-common-static-21.7.3.14-2.x86_64

clickhouse-server-21.7.3.14-2.noarch

clickhouse-client-21.7.3.14-2.noarch

clickhouse-common-static-dbg-21.7.3.14-2.x86_64

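During installation you are prompted to set a password for the default user; for learning purposes you can leave it empty and just press Enter.

Installed directories:

- /var/lib/clickhouse/: data files

- /usr/bin: executables

- /var/log/clickhouse-server/: log files

- /etc/clickhouse-server/: configuration files

Modify the configuration file (all nodes)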

# To allow access from other nodes, uncomment <listen_host>::</listen_host>, then save with :wq!

[root@cluster-master bin]# vim /etc/clickhouse-server/config.xml

Start ClickHouse

# Start ClickHouse

[root@cluster-master usr]# cd /usr/

[root@cluster-master usr]# bin/clickhouse start

<jemalloc>: MADV_DONTNEED does not work (memset will be used instead)

<jemalloc>: (This is the expected behaviour if you are running under QEMU)

chown --recursive clickhouse '/var/run/clickhouse-server/'

Will run su -s /bin/sh 'clickhouse' -c '/usr/bin/clickhouse-server --config-file /etc/clickhouse-server/config.xml --pid-file /var/run/clickhouse-server/clickhouse-server.pid --daemon'

<jemalloc>: MADV_DONTNEED does not work (memset will be used instead)

<jemalloc>: (This is the expected behaviour if you are running under QEMU)

Waiting for server to start

Waiting for server to start

Server started

# Restart ClickHouse

[root@cluster-master usr]# bin/clickhouse restart

Disable start on boot (all nodes)

[root@cluster-master usr]# systemctl disable clickhouse-server

Removed symlink /etc/systemd/system/multi-user.target.wants/clickhouse-server.service.

Connect to the server with the client

[root@cluster-master usr]# bin/clickhouse-client -m

<jemalloc>: MADV_DONTNEED does not work (memset will be used instead)

<jemalloc>: (This is the expected behaviour if you are running under QEMU)

ClickHouse client version 21.7.3.14 (official build).

Connecting to localhost:9000 as user default.

Code: 210. DB::NetException: Connection refused (localhost:9000)

# Assuming port 9000 is already in use, change ClickHouse's default TCP port

[root@cluster-master usr]# vim /etc/clickhouse-server/config.xml

<tcp_port>9300</tcp_port>

# Restart ClickHouse

[root@cluster-master usr]# bin/clickhouse restart

# Connect with the client again

[root@cluster-master usr]# bin/clickhouse-client --port 9300

<jemalloc>: MADV_DONTNEED does not work (memset will be used instead)

<jemalloc>: (This is the expected behaviour if you are running under QEMU)

ClickHouse client version 21.7.3.14 (official build).

Connecting to localhost:9300 as user default.

Code: 210. DB::NetException: Connection refused (localhost:9300)

# Still failing, so check the server log

[root@cluster-master usr]# tail -f /var/log/clickhouse-server/clickhouse-server.log

2023.02.18 02:32:01.920046 [ 1211 ] {} <Error> Application: DB::Exception: Listen [::]:8123 failed: Poco::Exception. Code: 1000, e.code() = 0, e.displayText() = DNS error: EAI: Address family for hostname not supported (version 21.7.3.14 (official build))

# The log says the address family is not supported: the container does not support IPv6, so change <listen_host>::</listen_host> to <listen_host>0.0.0.0</listen_host> and change the port from 9300 back to 9000 (all nodes)

[root@cluster-master usr]# vim /etc/clickhouse-server/config.xml

# Restart ClickHouse and try again; -m enables multi-line queries

[root@cluster-master usr]# bin/clickhouse-client -m

<jemalloc>: MADV_DONTNEED does not work (memset will be used instead)

<jemalloc>: (This is the expected behaviour if you are running under QEMU)

ClickHouse client version 21.7.3.14 (official build).

Connecting to localhost:9000 as user default.

Connected to ClickHouse server version 21.7.3 revision 54449.

cluster-master :)
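With listen_host set to 0.0.0.0, the ClickHouse HTTP interface on port 8123 (the port named in the Listen [::]:8123 error above) is also reachable from other nodes, which is handy for quick checks without clickhouse-client. A minimal Java 8 sketch, assuming the default user with the empty password chosen during installation; ClickHouseHttpQuery is a hypothetical name.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.UnsupportedEncodingException;
import java.net.HttpURLConnection;
import java.net.URL;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: runs a query through ClickHouse's HTTP interface (port 8123).
class ClickHouseHttpQuery {

    // Builds http://host:port/?query=..., percent-encoding spaces as %20 rather than '+'.
    static String queryUrl(String host, int port, String sql) {
        try {
            // URLEncoder already turns a literal '+' into %2B, so only spaces are affected here.
            String encoded = URLEncoder.encode(sql, "UTF-8").replace("+", "%20");
            return "http://" + host + ":" + port + "/?query=" + encoded;
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException("UTF-8 is always supported", e);
        }
    }

    // Sends a GET request and returns the response body, one result row per line.
    static String get(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
        conn.setConnectTimeout(3000);
        conn.setReadTimeout(3000);
        StringBuilder body = new StringBuilder();
        try (BufferedReader in = new BufferedReader(
                new InputStreamReader(conn.getInputStream(), StandardCharsets.UTF_8))) {
            String line;
            while ((line = in.readLine()) != null) {
                body.append(line).append('\n');
            }
        } finally {
            conn.disconnect();
        }
        return body.toString();
    }

    public static void main(String[] args) {
        String url = queryUrl("172.20.0.2", 8123, "SELECT version()");
        try {
            System.out.print(get(url));
        } catch (IOException e) {
            System.err.println("ClickHouse HTTP query failed: " + e.getMessage());
        }
    }
}
```

According to the ClickHouse HTTP interface documentation, queries sent with GET run in read-only mode, so this approach suits SELECT statements; DDL or INSERT would need a POST request instead.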

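Mock Data

Pitfalls

HBase startup issue

After starting HBase, the HMaster on the master node dies shortly after it starts, and the HDFS web UI, which was reachable before, becomes unreachable as well. The HDFS port matches the one used in the HBase configuration, so I suspected a version incompatibility (Hadoop 3.1.3, HBase 2.0.5), but the problem persisted after switching to HBase 2.2.2. The HMaster log contains the following error: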

2023-02-17 05:57:17,103 ERROR [master/cluster-master:16000:becomeActiveMaster] master.HMaster: Failed to become active master

java.lang.IllegalStateException: The procedure WAL relies on the ability to hsync for proper operation during component failures, but the underlying filesystem does not support doing so. Please check the config value of 'hbase.procedure.store.wal.use.hsync' to set the desired level of robustness and ensure the config value of 'hbase.wal.dir' points to a FileSystem mount that can provide it.

Adding the following property to hbase-site.xml tells HBase not to enforce this hflush/hsync capability check:

<property>

<name>hbase.unsafe.stream.capability.enforce</name>

<value>false</value>

</property>

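After restarting, the HMaster on the master host no longer dies, but the web UI is still unreachable. At this point the HRegionServer on the slave nodes turned out not to have started; /opt/module/hbase-2.2.2/logs/hbase-root-regionserver-cluster-slave3.out shows the following error: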

Failed to instantiate SLF4J LoggerFactory

Reported exception:

java.lang.NoClassDefFoundError: org/apache/log4j/Level

at org.slf4j.LoggerFactory.bind(LoggerFactory.java:150)

at org.slf4j.LoggerFactory.performInitialization(LoggerFactory.java:124)

at org.slf4j.LoggerFactory.getILoggerFactory(LoggerFactory.java:412)

at org.slf4j.LoggerFactory.getLogger(LoggerFactory.java:357)

at org.slf4j.LoggerFactory.getLogger(LoggerFactory.java:383)

at org.apache.hadoop.hbase.regionserver.HRegionServer.<clinit>(HRegionServer.java:241)

Caused by: java.lang.ClassNotFoundException: org.apache.log4j.Level

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 6 more

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/log4j/Level

at org.slf4j.LoggerFactory.bind(LoggerFactory.java:150)

at org.slf4j.LoggerFactory.performInitialization(LoggerFactory.java:124)

at org.slf4j.LoggerFactory.getILoggerFactory(LoggerFactory.java:412)

at org.slf4j.LoggerFactory.getLogger(LoggerFactory.java:357)

at org.slf4j.LoggerFactory.getLogger(LoggerFactory.java:383)

at org.apache.hadoop.hbase.regionserver.HRegionServer.<clinit>(HRegionServer.java:241)

Caused by: java.lang.ClassNotFoundException: org.apache.log4j.Level

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 6 more

This happened because I had earlier renamed /opt/module/hbase-2.2.2/lib/client-facing-thirdparty/log4j-1.2.17.jar to log4j-1.2.17.jar.bak. After renaming it back, the slave nodes start HRegionServer normally, but the web UI is still unreachable.

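The cause might be an earlier failed startup or a previously installed version: since HBase relies on ZooKeeper, the information of the earlier HBase instance was still registered in ZooKeeper, which can make a second startup fail. So I deleted the HBase registration information from ZooKeeper first, but that did not help either.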

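ClickHouse installation issue

Installing the rpm packages on the slave nodes fails with the following error; the master node does not have this problem.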

[root@cluster-slave1 clickhouse]# rpm -ivh *.rpm

warning: clickhouse-client-21.7.3.14-2.noarch.rpm: Header V4 RSA/SHA1 Signature, key ID e0c56bd4: NOKEY

error: Failed dependencies:

initscripts is needed by clickhouse-server-21.7.3.14-2.noarch

# Fix: the initscripts dependency is missing, so install it

[root@cluster-slave1 clickhouse]# yum install initscripts -y

浙公网安备 33010602011771号
