[ML] {ud120} Lesson 4: Decision Trees

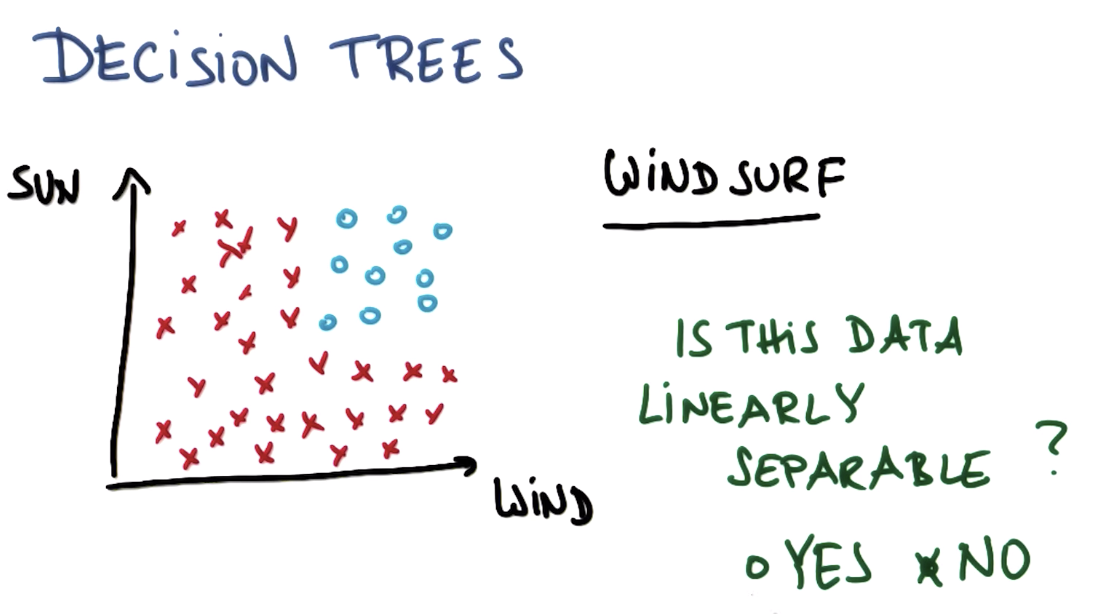

Linearly Separable Data

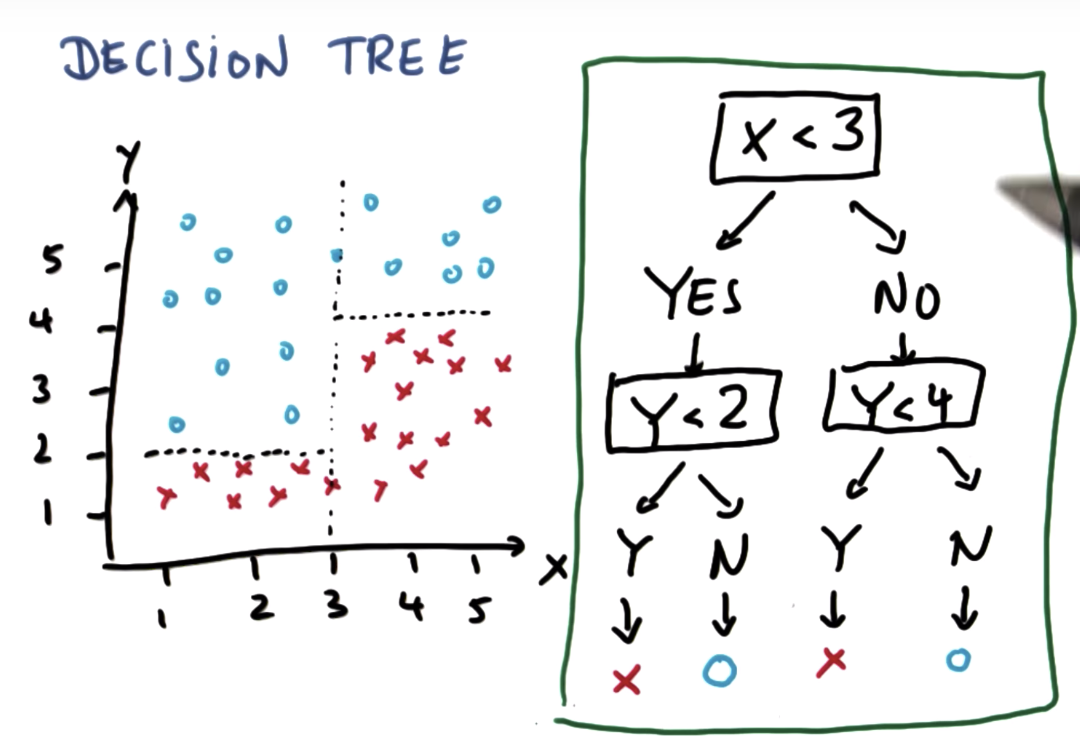

Multiple Linear Questions

Constructing a Decision Tree: First Split

Coding a Decision Tree

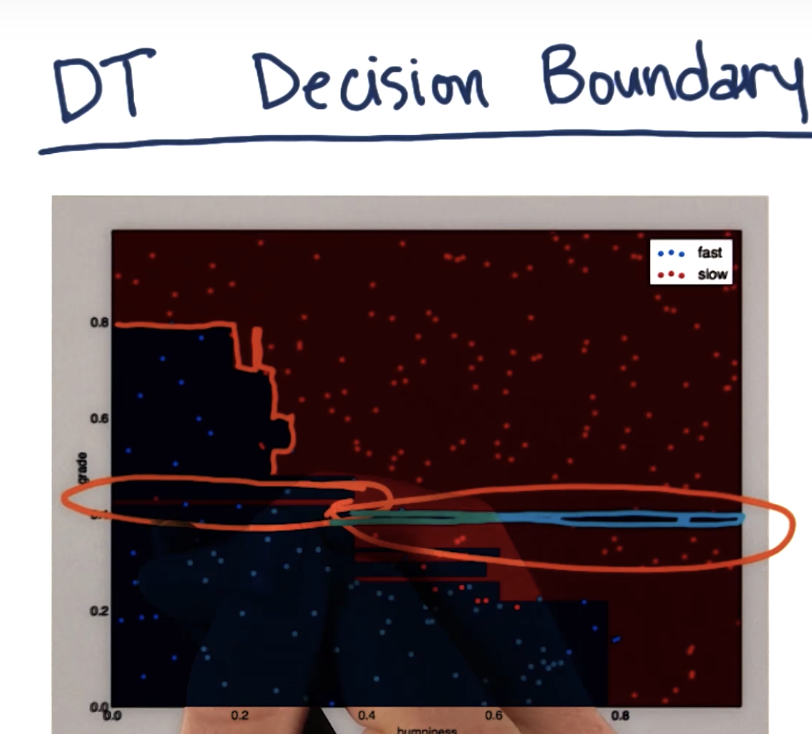

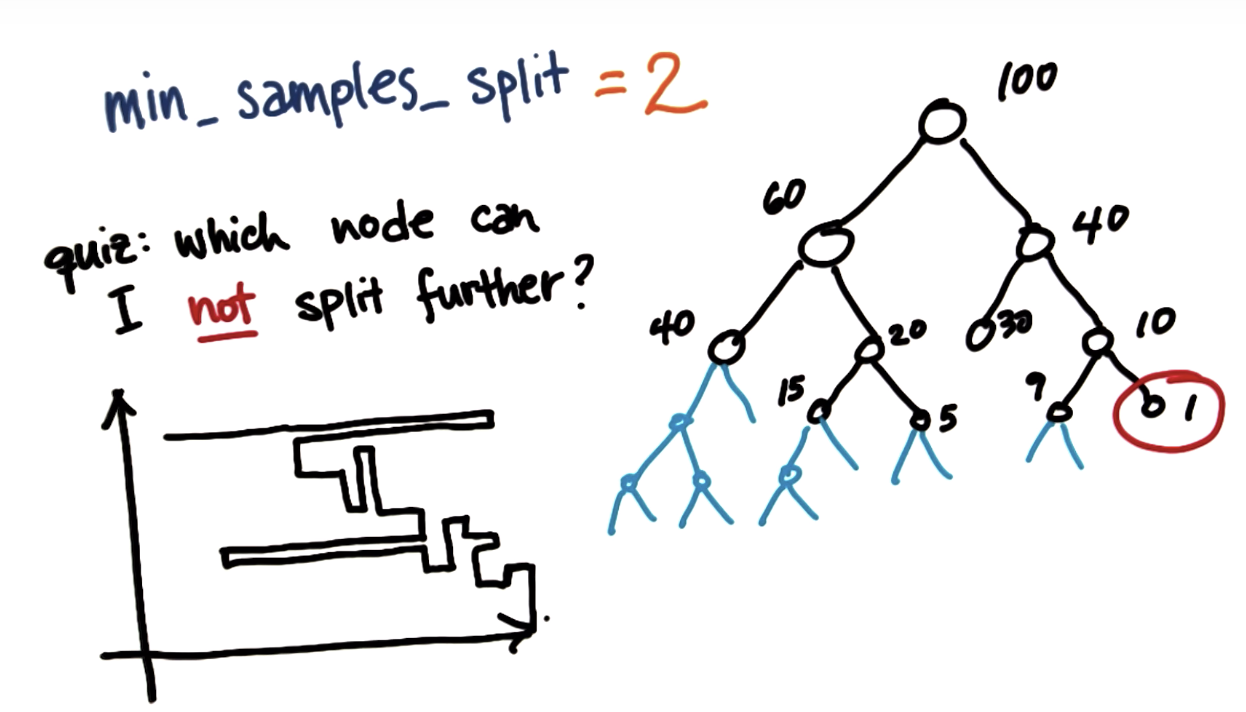

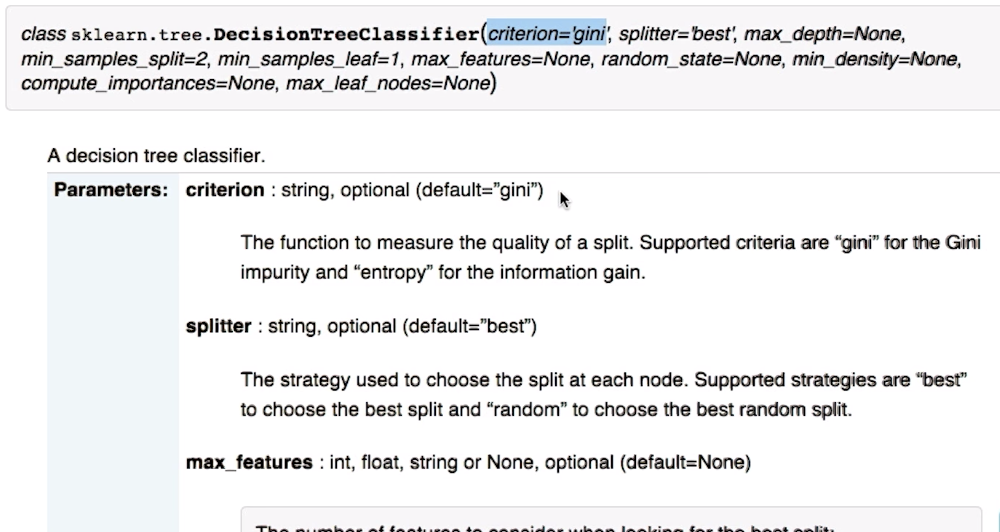
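The code for this section appears only in the screenshots. The sketch below is not the lesson's exact code. It fits scikit-learn's `DecisionTreeClassifier`, predicts on held-out points, and scores the result with `accuracy_score`. The tiny two-feature dataset is made up for illustration and is not the lesson's terrain data.

```python
from sklearn.tree import DecisionTreeClassifier
from sklearn.metrics import accuracy_score

# Hypothetical toy data: two features per point, two classes (0 and 1).
features_train = [[0.1, 0.2], [0.2, 0.8], [0.8, 0.1], [0.9, 0.9],
                  [0.15, 0.6], [0.85, 0.4]]
labels_train = [0, 0, 1, 1, 0, 1]
features_test = [[0.05, 0.5], [0.95, 0.3]]
labels_test = [0, 1]

# Fit the tree on the training set (random_state fixes tie-breaking).
clf = DecisionTreeClassifier(random_state=0)
clf.fit(features_train, labels_train)

# Predict the held-out points and measure accuracy.
pred = clf.predict(features_test)
acc = accuracy_score(labels_test, pred)
print(pred, acc)
```

Here the first feature alone separates the two classes, so the tree needs only one split.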

Decision Tree Parameters

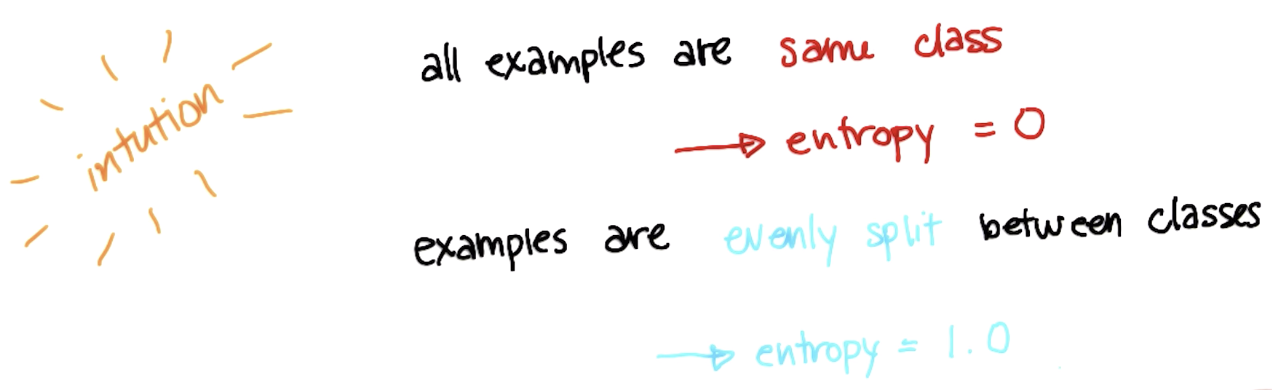

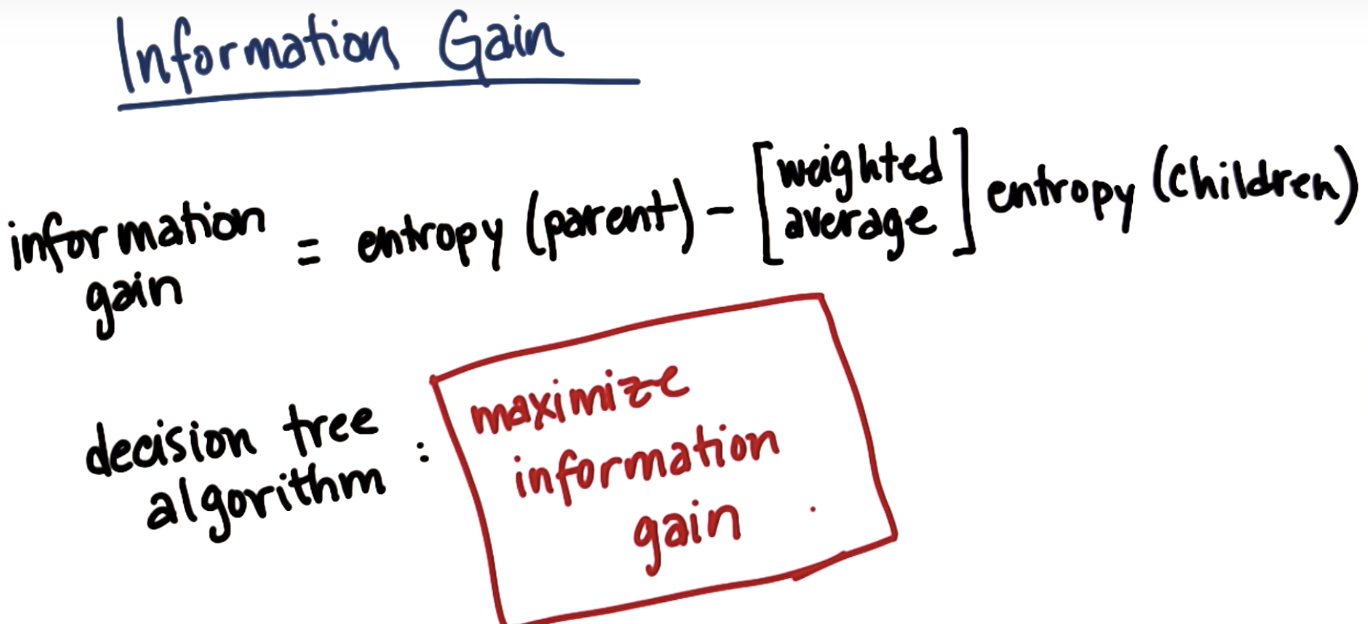

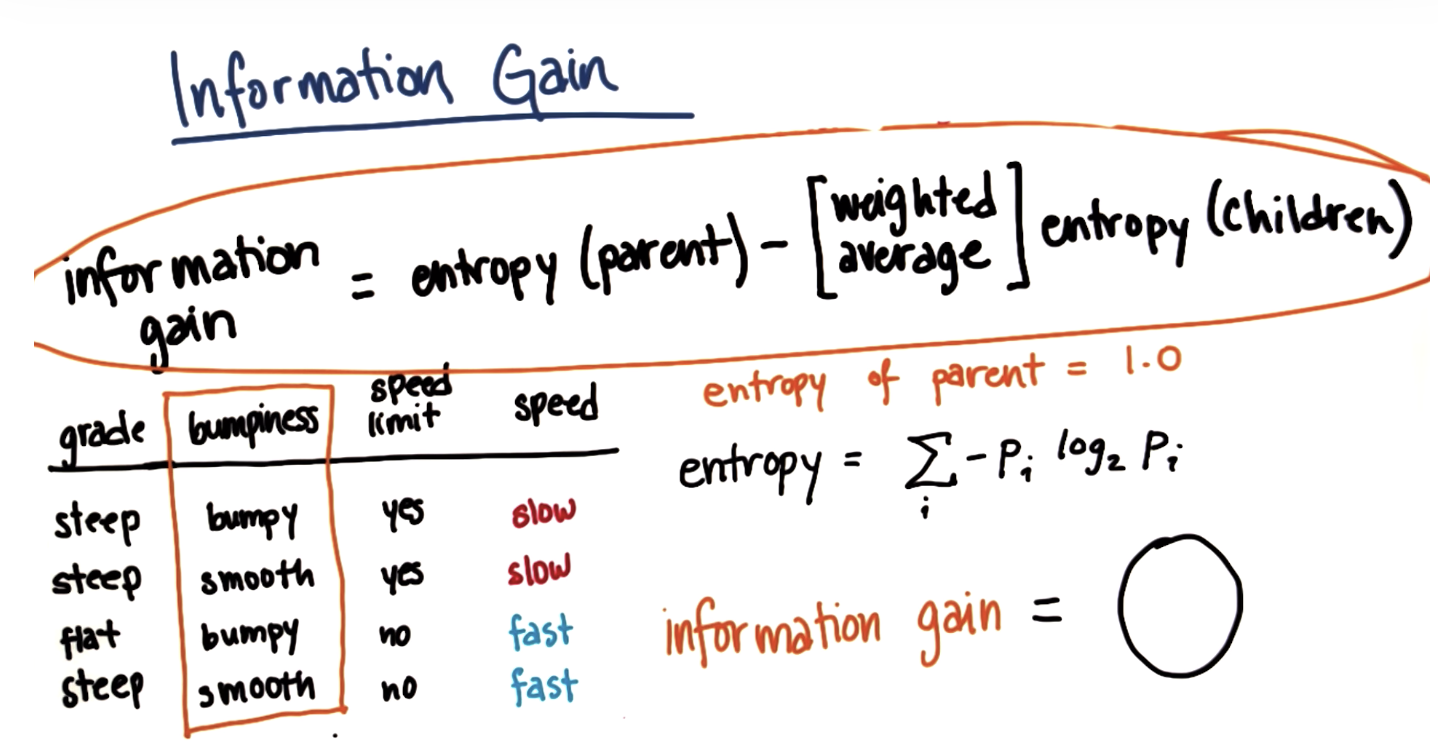

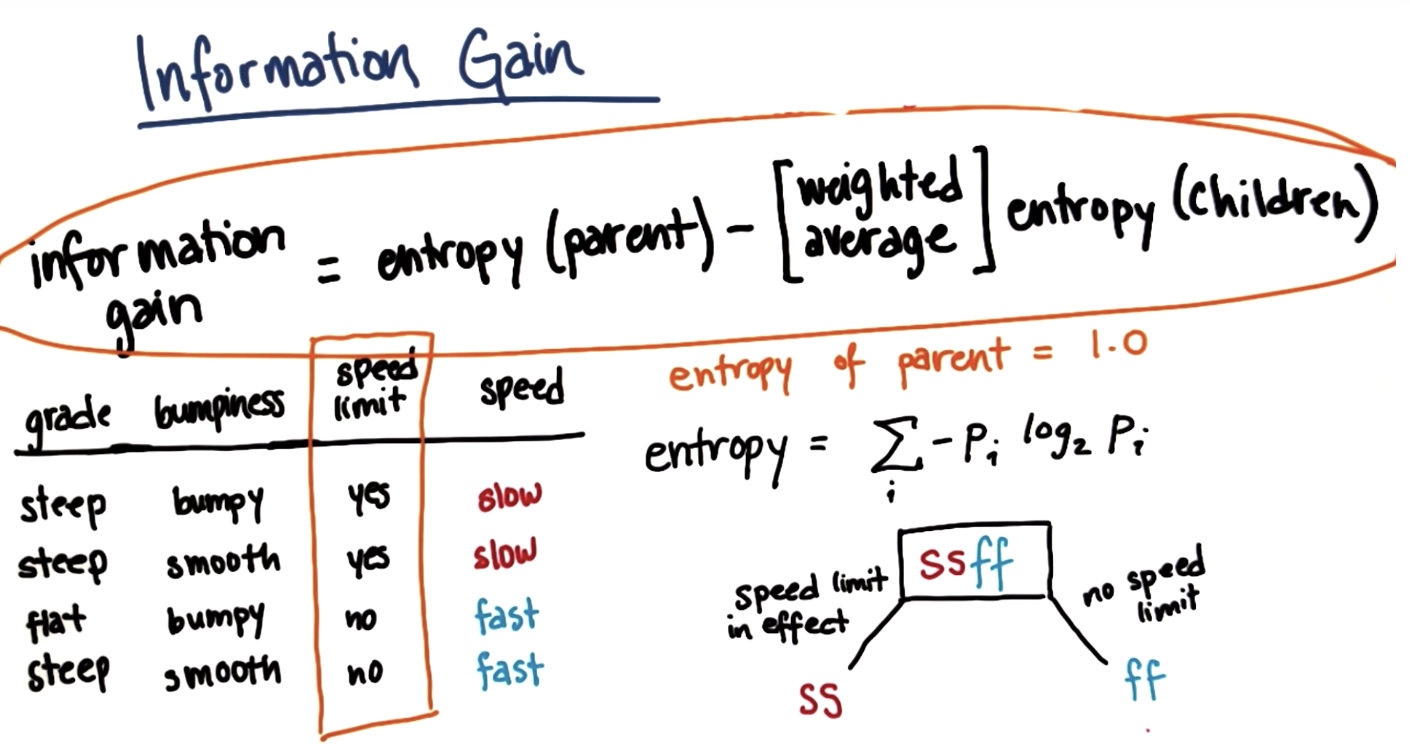
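The parameter slides are images. The sketch below assumes they cover `min_samples_split`, which is the minimum number of samples a node must hold before scikit-learn will try to split it. It shows that raising this value stops the tree from chasing noise in small nodes, so the tree ends up shallower with fewer leaves. The one-feature dataset and its flipped labels are hypothetical.

```python
from sklearn.tree import DecisionTreeClassifier

# Hypothetical 1-D data: the label is 1 when x > 0.5, but every 7th label is
# flipped to simulate noise that a fully grown tree will try to memorize.
X = [[i / 200] for i in range(200)]
y = [int(i / 200 > 0.5) for i in range(200)]
y = [1 - label if i % 7 == 0 else label for i, label in enumerate(y)]

results = {}
for min_split in (2, 50):
    clf = DecisionTreeClassifier(min_samples_split=min_split, random_state=0)
    clf.fit(X, y)
    # Nodes with fewer than min_split samples become leaves, so a larger
    # value stops the tree from growing earlier.
    results[min_split] = (clf.get_depth(), clf.get_n_leaves())
    print(f"min_samples_split={min_split}: depth={clf.get_depth()}, "
          f"leaves={clf.get_n_leaves()}")
```

With `min_samples_split=2` the tree keeps splitting until every leaf is pure, which isolates each flipped label. With 50 it stops earlier and tolerates some noisy points in its leaves.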

Data Impurity and Entropy

Formula of Entropy

There is an error in the entropy formula written on this slide: the sum should be preceded by a negative (-) sign:

$$\text{Entropy} = -\sum_i p_i \log_2 p_i$$
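The corrected formula can be checked with a short sketch that computes entropy from a list of class labels. In this sketch, p_i is the fraction of labels in class i, and the "slow"/"fast" labels are illustrative only.

```python
import math
from collections import Counter

def entropy(labels):
    """Entropy = -sum_i p_i * log2(p_i) over the class proportions p_i."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())

print(entropy(["slow", "slow", "fast", "fast"]))  # 1.0: evenly split, maximally impure
print(entropy(["slow", "slow", "slow"]))          # 0.0: a pure node
```

The leading minus sign makes the result non-negative, because log2(p_i) is never positive for 0 < p_i <= 1.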

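Information gain: IG = entropy(parent) - [weighted average] entropy(children). Here IG = 1. For a two-class problem this is the largest possible value: the parent is evenly split (entropy 1) and every child node is pure (entropy 0).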
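As a minimal sketch, information gain subtracts the size-weighted average entropy of the children from the parent's entropy. The example splits are hypothetical: one produces pure children, and the other leaves each child as mixed as the parent.

```python
import math
from collections import Counter

def entropy(labels):
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())

def information_gain(parent, children):
    """IG = entropy(parent) - weighted average of entropy(children)."""
    n = len(parent)
    # Each child's entropy is weighted by its share of the parent's samples.
    weighted = sum(len(child) / n * entropy(child) for child in children)
    return entropy(parent) - weighted

parent = ["slow", "slow", "fast", "fast"]
print(information_gain(parent, [["slow", "slow"], ["fast", "fast"]]))  # 1.0
print(information_gain(parent, [["slow", "fast"], ["slow", "fast"]]))  # 0.0
```

A decision tree picks the split with the highest information gain at each node.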

Tuning Criterion Parameter

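Gini impurity is another measure of impurity. In scikit-learn, DecisionTreeClassifier uses criterion="gini" by default; set criterion="entropy" to split using entropy (information gain) instead.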
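For comparison with the entropy sketch, Gini impurity can be computed as 1 minus the sum of squared class proportions. This is a minimal illustration with made-up labels, not scikit-learn's internal implementation.

```python
from collections import Counter

def gini(labels):
    """Gini impurity = 1 - sum_i p_i**2 over the class proportions p_i."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

print(gini(["slow", "slow", "fast", "fast"]))  # 0.5: the maximum for two classes
print(gini(["slow", "slow", "slow"]))          # 0.0: a pure node
```

Gini impurity and entropy both reach 0 for pure nodes and peak for evenly mixed ones, so they often choose similar splits. Gini avoids computing logarithms.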

Decision Tree Mini-Project

In this project, we will again try to identify the authors in a body of emails, this time using a decision tree. The starter code is in decision_tree/dt_author_id.py.

Get the data for this mini-project from here.

Once again, you'll do the mini-project on your own computer and enter your answers in the web browser. You can find the instructions for the decision tree mini-project here.

浙公网安备 33010602011771号
