vLLM + Ray startup failure (NCCL error)

After the Ray cluster was up, launching a vLLM service for the Qwen3-32B model failed. vLLM could not start and printed the following error:

(RayWorkerWrapper pid=656, ip=10.0.1.10) ERROR 12-02 16:12:30 [worker_base.py:620] raise RuntimeError(f"NCCL error: {error_str}") [repeated 3x across cluster]
(RayWorkerWrapper pid=45352) ERROR 12-02 16:12:30 [worker_base.py:620] RuntimeError: NCCL error: internal error - please report this issue to the NCCL developers
(RayWorkerWrapper pid=656, ip=10.0.1.10) ERROR 12-02 16:12:30 [worker_base.py:620] RuntimeError: NCCL error: remote process exited or there was a network error
Traceback (most recent call last):
  File "/usr/local/python310/bin/vllm", line 8, in <module>
    sys.exit(main())
  File "/usr/local/python310/lib/python3.10/site-packages/vllm/entrypoints/cli/main.py", line 53, in main
    args.dispatch_function(args)
  File "/usr/local/python310/lib/python3.10/site-packages/vllm/entrypoints/cli/serve.py", line 27, in cmd
    uvloop.run(run_server(args))
  File "/usr/local/python310/lib/python3.10/site-packages/uvloop/__init__.py", line 82, in run
    return loop.run_until_complete(wrapper())
  File "uvloop/loop.pyx", line 1518, in uvloop.loop.Loop.run_until_complete
  File "/usr/local/python310/lib/python3.10/site-packages/uvloop/__init__.py", line 61, in wrapper
    return await main
  File "/usr/local/python310/lib/python3.10/site-packages/vllm/entrypoints/openai/api_server.py", line 1078, in run_server
    async with build_async_engine_client(args) as engine_client:
  File "/usr/local/python310/lib/python3.10/contextlib.py", line 199, in __aenter__
    return await anext(self.gen)
  File "/usr/local/python310/lib/python3.10/site-packages/vllm/entrypoints/openai/api_server.py", line 146, in build_async_engine_client
    async with build_async_engine_client_from_engine_args(
  File "/usr/local/python310/lib/python3.10/contextlib.py", line 199, in __aenter__
    return await anext(self.gen)
  File "/usr/local/python310/lib/python3.10/site-packages/vllm/entrypoints/openai/api_server.py", line 178, in build_async_engine_client_from_engine_args
    async_llm = AsyncLLM.from_vllm_config(
  File "/usr/local/python310/lib/python3.10/site-packages/vllm/v1/engine/async_llm.py", line 150, in from_vllm_config
    return cls(
  File "/usr/local/python310/lib/python3.10/site-packages/vllm/v1/engine/async_llm.py", line 118, in __init__
    self.engine_core = core_client_class(
  File "/usr/local/python310/lib/python3.10/site-packages/vllm/v1/engine/core_client.py", line 642, in __init__
    super().__init__(
  File "/usr/local/python310/lib/python3.10/site-packages/vllm/v1/engine/core_client.py", line 398, in __init__
    self._wait_for_engine_startup()
  File "/usr/local/python310/lib/python3.10/site-packages/vllm/v1/engine/core_client.py", line 430, in _wait_for_engine_startup
    raise RuntimeError("Engine core initialization failed. "
RuntimeError: Engine core initialization failed. See root cause above.

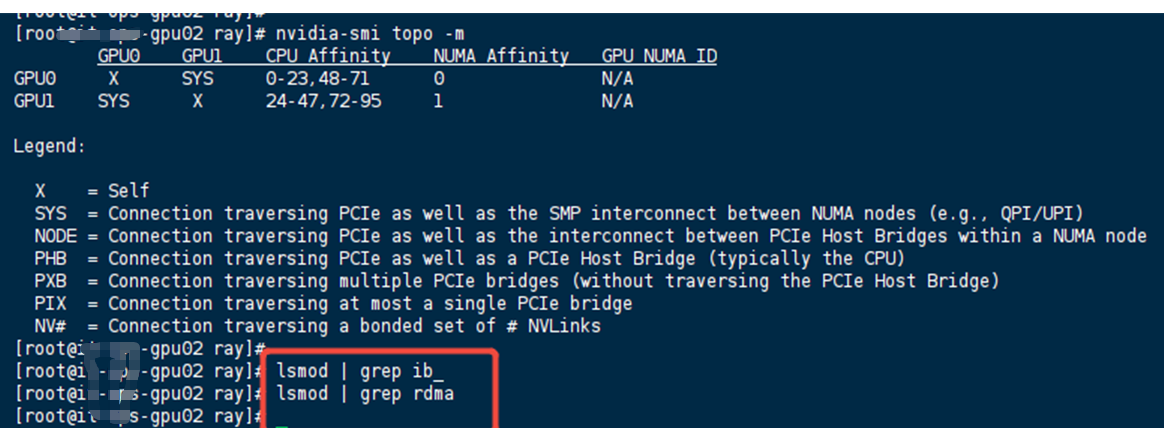

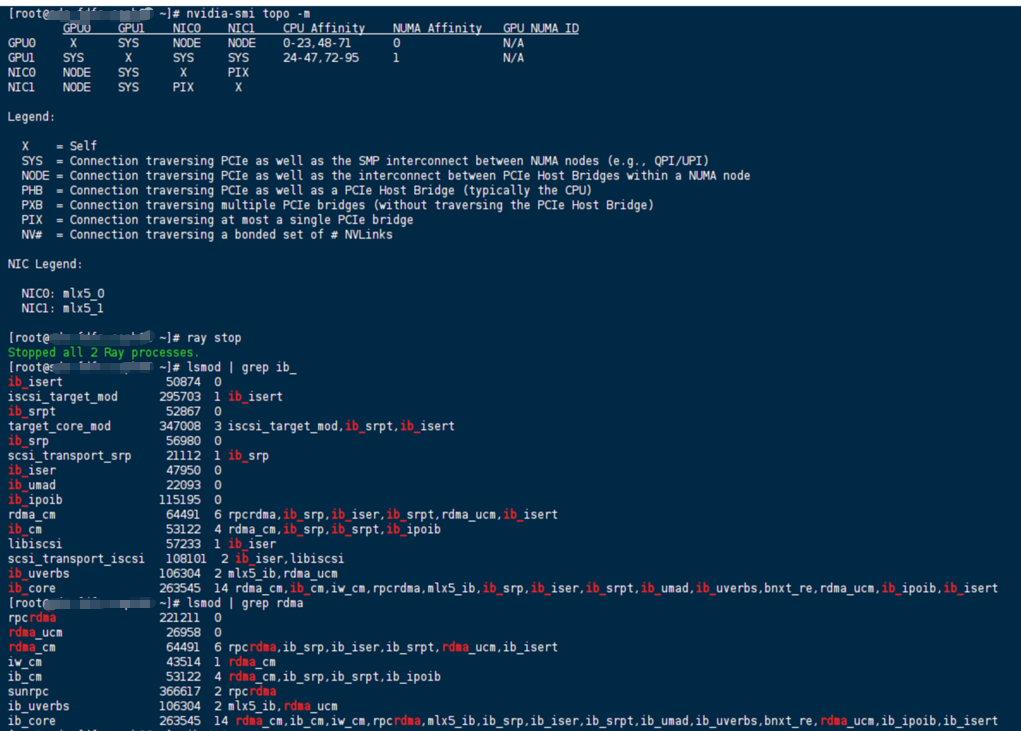

Run the following commands on each of the two nodes:

nvidia-smi topo -m
lsmod | grep ib_
lsmod | grep rdma
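To make the two nodes easier to compare, the module checks can be condensed into one line per node. This is a minimal sketch, assuming a Linux host: it reads `/proc/modules` (the file that `lsmod` formats) and filters module names with the same `ib_` / `rdma` patterns as the commands above, and it additionally lists `/sys/class/infiniband`, where the kernel exposes registered RDMA devices. It does not replace `nvidia-smi topo -m`, which shows the GPU/NIC topology; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: summarize RDMA driver state on this node in a single line so the
# output of two nodes can be compared directly. Assumes a Linux host.
set -euo pipefail

# Loaded kernel modules whose names contain "ib_" or "rdma".
# /proc/modules is what lsmod formats; column 1 is the module name.
rdma_modules() {
  if [ -r /proc/modules ]; then
    awk '{print $1}' /proc/modules | grep -E 'ib_|rdma' | sort || true
  fi
}

# RDMA devices registered with the kernel (e.g. mlx5_0), if any.
rdma_devices() {
  if [ -d /sys/class/infiniband ]; then
    ls /sys/class/infiniband
  fi
}

# Join a newline-separated list into one space-separated line.
one_line() {
  tr '\n' ' ' | sed 's/ *$//'
}

# Print "<hostname>: modules=[...] devices=[...]".
rdma_summary() {
  echo "$(uname -n): modules=[$(rdma_modules | one_line)] devices=[$(rdma_devices | one_line)]"
}

rdma_summary
```

Run it on both nodes and compare the two lines. In the situation described here, one node would report RDMA modules while the other would report none.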

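The results differed between the nodes: one node did not have the RDMA NIC driver installed, while the other did. This mismatch broke communication between the cluster nodes.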

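Before starting Ray and vLLM, run the following commands so that NCCL no longer uses the RDMA NIC. With this change, the vLLM service starts normally: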

export NCCL_NET=Socket    # make NCCL communicate directly over sockets
export NCCL_IB_DISABLE=1  # explicitly disable InfiniBand/RDMA
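The two `export` lines only help if they are in effect where NCCL actually runs, i.e. in the Ray worker processes on each node. The following is a minimal sketch of how they might fit into a two-node launch. The roles, `HEAD_IP`, the model id, the parallel sizes and the `DRY_RUN` switch are hypothetical placeholders, not the author's actual configuration. By default the script only prints the commands it would run. `NCCL_DEBUG=INFO` is an optional extra that makes NCCL log which transport it selects.

```shell
#!/usr/bin/env bash
# Hypothetical launcher sketch: HEAD_IP, MODEL, TP_SIZE, PP_SIZE and DRY_RUN
# are illustrative placeholders, not values from the original setup.
set -euo pipefail

HEAD_IP="${HEAD_IP:-192.0.2.10}"   # hypothetical head-node IP (documentation range)
MODEL="${MODEL:-Qwen/Qwen3-32B}"   # assumed Hugging Face model id
TP_SIZE="${TP_SIZE:-8}"            # hypothetical: GPUs per node
PP_SIZE="${PP_SIZE:-2}"            # hypothetical: one pipeline stage per node
DRY_RUN="${DRY_RUN:-1}"            # 1 = print the commands, 0 = execute them

# Exported in the shell that runs `ray start`, so the Ray worker processes
# started on this node inherit them.
export NCCL_NET=Socket             # use NCCL's built-in TCP socket transport
export NCCL_IB_DISABLE=1           # do not try InfiniBand/RDMA
export NCCL_DEBUG=INFO             # optional: log the transport NCCL picks

# Print or execute a command depending on DRY_RUN.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# head: start the Ray head; worker: join it; serve: start vLLM on the head.
launch() {
  case "$1" in
    head)   run ray start --head --port=6379 ;;
    worker) run ray start --address="${HEAD_IP}:6379" ;;
    serve)  run vllm serve "$MODEL" \
              --tensor-parallel-size "$TP_SIZE" \
              --pipeline-parallel-size "$PP_SIZE" \
              --distributed-executor-backend ray ;;
    *)      echo "usage: $0 head|worker|serve" >&2; return 1 ;;
  esac
}

launch "${1:-head}"
```

Assuming the script is saved under the hypothetical name `launch.sh`, run `bash launch.sh head` on the head node and `bash launch.sh worker` on the other node. Then run `bash launch.sh serve` on the head node, setting `DRY_RUN=0` to actually execute. The NCCL communicators are created inside the Ray workers, so exporting the variables only in the shell that runs `vllm serve` may not be enough. This is presumably why the original advice is to set them before starting Ray.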

浙公网安备 33010602011771号