datax


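Chapter 1 Overview

1.1 What is DataX

DataX is an offline data synchronization tool for heterogeneous data sources, open-sourced by Alibaba. It aims to provide stable and efficient data synchronization between heterogeneous data sources, including relational databases (MySQL, Oracle, and others), HDFS, Hive, ODPS, HBase, and FTP.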

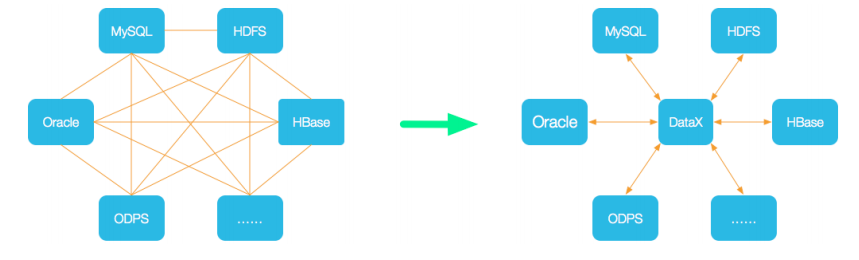

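1.2 DataX design

To solve the heterogeneous data source synchronization problem, DataX turns the complex mesh of point-to-point sync links into a star topology, with DataX as the central transport that connects every data source. To add a new data source, you only need to connect it to DataX, and it can then sync seamlessly with every data source that is already connected.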
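The benefit of the star topology can be shown with a little arithmetic. The sketch below is only an illustration: the function names are hypothetical, and it assumes that a mesh needs one dedicated link per ordered pair of sources, while a star needs one reader and one writer per source.

```python
def mesh_links(n: int) -> int:
    """Directed point-to-point sync links needed among n data sources."""
    return n * (n - 1)


def star_links(n: int) -> int:
    """Links needed when each source only talks to a central hub:
    one reader (source -> hub) and one writer (hub -> source) per source."""
    return 2 * n


for n in (3, 5, 10):
    print(n, mesh_links(n), star_links(n))
```

Under these assumptions, 10 data sources need 90 dedicated links in a mesh but only 20 reader/writer plugins in a star, and adding an eleventh source adds 2 plugins instead of 20 links.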

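1.3 Supported data sources

DataX already has a fairly complete plugin ecosystem: mainstream RDBMS databases, NoSQL stores, and big data computing systems are all supported.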

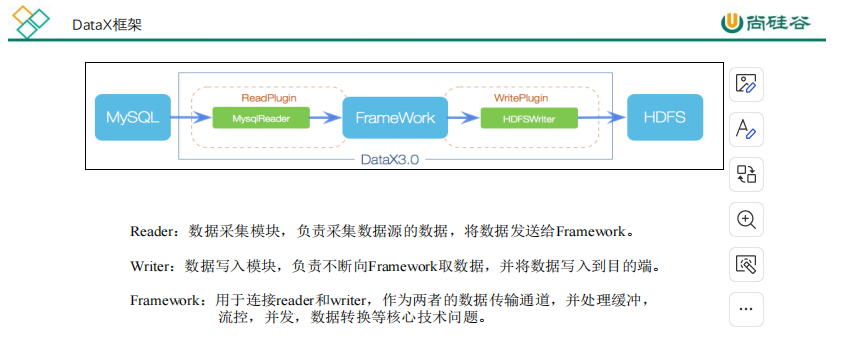

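1.4 Framework design

1.5 How it works

For example, suppose a user submits a DataX job configured with a concurrency of 20 in order to sync a MySQL database sharded into 100 tables to ODPS. DataX schedules the job as follows:

1) The DataX Job is split into 100 Tasks according to the database and table sharding.

2) With a concurrency of 20, DataX calculates that 4 TaskGroups are needed (20 / 5, because each TaskGroup runs 5 concurrent channels).

3) The 4 TaskGroups share the 100 Tasks evenly: each TaskGroup runs 25 Tasks with a concurrency of 5.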
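The scheduling arithmetic above can be sketched in a few lines of Python. This is not DataX's actual scheduler code; the function name is hypothetical, and the value of 5 channels per TaskGroup is taken from the example above.

```python
import math


def plan_task_groups(task_count, concurrency, channels_per_group=5):
    """Return (number of TaskGroups, list of Task counts per TaskGroup)."""
    # Enough TaskGroups to provide the requested concurrency.
    group_count = math.ceil(concurrency / channels_per_group)
    # Spread the Tasks as evenly as possible across the TaskGroups.
    base, extra = divmod(task_count, group_count)
    tasks_per_group = [base + (1 if i < extra else 0) for i in range(group_count)]
    return group_count, tasks_per_group


groups, tasks = plan_task_groups(task_count=100, concurrency=20)
print(groups, tasks)  # 4 [25, 25, 25, 25]
```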

Chapter 2 Quick start

2.1 Official links

Download: http://datax-opensource.oss-cn-hangzhou.aliyuncs.com/datax.tar.gz

Source code: https://github.com/alibaba/DataX

2.2 Prerequisites

- Linux

- JDK (1.8 or later; 1.8 recommended)

- Python (Python 2.6.x recommended)

2.3 Installation

1) Upload the downloaded datax.tar.gz to /opt/software on hadoop102

2) Extract datax.tar.gz to /opt/module

[atguigu@hadoop102 software]$ tar -zxvf datax.tar.gz -C /opt/module/

3) Run the self-check script

[atguigu@hadoop102 bin]$ cd /opt/module/datax/bin/

[atguigu@hadoop102 bin]$ python datax.py /opt/module/datax/job/job.json


[root@hadoop102 bin]# python datax.py /opt/module/datax/job/job.json

DataX (DATAX-OPENSOURCE-3.0), From Alibaba !

Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.

2025-05-02 16:21:43.044 [main] INFO MessageSource - JVM TimeZone: GMT+08:00, Locale: zh_CN

2025-05-02 16:21:43.047 [main] INFO MessageSource - use Locale: zh_CN timeZone: sun.util.calendar.ZoneInfo[id="GMT+08:00",offset=28800000,dstSavings=0,useDaylight=false,transitions=0,lastRule=null]

2025-05-02 16:21:43.076 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl

2025-05-02 16:21:43.087 [main] INFO Engine - the machine info =>

osInfo: Linux amd64 3.10.0-862.el7.x86_64

jvmInfo: Oracle Corporation 1.8 25.141-b15

cpu num: 4

totalPhysicalMemory: -0.00G

freePhysicalMemory: -0.00G

maxFileDescriptorCount: -1

currentOpenFileDescriptorCount: -1

GC Names [PS MarkSweep, PS Scavenge]

MEMORY_NAME | allocation_size | init_size

PS Eden Space | 256.00MB | 256.00MB

Code Cache | 240.00MB | 2.44MB

Compressed Class Space | 1,024.00MB | 0.00MB

PS Survivor Space | 42.50MB | 42.50MB

PS Old Gen | 683.00MB | 683.00MB

Metaspace | -0.00MB | 0.00MB

2025-05-02 16:21:43.110 [main] INFO Engine -

{

"setting":{

"speed":{

"channel":1

},

"errorLimit":{

"record":0,

"percentage":0.02

}

},

"content":[

{

"reader":{

"name":"streamreader",

"parameter":{

"column":[

{

"value":"DataX",

"type":"string"

},

{

"value":19890604,

"type":"long"

},

{

"value":"1989-06-04 00:00:00",

"type":"date"

},

{

"value":true,

"type":"bool"

},

{

"value":"test",

"type":"bytes"

}

],

"sliceRecordCount":100000

}

},

"writer":{

"name":"streamwriter",

"parameter":{

"print":false,

"encoding":"UTF-8"

}

}

}

]

}

2025-05-02 16:21:43.147 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false

2025-05-02 16:21:43.148 [main] INFO JobContainer - DataX jobContainer starts job.

2025-05-02 16:21:43.152 [main] INFO JobContainer - Set jobId = 0

2025-05-02 16:21:43.191 [job-0] INFO JobContainer - jobContainer starts to do prepare ...

2025-05-02 16:21:43.192 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] do prepare work .

2025-05-02 16:21:43.193 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] do prepare work .

2025-05-02 16:21:43.194 [job-0] INFO JobContainer - jobContainer starts to do split ...

2025-05-02 16:21:43.194 [job-0] INFO JobContainer - Job set Channel-Number to 1 channels.

2025-05-02 16:21:43.195 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] splits to [1] tasks.

2025-05-02 16:21:43.196 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] splits to [1] tasks.

2025-05-02 16:21:43.259 [job-0] INFO JobContainer - jobContainer starts to do schedule ...

2025-05-02 16:21:43.265 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups.

2025-05-02 16:21:43.268 [job-0] INFO JobContainer - Running by standalone Mode.

2025-05-02 16:21:43.284 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [1] channels for [1] tasks.

2025-05-02 16:21:43.318 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to -1, No bps activated.

2025-05-02 16:21:43.319 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated.

2025-05-02 16:21:43.354 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started

2025-05-02 16:21:43.556 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] is successed, used[203]ms

2025-05-02 16:21:43.557 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] completed it's tasks.

2025-05-02 16:21:53.306 [job-0] INFO StandAloneJobContainerCommunicator - Total 100000 records, 2600000 bytes | Speed 253.91KB/s, 10000 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.067s | All Task WaitReaderTime 0.103s | Percentage 100.00%

2025-05-02 16:21:53.306 [job-0] INFO AbstractScheduler - Scheduler accomplished all tasks.

2025-05-02 16:21:53.307 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] do post work.

2025-05-02 16:21:53.307 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] do post work.

2025-05-02 16:21:53.307 [job-0] INFO JobContainer - DataX jobId [0] completed successfully.

2025-05-02 16:21:53.308 [job-0] INFO HookInvoker - No hook invoked, because base dir not exists or is a file: /opt/module/datax/hook

2025-05-02 16:21:53.310 [job-0] INFO JobContainer -

[total cpu info] =>

averageCpu | maxDeltaCpu | minDeltaCpu

-1.00% | -1.00% | -1.00%

[total gc info] =>

NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime

PS MarkSweep | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s

PS Scavenge | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s

2025-05-02 16:21:53.310 [job-0] INFO JobContainer - PerfTrace not enable!

2025-05-02 16:21:53.310 [job-0] INFO StandAloneJobContainerCommunicator - Total 100000 records, 2600000 bytes | Speed 253.91KB/s, 10000 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.067s | All Task WaitReaderTime 0.103s | Percentage 100.00%

2025-05-02 16:21:53.311 [job-0] INFO JobContainer -

任务启动时刻 : 2025-05-02 16:21:43

任务结束时刻 : 2025-05-02 16:21:53

任务总计耗时 : 10s

任务平均流量 : 253.91KB/s

记录写入速度 : 10000rec/s

读出记录总数 : 100000

读写失败总数 : 0

[root@hadoop102 bin]#

Chapter 3 DataX usage examples

3.1 Read data from a stream and print it to the console

1) View the configuration template


[root@hadoop102 bin]# python datax.py -r streamreader -w streamwriter

DataX (DATAX-OPENSOURCE-3.0), From Alibaba !

Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.

// See the URL below for the streamreader configuration

Please refer to the streamreader document:

https://github.com/alibaba/DataX/blob/master/streamreader/doc/streamreader.md

// See the URL below for the streamwriter configuration

Please refer to the streamwriter document:

https://github.com/alibaba/DataX/blob/master/streamwriter/doc/streamwriter.md

// Use the command below to run the configuration

Please save the following configuration as a json file and use

python {DATAX_HOME}/bin/datax.py {JSON_FILE_NAME}.json

to run the job.

{

"job": {

"content": [

{

"reader": {

"name": "streamreader",

"parameter": {

"column": [],

"sliceRecordCount": ""

}

},

"writer": {

"name": "streamwriter",

"parameter": {

"encoding": "",

"print": true

}

}

}

],

"setting": {

"speed": {

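// Each unit of concurrency uses one channel

// The speed can also be limited by data volume with byte, for example 100 bytes per second

// Or it can be limited by record count with record, for example 100 records per second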

"channel": ""

}

}

}

}

[root@hadoop102 bin]#

Write the job file stream2stream.json in the /opt/module/datax/job/ directory:

{

"job": {

"content": [

{

"reader": {

"name": "streamreader",

"parameter": {

"column": [

{

"type": "string",

"value": "zhangsan"

},

{

"type": "int",

"value": "18"

}

],

"sliceRecordCount": "10"

}

},

"writer": {

"name": "streamwriter",

"parameter": {

"encoding": "UTF-8",

"print": true

}

}

}

],

"setting": {

"speed": {

"channel": "1"

}

}

}

}

The script may not have execute permission:

[root@hadoop102 datax]# /opt/module/datax/bin/datax.py /opt/module/datax/job/stream2stream.json

-bash: /opt/module/datax/bin/datax.py: 权限不够

Grant execute permission:

[root@hadoop102 datax]# chmod +x bin/*

[root@hadoop102 datax]#

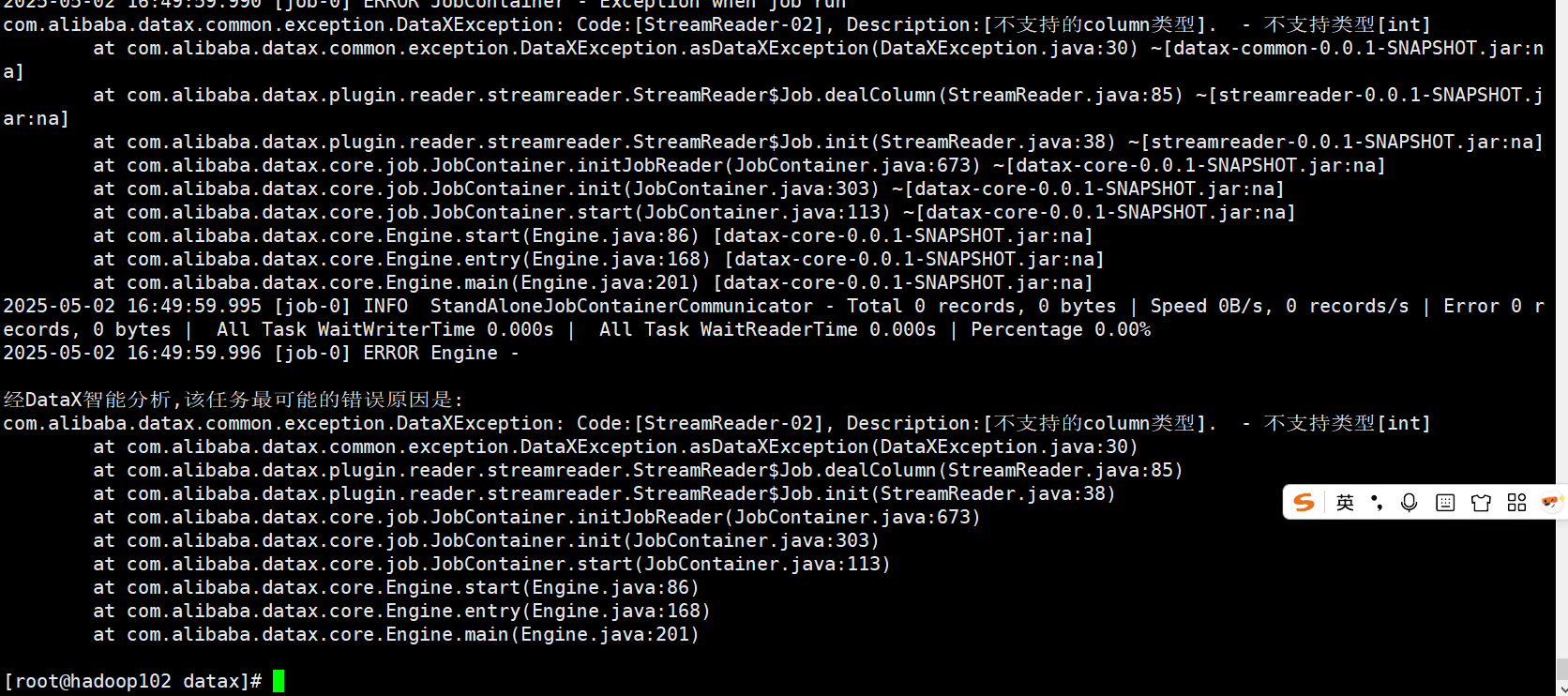

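Mistakes made while writing the job file, such as the one in this run, are detected by DataX.

The error above occurs because we declared the second column as: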

{

"type": "int",

"value": "18"

}

streamreader does not accept int as a column type, so the job fails. The column can be changed to:

{

"type": "string",

"value": "18"

}
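A mistake like this can be caught before the job is submitted. The following is a minimal sketch, not part of DataX: the function name is hypothetical, and the set of accepted type names is an assumption that should be checked against the streamreader document linked above.

```python
import json

# Assumption: the column types streamreader accepts (verify against its document).
SUPPORTED_TYPES = {"string", "long", "double", "bool", "date", "bytes"}


def find_bad_columns(job_json: str):
    """Return (column index, type) pairs for unsupported streamreader column types."""
    job = json.loads(job_json)
    problems = []
    for item in job["job"]["content"]:
        reader = item["reader"]
        if reader["name"] != "streamreader":
            continue
        for index, column in enumerate(reader["parameter"]["column"]):
            col_type = str(column.get("type", "")).lower()
            if col_type not in SUPPORTED_TYPES:
                problems.append((index, column.get("type")))
    return problems


sample = """
{"job": {"content": [{"reader": {"name": "streamreader", "parameter": {
    "column": [{"type": "string", "value": "zhangsan"},
               {"type": "int", "value": "18"}],
    "sliceRecordCount": "10"}},
  "writer": {"name": "streamwriter",
             "parameter": {"encoding": "UTF-8", "print": true}}}],
  "setting": {"speed": {"channel": "1"}}}}
"""
print(find_bad_columns(sample))  # [(1, 'int')]
```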

Run it again:

[root@hadoop102 job]# /opt/module/datax/bin/datax.py /opt/module/datax/job/stream2stream.json

DataX (DATAX-OPENSOURCE-3.0), From Alibaba !

Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.

2025-05-02 17:28:35.686 [main] INFO MessageSource - JVM TimeZone: GMT+08:00, Locale: zh_CN

2025-05-02 17:28:35.689 [main] INFO MessageSource - use Locale: zh_CN timeZone: sun.util.calendar.ZoneInfo[id="GMT+08:00",offset=28800000,dstSavings=0,useDaylight=false,transitions=0,lastRule=null]

2025-05-02 17:28:35.709 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl

2025-05-02 17:28:35.717 [main] INFO Engine - the machine info =>

osInfo: Linux amd64 3.10.0-862.el7.x86_64

jvmInfo: Oracle Corporation 1.8 25.141-b15

cpu num: 4

totalPhysicalMemory: -0.00G

freePhysicalMemory: -0.00G

maxFileDescriptorCount: -1

currentOpenFileDescriptorCount: -1

GC Names [PS MarkSweep, PS Scavenge]

MEMORY_NAME | allocation_size | init_size

PS Eden Space | 256.00MB | 256.00MB

Code Cache | 240.00MB | 2.44MB

Compressed Class Space | 1,024.00MB | 0.00MB

PS Survivor Space | 42.50MB | 42.50MB

PS Old Gen | 683.00MB | 683.00MB

Metaspace | -0.00MB | 0.00MB

2025-05-02 17:28:35.734 [main] INFO Engine -

{

"content":[

{

"reader":{

"name":"streamreader",

"parameter":{

"column":[

{

"type":"string",

"value":"zhangsan"

},

{

"type":"string",

"value":"18"

}

],

"sliceRecordCount":"10"

}

},

"writer":{

"name":"streamwriter",

"parameter":{

"encoding":"UTF-8",

"print":true

}

}

}

],

"setting":{

"speed":{

"channel":"1"

}

}

}

2025-05-02 17:28:35.757 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false

2025-05-02 17:28:35.757 [main] INFO JobContainer - DataX jobContainer starts job.

2025-05-02 17:28:35.759 [main] INFO JobContainer - Set jobId = 0

2025-05-02 17:28:35.779 [job-0] INFO JobContainer - jobContainer starts to do prepare ...

2025-05-02 17:28:35.780 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] do prepare work .

2025-05-02 17:28:35.780 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] do prepare work .

2025-05-02 17:28:35.780 [job-0] INFO JobContainer - jobContainer starts to do split ...

2025-05-02 17:28:35.781 [job-0] INFO JobContainer - Job set Channel-Number to 1 channels.

2025-05-02 17:28:35.781 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] splits to [1] tasks.

2025-05-02 17:28:35.781 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] splits to [1] tasks.

2025-05-02 17:28:35.812 [job-0] INFO JobContainer - jobContainer starts to do schedule ...

2025-05-02 17:28:35.816 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups.

2025-05-02 17:28:35.818 [job-0] INFO JobContainer - Running by standalone Mode.

2025-05-02 17:28:35.826 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [1] channels for [1] tasks.

2025-05-02 17:28:35.846 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to -1, No bps activated.

2025-05-02 17:28:35.846 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated.

2025-05-02 17:28:35.869 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

2025-05-02 17:28:35.970 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] is successed, used[103]ms

2025-05-02 17:28:35.971 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] completed it's tasks.

2025-05-02 17:28:45.856 [job-0] INFO StandAloneJobContainerCommunicator - Total 10 records, 100 bytes | Speed 10B/s, 1 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.003s | Percentage 100.00%

2025-05-02 17:28:45.857 [job-0] INFO AbstractScheduler - Scheduler accomplished all tasks.

2025-05-02 17:28:45.857 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] do post work.

2025-05-02 17:28:45.857 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] do post work.

2025-05-02 17:28:45.857 [job-0] INFO JobContainer - DataX jobId [0] completed successfully.

2025-05-02 17:28:45.858 [job-0] INFO HookInvoker - No hook invoked, because base dir not exists or is a file: /opt/module/datax/hook

2025-05-02 17:28:45.859 [job-0] INFO JobContainer -

[total cpu info] =>

averageCpu | maxDeltaCpu | minDeltaCpu

-1.00% | -1.00% | -1.00%

[total gc info] =>

NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime

PS MarkSweep | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s

PS Scavenge | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s

2025-05-02 17:28:45.859 [job-0] INFO JobContainer - PerfTrace not enable!

2025-05-02 17:28:45.859 [job-0] INFO StandAloneJobContainerCommunicator - Total 10 records, 100 bytes | Speed 10B/s, 1 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.003s | Percentage 100.00%

2025-05-02 17:28:45.860 [job-0] INFO JobContainer -

任务启动时刻 : 2025-05-02 17:28:35

任务结束时刻 : 2025-05-02 17:28:45

任务总计耗时 : 10s

任务平均流量 : 10B/s

记录写入速度 : 1rec/s

读出记录总数 : 10

读写失败总数 : 0

[root@hadoop102 job]#

Now change channel to 2 and run it again:

[root@hadoop102 job]# vi stream2stream.json

[root@hadoop102 job]# /opt/module/datax/bin/datax.py /opt/module/datax/job/stream2stream.json

DataX (DATAX-OPENSOURCE-3.0), From Alibaba !

Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.

2025-05-02 17:31:05.601 [main] INFO MessageSource - JVM TimeZone: GMT+08:00, Locale: zh_CN

2025-05-02 17:31:05.603 [main] INFO MessageSource - use Locale: zh_CN timeZone: sun.util.calendar.ZoneInfo[id="GMT+08:00",offset=28800000,dstSavings=0,useDaylight=false,transitions=0,lastRule=null]

2025-05-02 17:31:05.621 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl

2025-05-02 17:31:05.630 [main] INFO Engine - the machine info =>

osInfo: Linux amd64 3.10.0-862.el7.x86_64

jvmInfo: Oracle Corporation 1.8 25.141-b15

cpu num: 4

totalPhysicalMemory: -0.00G

freePhysicalMemory: -0.00G

maxFileDescriptorCount: -1

currentOpenFileDescriptorCount: -1

GC Names [PS MarkSweep, PS Scavenge]

MEMORY_NAME | allocation_size | init_size

PS Eden Space | 256.00MB | 256.00MB

Code Cache | 240.00MB | 2.44MB

Compressed Class Space | 1,024.00MB | 0.00MB

PS Survivor Space | 42.50MB | 42.50MB

PS Old Gen | 683.00MB | 683.00MB

Metaspace | -0.00MB | 0.00MB

2025-05-02 17:31:05.641 [main] INFO Engine -

{

"content":[

{

"reader":{

"name":"streamreader",

"parameter":{

"column":[

{

"type":"string",

"value":"zhangsan"

},

{

"type":"string",

"value":"18"

}

],

"sliceRecordCount":"10"

}

},

"writer":{

"name":"streamwriter",

"parameter":{

"encoding":"UTF-8",

"print":true

}

}

}

],

"setting":{

"speed":{

"channel":"2"

}

}

}

2025-05-02 17:31:05.663 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false

2025-05-02 17:31:05.663 [main] INFO JobContainer - DataX jobContainer starts job.

2025-05-02 17:31:05.665 [main] INFO JobContainer - Set jobId = 0

2025-05-02 17:31:05.684 [job-0] INFO JobContainer - jobContainer starts to do prepare ...

2025-05-02 17:31:05.685 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] do prepare work .

2025-05-02 17:31:05.686 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] do prepare work .

2025-05-02 17:31:05.686 [job-0] INFO JobContainer - jobContainer starts to do split ...

2025-05-02 17:31:05.686 [job-0] INFO JobContainer - Job set Channel-Number to 2 channels.

2025-05-02 17:31:05.686 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] splits to [2] tasks.

2025-05-02 17:31:05.687 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] splits to [2] tasks.

2025-05-02 17:31:05.720 [job-0] INFO JobContainer - jobContainer starts to do schedule ...

2025-05-02 17:31:05.724 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups.

2025-05-02 17:31:05.727 [job-0] INFO JobContainer - Running by standalone Mode.

2025-05-02 17:31:05.739 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [2] channels for [2] tasks.

2025-05-02 17:31:05.753 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to -1, No bps activated.

2025-05-02 17:31:05.753 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated.

2025-05-02 17:31:05.769 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[1] attemptCount[1] is started

2025-05-02 17:31:05.775 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

zhangsan 18

2025-05-02 17:31:05.877 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] is successed, used[103]ms

2025-05-02 17:31:05.877 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[1] is successed, used[109]ms

2025-05-02 17:31:05.878 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] completed it's tasks.

2025-05-02 17:31:15.755 [job-0] INFO StandAloneJobContainerCommunicator - Total 20 records, 200 bytes | Speed 20B/s, 2 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%

2025-05-02 17:31:15.756 [job-0] INFO AbstractScheduler - Scheduler accomplished all tasks.

2025-05-02 17:31:15.756 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] do post work.

2025-05-02 17:31:15.756 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] do post work.

2025-05-02 17:31:15.756 [job-0] INFO JobContainer - DataX jobId [0] completed successfully.

2025-05-02 17:31:15.757 [job-0] INFO HookInvoker - No hook invoked, because base dir not exists or is a file: /opt/module/datax/hook

2025-05-02 17:31:15.758 [job-0] INFO JobContainer -

[total cpu info] =>

averageCpu | maxDeltaCpu | minDeltaCpu

-1.00% | -1.00% | -1.00%

[total gc info] =>

NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime

PS MarkSweep | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s

PS Scavenge | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s

2025-05-02 17:31:15.759 [job-0] INFO JobContainer - PerfTrace not enable!

2025-05-02 17:31:15.759 [job-0] INFO StandAloneJobContainerCommunicator - Total 20 records, 200 bytes | Speed 20B/s, 2 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%

2025-05-02 17:31:15.760 [job-0] INFO JobContainer -

任务启动时刻 : 2025-05-02 17:31:05

任务结束时刻 : 2025-05-02 17:31:15

任务总计耗时 : 10s

任务平均流量 : 20B/s

记录写入速度 : 2rec/s

读出记录总数 : 20

读写失败总数 : 0

[root@hadoop102 job]#
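Comparing the two runs: with channel set to 1 the job printed 10 records, and with channel set to 2 it printed 20, because the reader was split into 2 tasks and each task emitted sliceRecordCount records. A minimal sketch of that relationship, based only on the logs above (the function name is hypothetical):

```python
def expected_stream_records(channel: int, slice_record_count: int) -> int:
    """Records a streamreader job emits when it is split into one task per channel."""
    return channel * slice_record_count


print(expected_stream_records(1, 10))  # 10, matches the first run
print(expected_stream_records(2, 10))  # 20, matches the second run
```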

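3.2 MySQL to MySQL

Note: for simplicity, this example exports data from one MySQL database to another MySQL database. You can equally export data from one database to a database of a different type.

3.2.1 View the template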

[root@hadoop102 datax]# bin/datax.py -r mysqlreader -w mysqlwriter

DataX (DATAX-OPENSOURCE-3.0), From Alibaba !

Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.

Please refer to the mysqlreader document:

https://github.com/alibaba/DataX/blob/master/mysqlreader/doc/mysqlreader.md

Please refer to the mysqlwriter document:

https://github.com/alibaba/DataX/blob/master/mysqlwriter/doc/mysqlwriter.md

Please save the following configuration as a json file and use

python {DATAX_HOME}/bin/datax.py {JSON_FILE_NAME}.json

to run the job.

{

"job": {

"content": [

{

"reader": {

"name": "mysqlreader",

"parameter": {

"column": [],

"connection": [

{

"jdbcUrl": [],

"table": []

}

],

"password": "",

"username": "",

"where": ""

}

},

"writer": {

"name": "mysqlwriter",

"parameter": {

"column": [],

"connection": [

{

"jdbcUrl": "",

"table": []

}

],

"password": "",

"preSql": [],

"session": [],

"username": "",

"writeMode": ""

}

}

}

],

"setting": {

"speed": {

"channel": ""

}

}

}

}

3.2.2 Write the DataX job file

[root@hadoop102 datax]# vi mysql2mysql.json

{

"job": {

"content": [

{

"reader": {

"name": "mysqlreader",

"parameter": {

"column": ["*"],

"connection": [

{

"jdbcUrl": ["jdbc:mysql://192.168.18.128:3306/test"],

"table": ["USERS"]

}

],

"password": "123456",

"username": "root",

"where": ""

}

},

"writer": {

"name": "mysqlwriter",

"parameter": {

"column": ["*"],

"connection": [

{

"jdbcUrl": "jdbc:mysql://192.168.18.128:3306/mytest",

"table": ["USERS"]

}

],

"password": "123456",

"preSql": [],

"session": [],

"username": "root",

"writeMode": "insert"

}

}

}

],

"setting": {

"speed": {

"channel": "1"

}

}

}

}
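When this job runs, DataX logs a warning that using "*" for the columns is risky if the table structure changes. One option is to generate the job file with an explicit column list. The sketch below is an illustration only: the helper name is hypothetical, and the column names user_id, username, and email are taken from the run log in section 3.2.4.

```python
import json


def build_mysql_job(src_url, dst_url, table, columns, username, password, channel=1):
    """Build a mysqlreader -> mysqlwriter job dict with explicit columns."""
    reader = {
        "name": "mysqlreader",
        "parameter": {
            "column": list(columns),
            # mysqlreader takes a list of JDBC URLs.
            "connection": [{"jdbcUrl": [src_url], "table": [table]}],
            "username": username,
            "password": password,
            "where": "",
        },
    }
    writer = {
        "name": "mysqlwriter",
        "parameter": {
            "column": list(columns),
            # mysqlwriter takes a single JDBC URL string.
            "connection": [{"jdbcUrl": dst_url, "table": [table]}],
            "username": username,
            "password": password,
            "preSql": [],
            "session": [],
            "writeMode": "insert",
        },
    }
    return {
        "job": {
            "content": [{"reader": reader, "writer": writer}],
            "setting": {"speed": {"channel": str(channel)}},
        }
    }


job = build_mysql_job(
    "jdbc:mysql://192.168.18.128:3306/test",
    "jdbc:mysql://192.168.18.128:3306/mytest",
    "users",
    ["user_id", "username", "email"],
    "root",
    "123456",
)
print(json.dumps(job, indent=4))
```

The printed JSON can be saved as a job file and run the same way as the hand-written one.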

3.2.3 Run the job

/opt/module/datax/bin/datax.py /opt/module/datax/job/mysql2mysql.json

3.2.4 Run output

[root@hadoop102 job]# /opt/module/datax/bin/datax.py /opt/module/datax/job/mysql2mysql.json

DataX (DATAX-OPENSOURCE-3.0), From Alibaba !

Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.

2025-05-05 22:13:01.852 [main] INFO MessageSource - JVM TimeZone: GMT+08:00, Locale: zh_CN

2025-05-05 22:13:01.859 [main] INFO MessageSource - use Locale: zh_CN timeZone: sun.util.calendar.ZoneInfo[id="GMT+08:00",offset=28800000,dstSavings=0,useDaylight=false,transitions=0,lastRule=null]

2025-05-05 22:13:01.876 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl

2025-05-05 22:13:01.884 [main] INFO Engine - the machine info =>

osInfo: Linux amd64 3.10.0-1160.119.1.el7.x86_64

jvmInfo: Oracle Corporation 1.8 25.141-b15

cpu num: 4

totalPhysicalMemory: -0.00G

freePhysicalMemory: -0.00G

maxFileDescriptorCount: -1

currentOpenFileDescriptorCount: -1

GC Names [PS MarkSweep, PS Scavenge]

MEMORY_NAME | allocation_size | init_size

PS Eden Space | 256.00MB | 256.00MB

Code Cache | 240.00MB | 2.44MB

Compressed Class Space | 1,024.00MB | 0.00MB

PS Survivor Space | 42.50MB | 42.50MB

PS Old Gen | 683.00MB | 683.00MB

Metaspace | -0.00MB | 0.00MB

2025-05-05 22:13:01.906 [main] INFO Engine -

{

"content":[

{

"reader":{

"name":"mysqlreader",

"parameter":{

"column":[

"*"

],

"connection":[

{

"jdbcUrl":[

"jdbc:mysql://192.168.18.128:3306/test"

],

"table":[

"users"

]

}

],

"password":"******",

"username":"root",

"where":""

}

},

"writer":{

"name":"mysqlwriter",

"parameter":{

"column":[

"*"

],

"connection":[

{

"jdbcUrl":"jdbc:mysql://192.168.18.128:3306/mytest",

"table":[

"users"

]

}

],

"password":"******",

"preSql":[

],

"session":[

],

"username":"root",

"writeMode":"insert"

}

}

}

],

"setting":{

"speed":{

"channel":"1"

}

}

}

2025-05-05 22:13:01.942 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false

2025-05-05 22:13:01.942 [main] INFO JobContainer - DataX jobContainer starts job.

2025-05-05 22:13:01.948 [main] INFO JobContainer - Set jobId = 0

Mon May 05 22:13:02 GMT+08:00 2025 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

2025-05-05 22:13:03.554 [job-0] INFO OriginalConfPretreatmentUtil - Available jdbcUrl:jdbc:mysql://192.168.18.128:3306/test?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true.

2025-05-05 22:13:03.557 [job-0] WARN OriginalConfPretreatmentUtil - 您的配置文件中的列配置存在一定的风险. 因为您未配置读取数据库表的列,当您的表字段个数、类型有变动时,可能影响任务正确性甚至会运行出错。请检查您的配置并作出修改.

Mon May 05 22:13:03 GMT+08:00 2025 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

2025-05-05 22:13:04.344 [job-0] INFO OriginalConfPretreatmentUtil - table:[users] all columns:[

user_id,username,email

].

2025-05-05 22:13:04.344 [job-0] WARN OriginalConfPretreatmentUtil - 您的配置文件中的列配置信息存在风险. 因为您配置的写入数据库表的列为*,当您的表字段个数、类型有变动时,可能影响任务正确性甚至会运行出错。请检查您的配置并作出修改.

2025-05-05 22:13:04.348 [job-0] INFO OriginalConfPretreatmentUtil - Write data [

insert INTO %s (user_id,username,email) VALUES(?,?,?)

], which jdbcUrl like:[jdbc:mysql://192.168.18.128:3306/mytest?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&rewriteBatchedStatements=true&tinyInt1isBit=false]

2025-05-05 22:13:04.349 [job-0] INFO JobContainer - jobContainer starts to do prepare ...

2025-05-05 22:13:04.350 [job-0] INFO JobContainer - DataX Reader.Job [mysqlreader] do prepare work .

2025-05-05 22:13:04.351 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] do prepare work .

2025-05-05 22:13:04.353 [job-0] INFO JobContainer - jobContainer starts to do split ...

2025-05-05 22:13:04.355 [job-0] INFO JobContainer - Job set Channel-Number to 1 channels.

2025-05-05 22:13:04.367 [job-0] INFO JobContainer - DataX Reader.Job [mysqlreader] splits to [1] tasks.

2025-05-05 22:13:04.369 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] splits to [1] tasks.

2025-05-05 22:13:04.454 [job-0] INFO JobContainer - jobContainer starts to do schedule ...

2025-05-05 22:13:04.462 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups.

2025-05-05 22:13:04.467 [job-0] INFO JobContainer - Running by standalone Mode.

2025-05-05 22:13:04.481 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [1] channels for [1] tasks.

2025-05-05 22:13:04.513 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to -1, No bps activated.

2025-05-05 22:13:04.513 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated.

2025-05-05 22:13:04.554 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started

2025-05-05 22:13:04.602 [0-0-0-reader] INFO CommonRdbmsReader$Task - Begin to read record by Sql: [select * from users

] jdbcUrl:[jdbc:mysql://192.168.18.128:3306/test?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true].

Mon May 05 22:13:04 GMT+08:00 2025 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Mon May 05 22:13:04 GMT+08:00 2025 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Mon May 05 22:13:04 GMT+08:00 2025 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

2025-05-05 22:13:04.738 [0-0-0-reader] INFO CommonRdbmsReader$Task - Finished read record by Sql: [select * from users

] jdbcUrl:[jdbc:mysql://192.168.18.128:3306/test?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true].

2025-05-05 22:13:04.865 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] is successed, used[316]ms

2025-05-05 22:13:04.868 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] completed it's tasks.

2025-05-05 22:13:14.517 [job-0] INFO StandAloneJobContainerCommunicator - Total 4 records, 133 bytes | Speed 13B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%

2025-05-05 22:13:14.519 [job-0] INFO AbstractScheduler - Scheduler accomplished all tasks.

2025-05-05 22:13:14.520 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] do post work.

2025-05-05 22:13:14.522 [job-0] INFO JobContainer - DataX Reader.Job [mysqlreader] do post work.

2025-05-05 22:13:14.523 [job-0] INFO JobContainer - DataX jobId [0] completed successfully.

2025-05-05 22:13:14.526 [job-0] INFO HookInvoker - No hook invoked, because base dir not exists or is a file: /opt/module/datax/hook

2025-05-05 22:13:14.533 [job-0] INFO JobContainer -

[total cpu info] =>

averageCpu | maxDeltaCpu | minDeltaCpu

-1.00% | -1.00% | -1.00%

[total gc info] =>

NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime

PS MarkSweep | 1 | 1 | 1 | 0.132s | 0.132s | 0.132s

PS Scavenge | 1 | 1 | 1 | 0.022s | 0.022s | 0.022s

2025-05-05 22:13:14.534 [job-0] INFO JobContainer - PerfTrace not enable!

2025-05-05 22:13:14.537 [job-0] INFO StandAloneJobContainerCommunicator - Total 4 records, 133 bytes | Speed 13B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%

2025-05-05 22:13:14.541 [job-0] INFO JobContainer -

任务启动时刻 : 2025-05-05 22:13:01

任务结束时刻 : 2025-05-05 22:13:14

任务总计耗时 : 12s

任务平均流量 : 13B/s

记录写入速度 : 0rec/s

读出记录总数 : 4

读写失败总数 : 0

[root@hadoop102 job]#
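If the job is launched from a script, the summary line of the log can be parsed to check the record and error counts. This is a minimal sketch that assumes the summary line keeps the format shown in the output above; the pattern and function name are not part of DataX.

```python
import re

# Matches the "Total ... | Error ..." summary line printed by DataX.
SUMMARY_PATTERN = re.compile(
    r"Total (?P<records>\d+) records, (?P<bytes>\d+) bytes \| .*?"
    r"Error (?P<error_records>\d+) records, (?P<error_bytes>\d+) bytes"
)


def parse_summary(line: str):
    """Return the counters from a summary line as ints, or None if it does not match."""
    match = SUMMARY_PATTERN.search(line)
    if match is None:
        return None
    return {key: int(value) for key, value in match.groupdict().items()}


line = (
    "2025-05-05 22:13:14.537 [job-0] INFO StandAloneJobContainerCommunicator - "
    "Total 4 records, 133 bytes | Speed 13B/s, 0 records/s | "
    "Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | "
    "All Task WaitReaderTime 0.000s | Percentage 100.00%"
)
print(parse_summary(line))
```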

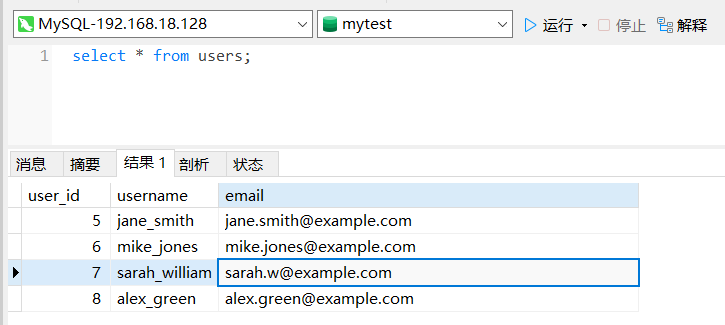

查看结果:

第4章 java整合datax

参考网址:https://cloud.tencent.com/developer/article/1895562

4.1.0 去datax官网下载datax.tar.gz

下载完成之后,我们会得到一个datax.tar.gz的文件,解压缩之后,在lib文件夹中找到datax-common-0.0.1-SNAPSHOT.jar 和 datax-core-0.0.1-SNAPSHOT.jar这两个文件。

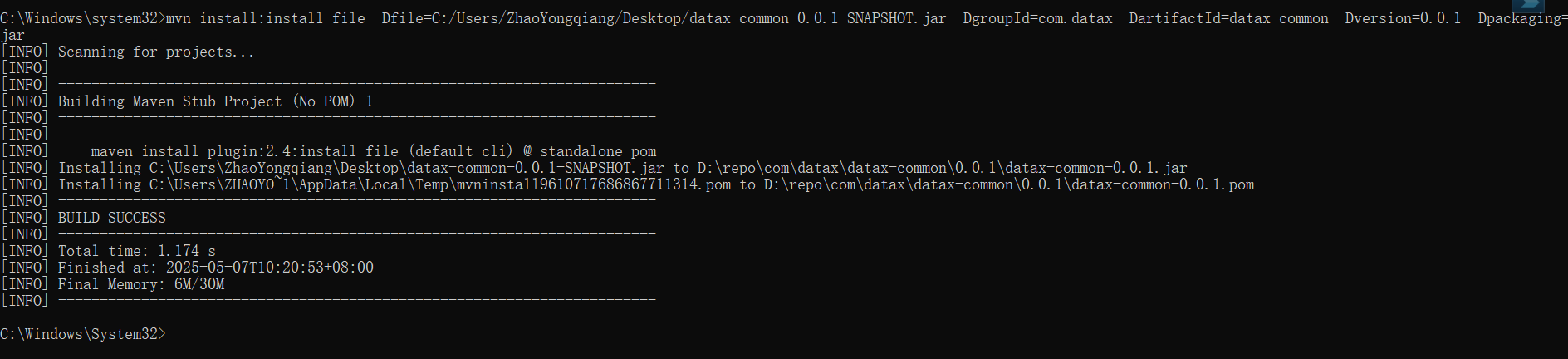

4.1.1 将下面这两个jar包安装到本地maven仓库中

执行下面这两条命令:

mvn install:install-file -Dfile=C:/Users/ZhaoYongqiang/Desktop/datax-common-0.0.1-SNAPSHOT.jar -DgroupId=com.datax -DartifactId=datax-common -Dversion=0.0.1 -Dpackaging=jar

mvn install:install-file -Dfile=C:/Users/ZhaoYongqiang/Desktop/datax-core-0.0.1-SNAPSHOT.jar -DgroupId=com.datax -DartifactId=datax-core -Dversion=0.0.1 -Dpackaging=jar

4.1.2 Create a Maven project

I created a Spring Boot project here.

Add the DataX dependencies to pom.xml.

<!-- DataX dependencies -->

<dependency>

<groupId>com.datax</groupId>

<artifactId>datax-core</artifactId>

<version>0.0.1</version>

</dependency>

<dependency>

<groupId>com.datax</groupId>

<artifactId>datax-common</artifactId>

<version>0.0.1</version>

</dependency>

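Besides these two dependencies, DataX also relies on the following ones, which must be added as well:

<!-- These dependencies are also required; otherwise errors occur -->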

<dependency>

<groupId>commons-cli</groupId>

<artifactId>commons-cli</artifactId>

<version>1.4</version>

</dependency>

<dependency>

<groupId>org.apache.httpcomponents</groupId>

<artifactId>httpclient</artifactId>

<version>4.5.13</version>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-io</artifactId>

<version>1.3.2</version>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-lang3</artifactId>

<version>3.12.0</version>

</dependency>

<dependency>

<groupId>commons-lang</groupId>

<artifactId>commons-lang</artifactId>

<version>2.6</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.60</version>

</dependency>

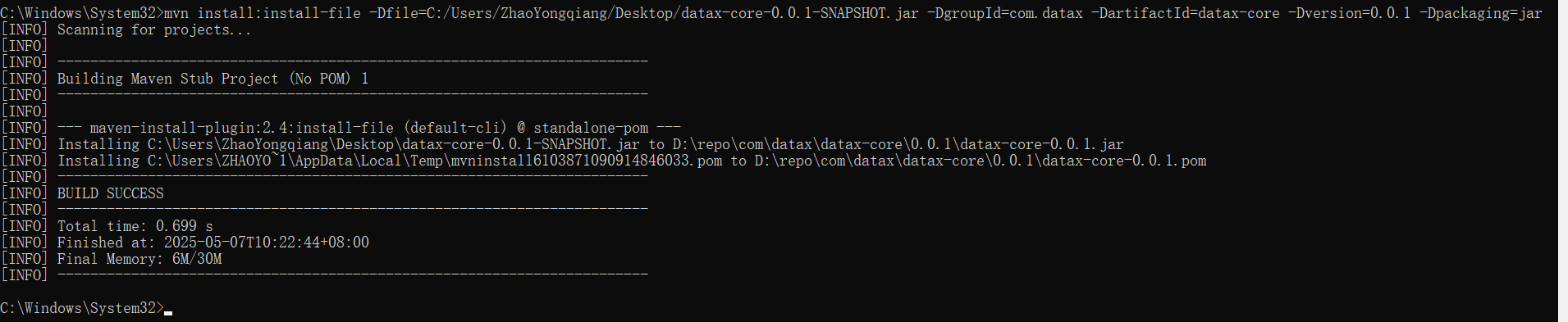

4.1.3 Write the test class and the job JSON file

package com.example.datax_demo;

import com.alibaba.datax.core.Engine;

public class TestMain {

    public static String getCurrentClasspath() {
        ClassLoader classLoader = Thread.currentThread().getContextClassLoader();
        String currentClasspath = classLoader.getResource("").getPath();
        // Current operating system
        String osName = System.getProperty("os.name");
        if (osName.startsWith("Win")) {
            // On Windows the path looks like "/D:/.../classes/": drop the leading and the trailing "/"
            currentClasspath = currentClasspath.substring(1, currentClasspath.length() - 1);
        }
        return currentClasspath;
    }

    public static void main(String[] args) {
        // Directory where datax.tar.gz was extracted
        System.setProperty("datax.home", "D:\\softwareAdd\\datax\\datax");
        String[] dataxArgs = {"-job", getCurrentClasspath() + "/datax/test.json", "-mode", "standalone", "-jobid", "-1"};
        try {
            Engine.entry(dataxArgs);
        } catch (Throwable e) {
            e.printStackTrace();
        }
    }
}
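The helper above trims the path by hand on Windows, and `URL.getPath()` leaves characters such as spaces percent-encoded, so a project directory with a space in its name would probably produce a path DataX cannot open. The following is a minimal sketch of an alternative that converts the URL through a URI instead. The class name `ClasspathLocator` and its methods are hypothetical and not part of DataX or the original tutorial; it also assumes the classpath root is a plain directory (not a JAR).

```java
import java.net.URISyntaxException;
import java.net.URL;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper, not part of DataX.
final class ClasspathLocator {

    /** Resolves "relative" against a file: URL, decoding %20 and similar escapes. */
    static Path resolve(URL root, String relative) {
        try {
            // Paths.get(URI) also copes with the Windows "/D:/..." form.
            return Paths.get(root.toURI()).resolve(relative);
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException("not a valid URI: " + root, e);
        }
    }

    /** Resolves "relative" against the classpath root of the current thread. */
    static Path resolveFromClasspath(String relative) {
        URL root = Thread.currentThread().getContextClassLoader().getResource("");
        if (root == null) {
            throw new IllegalStateException("classpath root not found");
        }
        return resolve(root, relative);
    }

    public static void main(String[] args) {
        // Same relative location as in TestMain.
        System.out.println(resolveFromClasspath("datax/test.json"));
    }
}
```

The resulting `Path` can be passed to the `-job` argument via `toString()` on either Windows or Linux, without the `os.name` check.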

Next, write a test.json file (its location is shown in the image):

{
    "job": {
        "content": [
            {
                "reader": {
                    "name": "mysqlreader",
                    "parameter": {
                        "column": ["*"],
                        "connection": [
                            {
                                "jdbcUrl": ["jdbc:mysql://192.168.18.128:3306/test"],
                                "table": ["users"]
                            }
                        ],
                        "password": "123456",
                        "username": "root",
                        "where": ""
                    }
                },
                "writer": {
                    "name": "mysqlwriter",
                    "parameter": {
                        "column": ["*"],
                        "connection": [
                            {
                                "jdbcUrl": "jdbc:mysql://192.168.18.128:3306/mytest",
                                "table": ["users"]
                            }
                        ],
                        "password": "123456",
                        "preSql": [],
                        "session": [],
                        "username": "root",
                        "writeMode": "insert"
                    }
                }
            }
        ],
        "setting": {
            "speed": {
                "channel": "1"
            }
        }
    }
}

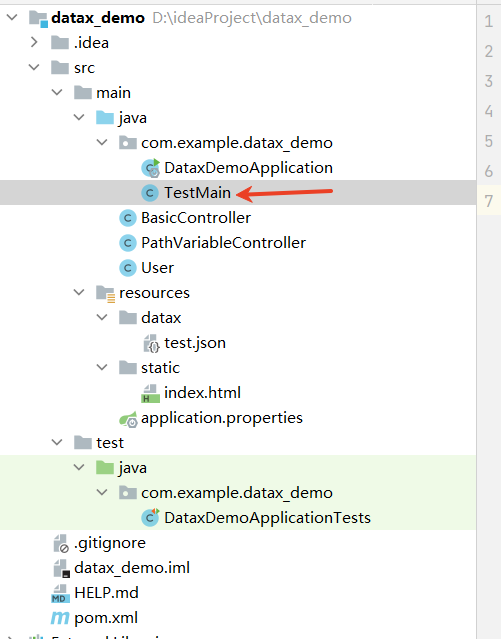

4.1.4 Extract the datax.tar.gz downloaded from the official GitHub site to a path on drive D

4.1.5 Run the test class

Errors encountered:

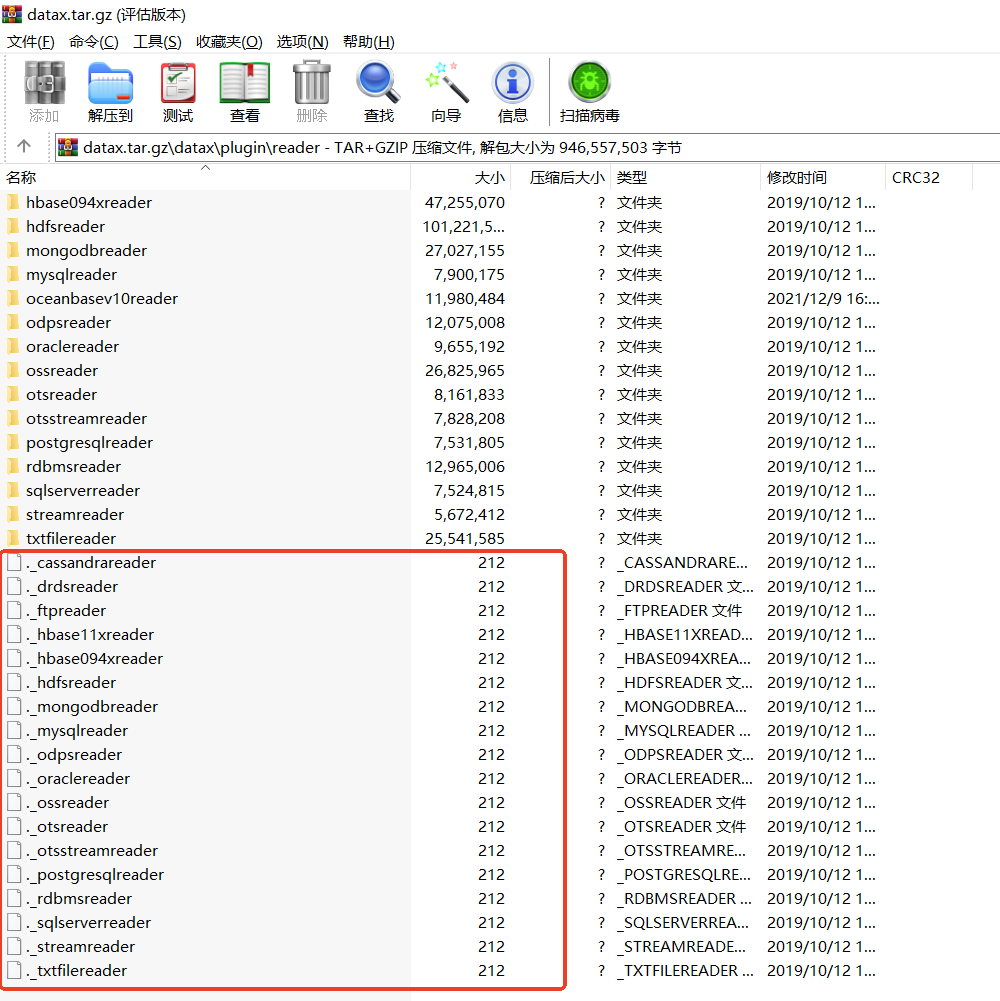

15:45:28.175 [main] WARN com.alibaba.datax.core.util.ConfigParser - 插件[mysqlreader,mysqlwriter]加载失败,1s后重试... Exception:Code:[Common-00], Describe:[您提供的配置文件存在错误信息,请检查您的作业配置 .] - 配置信息错误,您提供的配置文件[D:\softwareAdd\datax\datax\plugin\reader\._cassandrareader\plugin.json]不存在. 请检查您的配置文件.

com.alibaba.datax.common.exception.DataXException: Code:[Common-00], Describe:[您提供的配置文件存在错误信息,请检查您的作业配置 .] - 配置信息错误,您提供的配置文件[D:\softwareAdd\datax\datax\plugin\reader\._cassandrareader\plugin.json]不存在. 请检查您的配置文件.

at com.alibaba.datax.common.exception.DataXException.asDataXException(DataXException.java:26)

at com.alibaba.datax.common.util.Configuration.from(Configuration.java:95)

at com.alibaba.datax.core.util.ConfigParser.parseOnePluginConfig(ConfigParser.java:153)

at com.alibaba.datax.core.util.ConfigParser.parsePluginConfig(ConfigParser.java:125)

at com.alibaba.datax.core.util.ConfigParser.parse(ConfigParser.java:63)

at com.alibaba.datax.core.Engine.entry(Engine.java:137)

at com.example.datax_demo.TestMain.main(TestMain.java:22)

So I deleted the cassandrareader-related files under D:\softwareAdd\datax\datax\plugin\reader\.

The next error followed immediately:

15:51:28.134 [main] WARN com.alibaba.datax.core.util.ConfigParser - 插件[mysqlreader,mysqlwriter]加载失败,1s后重试... Exception:Code:[Common-00], Describe:[您提供的配置文件存在错误信息,请检查您的作业配置 .] - 配置信息错误,您提供的配置文件[D:\softwareAdd\datax\datax\plugin\reader\._drdsreader\plugin.json]不存在. 请检查您的配置文件.

com.alibaba.datax.common.exception.DataXException: Code:[Common-00], Describe:[您提供的配置文件存在错误信息,请检查您的作业配置 .] - 配置信息错误,您提供的配置文件[D:\softwareAdd\datax\datax\plugin\reader\._drdsreader\plugin.json]不存在. 请检查您的配置文件.

at com.alibaba.datax.common.exception.DataXException.asDataXException(DataXException.java:26)

at com.alibaba.datax.common.util.Configuration.from(Configuration.java:95)

at com.alibaba.datax.core.util.ConfigParser.parseOnePluginConfig(ConfigParser.java:153)

at com.alibaba.datax.core.util.ConfigParser.parsePluginConfig(ConfigParser.java:125)

at com.alibaba.datax.core.util.ConfigParser.parse(ConfigParser.java:63)

at com.alibaba.datax.core.Engine.entry(Engine.java:137)

at com.example.datax_demo.TestMain.main(TestMain.java:22)

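If you hit the errors above, the fix is to delete every file whose name starts with "." under the extracted reader and writer directories. The "._" prefix is what macOS uses for its metadata companion files, so these entries were most likely packed into the archive on a Mac. Judging by the error message, DataX treats every entry in these directories as a plugin and fails because no plugin.json exists for them.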
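Deleting the stray entries one error at a time is tedious, so the cleanup can be done in one pass. This is a minimal sketch, assuming the offending entries are regular files named `._*` that sit directly under `plugin/reader` and `plugin/writer`; the class name `DotUnderscoreCleaner` is hypothetical.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of DataX.
final class DotUnderscoreCleaner {

    /** Deletes regular files named "._*" directly under dir and returns them. */
    static List<Path> clean(Path dir) throws IOException {
        List<Path> targets = new ArrayList<>();
        // Collect first, then delete, so the directory is not modified while it is listed.
        try (DirectoryStream<Path> entries = Files.newDirectoryStream(dir, "._*")) {
            for (Path entry : entries) {
                if (Files.isRegularFile(entry)) {
                    targets.add(entry);
                }
            }
        }
        for (Path target : targets) {
            Files.delete(target);
        }
        return targets;
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("usage: DotUnderscoreCleaner <datax.home>");
            return;
        }
        Path plugin = Paths.get(args[0]).resolve("plugin");
        for (String kind : new String[] {"reader", "writer"}) {
            for (Path deleted : clean(plugin.resolve(kind))) {
                System.out.println("deleted " + deleted);
            }
        }
    }
}
```

Real plugin directories such as `mysqlreader` are left untouched because only regular files matching the `._*` pattern are removed.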

With that fixed, a new problem appeared (my database connection settings were definitely correct):

15:55:35.645 [job-0] WARN com.alibaba.datax.plugin.rdbms.util.DBUtil - test connection of [jdbc:mysql://192.168.18.128:3306/test] failed, for Code:[DBUtilErrorCode-10], Description:[连接数据库失败. 请检查您的 账号、密码、数据库名称、IP、Port或者向 DBA 寻求帮助(注意网络环境).]. - 具体错误信息为:com.mysql.jdbc.exceptions.jdbc4.MySQLNonTransientConnectionException: Could not create connection to database server..

15:55:35.653 [job-0] ERROR com.alibaba.datax.common.util.RetryUtil - Exception when calling callable, 异常Msg:DataX无法连接对应的数据库,可能原因是:1) 配置的ip/port/database/jdbc错误,无法连接。2) 配置的username/password错误,鉴权失败。请和DBA确认该数据库的连接信息是否正确。

java.lang.Exception: DataX无法连接对应的数据库,可能原因是:1) 配置的ip/port/database/jdbc错误,无法连接。2) 配置的username/password错误,鉴权失败。请和DBA确认该数据库的连接信息是否正确。

at com.alibaba.datax.plugin.rdbms.util.DBUtil$2.call(DBUtil.java:71)

at com.alibaba.datax.plugin.rdbms.util.DBUtil$2.call(DBUtil.java:51)

at com.alibaba.datax.common.util.RetryUtil$Retry.call(RetryUtil.java:164)

at com.alibaba.datax.common.util.RetryUtil$Retry.doRetry(RetryUtil.java:111)

at com.alibaba.datax.common.util.RetryUtil.executeWithRetry(RetryUtil.java:30)

at com.alibaba.datax.plugin.rdbms.util.DBUtil.chooseJdbcUrl(DBUtil.java:51)

at com.alibaba.datax.plugin.rdbms.reader.util.OriginalConfPretreatmentUtil.dealJdbcAndTable(OriginalConfPretreatmentUtil.java:92)

at com.alibaba.datax.plugin.rdbms.reader.util.OriginalConfPretreatmentUtil.simplifyConf(OriginalConfPretreatmentUtil.java:59)

at com.alibaba.datax.plugin.rdbms.reader.util.OriginalConfPretreatmentUtil.doPretreatment(OriginalConfPretreatmentUtil.java:33)

at com.alibaba.datax.plugin.rdbms.reader.CommonRdbmsReader$Job.init(CommonRdbmsReader.java:55)

at com.alibaba.datax.plugin.reader.mysqlreader.MysqlReader$Job.init(MysqlReader.java:37)

at com.alibaba.datax.core.job.JobContainer.initJobReader(JobContainer.java:673)

at com.alibaba.datax.core.job.JobContainer.init(JobContainer.java:303)

at com.alibaba.datax.core.job.JobContainer.start(JobContainer.java:113)

at com.alibaba.datax.core.Engine.start(Engine.java:92)

at com.alibaba.datax.core.Engine.entry(Engine.java:171)

at com.example.datax_demo.TestMain.main(TestMain.java:22)

15:55:36.653 [job-0] ERROR com.alibaba.datax.common.util.RetryUtil - Exception when calling callable, 即将尝试执行第1次重试.本次重试计划等待[1000]ms,实际等待[1000]ms, 异常Msg:[DataX无法连接对应的数据库,可能原因是:1) 配置的ip/port/database/jdbc错误,无法连接。2) 配置的username/password错误,鉴权失败。请和DBA确认该数据库的连接信息是否正确。]

15:55:36.657 [job-0] WARN com.alibaba.datax.plugin.rdbms.util.DBUtil - test connection of [jdbc:mysql://192.168.18.128:3306/test] failed, for Code:[DBUtilErrorCode-10], Description:[连接数据库失败. 请检查您的 账号、密码、数据库名称、IP、Port或者向 DBA 寻求帮助(注意网络环境).]. - 具体错误信息为:com.mysql.jdbc.exceptions.jdbc4.MySQLNonTransientConnectionException: Could not create connection to database server..

15:55:38.658 [job-0] ERROR com.alibaba.datax.common.util.RetryUtil - Exception when calling callable, 即将尝试执行第2次重试.本次重试计划等待[2000]ms,实际等待[2001]ms, 异常Msg:[DataX无法连接对应的数据库,可能原因是:1) 配置的ip/port/database/jdbc错误,无法连接。2) 配置的username/password错误,鉴权失败。请和DBA确认该数据库的连接信息是否正确。]

15:55:38.660 [job-0] WARN com.alibaba.datax.plugin.rdbms.util.DBUtil - test connection of [jdbc:mysql://192.168.18.128:3306/test] failed, for Code:[DBUtilErrorCode-10], Description:[连接数据库失败. 请检查您的 账号、密码、数据库名称、IP、Port或者向 DBA 寻求帮助(注意网络环境).]. - 具体错误信息为:com.mysql.jdbc.exceptions.jdbc4.MySQLNonTransientConnectionException: Could not create connection to database server..

15:55:42.660 [job-0] ERROR com.alibaba.datax.common.util.RetryUtil - Exception when calling callable, 即将尝试执行第3次重试.本次重试计划等待[4000]ms,实际等待[4000]ms, 异常Msg:[DataX无法连接对应的数据库,可能原因是:1) 配置的ip/port/database/jdbc错误,无法连接。2) 配置的username/password错误,鉴权失败。请和DBA确认该数据库的连接信息是否正确。]

15:55:42.663 [job-0] WARN com.alibaba.datax.plugin.rdbms.util.DBUtil - test connection of [jdbc:mysql://192.168.18.128:3306/test] failed, for Code:[DBUtilErrorCode-10], Description:[连接数据库失败. 请检查您的 账号、密码、数据库名称、IP、Port或者向 DBA 寻求帮助(注意网络环境).]. - 具体错误信息为:com.mysql.jdbc.exceptions.jdbc4.MySQLNonTransientConnectionException: Could not create connection to database server..

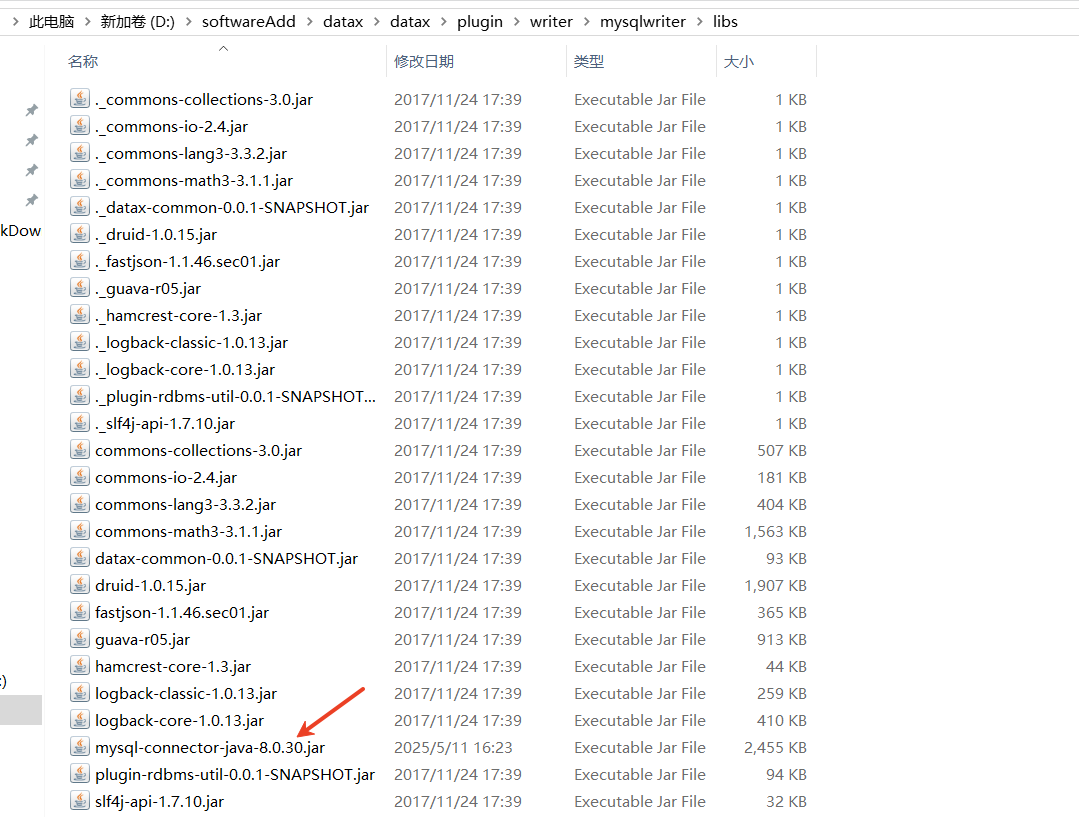

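Solution:

Delete the bundled, older MySQL driver JARs under the reader and writer directories, then download a newer MySQL driver JAR from https://mvnrepository.com/ and place it in both directories. After that the connection works.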
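Before swapping the driver it helps to see which driver JARs the extracted package actually contains and where they live. The sketch below walks the `plugin` directory under the DataX home and lists them. It assumes the driver file names start with `mysql-connector` and end with `.jar`, which is the usual naming but is not confirmed by the tutorial; the class name `MysqlDriverFinder` is hypothetical.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper, not part of DataX.
final class MysqlDriverFinder {

    /** Returns every file named mysql-connector*.jar below <dataxHome>/plugin. */
    static List<Path> find(Path dataxHome) throws IOException {
        try (Stream<Path> walk = Files.walk(dataxHome.resolve("plugin"))) {
            return walk
                    .filter(Files::isRegularFile)
                    .filter(p -> {
                        // Assumed naming convention for the MySQL JDBC driver JAR.
                        String name = p.getFileName().toString();
                        return name.startsWith("mysql-connector") && name.endsWith(".jar");
                    })
                    .sorted()
                    .collect(Collectors.toList());
        }
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("usage: MysqlDriverFinder <datax.home>");
            return;
        }
        for (Path jar : find(Paths.get(args[0]))) {
            System.out.println(jar);
        }
    }
}
```

Each printed path is a JAR to replace with the newer driver; the version number in the file name shows which driver is currently in use.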

Run output:

17:07:55.331 [main] INFO com.alibaba.datax.common.statistics.VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl

17:07:55.345 [main] INFO com.alibaba.datax.core.Engine - the machine info =>

osInfo: Oracle Corporation 1.8 25.131-b11

jvmInfo: Windows 10 amd64 10.0

cpu num: 12

totalPhysicalMemory: -0.00G

freePhysicalMemory: -0.00G

maxFileDescriptorCount: -1

currentOpenFileDescriptorCount: -1

GC Names [PS MarkSweep, PS Scavenge]

MEMORY_NAME | allocation_size | init_size

PS Eden Space | 2,003.50MB | 96.00MB

Code Cache | 240.00MB | 2.44MB

Compressed Class Space | 1,024.00MB | 0.00MB

PS Survivor Space | 15.50MB | 15.50MB

PS Old Gen | 4,069.50MB | 255.00MB

Metaspace | -0.00MB | 0.00MB

17:07:55.359 [main] INFO com.alibaba.datax.core.Engine -

{

"content":[

{

"reader":{

"parameter":{

"password":"******",

"column":[

"*"

],

"connection":[

{

"jdbcUrl":[

"jdbc:mysql://192.168.18.128:3306/test"

],

"table":[

"users"

]

}

],

"where":"",

"username":"root"

},

"name":"mysqlreader"

},

"writer":{

"parameter":{

"password":"******",

"session":[],

"column":[

"*"

],

"connection":[

{

"jdbcUrl":"jdbc:mysql://192.168.18.128:3306/mytest",

"table":[

"users"

]

}

],

"writeMode":"insert",

"preSql":[],

"username":"root"

},

"name":"mysqlwriter"

}

}

],

"setting":{

"speed":{

"channel":"1"

}

}

}

17:07:55.360 [main] DEBUG com.alibaba.datax.core.Engine - {"core":{"container":{"trace":{"enable":"false"},"job":{"reportInterval":10000,"id":-1},"taskGroup":{"channel":5}},"dataXServer":{"reportDataxLog":false,"address":"http://localhost:7001/api","timeout":10000,"reportPerfLog":false},"transport":{"exchanger":{"class":"com.alibaba.datax.core.plugin.BufferedRecordExchanger","bufferSize":32},"channel":{"byteCapacity":67108864,"flowControlInterval":20,"class":"com.alibaba.datax.core.transport.channel.memory.MemoryChannel","speed":{"byte":-1,"record":-1},"capacity":512}},"statistics":{"collector":{"plugin":{"taskClass":"com.alibaba.datax.core.statistics.plugin.task.StdoutPluginCollector","maxDirtyNumber":10}}}},"entry":{"jvm":"-Xms1G -Xmx1G"},"common":{"column":{"dateFormat":"yyyy-MM-dd","datetimeFormat":"yyyy-MM-dd HH:mm:ss","timeFormat":"HH:mm:ss","extraFormats":["yyyyMMdd"],"timeZone":"GMT+8","encoding":"utf-8"}},"plugin":{"reader":{"mysqlreader":{"path":"D:\\softwareAdd\\datax\\datax\\plugin\\reader\\mysqlreader","name":"mysqlreader","description":"useScene: prod. mechanism: Jdbc connection using the database, execute select sql, retrieve data from the ResultSet. warn: The more you know about the database, the less problems you encounter.","developer":"alibaba","class":"com.alibaba.datax.plugin.reader.mysqlreader.MysqlReader"}},"writer":{"mysqlwriter":{"path":"D:\\softwareAdd\\datax\\datax\\plugin\\writer\\mysqlwriter","name":"mysqlwriter","description":"useScene: prod. mechanism: Jdbc connection using the database, execute insert sql. 
warn: The more you know about the database, the less problems you encounter.","developer":"alibaba","class":"com.alibaba.datax.plugin.writer.mysqlwriter.MysqlWriter"}}},"job":{"content":[{"reader":{"parameter":{"password":"123456","column":["*"],"connection":[{"jdbcUrl":["jdbc:mysql://192.168.18.128:3306/test"],"table":["users"]}],"where":"","username":"root"},"name":"mysqlreader"},"writer":{"parameter":{"password":"123456","session":[],"column":["*"],"connection":[{"jdbcUrl":"jdbc:mysql://192.168.18.128:3306/mytest","table":["users"]}],"writeMode":"insert","preSql":[],"username":"root"},"name":"mysqlwriter"}}],"setting":{"speed":{"channel":"1"}}}}

17:07:55.437 [main] WARN com.alibaba.datax.core.Engine - prioriy set to 0, because NumberFormatException, the value is: null

17:07:55.441 [main] INFO com.alibaba.datax.common.statistics.PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0

17:07:55.441 [main] INFO com.alibaba.datax.core.job.JobContainer - DataX jobContainer starts job.

17:07:55.444 [main] DEBUG com.alibaba.datax.core.job.JobContainer - jobContainer starts to do preHandle ...

17:07:55.444 [main] DEBUG com.alibaba.datax.core.job.JobContainer - jobContainer starts to do init ...

17:07:55.444 [main] INFO com.alibaba.datax.core.job.JobContainer - Set jobId = 0

Loading class `com.mysql.jdbc.Driver'. This is deprecated. The new driver class is `com.mysql.cj.jdbc.Driver'. The driver is automatically registered via the SPI and manual loading of the driver class is generally unnecessary.

17:07:57.411 [job-0] INFO com.alibaba.datax.plugin.rdbms.reader.util.OriginalConfPretreatmentUtil - Available jdbcUrl:jdbc:mysql://192.168.18.128:3306/test?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true.

17:07:57.414 [job-0] WARN com.alibaba.datax.plugin.rdbms.reader.util.OriginalConfPretreatmentUtil - 您的配置文件中的列配置存在一定的风险. 因为您未配置读取数据库表的列,当您的表字段个数、类型有变动时,可能影响任务正确性甚至会运行出错。请检查您的配置并作出修改.

17:07:57.414 [job-0] DEBUG com.alibaba.datax.plugin.rdbms.reader.CommonRdbmsReader$Job - After job init(), job config now is:[

{"password":"123456","isTableMode":true,"fetchSize":-2147483648,"column":"*","connection":[{"jdbcUrl":"jdbc:mysql://192.168.18.128:3306/test?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true","table":["users"]}],"where":"","tableNumber":1,"username":"root"}

]

Loading class `com.mysql.jdbc.Driver'. This is deprecated. The new driver class is `com.mysql.cj.jdbc.Driver'. The driver is automatically registered via the SPI and manual loading of the driver class is generally unnecessary.

17:07:57.689 [job-0] INFO com.alibaba.datax.plugin.rdbms.writer.util.OriginalConfPretreatmentUtil - table:[users] all columns:[

user_id,username,email

].

17:07:57.689 [job-0] WARN com.alibaba.datax.plugin.rdbms.writer.util.OriginalConfPretreatmentUtil - 您的配置文件中的列配置信息存在风险. 因为您配置的写入数据库表的列为*,当您的表字段个数、类型有变动时,可能影响任务正确性甚至会运行出错。请检查您的配置并作出修改.

17:07:57.690 [job-0] INFO com.alibaba.datax.plugin.rdbms.writer.util.OriginalConfPretreatmentUtil - Write data [

insert INTO %s (user_id,username,email) VALUES(?,?,?)

], which jdbcUrl like:[jdbc:mysql://192.168.18.128:3306/mytest?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true]

17:07:57.690 [job-0] DEBUG com.alibaba.datax.plugin.rdbms.writer.CommonRdbmsWriter$Job - After job init(), originalConfig now is:[

{"password":"123456","session":[],"column":["user_id","username","email"],"connection":[{"jdbcUrl":"jdbc:mysql://192.168.18.128:3306/mytest?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true","table":["users"]}],"insertOrReplaceTemplate":"insert INTO %s (user_id,username,email) VALUES(?,?,?)","writeMode":"insert","batchSize":2048,"tableNumber":1,"preSql":[],"username":"root"}

]

17:07:57.690 [job-0] INFO com.alibaba.datax.core.job.JobContainer - jobContainer starts to do prepare ...

17:07:57.692 [job-0] INFO com.alibaba.datax.core.job.JobContainer - DataX Reader.Job [mysqlreader] do prepare work .

17:07:57.692 [job-0] INFO com.alibaba.datax.core.job.JobContainer - DataX Writer.Job [mysqlwriter] do prepare work .

17:07:57.692 [job-0] DEBUG com.alibaba.datax.plugin.rdbms.writer.CommonRdbmsWriter$Job - After job prepare(), originalConfig now is:[

{"password":"123456","session":[],"column":["user_id","username","email"],"jdbcUrl":"jdbc:mysql://192.168.18.128:3306/mytest?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true","insertOrReplaceTemplate":"insert INTO %s (user_id,username,email) VALUES(?,?,?)","writeMode":"insert","batchSize":2048,"tableNumber":1,"table":"users","preSql":[],"username":"root"}

]

17:07:57.692 [job-0] INFO com.alibaba.datax.core.job.JobContainer - jobContainer starts to do split ...

17:07:57.692 [job-0] INFO com.alibaba.datax.core.job.JobContainer - Job set Channel-Number to 1 channels.

17:07:57.695 [job-0] INFO com.alibaba.datax.core.job.JobContainer - DataX Reader.Job [mysqlreader] splits to [1] tasks.

17:07:57.696 [job-0] INFO com.alibaba.datax.core.job.JobContainer - DataX Writer.Job [mysqlwriter] splits to [1] tasks.

17:07:57.696 [job-0] DEBUG com.alibaba.datax.core.job.JobContainer - transformer configuration: null

17:07:57.711 [job-0] DEBUG com.alibaba.datax.core.job.JobContainer - contentConfig configuration: [{"internal":{"reader":{"parameter":{"querySql":"select * from users ","password":"123456","isTableMode":true,"fetchSize":-2147483648,"loadBalanceResourceMark":"192.168.18.128","column":"*","jdbcUrl":"jdbc:mysql://192.168.18.128:3306/test?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true","where":"","tableNumber":1,"table":"users","username":"root"},"name":"mysqlreader"},"writer":{"parameter":{"password":"123456","session":[],"column":["user_id","username","email"],"jdbcUrl":"jdbc:mysql://192.168.18.128:3306/mytest?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true","insertOrReplaceTemplate":"insert INTO %s (user_id,username,email) VALUES(?,?,?)","writeMode":"insert","batchSize":2048,"tableNumber":1,"table":"users","preSql":[],"username":"root"},"name":"mysqlwriter"},"taskId":0},"keys":["writer.parameter.column[1]","writer.parameter.tableNumber","writer.parameter.jdbcUrl","writer.parameter.writeMode","reader.parameter.fetchSize","reader.parameter.password","writer.name","reader.parameter.querySql","reader.parameter.loadBalanceResourceMark","writer.parameter.password","reader.name","reader.parameter.where","writer.parameter.column[2]","reader.parameter.column","writer.parameter.column[0]","reader.parameter.jdbcUrl","writer.parameter.insertOrReplaceTemplate","writer.parameter.batchSize","writer.parameter.table","reader.parameter.tableNumber","reader.parameter.table","reader.parameter.isTableMode","reader.parameter.username","taskId","writer.parameter.username"],"secretKeyPathSet":[]}]

17:07:57.711 [job-0] INFO com.alibaba.datax.core.job.JobContainer - jobContainer starts to do schedule ...

17:07:57.714 [job-0] INFO com.alibaba.datax.core.job.JobContainer - Scheduler starts [1] taskGroups.

17:07:57.716 [job-0] INFO com.alibaba.datax.core.job.JobContainer - Running by standalone Mode.

17:07:57.722 [taskGroup-0] DEBUG com.alibaba.datax.core.taskgroup.TaskGroupContainer - taskGroup[0]'s task configs[[{"internal":{"reader":{"parameter":{"querySql":"select * from users ","password":"123456","isTableMode":true,"fetchSize":-2147483648,"loadBalanceResourceMark":"192.168.18.128","column":"*","jdbcUrl":"jdbc:mysql://192.168.18.128:3306/test?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true","where":"","tableNumber":1,"table":"users","username":"root"},"name":"mysqlreader"},"writer":{"parameter":{"password":"123456","session":[],"column":["user_id","username","email"],"jdbcUrl":"jdbc:mysql://192.168.18.128:3306/mytest?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true","insertOrReplaceTemplate":"insert INTO %s (user_id,username,email) VALUES(?,?,?)","writeMode":"insert","batchSize":2048,"tableNumber":1,"table":"users","preSql":[],"username":"root"},"name":"mysqlwriter"},"taskId":0},"keys":["writer.parameter.column[1]","writer.parameter.tableNumber","writer.parameter.jdbcUrl","writer.parameter.writeMode","reader.parameter.fetchSize","reader.parameter.password","writer.name","reader.parameter.querySql","reader.parameter.loadBalanceResourceMark","writer.parameter.password","reader.name","reader.parameter.where","writer.parameter.column[2]","reader.parameter.column","writer.parameter.column[0]","reader.parameter.jdbcUrl","writer.parameter.insertOrReplaceTemplate","writer.parameter.batchSize","writer.parameter.table","reader.parameter.tableNumber","reader.parameter.table","reader.parameter.isTableMode","reader.parameter.username","taskId","writer.parameter.username"],"secretKeyPathSet":[]}]]

17:07:57.722 [taskGroup-0] INFO com.alibaba.datax.core.taskgroup.TaskGroupContainer - taskGroupId=[0] start [1] channels for [1] tasks.

17:07:57.726 [taskGroup-0] INFO com.alibaba.datax.core.transport.channel.Channel - Channel set byte_speed_limit to -1, No bps activated.

17:07:57.726 [taskGroup-0] INFO com.alibaba.datax.core.transport.channel.Channel - Channel set record_speed_limit to -1, No tps activated.

17:07:57.747 [job-0] DEBUG com.alibaba.datax.core.job.scheduler.AbstractScheduler - com.alibaba.datax.core.statistics.communication.Communication@658c5a19[

counter={}

message={}

state=RUNNING

throwable=<null>

timestamp=1746954477721

]

17:07:57.747 [taskGroup-0] INFO com.alibaba.datax.core.taskgroup.TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started

17:07:57.748 [0-0-0-writer] DEBUG com.alibaba.datax.core.taskgroup.runner.WriterRunner - task writer starts to do init ...

17:07:57.748 [0-0-0-reader] DEBUG com.alibaba.datax.core.taskgroup.runner.ReaderRunner - task reader starts to do init ...

17:07:57.750 [0-0-0-writer] DEBUG com.alibaba.datax.core.taskgroup.runner.WriterRunner - task writer starts to do prepare ...

17:07:57.753 [0-0-0-reader] DEBUG com.alibaba.datax.core.taskgroup.runner.ReaderRunner - task reader starts to do prepare ...

17:07:57.753 [0-0-0-reader] DEBUG com.alibaba.datax.core.taskgroup.runner.ReaderRunner - task reader starts to read ...

17:07:57.753 [0-0-0-reader] INFO com.alibaba.datax.plugin.rdbms.reader.CommonRdbmsReader$Task - Begin to read record by Sql: [select * from users

] jdbcUrl:[jdbc:mysql://192.168.18.128:3306/test?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true].

17:07:57.769 [0-0-0-writer] DEBUG com.alibaba.datax.core.taskgroup.runner.WriterRunner - task writer starts to write ...

17:07:57.810 [0-0-0-reader] INFO com.alibaba.datax.plugin.rdbms.reader.CommonRdbmsReader$Task - Finished read record by Sql: [select * from users

] jdbcUrl:[jdbc:mysql://192.168.18.128:3306/test?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true].

17:07:57.814 [0-0-0-reader] DEBUG com.alibaba.datax.core.taskgroup.runner.ReaderRunner - task reader starts to do post ...

17:07:57.814 [0-0-0-reader] DEBUG com.alibaba.datax.core.taskgroup.runner.ReaderRunner - task reader starts to do destroy ...

17:07:58.033 [0-0-0-writer] DEBUG com.alibaba.datax.core.taskgroup.runner.WriterRunner - task writer starts to do post ...

17:07:58.033 [0-0-0-writer] DEBUG com.alibaba.datax.core.taskgroup.runner.WriterRunner - task writer starts to do destroy ...

17:07:58.050 [taskGroup-0] INFO com.alibaba.datax.core.taskgroup.TaskGroupContainer - taskGroup[0] taskId[0] is successed, used[304]ms

17:07:58.050 [taskGroup-0] INFO com.alibaba.datax.core.taskgroup.TaskGroupContainer - taskGroup[0] completed it's tasks.

17:08:07.748 [job-0] DEBUG com.alibaba.datax.core.job.scheduler.AbstractScheduler - com.alibaba.datax.core.statistics.communication.Communication@4167d97b[

counter={writeSucceedRecords=5, readSucceedRecords=4, totalErrorBytes=0, writeSucceedBytes=133, byteSpeed=0, totalErrorRecords=0, recordSpeed=0, waitReaderTime=0, writeReceivedBytes=133, stage=1, waitWriterTime=57200, percentage=1.0, totalReadRecords=4, writeReceivedRecords=5, readSucceedBytes=133, totalReadBytes=133}

message={}

state=SUCCEEDED

throwable=<null>

timestamp=1746954487748

]

17:08:07.749 [job-0] INFO com.alibaba.datax.core.statistics.container.communicator.job.StandAloneJobContainerCommunicator - Total 4 records, 133 bytes | Speed 13B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%

17:08:07.749 [job-0] INFO com.alibaba.datax.core.job.scheduler.AbstractScheduler - Scheduler accomplished all tasks.

17:08:07.749 [job-0] DEBUG com.alibaba.datax.core.job.JobContainer - jobContainer starts to do post ...

17:08:07.749 [job-0] INFO com.alibaba.datax.core.job.JobContainer - DataX Writer.Job [mysqlwriter] do post work.

17:08:07.750 [job-0] INFO com.alibaba.datax.core.job.JobContainer - DataX Reader.Job [mysqlreader] do post work.

17:08:07.750 [job-0] DEBUG com.alibaba.datax.core.job.JobContainer - jobContainer starts to do postHandle ...

17:08:07.750 [job-0] INFO com.alibaba.datax.core.job.JobContainer - DataX jobId [0] completed successfully.

17:08:07.750 [job-0] INFO com.alibaba.datax.core.container.util.HookInvoker - No hook invoked, because base dir not exists or is a file: D:\softwareAdd\datax\datax\hook

17:08:07.751 [job-0] INFO com.alibaba.datax.core.job.JobContainer -

[total cpu info] =>

averageCpu | maxDeltaCpu | minDeltaCpu

-1.00% | -1.00% | -1.00%

[total gc info] =>

NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime

PS MarkSweep | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s

PS Scavenge | 1 | 1 | 1 | 0.011s | 0.011s | 0.011s

17:08:07.751 [job-0] INFO com.alibaba.datax.core.job.JobContainer - PerfTrace not enable!

17:08:07.752 [job-0] INFO com.alibaba.datax.core.statistics.container.communicator.job.StandAloneJobContainerCommunicator - Total 4 records, 133 bytes | Speed 13B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%

17:08:07.754 [job-0] INFO com.alibaba.datax.core.job.JobContainer -

任务启动时刻 : 2025-05-11 17:07:55

任务结束时刻 : 2025-05-11 17:08:07

任务总计耗时 : 12s

任务平均流量 : 13B/s

记录写入速度 : 0rec/s

读出记录总数 : 4

读写失败总数 : 0

Process finished with exit code 0

Checking the target database confirms that the data from the source database was synchronized.
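The summary in the log reports 13B/s and 0rec/s even though 133 bytes and 4 records were transferred. Those figures are consistent with integer division of the totals by the length of the scheduling phase, which the timestamps put at roughly 10 seconds (17:07:57 to 17:08:07), rather than by the 12 s total run time. This reading is inferred from the log alone, not taken from the DataX source; the class `SpeedCheck` is a hypothetical illustration of the arithmetic.

```java
// Hypothetical illustration of the arithmetic, not DataX code.
final class SpeedCheck {

    /** Integer throughput: total units divided by whole seconds, 0 if no time elapsed. */
    static long perSecond(long total, long seconds) {
        return seconds <= 0 ? 0 : total / seconds;
    }

    public static void main(String[] args) {
        long transferSeconds = 10; // assumed from the log timestamps
        System.out.println(perSecond(133, transferSeconds) + "B/s");  // prints "13B/s"
        System.out.println(perSecond(4, transferSeconds) + "rec/s");  // prints "0rec/s"
    }
}
```

For a job this small the reported speed says little; it only becomes meaningful once the transfer runs much longer than one reporting interval.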

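4.1.6 Summary

To wrap up: from the steps above it is straightforward to work out how to combine Java with DataX for data synchronization in a real company project.

1. Download the datax.tar.gz archive from the official DataX site and extract it on the actual server (usually a Linux server).

2. Write the Java code as described above. The key line is System.setProperty("datax.home", "<directory on your Linux server where the DataX package was extracted>");

Those two steps are the essentials.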
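When the code moves from a Windows workstation to a Linux server, the hard-coded `datax.home` and job path are the parts that change. A minimal sketch of keeping them configurable follows. The environment variable name `DATAX_HOME` and the class `DataxLauncherArgs` are assumptions made for illustration; `/opt/module/datax` is the install location used in Chapter 2. The call to `Engine.entry` is left as a comment because it needs the DataX JARs on the classpath.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper, not part of DataX.
final class DataxLauncherArgs {

    /** Builds the same argument array that TestMain passes to Engine.entry. */
    static String[] build(Path jobFile) {
        return new String[] {
            "-job", jobFile.toString(),
            "-mode", "standalone",
            "-jobid", "-1"
        };
    }

    /** Uses the configured home if it is non-blank, otherwise the fallback. */
    static String resolveHome(String configured, String fallback) {
        return (configured == null || configured.trim().isEmpty()) ? fallback : configured.trim();
    }

    public static void main(String[] args) {
        // DATAX_HOME is an assumed variable name; the fallback is the Chapter 2 install path.
        String home = resolveHome(System.getenv("DATAX_HOME"), "/opt/module/datax");
        System.setProperty("datax.home", home);
        String[] dataxArgs = build(Paths.get(home, "job", "job.json"));
        System.out.println(String.join(" ", dataxArgs));
        // With the DataX JARs available: Engine.entry(dataxArgs);
    }
}
```

With this shape the same build can run on both machines, and only the environment differs.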
