【4-2】Running a Network with TensorBoard

1. Reference code

    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data

    # Load the dataset
    mnist = input_data.read_data_sets("MNIST_data", one_hot=True)

    # Size of each batch
    batch_size = 100
    # Number of batches per epoch
    n_batch = mnist.train.num_examples // batch_size

    # Parameter summaries
    def variable_summaries(var):  # records the mean, standard deviation, max, min and histogram of var
        mean = tf.reduce_mean(var)
        tf.summary.scalar('mean', mean)  # mean
        with tf.name_scope('stddev'):
            stddev = tf.sqrt(tf.reduce_mean(tf.square(var - mean)))
        tf.summary.scalar('stddev', stddev)  # standard deviation
        tf.summary.scalar('max', tf.reduce_max(var))  # maximum
        tf.summary.scalar('min', tf.reduce_min(var))  # minimum
        tf.summary.histogram('histogram', var)  # histogram

    # Name scopes
    with tf.name_scope('input'):
        # Define two placeholders
        x = tf.placeholder(tf.float32, [None, 784], name='x-input')
        y = tf.placeholder(tf.float32, [None, 10], name='y-input')

    with tf.name_scope('layer'):
        # Create a simple neural network
        with tf.name_scope('weights'):
            W = tf.Variable(tf.zeros([784, 10]), name='W')
            variable_summaries(W)  # record summaries for W
        with tf.name_scope('biases'):
            b = tf.Variable(tf.zeros([10]), name='b')
            variable_summaries(b)  # record summaries for b
        with tf.name_scope('wx_plus_b'):
            wx_plus_b = tf.matmul(x, W) + b
        with tf.name_scope('softmax'):
            prediction = tf.nn.softmax(wx_plus_b)

    # Quadratic cost function
    # loss = tf.reduce_mean(tf.square(y - prediction))
    with tf.name_scope('loss'):
        # softmax_cross_entropy_with_logits applies softmax internally,
        # so it takes the raw scores wx_plus_b rather than prediction
        loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=wx_plus_b))
        tf.summary.scalar('loss', loss)  # track how the loss changes
    with tf.name_scope('train'):
        # Use gradient descent
        train_step = tf.train.GradientDescentOptimizer(0.2).minimize(loss)

    # Initialize variables
    init = tf.global_variables_initializer()

    with tf.name_scope('accuracy'):
        with tf.name_scope('correct_prediction'):
            # The result is a tensor of booleans; argmax returns the index of the largest value along axis 1
            correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(prediction, 1))
        with tf.name_scope('accuracy'):
            # Compute the accuracy
            accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
            tf.summary.scalar('accuracy', accuracy)  # track how the accuracy changes

    # Merge all summaries, i.e. every quantity we want to observe
    merged = tf.summary.merge_all()

    with tf.Session() as sess:
        sess.run(init)
        writer = tf.summary.FileWriter('logs/', sess.graph)
        for epoch in range(51):
            for batch in range(n_batch):
                batch_xs, batch_ys = mnist.train.next_batch(batch_size)
                # Run merged together with train_step
                summary, _ = sess.run([merged, train_step], feed_dict={x: batch_xs, y: batch_ys})

            writer.add_summary(summary, epoch)  # write the summary to the log file

            acc = sess.run(accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels})
            print("Iter " + str(epoch) + ",Testing Accuracy " + str(acc))

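The code first defines a function, variable_summaries(), which records the mean, standard deviation, maximum, minimum and histogram of a tensor (for example the parameters W and b).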
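To make these statistics concrete, here is a minimal plain-Python sketch of the same quantities that variable_summaries() records, computed on a small list instead of a tensor. The function name `summarize` and the sample values are illustrative assumptions, not part of the original code. The standard deviation is the population form (dividing by N), which is what `tf.sqrt(tf.reduce_mean(tf.square(var - mean)))` computes.

```python
import math

def summarize(values):
    """Return the mean, population stddev, max and min of a list of numbers."""
    mean = sum(values) / len(values)
    # Population standard deviation: sqrt(mean((v - mean)^2)), dividing by N
    stddev = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    return {"mean": mean, "stddev": stddev, "max": max(values), "min": min(values)}

# Illustrative input only
stats = summarize([1.0, 2.0, 3.0, 4.0])
print(stats)
```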

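tf.summary.scalar('name', tensor) records a scalar value so that TensorBoard can plot how it changes over time. The first argument is the name (a string), and the second is the tensor to track.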

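tf.summary.merge_all() bundles all the summaries defined in the graph into a single op. That merged op must be evaluated with sess.run, and the result is then written to the log with writer.add_summary.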

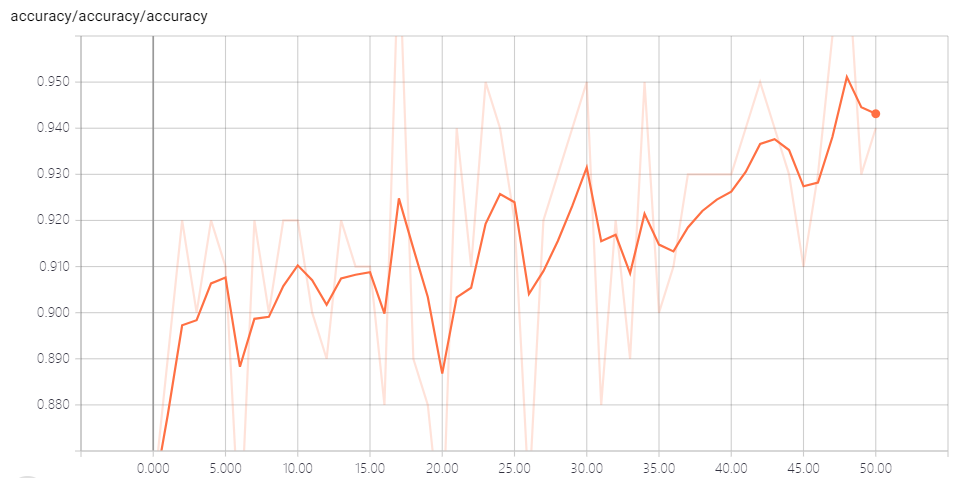

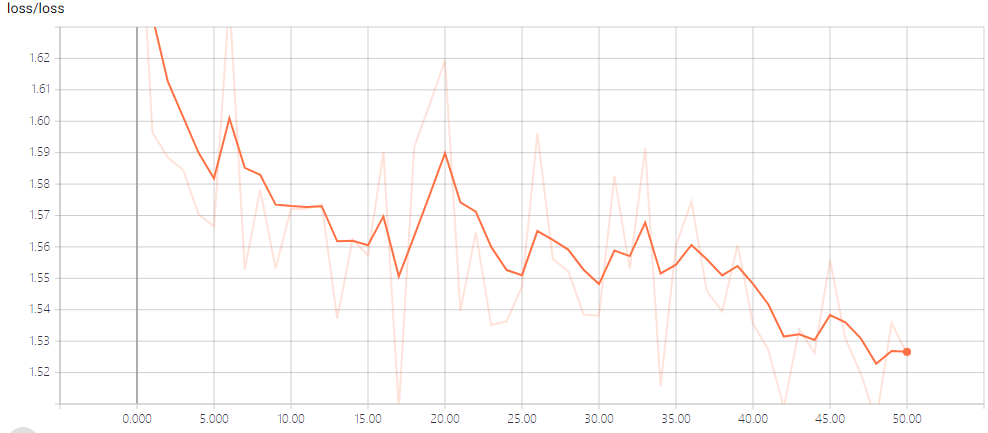

2. Results in TensorBoard:

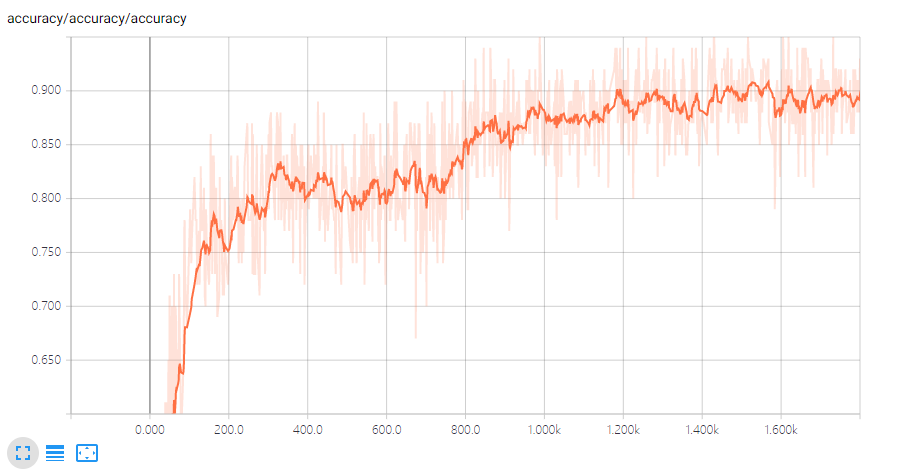

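TensorBoard also shows the extrema, distributions and other statistics of the parameters. However, because the loop above runs for only 51 epochs and writes one summary per epoch, each chart contains only about 50 points. To get about 2,000 points, change the training loop to: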

    # Write one summary per training step instead of one per epoch
    for i in range(2001):
        batch_xs, batch_ys = mnist.train.next_batch(batch_size)
        summary, _ = sess.run([merged, train_step], feed_dict={x: batch_xs, y: batch_ys})
        writer.add_summary(summary, i)
        if i % 500 == 0:
            print(sess.run(accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels}))

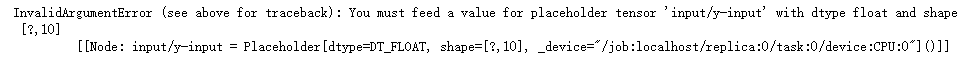
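A quick back-of-the-envelope check shows how many points each loop writes and how much training each one performs. This sketch assumes the standard split returned by `input_data.read_data_sets`, where `mnist.train.num_examples` is 55,000; the variable names are illustrative.

```python
# Assumption: mnist.train.num_examples is 55000 for the standard MNIST split
num_examples = 55000
batch_size = 100
n_batch = num_examples // batch_size  # batches per epoch

# Epoch-based loop: range(51) epochs, one summary written per epoch
epoch_points = 51
epoch_steps = epoch_points * n_batch  # total training steps performed

# Step-based loop: range(2001) steps, one summary written per step
step_points = 2001
step_epochs = step_points / n_batch  # equivalent number of epochs

print("batches per epoch:", n_batch)
print("epoch loop:", epoch_points, "points,", epoch_steps, "training steps")
print("step loop:", step_points, "points,", round(step_epochs, 2), "epochs of training")
```

Under that assumption, the step-based loop produces a much denser curve but trains for far fewer steps, so the final accuracy of the two runs is not directly comparable.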

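Sometimes an error occurs at this point. A Baidu search suggested the cause: the current path must not contain more than one events file. After deleting the events files and running Restart & Run All again, the problem was indeed resolved.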
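If stale event files are the cause, one way to avoid deleting them by hand is to clear them before creating the FileWriter. The helper below is a hypothetical sketch (the name `clear_event_files` is not from the original post). It assumes the event files sit directly inside the log directory and follow TensorBoard's usual `events.out.tfevents.*` naming; the demo runs on a temporary directory so nothing real is deleted.

```python
import glob
import os
import tempfile

def clear_event_files(log_dir):
    """Delete TensorBoard event files directly inside log_dir; return how many were removed."""
    removed = 0
    for path in glob.glob(os.path.join(log_dir, "events.out.tfevents.*")):
        if os.path.isfile(path):
            os.remove(path)
            removed += 1
    return removed

# Demo on a throwaway directory with two fake event files and one unrelated file
with tempfile.TemporaryDirectory() as demo_dir:
    for name in ("events.out.tfevents.1.host", "events.out.tfevents.2.host", "notes.txt"):
        open(os.path.join(demo_dir, name), "w").close()
    removed_count = clear_event_files(demo_dir)
    remaining = sorted(os.listdir(demo_dir))

print(removed_count, remaining)
```

In the training script, a call such as `clear_event_files('logs/')` would go just before `tf.summary.FileWriter('logs/', sess.graph)`.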

Reference: https://www.cnblogs.com/fydeblog/p/7429344.html, Example 3: linear fitting

    import tensorflow as tf
    import numpy as np

    # Further improvement: try other features (scalars, histograms, distributions)
    with tf.name_scope('data'):
        # Raw data
        x_data = np.random.rand(100).astype(np.float32)
        y_data = 0.3 * x_data + 0.1

    with tf.name_scope('parameters'):
        with tf.name_scope('weight'):
            weight = tf.Variable(tf.random_uniform([1], -1.0, 1.0))
            tf.summary.histogram('weight', weight)  # histogram
        with tf.name_scope('biases'):
            bias = tf.Variable(tf.zeros([1]))
            tf.summary.histogram('bias', bias)

    with tf.name_scope('y_prediction'):
        y_prediction = weight * x_data + bias

    with tf.name_scope('loss'):
        loss = tf.reduce_mean(tf.square(y_data - y_prediction))
        tf.summary.scalar('loss', loss)

    optimizer = tf.train.GradientDescentOptimizer(0.5)

    with tf.name_scope('train'):
        train = optimizer.minimize(loss)

    init = tf.global_variables_initializer()

    with tf.Session() as sess:
        sess.run(init)
        merged = tf.summary.merge_all()
        writer = tf.summary.FileWriter('logs/', sess.graph)
        for step in range(101):
            sess.run(train)
            rs = sess.run(merged)
            writer.add_summary(rs, step)
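For intuition about what this linear-fitting graph optimizes, the same fit can be sketched in plain Python without TensorFlow. This is an illustrative re-implementation under stated assumptions, not the referenced author's code: the gradients are written out by hand for the mean squared error, and a fixed random seed is used only to make the run repeatable.

```python
import random

random.seed(0)  # assumption: fixed seed, only for repeatability

# Same synthetic data as the example: y = 0.3 * x + 0.1 with x in [0, 1)
x_data = [random.random() for _ in range(100)]
y_data = [0.3 * x + 0.1 for x in x_data]

weight = random.uniform(-1.0, 1.0)  # mirrors tf.random_uniform([1], -1.0, 1.0)
bias = 0.0                          # mirrors tf.zeros([1])
learning_rate = 0.5
n = len(x_data)

for step in range(101):
    errors = [weight * x + bias - y for x, y in zip(x_data, y_data)]
    # Gradients of mean((w*x + b - y)^2) with respect to w and b
    grad_w = 2.0 * sum(e * x for e, x in zip(errors, x_data)) / n
    grad_b = 2.0 * sum(errors) / n
    # Plain gradient descent update, both parameters updated together
    weight -= learning_rate * grad_w
    bias -= learning_rate * grad_b

loss = sum((weight * x + bias - y) ** 2 for x, y in zip(x_data, y_data)) / n
print(weight, bias, loss)
```

After 101 steps the weight and bias should end up close to 0.3 and 0.1, which is the trend the `loss` scalar and the `weight` and `bias` histograms let you watch in TensorBoard.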

2019-06-05 21:35:28

