1. Garbled characters (encoding problem)

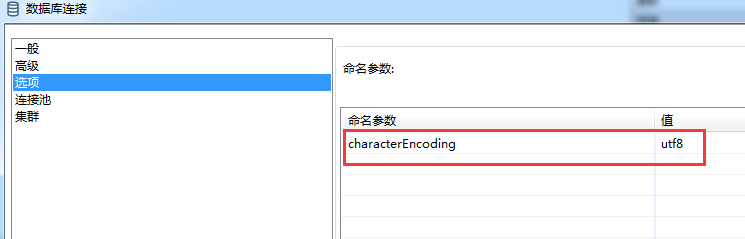

Edit the connection to the target database:

configuring the encoding parameters on it is enough.
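The screenshot is not reproduced here, so the exact parameters are not known. A common choice is `useUnicode=true` and `characterEncoding=UTF-8`, entered in the Options tab of the Kettle connection dialog. That choice is an assumption, not taken from the original. The minimal sketch below shows the equivalent MySQL Connector/J JDBC URL. `mysql_jdbc_url`, `target-host` and `target_db` are hypothetical names.

```python
from urllib.parse import urlencode


def mysql_jdbc_url(host: str, port: int, database: str, options: dict) -> str:
    """Build a MySQL Connector/J JDBC URL with connection properties as a query string."""
    base = f"jdbc:mysql://{host}:{port}/{database}"
    query = urlencode(options)  # key=value pairs joined with '&', in dict order
    return f"{base}?{query}" if query else base


# Hypothetical host and schema; the two encoding options are a common fix for
# garbled text (an assumption), not values read from the screenshot above.
encoding_options = {"useUnicode": "true", "characterEncoding": "UTF-8"}
print(mysql_jdbc_url("target-host", 3306, "target_db", encoding_options))
# jdbc:mysql://target-host:3306/target_db?useUnicode=true&characterEncoding=UTF-8
```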

2. Error: No operations allowed after statement closed.

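Increase wait_timeout (the value is in seconds): set global wait_timeout=1000000; SET GLOBAL only affects connections opened after it runs, so run it before starting the transformation.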

3. The net_write_timeout parameter also needs to be raised (also in seconds; pass an unquoted integer): set global net_write_timeout=60000;

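Kettle is slow when migrating data, so with a large data volume the job runs for a long time. Unless these parameters are raised, it fails partway through.

After the migration is complete, you can restore these parameters to their original values.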
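Restoring is easier if the "raise" and "restore" statements are prepared together. The minimal sketch below renders both sets of statements. The restore values are MySQL's documented defaults (28800 and 60 seconds). That is an assumption: if your server was configured differently, first record the real values with `SELECT @@GLOBAL.wait_timeout, @@GLOBAL.net_write_timeout;` and use those instead. `set_global_statements` is a hypothetical helper.

```python
# MySQL's documented defaults (seconds); check your own server before relying on them.
MYSQL_DEFAULTS = {"wait_timeout": 28800, "net_write_timeout": 60}

# Values used for the migration in this post (seconds).
MIGRATION_VALUES = {"wait_timeout": 1000000, "net_write_timeout": 60000}


def set_global_statements(values: dict) -> list:
    """Render one SET GLOBAL statement per variable; integers are left unquoted."""
    return [f"SET GLOBAL {name} = {int(value)};" for name, value in values.items()]


before = set_global_statements(MIGRATION_VALUES)
after = set_global_statements({name: MYSQL_DEFAULTS[name] for name in MIGRATION_VALUES})
print("\n".join(before))  # run before starting the Kettle job
print("\n".join(after))   # run once the migration has finished
```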

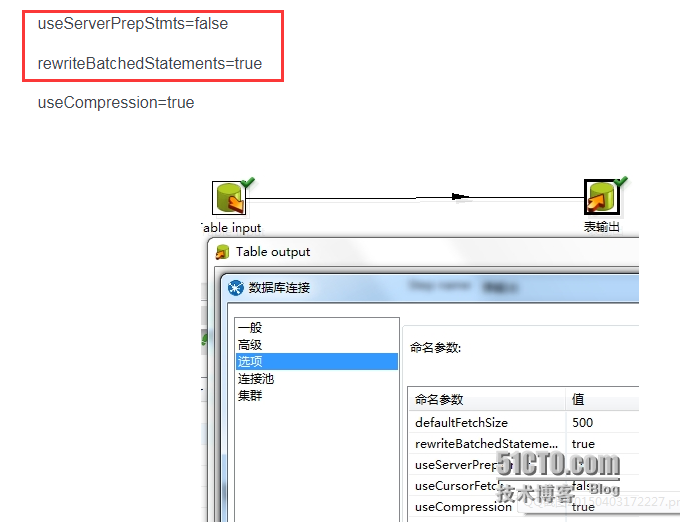

4. Speeding up Kettle

The principle is to convert single-row INSERTs into batch inserts.

To remedy this, in PDI I create a separate, specialized Database Connection I use for batch inserts. Set these two MySQL-specific options on your Database Connection:

useServerPrepStmts false

rewriteBatchedStatements true

Used together, these two options "fake" batch inserts on the client. Specifically, the insert statements:

INSERT INTO t (c1,c2) VALUES ('One',1);

INSERT INTO t (c1,c2) VALUES ('Two',2);

INSERT INTO t (c1,c2) VALUES ('Three',3);

will be rewritten into:

INSERT INTO t (c1,c2) VALUES ('One',1),('Two',2),('Three',3);

As a result, the batched rows are inserted with one statement (and one network round-trip). With this simple change, Table Output is very fast, close to the performance of the bulk loader steps.

After applying these settings, write speed improved noticeably.
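To make the rewrite concrete, here is a simplified Python illustration of what happens to the three statements above. It is not Connector/J's actual implementation. The real driver rewrites prepared-statement batches and must also keep each rewritten statement under `max_allowed_packet`. `rewrite_batch` is a hypothetical name, and its string parsing is deliberately naive.

```python
def rewrite_batch(statements: list) -> str:
    """Merge single-row INSERTs that share the same 'INSERT INTO ... VALUES' head
    into one multi-row INSERT (naive string handling, for illustration only)."""
    head = None
    rows = []
    for stmt in statements:
        body = stmt.strip().rstrip(";")
        # Split on the first ' VALUES ': the head is e.g. 'INSERT INTO t (c1,c2)'.
        stmt_head, sep, row = body.partition(" VALUES ")
        if not sep:
            raise ValueError(f"not an INSERT ... VALUES statement: {stmt!r}")
        if head is None:
            head = stmt_head
        elif stmt_head != head:
            raise ValueError("every statement in a batch must target the same table and columns")
        rows.append(row.strip())
    if head is None:
        raise ValueError("empty batch")
    # One statement (and one network round-trip) for the whole batch.
    return f"{head} VALUES {','.join(rows)};"


batch = [
    "INSERT INTO t (c1,c2) VALUES ('One',1);",
    "INSERT INTO t (c1,c2) VALUES ('Two',2);",
    "INSERT INTO t (c1,c2) VALUES ('Three',3);",
]
print(rewrite_batch(batch))
# INSERT INTO t (c1,c2) VALUES ('One',1),('Two',2),('Three',3);
```

The check that every statement shares the same head mirrors the point of the technique. Only rows for the same table and column list can be folded into one multi-row INSERT.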

In addition, the following parameters can be tuned on the target MySQL server:

innodb_flush_log_at_trx_commit = 0

sync_binlog = 0
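Both settings trade crash safety for write speed, so it seems prudent to revert them after the load, just like the timeouts. The minimal sketch below pairs each statement with its meaning, summarized from the MySQL reference manual. It assumes the restore value is 1 for both variables, which is the default in current MySQL versions. `FAST_LOAD`, `DURABLE` and `plan` are hypothetical names.

```python
# Fast-load values from this post, and the values that restore full durability
# (both variables default to 1 in current MySQL versions; check your server).
FAST_LOAD = {"innodb_flush_log_at_trx_commit": 0, "sync_binlog": 0}
DURABLE = {"innodb_flush_log_at_trx_commit": 1, "sync_binlog": 1}

# Meaning of each value, summarized from the MySQL reference manual.
MEANING = {
    ("innodb_flush_log_at_trx_commit", 0): "redo log written and flushed about once per second; "
                                           "up to about a second of commits can be lost on a crash",
    ("innodb_flush_log_at_trx_commit", 1): "redo log written and flushed at every commit",
    ("sync_binlog", 0): "server never syncs the binary log; the OS decides when it reaches disk",
    ("sync_binlog", 1): "binary log synced to disk before each transaction commits",
}


def plan(settings: dict) -> list:
    """Return (SET GLOBAL statement, meaning) pairs for the given settings."""
    return [(f"SET GLOBAL {name} = {value};", MEANING[(name, value)])
            for name, value in settings.items()]


for stmt, meaning in plan(FAST_LOAD) + plan(DURABLE):
    print(f"{stmt:<48} -- {meaning}")
```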

浙公网安备 33010602011771号
