Setting Up HDFS HA


1. Server role planning

| hd-01 (192.168.1.99) | hd-02 (192.168.1.100) | hd-03 (192.168.1.101) |
|---|---|---|
| NameNode | NameNode | |
| ZooKeeper | ZooKeeper | ZooKeeper |
| DataNode | DataNode | DataNode |
| ResourceManager | ResourceManager | |
| NodeManager | NodeManager | NodeManager |

2. Setup

Extract Hadoop 2.8.5

tar -zxvf hadoop-2.8.5.tar.gz

Configure the JDK path for Hadoop

# Set the JDK path in hadoop-env.sh, mapred-env.sh, and yarn-env.sh

export JAVA_HOME="/opt/jdk1.8.0_112"
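
The same export has to end up in all three env scripts, so the edit can be scripted. The following is a minimal sketch, not part of the original procedure: `set_java_home` is a hypothetical helper name, and it assumes the scripts live in one configuration directory such as `/opt/hadoop-2.8.5/etc/hadoop`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point the three env scripts at one JDK.
set_java_home() {
  local conf_dir="$1" java_home="$2" name file
  for name in hadoop-env.sh mapred-env.sh yarn-env.sh; do
    file="$conf_dir/$name"
    [ -f "$file" ] || continue   # skip scripts that do not exist
    # Drop any uncommented JAVA_HOME export, then append the desired one.
    grep -v '^[[:space:]]*export JAVA_HOME=' "$file" > "$file.tmp" || true
    cat "$file.tmp" > "$file"
    rm -f "$file.tmp"
    printf 'export JAVA_HOME="%s"\n' "$java_home" >> "$file"
  done
}

# Usage, assuming the layout used in this post:
#   set_java_home /opt/hadoop-2.8.5/etc/hadoop /opt/jdk1.8.0_112
```

Running it twice leaves exactly one `export JAVA_HOME=` line per file, so it is safe to repeat on every node.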

Configure hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<!-- Logical service name for the NameNode cluster (the nameservice) -->

<name>dfs.nameservices</name>

<value>ns1</value>

</property>

<property>

<!-- NameNodes that belong to the nameservice; each one is given an ID -->

<name>dfs.ha.namenodes.ns1</name>

<value>nn1,nn2</value>

</property>

<property>

<!-- RPC address and port of the NameNode nn1; RPC is used to communicate with the DataNodes -->

<name>dfs.namenode.rpc-address.ns1.nn1</name>

<value>hd-01:8020</value>

</property>

<property>

<!-- RPC address and port of the NameNode nn2; RPC is used to communicate with the DataNodes -->

<name>dfs.namenode.rpc-address.ns1.nn2</name>

<value>hd-02:8020</value>

</property>

<property>

<!-- HTTP address and port of the NameNode nn1, used by the web UI -->

<name>dfs.namenode.http-address.ns1.nn1</name>

<value>hd-01:50070</value>

</property>

<property>

<!-- HTTP address and port of the NameNode nn2, used by the web UI -->

<name>dfs.namenode.http-address.ns1.nn2</name>

<value>hd-02:50070</value>

</property>

<property>

<!-- JournalNode list through which the NameNodes share the edit log -->

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://hd-01:8485;hd-02:8485;hd-03:8485/ns1</value>

</property>

<property>

<!-- Directory on each JournalNode where the edit log is stored -->

<name>dfs.journalnode.edits.dir</name>

<value>/opt/hadoop-2.8.5/tmp/data/dfs/jn</value>

</property>

<property>

<!-- Proxy class that clients use to locate the currently active NameNode -->

<name>dfs.client.failover.proxy.provider.ns1</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<!-- Fencing method used to isolate the previously active NameNode during a failover -->

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/hadoop/.ssh/id_rsa</value>

</property>

</configuration>
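
Because the NameNode IDs appear in several property names, a typo in one of them is easy to miss. The sketch below is a hedged sanity check, not a Hadoop tool: `get_property` and `check_ha_config` are hypothetical helpers, and the reader is line-oriented (it assumes each `<name>` and `<value>` element sits on a single line, as in the file above) rather than a real XML parser. On a node with Hadoop installed, `bin/hdfs getconf -confKey dfs.nameservices` answers the same kind of question using Hadoop's own configuration loader.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of one property from a *-site.xml file.
get_property() {
  local file="$1" key="$2"
  awk -v key="$key" '
    /<name>/  { n = $0; sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n) }
    /<value>/ { if (n == key) { v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v); print v; exit } }
  ' "$file"
}

# Hypothetical check: every NameNode listed for the nameservice has an RPC address.
check_ha_config() {
  local file="$1" ns nn missing=0
  ns=$(get_property "$file" dfs.nameservices)
  [ -n "$ns" ] || { echo "dfs.nameservices is not set" >&2; return 1; }
  for nn in $(get_property "$file" "dfs.ha.namenodes.$ns" | tr ',' ' '); do
    if [ -z "$(get_property "$file" "dfs.namenode.rpc-address.$ns.$nn")" ]; then
      echo "missing dfs.namenode.rpc-address.$ns.$nn" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage, assuming the layout used in this post:
#   check_ha_config /opt/hadoop-2.8.5/etc/hadoop/hdfs-site.xml && echo "HA config looks consistent"
```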

Configure core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<!-- HDFS address; in HA mode this points to the nameservice instead of a single host -->

<name>fs.defaultFS</name>

<value>hdfs://ns1</value>

</property>

<property>

<!-- Base directory for Hadoop's temporary files -->

<name>hadoop.tmp.dir</name>

<value>/opt/hadoop-2.8.5/data/tmp</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>hd-01:2181,hd-02:2181,hd-03:2181</value>

</property>

</configuration>

Configure the slaves file

[root@hd-01 opt]# cat /opt/hadoop-2.8.5/etc/hadoop/slaves

hd-01

hd-02

hd-03

Distribute to the other nodes

scp -r /opt/hadoop-2.8.5/ hd-02:/opt/

scp -r /opt/hadoop-2.8.5/ hd-03:/opt/
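
The copy step can also be written as a loop over the target hosts. This is a sketch under the assumption that the configuration was prepared on hd-01 and that hd-02 and hd-03 are the targets; `distribute_hadoop` and the `RUN` dry-run switch are hypothetical names introduced for illustration.

```shell
#!/usr/bin/env bash
# RUN is a hypothetical dry-run switch: with RUN=echo the commands are
# printed instead of executed; leave it empty to really copy.
RUN=${RUN:-}

# Copy one directory to /opt/ on each host given after the source path.
distribute_hadoop() {
  local src="$1" host
  shift
  for host in "$@"; do
    $RUN scp -r "$src" "$host:/opt/"
  done
}

# Usage on hd-01 (dry run first, then for real):
#   RUN=echo distribute_hadoop /opt/hadoop-2.8.5/ hd-02 hd-03
#   distribute_hadoop /opt/hadoop-2.8.5/ hd-02 hd-03
```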

Start the JournalNode on each of the three machines

# On each of the three machines, give the hadoop user ownership of the install directory

chown -R hadoop:hadoop /opt/hadoop-2.8.5/

# Start the JournalNode; run on each of the three machines

sbin/hadoop-daemon.sh start journalnode

# Use jps to check that it started

jps
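
Reading the `jps` listing by eye gets tedious across three machines. A small sketch of how the check could be scripted follows; `missing_daemons` is a hypothetical helper that takes the `jps` output as text and prints every expected process name that is absent. It assumes the usual two-column `jps` format (process ID, then main class name).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each expected daemon that is NOT in the jps output.
#   $1      the text printed by jps
#   $2...   process names that should be running
missing_daemons() {
  local out="$1" name
  shift
  for name in "$@"; do
    printf '%s\n' "$out" \
      | awk -v n="$name" '$2 == n { found = 1 } END { exit !found }' \
      || echo "$name"
  done
}

# Usage on a node, right after starting the JournalNode:
#   missing_daemons "$(jps)" JournalNode
# No output means every listed daemon was found.
```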

Start ZooKeeper on all three nodes

# Start ZooKeeper; run on each of the three machines

bin/zkServer.sh start

# Check the ZooKeeper status

bin/zkServer.sh status
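
In a healthy three-node ensemble the status command reports one leader and two followers. The sketch below shows one way to extract and check that; `zk_mode` and `ensemble_ok` are hypothetical helpers, and the parsing assumes the status output contains a line of the form `Mode: leader` or `Mode: follower`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read zkServer.sh status output on stdin, print the mode.
zk_mode() {
  awk -F': ' '/^Mode:/ { print $2 }'
}

# Hypothetical check: given one mode per node, succeed only if there is
# exactly one leader and every other node is a follower.
ensemble_ok() {
  local leaders=0 followers=0 m
  for m in "$@"; do
    case "$m" in
      leader)   leaders=$((leaders + 1)) ;;
      follower) followers=$((followers + 1)) ;;
    esac
  done
  [ "$leaders" -eq 1 ] && [ "$followers" -eq $(( $# - 1 )) ]
}

# Usage on one node:
#   bin/zkServer.sh status 2>/dev/null | zk_mode
# After collecting the mode of each node by hand or over ssh:
#   ensemble_ok follower leader follower && echo "ensemble healthy"
```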

Start the NameNodes

# Format the NameNode before starting it for the first time

# 1. Format the NameNode on hd-01

bin/hdfs namenode -format

# Start the NameNode on hd-01

sbin/hadoop-daemon.sh start namenode

# 2. Initialize hd-02 by copying the metadata from hd-01 (bootstrapStandby takes the place of formatting)

bin/hdfs namenode -bootstrapStandby

# Start the NameNode on hd-02

sbin/hadoop-daemon.sh start namenode

Open the HDFS web UI; at this point both NameNodes are in the standby state.

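Switch the first NameNode to the active state:

Automatic failover is not enabled yet, so the plain command below is sufficient. Once automatic failover is enabled, haadmin refuses manual transitions unless the --forcemanual flag is added to force a NameNode into the Active state.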

bin/hdfs haadmin -transitionToActive nn1
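
The result of the transition can be confirmed from the command line with `hdfs haadmin -getServiceState`, without opening the web UI. The following is a minimal sketch: `ha_states` and the `HDFS_CMD` variable are hypothetical names, and the default path assumes the commands are run from `/opt/hadoop-2.8.5/`.

```shell
#!/usr/bin/env bash
# HDFS_CMD is a hypothetical override for the path of the hdfs launcher.
HDFS_CMD=${HDFS_CMD:-bin/hdfs}

# Print "<id>=<state>" for each NameNode ID; "unknown" if the query fails.
ha_states() {
  local nn
  for nn in "$@"; do
    printf '%s=%s\n' "$nn" \
      "$("$HDFS_CMD" haadmin -getServiceState "$nn" 2>/dev/null || echo unknown)"
  done
}

# Usage, from /opt/hadoop-2.8.5/:
#   ha_states nn1 nn2
# After the transition, nn1 should report active and nn2 standby.
```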

3. Configure automatic failover

Automatic failover is implemented with the ZooKeeper ensemble. Shut the cluster down before configuring it; the change cannot be made while HDFS is running.

Stop the NameNode, DataNode, JournalNode, and ZooKeeper

# Run on each of the three machines, from the corresponding install directory

sbin/hadoop-daemon.sh stop namenode

sbin/hadoop-daemon.sh stop datanode

sbin/hadoop-daemon.sh stop journalnode

bin/zkServer.sh stop

Modify hdfs-site.xml

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

Modify core-site.xml

<property>

<name>ha.zookeeper.quorum</name>

<value>hd-01:2181,hd-02:2181,hd-03:2181</value>

</property>

Distribute hdfs-site.xml and core-site.xml to the other machines

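scp /opt/hadoop-2.8.5/etc/hadoop/hdfs-site.xml hd-02:/opt/hadoop-2.8.5/etc/hadoop/

scp /opt/hadoop-2.8.5/etc/hadoop/core-site.xml hd-02:/opt/hadoop-2.8.5/etc/hadoop/

scp /opt/hadoop-2.8.5/etc/hadoop/hdfs-site.xml hd-03:/opt/hadoop-2.8.5/etc/hadoop/

scp /opt/hadoop-2.8.5/etc/hadoop/core-site.xml hd-03:/opt/hadoop-2.8.5/etc/hadoop/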

Start ZooKeeper on all three machines

bin/zkServer.sh start

Create the znode in ZooKeeper

cd /opt/hadoop-2.8.5/

bin/hdfs zkfc -formatZK

Start HDFS, the JournalNodes, and zkfc

# Run on hd-01

cd /opt/hadoop-2.8.5/

sbin/start-dfs.sh

4. Test automatic failover and data sharing

# Upload a file from hd-01

cd /opt/hadoop-2.8.5/

bin/hdfs dfs -put /opt/data/wc.input /

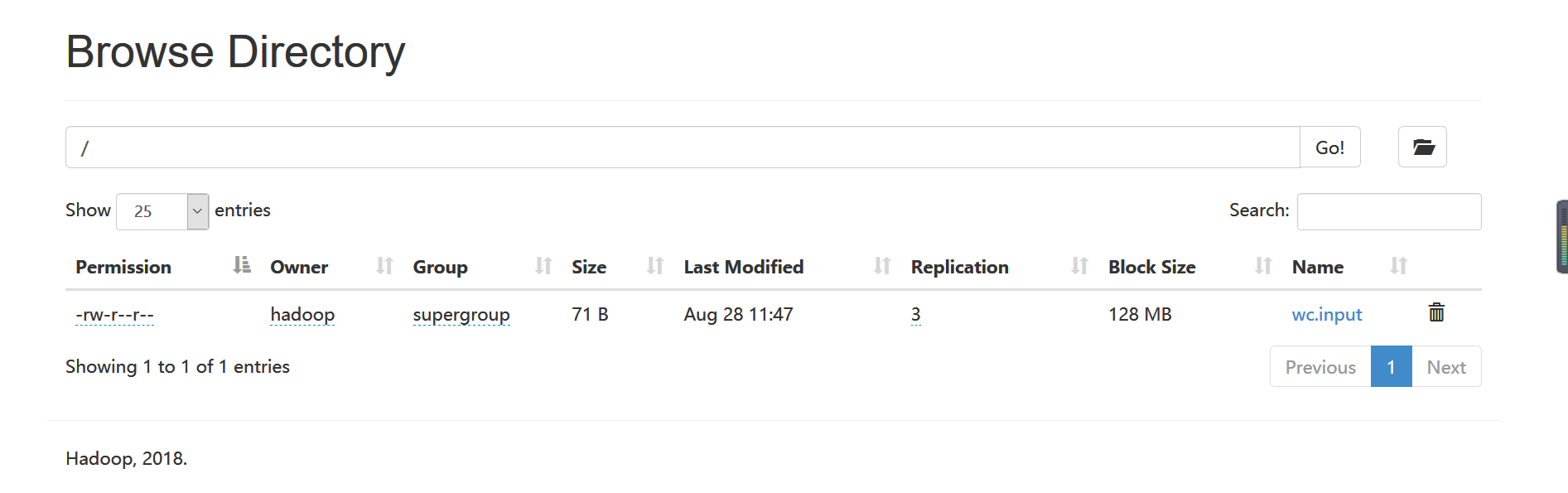

The uploaded file is visible at http://192.168.1.99:50070/explorer.html#/

Kill the NameNode on hd-01

jps | grep NameNode | awk '{print $1}' | xargs kill -9

Check on nn2 whether the file is still visible
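
The failover takes a moment, so the check on nn2 is easier to script as a poll. This is a sketch under stated assumptions, not the procedure used in this post: `wait_for_active` and `HDFS_CMD` are hypothetical names, the 60-second default timeout is arbitrary, and `/wc.input` is the path that results from the upload step earlier in this section.

```shell
#!/usr/bin/env bash
# HDFS_CMD is a hypothetical override for the path of the hdfs launcher.
HDFS_CMD=${HDFS_CMD:-bin/hdfs}

# Poll once per second until the given NameNode reports "active".
# Returns 0 on success, 1 if the timeout (seconds, default 60) expires.
wait_for_active() {
  local nn="$1" timeout="${2:-60}" waited=0 state
  while [ "$waited" -lt "$timeout" ]; do
    state=$("$HDFS_CMD" haadmin -getServiceState "$nn" 2>/dev/null || true)
    [ "$state" = "active" ] && return 0
    sleep 1
    waited=$((waited + 1))
  done
  return 1
}

# Usage on hd-02, from /opt/hadoop-2.8.5/, after killing the NameNode on hd-01:
#   wait_for_active nn2 60 && bin/hdfs dfs -ls /wc.input
```

If the listing succeeds, the file written through nn1 is being served by nn2, which covers both the failover and the shared metadata.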

Verified: file synchronization between nn1 and nn2 and automatic failover both work.
