数据采集第四次作业

数据采集第四次作业

152301219 李志阳

作业①:

要求:

熟练掌握 Selenium 查找HTML元素、爬取Ajax网页数据、等待HTML元素等内容。

使用Selenium框架+ MySQL数据库存储技术路线爬取“沪深A股”、“上证A股”、“深证A股”3个板块的股票数据信息。

候选网站:东方财富网:http://quote.eastmoney.com/center/gridlist.html#hs_a_board

输出信息:MYSQL数据库存储和输出格式如下,表头应是英文命名例如:序号id,股票代码:bStockNo……,由同学们自行定义设计表头

代码逻辑

代码:

点击查看代码

import time

import pymysql

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver.chrome.service import Service

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

db = pymysql.connect(

host="localhost",

user="root",

password="Lzy.0417",

database="stock",

charset="utf8mb4"

)

cursor = db.cursor()

chrome_path = r"D:\pythonlearning\python3.1\Scripts\chromedriver-win64\chromedriver.exe"

service = Service(chrome_path)

options = webdriver.ChromeOptions()

options.add_argument("--start-maximized")

options.add_argument("--disable-blink-features=AutomationControlled")

driver = webdriver.Chrome(service=service, options=options)

boards = {

"hs": "http://quote.eastmoney.com/center/gridlist.html#hs_a_board",

"sh": "http://quote.eastmoney.com/center/gridlist.html#sh_a_board",

"sz": "http://quote.eastmoney.com/center/gridlist.html#sz_a_board"

}

def scroll_to_bottom():

last = 0

max_scroll_attempts = 5

attempts = 0

while attempts < max_scroll_attempts:

driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")

time.sleep(2)

new = driver.execute_script("return document.body.scrollHeight")

if new == last:

break

last = new

attempts += 1

print(f"滚动到底部完成,共滚动 {attempts} 次。")

def parse_and_save(board_name):

print("等待股票列表加载中……")

WebDriverWait(driver, 20).until(

EC.presence_of_element_located((By.CSS_SELECTOR, "tbody tr"))

)

rows = driver.find_elements(By.CSS_SELECTOR, "tbody tr")

print(f"【{board_name}】找到 {len(rows)} 条数据")

for row in rows:

cols = row.find_elements(By.TAG_NAME, "td")

if len(cols) < 14:

continue

data = {

"bStockNo": cols[1].text,

"bName": cols[2].text,

"bPrice": cols[4].text,

"bChangePercent": cols[5].text,

"bChangeAmount": cols[6].text,

"bVolume": cols[7].text,

"bTurnover": cols[8].text,

"bAmplitude": cols[9].text,

"bHigh": cols[10].text,

"bLow": cols[11].text,

"bOpen": cols[12].text,

"bPrevClose": cols[13].text,

"board": board_name

}

sql = """

INSERT INTO stock_info(

bStockNo, bName, bPrice, bChangePercent, bChangeAmount,

bVolume, bTurnover, bAmplitude, bHigh, bLow, bOpen,

bPrevClose, board

)

VALUES (%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)

"""

cursor.execute(sql, list(data.values()))

db.commit()

db.rollback()

try:

for board, url in boards.items():

driver.get(url)

print("当前页面标题:", driver.title)

print("当前 URL:", driver.current_url)

time.sleep(3)

scroll_to_bottom()

parse_and_save(board)

finally:

driver.quit()

db.close()

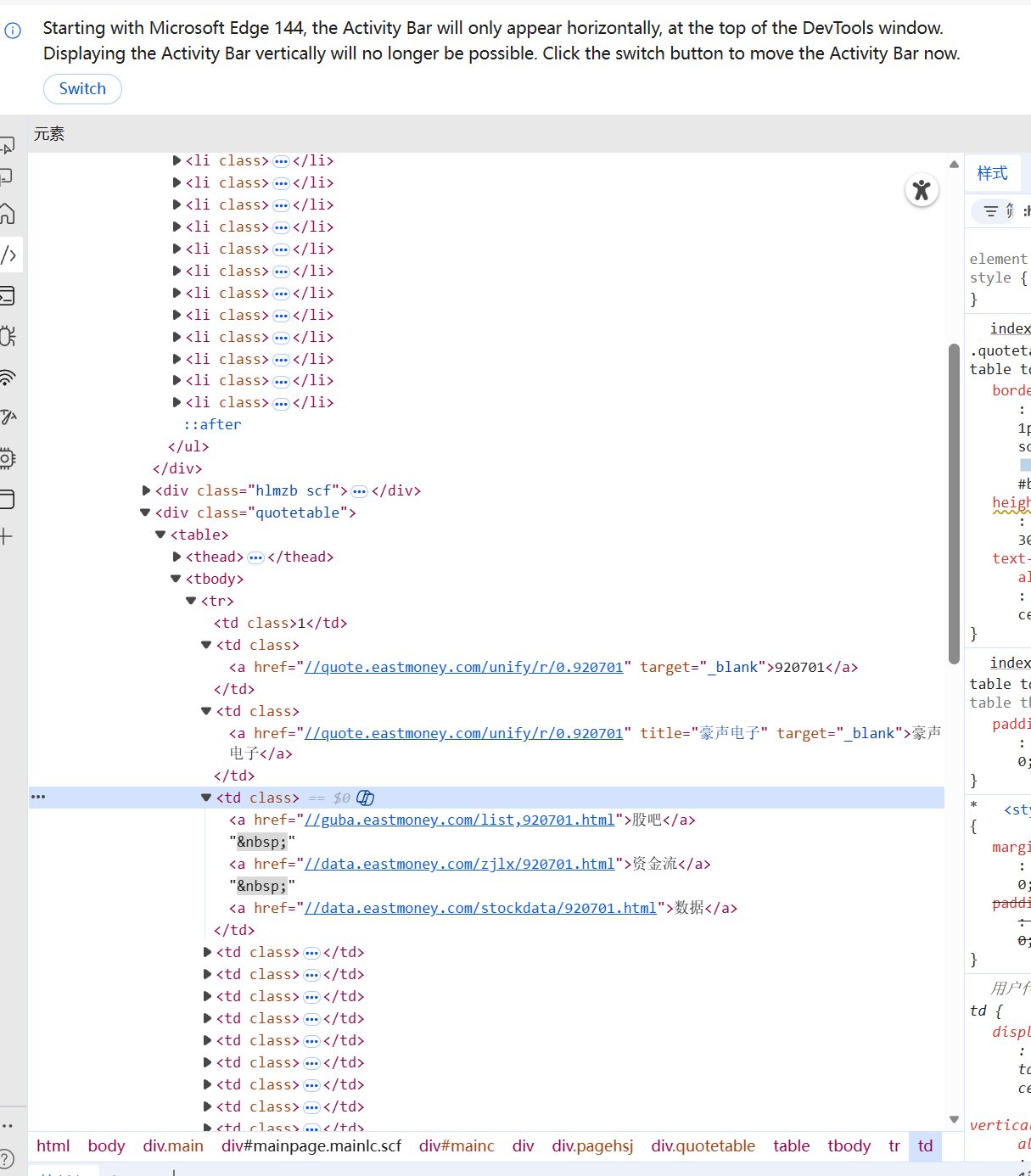

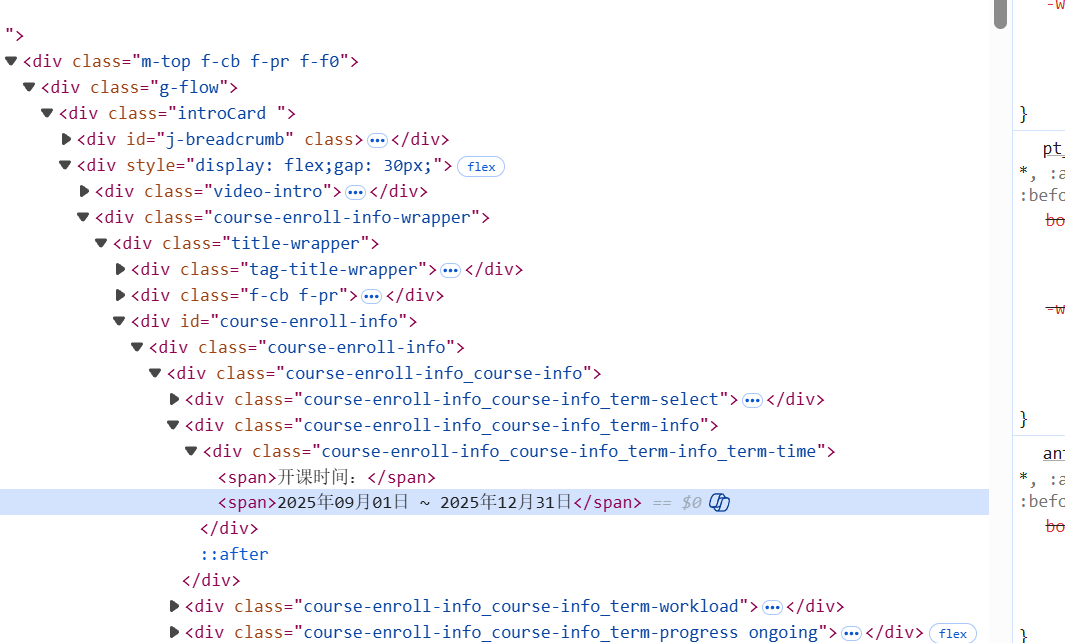

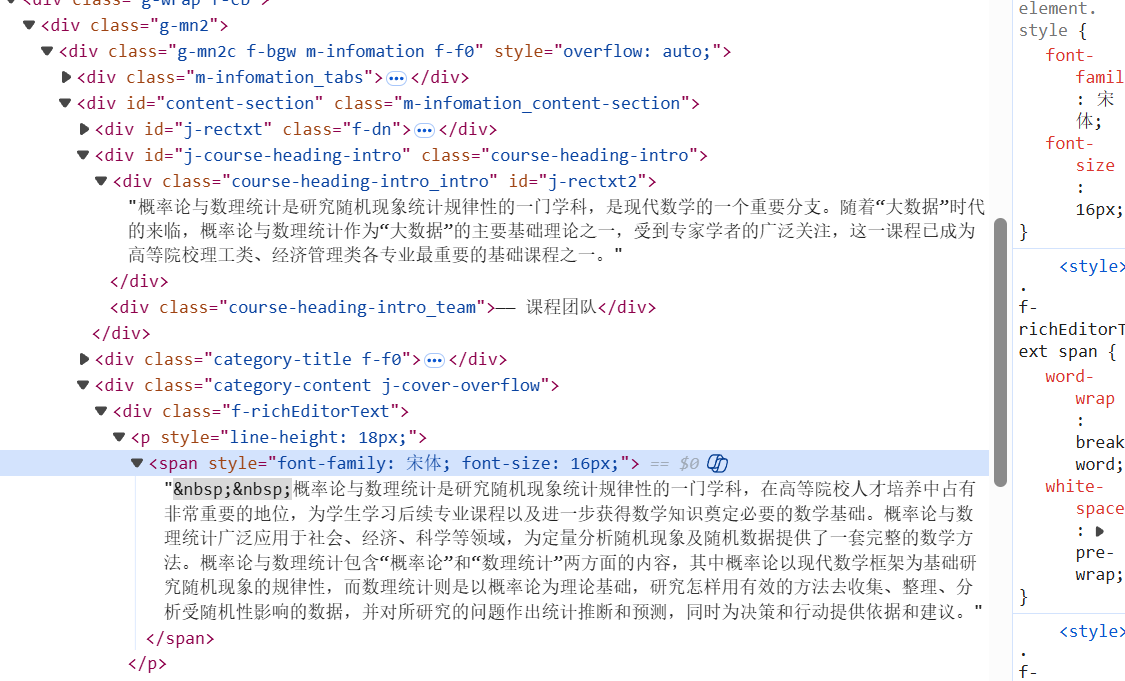

通过分析网页的HTML结构,发现:股票数据都在标签内,每一只股票对应一个标签,使用"tbody tr"这个CSS选择器就能定位到所有股票行

直接按标签名"td"找所有单元格通过索引位置(第0-13列)获取对应数据

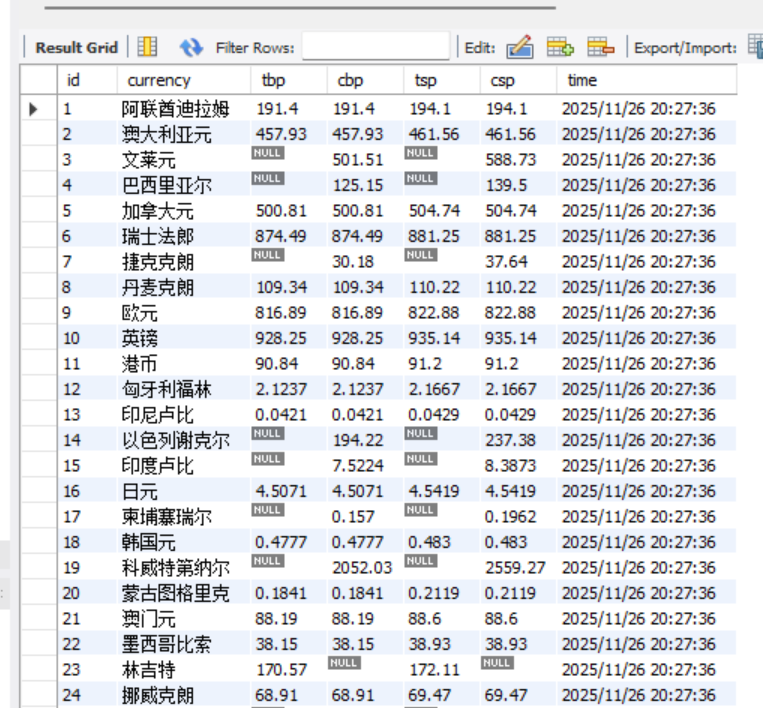

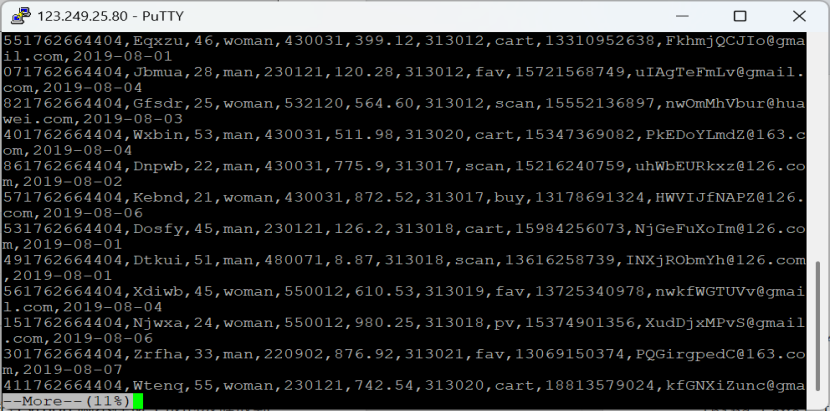

代码运行结果

心得体会:

通过编写这个爬虫程序,我深刻体会到理论与实践相结合的重要性。一开始面对动态加载的网页感到无从下手,但通过分析DOM结构和实际测试,逐渐掌握了Selenium处理JavaScript渲染内容的技巧。特别是学会了如何通过滚动页面触发数据加载,以及使用CSS选择器精准定位元素。数据库操作部分让我明白了数据持久化的必要性,从简单的数据采集到完整的入库流程,构成了一个完整的数据获取系统。这次实践不仅提升了我的编程能力,更重要的是培养了解构复杂问题、分步骤解决的思维方式。遇到反爬虫机制时的调试过程,也让我对网络爬虫的伦理和技术边界有了更深的理解。

作业②:

要求:

熟练掌握 Selenium 查找HTML元素、实现用户模拟登录、爬取Ajax网页数据、等待HTML元素等内容。

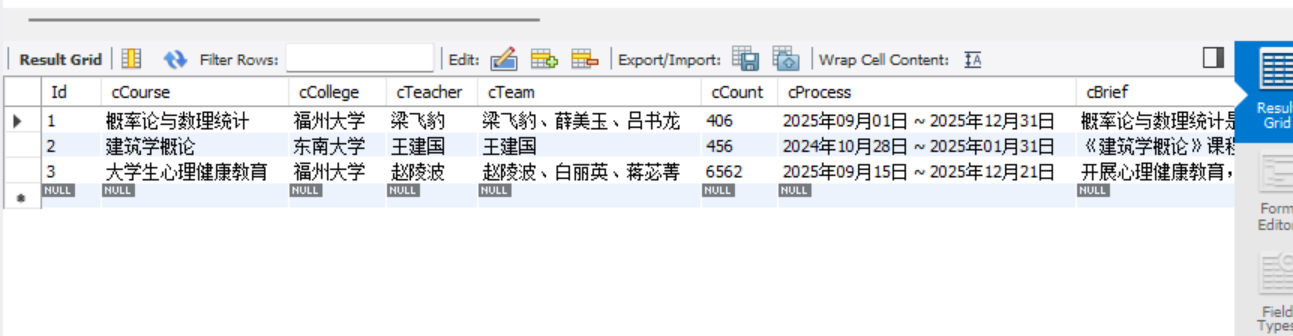

使用Selenium框架+MySQL爬取中国mooc网课程资源信息(课程号、课程名称、学校名称、主讲教师、团队成员、参加人数、课程进度、课程简介)

候选网站:中国mooc网:https://www.icourse163.org

输出信息:MYSQL数据库存储和输出格式

代码

点击查看代码

import time

import re

import pymysql

from selenium import webdriver

from selenium.webdriver.chrome.service import Service as ChromeService

from selenium.webdriver.chrome.options import Options as ChromeOptions

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

from tqdm import tqdm

import pymysql.err

CHROME_DRIVER_PATH = r"D:\pythonlearning\python3.1\Scripts\chromedriver-win64\chromedriver.exe"

# 2. MySQL 数据库配置

DB_CONFIG = {

'host': 'localhost',

'user': 'root',

'password': 'Lzy.0417',

'database': 'stock',

'charset': 'utf8mb4',

}

# 3. Cookies 字符串 (从浏览器获取的有效Cookies)

MOOC_COOKIES_STR = """

WM_TID=xYA2T62WLDVFFFABFAPXV589L95M19iR; NTESSTUDYSI=3d0f41ed4fd8486f90e7ba2a142c5a95; EDUWEBDEVICE=49bf8f621fcb41a6abbe44762b248bf7; utm="eyJjIjoiIiwiY3QiOiIiLCJpIjoiIiwibSI6IiIsInMiOiIiLCJ0IjoiIn0=|aHR0cHM6Ly9lZHUuY25ibG9ncy5jb20v"; hb_MA-A976-948FFA05E931_source=edu.cnblogs.com; Hm_lvt_77dc9a9d49448cf5e629e5bebaa5500b=1764585035; HMACCOUNT=DFB509C7AFB0A519; WM_NI=HY4R7n0J46VM3N%2BM7DVXMN98H8zBVWhrm0Pd%2F%2BlELCwAHn48J7EnYElAGVZKKmkOAjoJmci4wOo3e%2F%2Fh1qaHPH97%2F5Vxk1X%2FS9HnN22cGLhQ6EvPO%2BxLNhG78Lv5%2B6cVaXY%3D; WM_NIKE=9ca17ae2e6ffcda170e2e6eeb0f46b8da7f7a8db7db3b88eb7d84b838e8eb0c26790958cb1fc39a9bf8c89cc2af0fea7c3b92a8c87fcb3b666f496fcd7d547f192e5aeb650968883afbc348ce8b6a3d55dacaea3d1d370edf5a4a6cf49b59f888fb7348ae98b8fdc4fb09f89b7d94189b3a5b0c462ad96a384ec40b591a1d4e825edb8ff92e4449498f7d8ae3f96a8a5d2db2591e7888fcd3af2bd89adeb5bf69bbab1ce21bc948289c63dfc94bad6aa3ea18aafb9dc37e2a3; __yadk_uid=enEQ9n66EmyqjJVwFpZcaqEzOzgYK; NTES_YD_SESS=KLPG9YVcE22FLfW6U5fsc9ldWZG9elFZUmY.zf9PrwozqC5iqgFBt6nRd8n4_VHOxNu_iNKvpul6Aqxdi2L7s.3jQ0Jox7vDmNnmUE.TRELdae3YZ1QDAgXWFDDjZL6m.sbPpF2GpIPR8j2xQTbQ4mx8TJSvZOVOXH3DhPEZpqJUi1QB3q5VVhQsbHT_ka8HKlcD1OK27dCHNGctncidpHpxgcZenxAo5; NTES_YD_PASSPORT=XeZYrrQymveIa3N6xWEcmnOa60sRUNhgbZtsCaM_QjA1wsISwc4gjpothvou2GRqfer2SeyXbrNfYd2FbScp6.WuW7I.gKSesxN6fDCn9lMraH.Bcywo6HLlawLJfjQ28KRrRRsteC11gloT1YOlObfwMfrioVGCqttw_FlqhGjc5fu6P5i8z9hak9jUgfH7Ucor9hcx57L6SoA0A5TuKe_3; STUDY_INFO="yd.c6b2e576984d4ddb8@163.com|8|1559006252|1764585169725"; STUDY_SESS="3SOQ5b/bk0/5hapHjnBMgYiXPA3CoYtK90bB2jIZgnlKxEErNp7TnkDhwaJBAid4avPVl4LrJNYbbA2xWbLcAwTfR6lbBMC3JRiVByMRIZGUhk8GglD7mu0+voTHohZXeyOv6tZAg6v0/Gd5eUVnCQj8GwnpqA6PXKNZGXvuOuwLhur2Nm2wEb9HcEikV+3FTI8+lZKyHhiycNQo+g+/oA=="; STUDY_PERSIST="Y0un9yOE6Ms7zfGad1a5839iJWZRduzz/T1KOs2b0KDU8V4KJSZIBEVgLeJN1qepkmxk66l8wGZ4abqPzlfKSz2jWp4ZcFaCNQ5pXbIBWNYT8wXlQYtWjLsOXT2jEa5hGVfYNW0MU7QwU1JKh5DSyqMqHCQkmXpOG5xcWHcYzld9SdoArPjLh3WYmRaRif6jos4Hmg11X9HqR/UE4xcq6lVL7CqcChhzHW8mO+9wBfXZgpjCC7Iso4RP9U87vJE8LtaQzUT1ovP2MqtW5+L3Hw+PvH8+tZRDonbf3gEH7JU="; NETEASE_WDA_UID=1559006252#|#1695776594079; Hm_lpvt_77dc9a9d49448cf5e629e5bebaa5500b=1764586681

"""

# 【数据库操作函数】

def connect_db():

"""连接到 MySQL 数据库"""

conn = pymysql.connect(**DB_CONFIG)

return conn

def create_table_if_not_exists(conn):

cursor = conn.cursor()

sql = """

CREATE TABLE IF NOT EXISTS mooc_courses (

Id INT AUTO_INCREMENT PRIMARY KEY,

cCourse VARCHAR(255) NOT NULL,

cCollege VARCHAR(255) NOT NULL,

cTeacher VARCHAR(255),

cTeam TEXT,

cCount INT,

cProcess VARCHAR(255),

cBrief TEXT,

UNIQUE KEY unique_course (cCourse, cCollege)

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;

"""

cursor.execute(sql)

conn.commit()

def truncate_table(conn):

cursor = conn.cursor()

sql = "TRUNCATE TABLE mooc_courses;"

cursor.execute(sql)

conn.commit()

cursor.close()

def insert_course(conn, cursor, course_data):

"""插入课程数据到 mooc_courses 表。"""

sql = """

INSERT INTO mooc_courses

(cCourse, cCollege, cTeacher, cTeam, cCount, cProcess, cBrief)

VALUES (%s, %s, %s, %s, %s, %s, %s)

"""

participant_count = course_data[4]

if not isinstance(participant_count, int) and participant_count is not None:

count_match = re.search(r'\d+', str(participant_count))

participant_count = int(count_match.group(0)) if count_match else None

process_value = course_data[5] if course_data[5] != "" else None

data_to_insert = course_data[:5] + (process_value,) + course_data[6:]

cursor.execute(sql, data_to_insert)

conn.commit()

# 【Selenium 爬取函数】

def parse_list_info(card):

title = "N/A"

school = "N/A"

progress = ""

detail_link = ""

WebDriverWait(card.parent, 5).until(EC.visibility_of(card))

title_elem = card.find_element(By.CSS_SELECTOR, ".title")

title = title_elem.text.strip() if title_elem else "N/A"

school_elem = card.find_element(By.CSS_SELECTOR, ".school")

school = school_elem.text.strip() if school_elem else "N/A"

link_elem = card.find_element(By.CSS_SELECTOR, ".menu a")

detail_link = link_elem.get_attribute("href")

if detail_link and detail_link.startswith('//'):

detail_link = "https:" + detail_link

elif detail_link and detail_link.startswith('/course/'):

detail_link = "https://www.icourse163.org" + detail_link

detail_link = detail_link.split('#')[0]

return title, school, progress, detail_link

def parse_detail(driver, url):

teacher = ""

team = ""

count = None

brief = ""

process = ""

driver.get(url)

WebDriverWait(driver, 15).until(

EC.presence_of_element_located(

(By.CSS_SELECTOR, ".course-title-content_course-title-content_3o0Mv, .um-list-slider_con_item")

)

)

time.sleep(2)

# --- 1. 提取教师 (cTeacher) 和团队 (cTeam) ---

teacher_elements = driver.find_elements(By.CSS_SELECTOR, ".um-list-slider_con_item h3")

teacher_names = [t.text.strip() for t in teacher_elements if t.text.strip()]

if teacher_names:

teacher = teacher_names[0]

team = "、".join(teacher_names)

# --- 2. 提取报名人数 (cCount) ---

count_elem = driver.find_element(By.CSS_SELECTOR, "span.count")

count_text = count_elem.text.strip()

count_match = re.search(r'\d+', count_text)

count = int(count_match.group(0)) if count_match else None

# --- 3. 提取 cProcess(开课时间) ---

process = driver.find_element(

By.XPATH, "//span[contains(text(), '开课时间')]/following-sibling::span"

).text.strip()

# --- 4. 提取课程简介 (cBrief) ---

brief_elem = driver.find_elements(By.CSS_SELECTOR, "div.f-richEditorText, div[class*='content_wrap']")

if brief_elem:

brief = brief_elem[0].text.strip()

return teacher, team, count, brief, process

# 【主要流程控制函数】

def mooc_login_via_cookies(driver):

"""加载 Cookies 模拟登录"""

driver.get("https://www.icourse163.org/")

time.sleep(1)

driver.delete_all_cookies()

cookie_items = MOOC_COOKIES_STR.replace("\n", "").split(';')

for item in cookie_items:

if item:

name, value = item.strip().split('=', 1)

driver.add_cookie({

'name': name.strip(),

'value': value.strip(),

'domain': '.icourse163.org'

})

return True

def scrape_logged_in_courses():

# 1. 数据库准备

conn = connect_db()

create_table_if_not_exists(conn)

truncate_table(conn)

cursor = conn.cursor()

# 2. WebDriver 初始化

options = ChromeOptions()

options.add_argument('--disable-application-cache')

options.add_experimental_option("detach", True)

service = ChromeService(executable_path=CHROME_DRIVER_PATH)

driver = webdriver.Chrome(service=service, options=options)

driver.maximize_window()

# 3. 登录

mooc_login_via_cookies(driver)

# 4. 访问课程列表页面

course_list_url = "https://www.icourse163.org/home.htm#/home/course/studentcourse"

driver.get(course_list_url)

# 等待页面加载

WebDriverWait(driver, 20).until(EC.url_contains("studentcourse"))

WebDriverWait(driver, 20).until(EC.presence_of_element_located((By.CSS_SELECTOR, ".course-panel-body-wrapper")))

# 模拟下拉加载全部课程

last_height = driver.execute_script("return document.body.scrollHeight")

max_scrolls = 10

scroll_count = 0

while True:

driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")

time.sleep(2)

new_height = driver.execute_script("return document.body.scrollHeight")

scroll_count += 1

if new_height == last_height or scroll_count >= max_scrolls:

break

last_height = new_height

course_cards = driver.find_elements(By.CSS_SELECTOR, ".course-panel-body-wrapper .course-card-wrapper")

time.sleep(1)

print(f"\n共找到 {len(course_cards)} 门课程。开始爬取详情...")

course_list_data = []

for i, card in enumerate(course_cards):

title, school, progress_placeholder, link = parse_list_info(card)

course_list_data.append({'title': title, 'school': school, 'link': link})

for course_info in tqdm(course_list_data, desc="处理课程详情"):

course_name = course_info['title']

college_name = course_info['school']

detail_url = course_info['link']

if detail_url and detail_url not in ["N/A", ""]:

teacher_name, team_str, participant_count, brief, process = parse_detail(driver, detail_url)

course_data = (course_name, college_name, teacher_name, team_str, participant_count, process, brief)

insert_course(conn, cursor, course_data)

# 关闭资源

driver.quit()

cursor.close()

conn.close()

if __name__ == "__main__":

scrape_logged_in_courses()

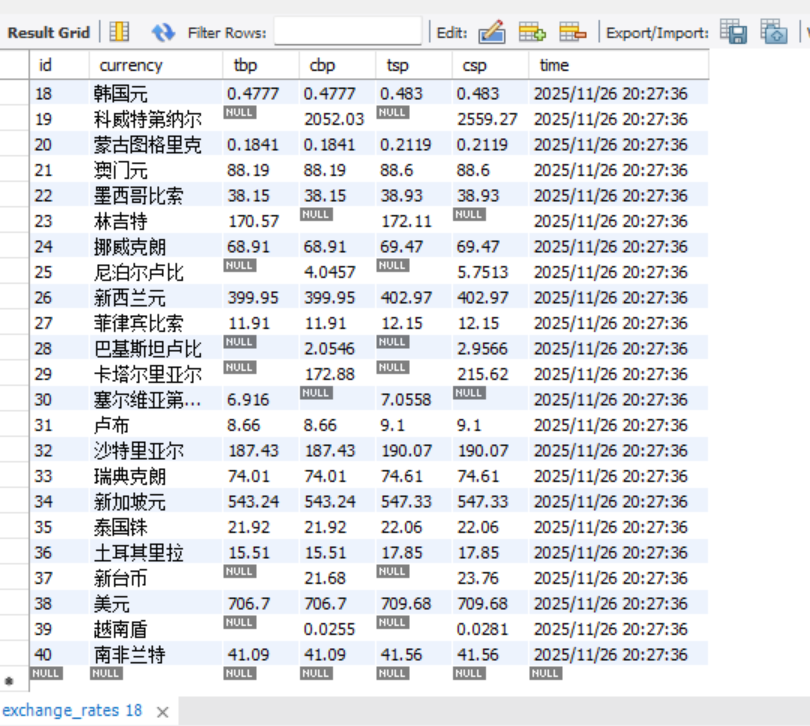

找到进入每个课程详情的位置

定位每个字段

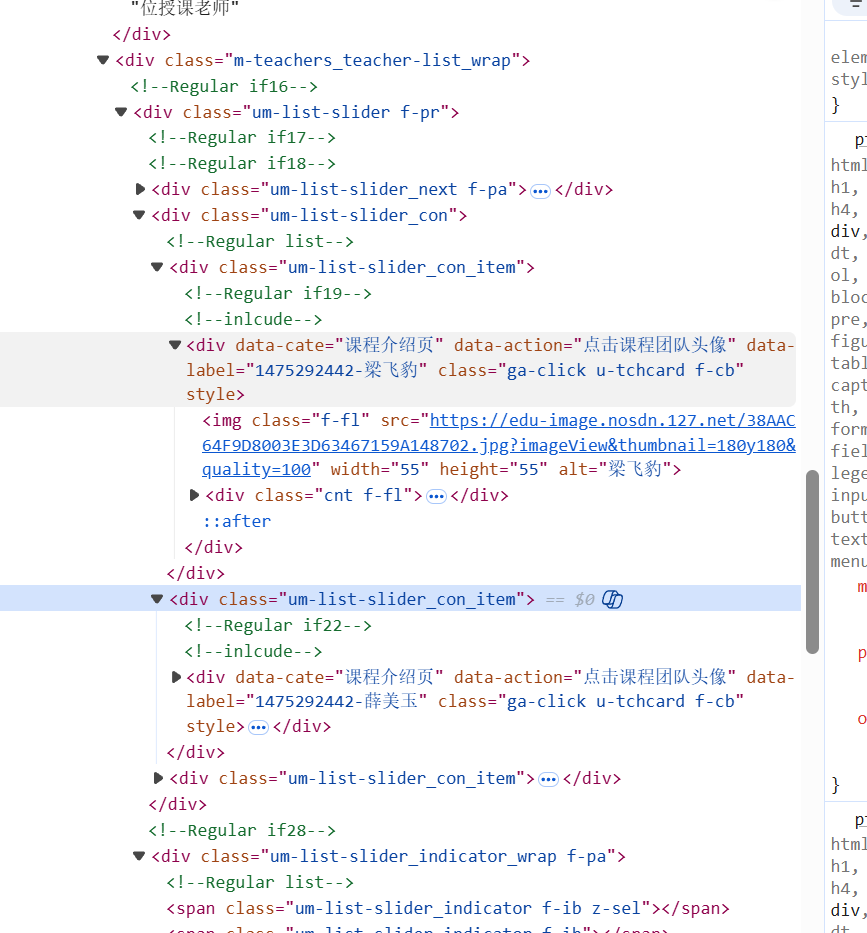

将第一位爬取的老师作为主讲教师,其余归为团队

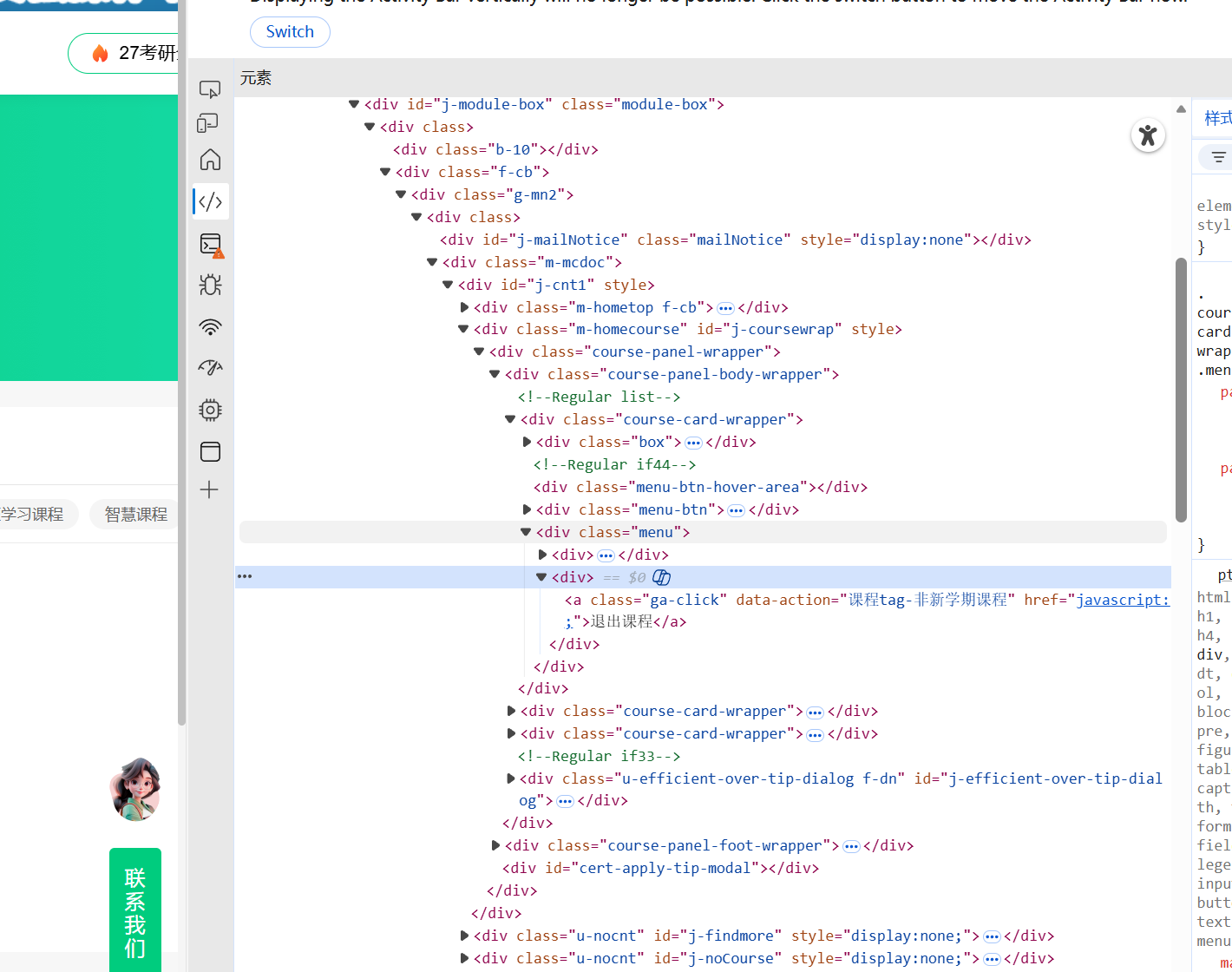

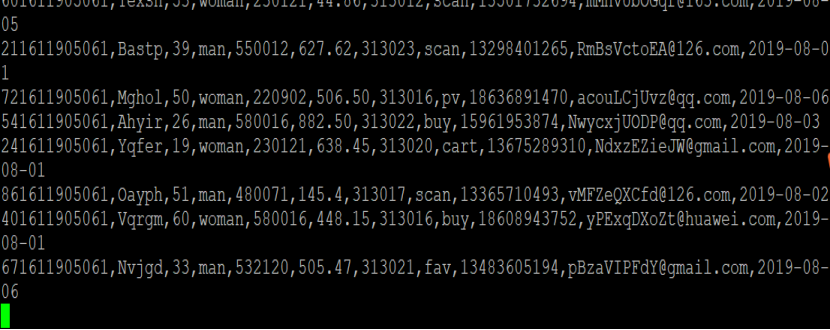

运行结果

心得体会

期初我首先由于没有给代码添加网页的cookies,导致网页一进入就显示无访问,随后添加了页面cookies成功用selenium模拟登录了个人界面,分别进入每门课程爬取信息,这次编写中国大学 MOOC 课程爬取代码的过程Selenium 的使用让我熟悉了网页元素定位、动态加载处理和 Cookies 登录的技巧,尤其是处理课程详情页的多类元素时,XPath 和 CSS 选择器的灵活运用至关重要。整个过程不仅提升了我的 Python 编程能力,更培养了我拆解问题、逐步优化的思维方式,也让我认识到实际项目中代码可读性和可维护性的重要价值

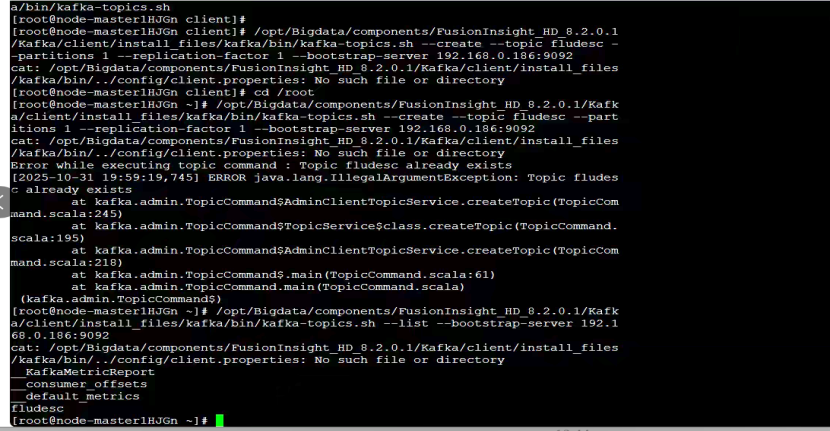

作业③:

要求:

掌握大数据相关服务,熟悉Xshell的使用

完成文档 华为云_大数据实时分析处理实验手册-Flume日志采集实验(部分)v2.docx 中的任务,即为下面5个任务,具体操作见文档。

环境搭建:

任务一:开通MapReduce服务

实时分析开发实战:

任务一:Python脚本生成测试数据

任务二:配置Kafka

任务三: 安装Flume客户端

任务四:配置Flume采集数据

心得体会

这次实战让我系统掌握了数据采集全流程:用 Python 生成测试数据时,学会了构造贴合业务的结构化数据;配置 Kafka 理解了消息队列的核心作用;Flume 的安装与配置则让我掌握了日志采集的灵活策略。

gitee地址:https://gitee.com/li-zhiyang-dejavu/2025_crawl_project/tree/master/4

浙公网安备 33010602011771号

浙公网安备 33010602011771号