PyTorch Practice (8): Plotting Training and Test Curves over Multiple Epochs

Plot the Loss and Accuracy curves for training and testing to judge at a glance whether the model is training well.

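| Plot | What it shows |
|---|---|
| Loss curve (loss function) | How wrong the model's predictions are during training and testing; lower is better. |
| Accuracy curve | The proportion of predictions the model gets right; higher is better. |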
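
Each point on these curves is an average over a whole dataset. As a minimal sketch of what goes into one point, the snippet below computes the cross-entropy loss (`nn.CrossEntropyLoss`, the loss used in the script later in this post) and the accuracy for a hand-made batch of two samples. The logits and labels are made-up values chosen only for illustration.

```python
import torch
from torch import nn

# Made-up logits for 2 samples and 2 classes (illustrative values only)
logits = torch.tensor([[2.0, 0.0],
                       [0.0, 2.0]])
targets = torch.tensor([0, 0])  # both samples truly belong to class 0

loss_fn = nn.CrossEntropyLoss()  # averages the per-sample losses by default
loss = loss_fn(logits, targets).item()

# Accuracy: fraction of samples whose highest logit matches the label
correct = (logits.argmax(1) == targets).type(torch.float).sum().item()
accuracy = correct / len(targets)

print(f"loss={loss:.4f}, accuracy={accuracy:.2f}")  # loss=1.1269, accuracy=0.50
```

The first sample is confidently right and contributes a small loss (about 0.127); the second is confidently wrong and contributes a large one (about 2.127). Loss therefore also penalizes how confident the wrong answers are, while accuracy only counts right versus wrong.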

What can you tell from the plots?

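1. Is the model converging?

   - Does the loss decrease steadily?
   - Does the accuracy rise steadily?

   If the loss stays high, the model is not learning anything; the network design or hyperparameters such as the learning rate may be the problem.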

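2. Is the model overfitting?

   - If training accuracy is high but test accuracy is low, the model has only memorized the training data instead of learning to generalize (overfitting).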

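3. Is the model underfitting?

   - If both training and test accuracy are low, the model has not learned the task well yet: it may be too simple, or it may not have been trained for enough epochs.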
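
The three checks above can be turned into a rough rule of thumb that reads the recorded metric lists. This is only an illustration: the function name `diagnose` and the thresholds `gap=0.1` and `low=0.7` are hypothetical values chosen for this example, not standard cut-offs, and would need adjusting for each task.

```python
def diagnose(train_losses, train_accs, test_accs, gap=0.1, low=0.7):
    """Hypothetical rule of thumb based on the recorded per-epoch metrics.

    gap: train/test accuracy difference treated as overfitting (assumed value)
    low: accuracy below which both sets count as "low" (assumed value)
    """
    notes = []
    # 1. Convergence: has the training loss gone down since the first epoch?
    if len(train_losses) > 1 and train_losses[-1] >= train_losses[0]:
        notes.append("not converging")
    train_acc, test_acc = train_accs[-1], test_accs[-1]
    # 2. Overfitting: final training accuracy well above final test accuracy
    if train_acc - test_acc > gap:
        notes.append("overfitting")
    # 3. Underfitting: both final accuracies are low
    if train_acc < low and test_acc < low:
        notes.append("underfitting")
    return notes or ["looks fine"]


# Usage with made-up numbers (not real training results)
print(diagnose([1.2, 0.8, 0.5], [0.60, 0.80, 0.98], [0.58, 0.75, 0.80]))  # ['overfitting']
```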

neural_network_model.py

```python
from torch import nn

# Define the neural network model
class NeuralNetwork(nn.Module):
    def __init__(self):
        super().__init__()
        self.flatten = nn.Flatten()  # flatten each 1x28x28 image into a 784-element vector
        self.linear_relu_stack = nn.Sequential(
            nn.Linear(28*28, 128),
            nn.ReLU(),
            nn.Linear(128, 64),
            nn.ReLU(),
            nn.Linear(64, 10)  # final layer: 10 class outputs (logits)
        )

    def forward(self, x):
        x = self.flatten(x)
        logits = self.linear_relu_stack(x)
        return logits
```
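
Before training, it can help to push a fake batch through the model to confirm that the shapes match what the training script expects. A minimal sketch, assuming a random tensor shaped like one FashionMNIST batch from the DataLoader (64 grayscale 1x28x28 images); the class is repeated here so the snippet runs on its own.

```python
import torch
from torch import nn

# Same architecture as in neural_network_model.py, repeated so this snippet is self-contained
class NeuralNetwork(nn.Module):
    def __init__(self):
        super().__init__()
        self.flatten = nn.Flatten()
        self.linear_relu_stack = nn.Sequential(
            nn.Linear(28*28, 128), nn.ReLU(),
            nn.Linear(128, 64), nn.ReLU(),
            nn.Linear(64, 10),
        )

    def forward(self, x):
        return self.linear_relu_stack(self.flatten(x))

model = NeuralNetwork()
dummy = torch.rand(64, 1, 28, 28)  # fake batch: 64 random 1x28x28 "images"
logits = model(dummy)
num_params = sum(p.numel() for p in model.parameters())

print(logits.shape)  # torch.Size([64, 10])
print(num_params)    # 109386
```

The parameter count follows from weights plus biases of the three linear layers: (784×128 + 128) + (128×64 + 64) + (64×10 + 10) = 100480 + 8256 + 650 = 109386.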

loss_acc_plot.py

```python
import torch
from torch import nn
from torch.utils.data import DataLoader
from torchvision import datasets
from torchvision.transforms import ToTensor
import matplotlib.pyplot as plt

from neural_network_model import NeuralNetwork

# Download the FashionMNIST training and test sets
training_data = datasets.FashionMNIST(
    root="data",
    train=True,
    download=True,
    transform=ToTensor()
)
test_data = datasets.FashionMNIST(
    root="data",
    train=False,
    download=True,
    transform=ToTensor()
)

# Wrap the datasets in DataLoaders
# batch_size=64: each iteration draws 64 samples from the dataset.
# shuffle=True: reshuffle the training data before every epoch so the model cannot learn the sample order.
train_dataloader = DataLoader(training_data, batch_size=64, shuffle=True)
# The test set needs no shuffling: evaluation gives the same result in any order.
test_dataloader = DataLoader(test_data, batch_size=64, shuffle=False)

# Choose the device, then create the model, loss function and optimizer
device = "cuda" if torch.cuda.is_available() else "cpu"
model = NeuralNetwork().to(device)  # create an instance of the network and move it to the device
loss_fn = nn.CrossEntropyLoss()     # loss function
optimizer = torch.optim.SGD(model.parameters(), lr=1e-2)

# Training loop: runs one epoch and returns the average loss and accuracy
def train_loop(dataloader, model, loss_fn, optimizer):
    size = len(dataloader.dataset)
    num_batches = len(dataloader)
    model.train()
    total_loss = 0
    correct = 0
    for X, y in dataloader:
        X, y = X.to(device), y.to(device)
        pred = model(X)
        loss = loss_fn(pred, y)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_loss += loss.item()
        # Training accuracy is measured on each batch's predictions before its weight update
        correct += (pred.argmax(1) == y).type(torch.float).sum().item()
    avg_loss = total_loss / num_batches
    accuracy = correct / size
    return avg_loss, accuracy

# Test loop: evaluates without gradients and returns the average loss and accuracy
def test_loop(dataloader, model, loss_fn):
    size = len(dataloader.dataset)
    num_batches = len(dataloader)
    model.eval()
    total_loss = 0
    correct = 0
    with torch.no_grad():
        for X, y in dataloader:
            X, y = X.to(device), y.to(device)
            pred = model(X)
            loss = loss_fn(pred, y)
            total_loss += loss.item()
            correct += (pred.argmax(1) == y).type(torch.float).sum().item()
    avg_loss = total_loss / num_batches
    accuracy = correct / size
    return avg_loss, accuracy

# Lists that collect the data for the plots
train_losses, train_accuracies = [], []
test_losses, test_accuracies = [], []

# Training
epochs = 10
for epoch in range(epochs):
    print(f"Epoch {epoch+1}/{epochs}")
    train_loss, train_acc = train_loop(train_dataloader, model, loss_fn, optimizer)
    test_loss, test_acc = test_loop(test_dataloader, model, loss_fn)

    train_losses.append(train_loss)
    train_accuracies.append(train_acc)
    test_losses.append(test_loss)
    test_accuracies.append(test_acc)

    print(f"Train Loss: {train_loss:.4f}, Train Acc: {train_acc:.4f}")
    print(f"Test Loss: {test_loss:.4f}, Test Acc: {test_acc:.4f}")
    print("-" * 50)

# To show Chinese characters in the labels, uncomment these lines (requires the SimHei font):
# plt.rcParams['font.family'] = 'SimHei'
# plt.rcParams['axes.unicode_minus'] = False  # render minus signs correctly with SimHei

# Plot the loss / accuracy curves
epochs_range = range(1, epochs + 1)
plt.figure(figsize=(10, 4))

# Loss curve
plt.subplot(1, 2, 1)
plt.plot(epochs_range, train_losses, label="Train Loss")
plt.plot(epochs_range, test_losses, label="Test Loss")
plt.xlabel("Epoch")
plt.ylabel("Loss")
plt.title("Loss Curve")
plt.legend()
plt.grid(True)

# Accuracy curve
plt.subplot(1, 2, 2)
plt.plot(epochs_range, train_accuracies, label="Train Acc")
plt.plot(epochs_range, test_accuracies, label="Test Acc")
plt.xlabel("Epoch")
plt.ylabel("Accuracy")
plt.title("Accuracy Curve")
plt.legend()
plt.grid(True)

plt.tight_layout()
plt.show()
```
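
`plt.show()` needs a display. When training on a server without one, a common alternative is to switch Matplotlib to the non-interactive `Agg` backend and write the figure to a file with `savefig`. The sketch below wraps the plotting code into a function using Matplotlib's object-oriented API; the function name `plot_curves` and the output file name `loss_acc.png` are hypothetical choices for this example.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend: renders to files, no window needed
import matplotlib.pyplot as plt

def plot_curves(train_losses, test_losses, train_accs, test_accs, path="loss_acc.png"):
    """Draw loss and accuracy curves side by side and save them to `path`."""
    epochs_range = range(1, len(train_losses) + 1)
    fig, (ax_loss, ax_acc) = plt.subplots(1, 2, figsize=(10, 4))

    ax_loss.plot(epochs_range, train_losses, label="Train Loss")
    ax_loss.plot(epochs_range, test_losses, label="Test Loss")
    ax_loss.set(xlabel="Epoch", ylabel="Loss", title="Loss Curve")
    ax_loss.legend()
    ax_loss.grid(True)

    ax_acc.plot(epochs_range, train_accs, label="Train Acc")
    ax_acc.plot(epochs_range, test_accs, label="Test Acc")
    ax_acc.set(xlabel="Epoch", ylabel="Accuracy", title="Accuracy Curve")
    ax_acc.legend()
    ax_acc.grid(True)

    fig.tight_layout()
    fig.savefig(path, dpi=100)
    plt.close(fig)  # release the figure's memory
    return path


# Usage with made-up numbers (not real training results)
out = plot_curves([0.9, 0.6, 0.5], [0.8, 0.65, 0.6], [0.70, 0.80, 0.83], [0.72, 0.78, 0.80])
```

In the training script, the final `plt.show()` block could be replaced by a call such as `plot_curves(train_losses, test_losses, train_accuracies, test_accuracies)`.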

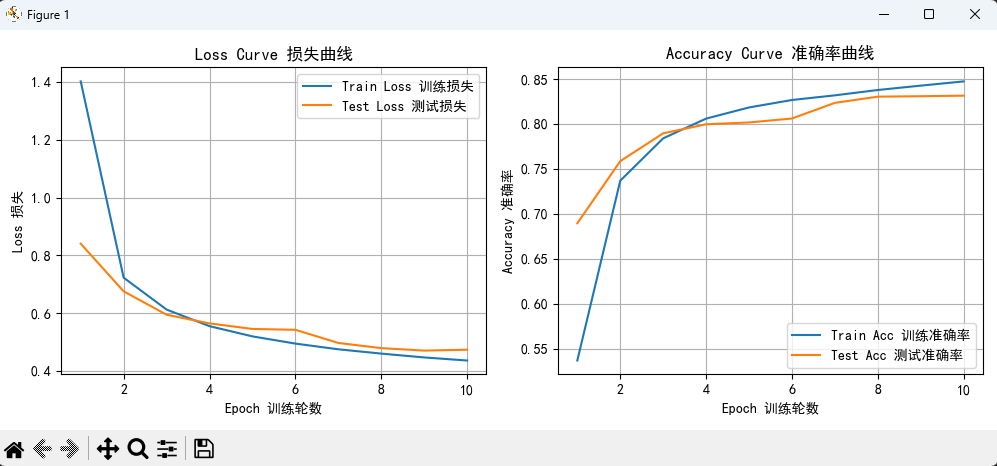

Plots after training for 10 epochs:

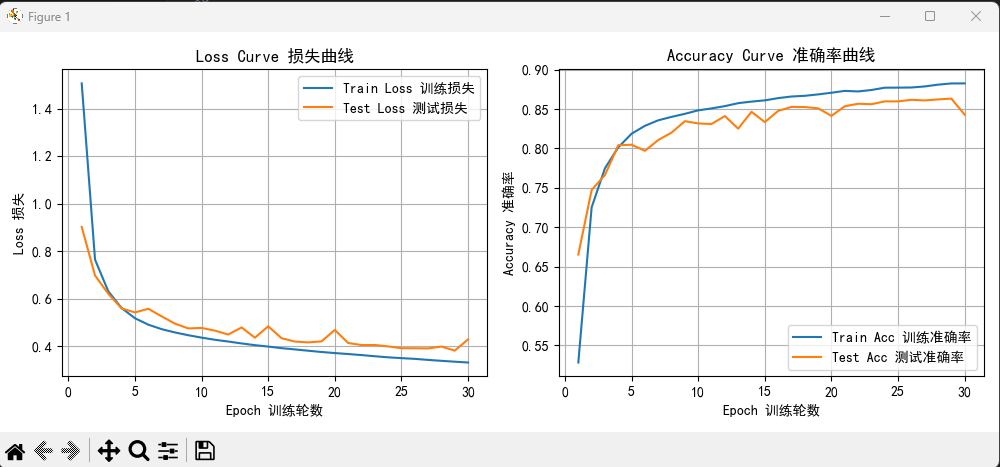

Results after training for 30 epochs:

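The plots show that test accuracy stops improving after roughly epoch 25, and after epoch 28 it even drops noticeably: the model has started to overfit.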
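
Instead of choosing the number of epochs by eye, one common remedy (not used in the original script) is early stopping: remember the epoch with the best score and stop once the score has not improved for a few epochs. A minimal, framework-free sketch; the class name `EarlyStopping` and `patience=3` are hypothetical choices. Strictly, this decision should be made on a separate validation set, because picking the epoch by test accuracy makes the reported test score optimistic.

```python
class EarlyStopping:
    """Stop when the monitored accuracy has not improved for `patience` epochs."""

    def __init__(self, patience=3):
        self.patience = patience
        self.best_acc = float("-inf")
        self.best_epoch = 0
        self.bad_epochs = 0  # consecutive epochs without improvement

    def step(self, epoch, acc):
        """Record one epoch's accuracy; return True if training should stop."""
        if acc > self.best_acc:
            self.best_acc, self.best_epoch = acc, epoch
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Usage with made-up accuracies (not the results from the plots above)
accs = [0.80, 0.84, 0.86, 0.87, 0.87, 0.86, 0.85, 0.84]
stopper = EarlyStopping(patience=3)
for epoch, acc in enumerate(accs, start=1):
    if stopper.step(epoch, acc):
        print(f"stop at epoch {epoch}; best epoch {stopper.best_epoch} ({stopper.best_acc:.2f})")
        break
# prints: stop at epoch 7; best epoch 4 (0.87)
```

In the training loop above, one would call `stopper.step(epoch + 1, test_acc)` after `test_loop`, and could save `model.state_dict()` with `torch.save` whenever `best_epoch` changes, so the best weights are kept.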

浙公网安备 33010602011771号
