Big Data Summary

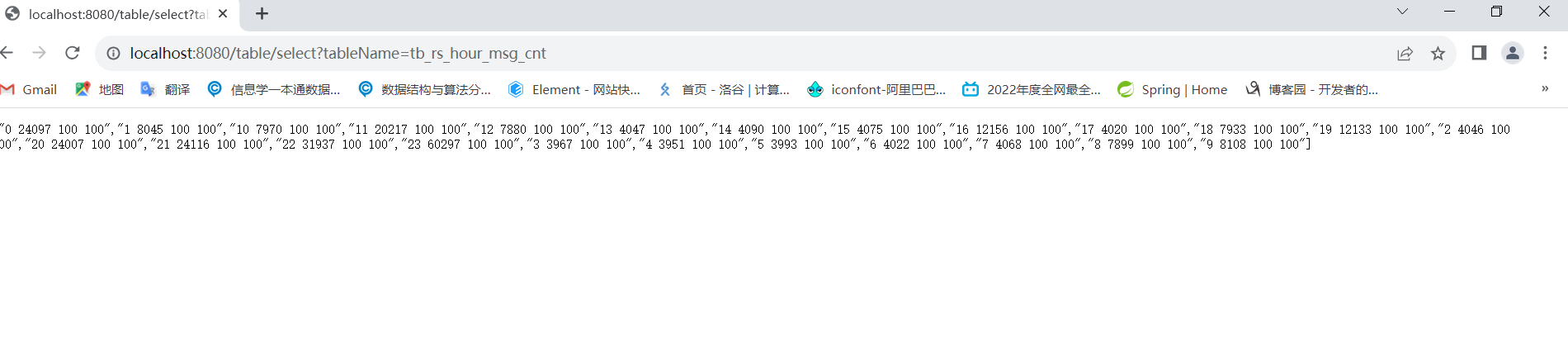

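Starting this Thursday I began trying out Java web development, with Hive replacing the usual database. I integrated it through Spring Boot; simple inserts and queries already work, and the next step is to build a website with the complete logic.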

Full Hive service startup sequence

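First run start-all.sh to bring up Hadoop, then start the history server:

    mapred --daemon start historyserver

Finally, start the metastore service first and the HiveServer2 service after it (the relative paths assume both commands are run from the Hive installation directory):

    nohup bin/hive --service metastore >> logs/metastore.log 2>&1 &
    nohup bin/hive --service hiveserver2 >> logs/hiveserver2.log 2>&1 &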
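HiveServer2 can take a while to start accepting connections after the command returns, so it can help to probe the ports before starting the web application. The following is a minimal sketch, not part of the original project. It assumes the host name node1 (the one used in the JDBC URL later in this post), the default metastore port 9083, and the HiveServer2 port 10000. The class name HiveServiceCheck is hypothetical.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

class HiveServiceCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Refused, timed out, or host not resolvable: treat the service as not up yet.
            return false;
        }
    }

    public static void main(String[] args) {
        String host = "node1"; // assumption: same host as in the JDBC URL
        System.out.println("metastore   (9083):  " + isReachable(host, 9083, 2000));
        System.out.println("hiveserver2 (10000): " + isReachable(host, 10000, 2000));
    }
}
```

An open port only shows that the process is listening; it does not prove that queries will succeed.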

Small demo: integrating Hive with Spring Boot

First, the pom file:

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>
        <parent>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-parent</artifactId>
            <version>2.7.14</version>
            <relativePath/> <!-- lookup parent from repository -->
        </parent>
        <groupId>com.example</groupId>
        <artifactId>hive</artifactId>
        <version>0.0.1-SNAPSHOT</version>
        <name>hive</name>
        <description>hive</description>
        <properties>
            <java.version>1.8</java.version>
        </properties>
        <dependencies>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-web</artifactId>
            </dependency>
            <dependency>
                <groupId>org.projectlombok</groupId>
                <artifactId>lombok</artifactId>
                <optional>true</optional>
            </dependency>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-test</artifactId>
                <scope>test</scope>
            </dependency>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-jdbc</artifactId>
            </dependency>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-thymeleaf</artifactId>
            </dependency>
            <!-- Druid connection pool dependency -->
            <dependency>
                <groupId>com.alibaba</groupId>
                <artifactId>druid-spring-boot-starter</artifactId>
                <version>1.2.5</version>
            </dependency>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-configuration-processor</artifactId>
                <optional>true</optional>
            </dependency>
            <!-- Hive JDBC dependency -->
            <dependency>
                <groupId>org.apache.hive</groupId>
                <artifactId>hive-jdbc</artifactId>
                <version>3.1.2</version>
                <exclusions>
                    <exclusion>
                        <groupId>org.eclipse.jetty</groupId>
                        <artifactId>*</artifactId>
                    </exclusion>
                    <exclusion>
                        <groupId>org.apache.hive</groupId>
                        <artifactId>hive-shims</artifactId>
                    </exclusion>
                    <exclusion>
                        <groupId>org.glassfish</groupId>
                        <artifactId>javax.el</artifactId>
                    </exclusion>
                    <!--<exclusion>-->
                    <!--<groupId>org.slf4j</groupId>-->
                    <!--<artifactId>slf4j-log4j12</artifactId>-->
                    <!--</exclusion>-->
                    <exclusion>
                        <groupId>org.apache.logging.log4j</groupId>
                        <artifactId>log4j-slf4j-impl</artifactId>
                    </exclusion>
                </exclusions>
            </dependency>
        </dependencies>
        <build>
            <plugins>
                <plugin>
                    <groupId>org.springframework.boot</groupId>
                    <artifactId>spring-boot-maven-plugin</artifactId>
                    <configuration>
                        <excludes>
                            <exclude>
                                <groupId>org.projectlombok</groupId>
                                <artifactId>lombok</artifactId>
                            </exclude>
                        </excludes>
                    </configuration>
                </plugin>
            </plugins>
        </build>
    </project>

Next, the YAML file:

    hive:
      url: jdbc:hive2://node1:10000/db_msg  # db_msg is the database name; replace it with your own
      driver-class-name: org.apache.hive.jdbc.HiveDriver
      type: com.alibaba.druid.pool.DruidDataSource
      user: hadoop
      password: 123456
      initialSize: 1
      minIdle: 3
      maxActive: 20
      maxWait: 60000
      timeBetweenEvictionRunsMillis: 60000
      minEvictableIdleTimeMillis: 30000
      validationQuery: select 1
      testWhileIdle: true
      testOnBorrow: false
      testOnReturn: false
      poolPreparedStatements: true
      maxPoolPreparedStatementPerConnectionSize: 20
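The url value packs three things into one string: the HiveServer2 host, its port, and the database to open. As a rough illustration of that structure, the sketch below splits a simple URL of this form into its parts. The class HiveJdbcUrl is hypothetical and is not part of the Hive driver. It assumes a plain URL without the optional `;key=value` session settings, and falls back to port 10000 and the database `default` when they are omitted.

```java
import java.net.URI;

class HiveJdbcUrl {
    final String host;
    final int port;
    final String database;

    private HiveJdbcUrl(String host, int port, String database) {
        this.host = host;
        this.port = port;
        this.database = database;
    }

    /** Splits a simple jdbc:hive2://host:port/database URL into its parts. */
    static HiveJdbcUrl parse(String jdbcUrl) {
        if (!jdbcUrl.startsWith("jdbc:hive2://")) {
            throw new IllegalArgumentException("Not a HiveServer2 JDBC URL: " + jdbcUrl);
        }
        // Drop the "jdbc:" prefix so the remainder parses as an ordinary URI.
        URI uri = URI.create(jdbcUrl.substring("jdbc:".length()));
        String path = uri.getPath() == null ? "" : uri.getPath();
        String database = path.length() > 1 ? path.substring(1) : "default";
        int port = uri.getPort() == -1 ? 10000 : uri.getPort();
        return new HiveJdbcUrl(uri.getHost(), port, database);
    }

    public static void main(String[] args) {
        HiveJdbcUrl parsed = parse("jdbc:hive2://node1:10000/db_msg");
        System.out.println("host=" + parsed.host + " port=" + parsed.port
                + " database=" + parsed.database);
    }
}
```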

Next, the configuration class:

    package com.example.hive;

    import javax.sql.DataSource;

    import lombok.Data;
    import org.springframework.beans.factory.annotation.Qualifier;
    import org.springframework.boot.context.properties.ConfigurationProperties;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;
    import org.springframework.jdbc.core.JdbcTemplate;

    import com.alibaba.druid.pool.DruidDataSource;

    @Data
    @Configuration
    @ConfigurationProperties(prefix = "hive")
    public class HiveDruidConfig {

        private String url;
        private String user;
        private String password;
        private String driverClassName;
        private int initialSize;
        private int minIdle;
        private int maxActive;
        private int maxWait;
        private int timeBetweenEvictionRunsMillis;
        private int minEvictableIdleTimeMillis;
        private String validationQuery;
        private boolean testWhileIdle;
        private boolean testOnBorrow;
        private boolean testOnReturn;
        private boolean poolPreparedStatements;
        private int maxPoolPreparedStatementPerConnectionSize;

        @Bean(name = "hiveDruidDataSource")
        @Qualifier("hiveDruidDataSource")
        public DataSource dataSource() {
            DruidDataSource datasource = new DruidDataSource();
            datasource.setUrl(url);
            datasource.setUsername(user);
            datasource.setPassword(password);
            datasource.setDriverClassName(driverClassName);

            // pool configuration
            datasource.setInitialSize(initialSize);
            datasource.setMinIdle(minIdle);
            datasource.setMaxActive(maxActive);
            datasource.setMaxWait(maxWait);
            datasource.setTimeBetweenEvictionRunsMillis(timeBetweenEvictionRunsMillis);
            datasource.setMinEvictableIdleTimeMillis(minEvictableIdleTimeMillis);
            datasource.setValidationQuery(validationQuery);
            datasource.setTestWhileIdle(testWhileIdle);
            datasource.setTestOnBorrow(testOnBorrow);
            datasource.setTestOnReturn(testOnReturn);
            datasource.setPoolPreparedStatements(poolPreparedStatements);
            datasource.setMaxPoolPreparedStatementPerConnectionSize(maxPoolPreparedStatementPerConnectionSize);
            return datasource;
        }

        @Bean(name = "hiveDruidTemplate")
        public JdbcTemplate hiveDruidTemplate(@Qualifier("hiveDruidDataSource") DataSource dataSource) {
            return new JdbcTemplate(dataSource);
        }
    }
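The YAML key driver-class-name and the field driverClassName in HiveDruidConfig are spelled differently, yet the value still arrives in the field. Spring Boot's relaxed binding treats the kebab-case key and the camelCase property as the same name. The sketch below only illustrates that naming rule for this one case. It is not Spring's implementation, and the class RelaxedNames is hypothetical.

```java
class RelaxedNames {

    /** Converts a kebab-case key such as "driver-class-name" to camelCase. */
    static String kebabToCamel(String key) {
        StringBuilder out = new StringBuilder();
        boolean upperNext = false;
        for (char c : key.toCharArray()) {
            if (c == '-') {
                // Drop the dash and capitalize the character that follows it.
                upperNext = true;
            } else if (upperNext) {
                out.append(Character.toUpperCase(c));
                upperNext = false;
            } else {
                out.append(c);
            }
        }
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.println(kebabToCamel("driver-class-name"));
        System.out.println(kebabToCamel("maxActive"));
    }
}
```

Keys that are already camelCase, such as maxActive, pass through unchanged, which is why the YAML file above can mix both spellings.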

Test:

    package com.example.hive;

    import java.sql.Connection;
    import java.sql.ResultSet;
    import java.sql.SQLException;
    import java.sql.Statement;
    import java.util.ArrayList;
    import java.util.List;

    import javax.sql.DataSource;

    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.beans.factory.annotation.Qualifier;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RestController;

    /**
     * Accesses Hive through a DataSource.
     */
    @RestController
    public class HiveDataSourceController {

        private static final Logger logger = LoggerFactory.getLogger(HiveDataSourceController.class);

        @Autowired
        @Qualifier("hiveDruidDataSource")
        DataSource druidDataSource;

        /**
         * Checks that Spring Boot started correctly.
         */
        @RequestMapping("/")
        public String hello() {
            return "hello world";
        }

        /**
         * Lists all tables in the current Hive database.
         */
        @RequestMapping("/table/list")
        public List<String> listAllTables() throws SQLException {
            List<String> list = new ArrayList<>();
            String sql = "show tables";
            logger.info("Running: {}", sql);
            // try-with-resources closes the result set and statement and returns the connection to the pool
            try (Connection connection = druidDataSource.getConnection();
                 Statement statement = connection.createStatement();
                 ResultSet res = statement.executeQuery(sql)) {
                while (res.next()) {
                    list.add(res.getString(1));
                }
            }
            return list;
        }

        /**
         * Queries the rows of the table named by tableName.
         */
        @RequestMapping("/table/select")
        public List<String> selectFromTable(String tableName) throws SQLException {
            List<String> list = new ArrayList<>();
            // NOTE: tableName is concatenated into the SQL unchecked; validate it before exposing this endpoint
            String sql = "select * from " + tableName;
            logger.info("Running: {}", sql);
            try (Connection connection = druidDataSource.getConnection();
                 Statement statement = connection.createStatement();
                 ResultSet res = statement.executeQuery(sql)) {
                int count = res.getMetaData().getColumnCount();
                while (res.next()) {
                    // join the column values of one row with single spaces
                    StringBuilder row = new StringBuilder();
                    for (int i = 1; i <= count; i++) {
                        if (i > 1) {
                            row.append(' ');
                        }
                        row.append(res.getString(i));
                    }
                    logger.info(row.toString());
                    list.add(row.toString());
                }
            }
            return list;
        }
    }
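The /table/select endpoint builds its query by appending the tableName request parameter to "select * from ", and JDBC placeholders cannot stand in for a table name. One common precaution is to accept only plain identifiers before concatenating. The sketch below shows that idea with hypothetical names (HiveIdentifiers, requireTableName, selectAll). The allowed pattern of letters, digits, and underscores with an optional database prefix is an assumption and may need loosening for other naming schemes.

```java
import java.util.regex.Pattern;

class HiveIdentifiers {

    // A table name, optionally prefixed with "database."; letters, digits, underscores only.
    private static final Pattern TABLE_NAME =
            Pattern.compile("[A-Za-z0-9_]+(\\.[A-Za-z0-9_]+)?");

    /** Returns the name unchanged if it is a plain identifier, otherwise throws. */
    static String requireTableName(String name) {
        if (name == null || !TABLE_NAME.matcher(name).matches()) {
            throw new IllegalArgumentException("Invalid table name: " + name);
        }
        return name;
    }

    /** Builds the same query as the controller, but only for validated names. */
    static String selectAll(String tableName) {
        return "select * from " + requireTableName(tableName);
    }

    public static void main(String[] args) {
        System.out.println(selectAll("db_msg.some_table")); // some_table is a made-up name
        try {
            selectAll("t; drop table t");
        } catch (IllegalArgumentException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```

In the controller, the result of selectAll(tableName) would replace the hand-built SQL string; an invalid name then fails before any statement reaches Hive.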

