Python Web Scraping 02

1. Scraping proxy IPs with XPath

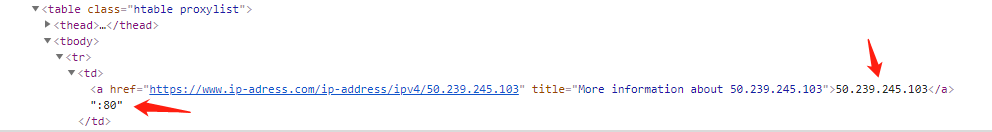

Analyzing the page (screenshot above) shows that these two parameters are needed.

    import requests
    from bs4 import BeautifulSoup  # used by the BeautifulSoup variant further down
    from lxml import etree

    headers = {
        'Host': "www.ip-adress.com",
        'User-Agent': "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:34.0) Gecko/20100101 Firefox/34.0",
        'Accept': "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
        'Accept-Language': "zh-cn,zh;q=0.8,en-us;q=0.5,en;q=0.3",
        'Accept-Encoding': "gzip, deflate",
        'Referer': "http://www.ip-adress.com/Proxy_Checker/",
        'Connection': 'keep-alive'
    }
    url = "http://www.ip-adress.com/proxy_list/"

    # One request is enough; the original sent the same request twice
    ret = requests.get(url=url, headers=headers)
    page_text = ret.content.decode('utf-8')
    tree = etree.HTML(page_text)

    # First cell of every row in the proxy table: <td><a>IP</a>:port</td>
    td_list = tree.xpath('//table[@class="htable proxylist"]/tbody/tr/td[1]')
    proxy_ip_list = []
    for td in td_list:
        ip = td.xpath('./a/text()')[0]    # text inside <a>: the IP
        port = td.xpath('./text()')[0]    # text after </a>: ":port"
        proxy_ip_list.append(str(ip) + str(port))
    print(proxy_ip_list)
    # Sample output:
    # ['50.239.245.103:80', '92.222.180.156:8080',]

xpath() returns a list, so use [0] to take the element out, then convert both parts with str() and concatenate them.
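
To see why both the [0] and the string concatenation are needed, here is a minimal sketch on an inline HTML fragment modelled on one row of the proxy table. The fragment and the variable names are illustrative assumptions, not the live page.

```python
from lxml import etree

# Hypothetical fragment shaped like one row of the proxy table.
html = """
<table class="htable proxylist">
  <tr>
    <td><a href="/ip-address/ipv4/185.62.224.17">185.62.224.17</a>:4550</td>
    <td>highly-anonymous</td>
  </tr>
</table>
"""

tree = etree.HTML(html)
cell = tree.xpath('//table[@class="htable proxylist"]//tr/td[1]')[0]

ip_nodes = cell.xpath('./a/text()')    # list holding the text inside <a>
port_nodes = cell.xpath('./text()')    # list holding the text directly inside <td>

# Both results are lists, so index them before joining.
proxy = str(ip_nodes[0]) + str(port_nodes[0])
print(ip_nodes, port_nodes, proxy)
```

Both queries return one-element lists here; on a real page it is safer to check that a list is non-empty before indexing it.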

Another approach uses BeautifulSoup. It gets the IP, but the port cannot be retrieved this way.

    import re

    soup = BeautifulSoup(ret.content, 'html.parser', from_encoding='utf-8')

    # Find the proxy list table
    rsp = soup.find_all('table', attrs={'class': 'htable proxylist'})
    print(rsp)
    # <tr>
    # <td><a href="https://www.ip-adress.com/ip-address/ipv4/185.62.224.17" title="More information about 185.62.224.17">185.62.224.17</a>:4550</td>
    # <td>highly-anonymous</td>
    # <td>France</td>
    # <td><time datetime="2019-04-17T08:24:27+02:00">1 day ago</time></td>
    # </tr>

    # Select the tags whose href contains www.ip-adress.com/ip-address/ipv4
    li = soup.find_all(href=re.compile(r"www\.ip-adress\.com/ip-address/ipv4"))
    proxy_ip_list = []
    for i in li:
        proxy_ip_list.append(i.string)    # text of <a>: the IP only, without the port
    print(proxy_ip_list)

    # Parse the table again and read each <a> tag
    soup = BeautifulSoup(str(rsp[0]), 'html.parser')
    for k in soup.find_all('a'):
        print(k['href'].split('/')[5])    # IP taken from the href of the <a> tag
        print(k.string)                   # string of the <a> tag
        print(k.get_text())
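
The string of the a tag only holds the IP, so the port that follows the tag is lost. One possible workaround, sketched here with the standard re module rather than BeautifulSoup, is to match the IP and the trailing ":port" together in the raw HTML. The sample row is the one printed above; the pattern is an assumption about the page markup.

```python
import re

row_html = (
    '<td><a href="https://www.ip-adress.com/ip-address/ipv4/185.62.224.17" '
    'title="More information about 185.62.224.17">185.62.224.17</a>:4550</td>'
)

# Capture the dotted IP inside <a>...</a> and the digits right after "</a>:".
pattern = re.compile(r'>(\d{1,3}(?:\.\d{1,3}){3})</a>:(\d{1,5})<')

proxies = [f'{ip}:{port}' for ip, port in pattern.findall(row_html)]
print(proxies)
```

A regular expression like this is brittle against markup changes, so it is best treated as a quick fallback.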

2. Scraping videos from the Pear Video home page with XPath

https://www.pearvideo.com/category_1

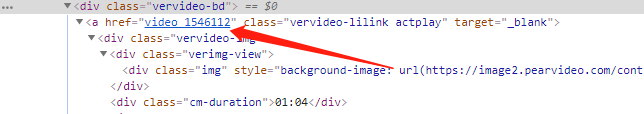

Fetch the video list with a GET request. Each entry leads to a detail page such as https://www.pearvideo.com/video_1546112.
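
The links on the listing page are relative, so they have to be turned into absolute detail-page URLs. A small sketch using urllib.parse.urljoin from the standard library, as an alternative to plain string concatenation (the hrefs list is made up for illustration):

```python
from urllib.parse import urljoin

BASE_URL = 'https://www.pearvideo.com/'

# Hypothetical href values as they might come back from the XPath query.
hrefs = ['video_1546112', '/video_1546112']

# urljoin handles hrefs with or without a leading slash.
detail_urls = [urljoin(BASE_URL, href) for href in hrefs]
print(detail_urls)
```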

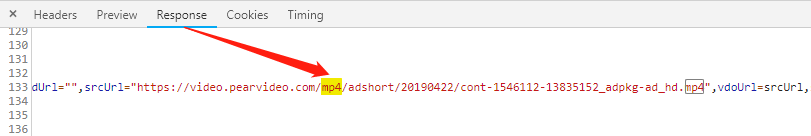

The video URL is in the response.
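
Pulling the URL and title out of that response can be sketched as follows. The detail_page text is a hypothetical excerpt (the real page may differ), and first_match is an illustrative helper that returns None instead of raising IndexError when a pattern does not match.

```python
import re

# Hypothetical excerpt of a detail page; the real markup may differ.
detail_page = '''
<script>
var contId="1546112",srcUrl="https://example.com/video/sample.mp4",vdoUrl=srcUrl;
</script>
<div data-title="Sample title" data-summary="..."></div>
'''


def first_match(pattern, text):
    """Return the first captured group, or None when nothing matches."""
    found = re.findall(pattern, text, re.S)  # re.S lets '.' match newlines too
    return found[0] if found else None


video_url = first_match(r'srcUrl="(.*?)",vdoUrl', detail_page)
video_title = first_match(r'data-title="(.*?)" data-summary', detail_page)
missing = first_match(r'noSuchKey="(.*?)"', detail_page)
print(video_url, video_title, missing)
```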

import requests

import re

from lxml import etree

from multiprocessing.dummy import Pool

import random

url = "https://www.pearvideo.com/category_1"

head = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.3; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2272.118 Safari/537.36',

'referer': 'https://www.pearvideo.com/'

}

page_text = requests.get(url=url,headers=head).text

tree = etree.HTML(page_text)

video = []

li_list = tree.xpath('//div[@id="listvideoList"]/ul/li')

for li in li_list:

detail_url = 'https://www.pearvideo.com/'+li.xpath('./div/a/@href')[0]

detail_page = requests.get(url=detail_url,headers=head).text

video_url = re.findall('srcUrl="(.*?)",vdoUrl', detail_page, re.S)[0] #re.S扩展到整个字符串,包括“\n”

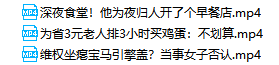

video_title = re.findall('data-title="(.*?)" data-summary',detail_page, re.S)[0]

#print(video_title)

video.append([video_title, video_url])

li_list = tree.xpath('//div[@class="vervideo-bd"]')

for li in li_list:

detail_url = 'https://www.pearvideo.com/'+li.xpath('./a/@href')[0]

detail_page = requests.get(url=detail_url,headers=head).text

video_url = re.findall('srcUrl="(.*?)",vdoUrl', detail_page, re.S)[0] #re.S扩展到整个字符串,包括“\n”

video_title = re.findall('data-title="(.*?)" data-summary', detail_page, re.S)[0]

video.append([video_title, video_url])

print(video)

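    '''
    # This variant does not use the title, so the files get random, messy names
    pool = Pool(5)

    def getVideoData(url):
        return requests.get(url=url, headers=head).content

    def saveVideo(data):
        num = str(random.randint(0, 5000))
        fileName = num + '.mp4'
        with open(fileName, 'wb') as f:
            f.write(data)

    # 5 threads download in parallel; the contents come back as one list.
    # video holds [title, url] pairs, so only the URL is passed on.
    video_content_list = pool.map(getVideoData, [v[1] for v in video])
    # Save the downloaded data, again with 5 threads
    pool.map(saveVideo, video_content_list)
    '''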

    # Use the title as the file name
    pool = Pool(5)

    def saveVideo(data):
        url = data[1]
        title = data[0]
        ret = requests.get(url=url, headers=head).content
        fileName = str(title) + '.mp4'
        with open(fileName, 'wb') as f:
            f.write(ret)

    pool.map(saveVideo, video)
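
Titles taken from a page can contain characters that are not valid in file names. The sketch below shows the same pool.map pattern with a title cleaned up first. It runs offline: fake_download stands in for the real request, and safe_name, save_video, out_dir and the sample titles are all hypothetical names introduced for illustration.

```python
import os
import re
import tempfile
from multiprocessing.dummy import Pool  # thread-based pool with the Pool API


def safe_name(title):
    """Replace characters that many file systems reject, plus whitespace."""
    return re.sub(r'[\\/:*?"<>|\s]+', '_', str(title)).strip('_') or 'untitled'


def fake_download(url):
    """Stand-in for requests.get(url).content so the sketch runs offline."""
    return ('bytes from ' + url).encode('utf-8')


out_dir = tempfile.mkdtemp()


def save_video(item):
    title, url = item  # each item is a [title, url] pair
    path = os.path.join(out_dir, safe_name(title) + '.mp4')
    with open(path, 'wb') as f:
        f.write(fake_download(url))
    return path


video = [
    ['What is this? A test', 'https://example.com/a.mp4'],
    ['path/with:bad*chars', 'https://example.com/b.mp4'],
]

with Pool(5) as pool:
    saved = pool.map(save_video, video)  # results keep the input order

print([os.path.basename(p) for p in saved])
```

pool.map passes one [title, url] pair to each call, which is why the worker unpacks its single argument.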
