Redis Cluster Management (Part 2)

1. Connect to a cluster node with redis-cli

[root@mysql-db01 ~]# redis-cli -h 10.0.0.51 -p 6379
10.0.0.51:6379>

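2. Check the status of each node in the cluster

Cluster

cluster info: print information about the cluster

cluster nodes: list every node the cluster currently knows about, with details for each node

Node
cluster meet <ip> <port>: add the node at ip:port to the cluster, making it a member
cluster forget <node_id>: remove the node node_id from this node's list of known nodes
cluster replicate <node_id>: make the current node a slave of the master node_id
cluster saveconfig: save the node's cluster configuration file to disk
cluster slaves <node_id>: list the slave nodes of the master node_id
cluster set-config-epoch <config_epoch>: forcibly set this node's configEpoch (only accepted on a node that has not yet joined a cluster)

Slots
cluster addslots <slot> [slot ...]: assign one or more slots to the current node
cluster delslots <slot> [slot ...]: remove the assignment of one or more slots from the current node
cluster flushslots: remove every slot assigned to the current node, leaving it with no slots
cluster setslot <slot> node <node_id>: assign slot to the node node_id
cluster setslot <slot> migrating <node_id>: mark slot, owned by this node, as migrating to node_id
cluster setslot <slot> importing <node_id>: mark slot as being imported into this node from node_id
cluster setslot <slot> stable: clear the importing or migrating state of slot

Keys
cluster keyslot <key>: compute which slot key maps to
cluster countkeysinslot <slot>: return the number of keys currently stored in slot
cluster getkeysinslot <slot> <count>: return up to count keys from slot

Other
cluster myid: return this node's ID
cluster slots: return the mapping of slot ranges to their master and slave nodes
cluster reset: reset this node's cluster state; use with care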
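The cluster keyslot command listed above returns the slot a key maps to. As the Redis Cluster specification describes it, the slot is the CRC16 (XMODEM variant) of the key modulo 16384; if the key contains a {hash tag}, only the text between the first { and the following } is hashed, which lets related keys share a slot. A minimal sketch of that rule; crc16_xmodem and key_slot are hypothetical names, and this is not Redis's own code.

```python
# Sketch of the key -> hash slot mapping used by Redis Cluster.

def crc16_xmodem(data: bytes) -> int:
    """CRC16/XMODEM: polynomial 0x1021, initial value 0, no bit reflection."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Return the hash slot (0..16383) for a key, honouring {hash tags}."""
    raw = key.encode("utf-8")
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        # Hash only the tag, and only if the braces enclose at least one byte.
        if end > start + 1:
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % 16384


# Keys sharing a hash tag always land in the same slot.
print(key_slot("{user1000}.following"), key_slot("{user1000}.followers"))
```

The value of key_slot("mao") should match what cluster keyslot mao reports on the cluster in this article.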

10.0.0.51:6379> cluster info
cluster_state:ok
cluster_slots_assigned:16384
cluster_slots_ok:16384
cluster_slots_pfail:0
cluster_slots_fail:0
cluster_known_nodes:6
cluster_size:3
cluster_current_epoch:6
cluster_my_epoch:1
cluster_stats_messages_sent:23695
cluster_stats_messages_received:23690
10.0.0.51:6379> cluster nodes
e2cfd53b8083539d1a4546777d0a81b036ddd82a 10.0.0.70:6384 slave f1f6e93e625e8e0cef0da1b3dfe0a1ea8191a1ad 0 1510021756842 6 connected ## its master is 10.0.0.51:6380
857a5132c844d695c002f94297f294f8e173e393 10.0.0.51:6379 myself,master - 0 0 1 connected 0-5460
e4394d43cf18aa00c0f6833f6f498ba286b55ca1 10.0.0.70:6382 master - 0 1510021759865 4 connected 5461-10922
16eca138ce2767fd8f9d0c8892a38de0a042a355 10.0.0.70:6383 slave 857a5132c844d695c002f94297f294f8e173e393 0 1510021757849 5 connected
f1f6e93e625e8e0cef0da1b3dfe0a1ea8191a1ad 10.0.0.51:6380 master - 0 1510021754824 2 connected 10923-16383 ## only the master nodes have hash slots assigned
d14e2f0538dc6925f04d1197b57f44ccdb7c683a 10.0.0.51:6381 slave e4394d43cf18aa00c0f6833f6f498ba286b55ca1 0 1510021758855 4 connected
10.0.0.51:6379>
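Reading the cluster info fields by eye works for one node, but the same check is easy to script. A sketch, assuming the reply text of cluster info has been captured as a string (for example from redis-cli); parse_cluster_info and cluster_problems are hypothetical helpers, and the conditions simply restate what the healthy reply above looks like: state ok, all 16384 slots assigned and ok, none in pfail or fail.

```python
# Hypothetical helpers: parse CLUSTER INFO text and list signs of trouble.
TOTAL_SLOTS = 16384


def parse_cluster_info(text):
    """Turn 'field:value' lines into a dict; integer values become ints."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if ":" not in line:
            continue  # skip blank lines and anything that is not field:value
        field, value = line.split(":", 1)
        info[field] = int(value) if value.lstrip("-").isdigit() else value
    return info


def cluster_problems(info):
    """Return human-readable problems; an empty list means the reply looks healthy."""
    problems = []
    if info.get("cluster_state") != "ok":
        problems.append("cluster_state is %r" % info.get("cluster_state"))
    if info.get("cluster_slots_assigned") != TOTAL_SLOTS:
        problems.append("not all %d slots are assigned" % TOTAL_SLOTS)
    if info.get("cluster_slots_ok") != TOTAL_SLOTS:
        problems.append("some assigned slots are not in the ok state")
    for field in ("cluster_slots_pfail", "cluster_slots_fail"):
        if info.get(field, 0):
            problems.append("%s = %s" % (field, info[field]))
    return problems


# Values copied from the cluster info reply in this section.
SAMPLE = """cluster_state:ok
cluster_slots_assigned:16384
cluster_slots_ok:16384
cluster_slots_pfail:0
cluster_slots_fail:0
cluster_known_nodes:6
cluster_size:3
cluster_current_epoch:6"""

print(cluster_problems(parse_cluster_info(SAMPLE)))  # prints []
```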

3. Write a record

[root@mysql-db01 src]# redis-cli -h 10.0.0.51 -p 6380
10.0.0.51:6380> get mao
(nil)
10.0.0.51:6380> set mao 123
OK
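The redis-cli sessions in this article are started without -c (cluster mode). In that mode, if a key's slot is owned by another master, the server does not run the command; it replies with a MOVED error naming the slot and the owning node, and during a slot migration it may reply with ASK instead. Starting redis-cli with -c makes it follow these redirections automatically. A minimal parser for such replies, as a client would need; Redirect and parse_redirect are hypothetical names, and the slot number in the example reply is made up.

```python
import re
from typing import NamedTuple, Optional


class Redirect(NamedTuple):
    kind: str  # "MOVED": slot is served elsewhere; "ASK": slot is mid-migration
    slot: int
    host: str
    port: int


# redis-cli prefixes error replies with "(error) "; accept the bare reply too.
_REDIRECT_RE = re.compile(r"^(?:\(error\)\s+)?(MOVED|ASK) (\d+) (\S+):(\d+)$")


def parse_redirect(reply: str) -> Optional[Redirect]:
    """Return the redirect target, or None if the reply is not MOVED/ASK."""
    match = _REDIRECT_RE.match(reply.strip())
    if match is None:
        return None
    kind, slot, host, port = match.groups()
    return Redirect(kind, int(slot), host, int(port))


# Hypothetical reply, for illustration only.
print(parse_redirect("(error) MOVED 3999 10.0.0.70:6382"))
```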

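4. Switch master and slave (manual failover)

Run CLUSTER FAILOVER on the slave that should become the master.

[root@mysql-db01 ~]# /data/redis-3.2.8/src/redis-cli -h 10.0.0.51 -p 6380 ### to switch roles, connect to the slave and promote it from there; sending the command to a master fails, as shown here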

10.0.0.51:6380> cluster failover

(error) ERR You should send CLUSTER FAILOVER to a slave

10.0.0.51:6380> exit

[root@mysql-db01 ~]# /data/redis-3.2.8/src/redis-cli -h 10.0.0.70 -p 6383

10.0.0.70:6383> cluster failover ## promote this slave to master

OK

10.0.0.70:6383> cluster nodes

777c9eab94812d13d8b9dc768460dcf1316283f1 10.0.0.70:6384 slave c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c 0 1511223574993 6 connected

92dfe8ab12c47980dcc42508672de62bae4921b1 10.0.0.70:6383 myself,master - 0 0 8 connected 500-5460

2f003cfd139ae4f2bbdac40b0055b46bdff96e0a 10.0.0.51:6379 slave 92dfe8ab12c47980dcc42508672de62bae4921b1 0 1511223577007 8 connected

c0e1784f0359f986972c1f9a0d9788f3d69e6c99 10.0.0.51:6381 slave 2da5edfcbb1abc2ed799789cb529309c70cb769e 0 1511223578014 7 connected

c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c 10.0.0.51:6380 master - 0 1511223572983 2 connected 15464-16383

2da5edfcbb1abc2ed799789cb529309c70cb769e 10.0.0.70:6382 master - 0 1511223573988 7 connected 0-499 5461-15463

10.0.0.70:6383>
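The failed attempt above shows that CLUSTER FAILOVER must be sent to a slave, so before a planned switch you need to know which slaves belong to which master. A sketch that parses cluster nodes output, assuming the field order seen in this article (node ID, address, flags, master ID or -, ping sent, pong received, config epoch, link state, then slots), and lists a master's slaves. ClusterNode, parse_cluster_nodes and failover_candidates are hypothetical names; lines carrying the article's ## annotations would need the comments stripped first.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ClusterNode:
    node_id: str
    addr: str                 # "ip:port" (newer versions append "@cluster-bus-port")
    flags: List[str]          # e.g. ["myself", "master"]
    master_id: Optional[str]  # None when the field is "-"
    config_epoch: int
    link_state: str
    slots: List[str]          # remaining fields, e.g. ["0-499", "5461-15463"]


def parse_cluster_nodes(text: str) -> List[ClusterNode]:
    """Parse CLUSTER NODES output; lines with fewer than 8 fields are skipped."""
    nodes = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) < 8:
            continue  # blank lines, redis-cli prompts
        node_id, addr, flags, master, _ping, _pong, epoch, link = parts[:8]
        nodes.append(ClusterNode(
            node_id, addr.split("@")[0], flags.split(","),
            None if master == "-" else master, int(epoch), link, parts[8:]))
    return nodes


def failover_candidates(nodes: List[ClusterNode], master_addr: str) -> List[str]:
    """Addresses of the slaves of master_addr, i.e. where CLUSTER FAILOVER can be sent."""
    master = next(n for n in nodes if n.addr == master_addr)
    return [n.addr for n in nodes if n.master_id == master.node_id]


# Lines copied from the cluster nodes output after the failover above.
SAMPLE = """\
777c9eab94812d13d8b9dc768460dcf1316283f1 10.0.0.70:6384 slave c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c 0 1511223574993 6 connected
92dfe8ab12c47980dcc42508672de62bae4921b1 10.0.0.70:6383 myself,master - 0 0 8 connected 500-5460
2f003cfd139ae4f2bbdac40b0055b46bdff96e0a 10.0.0.51:6379 slave 92dfe8ab12c47980dcc42508672de62bae4921b1 0 1511223577007 8 connected
c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c 10.0.0.51:6380 master - 0 1511223572983 2 connected 15464-16383
"""

print(failover_candidates(parse_cluster_nodes(SAMPLE), "10.0.0.70:6383"))
```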

5. Read the record

10.0.0.51:6380> get mao
"123"
10.0.0.51:6380>

6. Add a new master node

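Before adding a Redis instance to the cluster, make sure it has never stored any data and has no leftover persistence files; otherwise adding it will fail with an error.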
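This requirement can be checked before running add-node. As far as I know, redis-trib.rb refuses a new node if it already knows other cluster nodes or holds keys. The sketch assumes you have already read cluster_known_nodes from cluster info and the DBSIZE reply on the new instance; join_precheck is a hypothetical helper, not part of redis-trib.rb.

```python
from typing import List, Tuple


def join_precheck(known_nodes: int, key_count: int) -> Tuple[bool, List[str]]:
    """Hypothetical pre-flight check for a Redis instance about to be added.

    known_nodes: cluster_known_nodes from CLUSTER INFO on the new instance
    key_count:   the DBSIZE reply from the new instance
    """
    reasons = []
    if known_nodes != 1:
        # A fresh cluster-enabled node knows only itself; more means it kept
        # state from an earlier cluster (for example a leftover config file).
        reasons.append("instance already knows %d nodes" % known_nodes)
    if key_count:
        reasons.append("instance holds %d keys" % key_count)
    return (not reasons, reasons)


print(join_precheck(known_nodes=1, key_count=0))
```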

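Node maintenance is done with the redis-trib.rb tool, not the redis-cli client. Exit the client and run:

<redis-dir>/src/redis-trib.rb add-node <new-node-ip>:<port> <any-cluster-node-ip>:<port>

To add a new slave node instead:

<redis-dir>/src/redis-trib.rb add-node --slave --master-id <master-node-id> <new-node-ip>:<port> <any-cluster-node-ip>:<port> (look up the master's node ID with cluster nodes in redis-cli)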

[root@mysql-db01 ~]# /data/redis-3.2.8/src/redis-trib.rb add-node 10.0.0.70:6383 10.0.0.51:6380

>>> Adding node 10.0.0.70:6383 to cluster 10.0.0.51:6380

>>> Performing Cluster Check (using node 10.0.0.51:6380)

M: c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c 10.0.0.51:6380

slots:500-5460,15464-16383 (5881 slots) master

2 additional replica(s)

S: 2f003cfd139ae4f2bbdac40b0055b46bdff96e0a 10.0.0.51:6379

slots: (0 slots) slave

replicates c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c

M: 2da5edfcbb1abc2ed799789cb529309c70cb769e 10.0.0.70:6382

slots:0-499,5461-15463 (10503 slots) master

1 additional replica(s)

S: c0e1784f0359f986972c1f9a0d9788f3d69e6c99 10.0.0.51:6381

slots: (0 slots) slave

replicates 2da5edfcbb1abc2ed799789cb529309c70cb769e

S: 777c9eab94812d13d8b9dc768460dcf1316283f1 10.0.0.70:6384

slots: (0 slots) slave

replicates c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 10.0.0.70:6383 to make it join the cluster.

[OK] New node added correctly.

[root@mysql-db01 ~]#

[root@mysql-db01 ~]# /data/redis-3.2.8/src/redis-cli -h 10.0.0.70 -p 6383

10.0.0.70:6383> cluster nodes

777c9eab94812d13d8b9dc768460dcf1316283f1 10.0.0.70:6384 slave c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c 0 1511226605993 9 connected

c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c 10.0.0.51:6380 master - 0 1511226604987 9 connected 500-5460 15464-16383

c0e1784f0359f986972c1f9a0d9788f3d69e6c99 10.0.0.51:6381 slave 2da5edfcbb1abc2ed799789cb529309c70cb769e 0 1511226603979 7 connected

2da5edfcbb1abc2ed799789cb529309c70cb769e 10.0.0.70:6382 master - 0 1511226600944 7 connected 0-499 5461-15463

8c6534cbfbd2b5453ab4c90c7724a75d55011c27 10.0.0.70:6383 myself,master - 0 0 0 connected ## 10.0.0.70:6383 has joined the cluster, as a master with no slots yet

2f003cfd139ae4f2bbdac40b0055b46bdff96e0a 10.0.0.51:6379 slave c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c 0 1511226602958 9 connected

10.0.0.70:6383>
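The two add-node forms at the start of this step differ only in the --slave --master-id options. A small sketch that builds either argument list, for example for a provisioning script. It also covers redis-cli --cluster add-node, which took over redis-trib.rb's role in Redis 5.0 and later. add_node_argv is a hypothetical helper, and the tools are assumed to be on PATH.

```python
from typing import List, Optional


def add_node_argv(new_node: str, existing_node: str,
                  master_id: Optional[str] = None,
                  tool: str = "redis-trib") -> List[str]:
    """Argument list for adding new_node to the cluster that existing_node belongs to.

    master_id=None adds an empty master; otherwise new_node becomes a slave of master_id.
    """
    if tool == "redis-trib":          # Redis 3.x / 4.x
        argv = ["redis-trib.rb", "add-node"]
        if master_id:
            argv += ["--slave", "--master-id", master_id]
        return argv + [new_node, existing_node]
    if tool == "redis-cli":           # Redis 5.0+
        argv = ["redis-cli", "--cluster", "add-node", new_node, existing_node]
        if master_id:
            argv += ["--cluster-slave", "--cluster-master-id", master_id]
        return argv
    raise ValueError("unknown tool: %r" % tool)


# The command used in this step, rebuilt from its parts.
print(" ".join(add_node_argv("10.0.0.70:6383", "10.0.0.51:6380")))
```

Passing the list to subprocess.run(argv, check=True) avoids shell quoting issues.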

7. Reassign a slave to a different master

Log in to the slave with redis-cli and run:

cluster replicate 5d8ef5a7fbd72ac586bef04fa6de8a88c0671052

where the ID is that of the new master.

[root@mysql-db01 ~]# /data/redis-3.2.8/src/redis-cli -h 10.0.0.70 -p 6383

10.0.0.70:6383> cluster nodes

777c9eab94812d13d8b9dc768460dcf1316283f1 10.0.0.70:6384 slave c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c 0 1511226605993 9 connected

c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c 10.0.0.51:6380 master - 0 1511226604987 9 connected 500-5460 15464-16383

c0e1784f0359f986972c1f9a0d9788f3d69e6c99 10.0.0.51:6381 slave 2da5edfcbb1abc2ed799789cb529309c70cb769e 0 1511226603979 7 connected

2da5edfcbb1abc2ed799789cb529309c70cb769e 10.0.0.70:6382 master - 0 1511226600944 7 connected 0-499 5461-15463

8c6534cbfbd2b5453ab4c90c7724a75d55011c27 10.0.0.70:6383 myself,master - 0 0 0 connected

2f003cfd139ae4f2bbdac40b0055b46bdff96e0a 10.0.0.51:6379 slave c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c 0 1511226602958 9 connected

## 10.0.0.70:6384 and 10.0.0.51:6379 are both slaves of 10.0.0.51:6380; next we make 10.0.0.51:6379 a slave of 10.0.0.70:6383

10.0.0.70:6383> exit

[root@mysql-db01 ~]# /data/redis-3.2.8/src/redis-cli -h 10.0.0.51 -p 6379

10.0.0.51:6379> cluster replicate 8c6534cbfbd2b5453ab4c90c7724a75d55011c27

OK

10.0.0.51:6379> cluster nodes

2f003cfd139ae4f2bbdac40b0055b46bdff96e0a 10.0.0.51:6379 myself,slave 8c6534cbfbd2b5453ab4c90c7724a75d55011c27 0 0 1 connected

8c6534cbfbd2b5453ab4c90c7724a75d55011c27 10.0.0.70:6383 master - 0 1510054872161 0 connected

2da5edfcbb1abc2ed799789cb529309c70cb769e 10.0.0.70:6382 master - 0 1510054875185 7 connected 0-499 5461-15463

c0e1784f0359f986972c1f9a0d9788f3d69e6c99 10.0.0.51:6381 slave 2da5edfcbb1abc2ed799789cb529309c70cb769e 0 1510054876192 7 connected

c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c 10.0.0.51:6380 master - 0 1510054874177 9 connected 500-5460 15464-16383

777c9eab94812d13d8b9dc768460dcf1316283f1 10.0.0.70:6384 slave c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c 0 1510054875688 9 connected

10.0.0.51:6379>
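The decision made in this step (take one of the two slaves of 10.0.0.51:6380 and give it to 10.0.0.70:6383, which had none) can be written down as a small heuristic. This only illustrates the reasoning; it is not a feature of redis-trib.rb, and suggest_replica_move is a hypothetical name. The chosen slave then runs cluster replicate <new master's node ID>.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple


def suggest_replica_move(masters: List[str],
                         replica_of: Dict[str, str]) -> Optional[Tuple[str, str]]:
    """Suggest (slave, new_master) so that a master without slaves gets one.

    masters:    addresses (or IDs) of all masters
    replica_of: slave -> the master it currently replicates
    Only masters with at least two slaves donate one, so no master is left bare.
    Returns None if every master already has a slave or nobody can spare one.
    """
    counts = Counter(replica_of.values())
    orphans = [m for m in masters if counts[m] == 0]
    donors = [m for m in masters if counts[m] >= 2]
    if not orphans or not donors:
        return None
    donor = max(donors, key=lambda m: counts[m])
    slave = min(s for s, m in replica_of.items() if m == donor)
    return slave, orphans[0]


# State before this step, taken from the cluster nodes output above.
MASTERS = ["10.0.0.51:6380", "10.0.0.70:6382", "10.0.0.70:6383"]
REPLICA_OF = {
    "10.0.0.70:6384": "10.0.0.51:6380",
    "10.0.0.51:6381": "10.0.0.70:6382",
    "10.0.0.51:6379": "10.0.0.51:6380",
}
print(suggest_replica_move(MASTERS, REPLICA_OF))
```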

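8. Assign hash slots

<redis-dir>/src/redis-trib.rb reshard <new-node-ip>:<port> (the address of any cluster node works as the entry point; the new node is used here)

The node has been added to the cluster, but it owns no hash slots, and a master without hash slots cannot store any data. We therefore have to move some hash slots from the other masters onto it (like setting up at a market where every stall is taken: someone has to give up part of their space).

Reassign slots to the new master: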

/data/redis-3.2.8/src/redis-trib.rb reshard 10.0.0.70:6383

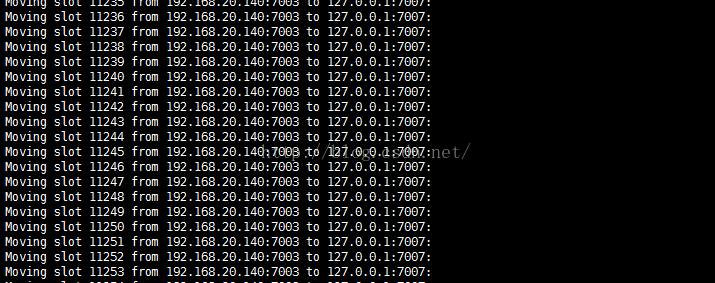

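redis-trib then asks how many hash slots to move (any number will do; this article uses 1000), followed by the receiving node ID, which is the ID of the node we just added (10.0.0.70:6383). Next it asks for the source nodes. Entering all takes slots from every master, each giving up a share in proportion to the number of slots it already owns, not at random. After all is entered, redis-trib shows the proposed plan, asks for confirmation, and then prints a line for each hash slot as it is moved.

When the move has finished, connect with the client and run cluster nodes: the new node now owns hash slots, which completes adding it.

Resharding is another core feature of Redis Cluster: moving hash slots between nodes is how it achieves load balancing and scalability.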

10.0.0.51:6379> cluster nodes

2f003cfd139ae4f2bbdac40b0055b46bdff96e0a 10.0.0.51:6379 myself,slave 8c6534cbfbd2b5453ab4c90c7724a75d55011c27 0 0 1 connected

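8c6534cbfbd2b5453ab4c90c7724a75d55011c27 10.0.0.70:6383 master - 0 1510055392655 10 connected 0-857 5461-5601 ## 10.0.0.70:6383 now owns slots: 858 + 141 = 999, one fewer than the 1000 requested, apparently because each source's share is rounded down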

2da5edfcbb1abc2ed799789cb529309c70cb769e 10.0.0.70:6382 master - 0 1510055391648 7 connected 5602-15463

c0e1784f0359f986972c1f9a0d9788f3d69e6c99 10.0.0.51:6381 slave 2da5edfcbb1abc2ed799789cb529309c70cb769e 0 1510055393661 7 connected

c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c 10.0.0.51:6380 master - 0 1510055394667 9 connected 858-5460 15464-16383

777c9eab94812d13d8b9dc768460dcf1316283f1 10.0.0.70:6384 slave c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c 0 1510055395675 9 connected

10.0.0.51:6379>
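The ranges 10.0.0.70:6383 received above are consistent with each source master giving up a share proportional to the slots it owned, rounded down and taken from its lowest-numbered slots. The sketch below models that observed behaviour so you can predict which ranges a reshard will move; it is a model, not redis-trib.rb's source, and other versions may round differently. expand, compress and proportional_plan are hypothetical names.

```python
from typing import Dict, List, Tuple


def expand(ranges: List[Tuple[int, int]]) -> List[int]:
    """[(0, 2), (7, 7)] -> [0, 1, 2, 7]"""
    return [s for lo, hi in ranges for s in range(lo, hi + 1)]


def compress(slots: List[int]) -> List[str]:
    """Format slots the way cluster nodes does: [0, 1, 2, 7] -> ['0-2', '7']."""
    runs: List[List[int]] = []
    for s in sorted(slots):
        if runs and runs[-1][1] == s - 1:
            runs[-1][1] = s
        else:
            runs.append([s, s])
    return ["%d-%d" % (lo, hi) if lo != hi else str(lo) for lo, hi in runs]


def proportional_plan(sources: Dict[str, List[int]], numslots: int) -> Dict[str, List[int]]:
    """Each source gives floor(numslots * owned / total_owned) of its lowest slots."""
    total = sum(len(slots) for slots in sources.values())
    return {name: sorted(slots)[:numslots * len(slots) // total]
            for name, slots in sources.items()}


# Masters and their slots before the 1000-slot reshard in this step.
plan = proportional_plan({
    "10.0.0.51:6380": expand([(500, 5460), (15464, 16383)]),
    "10.0.0.70:6382": expand([(0, 499), (5461, 15463)]),
}, 1000)
for source, slots in plan.items():
    print(source, len(slots), " ".join(compress(slots)))
```

With these inputs the model gives 0-499 5461-5601 from 10.0.0.70:6382 and 500-857 from 10.0.0.51:6380, the same ranges the cluster nodes output shows.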

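9. Remove a slave node

There are two kinds of node removal: removing a master and removing a slave. A slave has no hash slots assigned, so removing it is simple. redis-trib.rb del-node takes the address of a cluster node followed by the ID of the node to remove: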

[root@mysql-db01 src]# /data/redis-3.2.8/src/redis-trib.rb del-node 10.0.0.51:6381 d14e2f0538dc6925f04d1197b57f44ccdb7c683a

>>> Removing node d14e2f0538dc6925f04d1197b57f44ccdb7c683a from cluster 10.0.0.51:6381

>>> Sending CLUSTER FORGET messages to the cluster...

>>> SHUTDOWN the node.

[root@mysql-db01 src]# /data/redis-3.2.8/src/redis-trib.rb del-node 10.0.0.70:6384 e2cfd53b8083539d1a4546777d0a81b036ddd82a

>>> Removing node e2cfd53b8083539d1a4546777d0a81b036ddd82a from cluster 10.0.0.70:6384

>>> Sending CLUSTER FORGET messages to the cluster...

>>> SHUTDOWN the node.

[root@mysql-db01 src]#
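As its output shows, del-node sends CLUSTER FORGET to the cluster and then shuts the removed node down. CLUSTER FORGET only affects the node that receives it, and the forgotten ID is banned for 60 seconds, so every remaining node must be told within that window or gossip will re-add the node. A sketch of the command list a script doing this by hand would produce; forget_commands is a hypothetical helper, and the IDs come from the step 2 output.

```python
from typing import Dict, List, Tuple


def forget_commands(target_id: str, addr_of: Dict[str, str]) -> List[Tuple[str, str]]:
    """(address, command) pairs for removing target_id by hand.

    addr_of maps every node ID in the cluster (including target_id) to "ip:port".
    Every other node is told to forget the target, then the target is shut down.
    """
    if target_id not in addr_of:
        raise KeyError("unknown node ID: %s" % target_id)
    steps = [(addr, "CLUSTER FORGET " + target_id)
             for node_id, addr in sorted(addr_of.items()) if node_id != target_id]
    steps.append((addr_of[target_id], "SHUTDOWN"))
    return steps


# Node IDs and addresses from the cluster nodes output in step 2.
NODES = {
    "e2cfd53b8083539d1a4546777d0a81b036ddd82a": "10.0.0.70:6384",
    "857a5132c844d695c002f94297f294f8e173e393": "10.0.0.51:6379",
    "e4394d43cf18aa00c0f6833f6f498ba286b55ca1": "10.0.0.70:6382",
    "16eca138ce2767fd8f9d0c8892a38de0a042a355": "10.0.0.70:6383",
    "f1f6e93e625e8e0cef0da1b3dfe0a1ea8191a1ad": "10.0.0.51:6380",
    "d14e2f0538dc6925f04d1197b57f44ccdb7c683a": "10.0.0.51:6381",
}

for addr, cmd in forget_commands("d14e2f0538dc6925f04d1197b57f44ccdb7c683a", NODES):
    print(addr, cmd)
```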

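10. Remove a master node

Removing a master is different: a master holds data, so before removing it we must move that data away by reassigning its hash slots to other masters. In the run below, the master's slave (10.0.0.70:6383) is first moved to another master.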

[root@mysql-db01 ~]# /data/redis-3.2.8/src/redis-cli -h 10.0.0.70 -p 6383

10.0.0.70:6383> cluster replicate f1f6e93e625e8e0cef0da1b3dfe0a1ea8191a1ad ### make 10.0.0.51:6380 this node's new master

OK

10.0.0.70:6383> cluster nodes

16eca138ce2767fd8f9d0c8892a38de0a042a355 10.0.0.70:6383 myself,slave f1f6e93e625e8e0cef0da1b3dfe0a1ea8191a1ad 0 0 5 connected

857a5132c844d695c002f94297f294f8e173e393 10.0.0.51:6379 master - 0 1511203901122 1 connected 4386-5460

f1f6e93e625e8e0cef0da1b3dfe0a1ea8191a1ad 10.0.0.51:6380 master - 0 1511203906160 7 connected 0-4385 5461-16383

10.0.0.70:6383> exit

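a. Move the slots off the master

The node to be removed must be empty, that is, it must not own any hash slots (and so holds no data); otherwise removal fails as shown below. For a non-empty node, reshard first so that its data moves to other nodes.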

[root@mysql-db01 ~]# /data/redis-3.2.8/src/redis-trib.rb del-node 10.0.0.51:6379 857a5132c844d695c002f94297f294f8e173e393
>>> Removing node 857a5132c844d695c002f94297f294f8e173e393 from cluster 10.0.0.51:6379
[ERR] Node 10.0.0.51:6379 is not empty! Reshard data away and try again.
[root@mysql-db01 ~]#

[root@mysql-db01 conf]# /data/redis-3.2.8/src/redis-trib.rb reshard 10.0.0.51:6380 ## the master whose hash slots are to be moved

>>> Performing Cluster Check (using node 10.0.0.51:6380)

M: c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c 10.0.0.51:6380

slots:10923-16383 (5461 slots) master

1 additional replica(s)

M: 2f003cfd139ae4f2bbdac40b0055b46bdff96e0a 10.0.0.51:6379

slots:0-5460 (5463 slots) master

1 additional replica(s)

M: 2da5edfcbb1abc2ed799789cb529309c70cb769e 10.0.0.70:6382

slots:5461-10922 (5462 slots) master

1 additional replica(s)

S: 92dfe8ab12c47980dcc42508672de62bae4921b1 10.0.0.70:6383

slots: (0 slots) slave

replicates 2f003cfd139ae4f2bbdac40b0055b46bdff96e0a

S: c0e1784f0359f986972c1f9a0d9788f3d69e6c99 10.0.0.51:6381

slots: (0 slots) slave

replicates 2da5edfcbb1abc2ed799789cb529309c70cb769e

S: 777c9eab94812d13d8b9dc768460dcf1316283f1 10.0.0.70:6384

slots: (0 slots) slave

replicates c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 5461

## enter the number of slots held by the master being emptied (to drain 10.0.0.51:6380, that is the 5461 shown in the check output above)

What is the receiving node ID?

## enter the ID of the node that will receive the slots (here, the node ID of 10.0.0.51:6379)

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: ## the node to move slots out of (here, the node ID of 10.0.0.51:6380)

Source node #2: ## type done to finish the list

...........

Do you want to proceed with the proposed reshard plan (yes/no)?yes

[root@mysql-db01 conf]# /data/redis-3.2.8/src/redis-cli -h 10.0.0.51 -p 6380

10.0.0.51:6380> cluster nodes

92dfe8ab12c47980dcc42508672de62bae4921b1 10.0.0.70:6383 slave 2f003cfd139ae4f2bbdac40b0055b46bdff96e0a 0 1510037366081 5 connected

2f003cfd139ae4f2bbdac40b0055b46bdff96e0a 10.0.0.51:6379 myself,master - 0 0 1 connected 500-5460

2da5edfcbb1abc2ed799789cb529309c70cb769e 10.0.0.70:6382 master - 0 1510037366583 7 connected 0-499 5461-11422

c0e1784f0359f986972c1f9a0d9788f3d69e6c99 10.0.0.51:6381 slave 2da5edfcbb1abc2ed799789cb529309c70cb769e 0 1510037364065 7 connected

c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c 10.0.0.51:6380 master - 0 1510037363056 2 connected ## no slots left

777c9eab94812d13d8b9dc768460dcf1316283f1 10.0.0.70:6384 slave c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c 0 1510037365075 6 connected
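The "not empty" error at the start of part a follows a simple rule: a master that still owns any slot cannot be removed. A sketch that applies the same rule to one line of cluster nodes output, counting slot ranges and skipping the bracketed markers that cluster nodes shows for slots being migrated or imported. owned_slot_count and removal_blocker are hypothetical helpers; the message wording is my own, not redis-trib.rb's.

```python
from typing import List, Optional


def owned_slot_count(slot_fields: List[str]) -> int:
    """Count slots in the trailing fields of a cluster nodes line."""
    total = 0
    for field in slot_fields:
        if field.startswith("["):
            continue  # migration/import marker such as [93->-<id>], not an owned range
        lo, _, hi = field.partition("-")
        total += int(hi or lo) - int(lo) + 1
    return total


def removal_blocker(nodes_line: str) -> Optional[str]:
    """Return a reason the node cannot be removed yet, or None if it owns no slots."""
    parts = nodes_line.split()
    addr, slot_fields = parts[1], parts[8:]
    count = owned_slot_count(slot_fields)
    if count:
        return "node %s is not empty (%d slots); reshard them away first" % (addr, count)
    return None


print(removal_blocker(
    "857a5132c844d695c002f94297f294f8e173e393 10.0.0.51:6379 master - 0 1511203901122 1 connected 4386-5460"))
```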

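b. Remove the master

Once the master owns no slots, remove it with redis-trib.rb del-node exactly as for a slave in step 9; del-node also shuts the node down. In the run captured below, the removed node was 10.0.0.70:6383, so connecting to it is refused and it no longer appears in the cluster nodes output.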

[root@mysql-db01 ~]# /data/redis-3.2.8/src/redis-cli -h 10.0.0.70 -p 6383

Could not connect to Redis at 10.0.0.70:6383: Connection refused

Could not connect to Redis at 10.0.0.70:6383: Connection refused

not connected> exit

[root@mysql-db01 ~]# /data/redis-3.2.8/src/redis-cli -h 10.0.0.70 -p 6382

10.0.0.70:6382> cluster nodes

2f003cfd139ae4f2bbdac40b0055b46bdff96e0a 10.0.0.51:6379 slave c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c 0 1511225889772 9 connected

2da5edfcbb1abc2ed799789cb529309c70cb769e 10.0.0.70:6382 myself,master - 0 0 7 connected 0-499 5461-15463

c0e1784f0359f986972c1f9a0d9788f3d69e6c99 10.0.0.51:6381 slave 2da5edfcbb1abc2ed799789cb529309c70cb769e 0 1511225890780 7 connected

c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c 10.0.0.51:6380 master - 0 1511225891285 9 connected 500-5460 15464-16383

777c9eab94812d13d8b9dc768460dcf1316283f1 10.0.0.70:6384 slave c93b6d1edd6bc4c69d48f9f49e75c2c7f0d1a70c 0 1511225891788 9 connected

10.0.0.70:6382>

11. Check that all nodes in the cluster are healthy

[root@mysql-db01 ~]# /data/redis-3.2.8/src/redis-trib.rb check 10.0.0.70:6382

>>> Performing Cluster Check (using node 10.0.0.70:6382)

M: e4394d43cf18aa00c0f6833f6f498ba286b55ca1 10.0.0.70:6382

slots:5461-10922 (5462 slots) master

1 additional replica(s)

M: 857a5132c844d695c002f94297f294f8e173e393 10.0.0.51:6379

slots:0-5460 (5461 slots) master

1 additional replica(s)

S: 16eca138ce2767fd8f9d0c8892a38de0a042a355 10.0.0.70:6383

slots: (0 slots) slave

replicates 857a5132c844d695c002f94297f294f8e173e393

S: d14e2f0538dc6925f04d1197b57f44ccdb7c683a 10.0.0.51:6381

slots: (0 slots) slave

replicates e4394d43cf18aa00c0f6833f6f498ba286b55ca1

S: e2cfd53b8083539d1a4546777d0a81b036ddd82a 10.0.0.70:6384

slots: (0 slots) slave

replicates f1f6e93e625e8e0cef0da1b3dfe0a1ea8191a1ad

M: f1f6e93e625e8e0cef0da1b3dfe0a1ea8191a1ad 10.0.0.51:6380

slots:10923-16383 (5461 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

[root@mysql-db01 ~]#
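The final line of the check, [OK] All 16384 slots covered., means every slot from 0 to 16383 has an owner. A sketch of that coverage test, assuming you already have each master's slot ranges (typed in below from the check output). It does not reproduce the other checks (whether all nodes agree, open slots); expand_ranges and coverage_report are hypothetical names.

```python
from typing import Dict, List

TOTAL_SLOTS = 16384


def expand_ranges(fields: List[str]) -> List[int]:
    """['0-2', '7'] -> [0, 1, 2, 7]"""
    slots = []
    for field in fields:
        lo, _, hi = field.partition("-")
        slots.extend(range(int(lo), int(hi or lo) + 1))
    return slots


def coverage_report(masters: Dict[str, List[str]]) -> Dict[str, List[int]]:
    """masters maps a master's address to its slot ranges.

    Returns the slots nobody owns and the slots claimed by more than one master.
    """
    owners: Dict[int, int] = {}
    for fields in masters.values():
        for slot in expand_ranges(fields):
            owners[slot] = owners.get(slot, 0) + 1
    return {
        "missing": [s for s in range(TOTAL_SLOTS) if s not in owners],
        "conflicting": sorted(s for s, n in owners.items() if n > 1),
    }


# Slot ranges from the check output above.
report = coverage_report({
    "10.0.0.70:6382": ["5461-10922"],
    "10.0.0.51:6379": ["0-5460"],
    "10.0.0.51:6380": ["10923-16383"],
})
if not report["missing"] and not report["conflicting"]:
    print("All %d slots covered" % TOTAL_SLOTS)
```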

Everything above is summarized from my own testing and can be used with confidence. If anything is unclear, leave a comment.


浙公网安备 33010602011771号
