Installing k8s

Installing with kubeadm

-

In a test environment, it is recommended to turn off the firewall:

```shell
systemctl stop firewalld      # stop the firewall now
systemctl disable firewalld   # do not start it at boot
```

-

Disable SELinux

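```shell
# Permanent: change SELINUX=enforcing to SELINUX=disabled in /etc/selinux/config (applies after a reboot)
sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config
# Temporary: switch to permissive mode now; lost on reboot
setenforce 0
```

-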
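The only valid values for `SELINUX=` in `/etc/selinux/config` are `enforcing`, `permissive` and `disabled`. A minimal sketch of a safer SELinux edit that rewrites only the active `SELINUX=` line and rejects anything else; the function name `set_selinux_mode` and its optional file argument are hypothetical, added so the edit can be tried on a copy first:

```shell
# Hypothetical helper: set the persistent SELinux mode (applied at next boot).
# FILE defaults to /etc/selinux/config; point it at a copy to try it out first.
set_selinux_mode() {
  local mode="$1" file="${2:-/etc/selinux/config}"
  case "$mode" in
    enforcing|permissive|disabled) ;;
    *) echo "invalid SELinux mode: $mode" >&2; return 1 ;;
  esac
  # Rewrite only the active SELINUX= line; comments and SELINUXTYPE= are left alone.
  sed -i "s/^SELINUX=.*/SELINUX=${mode}/" "$file"
}
# On a real node (as root):
#   set_selinux_mode disabled   # the upstream kubeadm guide uses permissive instead
#   setenforce 0
```

Anchoring the pattern at `^SELINUX=` keeps the explanatory comments in the file and the `SELINUXTYPE=` line untouched.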

Turn off swap

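```shell
# Temporary (lasts until the next reboot)
swapoff -a
# Permanent: edit /etc/fstab, comment out the swap line, then reboot
vi /etc/fstab
```

The swap line in `/etc/fstab` should end up commented out like this:

```
#/dev/mapper/centos-swap swap swap defaults 0 0
```

To confirm swap is off, run `free -m`; if every value in the Swap row is 0, swap is disabled:

```
[root@localhost ~]# free -m
              total        used        free      shared  buff/cache   available
Mem:           1819         229        1307           9         281        1437
Swap:             0           0           0
```

-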
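Editing `/etc/fstab` by hand for swap works, but on several machines a scripted version is less error-prone. A minimal sketch, assuming a standard fstab layout where the third field is the filesystem type; the helpers `comment_out_swap` and `swap_is_off` are hypothetical names, and both take an optional file path so they can be tested on copies:

```shell
# Hypothetical helper: comment out every active swap entry in an fstab file.
comment_out_swap() {
  local file="${1:-/etc/fstab}" tmp rc
  tmp=$(mktemp) || return 1
  # Lines that are not comments and whose 3rd field (fs type) is "swap" get a leading "#".
  awk '$1 !~ /^#/ && $3 == "swap" { $0 = "#" $0 } { print }' "$file" > "$tmp" &&
    cat "$tmp" > "$file"   # write back in place so the file keeps its permissions
  rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Hypothetical helper: succeed if /proc/swaps lists no active swap devices
# (the first line of that file is only a header).
swap_is_off() {
  awk 'NR > 1 { found = 1 } END { exit found }' "${1:-/proc/swaps}"
}
# On a real node (as root):
#   swapoff -a && comment_out_swap && swap_is_off && echo "swap is off"
```

Checking `/proc/swaps` gives a yes/no answer that is easier to script than reading the Swap row of `free -m`.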

Name the hosts: edit `/etc/hosts` on each machine and map each IP to a hostname:

```
192.168.100.181 master
192.168.100.182 node1
192.168.100.183 node2
```

-
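Since the same host entries go on every machine, an idempotent append avoids duplicate lines when the setup is re-run. A minimal sketch; `add_hosts` is a hypothetical helper that takes the hosts file path followed by IP/name pairs and skips any name that is already mapped:

```shell
# Hypothetical helper: append "IP NAME" lines to a hosts file, skipping any
# hostname that already appears on a non-comment line, so reruns are safe.
add_hosts() {
  local file="$1" ip name
  shift
  while [ "$#" -ge 2 ]; do
    ip="$1" name="$2"
    shift 2
    if ! awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
      printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
  done
}
# On each machine (as root):
#   add_hosts /etc/hosts 192.168.100.181 master 192.168.100.182 node1 192.168.100.183 node2
#   hostnamectl set-hostname master   # node1 / node2 on the workers
```

`hostnamectl set-hostname` sets each machine's own name so it matches its entry in the table.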

Configure bridged traffic forwarding

```shell
# Create the config file
touch /etc/sysctl.d/k8s.conf
# Edit k8s.conf and add the lines below
vi /etc/sysctl.d/k8s.conf
```

```
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
```

Then run `sysctl --system` to reload the settings.
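The `net.bridge.*` keys only exist once the `br_netfilter` kernel module is loaded, and kubeadm's preflight checks also expect `net.ipv4.ip_forward = 1`; the upstream Kubernetes container-runtime prerequisites cover both. A minimal sketch that writes the module list and the sysctl file together, assuming the standard `/etc/modules-load.d` and `/etc/sysctl.d` locations; the function `write_k8s_net_conf` and its optional root-directory argument are hypothetical, added so the output can be inspected in a scratch directory:

```shell
# Hypothetical helper: write the kernel-module and sysctl files kubeadm needs.
# ROOT defaults to "" (i.e. the real /etc); pass a temp dir to dry-run.
write_k8s_net_conf() {
  local root="${1:-}"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  # Load these modules at boot; br_netfilter provides the net.bridge.* keys.
  printf 'overlay\nbr_netfilter\n' > "$root/etc/modules-load.d/k8s.conf"
  cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
# On a real node (as root), load the modules now and apply the settings:
#   write_k8s_net_conf && modprobe overlay && modprobe br_netfilter && sysctl --system
```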

-

Sync the system clock over the network and set the time zone

```shell
# Install ntpdate and sync against a network time server
yum install ntpdate -y
ntpdate time.windows.com
# Set the time zone to UTC+8
timedatectl set-timezone Asia/Shanghai
```

-

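Install a pinned version of Docker (see the Docker installation docs): k8s 1.23.6 supports Docker 20.10, and newer versions are not supported. Kubernetes 1.23 is also the last release that ships dockershim; from 1.24 on, Docker Engine can no longer be used directly.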
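To see which exact Docker versions the configured repository offers, `yum list docker-ce --showduplicates | sort -r` lists them all, so a specific 20.10.x build can be installed. A minimal sketch of a guard that stops a setup script when the installed server version is outside the 20.10 series; `docker_version_ok` is a hypothetical helper, and the version string is read with `docker version --format '{{.Server.Version}}'`:

```shell
# Hypothetical helper: succeed only for Docker server versions in the 20.10
# series, which the steps above say k8s 1.23.6 requires.
docker_version_ok() {
  case "$1" in
    20.10.*) return 0 ;;
    *) echo "unsupported Docker version: ${1:-unknown}" >&2; return 1 ;;
  esac
}
# On each node:
#   docker_version_ok "$(docker version --format '{{.Server.Version}}')" || exit 1
```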

-

Add the Kubernetes yum repository, using a mirror in China

Create `/etc/yum.repos.d/kubernetes.repo` (e.g. with `vi`) containing:

```ini
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
```

-

Install kubeadm, kubelet and kubectl

```shell
yum install -y kubeadm-1.23.6 kubelet-1.23.6 kubectl-1.23.6
```

-

Enable the kubelet at boot

```shell
systemctl enable kubelet
```

-

Edit the Docker configuration file `/etc/docker/daemon.json` (e.g. `vi /etc/docker/daemon.json`) and add `"exec-opts": ["native.cgroupdriver=systemd"]`.

This is needed because Docker's default cgroup driver is `cgroupfs`, while kubeadm configures the kubelet to use `systemd` (the default since kubeadm 1.22), and the two must match. Also configure several registry mirrors, otherwise some images are hard to pull. JSON does not allow comments, so keep notes like this out of the file:

```json
{
  "registry-mirrors": [
    "https://kn0t2bca.mirror.aliyuncs.com",
    "https://docker.mirrors.ustc.edu.cn",
    "https://cr.console.aliyun.com/",
    "https://reg-mirror.qiniu.com",
    "https://6kx4zyno.mirror.aliyuncs.com",
    "https://hub-mirror.c.163.com/",
    "https://dockerproxy.com"
  ],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
```

Then reload systemd and restart Docker and the kubelet:

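```shell
systemctl daemon-reload
systemctl restart docker
systemctl restart kubelet
systemctl status kubelet
```

Run this step on every node. Until `kubeadm init` (or `kubeadm join`) has run on a node, its kubelet restarts every few seconds; that is expected.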
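A malformed `daemon.json` (for example a `#` comment or a trailing comma) prevents Docker from starting, so a check before `systemctl restart docker` can save a confusing failure. A minimal sketch that uses Python's `json.tool` module as the JSON validator, assuming `python3` or `python` is installed; `check_daemon_json` is a hypothetical helper:

```shell
# Hypothetical helper: check that daemon.json parses as JSON and sets the
# systemd cgroup driver. Python's json.tool is used only as a JSON parser.
check_daemon_json() {
  local file="${1:-/etc/docker/daemon.json}" py
  py=$(command -v python3 || command -v python) || { echo "python not found" >&2; return 2; }
  "$py" -m json.tool "$file" > /dev/null || return 1
  grep -q '"native.cgroupdriver=systemd"' "$file"
}
# On each node, before restarting Docker:
#   check_daemon_json && systemctl restart docker
```

After the restart, `docker info --format '{{.CgroupDriver}}'` should print `systemd`.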

-

Initialize the master node (run on the master only)

```shell
kubeadm init \
  --apiserver-advertise-address=192.168.100.181 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version=v1.23.6 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=10.244.0.0/16
```

If the installation fails, troubleshoot the error, then run `kubeadm reset` and initialize the master again.
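The same settings can live in a kubeadm configuration file instead of flags, which is easier to keep and reuse after a `kubeadm reset`. A minimal sketch, assuming the `kubeadm.k8s.io/v1beta3` config API used by kubeadm 1.23; the helper name `write_kubeadm_config` and the file name `kubeadm-config.yaml` are hypothetical:

```shell
# Hypothetical helper: write a kubeadm config equivalent to the init flags above.
write_kubeadm_config() {
  local out="${1:-kubeadm-config.yaml}"
  cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.100.181
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.23.6
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
EOF
}
# On the master only:
#   write_kubeadm_config
#   kubeadm config images pull --config kubeadm-config.yaml   # optional pre-pull
#   kubeadm init --config kubeadm-config.yaml
```

Pre-pulling with `kubeadm config images pull` separates image-download problems from actual init errors.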

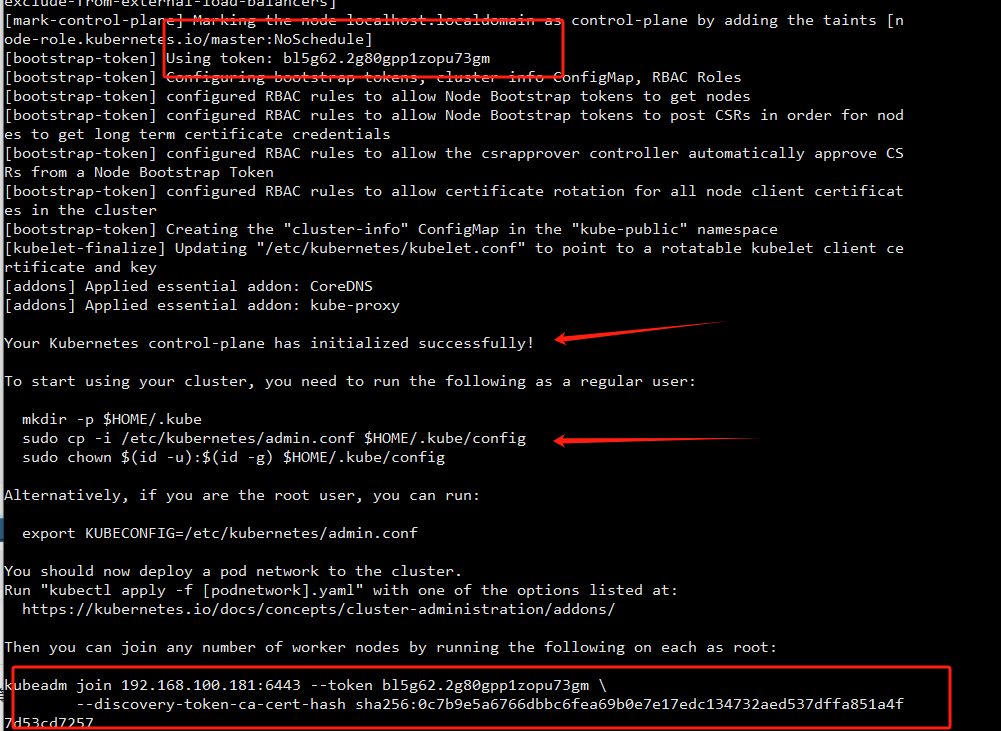

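Initialization has succeeded once you see the message shown below ("Your Kubernetes control-plane has initialized successfully!"). Then run the three commands marked by the second arrow: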

![image-20231231215307169]()

```shell
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

-

Check the kubelet status with `systemctl status kubelet`.

`Active: active (running)` means the kubelet started successfully:

```
[root@localhost sysctl.d]# systemctl status kubelet
● kubelet.service - kubelet: The Kubernetes Node Agent
   Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)
  Drop-In: /usr/lib/systemd/system/kubelet.service.d
           └─10-kubeadm.conf
   Active: active (running) since Sun 2023-12-31 21:22:25 CST; 4min 29s ago
     Docs: https://kubernetes.io/docs/
 Main PID: 11318 (kubelet)
    Tasks: 15
   Memory: 48.1M
   CGroup: /system.slice/kubelet.service
           └─11318 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf...
```

-

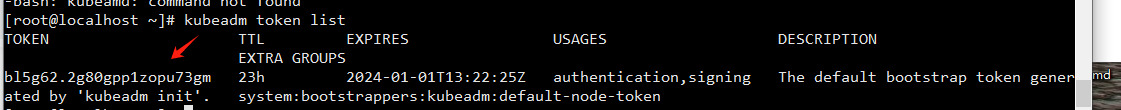

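Use `kubeadm token list` to list tokens; if the token has expired, create a new one with `kubeadm token create`. The token is also printed by `kubeadm init` (in the red box above the initialization-success message). By default a token expires after one day.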

![image-20231231214532225]()
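When a token has expired, `kubeadm token create --print-join-command` on the master creates a new token and prints the complete `kubeadm join` line, CA hash included, so nothing has to be assembled by hand. When a token is copied into a script from elsewhere, a format check catches paste errors early. A minimal sketch; `is_bootstrap_token` is a hypothetical helper, and the pattern is kubeadm's documented token format `[a-z0-9]{6}.[a-z0-9]{16}`:

```shell
# Hypothetical helper: check that a string has kubeadm's bootstrap-token shape:
# six lowercase alphanumerics, a dot, then sixteen more.
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eqx '[a-z0-9]{6}\.[a-z0-9]{16}'
}
# On the master, print a fresh, complete join command (new token + CA hash):
#   kubeadm token create --print-join-command
```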

-

Get the CA certificate hash (it is also printed on the last line of a successful `kubeadm init`):

```shell
openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt \
  | openssl rsa -pubin -outform der 2>/dev/null \
  | openssl dgst -sha256 -hex \
  | sed 's/^.* //'
```

Prefix the resulting hash with `sha256:` when you use it.
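One pitfall of the pipeline above: if the certificate cannot be read, the final `openssl dgst` still succeeds and prints the hash of empty input, which then fails confusingly at `kubeadm join`. A minimal sketch that wraps the same pipeline, checks the key first and adds the `sha256:` prefix; `ca_cert_hash` is a hypothetical helper, and like the original pipeline it assumes an RSA CA key (the kubeadm default):

```shell
# Hypothetical helper: print "sha256:<hex>" for --discovery-token-ca-cert-hash.
ca_cert_hash() {
  local crt="${1:-/etc/kubernetes/pki/ca.crt}" hex
  # Fail early if the file is missing or its public key is not RSA; otherwise
  # the pipeline below would quietly hash empty input.
  openssl x509 -pubkey -noout -in "$crt" 2>/dev/null |
    openssl rsa -pubin -noout 2>/dev/null || { echo "cannot read RSA key from $crt" >&2; return 1; }
  # Same pipeline as above: DER-encode the public key, SHA-256 it, keep the hex.
  hex=$(openssl x509 -pubkey -noout -in "$crt" |
    openssl rsa -pubin -outform der 2>/dev/null |
    openssl dgst -sha256 -hex | sed 's/^.* //')
  printf 'sha256:%s\n' "$hex"
}
# On the master:
#   ca_cert_hash
```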

-

Join the new nodes

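```shell
kubeadm join 192.168.100.181:6443 --token bl5g62.2g80gpp1zopu73gm \
  --discovery-token-ca-cert-hash sha256:0c7b9e5a6766dbbc6fea69b0e7e17edc134732aed537dffa851a4f7d53cd7257
```

Note: if the join fails because of a Docker version mismatch (for example, the default Docker version was installed), uninstall Docker, reinstall the pinned version, then run `kubeadm reset` on the worker node and join again.

-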

After the workers have joined, run `kubectl get node` on the master to list the nodes; node1 and node2 are the newly joined ones:

```
[root@master ~]# kubectl get no
NAME     STATUS     ROLES                  AGE     VERSION
master   NotReady   control-plane,master   7m53s   v1.23.6
node1    NotReady   <none>                 3m16s   v1.23.6
node2    NotReady   <none>                 2m16s   v1.23.6
```

-

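The nodes above are still `NotReady` because no Pod network (CNI) plugin has been installed yet. Install Calico next; some of its images are slow or hard to pull.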

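```shell
# If the download fails, fetch the file in a browser and upload it to the server
mkdir -p /opt/k8s
cd /opt/k8s
curl https://docs.projectcalico.org/manifests/calico.yaml -O
```

In the file, find `CALICO_IPV4POOL_CIDR` and set its value to the pod CIDR passed to `kubeadm init` (`--pod-network-cidr=10.244.0.0/16`). If the entry is commented out, uncomment it as well.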
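The CIDR edit in `calico.yaml` can be scripted so it is repeatable. A minimal sketch, assuming the downloaded manifest contains the two commented-out lines `# - name: CALICO_IPV4POOL_CIDR` and `#   value: "192.168.0.0/16"` (check your copy first, as manifests differ between Calico releases); `set_calico_pool_cidr` is a hypothetical helper:

```shell
# Hypothetical helper: uncomment CALICO_IPV4POOL_CIDR in calico.yaml and set it
# to the given pod CIDR. Assumes the manifest ships the entry commented out as
#   # - name: CALICO_IPV4POOL_CIDR
#   #   value: "192.168.0.0/16"
set_calico_pool_cidr() {
  local cidr="$1" file="${2:-calico.yaml}"
  # "|" is the sed delimiter because the CIDR itself contains "/".
  sed -i \
    -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
    -e "s|#   value: \"192.168.0.0/16\"|  value: \"${cidr}\"|" \
    "$file"
  # Fail if the expected value did not end up in the file.
  grep -q "value: \"${cidr}\"" "$file"
}
# In /opt/k8s:
#   set_calico_pool_cidr 10.244.0.0/16 calico.yaml
```

Removing the `# ` prefix and shifting `value:` two columns keeps the YAML indentation consistent with the surrounding `env` list.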

```yaml
# The default IPv4 pool to create on startup if none exists. Pod IPs will be
# chosen from this range. Changing this value after installation will have
# no effect. This should fall within `--cluster-cidr`.
- name: CALICO_IPV4POOL_CIDR
  value: "10.244.0.0/16"
```

To speed things up, strip the `docker.io/` prefix from the image names:

```shell
sed -i 's#docker.io/##g' calico.yaml
```

Deploy Calico:

```shell
kubectl apply -f calico.yaml
```

This takes a while, because some images pull slowly; configuring more (and better) registry mirrors in China helps.

Note: `docker pull docker.io/calico/cni:v3.26.1` is extremely slow, and many mirrors cannot pull it at all.

-

Check the status of the nodes and pods; if an image still cannot be pulled, change the registry mirrors and try again.

```
# Node status
[root@master ~]# kubectl get no
NAME     STATUS   ROLES                  AGE   VERSION
master   Ready    control-plane,master   13h   v1.23.6
node1    Ready    <none>                 13h   v1.23.6
node2    Ready    <none>                 13h   v1.23.6

# Pod status
[root@master ~]# kubectl get po -n kube-system
NAME                                       READY   STATUS    RESTARTS      AGE
calico-kube-controllers-57c6dcfb5b-pq6b8   1/1     Running   2 (25m ago)   12h
calico-node-9xvn5                          1/1     Running   0             12h
calico-node-cnmz5                          1/1     Running   2 (25m ago)   12h
calico-node-m8sgd                          1/1     Running   0             12h
coredns-6d8c4cb4d-frjnq                    1/1     Running   2 (25m ago)   13h
coredns-6d8c4cb4d-mck4t                    1/1     Running   2 (25m ago)   13h
etcd-master                                1/1     Running   6 (31m ago)   13h
kube-apiserver-master                      1/1     Running   5 (31m ago)   13h
kube-controller-manager-master             1/1     Running   5 (31m ago)   13h
kube-proxy-67tc2                           1/1     Running   4 (25m ago)   13h
kube-proxy-qhqrb                           1/1     Running   5 (31m ago)   13h
kube-proxy-rnbxn                           1/1     Running   3 (40m ago)   13h
kube-scheduler-master                      1/1     Running   6 (31m ago)   13h
```

If a pod is not ready, run `kubectl describe po calico-node-9xvn5 -n kube-system` (with that pod's name) to see why.
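With several nodes it helps to list only the problem ones, and `kubectl wait --for=condition=Ready node --all --timeout=300s` can block until every node reports Ready. A minimal sketch of a filter over the `kubectl get no` table shown above; `not_ready_nodes` is a hypothetical helper that assumes STATUS is the second column:

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and print the
# names of nodes whose STATUS is anything other than exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
# On the master:
#   kubectl get no | not_ready_nodes
```

A status such as `Ready,SchedulingDisabled` is also reported, since the comparison is exact.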

-

Let the worker nodes reach the k8s API server with kubectl

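```shell
# On the master: copy the kubeconfig to node1 and node2
scp /etc/kubernetes/admin.conf root@node1:/etc/kubernetes/
scp /etc/kubernetes/admin.conf root@node2:/etc/kubernetes/
# On each node: export the environment variable
echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile
source ~/.bash_profile
```

After this, `kubectl get no` and other API calls also work on the worker nodes.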

```
# node2 can now call the API server
[root@node2 ~]# kubectl get no
NAME     STATUS   ROLES                  AGE   VERSION
master   Ready    control-plane,master   14h   v1.23.6
node1    Ready    <none>                 13h   v1.23.6
node2    Ready    <none>                 13h   v1.23.6
```

-

Create an nginx pod

Create `nginx-demo.yaml` with the following content:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-demo-metadate
  labels:
    type: app
    version: 0.0.1
  namespace: 'default'
spec:
  containers:
    - name: nginx-name-container
      image: nginx:1.7.9
      imagePullPolicy: IfNotPresent
      command:
        - nginx
        - -g
        - 'daemon off;'
      workingDir: /usr/share/nginx/html
      ports:
        - name: http
          containerPort: 80
          protocol: TCP
      env:
        - name: JVM_OPTS
          value: '-Xms128m -Xmx256m'
      resources:
        requests:
          cpu: 100m
          memory: 128Mi
        limits:
          cpu: 200m
          memory: 256Mi
  restartPolicy: OnFailure
```

Create the pod:

```shell
kubectl create -f nginx-demo.yaml
```

Check the pod:

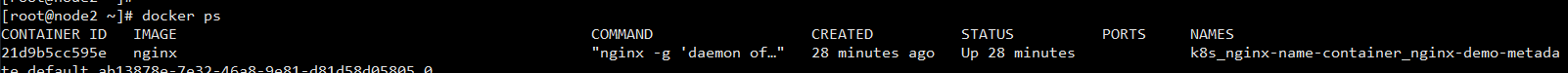

```
[root@master pods]# kubectl get pods -o wide
NAME                  READY   STATUS    RESTARTS   AGE   IP             NODE    NOMINATED NODE   READINESS GATES
nginx-demo-metadate   1/1     Running   0          27m   10.244.104.1   node2   <none>           <none>
```

The pod was scheduled on node2; on node2, `docker ps` shows its container.

![image-20240101183904103]()

Describe the pod to see how it was created and how it is configured:

```shell
kubectl describe pods nginx-demo-metadate
```

```
Events:
  Type    Reason     Age   From               Message
  ----    ------     ----  ----               -------
  Normal  Scheduled  24m   default-scheduler  Successfully assigned default/nginx-demo-metadate to node2
  Normal  Pulling    24m   kubelet            Pulling image "nginx:1.7.9"
  Normal  Pulled     24m   kubelet            Successfully pulled image "nginx:1.7.9" in 22.714137674s
  Normal  Created    24m   kubelet            Created container nginx-name-container
  Normal  Started    24m   kubelet            Started container nginx-name-container
```

Play hard when you can; study hard when you can't.

-- Heaven rewards the diligent; what counts is persistence.

Posted on 2023-12-30 20:00 by zhangyukun

浙公网安备 33010602011771号
