18_Naive Bayes Case Study

1. Case study:

Text classification on sklearn's 20 Newsgroups dataset.

The 20 Newsgroups dataset contains roughly 18,000 newsgroup posts covering 20 topics.

2. Workflow of the Naive Bayes case study:

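1) Load the 20 Newsgroups data and split it into training and test sets

2) Extract word features (TF-IDF weights) from the articles

3) Fit the Naive Bayes estimator and use it to predict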
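As a rough illustration of what step 3 computes, here is a minimal from-scratch sketch of multinomial Naive Bayes in plain Python. It uses only the standard library and is not scikit-learn's implementation. It assumes the articles are already tokenized into word lists and uses raw word counts instead of TF-IDF weights to keep the numbers readable; the toy corpus and the function names `train_multinomial_nb` and `predict` are hypothetical. Each word likelihood is smoothed as P(w | c) = (count(w, c) + alpha) / (total words in c + alpha * vocabulary size), which is the role played by `alpha` in `MultinomialNB(alpha=1.0)`.

```python
import math
from collections import Counter, defaultdict


def train_multinomial_nb(docs, labels, alpha=1.0):
    """Estimate log class priors and smoothed log word likelihoods.

    docs: list of token lists; labels: one class label per doc.
    """
    vocab = {w for doc in docs for w in doc}
    class_sizes = Counter(labels)       # number of documents per class
    word_counts = defaultdict(Counter)  # class -> word -> count
    for doc, label in zip(docs, labels):
        word_counts[label].update(doc)

    log_prior = {c: math.log(n / len(docs)) for c, n in class_sizes.items()}
    log_likelihood = {}
    for c in class_sizes:
        # Additive smoothing over the whole vocabulary (Laplace when alpha=1)
        denom = sum(word_counts[c].values()) + alpha * len(vocab)
        log_likelihood[c] = {
            w: math.log((word_counts[c][w] + alpha) / denom) for w in vocab
        }
    return log_prior, log_likelihood


def predict(doc, log_prior, log_likelihood):
    """Return the class with the highest log posterior; unseen words are skipped."""
    scores = {
        c: prior + sum(log_likelihood[c][w] for w in doc if w in log_likelihood[c])
        for c, prior in log_prior.items()
    }
    return max(scores, key=scores.get)


# Hypothetical toy corpus: two "sport" and two "tech" articles
train_docs = [
    "the team won the match".split(),
    "a great goal in the final match".split(),
    "new graphics card and cpu".split(),
    "install the driver for the graphics card".split(),
]
train_labels = ["sport", "sport", "tech", "tech"]

log_prior, log_likelihood = train_multinomial_nb(train_docs, train_labels, alpha=1.0)
print(predict("who won the final".split(), log_prior, log_likelihood))     # sport
print(predict("graphics card driver".split(), log_prior, log_likelihood))  # tech
```

Scores are summed in log space so that long articles do not underflow to zero, and without smoothing a single word missing from one class's training articles would force that class's probability to zero.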

3. Code implementation:

from sklearn.datasets import fetch_20newsgroups

from sklearn.model_selection import train_test_split

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.naive_bayes import MultinomialNB

def naviebayes():

news = fetch_20newsgroups()

x_train,x_test,y_train,y_test = train_test_split(news.data,news.target,test_size=0.25)

# 对数据集进行特征抽取

tf = TfidfVectorizer()

# 以训练集中的词列表进行每篇文章的重要性统计,x_train得到一些词,来预测x_test

x_train = tf.fit_transform(x_train)

print(tf.get_feature_names())

x_test = tf.transform(x_test)

# 进行朴素贝叶斯的预测

mlt = MultinomialNB(alpha=1.0)

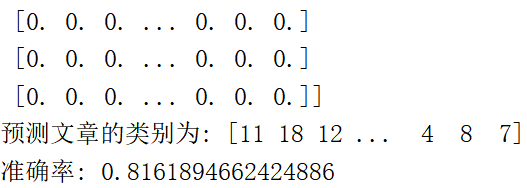

print(x_train.toarray()) # toarray()作用,转为矩阵形式

mlt.fit(x_train,y_train)

y_predict = mlt.predict(x_test)

print("预测文章的类别为:",y_predict)

print("准确率:",mlt.score(x_test,y_test))

if __name__ == '__main__':

naviebayes()
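The comment about fitting on `x_train` and only transforming `x_test` can be made concrete with a small standard-library sketch of TF-IDF. It is intended to mirror scikit-learn's `TfidfVectorizer` defaults as I understand them (lowercasing, the token pattern `(?u)\b\w\w+\b`, raw term counts, smoothed IDF ln((1 + n) / (1 + df)) + 1, L2-normalized rows), but it is an illustration, not the library's code; the helper names `tokenize`, `fit_idf` and `transform` and the toy sentences are hypothetical.

```python
import math
import re
from collections import Counter

# Same default token pattern as TfidfVectorizer: words of 2+ word characters
TOKEN_RE = re.compile(r"(?u)\b\w\w+\b")


def tokenize(text):
    return TOKEN_RE.findall(text.lower())


def fit_idf(train_texts):
    """Learn the vocabulary and smoothed IDF weights from the training texts only."""
    n = len(train_texts)
    df = Counter()
    for text in train_texts:
        df.update(set(tokenize(text)))  # document frequency: count each word once per text
    vocab = sorted(df)                  # alphabetical column order
    idf = {t: math.log((1 + n) / (1 + df[t])) + 1 for t in vocab}
    return vocab, idf


def transform(texts, vocab, idf):
    """Map each text to an L2-normalized TF-IDF vector over the fitted vocabulary."""
    rows = []
    for text in texts:
        # Words that never appeared in training are silently dropped
        counts = Counter(t for t in tokenize(text) if t in idf)
        row = [counts[t] * idf[t] for t in vocab]
        norm = math.sqrt(sum(v * v for v in row))
        rows.append([v / norm for v in row] if norm else row)
    return rows


train_texts = ["The cat sat", "The dog sat", "The dog barked"]
test_texts = ["The cat barked loudly"]  # "loudly" never appears in training

vocab, idf = fit_idf(train_texts)
x_test = transform(test_texts, vocab, idf)
print(vocab)  # ['barked', 'cat', 'dog', 'sat', 'the']
print(x_test[0])
```

Because the vocabulary comes only from the training texts, the test vector has exactly the same columns as the training vectors, and the unseen word `loudly` contributes nothing. Calling `fit_transform` again on the test set would instead produce a different set of columns that the already-trained classifier could not use.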

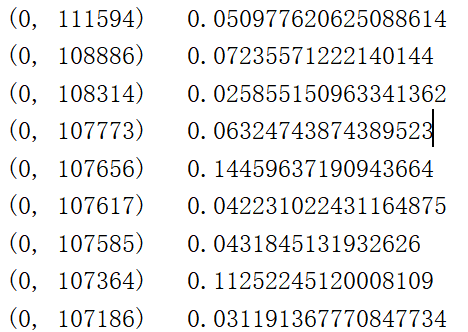

Result for x_test:

Zhejiang Public Security Network Record No. 33010602011771
