[LangChain] Prompt

Prompt source code analysis

PromptTemplate

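A prompt template consists of a string template. It takes parameters supplied by the user and uses them to generate a prompt for the language model.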

The from_template method

```python
@classmethod
def from_template(
    cls,
    template: str,
    *,
    template_format: PromptTemplateFormat = "f-string",
    partial_variables: Optional[dict[str, Any]] = None,
    **kwargs: Any,
) -> PromptTemplate:
    """
    20250708 -
    template: the template text. If you pass an f-string (f"..."), Python substitutes
        the variables immediately, so they must already have values; a plain string
        with {} placeholders leaves them to be filled in by the template later.
    template_format (PromptTemplateFormat): the template format, "f-string" by default.
    partial_variables: values for some of the template's variables, supplied in advance.

    Load a prompt template from a template.

    Args:
        template: The template to load.
        template_format: The format of the template. Use `jinja2` for jinja2,
            `mustache` for mustache, and `f-string` for f-strings.
            Defaults to `f-string`.
        partial_variables: A dictionary of variables that can be used to partially
            fill in the template. For example, if the template is
            `"{variable1} {variable2}"`, and `partial_variables` is
            `{"variable1": "foo"}`, then the final prompt will be
            `"foo {variable2}"`. Defaults to None.
        kwargs: Any other arguments to pass to the prompt template.

    Returns:
        The prompt template loaded from the template.
    """
    # 20250708 - extract the variables from the template ({}-style fields by default)
    input_variables = get_template_variables(template, template_format)
    # 20250708 - dict of pre-filled values; may be omitted (None becomes {})
    _partial_variables = partial_variables or {}
    # 20250708 - drop the variables already supplied through partial_variables
    if _partial_variables:
        input_variables = [
            var for var in input_variables if var not in _partial_variables
        ]
    # 20250708 - instantiate the template object
    return cls(
        input_variables=input_variables,
        template=template,
        template_format=template_format,  # type: ignore[arg-type]
        partial_variables=_partial_variables,
        **kwargs,
    )
```
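A minimal standard-library sketch of the two steps above for the default f-string format, assuming Python's string.Formatter().parse is an adequate stand-in for get_template_variables (it does not cover the jinja2 or mustache formats). `extract_fstring_variables` and `sketch_from_template` are hypothetical helpers, and a plain dict stands in for the PromptTemplate instance:

```python
from string import Formatter
from typing import Any, Optional


def extract_fstring_variables(template: str) -> list[str]:
    """Collect the names of {} fields in a str.format-style template."""
    names = {
        field_name
        for _, field_name, _, _ in Formatter().parse(template)
        if field_name is not None  # chunks of literal text have no field
    }
    return sorted(names)


def sketch_from_template(
    template: str, partial_variables: Optional[dict[str, Any]] = None
) -> dict[str, Any]:
    """Hypothetical stand-in for PromptTemplate.from_template (f-string only)."""
    input_variables = extract_fstring_variables(template)
    _partial_variables = partial_variables or {}
    if _partial_variables:
        # Variables supplied in advance no longer have to be passed by the caller
        input_variables = [v for v in input_variables if v not in _partial_variables]
    return {
        "input_variables": input_variables,
        "template": template,
        "partial_variables": _partial_variables,
    }


spec = sketch_from_template("{variable1} {variable2}", {"variable1": "foo"})
print(spec["input_variables"])  # ['variable2']
```

With the docstring's own example, only variable2 is left for the caller to supply.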

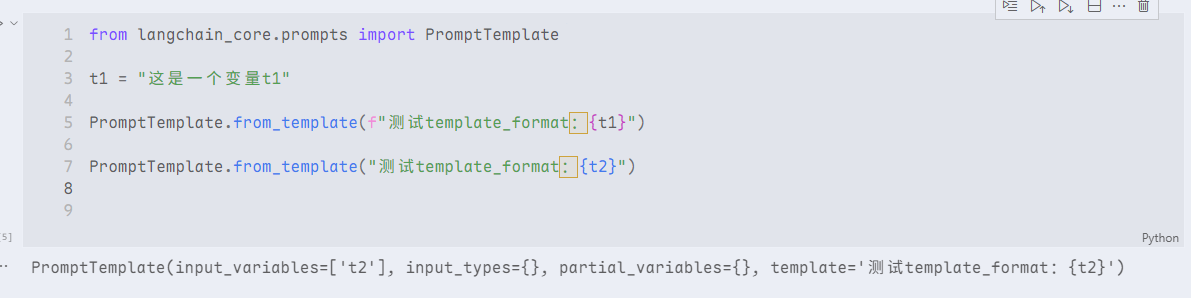

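```python
from langchain_core.prompts import PromptTemplate

t1 = "this is variable t1"
# f-string: Python substitutes {t1} immediately, so the template has no input variables
PromptTemplate.from_template(f"testing template_format: {t1}")
# plain string: {t2} stays a template variable, filled in at format time
PromptTemplate.from_template("testing template_format: {t2}")
# t1 is pre-filled via partial_variables, so only t2 has to be passed to format
template1 = PromptTemplate.from_template("variable set in advance: {t1}, testing template_format: {t2}", partial_variables={"t1": t1})
template1.format(t2="xxxxx")
```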

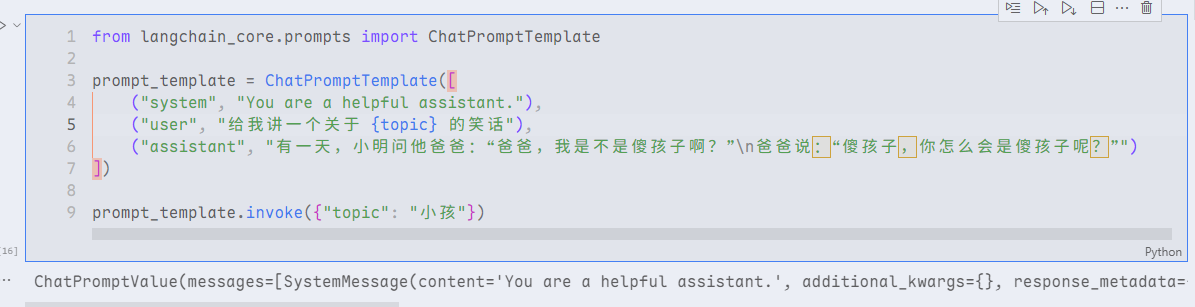
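The difference between the first two calls is ordinary Python behaviour rather than anything LangChain-specific: an f-string is evaluated as soon as the line runs, while a normal string keeps its {} fields until it is formatted. The sketch below shows this with str.format and models partial_variables as a dict merged in before formatting, which is a simplification of what PromptTemplate does internally:

```python
t1 = "this is variable t1"

# f-string: {t1} is replaced right here, so no placeholder survives
eager = f"testing template_format: {t1}"

# plain string: {t2} is still a placeholder to be filled later
deferred = "testing template_format: {t2}"

# partial_variables modelled as pre-filled values merged with the caller's arguments
partials = {"t1": t1}
template = "variable set in advance: {t1}, testing template_format: {t2}"
filled = template.format(**{**partials, "t2": "xxxxx"})

print(eager)                        # testing template_format: this is variable t1
print(deferred.format(t2="xxxxx"))  # testing template_format: xxxxx
print(filled)
```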

ChatPromptTemplate

This prompt template is mainly used to format a list of messages, which makes it suitable for multi-turn conversations.

```python
from langchain_core.prompts import ChatPromptTemplate

template = ChatPromptTemplate([
    ("system", "You are a helpful AI bot. Your name is {name}."),
    ("human", "Hello, how are you doing?"),
    ("ai", "I'm doing well, thanks!"),
    ("human", "{user_input}"),
])
```

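```python
from langchain_core.prompts import ChatPromptTemplate

# "user" and "assistant" are accepted as aliases for "human" and "ai"
prompt_template = ChatPromptTemplate([
    ("system", "You are a helpful assistant."),
    ("user", "Tell me a joke about {topic}"),
    ("assistant", 'One day Xiao Ming asked his dad: "Dad, am I a silly kid?"\nHis dad said: "Silly kid, how could you be a silly kid?"'),
])
prompt_template.invoke({"topic": "kids"})

prompt_template1 = ChatPromptTemplate([
    ("assistant", 'One day Xiao Ming asked his dad: "Dad, am I a silly kid?"\nHis dad said: "Silly kid, how could you be a silly kid?"'),
    ("ai", "One day sssssssscccccxxx"),
    # "placeholder" with a "{var}" string creates an optional MessagesPlaceholder
    ("placeholder", "{param_test1}"),
    # "placeholder" with ["{var}", bool]: the bool sets whether it is optional
    ("placeholder", ["{param_test1}", True]),
])
prompt_template1
```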
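Roughly speaking, each (role, template) tuple is turned into one message whose text is formatted with the input variables, and "user"/"assistant" are accepted as aliases for "human"/"ai". The standard-library sketch below mimics only that mapping; `ROLE_ALIASES` and `format_chat` are hypothetical names, placeholders are left out, and plain (role, text) pairs stand in for LangChain's message objects:

```python
ROLE_ALIASES = {"user": "human", "assistant": "ai"}


def format_chat(messages: list[tuple[str, str]], **kwargs: str) -> list[tuple[str, str]]:
    """Format each (role, template) pair, normalising role aliases."""
    formatted = []
    for role, template in messages:
        canonical = ROLE_ALIASES.get(role, role)
        if canonical not in {"system", "human", "ai"}:
            raise ValueError(f"unsupported role: {role!r}")
        formatted.append((canonical, template.format(**kwargs)))
    return formatted


chat = format_chat(
    [
        ("system", "You are a helpful AI bot. Your name is {name}."),
        ("user", "Tell me a joke about {topic}"),
        ("assistant", "Why did the chicken cross the road?"),
    ],
    name="Bob",
    topic="kids",
)
for role, text in chat:
    print(f"{role}: {text}")
```

Normalising the aliases up front keeps the rest of the logic dealing with only three roles.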

MessagesPlaceholder & callback

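The chat prompt template above formats a list of messages, each written as a string template. If we instead want to pass in a ready-made list of messages and insert it at a particular position, we need MessagesPlaceholder.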

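Here, the callbacks entry of the RunnableConfig passed to ChatPromptTemplate holds handler objects supplied from outside. These handlers are handed to an internal CallbackManager: CallbackManager.configure constructs that manager with inheritable_callbacks set to the externally supplied handlers.

The callback then fires along this chain: on_chain_start → handle_event → event = getattr(handler, event_name)(*args, **kwargs) → the handler's method runs.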
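The last step of that chain, delivering an event by method name, can be modelled in a few lines. This is a hypothetical, heavily simplified sketch (`MiniCallbackManager`, `handle_event` and `RecordingHandler` here are not the LangChain classes, and the ignore_* checks, run IDs and error handling are omitted): handlers supplied from outside are kept as inheritable handlers, and each event is dispatched through getattr:

```python
from typing import Any


def handle_event(handlers: list[Any], event_name: str, *args: Any, **kwargs: Any) -> None:
    """Deliver an event to every handler that defines a method with that name."""
    for handler in handlers:
        method = getattr(handler, event_name, None)
        if method is not None:
            method(*args, **kwargs)


class MiniCallbackManager:
    """Hypothetical, heavily simplified stand-in for CallbackManager."""

    def __init__(self, inheritable_callbacks: list[Any]) -> None:
        self.handlers = list(inheritable_callbacks)

    def on_chain_start(self, serialized: Any, inputs: Any, **kwargs: Any) -> None:
        handle_event(self.handlers, "on_chain_start", serialized, inputs, **kwargs)

    def on_chain_end(self, outputs: Any, **kwargs: Any) -> None:
        handle_event(self.handlers, "on_chain_end", outputs, **kwargs)


class RecordingHandler:
    """Records which events it receives."""

    def __init__(self) -> None:
        self.events = []

    def on_chain_start(self, serialized: Any, inputs: Any, **kwargs: Any) -> None:
        self.events.append(("start", inputs))

    def on_chain_end(self, outputs: Any, **kwargs: Any) -> None:
        self.events.append(("end", outputs))


handler = RecordingHandler()
manager = MiniCallbackManager(inheritable_callbacks=[handler])
manager.on_chain_start(None, {"topic": "kids"}, run_type="prompt")
manager.on_chain_end("formatted prompt")
print(handler.events)
```

Dispatching by name is what lets a handler implement only the events it cares about.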

```python
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_core.messages import HumanMessage, AIMessage
from langchain_core.callbacks import BaseCallbackHandler
from langchain_core.runnables import RunnableConfig

prompt_template = ChatPromptTemplate([
    ("system", "You are a helpful assistant"),
    MessagesPlaceholder("msgs"),
    ("placeholder", ["{param_test1}", True]),
])

# Custom callback handler
class MyCustomHandler(BaseCallbackHandler):
    def on_chain_start(self, serialized, inputs, **kwargs):
        print(f"Started: {inputs}")

    def on_chain_end(self, outputs, **kwargs):
        print(f"Finished: {outputs}")

config = RunnableConfig(callbacks=[MyCustomHandler()])

pv1 = prompt_template.invoke(
    {
        "msgs": [HumanMessage(content="hi!"), AIMessage(content="Hello")],
        # Messages passed to a placeholder are inserted as-is, without formatting,
        # so {topic1} below stays literal; the extra "topic1" key is simply ignored
        "param_test1": [
            ("user", "Tell me a joke about {topic1}"),
            ("ai", "One day sssssssscccccxxx"),
        ],
        "topic1": "cess",
    },
    config=config,
)
print(prompt_template.pretty_repr())
pv1
```

You can also use the "placeholder" message type as shorthand for MessagesPlaceholder:

```python
prompt_template = ChatPromptTemplate([
    ("system", "You are a helpful assistant"),
    ("placeholder", "{msgs}"),  # <-- This is the changed part
])
prompt_template.invoke({"msgs": [HumanMessage(content="hi!"), AIMessage(content="Hello")]})
```
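What a placeholder contributes at format time can be sketched as follows, under simplifying assumptions: plain (role, text) tuples stand in for messages and `resolve_placeholder` is a hypothetical helper. The list passed under the placeholder's variable name is spliced in at that position. A missing value becomes an empty list only when the placeholder is optional; the ("placeholder", "{msgs}") shorthand creates an optional placeholder, whereas MessagesPlaceholder("msgs") is required by default. The spliced messages are inserted as given, without formatting, so a {field} inside them stays literal:

```python
from typing import Any

Message = tuple[str, str]  # simplified (role, text) message


def resolve_placeholder(
    variable_name: str, optional: bool, values: dict[str, Any]
) -> list[Message]:
    """Return the messages to splice in for one placeholder."""
    if optional:
        messages = values.get(variable_name, [])
    else:
        messages = values[variable_name]  # KeyError if a required value is missing
    # Messages are inserted as given: no str.format() is applied to their text
    return list(messages)


history: list[Message] = [("human", "hi!"), ("ai", "hello")]
values = {"msgs": history}

prompt: list[Message] = [("system", "You are a helpful assistant")]
prompt += resolve_placeholder("msgs", optional=False, values=values)
prompt += resolve_placeholder("param_test1", optional=True, values=values)
print(prompt)
```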

The prompt_template.invoke method

Calling invoke passes control to the _call_with_config method, which then calls context.run on the context yielded by set_config_context.
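set_config_context and context.run are built on Python's contextvars module. A minimal sketch of the idea, assuming a hypothetical `current_config` variable and `sketch_set_config_context` helper (LangChain's own names and bookkeeping differ): the config is set inside a copied context, and the function runs in that context, so it can read the config without changing the caller's context:

```python
from contextlib import contextmanager
from contextvars import Context, ContextVar, copy_context
from typing import Iterator

# Hypothetical context variable holding the active config
current_config = ContextVar("current_config", default=None)


@contextmanager
def sketch_set_config_context(config: dict) -> Iterator[Context]:
    """Yield a copied context in which current_config is set to `config`."""
    ctx = copy_context()
    ctx.run(current_config.set, config)
    yield ctx


def format_prompt(inputs: dict) -> str:
    # Reads the config from whatever context it is running in
    config = current_config.get()
    tag = config["run_name"] if config else "no-config"
    return f"[{tag}] Tell me a joke about {inputs['topic']}"


with sketch_set_config_context({"run_name": "prompt"}) as context:
    output = context.run(format_prompt, {"topic": "kids"})

print(output)                # [prompt] Tell me a joke about kids
print(current_config.get())  # None: the caller's context was not modified
```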

```python
# inside invoke
return self._call_with_config(
    self._format_prompt_with_error_handling,
    input,
    config,
    run_type="prompt",
    serialized=self._serialized,
)
```

```python
# inside _call_with_config
with set_config_context(child_config) as context:
    output = cast(
        "Output",
        context.run(
            call_func_with_variable_args,  # type: ignore[arg-type]
            func,
            input,
            config,
            run_manager,
            **kwargs,
        ),
    )
```

This runs call_func_with_variable_args inside that context. The func it calls is the self._format_prompt_with_error_handling method that invoke passed in,

which in turn does the following:

```python
def _format_prompt_with_error_handling(self, inner_input: dict) -> PromptValue:
    _inner_input = self._validate_input(inner_input)
    return self.format_prompt(**_inner_input)
```

So under the hood, the actual formatting of the data is done by format_prompt.
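To tie the call chain together, here is a stripped-down model of invoke → _format_prompt_with_error_handling → _validate_input → format_prompt. `MiniPromptTemplate` is hypothetical; its validation rules (a bare value is accepted when there is exactly one input variable, missing variables raise KeyError) are modelled on LangChain's _validate_input but simplified, and the _call_with_config layer with callbacks and config is skipped:

```python
from string import Formatter
from typing import Any


class MiniPromptTemplate:
    """Hypothetical, simplified model of the invoke -> format_prompt path."""

    def __init__(self, template: str) -> None:
        self.template = template
        self.input_variables = sorted(
            {name for _, name, _, _ in Formatter().parse(template) if name is not None}
        )

    def _validate_input(self, inner_input: Any) -> dict:
        if not isinstance(inner_input, dict):
            if len(self.input_variables) == 1:
                # A bare value is accepted when there is exactly one variable
                inner_input = {self.input_variables[0]: inner_input}
            else:
                raise TypeError("expected a dict of input variables")
        missing = set(self.input_variables).difference(inner_input)
        if missing:
            raise KeyError(f"missing variables: {sorted(missing)}")
        return inner_input

    def format_prompt(self, **kwargs: Any) -> str:
        return self.template.format(**kwargs)

    def _format_prompt_with_error_handling(self, inner_input: Any) -> str:
        _inner_input = self._validate_input(inner_input)
        return self.format_prompt(**_inner_input)

    def invoke(self, input_value: Any) -> str:
        # The real invoke goes through _call_with_config (callbacks, config, context)
        return self._format_prompt_with_error_handling(input_value)


prompt = MiniPromptTemplate("Tell me a joke about {topic}")
print(prompt.invoke({"topic": "kids"}))  # Tell me a joke about kids
print(prompt.invoke("cats"))             # Tell me a joke about cats
```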

Few-shot prompt templates:

FewShotPromptTemplate


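When calling an LLM, a role setting plus the user's input alone often gives mediocre results; giving the model a few reference examples usually produces noticeably better output.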

```python
from langchain_core.prompts import PromptTemplate

example_prompt = PromptTemplate.from_template("Question: {question}\n{answer}")

examples = [
    {
        "question": "Who lived longer, Muhammad Ali or Alan Turing?",
        "answer": """
Are follow up questions needed here: Yes.
Follow up: How old was Muhammad Ali when he died?
Intermediate answer: Muhammad Ali was 74 years old when he died.
Follow up: How old was Alan Turing when he died?
Intermediate answer: Alan Turing was 41 years old when he died.
So the final answer is: Muhammad Ali
""",
    },
    {
        "question": "When was the founder of craigslist born?",
        "answer": """
Are follow up questions needed here: Yes.
Follow up: Who was the founder of craigslist?
Intermediate answer: Craigslist was founded by Craig Newmark.
Follow up: When was Craig Newmark born?
Intermediate answer: Craig Newmark was born on December 6, 1952.
So the final answer is: December 6, 1952
""",
    },
    {
        "question": "Who was the maternal grandfather of George Washington?",
        "answer": """
Are follow up questions needed here: Yes.
Follow up: Who was the mother of George Washington?
Intermediate answer: The mother of George Washington was Mary Ball Washington.
Follow up: Who was the father of Mary Ball Washington?
Intermediate answer: The father of Mary Ball Washington was Joseph Ball.
So the final answer is: Joseph Ball
""",
    },
    {
        "question": "Are both the directors of Jaws and Casino Royale from the same country?",
        "answer": """
Are follow up questions needed here: Yes.
Follow up: Who is the director of Jaws?
Intermediate Answer: The director of Jaws is Steven Spielberg.
Follow up: Where is Steven Spielberg from?
Intermediate Answer: The United States.
Follow up: Who is the director of Casino Royale?
Intermediate Answer: The director of Casino Royale is Martin Campbell.
Follow up: Where is Martin Campbell from?
Intermediate Answer: New Zealand.
So the final answer is: No
""",
    },
]
```

```python
from langchain_core.prompts import FewShotPromptTemplate

prompt = FewShotPromptTemplate(
    examples=examples,
    example_prompt=example_prompt,
    suffix="Question: {input}",
    input_variables=["input"],
)

print(
    prompt.invoke({"input": "Who was the father of Mary Ball Washington?"}).to_string()
)
```
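Conceptually, FewShotPromptTemplate formats every example with example_prompt, places the optional prefix before them and the suffix after them, joins the non-empty pieces with example_separator (two newlines by default) and then fills in the user's variables. A standard-library sketch of that assembly; `assemble_few_shot` is a hypothetical helper that ignores example selectors and non-f-string formats:

```python
def assemble_few_shot(
    examples: list[dict[str, str]],
    example_template: str,
    suffix: str,
    prefix: str = "",
    example_separator: str = "\n\n",
    **kwargs: str,
) -> str:
    """Hypothetical sketch of how a few-shot prompt string is put together."""
    example_strings = [example_template.format(**ex) for ex in examples]
    pieces = [prefix, *example_strings, suffix]
    # Empty pieces (e.g. no prefix) are dropped before joining
    template = example_separator.join(p for p in pieces if p)
    # The joined text is then formatted with the user's variables
    return template.format(**kwargs)


text = assemble_few_shot(
    examples=[
        {"question": "2 + 2?", "answer": "4"},
        {"question": "3 + 5?", "answer": "8"},
    ],
    example_template="Question: {question}\n{answer}",
    suffix="Question: {input}",
    input="7 + 1?",
)
print(text)
```

The suffix carries the real question, so the model sees it in exactly the same shape as the worked examples before it.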

- Partially formatting prompt templates:

Partial with strings

Partial with functions

```python
from langchain_core.prompts import PromptTemplate

prompt = PromptTemplate.from_template("{foo}{bar}")
partial_prompt = prompt.partial(foo="foo")
print(partial_prompt.format(bar="baz"))

prompt = PromptTemplate(
    template="{foo}{bar}", input_variables=["bar"], partial_variables={"foo": "foo"}
)
print(prompt.format(bar="baz"))
```

```python
# Build the prompt by calling a function
from datetime import datetime

from langchain_core.prompts import PromptTemplate

def _get_datetime():
    now = datetime.now()
    return now.strftime("%m/%d/%Y, %H:%M:%S")

prompt = PromptTemplate(
    template="Tell me a {adjective} joke about the day {date}",
    input_variables=["adjective", "date"],
)
partial_prompt = prompt.partial(date=_get_datetime)
print(partial_prompt.format(adjective="funny"))

prompt = PromptTemplate(
    template="Tell me a {adjective} joke about the day {date}",
    input_variables=["adjective"],
    partial_variables={"date": _get_datetime},
)
print(prompt.format(adjective="funny"))
```
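The function form works because a callable partial value is called each time the template is formatted, not when partial() is called, so {date} reflects the moment of formatting. A minimal sketch of that rule, where `format_with_partials` is a hypothetical helper and a counter replaces datetime.now so the output is deterministic:

```python
from itertools import count
from typing import Any, Callable, Union

PartialValue = Union[str, Callable[[], str]]


def format_with_partials(template: str, partials: dict[str, PartialValue], **kwargs: Any) -> str:
    """Resolve partial values (calling any callables now), then format."""
    resolved = {k: v() if callable(v) else v for k, v in partials.items()}
    # Values passed explicitly by the caller take precedence over partials
    return template.format(**{**resolved, **kwargs})


ticks = count(1)


def fake_now() -> str:
    # Stand-in for _get_datetime: returns a new value on every call
    return f"tick-{next(ticks)}"


partials = {"date": fake_now}
template = "Tell me a {adjective} joke about the day {date}"
first = format_with_partials(template, partials, adjective="funny")
second = format_with_partials(template, partials, adjective="funny")
print(first)   # Tell me a funny joke about the day tick-1
print(second)  # Tell me a funny joke about the day tick-2
```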

- Composing prompts

Several prompts can be concatenated; each piece can be a plain string or can contain variable placeholders.

```python
from langchain_core.prompts import PromptTemplate

prompt = (
    PromptTemplate.from_template("Tell me a joke about {topic}")
    + ", make it funny"
    + "\n\nand in {language}"
)
prompt.format(topic="sports", language="spanish")
```

```python
# How prompts are composed for chat
from langchain_core.messages import AIMessage, HumanMessage, SystemMessage

prompt = SystemMessage(content="You are a helpful AI assistant")
new_prompt = (
    prompt + HumanMessage(content="Hello") + AIMessage(content="What can I help you with?") + "{input}"
)
new_prompt.format_messages(input="Hello")
```
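Adding a string to a PromptTemplate produces a new template whose text is the concatenation of both parts and whose input variables cover both sides. The class below is a hypothetical sketch of that behaviour for f-string templates only (`MiniTemplate` is not a LangChain class); the real operator also accepts another PromptTemplate on the right-hand side:

```python
from string import Formatter


class MiniTemplate:
    """Hypothetical f-string template that supports `+` like PromptTemplate."""

    def __init__(self, template: str) -> None:
        self.template = template
        self.input_variables = sorted(
            {name for _, name, _, _ in Formatter().parse(template) if name is not None}
        )

    def __add__(self, other: "MiniTemplate | str") -> "MiniTemplate":
        # A plain string is first turned into a template, then the texts are joined
        other_template = other if isinstance(other, MiniTemplate) else MiniTemplate(other)
        return MiniTemplate(self.template + other_template.template)

    def format(self, **kwargs: str) -> str:
        return self.template.format(**kwargs)


prompt = MiniTemplate("Tell me a joke about {topic}") + ", make it funny" + "\n\nand in {language}"
print(prompt.input_variables)  # ['language', 'topic']
print(prompt.format(topic="sports", language="spanish"))
```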

Summary

- By default a prompt is an f-string-style template, and it is customized by substituting variables.

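- Messages come in four types: human, ai, system and placeholder ("user" and "assistant" are accepted as aliases for human and ai). They represent user input, AI output and the system prompt, plus a positional placeholder for content not known in advance, whose value is a list of messages.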

浙公网安备 33010602011771号
