Integrating a Third-Party SDK into Android


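1. Customer requirements

1.1. Integrate the third-party SDK into the system, that is, build the aar resources into the system layer so that applications can call them.

1.2. The customer's algorithm code must also be integrated into the source tree.

2. First step: make the jar callable

2.1. Building it into the system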

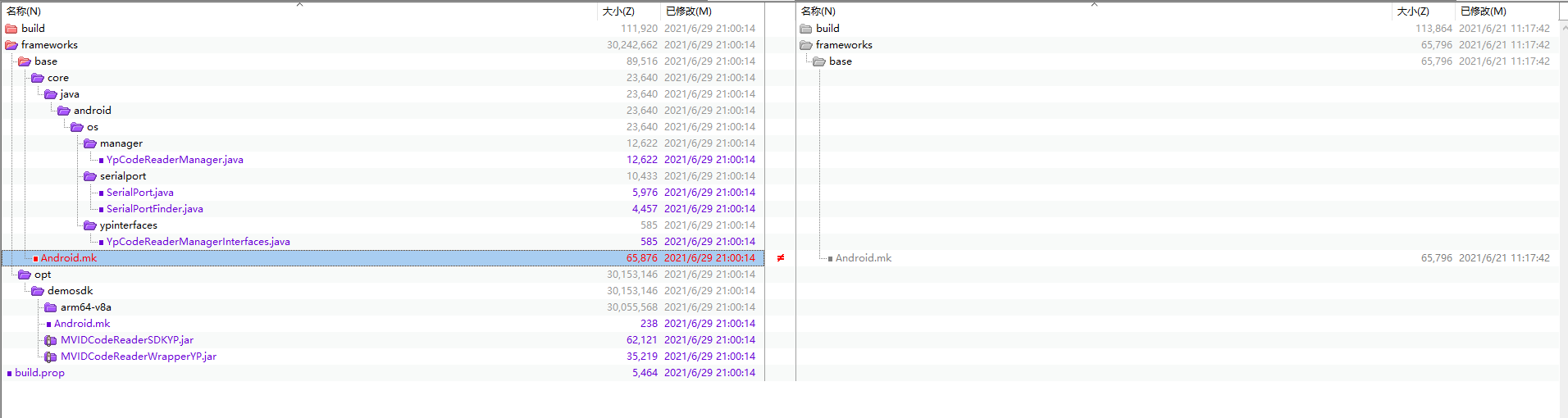

a. Add the resources under /frameworks/opt/demosdk/ and add the build rules

Android.mk

LOCAL_PATH := $(my-dir)
include $(CLEAR_VARS)
LOCAL_PREBUILT_STATIC_JAVA_LIBRARIES := MVIDCodeReaderSDKYP:MVIDCodeReaderSDKYP.jar \
    MVIDCodeReaderWrapperYP:MVIDCodeReaderWrapperYP.jar
include $(BUILD_MULTI_PREBUILT)

b. The framework class that calls into the jar

package android.os.manager;

import android.content.Context;

import android.graphics.Color;

import android.graphics.ImageFormat;

import android.util.Log;

import android.widget.FrameLayout;

import android.os.ypinterfaces.YpCodeReaderManagerInterfaces;

import android.serialport.SerialPort;

import android.serialport.SerialPortFinder;

import java.io.File;

import java.io.BufferedInputStream;

import java.io.BufferedOutputStream;

import java.io.IOException;

import java.io.InputStream;

import java.io.OutputStream;

import java.nio.ByteBuffer;

import java.util.ArrayList;

import java.util.List;

import java.util.regex.Pattern;

import com.yunpan.codereader.MVIDCodeReader;

import com.yunpan.codereader.MVIDCodeReaderDefine;

import com.yunpan.codereader.code.CodeInfo;

import com.yunpan.codereader.manager.MVIDCodeReaderManager;

import com.yunpan.codereader.manager.OnCodeInfoCallback;

import com.yunpan.codereader.manager.OnPreviewCallbackWithBuffer;

import com.yunpan.codereader.parameter.CameraException;

import com.yunpan.codereader.parameter.CameraFloatValue;

import com.yunpan.codereader.parameter.CodeAbility;

import static com.yunpan.codereader.MVIDCodeReaderDefine.MVID_IMAGE_TYPE.MVID_IMAGE_NV21;

import static com.yunpan.codereader.parameter.ExposureMode.Auto_Exposure;

import static com.yunpan.codereader.parameter.ExposureMode.Manual_Exposure;

import static com.yunpan.codereader.parameter.GainMode.Manual_Gain;

import static com.yunpan.codereader.MVIDCodeReaderDefine.MVID_IMAGE_INFO;//add gatsby

public class YpCodeReaderManager {

public static final String TAG = "YpCodeReaderManager";

public static MVIDCodeReaderManager iyunpanCodeReaderManager = new MVIDCodeReaderManager();

//interface YpCodeReaderManagerInterfaces OnListen

public static YpCodeReaderManagerInterfaces mlistener;

public FrameLayout mFrameLayout;

public static List<String> listCode = new ArrayList<>();

//MVID_IMAGE_TYPE

public static byte[] pImageInfo;

public static byte[] NV21_Data;

public static int l_nImageLen = 0;

public static MVIDCodeReaderDefine.MVID_IMAGE_TYPE imageType;

public static int mYpImageType;

public static int mWidth = 0;

public static int mHeight = 0;

public static int mFrameNum = 0;

//SerialPort

/*public static String mDevice;

public static mBaudRate;

public static mDataBits;

public static mParity;

public static mStopBits;*/

public static String ttyPort = "/dev/ttyS3";

public static BufferedInputStream mInputStream;

public static BufferedOutputStream mOutputStream;

public static SerialPort mSerialPort;

// Prevents weight reads during calibration, which would corrupt the calibration

public static boolean isCalibration = false;

public static int readWeightCount = 0;

public static boolean weightReadWeightAgain = true;

public static Thread mThread = null;

//test

public static Thread receiveThread;

public static Thread sendThread;

public static long i = 0;

//SerialPort(File device, int baudrate, int dataBits, int parity, int stopBits, int flags)

public static void openSerialPort() {

try {

mSerialPort = new SerialPort(new File(ttyPort), 9600, 8, 0, 1, 0);

mInputStream = new BufferedInputStream(mSerialPort.getInputStream());

mOutputStream = new BufferedOutputStream(mSerialPort.getOutputStream());

//getSerialData();

getXSerialData();

} catch (Exception e) {

Log.d("gatsby", "open serialport failed! " + e.getMessage());

Log.e(TAG, "open serialport failed! connectSerialPort: " + e.getMessage());

e.printStackTrace();

}

}

public static void getSerialData() {

if (mThread == null) {

isCalibration = false;

mThread = new Thread(new Runnable() {

@Override

public void run() {

while (true) {

if (isCalibration) {

break;

}

if (weightReadWeightAgain) {

weightReadWeightAgain = false;

//readModbusWeight();

}

try {

Thread.sleep(100);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

});

mThread.start();

}

}

public static void sendSerialData() {

sendThread = new Thread() {

@Override

public void run() {

while (true) {

try {

i++;

mOutputStream.write((String.valueOf(i)).getBytes());

//Log.d("gatsby", "send serialport sucess!" + i);

Thread.sleep(50);

} catch (Exception e) {

Log.d("gatsby", "send serialport failed!");

e.printStackTrace();

}

}

}

};

sendThread.start();

}

public static void getXSerialData() {

receiveThread = new Thread() {

@Override

public void run() {

while (true) {

int size;

try {

byte[] buffer = new byte[512];

if (mInputStream == null)

return;

size = mInputStream.read(buffer);

if (size > 0) {

String recinfo = new String(buffer, 0,

size);

Log.d("gatsby", "received serialport info: " + recinfo);

}

} catch (IOException e) {

e.printStackTrace();

}

}

}

};

receiveThread.start();

}

public static void closeSerialPort() {

if (mSerialPort != null) {

mSerialPort.close();

}

if (mInputStream != null) {

try {

mInputStream.close();

} catch (IOException e) {

e.printStackTrace();

}

}

if (mOutputStream != null) {

try {

mOutputStream.close();

} catch (IOException e) {

e.printStackTrace();

}

}

}

public List<String> getAllModbusDevices() {

SerialPortFinder serialPortFinder = new SerialPortFinder();

String[] allDevicesPath = serialPortFinder.getAllDevicesPath();

List<String> ttysXPahts = new ArrayList<>();

if (allDevicesPath.length > 0) {

String pattern = "^/dev/tty(S|(USB)|(XRUSB))\\d+$";

for (String s : allDevicesPath) {

if (Pattern.matches(pattern, s)) {

ttysXPahts.add(s);

}

}

}

return ttysXPahts;

}

//CallBack CameraCodeReadData

public static void setCodeInfoListener(YpCodeReaderManagerInterfaces listener) {

mlistener = listener;

}

public static void startCamera(Context context, FrameLayout mFrameLayout) {

try {

iyunpanCodeReaderManager.openCamera("V");

iyunpanCodeReaderManager.statGrabbing();

iyunpanCodeReaderManager.setPreview(mFrameLayout.getContext(), mFrameLayout, true);

int colors[] = {Color.GREEN};

iyunpanCodeReaderManager.setCodeBoxColor(colors);

iyunpanCodeReaderManager.setCodeBoxStrokeWidth(1.5f);

iyunpanCodeReaderManager.setOnCodeInfoCallBack(new OnCodeInfoCallback() {

@Override

public void onCodeInfo(ArrayList<CodeInfo> arrayList, MVIDCodeReaderDefine.MVID_PROC_PARAM yunpan_proc_param) {

listCode.clear();

for (CodeInfo codeInfo : arrayList) {

listCode.add(codeInfo.getStrCode());

}

// Report the full batch once, and only when a listener has been registered.

if (mlistener != null) {

mlistener.getCodeInfo(listCode);

}

}

});

iyunpanCodeReaderManager.setOnPreviewCallBack(new OnPreviewCallbackWithBuffer() {

@Override

public void onImageInfo(MVIDCodeReaderDefine.MVID_IMAGE_INFO yunpan_image_info) {

pImageInfo = yunpan_image_info.pImageBuf;

l_nImageLen = yunpan_image_info.nImageLen;

imageType = yunpan_image_info.enImageType;//enum

switch (imageType) {

case MVID_IMAGE_NV12: {

mYpImageType = 1003;

break;

}

case MVID_IMAGE_NV21: {

mYpImageType = 1002;

break;

}

case MVID_IMAGE_YUYV: {

mYpImageType = 1001;

break;

}

default: {

mYpImageType = 0;

break;

}

}

mWidth = yunpan_image_info.nWidth;

mHeight = yunpan_image_info.nHeight;

mFrameNum = yunpan_image_info.nFrameNum;

//doSaveJpg(pImageInfo,l_nImageLen,mWidth,mHeight);

// Skip the callback when no listener has been registered.

if (mlistener != null) {

mlistener.getImageInfo(pImageInfo, l_nImageLen, mYpImageType, mWidth, mHeight, mFrameNum);

}

}

});

} catch (CameraException e) {

closeCodeReaderCamera();

e.printStackTrace();

Log.d(TAG, "open camera failed!");

}

}

public static void closeCodeReaderCamera() {

if (iyunpanCodeReaderManager != null) {

iyunpanCodeReaderManager.stopGrabbing();

iyunpanCodeReaderManager.closeCamera();

}

}

public static void setExposureAuto() {

if (iyunpanCodeReaderManager != null) {

Log.d(TAG, "setExposureAuto: " + Auto_Exposure);

try {

iyunpanCodeReaderManager.setExposureMode(Auto_Exposure);

} catch (CameraException e) {

e.printStackTrace();

}

}

}

public static void setExposureTime(float value) {

try {

if (iyunpanCodeReaderManager != null) {

iyunpanCodeReaderManager.setExposureMode(Manual_Exposure);

iyunpanCodeReaderManager.setExposureValue(value);

}

} catch (CameraException e) {

e.printStackTrace();

}

}

public static CameraFloatValue getGain() {

try {

if (iyunpanCodeReaderManager != null) {

return iyunpanCodeReaderManager.getGainValue();

}

} catch (CameraException e) {

e.printStackTrace();

}

return null;

}

public static void setGain(float value) {

try {

if (iyunpanCodeReaderManager != null) {

iyunpanCodeReaderManager.setGainMode(Manual_Gain);

iyunpanCodeReaderManager.setGainValue(value);

}

} catch (CameraException e) {

e.printStackTrace();

}

}

public static void setCameraBarcodeType(int type) {

try {

if (iyunpanCodeReaderManager != null) {

if (type == 1) {

iyunpanCodeReaderManager.setCodeAbility(CodeAbility.BCRCODE);

} else if (type == 2) {

iyunpanCodeReaderManager.setCodeAbility(CodeAbility.TDCRCODE);

} else if (type == 3) {

iyunpanCodeReaderManager.setCodeAbility(CodeAbility.BOTH);

}

}

} catch (CameraException e) {

e.printStackTrace();

}

}

}
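The device filter in getAllModbusDevices() depends entirely on its regular expression, so it is worth checking in isolation. The following is a minimal sketch that reuses the same pattern on a hand-written list of paths; the class name TtyPathFilter and the sample paths are made up for illustration and are not part of the framework code.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

final class TtyPathFilter {
    // Same expression as in getAllModbusDevices(): ttyS<n>, ttyUSB<n> or ttyXRUSB<n>.
    static final Pattern TTY = Pattern.compile("^/dev/tty(S|(USB)|(XRUSB))\\d+$");

    /** Returns only the paths that look like one of the supported serial devices. */
    static List<String> filter(String[] paths) {
        List<String> out = new ArrayList<>();
        for (String p : paths) {
            if (TTY.matcher(p).matches()) {
                out.add(p);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // Hypothetical device list; a real one would come from SerialPortFinder.
        String[] sample = {
            "/dev/ttyS3", "/dev/ttyUSB0", "/dev/ttyXRUSB1",
            "/dev/ttyGS0", "/dev/tty", "/dev/ttyS"
        };
        System.out.println(filter(sample)); // [/dev/ttyS3, /dev/ttyUSB0, /dev/ttyXRUSB1]
    }
}
```

Paths such as /dev/ttyGS0 or a bare /dev/ttyS are rejected because the pattern requires one of the three prefixes followed by at least one digit.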

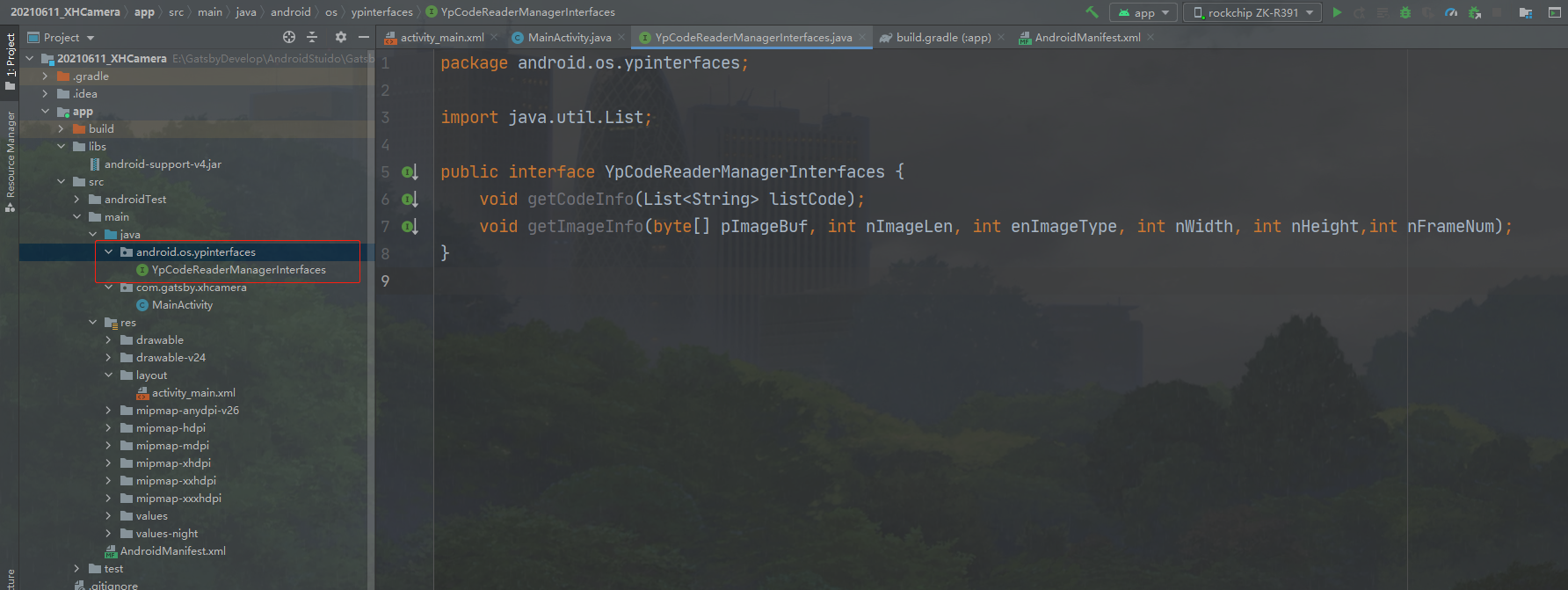

c. The interface to implement

package android.os.ypinterfaces;

import java.util.List;

import static com.yunpan.codereader.MVIDCodeReaderDefine.MVID_IMAGE_INFO;

public interface YpCodeReaderManagerInterfaces {

void getCodeInfo(List<String> listCode);

/* public byte[] pImageBuf;

public int nImageLen = 0;

public MVIDCodeReaderDefine.MVID_IMAGE_TYPE enImageType;

public short nWidth = 0;

public short nHeight = 0;

public int nFrameNum = 0;*/

void getImageInfo(byte[] pImageBuf, int nImageLen,int enImageType ,int nWidth, int nHeight,int nFrameNum);

}
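The framework class holds the listener in a static field and calls it from the SDK callbacks, so a callback that fires before any listener is registered would fail on a null reference. A minimal sketch of null-safe dispatch follows, assuming a reduced stand-in interface: CodeListener and ListenerDispatchSketch are hypothetical names, not part of the SDK or of the framework code.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class ListenerDispatchSketch {
    /** Hypothetical stand-in for YpCodeReaderManagerInterfaces, reduced to one method. */
    interface CodeListener {
        void getCodeInfo(List<String> listCode);
    }

    // volatile so a listener set on one thread is visible to the callback thread.
    private static volatile CodeListener listener;

    static void setCodeInfoListener(CodeListener l) {
        listener = l;
    }

    /** Delivers one batch of codes; returns false when nobody is registered. */
    static boolean dispatch(List<String> codes) {
        CodeListener l = listener; // read once so a concurrent reset cannot cause an NPE
        if (l == null) {
            return false;
        }
        l.getCodeInfo(new ArrayList<>(codes)); // hand out a copy, not the shared list
        return true;
    }

    public static void main(String[] args) {
        System.out.println(dispatch(Arrays.asList("A1"))); // false: no listener yet
        List<String> seen = new ArrayList<>();
        setCodeInfoListener(codes -> seen.addAll(codes));
        System.out.println(dispatch(Arrays.asList("A1", "B2"))); // true
        System.out.println(seen); // [A1, B2]
    }
}
```

Passing a copy of the list is a design choice here: the framework class reuses and clears one static list, so a listener that keeps the reference would otherwise see it change under its feet.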

d. Add it to the build

--- a/frameworks/base/Android.mk
+++ b/frameworks/base/Android.mk
@@ -1357,7 +1357,10 @@ LOCAL_SRC_FILES := $(ext_src_files)
 LOCAL_NO_STANDARD_LIBRARIES := true
 LOCAL_JAVA_LIBRARIES := core-oj core-libart
-LOCAL_STATIC_JAVA_LIBRARIES := libphonenumber-platform
+LOCAL_STATIC_JAVA_LIBRARIES := libphonenumber-platform \
+        CodeReaderSDK \
+        MVIDCodeReaderWrapper
+
 LOCAL_MODULE_TAGS := optional
 LOCAL_MODULE := ext

--- a/build/core/tasks/check_boot_jars/package_whitelist.txt
+++ b/build/core/tasks/check_boot_jars/package_whitelist.txt
@@ -109,6 +109,10 @@ dalvik\..*
 libcore\..*
 android\..*
 com\.android\..*
+com\.example\.mvidcodereader
+com\.mvid\.codereader\..*
+MVIDCodeReaderWrapper
+
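Each whitelist entry is a regular expression over Java package names, so it helps to see which packages the three added entries would accept. The sketch below assumes that the boot-jar check joins all entries with `|` and requires the whole package name to match; that behaviour is an assumption about the build script, and BootJarWhitelistSketch is a made-up name used only for illustration.

```java
import java.util.regex.Pattern;

final class BootJarWhitelistSketch {
    // The three entries added by the diff, written as Java string literals.
    static final String[] ENTRIES = {
        "com\\.example\\.mvidcodereader",
        "com\\.mvid\\.codereader\\..*",
        "MVIDCodeReaderWrapper",
    };

    /** Assumed check: the whole package name must match one of the entries. */
    static boolean allowed(String packageName) {
        String combined = "^(" + String.join("|", ENTRIES) + ")$";
        return Pattern.compile(combined).matcher(packageName).matches();
    }

    public static void main(String[] args) {
        System.out.println(allowed("com.mvid.codereader.manager"));   // true
        System.out.println(allowed("com.mvid.codereader"));           // false: ".*" needs a sub-package
        System.out.println(allowed("com.yunpan.codereader.manager")); // false: prefix not listed
    }
}
```

Under that assumption, every package that actually appears inside the jars needs its own matching entry. If the classes live under the com.yunpan.codereader prefix, as the imports in YpCodeReaderManager suggest, an entry for that prefix would be needed as well.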

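e. Calling the jar exposed by the framework layer from an application

Declare the same interface in the application. It exists so that the application can receive the data passed to the callbacks, for example the getImageInfo call made inside setOnPreviewCallBack: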

iyunpanCodeReaderManager.setOnPreviewCallBack

mlistener.getImageInfo(pImageInfo, l_nImageLen, mYpImageType, mWidth, mHeight, mFrameNum);

package android.os.ypinterfaces;

import java.util.List;

public interface YpCodeReaderManagerInterfaces {

void getCodeInfo(List<String> listCode);

void getImageInfo(byte[] pImageBuf, int nImageLen, int enImageType, int nWidth, int nHeight,int nFrameNum);

}

The application reaches every framework-side method through reflection.
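The reflection calls all follow one pattern: look the class up by name, find the public static method, and invoke it without a receiver. A minimal sketch of that pattern as a single helper follows. FakeManager is a hypothetical stand-in for android.os.manager.YpCodeReaderManager so the example runs off-device, and callStatic and roundGain are illustrative names that do not exist in the framework class.

```java
import java.lang.reflect.Method;

final class ReflectionCallSketch {
    /** Hypothetical stand-in for the framework manager class. */
    static final class FakeManager {
        static boolean opened;

        public static void openSerialPort() {
            opened = true;
        }

        public static int roundGain(float value) {
            return Math.round(value);
        }
    }

    /** Looks up a public static method by name and parameter types, then invokes it. */
    static Object callStatic(String className, String method, Class<?>[] types, Object... args) {
        try {
            Class<?> c = Class.forName(className);
            Method m = c.getMethod(method, types);
            return m.invoke(null, args); // static method, so the receiver is null
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("reflection call failed: " + method, e);
        }
    }

    public static void main(String[] args) {
        String name = FakeManager.class.getName();
        callStatic(name, "openSerialPort", new Class<?>[0]);
        System.out.println(FakeManager.opened); // true
        System.out.println(callStatic(name, "roundGain", new Class<?>[]{float.class}, 2.6f)); // 3
    }
}
```

Wrapping the lookup in one helper keeps each wrapper in the activity to a single line and turns a misspelled method name into one clear exception instead of a silently printed stack trace.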

activity_main.xml

<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"

android:layout_width="match_parent"

android:layout_height="match_parent"

android:gravity="center"

android:orientation="vertical">

<Button

android:id="@+id/btn1"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:text="Onclik111" />

<Button

android:id="@+id/btn2"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:text="Onclik222" />

<Button

android:id="@+id/btn3"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:text="Onclik333" />

<Button

android:id="@+id/btn4"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:text="Onclik444" />

<FrameLayout

android:id="@+id/frameLayout"

android:layout_width="match_parent"

android:layout_height="match_parent"

android:background="#000000"></FrameLayout>

</LinearLayout>

MainActivity.java

package com.gatsby.xhcamera;

import android.content.Context;

import android.graphics.ImageFormat;

import android.graphics.Rect;

import android.graphics.YuvImage;

import android.os.Bundle;

import android.os.ypinterfaces.YpCodeReaderManagerInterfaces;

import android.view.View;

import android.widget.Button;

import android.widget.FrameLayout;

import androidx.appcompat.app.AppCompatActivity;

import java.io.ByteArrayOutputStream;

import java.io.File;

import java.io.FileNotFoundException;

import java.io.FileOutputStream;

import java.io.IOException;

import java.lang.reflect.Method;

import java.nio.ByteBuffer;

import java.util.ArrayList;

import java.util.List;

public class MainActivity extends AppCompatActivity implements View.OnClickListener, YpCodeReaderManagerInterfaces {

FrameLayout myFrameLayout;

Button btn1, btn2, btn3, btn4;

public static YpCodeReaderManagerInterfaces mlistener;

public byte[] mImageBuf;

public int mImageLen = 0;

public int xImageLen;

public int menImageType = 0;

public int mWidth = 0;

public int mHeight = 0;

public int mFrameNum = 0;

private byte[] NV21_Data;

public List<String> getCode = new ArrayList<>();

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

myFrameLayout = (FrameLayout) findViewById(R.id.frameLayout);

mlistener = (YpCodeReaderManagerInterfaces) this;

setCodeInfoListener(mlistener);

startCamera(this, myFrameLayout);

btn1 = (Button) findViewById(R.id.btn1);

btn2 = (Button) findViewById(R.id.btn2);

btn3 = (Button) findViewById(R.id.btn3);

btn4 = (Button) findViewById(R.id.btn4);

btn1.setOnClickListener(this);

btn2.setOnClickListener(this);

btn3.setOnClickListener(this);

btn4.setOnClickListener(this);

}

@Override

public void onClick(View v) {

switch (v.getId()) {

case R.id.btn1:

//Log.d("gatsby", "menImageType->" + menImageType + "mImageLen->" + mImageLen + "nHeigth->" + mHeight);

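if (mImageBuf == null) {

// No frame has been delivered yet, so there is nothing to convert or save.

break;

}

// (Re)allocate the scratch buffer when it is missing or too small.

if (NV21_Data == null || xImageLen < mImageLen) {

xImageLen = mImageLen;

NV21_Data = new byte[xImageLen];

}

swapNV21ToNV12(NV21_Data, mImageBuf, mWidth, mHeight);

saveCodeImg(mImageBuf, mWidth, mHeight, ImageFormat.NV21, "gatsby");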

break;

case R.id.btn2:

openSerialPort();

break;

case R.id.btn3:

sendSerialData();

break;

case R.id.btn4:

closeSerialPort();

break;

}

}

public static void sendSerialData() {

try {

Class<?> c = Class.forName("android.os.manager.YpCodeReaderManager");

Method set = c.getMethod("sendSerialData");

set.invoke(c);

} catch (Exception e) {

e.printStackTrace();

}

}

public static void openSerialPort() {

try {

Class<?> c = Class.forName("android.os.manager.YpCodeReaderManager");

Method set = c.getMethod("openSerialPort");

set.invoke(c);

} catch (Exception e) {

e.printStackTrace();

}

}

public static void closeSerialPort() {

try {

Class<?> c = Class.forName("android.os.manager.YpCodeReaderManager");

Method set = c.getMethod("closeSerialPort");

set.invoke(c);

} catch (Exception e) {

e.printStackTrace();

}

}

public void setCodeInfoListener(YpCodeReaderManagerInterfaces listener) {

try {

Class<?> c = Class.forName("android.os.manager.YpCodeReaderManager");

Method set = c.getMethod("setCodeInfoListener", YpCodeReaderManagerInterfaces.class);

set.invoke(c, listener);

} catch (Exception e) {

e.printStackTrace();

}

}

public void startCamera(Context context, FrameLayout mFrameLayout) {

try {

Class<?> c = Class.forName("android.os.manager.YpCodeReaderManager");

Method set = c.getMethod("startCamera", Context.class, FrameLayout.class);

set.invoke(c, context, mFrameLayout);

} catch (Exception e) {

e.printStackTrace();

}

}

public void closeCamera() {

try {

Class<?> c = Class.forName("android.os.manager.YpCodeReaderManager");

Method set = c.getMethod("closeCodeReaderCamera");

set.invoke(c);

} catch (Exception e) {

e.printStackTrace();

}

}

@Override

protected void onDestroy() {

closeCamera();

// TODO Auto-generated method stub

super.onDestroy();

}

@Override

public void getCodeInfo(List<String> listCode) {

getCode = listCode;

}

@Override

public void getImageInfo(byte[] pImageBuf, int nImageLen, int enImageType, int nWidth, int nHeight, int nFrameNum) {

mImageBuf = pImageBuf;

mImageLen = nImageLen;

menImageType = enImageType;

mWidth = nWidth;

mHeight = nHeight;

mFrameNum = nFrameNum;

}

public static void swapNV21ToNV12(byte[] dst_nv12, byte[] src_nv21, int width, int height) {

if (src_nv21 == null || dst_nv12 == null) {

return;

}

int framesize = width * height;

int i = 0, j = 0;

System.arraycopy(src_nv21, 0, dst_nv12, 0, framesize);

for (j = 0; j < framesize / 2; j += 2) {

dst_nv12[framesize + j + 1] = src_nv21[j + framesize];

}

for (j = 0; j < framesize / 2; j += 2) {

dst_nv12[framesize + j] = src_nv21[j + framesize + 1];

}

}

public static void swapNV12ToNV21(byte[] dst_nv21, byte[] src_nv12, int width, int height) {

// Swapping the chroma pairs is symmetric, so the same routine converts NV12 back to NV21.

swapNV21ToNV12(dst_nv21, src_nv12, width, height);

}

public static void saveCodeImg(byte[] data, int w, int h, int nImageType, String fileName) {

ByteBuffer byteBuffer;

int width = 0;

int height = 0;

byteBuffer = ByteBuffer.wrap(data);

width = w;

height = h;

try {

byte[] yuv = byteBuffer.array();

String fullName = fileName + ".jpg";

File file = new File("/sdcard/DCIM/" + fullName);

if (!file.getParentFile().exists()) {

file.getParentFile().mkdirs();

}

FileOutputStream fileOutputStream;

fileOutputStream = new FileOutputStream(file);

ByteArrayOutputStream baos = new ByteArrayOutputStream();

YuvImage yuvImage = new YuvImage(yuv, nImageType, width, height, null);

Rect rect = new Rect(0, 0, width, height);

yuvImage.compressToJpeg(rect, 90, fileOutputStream);

fileOutputStream.close();

} catch (FileNotFoundException e) {

e.printStackTrace();

} catch (IOException e) {

e.printStackTrace();

}

}

}
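NV21 and NV12 share the same Y plane and differ only in the order of each interleaved chroma pair (V,U versus U,V), which is why one swap routine serves both directions. A minimal sketch on a tiny 2x2 frame follows; it assumes even width and height, and ChromaSwapSketch and swapChroma are illustrative names rather than part of the application above.

```java
import java.util.Arrays;

final class ChromaSwapSketch {
    /** Copies the Y plane and swaps every interleaved chroma pair (VU <-> UV). */
    static byte[] swapChroma(byte[] src, int width, int height) {
        int ySize = width * height;
        int total = ySize + ySize / 2; // 4:2:0 layout: full Y plane plus half-size chroma plane
        if (src == null || src.length < total) {
            throw new IllegalArgumentException("buffer too small for " + width + "x" + height);
        }
        byte[] dst = new byte[total];
        System.arraycopy(src, 0, dst, 0, ySize);
        for (int i = ySize; i + 1 < total; i += 2) {
            dst[i] = src[i + 1];
            dst[i + 1] = src[i];
        }
        return dst;
    }

    public static void main(String[] args) {
        // 2x2 frame: four Y bytes, then one V/U pair in NV21 order.
        byte[] nv21 = {10, 20, 30, 40, 7, 9};
        byte[] nv12 = swapChroma(nv21, 2, 2);
        System.out.println(Arrays.toString(nv12)); // [10, 20, 30, 40, 9, 7]
        // Applying the swap twice restores the original frame.
        System.out.println(Arrays.equals(nv21, swapChroma(nv12, 2, 2))); // true
    }
}
```

Returning a fresh array and validating the length up front avoids the silent no-op that occurs when a destination buffer has not been allocated yet.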
