Installing a multi-node KubeSphere cluster

Multi-node cluster installation guide (https://kubesphere.com.cn/docs/installing-on-linux/introduction/multioverview/)

Host planning

| Host IP | Hostname | Role | Operating system |
|---|---|---|---|
| 172.30.47.67 | master | master, etcd | CentOS 8.3.2011 |
| 172.30.47.68 | node1 | worker | CentOS 8.3.2011 |
| 172.30.47.69 | node2 | worker | CentOS 8.3.2011 |

Preparation

- Disable the firewall

systemctl disable firewalld

systemctl stop firewalld

- Disable SELinux (switch it to permissive mode)

sudo setenforce 0

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

getenforce
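
The sed command above only rewrites a line that reads exactly `SELINUX=enforcing`, and `getenforce` reports the running mode rather than the one stored in the file. A minimal sketch for checking which mode the config file will apply at the next boot; the function name `selinux_config_mode` is a hypothetical helper, not part of the original steps.

```shell
#!/bin/sh
# Hypothetical helper (not part of the original steps): print the SELINUX=
# value stored in a config file, i.e. the mode applied at the next boot.
selinux_config_mode() {
    # Usage: selinux_config_mode [FILE]; defaults to /etc/selinux/config.
    # SELINUXTYPE= lines do not match; the last SELINUX= line is reported.
    sed -n 's/^SELINUX=//p' "${1:-/etc/selinux/config}" | tail -n 1
}
```

Running `selinux_config_mode` without arguments on a node reads /etc/selinux/config.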

- Disable swap

swapoff -a
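
`swapoff -a` only turns swap off until the next reboot. If swap should stay off permanently, one common approach is to comment out the swap entries in /etc/fstab. This is a sketch under that assumption; the original steps do not include it, and `comment_out_swap` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: comment out active swap entries in an fstab-style file
# so that swap stays off after a reboot. A backup is written to FILE.bak.
comment_out_swap() {
    # Usage: comment_out_swap FILE   (on a real host FILE is /etc/fstab)
    # Matches uncommented lines that contain "swap" as a whole field.
    sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}
```

On a node this would be called as `comment_out_swap /etc/fstab`; check the result against FILE.bak before rebooting.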

- Time synchronization (optional)

yum -y install chrony

# Change the time synchronization server

sed -i.bak '3,6d' /etc/chrony.conf && sed -i '3cserver ntp1.aliyun.com iburst' /etc/chrony.conf

systemctl start chronyd && systemctl enable chronyd

# Check the synchronization result

chronyc sources

- Hosts file resolution

echo -e "172.30.47.67 master\n172.30.47.68 node1\n172.30.47.69 node2\n" >>/etc/hosts
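
The echo command above appends the three entries every time it runs, so repeating the step leaves duplicates in /etc/hosts. A minimal sketch of an idempotent variant; `add_host_entry` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: append "IP NAME" to a hosts file only when NAME
# is not already present as a whole word on an uncommented line.
add_host_entry() {
    # Usage: add_host_entry FILE IP NAME
    file=$1 ip=$2 name=$3
    if ! grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}
```

For this cluster the calls would be `add_host_entry /etc/hosts 172.30.47.67 master`, and likewise for node1 and node2.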

- Kernel parameter settings

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

br_netfilter

EOF

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sudo sysctl --system
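
After `sysctl --system` it can be useful to confirm that the values in /etc/sysctl.d/k8s.conf are the ones actually in effect. The sketch below compares each `key = value` line of a file with the value returned by a reader command, `sysctl -n` by default. The function name and the optional reader argument are assumptions made for illustration.

```shell
#!/bin/sh
# Hypothetical helper: for each "key = value" line in a sysctl.d-style file,
# compare the wanted value with the value reported by a reader command
# (default: sysctl -n) and print every key whose value differs.
check_sysctl_file() {
    # Usage: check_sysctl_file FILE [READER...]
    file=$1; shift
    [ "$#" -gt 0 ] || set -- sysctl -n
    # Strip spaces around "=", then drop comments and blank lines.
    sed -e 's/[[:space:]]*=[[:space:]]*/=/' \
        -e '/^[[:space:]]*#/d' -e '/^[[:space:]]*$/d' "$file" |
    while IFS='=' read -r key want; do
        have=$("$@" "$key" 2>/dev/null) || have=
        [ "$have" = "$want" ] || echo "$key: want $want, have ${have:-unset}"
    done
}
```

`check_sysctl_file /etc/sysctl.d/k8s.conf` prints nothing when both bridge settings are active. If the keys show as unset, the br_netfilter module is probably not loaded yet.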

- Check DNS

cat /etc/resolv.conf

# Generated by NetworkManager

nameserver 100.100.2.136

nameserver 100.100.2.138

- Install dependencies

yum -y install socat conntrack ebtables ipset
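
KubeKey checks for these tools again before installing, but a quick local check on each node can catch a failed yum install earlier. A minimal sketch; `missing_commands` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print every command from the argument list that is
# not found on PATH; prints nothing when all of them are available.
missing_commands() {
    for cmd in "$@"; do
        command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
    done
}
```

For the packages above the call would be `missing_commands socat conntrack ebtables ipset`.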

- Install and configure Docker

yum remove docker*

yum install -y yum-utils

# Configure the Docker yum repository

wget -O /etc/yum.repos.d/docker-ce.repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Install the specified versions

yum install -y docker-ce-20.10.12 docker-ce-cli-20.10.12 containerd.io-1.4.12

# Start Docker and enable it at boot

systemctl enable docker --now

# Configure a Docker registry mirror

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://ke9h1pt4.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

EOF

sudo systemctl daemon-reload

sudo systemctl restart docker
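
The `exec-opts` entry above sets Docker's cgroup driver to systemd. A small sketch for reading the configured driver back from daemon.json; the helper name `configured_cgroup_driver` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the cgroup driver requested in a daemon.json
# file via "exec-opts"; prints nothing when no native.cgroupdriver is set.
configured_cgroup_driver() {
    # Usage: configured_cgroup_driver [FILE]; defaults to /etc/docker/daemon.json.
    grep -o 'native\.cgroupdriver=[a-z]*' "${1:-/etc/docker/daemon.json}" | cut -d= -f2
}
```

After the restart, the value can be compared with what the daemon reports through `docker info --format '{{.CgroupDriver}}'`.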

- Configure the Kubernetes yum repository, here pointing to the Aliyun mirror

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Download KubeKey

[root@master ~]# export KKZONE=cn

[root@master ~]# curl -sfL https://get-kk.kubesphere.io | VERSION=v1.2.1 sh -

Downloading kubekey v1.2.1 from https://kubernetes.pek3b.qingstor.com/kubekey/releases/download/v1.2.1/kubekey-v1.2.1-linux-amd64.tar.gz ...

Kubekey v1.2.1 Download Complete!

[root@master ~]#

Create the cluster

[root@master ~]# chmod +x kk

[root@master ~]# ./kk create config --with-kubernetes v1.21.5 --with-kubesphere v3.2.1

[root@master ~]# ll

total 23116

-rw-r--r-- 1 root root 4557 Jan 3 15:36 config-sample.yaml

-rwxr-xr-x 1 1001 docker 11970124 Dec 17 14:46 kk

-rw-r--r-- 1 root root 11640487 Jan 3 15:33 kubekey-v1.2.1-linux-amd64.tar.gz

-rw-r--r-- 1 1001 docker 24539 Dec 17 14:44 README.md

-rw-r--r-- 1 1001 docker 24468 Dec 17 14:44 README_zh-CN.md

[root@master ~]#

The contents of config-sample.yaml are as follows:


apiVersion: kubekey.kubesphere.io/v1alpha1
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  - {name: master, address: 172.30.47.67, internalAddress: 172.30.47.67, user: root, password: Admin@123} # Change to your own hostname, IP address, username, and password
  - {name: node1, address: 172.30.47.68, internalAddress: 172.30.47.68, user: root, password: Admin@123} # Change to your own hostname, IP address, username, and password
  - {name: node2, address: 172.30.47.69, internalAddress: 172.30.47.69, user: root, password: Admin@123} # Change to your own hostname, IP address, username, and password
  roleGroups:
    etcd:
    - master # Set to master
    master:
    - master # Set to master
    worker:
    - node1
    - node2
  controlPlaneEndpoint:
    ##Internal loadbalancer for apiservers
    #internalLoadbalancer: haproxy
    domain: lb.kubesphere.local
    address: ""
    port: 6443
  kubernetes:
    version: v1.21.5
    clusterName: cluster.local
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
  registry:
    registryMirrors: []
    insecureRegistries: []
  addons: []

---
apiVersion: installer.kubesphere.io/v1alpha1
kind: ClusterConfiguration
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    version: v3.2.1
spec:
  persistence:
    storageClass: ""
  authentication:
    jwtSecret: ""
  local_registry: ""
  # dev_tag: ""
  etcd:
    monitoring: false
    endpointIps: localhost
    port: 2379
    tlsEnable: true
  common:
    core:
      console:
        enableMultiLogin: true
        port: 30880
        type: NodePort
    # apiserver:
    #  resources: {}
    # controllerManager:
    #  resources: {}
    redis:
      enabled: false
      volumeSize: 2Gi
    openldap:
      enabled: false
      volumeSize: 2Gi
    minio:
      volumeSize: 20Gi
    monitoring:
      # type: external
      endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090
      GPUMonitoring:
        enabled: false
    gpu:
      kinds:
      - resourceName: "nvidia.com/gpu"
        resourceType: "GPU"
        default: true
    es:
      # master:
      #   volumeSize: 4Gi
      #   replicas: 1
      #   resources: {}
      # data:
      #   volumeSize: 20Gi
      #   replicas: 1
      #   resources: {}
      logMaxAge: 7
      elkPrefix: logstash
      basicAuth:
        enabled: false
        username: ""
        password: ""
      externalElasticsearchHost: ""
      externalElasticsearchPort: ""
  alerting:
    enabled: false
    # thanosruler:
    #   replicas: 1
    #   resources: {}
  auditing:
    enabled: false
    # operator:
    #   resources: {}
    # webhook:
    #   resources: {}
  devops:
    enabled: false
    jenkinsMemoryLim: 2Gi
    jenkinsMemoryReq: 1500Mi
    jenkinsVolumeSize: 8Gi
    jenkinsJavaOpts_Xms: 512m
    jenkinsJavaOpts_Xmx: 512m
    jenkinsJavaOpts_MaxRAM: 2g
  events:
    enabled: false
    # operator:
    #   resources: {}
    # exporter:
    #   resources: {}
    # ruler:
    #   enabled: true
    #   replicas: 2
    #   resources: {}
  logging:
    enabled: false
    containerruntime: docker
    logsidecar:
      enabled: true
      replicas: 2
      # resources: {}
  metrics_server:
    enabled: false
  monitoring:
    storageClass: ""
    # kube_rbac_proxy:
    #   resources: {}
    # kube_state_metrics:
    #   resources: {}
    # prometheus:
    #   replicas: 1
    #   volumeSize: 20Gi
    #   resources: {}
    #   operator:
    #     resources: {}
    #   adapter:
    #     resources: {}
    # node_exporter:
    #   resources: {}
    # alertmanager:
    #   replicas: 1
    #   resources: {}
    # notification_manager:
    #   resources: {}
    #   operator:
    #     resources: {}
    #   proxy:
    #     resources: {}
    gpu:
      nvidia_dcgm_exporter:
        enabled: false
        # resources: {}
  multicluster:
    clusterRole: none
  network:
    networkpolicy:
      enabled: false
    ippool:
      type: none
    topology:
      type: none
  openpitrix:
    store:
      enabled: false
  servicemesh:
    enabled: false
  kubeedge:
    enabled: false
    cloudCore:
      nodeSelector: {"node-role.kubernetes.io/worker": ""}
      tolerations: []
      cloudhubPort: "10000"
      cloudhubQuicPort: "10001"
      cloudhubHttpsPort: "10002"
      cloudstreamPort: "10003"
      tunnelPort: "10004"
      cloudHub:
        advertiseAddress:
          - ""
        nodeLimit: "100"
      service:
        cloudhubNodePort: "30000"
        cloudhubQuicNodePort: "30001"
        cloudhubHttpsNodePort: "30002"
        cloudstreamNodePort: "30003"
        tunnelNodePort: "30004"
    edgeWatcher:
      nodeSelector: {"node-role.kubernetes.io/worker": ""}
      tolerations: []
      edgeWatcherAgent:
        nodeSelector: {"node-role.kubernetes.io/worker": ""}
        tolerations: []
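
The host entries in config-sample.yaml have to match the hostnames and IP addresses from the host planning table. A minimal sketch that lists the name and address pairs found in the file so they can be compared at a glance. It assumes the inline `{name: ..., address: ..., ...}` form that `kk create config` generated here, and `list_config_hosts` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: list "name address" pairs from the inline host entries
# ({name: ..., address: ..., ...}) of a KubeKey configuration file.
list_config_hosts() {
    # Usage: list_config_hosts FILE
    sed -n 's/^[[:space:]]*- {name: \([^,]*\), address: \([^,]*\),.*/\1 \2/p' "$1"
}
```

`list_config_hosts config-sample.yaml` should print one line per node, in the same format as the /etc/hosts entries but with the columns swapped.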

Create the cluster using the configuration file

[root@master ~]# ./kk create cluster -f config-sample.yaml

+--------+------+------+---------+----------+-------+-------+-----------+----------+------------+-------------+------------------+--------------+

| name | sudo | curl | openssl | ebtables | socat | ipset | conntrack | docker | nfs client | ceph client | glusterfs client | time |

+--------+------+------+---------+----------+-------+-------+-----------+----------+------------+-------------+------------------+--------------+

| node1 | y | y | y | y | y | y | y | 20.10.12 | | | | CST 15:44:41 |

| node2 | y | y | y | y | y | y | y | 20.10.12 | | | | CST 15:44:42 |

| master | y | y | y | y | y | y | y | 20.10.12 | | | | CST 15:44:42 |

+--------+------+------+---------+----------+-------+-------+-----------+----------+------------+-------------+------------------+--------------+

This is a simple check of your environment.

Before installation, you should ensure that your machines meet all requirements specified at

https://github.com/kubesphere/kubekey#requirements-and-recommendations

Continue this installation? [yes/no]: yes

The detailed installation output is as follows:



INFO[15:47:00 CST] Downloading Installation Files

INFO[15:47:00 CST] Downloading kubeadm ...

INFO[15:47:42 CST] Downloading kubelet ...

INFO[15:49:33 CST] Downloading kubectl ...

INFO[15:50:15 CST] Downloading helm ...

INFO[15:50:58 CST] Downloading kubecni ...

INFO[15:51:34 CST] Downloading etcd ...

INFO[15:51:49 CST] Downloading docker ...

INFO[15:51:54 CST] Downloading crictl ...

INFO[15:52:09 CST] Configuring operating system ...

[node1 172.30.47.68] MSG:

vm.swappiness = 1

kernel.sysrq = 1

net.ipv4.neigh.default.gc_stale_time = 120

net.ipv4.conf.all.rp_filter = 0

net.ipv4.conf.default.rp_filter = 0

net.ipv4.conf.default.arp_announce = 2

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_announce = 2

net.ipv4.tcp_max_tw_buckets = 5000

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 1024

net.ipv4.tcp_synack_retries = 2

net.ipv4.tcp_slow_start_after_idle = 0

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-arptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_local_reserved_ports = 30000-32767

vm.max_map_count = 262144

fs.inotify.max_user_instances = 524288

no crontab for root

[node2 172.30.47.69] MSG:

vm.swappiness = 1

kernel.sysrq = 1

net.ipv4.neigh.default.gc_stale_time = 120

net.ipv4.conf.all.rp_filter = 0

net.ipv4.conf.default.rp_filter = 0

net.ipv4.conf.default.arp_announce = 2

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_announce = 2

net.ipv4.tcp_max_tw_buckets = 5000

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 1024

net.ipv4.tcp_synack_retries = 2

net.ipv4.tcp_slow_start_after_idle = 0

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-arptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_local_reserved_ports = 30000-32767

vm.max_map_count = 262144

fs.inotify.max_user_instances = 524288

no crontab for root

[master 172.30.47.67] MSG:

vm.swappiness = 1

kernel.sysrq = 1

net.ipv4.neigh.default.gc_stale_time = 120

net.ipv4.conf.all.rp_filter = 0

net.ipv4.conf.default.rp_filter = 0

net.ipv4.conf.default.arp_announce = 2

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_announce = 2

net.ipv4.tcp_max_tw_buckets = 5000

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 1024

net.ipv4.tcp_synack_retries = 2

net.ipv4.tcp_slow_start_after_idle = 0

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-arptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_local_reserved_ports = 30000-32767

vm.max_map_count = 262144

fs.inotify.max_user_instances = 524288

no crontab for root

INFO[15:52:12 CST] Get cluster status

INFO[15:52:12 CST] Installing Container Runtime ...

INFO[15:52:13 CST] Start to download images on all nodes

[node2] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.4.1

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.4.1

[node1] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.4.1

[node1] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.21.5

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-apiserver:v1.21.5

[node2] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.21.5

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controller-manager:v1.21.5

[node2] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.8.0

[node1] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.8.0

[node2] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12

[node1] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-scheduler:v1.21.5

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.21.5

[node1] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.20.0

[node2] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.20.0

[node1] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.20.0

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.8.0

[node2] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.20.0

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12

[node1] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.20.0

[node2] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.20.0

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.20.0

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.20.0

[node1] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.20.0

[node2] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.20.0

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.20.0

[master] Downloading image: registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.20.0

INFO[15:52:57 CST] Getting etcd status

[master 172.30.47.67] MSG:

Configuration file will be created

INFO[15:52:57 CST] Generating etcd certs

INFO[15:52:58 CST] Synchronizing etcd certs

INFO[15:52:58 CST] Creating etcd service

Push /root/kubekey/v1.21.5/amd64/etcd-v3.4.13-linux-amd64.tar.gz to 172.30.47.67:/tmp/kubekey/etcd-v3.4.13-linux-amd64.tar.gz Done

INFO[15:52:59 CST] Starting etcd cluster

INFO[15:52:59 CST] Refreshing etcd configuration

[master 172.30.47.67] MSG:

Created symlink /etc/systemd/system/multi-user.target.wants/etcd.service → /etc/systemd/system/etcd.service.

INFO[15:53:05 CST] Backup etcd data regularly

INFO[15:53:11 CST] Installing kube binaries

Push /root/kubekey/v1.21.5/amd64/kubeadm to 172.30.47.68:/tmp/kubekey/kubeadm Done

Push /root/kubekey/v1.21.5/amd64/kubeadm to 172.30.47.69:/tmp/kubekey/kubeadm Done

Push /root/kubekey/v1.21.5/amd64/kubeadm to 172.30.47.67:/tmp/kubekey/kubeadm Done

Push /root/kubekey/v1.21.5/amd64/kubelet to 172.30.47.67:/tmp/kubekey/kubelet Done

Push /root/kubekey/v1.21.5/amd64/kubectl to 172.30.47.67:/tmp/kubekey/kubectl Done

Push /root/kubekey/v1.21.5/amd64/kubelet to 172.30.47.69:/tmp/kubekey/kubelet Done

Push /root/kubekey/v1.21.5/amd64/helm to 172.30.47.67:/tmp/kubekey/helm Done

Push /root/kubekey/v1.21.5/amd64/kubectl to 172.30.47.69:/tmp/kubekey/kubectl Done

Push /root/kubekey/v1.21.5/amd64/kubelet to 172.30.47.68:/tmp/kubekey/kubelet Done

Push /root/kubekey/v1.21.5/amd64/cni-plugins-linux-amd64-v0.9.1.tgz to 172.30.47.67:/tmp/kubekey/cni-plugins-linux-amd64-v0.9.1.tgz Done

Push /root/kubekey/v1.21.5/amd64/helm to 172.30.47.69:/tmp/kubekey/helm Done

Push /root/kubekey/v1.21.5/amd64/kubectl to 172.30.47.68:/tmp/kubekey/kubectl Done

Push /root/kubekey/v1.21.5/amd64/cni-plugins-linux-amd64-v0.9.1.tgz to 172.30.47.69:/tmp/kubekey/cni-plugins-linux-amd64-v0.9.1.tgz Done

Push /root/kubekey/v1.21.5/amd64/helm to 172.30.47.68:/tmp/kubekey/helm Done

Push /root/kubekey/v1.21.5/amd64/cni-plugins-linux-amd64-v0.9.1.tgz to 172.30.47.68:/tmp/kubekey/cni-plugins-linux-amd64-v0.9.1.tgz Done

INFO[15:53:21 CST] Initializing kubernetes cluster

[master 172.30.47.67] MSG:

W0103 15:53:22.651333 4701 utils.go:69] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.233.0.10]; the provided value is: [169.254.25.10]

[init] Using Kubernetes version: v1.21.5

[preflight] Running pre-flight checks

[WARNING FileExisting-tc]: tc not found in system path

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local lb.kubesphere.local localhost master master.cluster.local node1 node1.cluster.local node2 node2.cluster.local] and IPs [10.233.0.1 172.30.47.67 127.0.0.1 172.30.47.68 172.30.47.69]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] External etcd mode: Skipping etcd/ca certificate authority generation

[certs] External etcd mode: Skipping etcd/server certificate generation

[certs] External etcd mode: Skipping etcd/peer certificate generation

[certs] External etcd mode: Skipping etcd/healthcheck-client certificate generation

[certs] External etcd mode: Skipping apiserver-etcd-client certificate generation

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 15.002758 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.21" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: ezhn82.8l46ipaqzuz7w1m5

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join lb.kubesphere.local:6443 --token ezhn82.8l46ipaqzuz7w1m5 \

--discovery-token-ca-cert-hash sha256:a0cef84429da1170acaef53bd551f8a1795764ead7b6da4de68f3c7e88effa3e \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join lb.kubesphere.local:6443 --token ezhn82.8l46ipaqzuz7w1m5 \

--discovery-token-ca-cert-hash sha256:a0cef84429da1170acaef53bd551f8a1795764ead7b6da4de68f3c7e88effa3e

[master 172.30.47.67] MSG:

service "kube-dns" deleted

[master 172.30.47.67] MSG:

service/coredns created

Warning: resource clusterroles/system:coredns is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

clusterrole.rbac.authorization.k8s.io/system:coredns configured

[master 172.30.47.67] MSG:

serviceaccount/nodelocaldns created

daemonset.apps/nodelocaldns created

[master 172.30.47.67] MSG:

configmap/nodelocaldns created

INFO[15:54:06 CST] Get cluster status

INFO[15:54:07 CST] Joining nodes to cluster

[node2 172.30.47.69] MSG:

[preflight] Running pre-flight checks

[WARNING FileExisting-tc]: tc not found in system path

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

W0103 15:54:09.421434 3911 utils.go:69] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.233.0.10]; the provided value is: [169.254.25.10]

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[node1 172.30.47.68] MSG:

[preflight] Running pre-flight checks

[WARNING FileExisting-tc]: tc not found in system path

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

W0103 15:54:09.534801 3810 utils.go:69] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.233.0.10]; the provided value is: [169.254.25.10]

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[node2 172.30.47.69] MSG:

node/node2 labeled

[node1 172.30.47.68] MSG:

node/node1 labeled

INFO[15:54:17 CST] Deploying network plugin ...

[master 172.30.47.67] MSG:

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

Warning: policy/v1beta1 PodDisruptionBudget is deprecated in v1.21+, unavailable in v1.25+; use policy/v1 PodDisruptionBudget

poddisruptionbudget.policy/calico-kube-controllers created

[master 172.30.47.67] MSG:

storageclass.storage.k8s.io/local created

serviceaccount/openebs-maya-operator created

clusterrole.rbac.authorization.k8s.io/openebs-maya-operator created

clusterrolebinding.rbac.authorization.k8s.io/openebs-maya-operator created

deployment.apps/openebs-localpv-provisioner created

INFO[15:54:21 CST] Deploying KubeSphere ...

v3.2.1

[master 172.30.47.67] MSG:

namespace/kubesphere-system created

namespace/kubesphere-monitoring-system created

[master 172.30.47.67] MSG:

secret/kube-etcd-client-certs created

[master 172.30.47.67] MSG:

namespace/kubesphere-system unchanged

serviceaccount/ks-installer unchanged

customresourcedefinition.apiextensions.k8s.io/clusterconfigurations.installer.kubesphere.io unchanged

clusterrole.rbac.authorization.k8s.io/ks-installer unchanged

clusterrolebinding.rbac.authorization.k8s.io/ks-installer unchanged

deployment.apps/ks-installer unchanged

clusterconfiguration.installer.kubesphere.io/ks-installer created

#####################################################

### Welcome to KubeSphere! ###

#####################################################

Console: http://172.30.47.67:30880

Account: admin

Password: P@88w0rd

NOTES:

1. After you log into the console, please check the

monitoring status of service components in

"Cluster Management". If any service is not

ready, please wait patiently until all components

are up and running.

2. Please change the default password after login.

#####################################################

https://kubesphere.io 2022-01-03 15:59:07

#####################################################

INFO[15:59:13 CST] Installation is complete.

Please check the result using the command:

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

[root@master ~]#
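
The installer log ends with the "Welcome to KubeSphere" banner when the deployment succeeds. A minimal sketch that checks log text for that banner, for example in a script that waits for the installation; `installer_finished` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: succeed when installer log text read from stdin
# contains the KubeSphere welcome banner shown at the end of a successful run.
installer_finished() {
    grep -q 'Welcome to KubeSphere'
}
```

One way to feed it is `kubectl logs -n kubesphere-system deploy/ks-installer | installer_finished && echo finished`, using the ks-installer deployment created in the output above.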

Verify the installation

List all installed pods:

[root@master ~]# kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-75ddb95444-j4v9w 1/1 Running 0 18m

kube-system calico-node-6d6p5 1/1 Running 0 18m

kube-system calico-node-8st2j 1/1 Running 0 18m

kube-system calico-node-rrxlf 1/1 Running 0 18m

kube-system coredns-5495dd7c88-mvjkv 1/1 Running 0 19m

kube-system coredns-5495dd7c88-wpg52 1/1 Running 0 19m

kube-system kube-apiserver-master 1/1 Running 0 19m

kube-system kube-controller-manager-master 1/1 Running 0 19m

kube-system kube-proxy-fwvqg 1/1 Running 0 18m

kube-system kube-proxy-tz4qm 1/1 Running 0 19m

kube-system kube-proxy-z7b8t 1/1 Running 0 18m

kube-system kube-scheduler-master 1/1 Running 0 19m

kube-system nodelocaldns-7jtzz 1/1 Running 0 18m

kube-system nodelocaldns-9pmn9 1/1 Running 0 18m

kube-system nodelocaldns-px6dk 1/1 Running 0 19m

kube-system openebs-localpv-provisioner-6c9dcb5c54-b8sq5 1/1 Running 0 18m

kube-system snapshot-controller-0 1/1 Running 0 17m

kubesphere-controls-system default-http-backend-5bf68ff9b8-wxfhw 1/1 Running 0 16m

kubesphere-controls-system kubectl-admin-6667774bb-lj8m4 1/1 Running 0 13m

kubesphere-monitoring-system alertmanager-main-0 2/2 Running 0 15m

kubesphere-monitoring-system alertmanager-main-1 2/2 Running 0 15m

kubesphere-monitoring-system alertmanager-main-2 2/2 Running 0 15m

kubesphere-monitoring-system kube-state-metrics-5547ddd4cc-fhdgg 3/3 Running 0 15m

kubesphere-monitoring-system node-exporter-92sbs 2/2 Running 0 15m

kubesphere-monitoring-system node-exporter-kzfdq 2/2 Running 0 15m

kubesphere-monitoring-system node-exporter-qhpmm 2/2 Running 0 15m

kubesphere-monitoring-system notification-manager-deployment-78664576cb-d2mmz 2/2 Running 0 12m

kubesphere-monitoring-system notification-manager-deployment-78664576cb-d9kxq 2/2 Running 0 12m

kubesphere-monitoring-system notification-manager-operator-7d44854f54-j9ct8 2/2 Running 0 15m

kubesphere-monitoring-system prometheus-k8s-0 2/2 Running 1 15m

kubesphere-monitoring-system prometheus-k8s-1 2/2 Running 1 15m

kubesphere-monitoring-system prometheus-operator-5c5db79546-52nd2 2/2 Running 0 15m

kubesphere-system ks-apiserver-79545b79cc-6cbbl 1/1 Running 0 14m

kubesphere-system ks-console-65f4d44d88-hrbkj 1/1 Running 0 16m

kubesphere-system ks-controller-manager-6764654f75-6vlkm 1/1 Running 0 14m

kubesphere-system ks-installer-69df988b79-t2lrn 1/1 Running 0 18m

[root@master ~]#
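
With this many pods it is easy to miss one that is not ready. The sketch below filters the `kubectl get pod --all-namespaces` output and prints only pods that are neither Completed nor Running with all containers ready. `unready_pods` is a hypothetical helper name, and the column positions assume the default output format shown above.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get pod --all-namespaces` output on stdin
# and print "namespace/name status ready" for every pod that is not fully ready.
unready_pods() {
    awk 'NR > 1 {
        split($3, r, "/")    # READY column, e.g. "2/2"
        if ($4 != "Completed" && ($4 != "Running" || r[1] != r[2]))
            print $1 "/" $2, $4, $3
    }'
}
```

Usage: `kubectl get pod --all-namespaces | unready_pods`. For the listing above it would print nothing, since every pod is Running and fully ready.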

Check the installation result:


[root@master ~]# kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

2022-01-03T15:54:52+08:00 INFO : shell-operator latest

2022-01-03T15:54:52+08:00 INFO : Use temporary dir: /tmp/shell-operator

2022-01-03T15:54:52+08:00 INFO : Initialize hooks manager ...

2022-01-03T15:54:52+08:00 INFO : Search and load hooks ...

2022-01-03T15:54:52+08:00 INFO : Load hook config from '/hooks/kubesphere/installRunner.py'

2022-01-03T15:54:52+08:00 INFO : HTTP SERVER Listening on 0.0.0.0:9115

2022-01-03T15:54:53+08:00 INFO : Load hook config from '/hooks/kubesphere/schedule.sh'

2022-01-03T15:54:53+08:00 INFO : Initializing schedule manager ...

2022-01-03T15:54:53+08:00 INFO : KUBE Init Kubernetes client

2022-01-03T15:54:53+08:00 INFO : KUBE-INIT Kubernetes client is configured successfully

2022-01-03T15:54:53+08:00 INFO : MAIN: run main loop

2022-01-03T15:54:53+08:00 INFO : MAIN: add onStartup tasks

2022-01-03T15:54:53+08:00 INFO : Running schedule manager ...

2022-01-03T15:54:53+08:00 INFO : QUEUE add all HookRun@OnStartup

2022-01-03T15:54:53+08:00 INFO : MSTOR Create new metric shell_operator_live_ticks

2022-01-03T15:54:53+08:00 INFO : MSTOR Create new metric shell_operator_tasks_queue_length

2022-01-03T15:54:53+08:00 INFO : GVR for kind 'ClusterConfiguration' is installer.kubesphere.io/v1alpha1, Resource=clusterconfigurations

2022-01-03T15:54:53+08:00 INFO : EVENT Kube event '3a0eff02-f59d-4da7-8da9-c300b7bb1704'

2022-01-03T15:54:53+08:00 INFO : QUEUE add TASK_HOOK_RUN@KUBE_EVENTS kubesphere/installRunner.py

2022-01-03T15:54:56+08:00 INFO : TASK_RUN HookRun@KUBE_EVENTS kubesphere/installRunner.py

2022-01-03T15:54:56+08:00 INFO : Running hook 'kubesphere/installRunner.py' binding 'KUBE_EVENTS' ...

[WARNING]: No inventory was parsed, only implicit localhost is available

[WARNING]: provided hosts list is empty, only localhost is available. Note that

the implicit localhost does not match 'all'

PLAY [localhost] ***************************************************************

TASK [download : Generating images list] ***************************************

skipping: [localhost]

TASK [download : Synchronizing images] *****************************************

TASK [kubesphere-defaults : KubeSphere | Setting images' namespace override] ***

skipping: [localhost]

TASK [kubesphere-defaults : KubeSphere | Configuring defaults] *****************

ok: [localhost] => {

"msg": "Check roles/kubesphere-defaults/defaults/main.yml"

}

TASK [preinstall : KubeSphere | Stopping if Kubernetes version is nonsupport] ***

ok: [localhost] => {

"changed": false,

"msg": "All assertions passed"

}

TASK [preinstall : KubeSphere | Checking StorageClass] *************************

changed: [localhost]

TASK [preinstall : KubeSphere | Stopping if StorageClass was not found] ********

skipping: [localhost]

TASK [preinstall : KubeSphere | Checking default StorageClass] *****************

changed: [localhost]

TASK [preinstall : KubeSphere | Stopping if default StorageClass was not found] ***

ok: [localhost] => {

"changed": false,

"msg": "All assertions passed"

}

TASK [preinstall : KubeSphere | Checking KubeSphere component] *****************

changed: [localhost]

TASK [preinstall : KubeSphere | Getting KubeSphere component version] **********

skipping: [localhost]

TASK [preinstall : KubeSphere | Getting KubeSphere component version] **********

skipping: [localhost] => (item=ks-openldap)

skipping: [localhost] => (item=ks-redis)

skipping: [localhost] => (item=ks-minio)

skipping: [localhost] => (item=ks-openpitrix)

skipping: [localhost] => (item=elasticsearch-logging)

skipping: [localhost] => (item=elasticsearch-logging-curator)

skipping: [localhost] => (item=istio)

skipping: [localhost] => (item=istio-init)

skipping: [localhost] => (item=jaeger-operator)

skipping: [localhost] => (item=ks-jenkins)

skipping: [localhost] => (item=ks-sonarqube)

skipping: [localhost] => (item=logging-fluentbit-operator)

skipping: [localhost] => (item=uc)

skipping: [localhost] => (item=metrics-server)

PLAY RECAP *********************************************************************

localhost : ok=6 changed=3 unreachable=0 failed=0 skipped=6 rescued=0 ignored=0

[WARNING]: No inventory was parsed, only implicit localhost is available

[WARNING]: provided hosts list is empty, only localhost is available. Note that

the implicit localhost does not match 'all'

PLAY [localhost] ***************************************************************

TASK [download : Generating images list] ***************************************

skipping: [localhost]

TASK [download : Synchronizing images] *****************************************

TASK [kubesphere-defaults : KubeSphere | Setting images' namespace override] ***

skipping: [localhost]

TASK [kubesphere-defaults : KubeSphere | Configuring defaults] *****************

ok: [localhost] => {

"msg": "Check roles/kubesphere-defaults/defaults/main.yml"

}

TASK [Metrics-Server | Getting metrics-server installation files] **************

skipping: [localhost]

TASK [metrics-server : Metrics-Server | Creating manifests] ********************

skipping: [localhost] => (item={'file': 'metrics-server.yaml'})

TASK [metrics-server : Metrics-Server | Checking Metrics-Server] ***************

skipping: [localhost]

TASK [Metrics-Server | Uninstalling old metrics-server] ************************

skipping: [localhost]

TASK [Metrics-Server | Installing new metrics-server] **************************

skipping: [localhost]

TASK [metrics-server : Metrics-Server | Waitting for metrics.k8s.io ready] *****

skipping: [localhost]

TASK [Metrics-Server | Importing metrics-server status] ************************

skipping: [localhost]

PLAY RECAP *********************************************************************

localhost : ok=1 changed=0 unreachable=0 failed=0 skipped=10 rescued=0 ignored=0

[WARNING]: No inventory was parsed, only implicit localhost is available

[WARNING]: provided hosts list is empty, only localhost is available. Note that

the implicit localhost does not match 'all'

PLAY [localhost] ***************************************************************

TASK [download : Generating images list] ***************************************

skipping: [localhost]

TASK [download : Synchronizing images] *****************************************

TASK [kubesphere-defaults : KubeSphere | Setting images' namespace override] ***

skipping: [localhost]

TASK [kubesphere-defaults : KubeSphere | Configuring defaults] *****************

ok: [localhost] => {

"msg": "Check roles/kubesphere-defaults/defaults/main.yml"

}

TASK [common : KubeSphere | Checking kube-node-lease namespace] ****************

changed: [localhost]

TASK [common : KubeSphere | Getting system namespaces] *************************

ok: [localhost]

TASK [common : set_fact] *******************************************************

ok: [localhost]

TASK [common : debug] **********************************************************

ok: [localhost] => {

"msg": [

"kubesphere-system",

"kubesphere-controls-system",

"kubesphere-monitoring-system",

"kubesphere-monitoring-federated",

"kube-node-lease"

]

}

TASK [common : KubeSphere | Creating KubeSphere namespace] *********************

changed: [localhost] => (item=kubesphere-system)

changed: [localhost] => (item=kubesphere-controls-system)

changed: [localhost] => (item=kubesphere-monitoring-system)

changed: [localhost] => (item=kubesphere-monitoring-federated)

changed: [localhost] => (item=kube-node-lease)

TASK [common : KubeSphere | Labeling system-workspace] *************************

changed: [localhost] => (item=default)

changed: [localhost] => (item=kube-public)

changed: [localhost] => (item=kube-system)

changed: [localhost] => (item=kubesphere-system)

changed: [localhost] => (item=kubesphere-controls-system)

changed: [localhost] => (item=kubesphere-monitoring-system)

changed: [localhost] => (item=kubesphere-monitoring-federated)

changed: [localhost] => (item=kube-node-lease)

TASK [common : KubeSphere | Labeling namespace for network policy] *************

changed: [localhost]

TASK [common : KubeSphere | Getting Kubernetes master num] *********************

changed: [localhost]

TASK [common : KubeSphere | Setting master num] ********************************

ok: [localhost]

TASK [KubeSphere | Getting common component installation files] ****************

changed: [localhost] => (item=common)

TASK [common : KubeSphere | Checking Kubernetes version] ***********************

changed: [localhost]

TASK [KubeSphere | Getting common component installation files] ****************

changed: [localhost] => (item=snapshot-controller)

TASK [common : KubeSphere | Creating snapshot controller values] ***************

changed: [localhost] => (item={'name': 'custom-values-snapshot-controller', 'file': 'custom-values-snapshot-controller.yaml'})

TASK [common : KubeSphere | Updating snapshot crd] *****************************

changed: [localhost]

TASK [common : KubeSphere | Deploying snapshot controller] *********************

changed: [localhost]

TASK [KubeSphere | Checking openpitrix common component] ***********************

changed: [localhost]

TASK [common : include_tasks] **************************************************

skipping: [localhost] => (item={'op': 'openpitrix-db', 'ks': 'mysql-pvc'})

skipping: [localhost] => (item={'op': 'openpitrix-etcd', 'ks': 'etcd-pvc'})

TASK [common : Getting PersistentVolumeName (mysql)] ***************************

skipping: [localhost]

TASK [common : Getting PersistentVolumeSize (mysql)] ***************************

skipping: [localhost]

TASK [common : Setting PersistentVolumeName (mysql)] ***************************

skipping: [localhost]

TASK [common : Setting PersistentVolumeSize (mysql)] ***************************

skipping: [localhost]

TASK [common : Getting PersistentVolumeName (etcd)] ****************************

skipping: [localhost]

TASK [common : Getting PersistentVolumeSize (etcd)] ****************************

skipping: [localhost]

TASK [common : Setting PersistentVolumeName (etcd)] ****************************

skipping: [localhost]

TASK [common : Setting PersistentVolumeSize (etcd)] ****************************

skipping: [localhost]

TASK [common : KubeSphere | Checking mysql PersistentVolumeClaim] **************

changed: [localhost]

TASK [common : KubeSphere | Setting mysql db pv size] **************************

skipping: [localhost]

TASK [common : KubeSphere | Checking redis PersistentVolumeClaim] **************

changed: [localhost]

TASK [common : KubeSphere | Setting redis db pv size] **************************

skipping: [localhost]

TASK [common : KubeSphere | Checking minio PersistentVolumeClaim] **************

changed: [localhost]

TASK [common : KubeSphere | Setting minio pv size] *****************************

skipping: [localhost]

TASK [common : KubeSphere | Checking openldap PersistentVolumeClaim] ***********

changed: [localhost]

TASK [common : KubeSphere | Setting openldap pv size] **************************

skipping: [localhost]

TASK [common : KubeSphere | Checking etcd db PersistentVolumeClaim] ************

changed: [localhost]

TASK [common : KubeSphere | Setting etcd pv size] ******************************

skipping: [localhost]

TASK [common : KubeSphere | Checking redis ha PersistentVolumeClaim] ***********

changed: [localhost]

TASK [common : KubeSphere | Setting redis ha pv size] **************************

skipping: [localhost]

TASK [common : KubeSphere | Checking es-master PersistentVolumeClaim] **********

changed: [localhost]

TASK [common : KubeSphere | Setting es master pv size] *************************

skipping: [localhost]

TASK [common : KubeSphere | Checking es data PersistentVolumeClaim] ************

changed: [localhost]

TASK [common : KubeSphere | Setting es data pv size] ***************************

skipping: [localhost]

TASK [KubeSphere | Creating common component manifests] ************************

changed: [localhost] => (item={'path': 'redis', 'file': 'redis.yaml'})

TASK [common : KubeSphere | Deploying etcd and mysql] **************************

skipping: [localhost] => (item=etcd.yaml)

skipping: [localhost] => (item=mysql.yaml)

TASK [common : KubeSphere | Getting minio installation files] ******************

skipping: [localhost] => (item=minio-ha)

TASK [common : KubeSphere | Creating manifests] ********************************

skipping: [localhost] => (item={'name': 'custom-values-minio', 'file': 'custom-values-minio.yaml'})

TASK [common : KubeSphere | Checking minio] ************************************

skipping: [localhost]

TASK [common : KubeSphere | Deploying minio] ***********************************

skipping: [localhost]

TASK [common : debug] **********************************************************

skipping: [localhost]

TASK [common : fail] ***********************************************************

skipping: [localhost]

TASK [common : KubeSphere | Importing minio status] ****************************

skipping: [localhost]

TASK [common : KubeSphere | Generet Random password] ***************************

skipping: [localhost]

TASK [common : KubeSphere | Creating Redis Password Secret] ********************

skipping: [localhost]

TASK [common : KubeSphere | Getting redis installation files] ******************

skipping: [localhost] => (item=redis-ha)

TASK [common : KubeSphere | Creating manifests] ********************************

skipping: [localhost] => (item={'name': 'custom-values-redis', 'file': 'custom-values-redis.yaml'})

TASK [common : KubeSphere | Checking old redis status] *************************

skipping: [localhost]

TASK [common : KubeSphere | Deleting and backup old redis svc] *****************

skipping: [localhost]

TASK [common : KubeSphere | Deploying redis] ***********************************

skipping: [localhost]

TASK [common : KubeSphere | Deploying redis] ***********************************

skipping: [localhost] => (item=redis.yaml)

TASK [common : KubeSphere | Importing redis status] ****************************

skipping: [localhost]

TASK [common : KubeSphere | Getting openldap installation files] ***************

skipping: [localhost] => (item=openldap-ha)

TASK [common : KubeSphere | Creating manifests] ********************************

skipping: [localhost] => (item={'name': 'custom-values-openldap', 'file': 'custom-values-openldap.yaml'})

TASK [common : KubeSphere | Checking old openldap status] **********************

skipping: [localhost]

TASK [common : KubeSphere | Shutdown ks-account] *******************************

skipping: [localhost]

TASK [common : KubeSphere | Deleting and backup old openldap svc] **************

skipping: [localhost]

TASK [common : KubeSphere | Checking openldap] *********************************

skipping: [localhost]

TASK [common : KubeSphere | Deploying openldap] ********************************

skipping: [localhost]

TASK [common : KubeSphere | Loading old openldap data] *************************

skipping: [localhost]

TASK [common : KubeSphere | Checking openldap-ha status] ***********************

skipping: [localhost]

TASK [common : KubeSphere | Getting openldap-ha pod list] **********************

skipping: [localhost]

TASK [common : KubeSphere | Getting old openldap data] *************************

skipping: [localhost]

TASK [common : KubeSphere | Migrating openldap data] ***************************

skipping: [localhost]

TASK [common : KubeSphere | Disabling old openldap] ****************************

skipping: [localhost]

TASK [common : KubeSphere | Restarting openldap] *******************************

skipping: [localhost]

TASK [common : KubeSphere | Restarting ks-account] *****************************

skipping: [localhost]

TASK [common : KubeSphere | Importing openldap status] *************************

skipping: [localhost]

TASK [common : KubeSphere | Generet Random password] ***************************

skipping: [localhost]

TASK [common : KubeSphere | Creating Redis Password Secret] ********************

skipping: [localhost]

TASK [common : KubeSphere | Getting redis installation files] ******************

skipping: [localhost] => (item=redis-ha)

TASK [common : KubeSphere | Creating manifests] ********************************

skipping: [localhost] => (item={'name': 'custom-values-redis', 'file': 'custom-values-redis.yaml'})

TASK [common : KubeSphere | Checking old redis status] *************************

skipping: [localhost]

TASK [common : KubeSphere | Deleting and backup old redis svc] *****************

skipping: [localhost]

TASK [common : KubeSphere | Deploying redis] ***********************************

skipping: [localhost]

TASK [common : KubeSphere | Deploying redis] ***********************************

skipping: [localhost] => (item=redis.yaml)

TASK [common : KubeSphere | Importing redis status] ****************************

skipping: [localhost]

TASK [common : KubeSphere | Getting openldap installation files] ***************

skipping: [localhost] => (item=openldap-ha)

TASK [common : KubeSphere | Creating manifests] ********************************

skipping: [localhost] => (item={'name': 'custom-values-openldap', 'file': 'custom-values-openldap.yaml'})

TASK [common : KubeSphere | Checking old openldap status] **********************

skipping: [localhost]

TASK [common : KubeSphere | Shutdown ks-account] *******************************

skipping: [localhost]

TASK [common : KubeSphere | Deleting and backup old openldap svc] **************

skipping: [localhost]

TASK [common : KubeSphere | Checking openldap] *********************************

skipping: [localhost]

TASK [common : KubeSphere | Deploying openldap] ********************************

skipping: [localhost]

TASK [common : KubeSphere | Loading old openldap data] *************************

skipping: [localhost]

TASK [common : KubeSphere | Checking openldap-ha status] ***********************

skipping: [localhost]

TASK [common : KubeSphere | Getting openldap-ha pod list] **********************

skipping: [localhost]

TASK [common : KubeSphere | Getting old openldap data] *************************

skipping: [localhost]

TASK [common : KubeSphere | Migrating openldap data] ***************************

skipping: [localhost]

TASK [common : KubeSphere | Disabling old openldap] ****************************

skipping: [localhost]

TASK [common : KubeSphere | Restarting openldap] *******************************

skipping: [localhost]

TASK [common : KubeSphere | Restarting ks-account] *****************************

skipping: [localhost]

TASK [common : KubeSphere | Importing openldap status] *************************

skipping: [localhost]

TASK [common : KubeSphere | Getting minio installation files] ******************

skipping: [localhost] => (item=minio-ha)

TASK [common : KubeSphere | Creating manifests] ********************************

skipping: [localhost] => (item={'name': 'custom-values-minio', 'file': 'custom-values-minio.yaml'})

TASK [common : KubeSphere | Checking minio] ************************************

skipping: [localhost]

TASK [common : KubeSphere | Deploying minio] ***********************************

skipping: [localhost]

TASK [common : debug] **********************************************************

skipping: [localhost]

TASK [common : fail] ***********************************************************

skipping: [localhost]

TASK [common : KubeSphere | Importing minio status] ****************************

skipping: [localhost]

TASK [common : KubeSphere | Getting elasticsearch and curator installation files] ***

skipping: [localhost]

TASK [common : KubeSphere | Creating custom manifests] *************************

skipping: [localhost] => (item={'name': 'custom-values-elasticsearch', 'file': 'custom-values-elasticsearch.yaml'})

skipping: [localhost] => (item={'name': 'custom-values-elasticsearch-curator', 'file': 'custom-values-elasticsearch-curator.yaml'})

TASK [common : KubeSphere | Checking elasticsearch data StatefulSet] ***********

skipping: [localhost]

TASK [common : KubeSphere | Checking elasticsearch storageclass] ***************

skipping: [localhost]

TASK [common : KubeSphere | Commenting elasticsearch storageclass parameter] ***

skipping: [localhost]

TASK [common : KubeSphere | Creating elasticsearch credentials secret] *********

skipping: [localhost]

TASK [common : KubeSphere | Checking internal es] ******************************

skipping: [localhost]

TASK [common : KubeSphere | Deploying elasticsearch-logging] *******************

skipping: [localhost]

TASK [common : KubeSphere | Getting PersistentVolume Name] *********************

skipping: [localhost]

TASK [common : KubeSphere | Patching PersistentVolume (persistentVolumeReclaimPolicy)] ***

skipping: [localhost]

TASK [common : KubeSphere | Deleting elasticsearch] ****************************

skipping: [localhost]

TASK [common : KubeSphere | Waiting for seconds] *******************************

skipping: [localhost]

TASK [common : KubeSphere | Deploying elasticsearch-logging] *******************

skipping: [localhost]

TASK [common : KubeSphere | Importing es status] *******************************

skipping: [localhost]

TASK [common : KubeSphere | Deploying elasticsearch-logging-curator] ***********

skipping: [localhost]

TASK [common : KubeSphere | Getting fluentbit installation files] **************

skipping: [localhost]

TASK [common : KubeSphere | Creating custom manifests] *************************

skipping: [localhost] => (item={'path': 'fluentbit', 'file': 'custom-fluentbit-fluentBit.yaml'})

skipping: [localhost] => (item={'path': 'init', 'file': 'custom-fluentbit-operator-deployment.yaml'})

TASK [common : KubeSphere | Preparing fluentbit operator setup] ****************

skipping: [localhost]

TASK [common : KubeSphere | Deploying new fluentbit operator] ******************

skipping: [localhost]

TASK [common : KubeSphere | Importing fluentbit status] ************************

skipping: [localhost]

TASK [common : Setting persistentVolumeReclaimPolicy (mysql)] ******************

skipping: [localhost]

TASK [common : Setting persistentVolumeReclaimPolicy (etcd)] *******************

skipping: [localhost]

PLAY RECAP *********************************************************************

localhost : ok=26 changed=21 unreachable=0 failed=0 skipped=107 rescued=0 ignored=0

[WARNING]: No inventory was parsed, only implicit localhost is available

[WARNING]: provided hosts list is empty, only localhost is available. Note that

the implicit localhost does not match 'all'

PLAY [localhost] ***************************************************************

TASK [download : Generating images list] ***************************************

skipping: [localhost]

TASK [download : Synchronizing images] *****************************************

TASK [kubesphere-defaults : KubeSphere | Setting images' namespace override] ***

skipping: [localhost]

TASK [kubesphere-defaults : KubeSphere | Configuring defaults] *****************

ok: [localhost] => {

"msg": "Check roles/kubesphere-defaults/defaults/main.yml"

}

TASK [ks-core/init-token : KubeSphere | Creating KubeSphere directory] *********

ok: [localhost]

TASK [ks-core/init-token : KubeSphere | Getting installation init files] *******

changed: [localhost] => (item=jwt-script)

TASK [ks-core/init-token : KubeSphere | Creating KubeSphere Secret] ************

changed: [localhost]

TASK [ks-core/init-token : KubeSphere | Creating KubeSphere Secret] ************

ok: [localhost]

TASK [ks-core/init-token : KubeSphere | Creating KubeSphere Secret] ************

skipping: [localhost]

TASK [ks-core/init-token : KubeSphere | Enabling Token Script] *****************

changed: [localhost]

TASK [ks-core/init-token : KubeSphere | Getting KubeSphere Token] **************

changed: [localhost]

TASK [ks-core/init-token : KubeSphere | Checking KubeSphere secrets] ***********

changed: [localhost]

TASK [ks-core/init-token : KubeSphere | Deleting KubeSphere secret] ************

skipping: [localhost]

TASK [ks-core/init-token : KubeSphere | Creating components token] *************

changed: [localhost]

TASK [ks-core/ks-core : KubeSphere | Setting Kubernetes version] ***************

ok: [localhost]

TASK [ks-core/ks-core : KubeSphere | Getting Kubernetes master num] ************

changed: [localhost]

TASK [ks-core/ks-core : KubeSphere | Setting master num] ***********************

ok: [localhost]

TASK [ks-core/ks-core : KubeSphere | Override master num] **********************

skipping: [localhost]

TASK [ks-core/ks-core : KubeSphere | Setting enableHA] *************************

ok: [localhost]

TASK [ks-core/ks-core : KubeSphere | Checking ks-core Helm Release] ************

changed: [localhost]

TASK [ks-core/ks-core : KubeSphere | Checking ks-core Exsit] *******************

changed: [localhost]

TASK [ks-core/ks-core : KubeSphere | Convert ks-core to helm mananged] *********

skipping: [localhost] => (item={'ns': 'kubesphere-controls-system', 'kind': 'serviceaccounts', 'resource': 'kubesphere-cluster-admin', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-controls-system', 'kind': 'serviceaccounts', 'resource': 'kubesphere-router-serviceaccount', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-controls-system', 'kind': 'role', 'resource': 'system:kubesphere-router-role', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-controls-system', 'kind': 'rolebinding', 'resource': 'nginx-ingress-role-nisa-binding', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-controls-system', 'kind': 'deployment', 'resource': 'default-http-backend', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-controls-system', 'kind': 'service', 'resource': 'default-http-backend', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-system', 'kind': 'secrets', 'resource': 'ks-controller-manager-webhook-cert', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-system', 'kind': 'serviceaccounts', 'resource': 'kubesphere', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-system', 'kind': 'configmaps', 'resource': 'ks-console-config', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-system', 'kind': 'configmaps', 'resource': 'ks-router-config', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-system', 'kind': 'configmaps', 'resource': 'sample-bookinfo', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-system', 'kind': 'clusterroles', 'resource': 'system:kubesphere-router-clusterrole', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-system', 'kind': 'clusterrolebindings', 'resource': 'system:nginx-ingress-clusterrole-nisa-binding', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-system', 'kind': 'clusterrolebindings', 'resource': 'system:kubesphere-cluster-admin', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-system', 'kind': 'clusterrolebindings', 'resource': 'kubesphere', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-system', 'kind': 'services', 'resource': 'ks-apiserver', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-system', 'kind': 'services', 'resource': 'ks-console', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-system', 'kind': 'services', 'resource': 'ks-controller-manager', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-system', 'kind': 'deployments', 'resource': 'ks-apiserver', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-system', 'kind': 'deployments', 'resource': 'ks-console', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-system', 'kind': 'deployments', 'resource': 'ks-controller-manager', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-system', 'kind': 'validatingwebhookconfigurations', 'resource': 'users.iam.kubesphere.io', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-system', 'kind': 'validatingwebhookconfigurations', 'resource': 'resourcesquotas.quota.kubesphere.io', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-system', 'kind': 'validatingwebhookconfigurations', 'resource': 'network.kubesphere.io', 'release': 'ks-core'})

skipping: [localhost] => (item={'ns': 'kubesphere-system', 'kind': 'users.iam.kubesphere.io', 'resource': 'admin', 'release': 'ks-core'})

TASK [ks-core/ks-core : KubeSphere | Patch admin user] *************************

skipping: [localhost]

TASK [ks-core/ks-core : KubeSphere | Getting ks-core helm charts] **************

changed: [localhost] => (item=ks-core)

TASK [ks-core/ks-core : KubeSphere | Creating manifests] ***********************

changed: [localhost] => (item={'path': 'ks-core', 'file': 'custom-values-ks-core.yaml'})

TASK [ks-core/ks-core : KubeSphere | Upgrade CRDs] *****************************

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/app_v1beta1_application.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/application.kubesphere.io_helmapplications.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/application.kubesphere.io_helmapplicationversions.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/application.kubesphere.io_helmcategories.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/application.kubesphere.io_helmreleases.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/application.kubesphere.io_helmrepos.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/cluster.kubesphere.io_clusters.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/gateway.kubesphere.io_gateways.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/gateway.kubesphere.io_nginxes.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/iam.kubesphere.io_federatedrolebindings.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/iam.kubesphere.io_federatedroles.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/iam.kubesphere.io_federatedusers.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/iam.kubesphere.io_globalrolebindings.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/iam.kubesphere.io_globalroles.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/iam.kubesphere.io_groupbindings.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/iam.kubesphere.io_groups.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/iam.kubesphere.io_loginrecords.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/iam.kubesphere.io_rolebases.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/iam.kubesphere.io_users.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/iam.kubesphere.io_workspacerolebindings.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/iam.kubesphere.io_workspaceroles.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/network.kubesphere.io_ipamblocks.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/network.kubesphere.io_ipamhandles.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/network.kubesphere.io_ippools.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/network.kubesphere.io_namespacenetworkpolicies.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/quota.kubesphere.io_resourcequotas.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/servicemesh.kubesphere.io_servicepolicies.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/servicemesh.kubesphere.io_strategies.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/tenant.kubesphere.io_workspaces.yaml)

changed: [localhost] => (item=/kubesphere/kubesphere/ks-core/crds/tenant.kubesphere.io_workspacetemplates.yaml)

TASK [ks-core/ks-core : KubeSphere | Creating ks-core] *************************

changed: [localhost]

TASK [ks-core/ks-core : KubeSphere | Importing ks-core status] *****************

changed: [localhost]

TASK [ks-core/prepare : KubeSphere | Checking core components (1)] *************

changed: [localhost]

TASK [ks-core/prepare : KubeSphere | Checking core components (2)] *************

changed: [localhost]

TASK [ks-core/prepare : KubeSphere | Checking core components (3)] *************

skipping: [localhost]

TASK [ks-core/prepare : KubeSphere | Checking core components (4)] *************

skipping: [localhost]

TASK [ks-core/prepare : KubeSphere | Updating ks-core status] ******************

skipping: [localhost]

TASK [ks-core/prepare : set_fact] **********************************************

skipping: [localhost]

TASK [ks-core/prepare : KubeSphere | Creating KubeSphere directory] ************

ok: [localhost]

TASK [ks-core/prepare : KubeSphere | Getting installation init files] **********

changed: [localhost] => (item=ks-init)

TASK [ks-core/prepare : KubeSphere | Initing KubeSphere] ***********************

changed: [localhost] => (item=role-templates.yaml)

TASK [ks-core/prepare : KubeSphere | Generating kubeconfig-admin] **************

skipping: [localhost]

PLAY RECAP *********************************************************************

localhost : ok=25 changed=18 unreachable=0 failed=0 skipped=13 rescued=0 ignored=0

Start installing monitoring

Start installing multicluster

Start installing openpitrix

Start installing network

**************************************************

Waiting for all tasks to be completed ...

task multicluster status is successful (1/4)

task openpitrix status is successful (2/4)

task network status is successful (3/4)

task monitoring status is successful (4/4)

**************************************************

Collecting installation results ...

#####################################################

### Welcome to KubeSphere! ###

#####################################################

Console: http://172.30.47.67:30880

Account: admin

Password: P@88w0rd

NOTES:

1. After you log into the console, please check the

monitoring status of service components in

"Cluster Management". If any service is not

ready, please wait patiently until all components

are up and running.

2. Please change the default password after login.

#####################################################

https://kubesphere.io 2022-01-03 15:59:07

#####################################################

Access the console:

http://172.30.47.67:30880

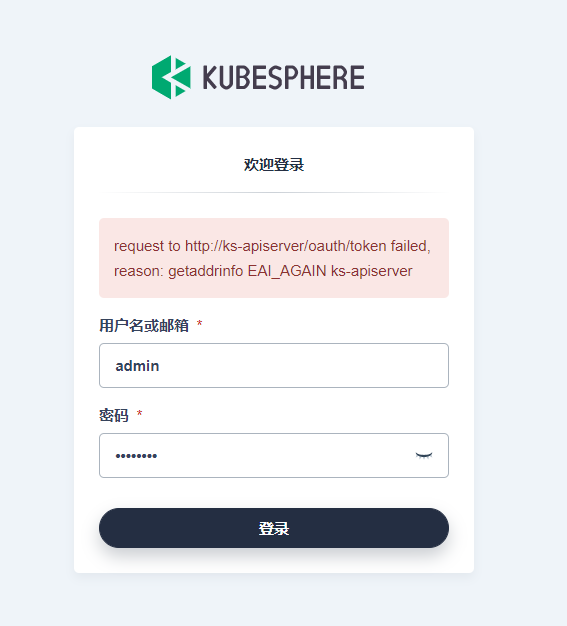

PS: logging in failed with the error: request to http://ks-apiserver/oauth/token failed, reason: getaddrinfo EAI_AGAIN ks-apiserver

Check the console logs:

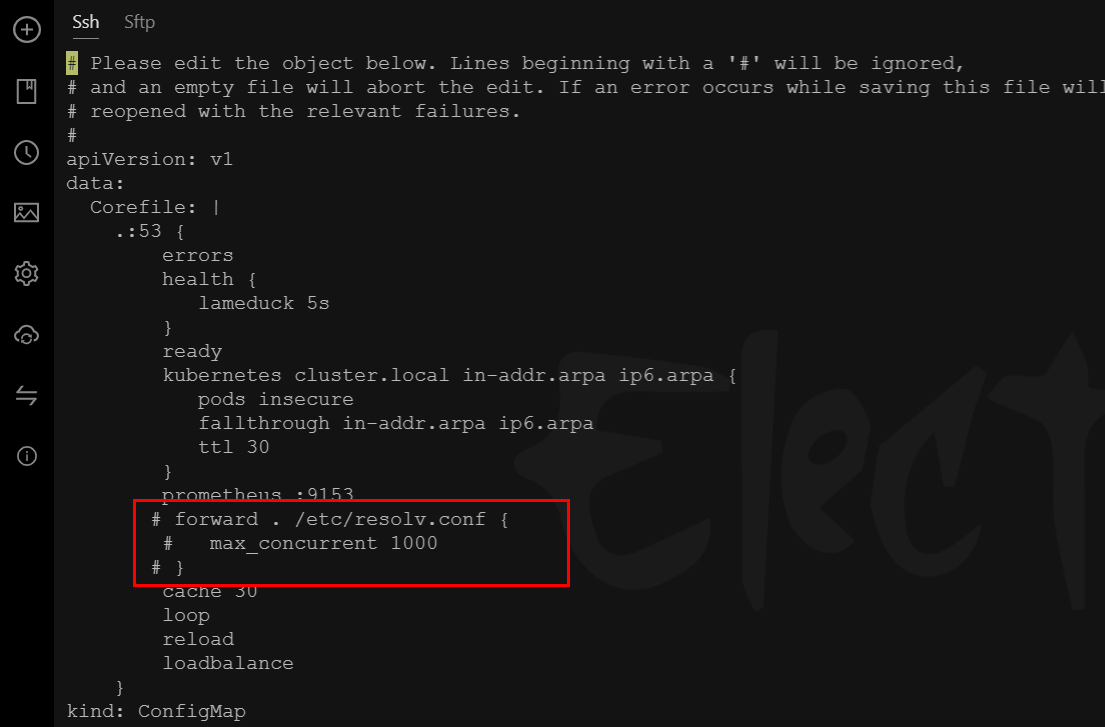

kubectl logs ks-console-65f4d44d88-hrbkj -n kubesphere-system | less

--> GET /login 200 5,009ms 15.68kb 2022/01/03T19:17:19.559

<-- POST /login 2022/01/03T19:20:20.437

FetchError: request to http://ks-apiserver/oauth/token failed, reason: getaddrinfo EAI_AGAIN ks-apiserver

at ClientRequest.<anonymous> (/opt/kubesphere/console/server/server.js:47991:11)

at ClientRequest.emit (events.js:314:20)

at Socket.socketErrorListener (_http_client.js:427:9)

at Socket.emit (events.js:314:20)

at emitErrorNT (internal/streams/destroy.js:92:8)

at emitErrorAndCloseNT (internal/streams/destroy.js:60:3)

at processTicksAndRejections (internal/process/task_queues.js:84:21) { type: 'system', errno: 'EAI_AGAIN', code: 'EAI_AGAIN'

FetchError: request to http://ks-apiserver/kapis/config.kubesphere.io/v1alpha2/configs/oauth failed, reason: getaddrinfo EAI_AGAIN ks-apiserver

at ClientRequest.<anonymous> (/opt/kubesphere/console/server/server.js:47991:11)

at ClientRequest.emit (events.js:314:20)

at Socket.socketErrorListener (_http_client.js:427:9)

at Socket.emit (events.js:314:20)

at emitErrorNT (internal/streams/destroy.js:92:8)

at emitErrorAndCloseNT (internal/streams/destroy.js:60:3)

at processTicksAndRejections (internal/process/task_queues.js:84:21) { type: 'system', errno: 'EAI_AGAIN', code: 'EAI_AGAIN'

Solution: https://github.com/kubesphere/website/issues/1896

kubectl -n kube-system edit cm coredns -o yaml

Comment out the forward entry in the Corefile, then restart the coredns pods.
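The fix above can be sketched as a small shell helper. This is only an illustration, not the method from the linked issue: the function name comment_out_forward is hypothetical, and it assumes the forward entry is written either on a single line or as a block ending in a closing brace. Note that with forward commented out, CoreDNS no longer passes non-cluster names to an upstream resolver.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out the CoreDNS "forward" plugin in a Corefile
# read from stdin. Handles the single-line form and the block form
# "forward . /etc/resolv.conf { ... }".
comment_out_forward() {
  awk '
    in_block { print "#" $0; if ($0 ~ /[}]/) in_block = 0; next }
    /^[[:space:]]*forward[[:space:]]/ {
      print "#" $0
      if ($0 ~ /[{][[:space:]]*$/) in_block = 1
      next
    }
    { print }
  '
}

# Preview the change on a sample Corefile fragment.
printf '    forward . /etc/resolv.conf {\n       max_concurrent 1000\n    }\n    cache 30\n' \
  | comment_out_forward

# Against a live cluster (assumes the default coredns ConfigMap and Deployment
# names in kube-system; left commented out because it needs cluster access):
# kubectl -n kube-system get cm coredns -o jsonpath='{.data.Corefile}' | comment_out_forward
# kubectl -n kube-system rollout restart deployment coredns
```

Previewing the edited Corefile before saving it with kubectl edit makes it easier to confirm that only the forward entry was touched.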

Enable pluggable components

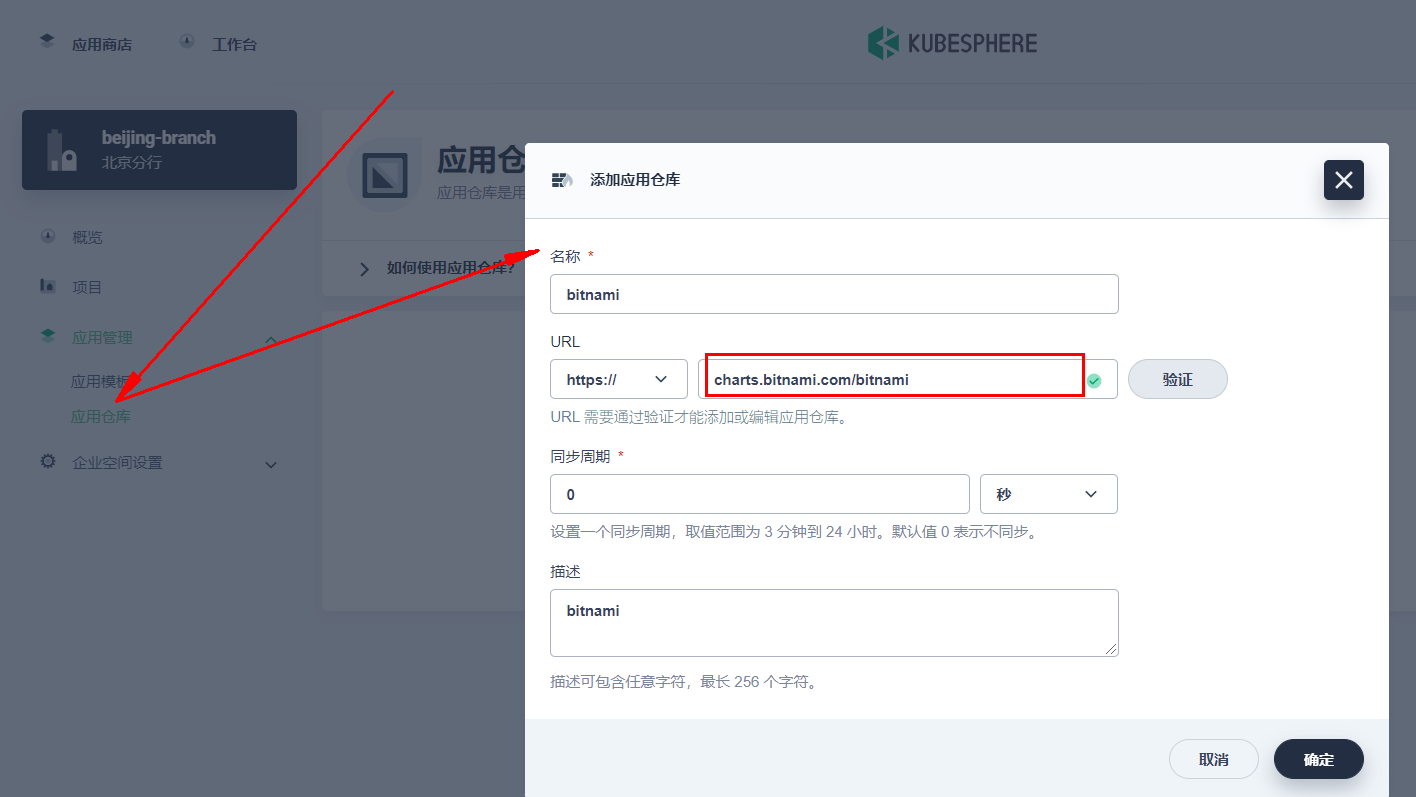

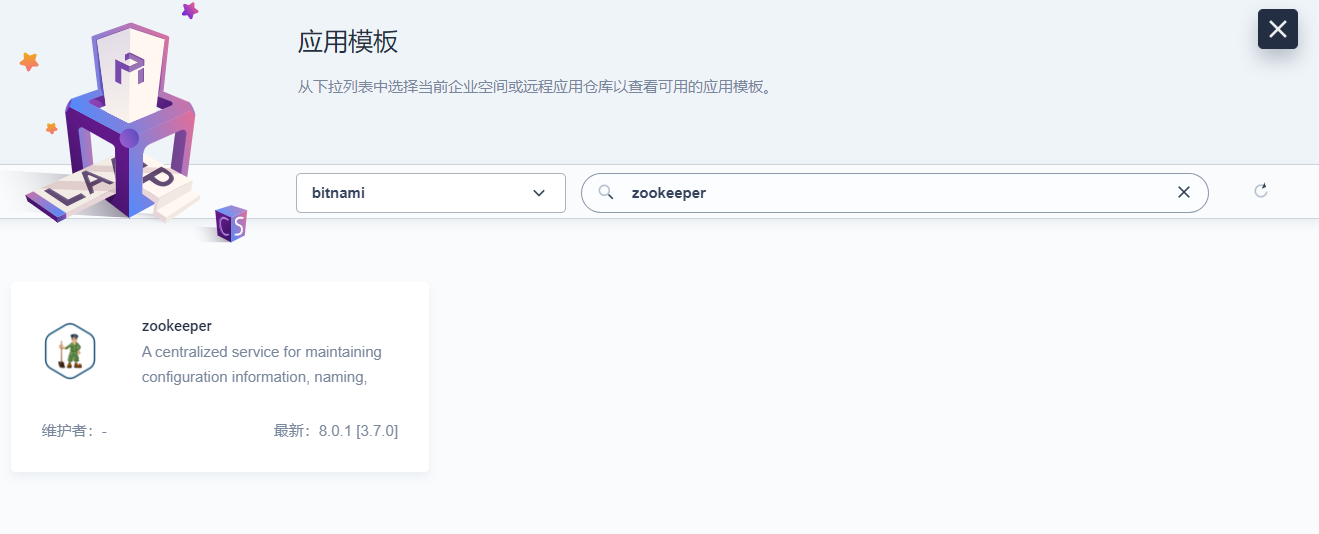

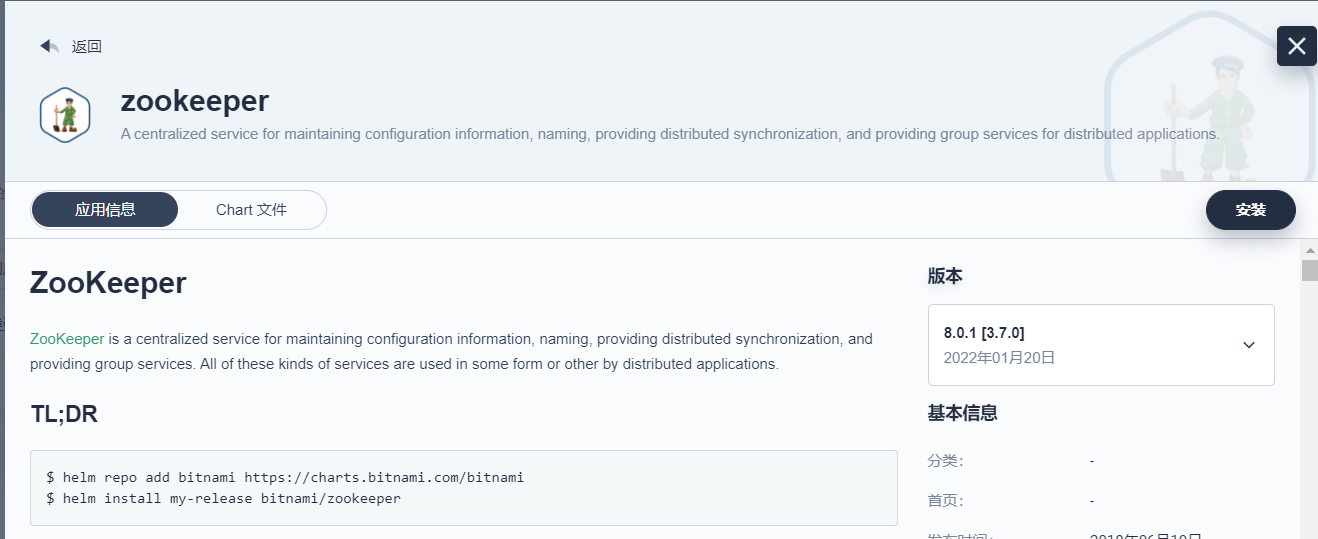

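In the installation configuration file "config-sample.yaml" above, features such as the App Store, logging, and monitoring were left disabled to keep the installation fast. They can now be enabled manually: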

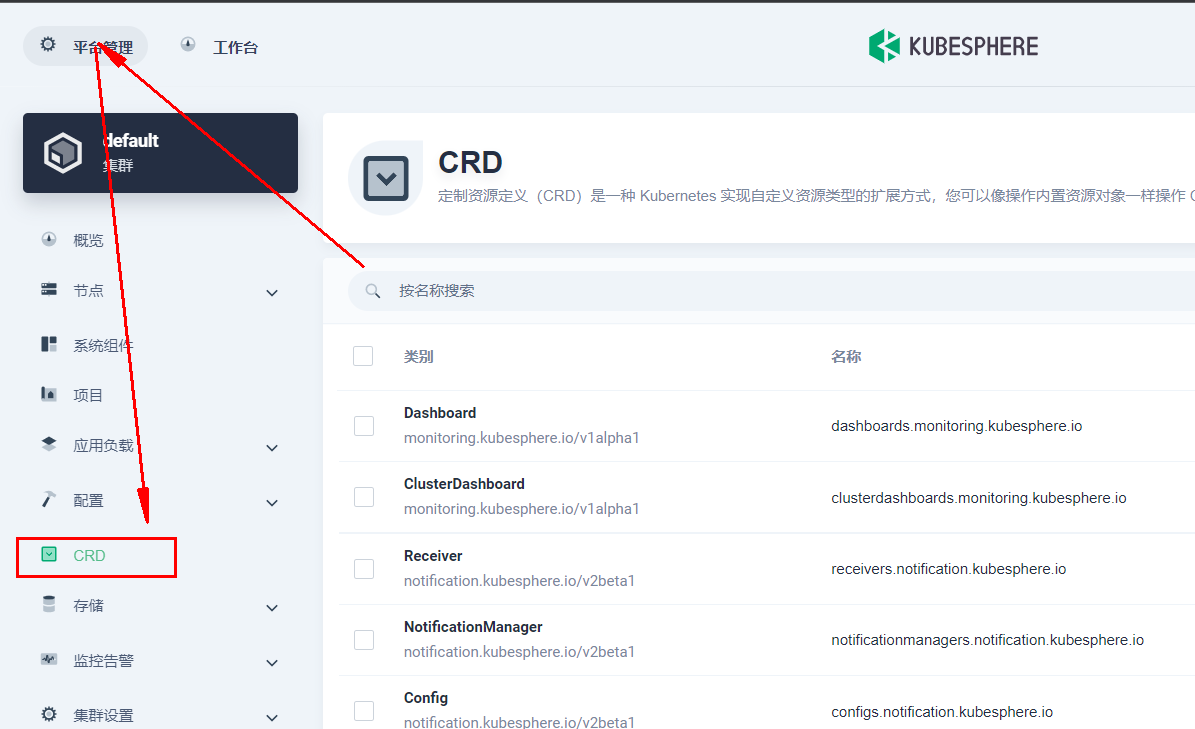

- Log in to the console as admin. Click Platform in the upper-left corner, then select Cluster Management.

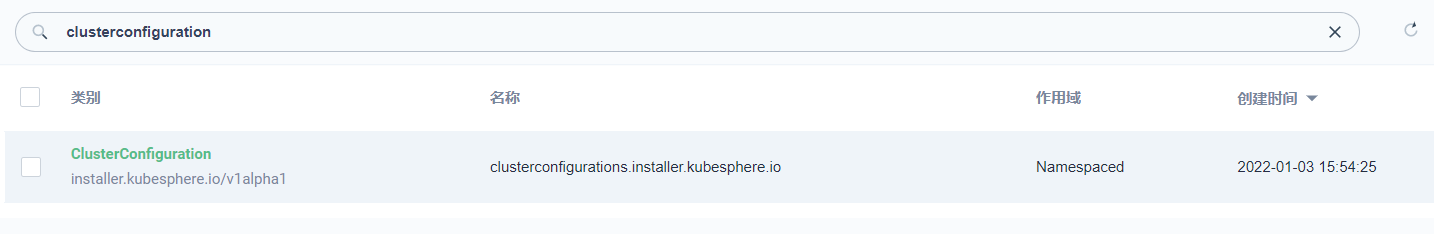

- Click CRDs, enter clusterconfiguration in the search bar, and click the search result to open its detail page.

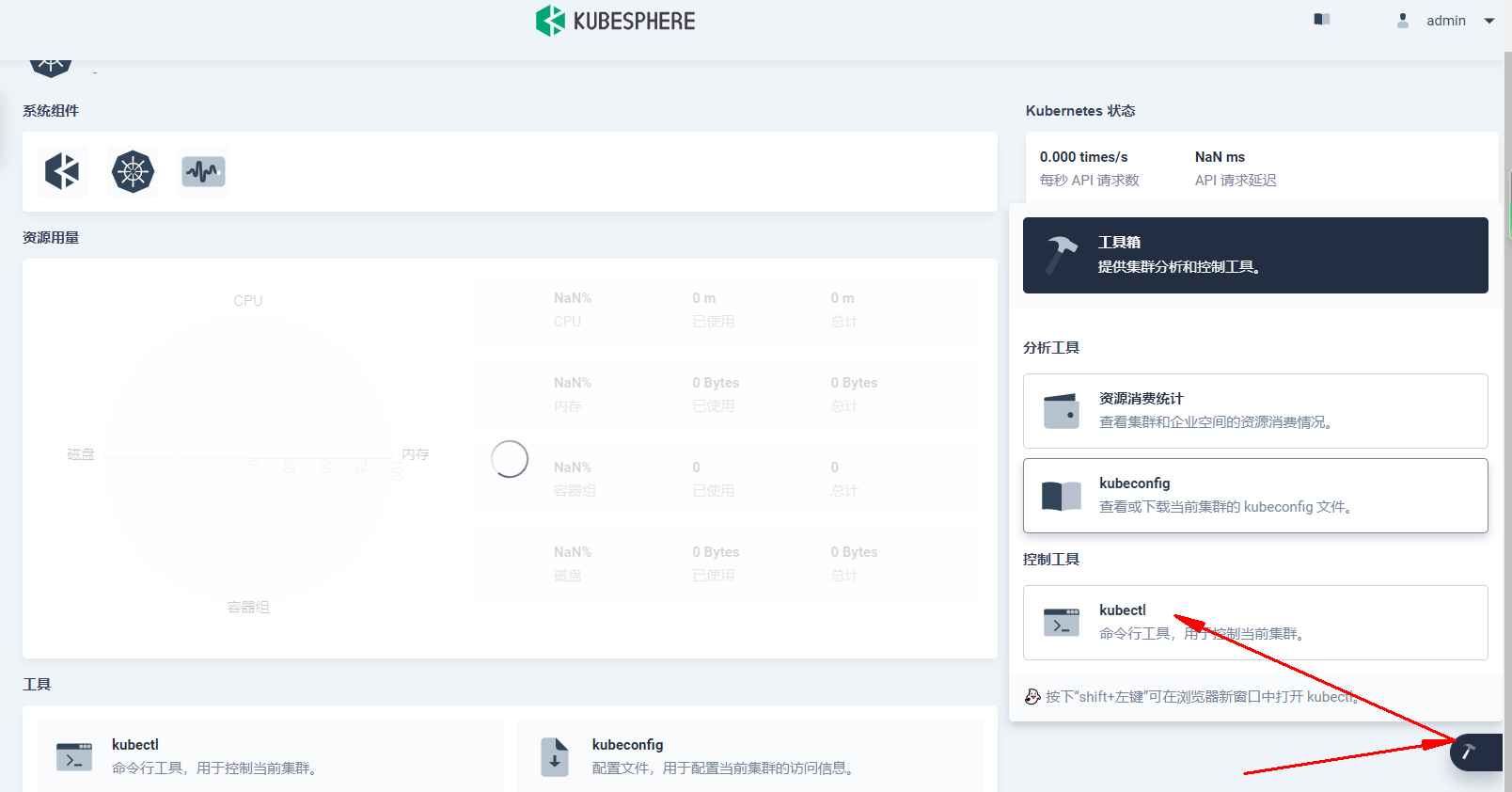

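- Under Custom Resources, click the three dots to the right of ks-installer and select Edit YAML.

- In this configuration file, change enabled from false to true for each component you want to install. When finished, click Update to save the configuration.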

下面,我们要开启这些功能:

| 功能 | 修改项 |

|---|---|

| etcd的监控功能 | etcd: monitoring: true . endpointIps: 172.30.47.67 port: 2379 tlsEnable: true |

| alerting | enabled: true |

| auditing | enabled: true |

| devops | enabled: true |

| events | enabled: true |

| logging | enabled: true |

| networkpolicy | enabled: true |

| ippool | type: calico |

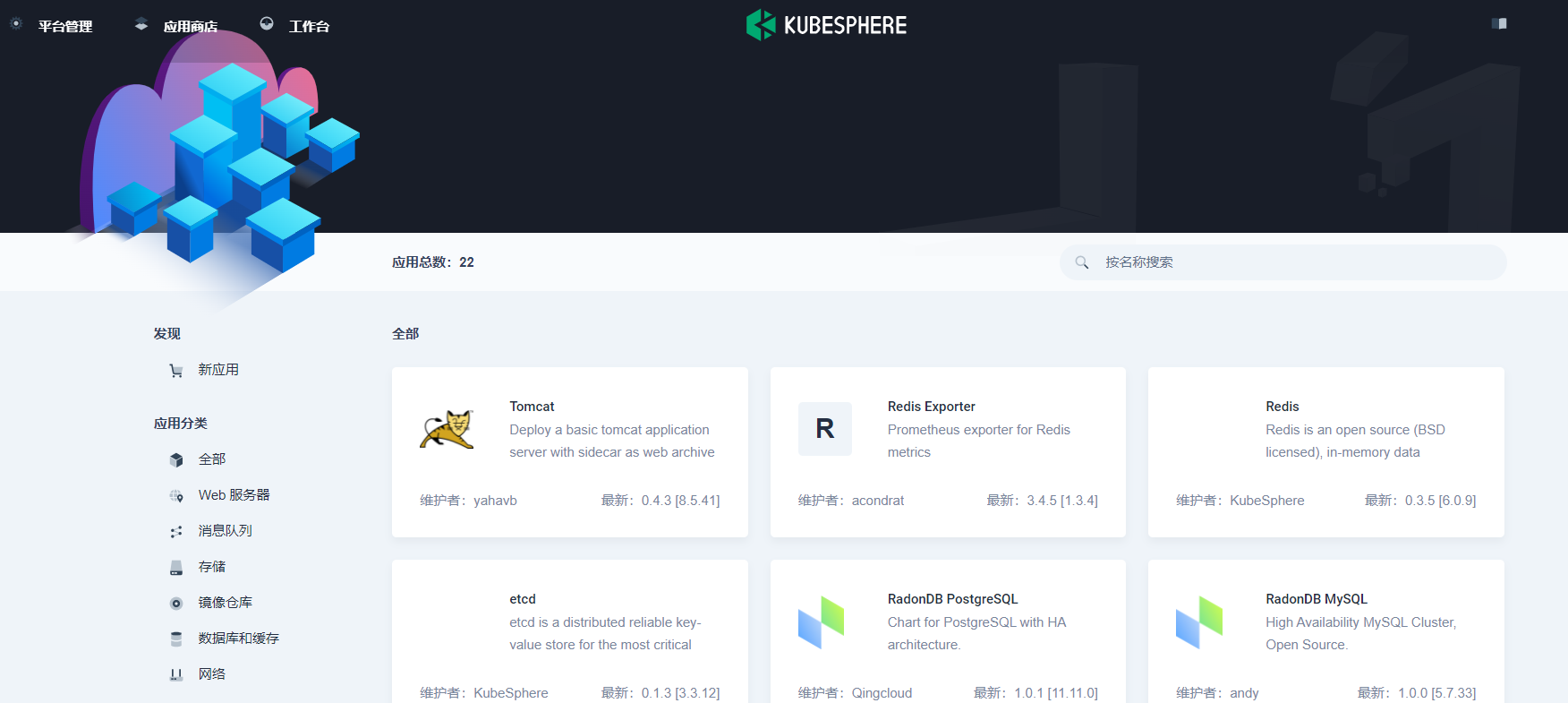

| openpitrix(应用商店) | enabled: true |

| servicemesh | enabled: true |
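As an alternative to editing the YAML in the console, the same switches could be flipped from the command line. The sketch below is an assumption, not part of the original procedure: build_enable_patch is a hypothetical helper, and it only covers components whose enabled flag sits directly under spec (alerting, auditing, devops, events, logging, servicemesh). Nested settings such as network.networkpolicy, network.ippool, openpitrix.store, and etcd.monitoring would still need to be edited separately.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a JSON merge patch that sets spec.<name>.enabled
# to true for every component name passed as an argument.
build_enable_patch() {
  local body="" name
  for name in "$@"; do
    body="${body:+$body,}\"$name\":{\"enabled\":true}"
  done
  printf '{"spec":{%s}}\n' "$body"
}

# Print the patch so it can be reviewed before it is applied.
build_enable_patch alerting auditing devops events logging servicemesh

# To apply it and follow the installer (needs cluster access, so left commented
# out; assumes the installer Deployment is named ks-installer):
# kubectl -n kubesphere-system patch clusterconfiguration ks-installer \
#   --type merge -p "$(build_enable_patch alerting auditing devops events logging servicemesh)"
# kubectl -n kubesphere-system logs -f deploy/ks-installer
```

Printing the patch first keeps the change reviewable; the installer is expected to pick up the modified ClusterConfiguration and install the newly enabled components.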

The configuration after these changes:


apiVersion: installer.kubesphere.io/v1alpha1
kind: ClusterConfiguration
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: >
      {"apiVersion":"installer.kubesphere.io/v1alpha1","kind":"ClusterConfiguration","metadata":{"annotations":{},"labels":{"version":"v3.2.1"},"name":"ks-installer","namespace":"kubesphere-system"},"spec":{"alerting":{"enabled":false},"auditing":{"enabled":false},"authentication":{"jwtSecret":""},"common":{"core":{"console":{"enableMultiLogin":true,"port":30880,"type":"NodePort"}},"es":{"basicAuth":{"enabled":false,"password":"","username":""},"elkPrefix":"logstash","externalElasticsearchHost":"","externalElasticsearchPort":"","logMaxAge":7},"gpu":{"kinds":[{"default":true,"resourceName":"nvidia.com/gpu","resourceType":"GPU"}]},"minio":{"volumeSize":"20Gi"},"monitoring":{"GPUMonitoring":{"enabled":false},"endpoint":"http://prometheus-operated.kubesphere-monitoring-system.svc:9090"},"openldap":{"enabled":false,"volumeSize":"2Gi"},"redis":{"enabled":false,"volumeSize":"2Gi"}},"devops":{"enabled":false,"jenkinsJavaOpts_MaxRAM":"2g","jenkinsJavaOpts_Xms":"512m","jenkinsJavaOpts_Xmx":"512m","jenkinsMemoryLim":"2Gi","jenkinsMemoryReq":"1500Mi","jenkinsVolumeSize":"8Gi"},"etcd":{"endpointIps":"172.30.47.67","monitoring":false,"port":2379,"tlsEnable":true},"events":{"enabled":false},"kubeedge":{"cloudCore":{"cloudHub":{"advertiseAddress":[""],"nodeLimit":"100"},"cloudhubHttpsPort":"10002","cloudhubPort":"10000","cloudhubQuicPort":"10001","cloudstreamPort":"10003","nodeSelector":{"node-role.kubernetes.io/worker":""},"service":{"cloudhubHttpsNodePort":"30002","cloudhubNodePort":"30000","cloudhubQuicNodePort":"30001","cloudstreamNodePort":"30003","tunnelNodePort":"30004"},"tolerations":[],"tunnelPort":"10004"},"edgeWatcher":{"edgeWatcherAgent":{"nodeSelector":{"node-role.kubernetes.io/worker":""},"tolerations":[]},"nodeSelector":{"node-role.kubernetes.io/worker":""},"tolerations":[]},"enabled":false},"logging":{"containerruntime":"docker","enabled":false,"logsidecar":{"enabled":true,"replicas":2}},"metrics_server":{"enabled":false},"monitoring":{"gpu":{"nvidia_dcgm_exporter":{"enabled":false}},"storageClass":""},"multicluster":{"clusterRole":"none"},"network":{"ippool":{"type":"none"},"networkpolicy":{"enabled":false},"topology":{"type":"none"}},"openpitrix":{"store":{"enabled":false}},"persistence":{"storageClass":""},"servicemesh":{"enabled":false}}}
  labels:
    version: v3.2.1
  name: ks-installer
  namespace: kubesphere-system
spec:
  alerting:
    enabled: true
  auditing:
    enabled: true
  authentication:
    jwtSecret: ''
  common:
    core:
      console:
        enableMultiLogin: true
        port: 30880
        type: NodePort
    es:
      basicAuth:
        enabled: false
        password: ''
        username: ''
      elkPrefix: logstash
      externalElasticsearchHost: ''
      externalElasticsearchPort: ''
      logMaxAge: 7
    gpu:
      kinds:
        - default: true
          resourceName: nvidia.com/gpu
          resourceType: GPU
    minio:
      volumeSize: 20Gi
    monitoring:
      GPUMonitoring:
        enabled: false
      endpoint: 'http://prometheus-operated.kubesphere-monitoring-system.svc:9090'
    openldap:
      enabled: false
      volumeSize: 2Gi
    redis:
      enabled: false
      volumeSize: 2Gi
  devops:
    enabled: true
    jenkinsJavaOpts_MaxRAM: 2g
    jenkinsJavaOpts_Xms: 512m
    jenkinsJavaOpts_Xmx: 512m
    jenkinsMemoryLim: 2Gi
    jenkinsMemoryReq: 1500Mi
    jenkinsVolumeSize: 8Gi
  etcd:
    endpointIps: 172.30.47.67
    monitoring: true
    port: 2379
    tlsEnable: true
  events:
    enabled: true
  kubeedge:
    cloudCore:
      cloudHub:
        advertiseAddress:
          - ''
        nodeLimit: '100'
      cloudhubHttpsPort: '10002'
      cloudhubPort: '10000'
      cloudhubQuicPort: '10001'
      cloudstreamPort: '10003'
      nodeSelector:
        node-role.kubernetes.io/worker: ''
      service:
        cloudhubHttpsNodePort: '30002'
        cloudhubNodePort: '30000'
        cloudhubQuicNodePort: '30001'
        cloudstreamNodePort: '30003'
        tunnelNodePort: '30004'
      tolerations: []
      tunnelPort: '10004'
    edgeWatcher:
      edgeWatcherAgent:
        nodeSelector:
          node-role.kubernetes.io/worker: ''
        tolerations: []
      nodeSelector:
        node-role.kubernetes.io/worker: ''
      tolerations: []
    enabled: false
  logging:
    containerruntime: docker
    enabled: true
    logsidecar:
      enabled: true
      replicas: 2
  metrics_server:
    enabled: true
  monitoring:
    gpu:
      nvidia_dcgm_exporter:
        enabled: false
    storageClass: ''
  multicluster:
    clusterRole: none
  network:
    ippool:
      type: calico
    networkpolicy:
      enabled: true
    topology:
      type: none
  openpitrix:
    store:
      enabled: true
  persistence: