![]()

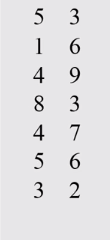

优先根据第一列值排序,如果第一列值相等,根据第二列值排序

package com.zwq

import org.apache.spark.{SparkConf, SparkContext}

object SecondarySortApp extends App {

val conf = new SparkConf().setMaster("local").setAppName("SecondarySortApp")

val sc = new SparkContext(conf)

val array = Array("8 3", "5 6", "5 3", "4 9", "4 7", "3 2", "1 6")

val rdd = sc.parallelize(array)

rdd.map(_.split(" "))

.map(item => (item(0).toInt, item(1).toInt))

.map(item => (new SecondarySortKey(item._1, item._2), s"${item._1} ${item._2}"))

.sortByKey(false)

.foreach(x => println(x._2))

}

class SecondarySortKey(val first:Int, val second: Int) extends Ordered[SecondarySortKey] with Serializable{

override def compare(that: SecondarySortKey): Int = {

if (this.first - that.first != 0){

this.first - that.first

}else {

this.second - that.second

}

}

}

浙公网安备 33010602011771号

浙公网安备 33010602011771号