[AI] MCP in Practice: Just the Code

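Using an MCP server (weather forecasts and alerts) and an MCP client (backed by deepseek-chat), this post walks through building an MCP-based AI chat application.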

1. Install dependencies

pip install --upgrade pip

pip install flask requests

pip install uv

pip install httpx

pip install fastmcp

pip install openai

pip install mcp python-dotenv

2. Source code

.env

BASE_URL=https://api.deepseek.com

# Replace with whichever model you use; it must support tool (function) calling
MODEL=deepseek-chat

OPENAI_API_KEY=sk-xxx
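The three variables above are all the client needs. As a minimal sketch of reading and validating them in one place (the `Settings` class and `load_settings` helper are hypothetical names, not part of client.py, and the fallback values are simply the ones shown in this .env file):

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    """Hypothetical container for the values defined in .env."""
    base_url: str
    model: str
    api_key: str


def load_settings(env=None) -> Settings:
    """Read the settings from a mapping (defaults to os.environ)."""
    env = os.environ if env is None else env
    api_key = env.get("OPENAI_API_KEY", "")
    if not api_key:
        # Fail early instead of sending unauthenticated requests
        raise ValueError("OPENAI_API_KEY is not set")
    return Settings(
        # Assumed fallbacks: the values used in this post's .env
        base_url=env.get("BASE_URL", "https://api.deepseek.com"),
        model=env.get("MODEL", "deepseek-chat"),
        api_key=api_key,
    )
```

Passing a plain dict instead of `os.environ` makes the helper easy to check without touching the real environment.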

client.py

    import asyncio
    import json
    import os
    import sys
    from contextlib import AsyncExitStack
    from typing import Optional

    from dotenv import load_dotenv
    from mcp import ClientSession, StdioServerParameters
    from mcp.client.stdio import stdio_client
    from openai import OpenAI

    # Load the .env file so the API key stays out of the source code
    load_dotenv()


    class MCPClient:
        def __init__(self):
            """Initialize the MCP client."""
            self.exit_stack = AsyncExitStack()
            self.openai_api_key = os.getenv("OPENAI_API_KEY")  # API key
            self.base_url = os.getenv("BASE_URL")  # base URL
            self.model = os.getenv("MODEL")  # model name
            if not self.openai_api_key:
                raise ValueError("❌ OpenAI API key not found; set OPENAI_API_KEY in the .env file")
            # Create the OpenAI-compatible client
            self.client = OpenAI(api_key=self.openai_api_key, base_url=self.base_url)
            self.session: Optional[ClientSession] = None

        async def connect_to_server(self, server_script_path: str):
            """Connect to the MCP server and list the available tools."""
            is_python = server_script_path.endswith('.py')
            is_js = server_script_path.endswith('.js')
            if not (is_python or is_js):
                raise ValueError("The server script must be a .py or .js file")

            command = "python" if is_python else "node"
            server_params = StdioServerParameters(
                command=command,
                args=[server_script_path],
                env=None
            )

            # Start the MCP server and set up communication over stdio
            stdio_transport = await self.exit_stack.enter_async_context(stdio_client(server_params))
            self.stdio, self.write = stdio_transport
            self.session = await self.exit_stack.enter_async_context(ClientSession(self.stdio, self.write))
            await self.session.initialize()

            # List the tools exposed by the MCP server
            response = await self.session.list_tools()
            tools = response.tools
            print("\nConnected to the server. Available tools:", [tool.name for tool in tools])

        async def process_query(self, query: str) -> str:
            """Process a query with the model, calling MCP tools as needed (function calling)."""
            messages = [{"role": "user", "content": query}]

            response = await self.session.list_tools()
            # The OpenAI tools format expects the JSON Schema under "parameters"
            available_tools = [{
                "type": "function",
                "function": {
                    "name": tool.name,
                    "description": tool.description,
                    "parameters": tool.inputSchema
                }
            } for tool in response.tools]
            # print(available_tools)

            response = self.client.chat.completions.create(
                model=self.model,
                messages=messages,
                tools=available_tools
            )

            # Handle the model's reply
            content = response.choices[0]
            if content.finish_reason == "tool_calls":
                # The model wants a tool; only the first tool call is handled here
                tool_call = content.message.tool_calls[0]
                tool_name = tool_call.function.name
                tool_args = json.loads(tool_call.function.arguments)

                # Run the tool through the MCP session
                result = await self.session.call_tool(tool_name, tool_args)
                print(f"\n\n[Calling tool {tool_name} with args {tool_args}]\n\n")

                # Append both the model's tool-call message and the tool result to messages
                messages.append(content.message.model_dump())
                messages.append({
                    "role": "tool",
                    "content": result.content[0].text,
                    "tool_call_id": tool_call.id,
                })

                # Send the result back to the model to produce the final answer
                response = self.client.chat.completions.create(
                    model=self.model,
                    messages=messages,
                )
                return response.choices[0].message.content

            return content.message.content

        async def chat_loop(self):
            """Run the interactive chat loop."""
            print("\n🤖 MCP client started! Type 'quit' to exit")
            while True:
                try:
                    query = input("\nYou: ").strip()
                    if query.lower() == 'quit':
                        break
                    response = await self.process_query(query)  # send the user input to the model
                    print(f"\n🤖 AI: {response}")
                except Exception as e:
                    print(f"\n⚠️ Error: {str(e)}")

        async def cleanup(self):
            """Release resources."""
            await self.exit_stack.aclose()


    async def main():
        if len(sys.argv) < 2:
            print("Usage: python client.py <path_to_server_script>")
            sys.exit(1)
        client = MCPClient()
        try:
            await client.connect_to_server(sys.argv[1])
            await client.chat_loop()
        finally:
            await client.cleanup()


    if __name__ == "__main__":
        asyncio.run(main())
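The OpenAI-style `tools` argument expects each tool as a `function` entry whose JSON Schema sits under the `parameters` key, while MCP tool descriptors carry the same schema in an `inputSchema` attribute. A minimal sketch of that mapping, isolated from the network code so it can be checked on its own (`ToolInfo` is a hypothetical stand-in for the objects returned by `session.list_tools()`, and `to_openai_tools` is an illustrative helper name):

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class ToolInfo:
    """Hypothetical stand-in for an MCP tool descriptor."""
    name: str
    description: str
    inputSchema: dict[str, Any] = field(default_factory=dict)


def to_openai_tools(tools) -> list[dict[str, Any]]:
    """Map MCP-style tool descriptors to the OpenAI function-calling format."""
    return [
        {
            "type": "function",
            "function": {
                "name": tool.name,
                "description": tool.description,
                # The schema is passed through unchanged, only the key differs
                "parameters": tool.inputSchema,
            },
        }
        for tool in tools
    ]


example = to_openai_tools([
    ToolInfo(
        name="get_alerts",
        description="Get weather alerts for a US state.",
        inputSchema={
            "type": "object",
            "properties": {"state": {"type": "string"}},
            "required": ["state"],
        },
    )
])
```

Keeping the conversion in a small pure function makes it easy to print or unit-test the payload before it is sent to the model.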

server.py

    import sys
    from typing import Any

    import httpx
    from mcp.server.fastmcp import FastMCP

    # Initialize the MCP server
    mcp = FastMCP("WeatherServer")

    # Constants
    NWS_API_BASE = "https://api.weather.gov"
    USER_AGENT = "weather-app/1.0"


    async def make_nws_request(url: str) -> dict[str, Any] | None:
        """Send a request to the NWS API with basic error handling."""
        headers = {
            "User-Agent": USER_AGENT,
            "Accept": "application/geo+json"
        }
        async with httpx.AsyncClient() as client:
            try:
                response = await client.get(url, headers=headers, timeout=30.0)
                response.raise_for_status()
                return response.json()
            except Exception:
                return None


    def format_alert(feature: dict) -> str:
        """Format a weather alert as a readable string."""
        props = feature["properties"]
        return f"""
    Event: {props.get('event', 'Unknown')}
    Area: {props.get('areaDesc', 'Unknown')}
    Severity: {props.get('severity', 'Unknown')}
    Description: {props.get('description', 'No description')}
    Instructions: {props.get('instruction', 'No specific instructions')}
    """


    @mcp.tool()
    async def get_alerts(state: str) -> str:
        """Get weather alerts for a US state.

        Args:
            state: Two-letter state code (e.g. CA, NY)
        """
        url = f"{NWS_API_BASE}/alerts/active/area/{state}"
        data = await make_nws_request(url)

        if not data or "features" not in data:
            return "Unable to fetch alerts, or no alert data was found."

        if not data["features"]:
            return "There are no active weather alerts for this state."

        alerts = [format_alert(feature) for feature in data["features"]]
        return "\n---\n".join(alerts)


    @mcp.tool()
    async def get_forecast(latitude: float, longitude: float) -> str:
        """Get the weather forecast for a location.

        Args:
            latitude: Latitude
            longitude: Longitude
        """
        # Look up the forecast endpoint for this point
        points_url = f"{NWS_API_BASE}/points/{latitude},{longitude}"
        points_data = await make_nws_request(points_url)

        if not points_data:
            return "Unable to fetch forecast data for this location."

        # Fetch the forecast from the URL returned above
        forecast_url = points_data["properties"]["forecast"]
        forecast_data = await make_nws_request(forecast_url)

        if not forecast_data:
            return "Unable to fetch the detailed forecast."

        # Format the forecast
        periods = forecast_data["properties"]["periods"]
        forecasts = []
        for period in periods[:5]:  # only the next 5 periods
            forecast = f"""
    {period['name']}:
    Temperature: {period['temperature']}°{period['temperatureUnit']}
    Wind: {period['windSpeed']} {period['windDirection']}
    Forecast: {period['detailedForecast']}
    """
            forecasts.append(forecast)

        # Log to stderr: with the stdio transport, stdout carries protocol messages
        print(forecasts, file=sys.stderr)
        return "\n---\n".join(forecasts)


    if __name__ == "__main__":
        # Run the MCP server over standard I/O
        mcp.run(transport='stdio')
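The `get_alerts` tool interpolates the `state` argument straight into the request URL, and a model may supply it as "ca" or even "California". One possible guard, shown as a sketch only (`normalize_state_code` is a hypothetical helper that server.py does not contain), is to normalize and validate the value before building the URL:

```python
def normalize_state_code(state: str) -> str:
    """Return an upper-case two-letter code, or raise ValueError.

    Only the shape of the code is checked here; whether the code is a real
    US state is left to the NWS API.
    """
    code = state.strip().upper()
    if len(code) != 2 or not code.isascii() or not code.isalpha():
        raise ValueError(f"Expected a two-letter state code, got {state!r}")
    return code


# Example: build the alerts path only from a validated code
path = f"/alerts/active/area/{normalize_state_code(' ca ')}"
```

A tool could catch the `ValueError` and return its message as the tool result, which gives the model a chance to retry with a corrected argument.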

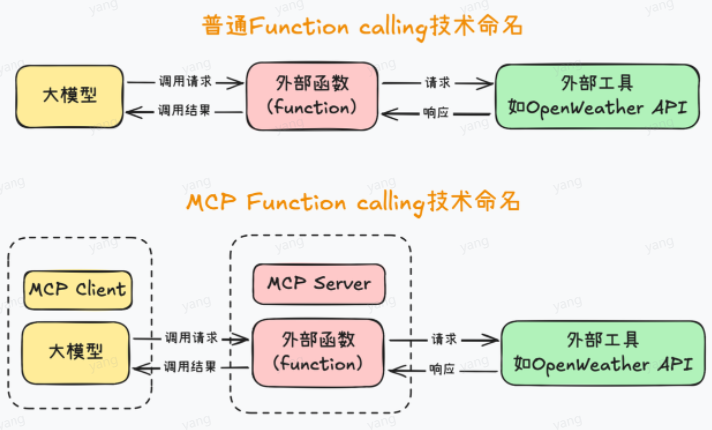

3. How it works, in brief

See the diagram below comparing function calling with MCP:

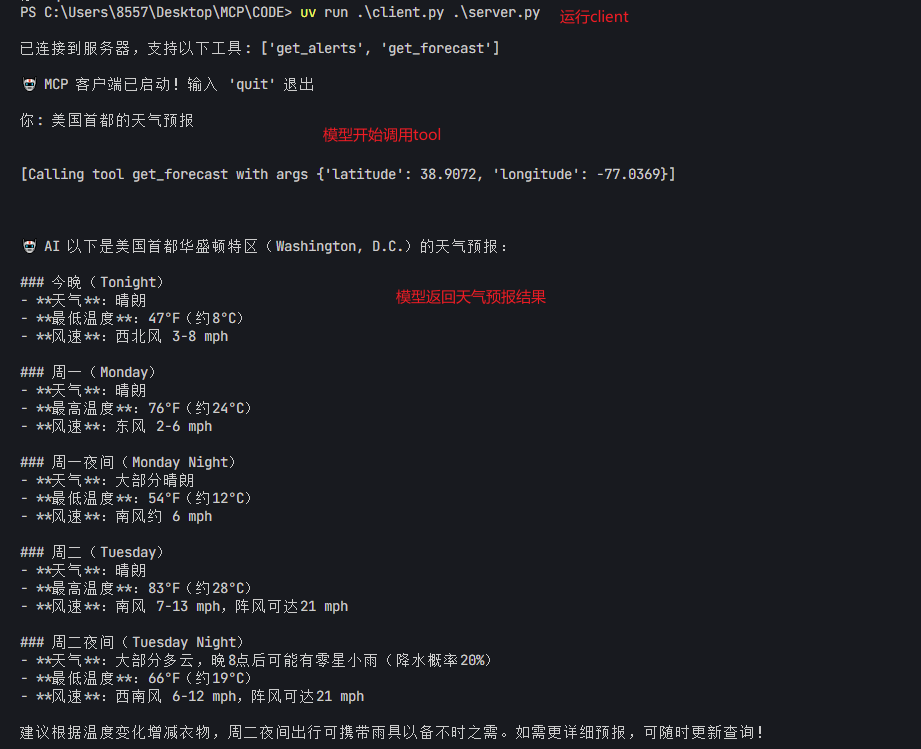
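The round trip that client.py performs can be sketched without any network access: the model first replies with a tool call, the client runs the tool and appends the result as a `tool` message, and the model is then asked again for the final answer. Everything below is a simulation under stated assumptions: `fake_model` and the `TOOLS` registry are hypothetical stand-ins for the chat API and the MCP server, and the tool-call dicts are simplified compared with the real API objects.

```python
import json

# Hypothetical local registry standing in for the tools of an MCP server
TOOLS = {"get_alerts": lambda state: f"No active alerts for {state}."}


def fake_model(messages):
    """Stand-in for the chat model: request a tool first, then answer."""
    last = messages[-1]
    if last["role"] == "user":
        return {
            "role": "assistant",
            "content": None,
            "tool_calls": [{
                "id": "call_1",
                "name": "get_alerts",
                "arguments": json.dumps({"state": "CA"}),
            }],
        }
    # A tool result is available, so produce the final answer
    return {"role": "assistant", "content": f"Summary: {last['content']}"}


def run_turn(query):
    """One user turn: model -> tool call(s) -> tool result(s) -> model."""
    messages = [{"role": "user", "content": query}]
    reply = fake_model(messages)
    if reply.get("tool_calls"):
        messages.append(reply)  # keep the assistant's tool-call message
        for call in reply["tool_calls"]:
            args = json.loads(call["arguments"])
            result = TOOLS[call["name"]](**args)
            messages.append({
                "role": "tool",
                "tool_call_id": call["id"],
                "content": result,
            })
        reply = fake_model(messages)  # second round with the tool output
    return reply["content"], messages


answer, history = run_turn("Any alerts in California?")
```

Unlike client.py, which handles only the first tool call, this sketch loops over every tool call in the reply; each call gets its own `tool` message keyed by `tool_call_id`.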

4. Results

