2. Installing Spark and Python Practice

I. Installing Spark

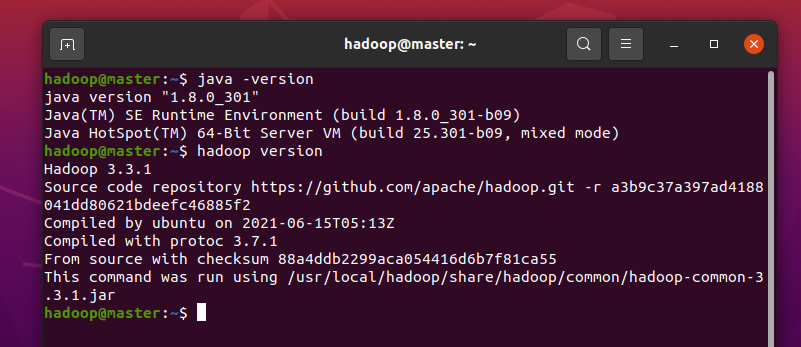

1. Check the base environment: Hadoop and JDK

java -version # JDK

hadoop version # Hadoop
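The same check can be scripted. The following is a minimal sketch, assuming both tools are expected on `PATH`; the helper name `missing_tools` is hypothetical and not part of Hadoop or Spark.

```python
import shutil


def missing_tools(names, which=shutil.which):
    """Return the tools from `names` that cannot be found on PATH."""
    return [name for name in names if which(name) is None]


if __name__ == "__main__":
    missing = missing_tools(["java", "hadoop"])
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("java and hadoop are both on PATH")
```

The `which` parameter exists only so the lookup can be replaced in a test; in normal use the default `shutil.which` is enough.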

2. Download Spark

https://archive.apache.org/dist/spark/spark-3.2.0/spark-3.2.0-bin-without-hadoop.tgz

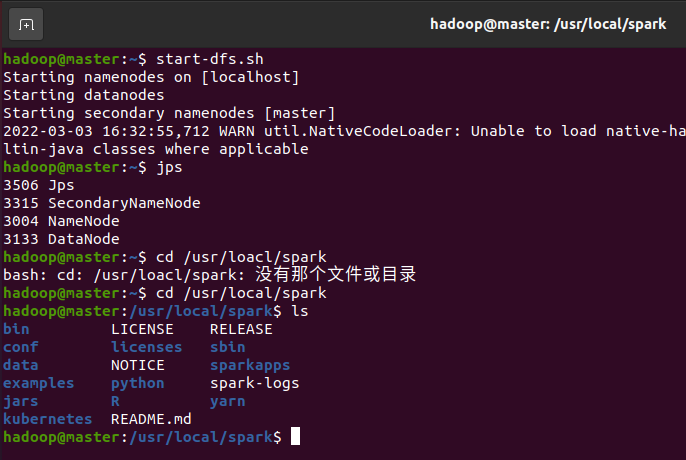
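If you prefer to fetch the archive from a script, a sketch like the one below may help. It assumes other releases follow the same URL layout as the link above, which is an assumption and not checked here. `spark_archive_url` and `download` are hypothetical helper names.

```python
import urllib.request

ARCHIVE_ROOT = "https://archive.apache.org/dist/spark"


def spark_archive_url(version, package="bin-without-hadoop"):
    """Build the archive URL for a Spark release (layout assumed from the 3.2.0 link)."""
    name = f"spark-{version}-{package}.tgz"
    return f"{ARCHIVE_ROOT}/spark-{version}/{name}"


def download(url, target):
    """Download `url` to the local file `target` (needs network access)."""
    urllib.request.urlretrieve(url, target)


if __name__ == "__main__":
    # Only prints the URL; call download(url, "spark.tgz") to actually fetch it.
    print(spark_archive_url("3.2.0"))
```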

3. Extract the archive, rename the folder, and set ownership

sudo tar -zxvf ~/VMOS_share_DockerOS/spark-3.2.0-bin-without-hadoop.tgz -C /usr/local/ # extract

sudo mv /usr/local/spark-3.2.0-bin-without-hadoop /usr/local/spark # rename

sudo chown -R hadoop /usr/local/spark # make the hadoop user the owner

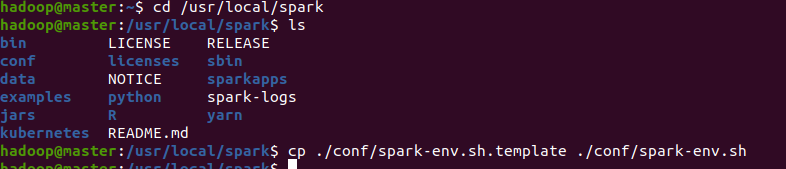
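After these three commands it can be worth confirming that the folder looks like a Spark installation. The sketch below assumes the standard layout of a Spark binary distribution (`bin/pyspark`, `bin/spark-submit`, `conf/spark-env.sh.template`); `check_spark_layout` is a hypothetical helper name.

```python
import os

EXPECTED = ["bin/pyspark", "bin/spark-submit", "conf/spark-env.sh.template"]


def check_spark_layout(spark_home="/usr/local/spark"):
    """Return the expected relative paths that are missing under spark_home."""
    return [p for p in EXPECTED if not os.path.exists(os.path.join(spark_home, p))]


if __name__ == "__main__":
    home = "/usr/local/spark"
    print("missing:", check_spark_layout(home))
    # After the chown, the hadoop user should be able to write here.
    print("writable by current user:", os.access(home, os.W_OK))
```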

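4. Configuration file

Configure Spark's classpath:

cd /usr/local/spark

cp ./conf/spark-env.sh.template ./conf/spark-env.sh # copy the configuration template

Add the following line to ./conf/spark-env.sh so that this Hadoop-free Spark build can find the Hadoop jars: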

export SPARK_DIST_CLASSPATH=$(/usr/local/hadoop/bin/hadoop classpath)

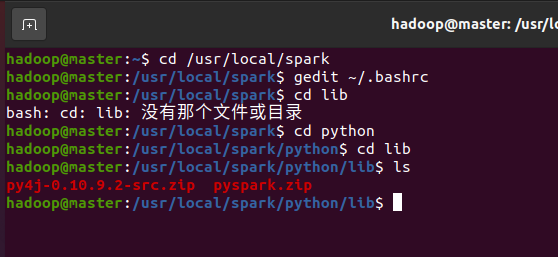

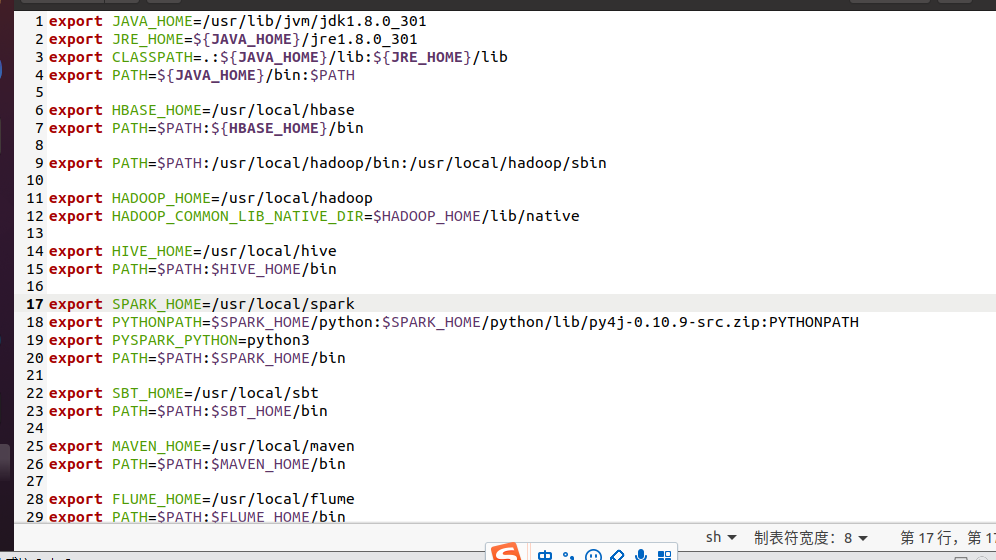
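Editing the file by hand works fine. If the step is repeated often, the line can be appended only when it is missing. This is a sketch, not part of Spark; `ensure_line` is a hypothetical helper, and the export line is copied from above.

```python
from pathlib import Path

EXPORT_LINE = "export SPARK_DIST_CLASSPATH=$(/usr/local/hadoop/bin/hadoop classpath)"


def ensure_line(path, line=EXPORT_LINE):
    """Append `line` to the file at `path` unless it is already present.

    Returns True if the file was modified, False otherwise.
    """
    path = Path(path)
    text = path.read_text() if path.exists() else ""
    if line in text.splitlines():
        return False
    if text and not text.endswith("\n"):
        text += "\n"  # keep the new line from being glued to the last one
    path.write_text(text + line + "\n")
    return True
```

For example, `ensure_line("/usr/local/spark/conf/spark-env.sh")` can be run any number of times without duplicating the line.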

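5. Environment variables

Open the file with gedit ~/.bashrc and insert the following lines:

export SPARK_HOME=/usr/local/spark

export PYTHONPATH=$SPARK_HOME/python:$SPARK_HOME/python/lib/py4j-0.10.9-src.zip:$PYTHONPATH

export PYSPARK_PYTHON=python3

export PATH=$PATH:$SPARK_HOME/bin

The py4j file name must match the archive actually present in $SPARK_HOME/python/lib (check with ls $SPARK_HOME/python/lib); adjust the version in the PYTHONPATH line if it differs.

Then reload the file: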

source ~/.bashrc

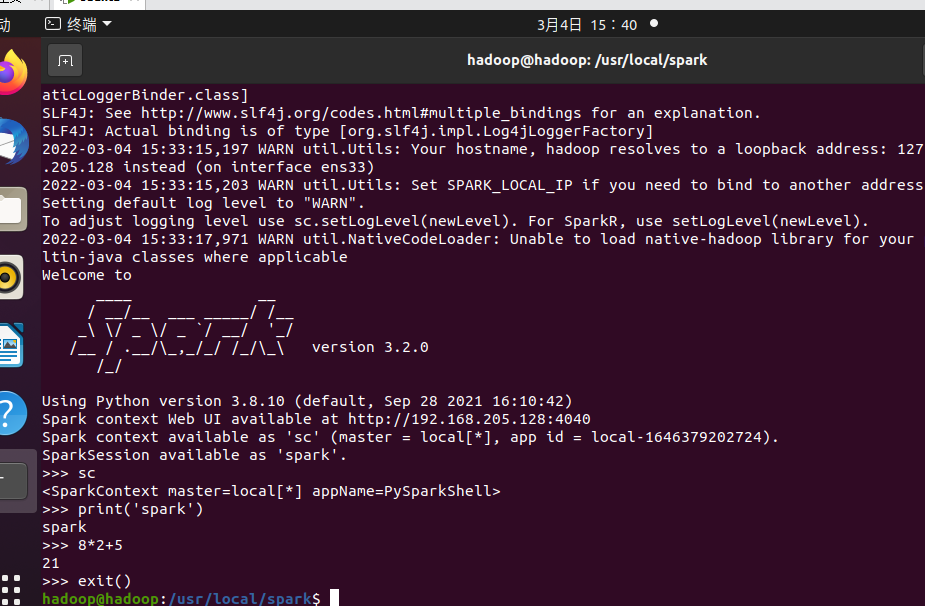
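A frequent cause of import errors at this point is a py4j file name in `PYTHONPATH` that does not match the installed one. The sketch below looks the file up instead of hard-coding it. It assumes the zip is in `python/lib` under `SPARK_HOME`, as in the export line above; `find_py4j_zip` is a hypothetical helper name.

```python
import glob
import os


def find_py4j_zip(spark_home):
    """Return the path of the py4j source zip under spark_home/python/lib, or None."""
    pattern = os.path.join(spark_home, "python", "lib", "py4j-*-src.zip")
    matches = sorted(glob.glob(pattern))
    return matches[0] if matches else None


if __name__ == "__main__":
    home = os.environ.get("SPARK_HOME")
    if home is None:
        print("SPARK_HOME is not set; did you run 'source ~/.bashrc'?")
    else:
        print("py4j archive:", find_py4j_zip(home))
```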

6. Test-run Python code
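One possible smoke test is a tiny local job started from `python3`. This is only a sketch, assuming the `PYTHONPATH` set above makes `pyspark` importable and that Java is available. `pyspark_available` and `smoke_test` are hypothetical helper names.

```python
import importlib.util


def pyspark_available():
    """Return True if the pyspark package can be found on the import path."""
    return importlib.util.find_spec("pyspark") is not None


def smoke_test():
    """Run a tiny local Spark job and return the sum of the integers 1..100."""
    # Imported lazily so that pyspark_available() works even without Spark.
    from pyspark import SparkContext

    sc = SparkContext("local[1]", "smoke-test")
    try:
        return sc.parallelize(range(1, 101)).sum()
    finally:
        sc.stop()  # always release the local Spark context
```

If `pyspark_available()` returns `True`, calling `smoke_test()` should return 5050 on a working installation.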

II. Python Programming Exercise: Word Frequency Count for English Text

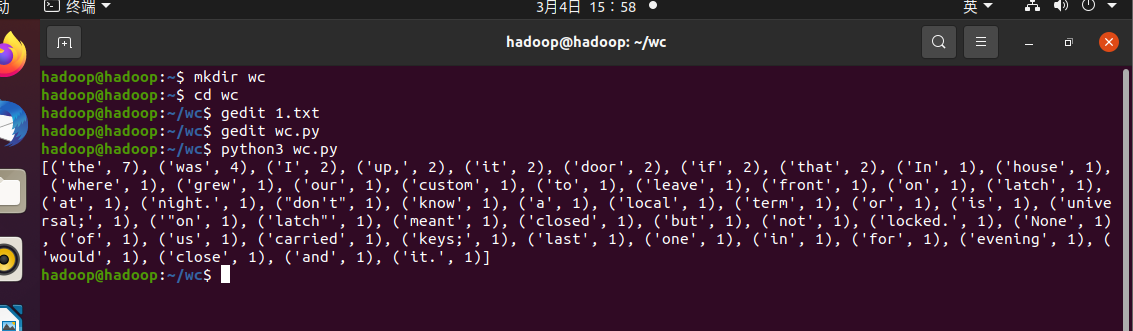

mkdir wc # create the folder

cd wc

gedit 1.txt

Enter the following English text (1.txt):

In the house where I grew up, it was our custom to leave the front door on the latch at night. I don't know if that was a local term or if it is universal; "on the latch" meant the door was closed but not locked. None of us carried keys; the last one in for the evening would close up, and that was it.

Enter the code (wc.py):

gedit wc.py

path = '/home/hadoop/wc/1.txt'
with open(path) as f:
    text = f.read()
words = text.split()
wc = {}
for word in words:
    wc[word] = wc.get(word, 0) + 1
wclist = list(wc.items())
wclist.sort(key=lambda x: x[1], reverse=True)
print(wclist)

Run it:

python3 wc.py

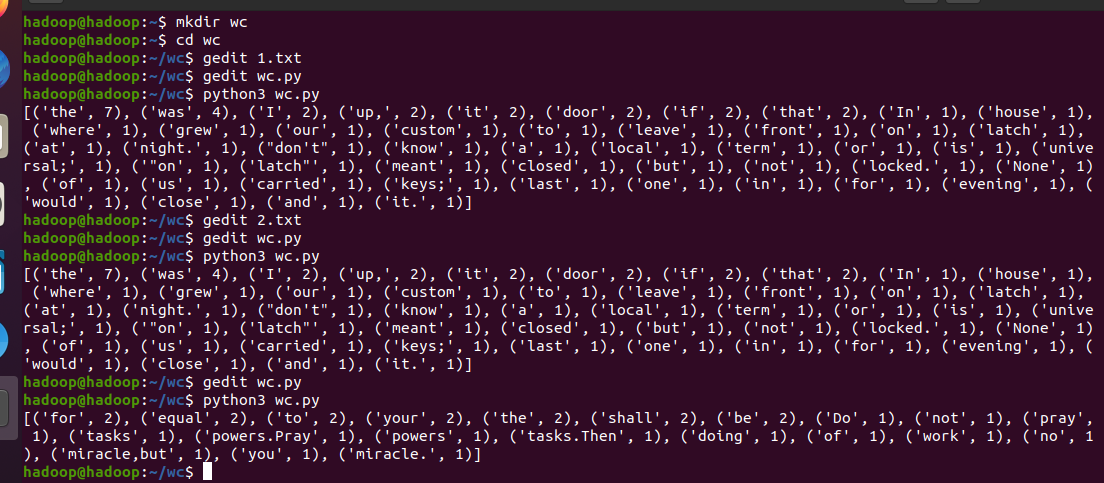
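The dictionary-and-sort pattern in wc.py can also be written with `collections.Counter` from the standard library. The sketch below is an alternative and not the script used for the screenshot. It keeps the same whitespace splitting, and takes the file path from the command line so the script need not be edited for each input file. `count_words` is a hypothetical helper name.

```python
import sys
from collections import Counter


def count_words(text):
    """Split on whitespace and return (word, count) pairs, most frequent first."""
    return Counter(text.split()).most_common()


if __name__ == "__main__" and len(sys.argv) > 1:
    # The first command-line argument is the text file to analyse.
    with open(sys.argv[1]) as f:
        print(count_words(f.read()))
```

Saved as wc.py, it would be run as `python3 wc.py /home/hadoop/wc/1.txt`.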

Enter the following English text (2.txt):

gedit 2.txt

Do not pray for tasks equal to your powers.Pray for powers equal to your tasks.Then the doing of work shall be no miracle,but you shall be the miracle.

Change the path in wc.py to point to 2.txt:

path = '/home/hadoop/wc/2.txt'
with open(path) as f:
    text = f.read()
words = text.split()
wc = {}
for word in words:
    wc[word] = wc.get(word, 0) + 1
wclist = list(wc.items())
wclist.sort(key=lambda x: x[1], reverse=True)
print(wclist)
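The text in 2.txt has no space after some punctuation marks, so `text.split()` keeps tokens such as `powers.Pray` together and counts them as single words. A regular-expression tokenizer avoids this. The sketch below treats runs of letters and apostrophes as words and lowercases everything. That is one reasonable choice and not the only one. `tokenize` is a hypothetical helper name.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and return runs of letters/apostrophes as words."""
    return re.findall(r"[a-z']+", text.lower())


sample = "Do not pray for tasks equal to your powers.Pray for powers equal to your tasks."

# Whitespace splitting leaves "powers.Pray" glued together ...
print("powers.Pray" in sample.split())
# ... while the regex tokenizer separates and normalises the words.
print(Counter(tokenize(sample)).most_common(3))
```

Replacing `text.split()` with `tokenize(text)` in wc.py would apply the same cleaning to the whole file.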

