In Depth: Deploying ELK with Docker for Log Tracing

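I. Prerequisites

Environment requirements:
Operating system: Linux (CentOS, Ubuntu, or Debian all work; this article uses Ubuntu).
Docker version: 20.10+ (make sure the Docker service is running).
Memory: at least 2 GB for Elasticsearch (giving 1 GB to the Elasticsearch JVM is suggested). The Kibana and Logstash containers started later are capped at 1.5 GB and 1 GB, so a host running all three needs correspondingly more.

Check the Docker status:

# Make sure Docker is running
sudo systemctl status docker
# If it is not running, start it and enable it at boot
sudo systemctl start docker && sudo systemctl enable docker

Pull the ELK images:

docker pull elasticsearch:8.19.2
docker pull kibana:8.19.2
docker pull logstash:8.19.2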
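The version requirement above can be checked mechanically. The sketch below is one way to do it, assuming GNU `sort -V` is available (it is on the distributions listed); `version_ge` and `docker_version_ok` are hypothetical helper names, not part of Docker.

```shell
# version_ge A B: succeeds when dotted version A is >= version B.
# Relies on GNU sort -V (version sort).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# docker_version_ok VERSION: checks VERSION against the 20.10 minimum from the
# prerequisites. Taking the version as an argument keeps the check usable
# without a running Docker daemon.
docker_version_ok() {
  version_ge "$1" "20.10"
}
```

A possible use on the target host: `docker_version_ok "$(docker version --format '{{.Server.Version}}')" && echo "Docker is new enough"`.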

Installing elasticsearch:8.19.2

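Step 1: Create a dedicated network (so the ELK stack can communicate)

Create a dedicated Docker network for Elasticsearch, Kibana, and Logstash. Containers on the same user-defined network can reach each other by container name, and the stack stays separate from other containers on the host:

# Create a bridge network named "elk" (Kibana and Logstash will join it later)
docker network create elk
# Verify that the network was created
docker network ls | grep elk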

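Step 2: Plan the directory layout (to keep files organized)

Create the host directories up front. They are mounted into the container to hold the Elasticsearch configuration, data, and certificates (persistent storage, so nothing is lost when the container is removed):

# Create the directories (adjust the path as needed; this article uses /opt/elk)
sudo mkdir -p /opt/elk/elasticsearch/{config,certs,data}
# Recursively set the owner to 1000:1000 (UID 1000 is the elasticsearch user inside the container)
sudo chown -R 1000:1000 /opt/elk/elasticsearch/
# Set permissions (owner: read, write, and traverse; everyone else: read and traverse only)
sudo chmod -R 755 /opt/elk/elasticsearch/
# Verify the directory layout
tree /opt/elk/elasticsearch/

Expected layout:

/opt/elk/elasticsearch/
├── certs    # SSL certificates
├── config   # configuration files (elasticsearch.yml, keystore, etc.)
└── data     # data (persistent)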
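Ownership mistakes on these directories tend to show up later as "permission denied" errors inside the container, so it can help to check them right away. The following is a minimal sketch, assuming GNU `stat`; `owned_by` and `report_layout` are hypothetical helper names.

```shell
# owned_by DIR UID: succeeds when DIR exists and is owned by numeric user id UID.
# Uses GNU stat (-c %u prints the owner's numeric UID).
owned_by() {
  local dir="$1" uid="$2"
  [ -d "$dir" ] && [ "$(stat -c %u "$dir")" = "$uid" ]
}

# report_layout ROOT UID: prints one status line for each of the three
# subdirectories created in this step.
report_layout() {
  local root="$1" uid="$2" sub
  for sub in config certs data; do
    if owned_by "$root/$sub" "$uid"; then
      echo "ok       $root/$sub"
    else
      echo "problem  $root/$sub"
    fi
  done
}
```

For the layout in this article the call would be `report_layout /opt/elk/elasticsearch 1000`.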

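Step 3: Generate SSL certificates (for transport TLS in 8.x)

With security enabled, Elasticsearch 8.x expects encrypted transport between nodes, so generate a CA root certificate and an instance certificate up front. This avoids the usual "certificate missing" and "wrong password" errors.

3.1 Generate a self-signed CA (root certificate)

# Start a temporary container to generate the CA certificate.
# --out:  where the CA file is written
# --pass: CA password (choose your own; this article uses ElkCert@2025).
#         Remember it: it is needed to sign the instance certificate.
docker run -it --rm \
  -v /opt/elk/elasticsearch/certs:/usr/share/elasticsearch/certs \
  elasticsearch:8.19.2 \
  /usr/share/elasticsearch/bin/elasticsearch-certutil ca \
  --out /usr/share/elasticsearch/certs/elastic-ca.p12 \
  --pass "ElkCert@2025"

3.2 Generate the instance certificate (for the Elasticsearch node)

Sign the instance certificate with the CA above, so the resulting file contains both the node identity and the CA root certificate:

# --ca / --ca-pass: the CA from 3.1 and its password
# --out:            where the instance certificate is written
# --pass:           instance certificate password (reusing the CA password keeps things simple)
docker run -it --rm \
  -v /opt/elk/elasticsearch/certs:/usr/share/elasticsearch/certs \
  elasticsearch:8.19.2 \
  /usr/share/elasticsearch/bin/elasticsearch-certutil cert \
  --ca /usr/share/elasticsearch/certs/elastic-ca.p12 \
  --ca-pass "ElkCert@2025" \
  --out /usr/share/elasticsearch/certs/elasticsearch.p12 \
  --pass "ElkCert@2025"

3.3 Fix certificate permissions

Make sure the elasticsearch user inside the container can read the certificates (this avoids "permission denied" errors):

sudo chown -R 1000:1000 /opt/elk/elasticsearch/certs/
# Owner: read and write; everyone else: read only
sudo chmod 644 /opt/elk/elasticsearch/certs/*
# Verify that the certificates were generated
ls -l /opt/elk/elasticsearch/certs/

Expected output:

-rw-r--r-- 1 1000 1000 2688 Oct 6 17:43 elastic-ca.p12
-rw-r--r-- 1 1000 1000 3612 Oct 6 17:44 elasticsearch.p12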

Step 4: Configure elasticsearch.yml (core configuration)

Create and edit the main Elasticsearch configuration file. Absolute paths are used throughout to rule out path resolution problems.

4.1 Create the configuration file

sudo nano /opt/elk/elasticsearch/config/elasticsearch.yml

4.2 Write the full configuration (copy the following)

# ======================== Basic cluster settings ========================
cluster.name: es-app-cluster   # cluster name (your choice; keep it consistent with the value passed in Step 7)
node.name: node-01             # node name (your choice in a single-node setup)
network.host: 0.0.0.0          # listen on all interfaces (allows external access)
discovery.type: single-node    # single-node mode (no cluster discovery)
bootstrap.memory_lock: true    # lock the JVM memory (avoids swapping, improves performance)
# ======================== Security settings ========================
xpack.security.enabled: true   # enable username/password authentication (required)
# ======================== Transport TLS settings ========================
xpack.security.transport.ssl.enabled: true                      # encrypt node-to-node transport
xpack.security.transport.ssl.verification_mode: certificate     # only verify that the certificate is valid (enough for a single node)
xpack.security.transport.ssl.client_authentication: required    # require client authentication
# Absolute certificate paths inside the container (avoids relative path resolution errors)
xpack.security.transport.ssl.keystore.path: /usr/share/elasticsearch/config/certs/elasticsearch.p12
xpack.security.transport.ssl.truststore.path: /usr/share/elasticsearch/config/certs/elasticsearch.p12

4.3 Fix the configuration file permissions

sudo chown 1000:1000 /opt/elk/elasticsearch/config/elasticsearch.yml
sudo chmod 644 /opt/elk/elasticsearch/config/elasticsearch.yml
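A typo in a setting name is easy to miss when pasting a long configuration. The sketch below is a plain text check that reports which of the expected keys have no line in the file. It is not a YAML parser and only understands the flat dotted-key style used in this step; `missing_keys` is a hypothetical helper name.

```shell
# missing_keys FILE KEY...: prints every KEY for which FILE has no line that
# starts with "KEY:" (leading whitespace ignored, commented-out lines skipped
# because they start with "#").
missing_keys() {
  local file="$1" key
  shift
  for key in "$@"; do
    if ! sed 's/^[[:space:]]*//' "$file" |
        awk -v k="$key:" 'index($0, k) == 1 { found = 1 } END { exit !found }'; then
      echo "$key"
    fi
  done
}
```

Example call: `missing_keys /opt/elk/elasticsearch/config/elasticsearch.yml cluster.name discovery.type xpack.security.enabled xpack.security.transport.ssl.keystore.path`. No output means every listed key is present.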

Step 5: Configure the keystore (for sensitive passwords)

The certificate passwords are secure settings: they do not belong in elasticsearch.yml and are stored in the Elasticsearch keystore instead, so a leaked configuration file does not leak the passwords.

5.1 Create the keystore and add the passwords

docker run -it --rm \
  -v /opt/elk/elasticsearch/config:/usr/share/elasticsearch/config \
  elasticsearch:8.19.2 \
  /bin/bash -c "
    # Create the keystore (echo 'y' answers the confirmation prompt if one appears)
    echo 'y' | /usr/share/elasticsearch/bin/elasticsearch-keystore create;
    # Add the certificate password (same as the instance certificate password: ElkCert@2025)
    echo 'ElkCert@2025' | /usr/share/elasticsearch/bin/elasticsearch-keystore add -x xpack.security.transport.ssl.keystore.secure_password;
    echo 'ElkCert@2025' | /usr/share/elasticsearch/bin/elasticsearch-keystore add -x xpack.security.transport.ssl.truststore.secure_password;
    # Fix keystore permissions (readable and writable by the elasticsearch user only)
    chown 1000:1000 /usr/share/elasticsearch/config/elasticsearch.keystore;
    chmod 600 /usr/share/elasticsearch/config/elasticsearch.keystore;
  "

5.2 Verify the keystore

ls -l /opt/elk/elasticsearch/config/elasticsearch.keystore

Expected output:

-rw------- 1 1000 1000 2560 Oct 6 18:00 elasticsearch.keystore

Step 6: Copy the default core configuration files (so none are missing)

Elasticsearch needs jvm.options (JVM settings) and log4j2.properties (logging settings) to start. Copy the defaults from the official image.

6.1 Copy jvm.options

# Create a temporary container without starting it (docker cp also works on a container that is not running)
docker create --name es-tmp elasticsearch:8.19.2
docker cp es-tmp:/usr/share/elasticsearch/config/jvm.options /opt/elk/elasticsearch/config/

6.2 Copy log4j2.properties

docker cp es-tmp:/usr/share/elasticsearch/config/log4j2.properties /opt/elk/elasticsearch/config/
# Remove the temporary container
docker rm es-tmp

6.3 Fix the permissions of the copied files

sudo chown -R 1000:1000 /opt/elk/elasticsearch/config/
sudo chmod 644 /opt/elk/elasticsearch/config/{jvm.options,log4j2.properties}
# Verify that all configuration files are present
ls -l /opt/elk/elasticsearch/config/

Expected configuration files:

elasticsearch.keystore elasticsearch.yml jvm.options log4j2.properties

Step 7: Start the Elasticsearch container

Run the full start command with every required directory mounted. Comments are kept above the command, because a comment placed after a trailing backslash cuts the command short:

# Stop and remove the old container (if it exists)
docker stop elasticsearch 2>/dev/null && docker rm elasticsearch 2>/dev/null

# Start Elasticsearch 8.19.2
#   --network elk           join the elk network created earlier
#   --publish 9200:9200     HTTP port (external access)
#   --publish 9300:9300     transport port (node-to-node communication)
#   --ulimit memlock=-1:-1  lets bootstrap.memory_lock actually lock the JVM memory
#   ELASTIC_USERNAME        superuser name (always elastic)
#   ELASTIC_PASSWORD        superuser password of your choice (remember it)
#   ES_JAVA_OPTS            JVM heap settings (keep the heap at or below 50% of physical memory)
#   -v ...                  host directories mounted for persistent storage
docker run -d \
  --name elasticsearch \
  --network elk \
  --publish 9200:9200 \
  --publish 9300:9300 \
  --ulimit memlock=-1:-1 \
  --env cluster.name=es-app-cluster \
  --env node.name=node-01 \
  --env discovery.type=single-node \
  --env network.host=0.0.0.0 \
  --env xpack.security.enabled=true \
  --env ELASTIC_USERNAME=elastic \
  --env ELASTIC_PASSWORD=165656656 \
  --env ES_JAVA_OPTS="-Xms512m -Xmx1g" \
  --env bootstrap.memory_lock=true \
  -v /opt/elk/elasticsearch/config:/usr/share/elasticsearch/config \
  -v /opt/elk/elasticsearch/data:/usr/share/elasticsearch/data \
  -v /opt/elk/elasticsearch/certs:/usr/share/elasticsearch/config/certs \
  elasticsearch:8.19.2
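Elasticsearch usually needs some time after `docker run` returns before it answers HTTP requests, so a check issued immediately can fail even though nothing is wrong. A small retry helper is one way to wait for it. This is a generic sketch; `retry` is a hypothetical helper name and the attempt count and delay are arbitrary choices.

```shell
# retry ATTEMPTS DELAY CMD...: runs CMD until it succeeds, at most ATTEMPTS
# times, sleeping DELAY seconds between tries.
# Returns 0 as soon as CMD succeeds, 1 if every attempt failed.
retry() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    # No need to sleep after the final failed attempt.
    [ "$i" -lt "$attempts" ] && sleep "$delay"
    i=$((i + 1))
  done
  return 1
}
```

With the credentials from this step, waiting for the node could look like `retry 30 2 curl -fsS -u elastic:165656656 http://localhost:9200 > /dev/null && echo "Elasticsearch is up"`.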

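Step 8: Verify the Elasticsearch service

8.1 Check the container status

# -a also lists containers that have exited
docker ps -a | grep elasticsearch

If STATUS is Up, the container started successfully. If it is Exited, check the logs (docker logs elasticsearch) to find the error.

8.2 Test HTTP access

# Query Elasticsearch as the superuser (HTTP SSL is not enabled, so plain http is used)
curl -u elastic:165656656 http://localhost:9200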
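Beyond the banner returned by the root endpoint, the `_cluster/health` API reports a green, yellow, or red status. The sketch below pulls that field out with `sed` to avoid a `jq` dependency. It assumes the compact single-line JSON that Elasticsearch returns by default; `health_status` is a hypothetical helper name.

```shell
# health_status: reads a _cluster/health JSON document on stdin and prints the
# value of its "status" field (green, yellow, or red).
health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Abbreviated sample document, for illustration only.
echo '{"cluster_name":"es-app-cluster","status":"yellow","number_of_nodes":1}' | health_status
```

Against the running node: `curl -s -u elastic:165656656 http://localhost:9200/_cluster/health | health_status`. On a single node, yellow is common once indices with replicas exist, because replica shards have nowhere to go.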

Installing Kibana:8.19.2

Prerequisites:
Docker 20.10+ is installed
Elasticsearch 8.19.2 is deployed and running
The elk network has been created
Memory: at least 1.5 GB (2 GB recommended)

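Step 1: Create the Kibana directory layout

# Create the Kibana configuration and data directories
sudo mkdir -p /opt/elk/kibana/{config,data}
# Recursively set the owner to 1000:1000
sudo chown -R 1000:1000 /opt/elk/kibana/
# Set the directory permissions
sudo chmod -R 755 /opt/elk/kibana/
# Verify the directory layout
tree /opt/elk/kibana/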

Step 2: Create a service account token in Elasticsearch

# Open a shell in the Elasticsearch container
docker exec -it elasticsearch bash
# Create a service account token for Kibana
bin/elasticsearch-service-tokens create elastic/kibana kibana-token
# Sample output (note this token down):
# AAEAAWVsYXN0aWMva2liYW5hL2tpYmFuYS10b2tlbjpqMU1ZUUM1blFMU3NGOERhQ2xNaXpR
# Leave the container
exit
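The token looks opaque, but the sample above is Base64 text that carries the service account and token name in front of the secret. Decoding it is a quick way to confirm that the right token was copied. The sketch below assumes the layout seen in this sample (a few 0x00/0x01 header bytes, then `name:secret`) and is not a documented format; `token_principal` is a hypothetical helper name.

```shell
# token_principal TOKEN: decodes TOKEN from Base64, drops the 0x00/0x01 header
# bytes, and prints the part before the first colon.
token_principal() {
  printf '%s' "$1" | base64 -d | tr -d '\000\001' | cut -d: -f1
}

# Decoding the sample token from this step:
token_principal "AAEAAWVsYXN0aWMva2liYW5hL2tpYmFuYS10b2tlbjpqMU1ZUUM1blFMU3NGOERhQ2xNaXpR"
# prints: elastic/kibana/kibana-token
```

Because the remainder of the decoded value is the secret itself, the token should be treated like a password.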

Step 3: Create the Kibana configuration file

# Create the Kibana configuration file
# (replace the elasticsearch.serviceAccountToken value with the token from Step 2)
sudo tee /opt/elk/kibana/config/kibana.yml > /dev/null << 'EOF'
server.name: kibana
server.host: "0.0.0.0"
server.port: 5601
elasticsearch.hosts: ["http://elasticsearch:9200"]
elasticsearch.serviceAccountToken: "AAEAAWVsYXN0aWMva2liYW5hL2tpYmFuYS10b2tlbjpqMU1ZUUM1blFMU3NGOERhQ2xNaXpR"
xpack.security.enabled: true
xpack.encryptedSavedObjects.encryptionKey: "afafsdfdsgegergergweqwrerbhgjntyhtyhtyewergergergegerg"
monitoring.ui.container.elasticsearch.enabled: true
# Memory tuning
node.options: "--max-old-space-size=1024"
EOF

# Fix the configuration file permissions
sudo chown 1000:1000 /opt/elk/kibana/config/kibana.yml
sudo chmod 644 /opt/elk/kibana/config/kibana.yml
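The `xpack.encryptedSavedObjects.encryptionKey` in the listing is a hand-typed string. Kibana requires this key to be at least 32 characters long, and a random value is harder to guess. One way to produce one from `/dev/urandom` is sketched below; `random_hex` is a hypothetical helper name.

```shell
# random_hex BYTES: prints BYTES random bytes from /dev/urandom as lowercase
# hex, two characters per byte (32 bytes give a 64-character string).
random_hex() {
  head -c "$1" /dev/urandom | od -An -tx1 | tr -d ' \n'
  echo
}

# Generate a 64-character key suitable for the encryptionKey setting.
random_hex 32
```

The printed value can be pasted into kibana.yml in place of the sample key. It should stay the same across restarts, since saved objects encrypted with one key cannot be read with another.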

Step 4: Start the Kibana container

# Stop and remove the old container (if it exists)
docker stop kibana 2>/dev/null && docker rm kibana 2>/dev/null

# Start Kibana 8.19.2
docker run -d \
  --name kibana \
  --network elk \
  --publish 5601:5601 \
  --memory 1.5g \
  --memory-swap 2g \
  --cpus 1.5 \
  -v /opt/elk/kibana/config:/usr/share/kibana/config \
  -v /opt/elk/kibana/data:/usr/share/kibana/data \
  kibana:8.19.2

Step 5: Verify the Kibana service

5.1 Check the container status

docker ps | grep kibana

5.2 Watch the startup log

docker logs -f kibana

Wait for the startup messages (this usually takes 1 to 3 minutes):

[info][listening] Server running at http://0.0.0.0:5601
[info][server][Kibana][http] http server running at http://0.0.0.0:5601

5.3 Check the service health

# Run this once Kibana has fully started
curl http://localhost:5601/api/status

Installing Logstash:8.19.2

1. Preparation

Create the directory layout:

# Create all required directories
sudo mkdir -p /opt/elk/logstash/{config,pipeline,data,logs,certs}
# Set the ownership (Logstash runs as user 1000:1000)
sudo chown -R 1000:1000 /opt/elk/logstash/
sudo chmod -R 755 /opt/elk/logstash/
# Verify the directory layout
tree /opt/elk/logstash/

2. Creating the configuration files

Create the main Logstash configuration file:

sudo tee /opt/elk/logstash/config/logstash.yml > /dev/null <
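The logstash.yml listing above breaks off after the redirect, so its actual contents are not known. Because the container started later bind-mounts this file, it has to exist before `docker run`. The sketch below writes a deliberately small stand-in. Its single setting, `api.http.host`, is an assumption chosen so that the published port 9600 is reachable; it is not recovered from the original, and `write_minimal_logstash_yml` is a hypothetical helper name.

```shell
# write_minimal_logstash_yml DEST: writes a minimal logstash.yml to DEST.
# Assumption: this is NOT the original file, only a small stand-in that lets
# the container start with its API reachable from the host.
write_minimal_logstash_yml() {
  cat > "$1" << 'EOF'
# Bind the Logstash API to all interfaces so that the published
# port 9600 can be reached from outside the container.
api.http.host: "0.0.0.0"
EOF
}
```

One way to put the file in place with the ownership used elsewhere in this article: `write_minimal_logstash_yml /tmp/logstash.yml && sudo install -o 1000 -g 1000 -m 644 /tmp/logstash.yml /opt/elk/logstash/config/logstash.yml`.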

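Create the pipeline configuration file:

sudo tee /opt/elk/logstash/pipeline/logstash.conf > /dev/null << 'EOF'
input {
  # The original listing is cut off before "5044". A tcp input is assumed here,
  # matching the json_lines codec and the TCP appender of the Spring Boot services.
  tcp {
    port => 5044
    codec => json_lines
  }
}

filter {
  # Token check: drop every event whose token does not match
  if [token] != "AAEAAWVsYXN0aWMva2liYW5hL2tpYmFuYS10b2tlbjpqMU1ZUUM1blFMU3NGOERhQ2xNaXpR" {
    drop { }
  }

  # Choose the index name from the service name
  if [service] {
    mutate {
      add_field => {
        "index_name" => "logs-%{service}-%{+YYYY}"
      }
    }
  } else {
    mutate {
      add_field => {
        "index_name" => "logs-unknown-%{+YYYY}"
      }
    }
  }

  # Parse the timestamp
  date {
    match => [ "timestamp", "ISO8601" ]
    target => "@timestamp"
  }

  # Add common fields
  mutate {
    #add_field => {
    #  "environment" => "production"
    #  "processed_by" => "logstash"
    #  "log_type" => "application"
    #}
    # Remove sensitive fields
    remove_field => [ "token", "host" ]
  }

  # Validation: drop events without a message
  if ![message] or [message] == "" {
    drop { }
  }
}

output {
  # Send to Elasticsearch, using the dynamic index name
  elasticsearch {
    hosts => ["http://elasticsearch:9200"]
    index => "%{index_name}"
    user => "elastic"
    password => "165656656"
    ssl_certificate_verification => false
    # Important: use create rather than index. Names of the form logs-*-* match
    # the built-in data stream template, and data streams only accept create.
    action => "create"
  }

  # Print to the console (for debugging)
  stdout {
    codec => rubydebug
  }
}
EOF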
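The pipeline can be exercised without a Spring Boot application by sending it a single JSON line. The sketch below builds such a line with the three fields the filter looks at (`token`, `service`, `message`). It does no JSON escaping, so the arguments must not contain double quotes or backslashes; `make_event` is a hypothetical helper name.

```shell
# make_event TOKEN SERVICE MESSAGE: prints one JSON document followed by a
# newline, which is the framing the json_lines codec expects.
# No escaping is performed: keep quotes and backslashes out of the arguments.
make_event() {
  printf '{"token":"%s","service":"%s","message":"%s"}\n' "$1" "$2" "$3"
}

make_event "my-token" "demo" "hello from the shell"
# prints: {"token":"my-token","service":"demo","message":"hello from the shell"}
```

Assuming `nc` is installed, piping the output to `nc localhost 5044` delivers the event. With the real token it should show up in the container's stdout (`docker logs logstash`) and in an index named `logs-demo-<year>`; with any other token the filter drops it silently.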

3. Running the Logstash container

docker run -d \
  --name logstash \
  --network elk \
  --user 1000:1000 \
  --publish 5044:5044 \
  --publish 9600:9600 \
  --env LS_JAVA_OPTS="-Xms512m -Xmx512m" \
  --env XPACK_MONITORING_ENABLED="true" \
  --memory 1g \
  --memory-swap 1.5g \
  --cpus 1 \
  -v /opt/elk/logstash/config/logstash.yml:/usr/share/logstash/config/logstash.yml \
  -v /opt/elk/logstash/pipeline/:/usr/share/logstash/pipeline/ \
  -v /opt/elk/logstash/data/:/usr/share/logstash/data/ \
  -v /opt/elk/logstash/logs/:/usr/share/logstash/logs/ \
  logstash:8.19.2

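4. Troubleshooting

Fixes for common problems:

# If the container fails to start, check the logs
docker logs -f logstash
# Check that the ports are listening
netstat -tlnp | grep 5044
netstat -tlnp | grep 9600
# Open a shell in the container and inspect it (the ls commands run inside the container)
docker exec -it logstash bash
ls -la /usr/share/logstash/data/
ls -la /usr/share/logstash/logs/
# Restart the service
docker restart logstash
# Redeploy from scratch
docker stop logstash
docker rm logstash
# Then run the docker run command again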

Integrating with Spring Boot to view and trace service logs:

Note: JDK 17 is used here.

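1. Add the dependencies

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-web</artifactId>
</dependency>
<dependency>
    <groupId>org.projectlombok</groupId>
    <artifactId>lombok</artifactId>
    <version>1.18.24</version>
    <optional>true</optional>
</dependency>
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-test</artifactId>
    <scope>test</scope>
</dependency>
<dependency>
    <groupId>net.logstash.logback</groupId>
    <artifactId>logstash-logback-encoder</artifactId>
    <version>7.4</version>
</dependency>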

2. Add the service configuration files

application.yml

spring:
  application:
    name: springboot-elk-demo
  profiles:
    active: dev # use dev for development, switch to prod for production
app:
  logging:
    token: "AAEAAWVsYXN0aWMva2liYW5hL2tpYmFuYS10b2tlbjpqMU1ZUUM1blFMU3NGOERhQ2xNaXpR"
logging:
  config: classpath:logback-spring.xml
  level:
    org.elk.demo01: DEBUG

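logback-spring.xml

The XML markup of this listing is missing; the values that remain are, in order:

Log pattern: %d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n
Logstash destination: IP:5044 (replace IP with the Logstash host)
Timestamp time zone: UTC
JSON pattern for each event (appName and appToken presumably come from spring.application.name and app.logging.token in application.yml):
{
  "service": "${appName}",
  "token": "${appToken}",
  "traceId": "%mdc{traceId}",
  "spanId": "%mdc{spanId}",
  "environment": "production"
}
Remaining values: 5 minutes, 5 minutes, 1024, 0, true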

3. Add a test class

LogController

package org.elk.demo01.controller;

import lombok.extern.slf4j.Slf4j;
import org.slf4j.MDC;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

import java.util.UUID;

@RestController
@Slf4j
public class LogController {

    @GetMapping("/test")
    public String testLog() {
        log.info("Test INFO-level log");
        log.debug("Test DEBUG-level log");
        log.error("Test ERROR-level log");
        logWithCustomFields();
        return "Log test finished";
    }

    // Add custom fields through the MDC
    public void logWithCustomFields() {
        MDC.put("userId", "12345");
        MDC.put("requestId", UUID.randomUUID().toString());
        try {
            log.info("Business operation log");
        } finally {
            // Remove only the keys set here, so other MDC entries survive
            MDC.remove("userId");
            MDC.remove("requestId");
        }
    }

    @GetMapping("/error-test")
    public String errorTest() {
        try {
            int result = 10 / 0;
        } catch (Exception e) {
            // Passing the exception as the last argument logs the stack trace
            log.error("Arithmetic error occurred", e);
        }
        return "Error log test";
    }
}

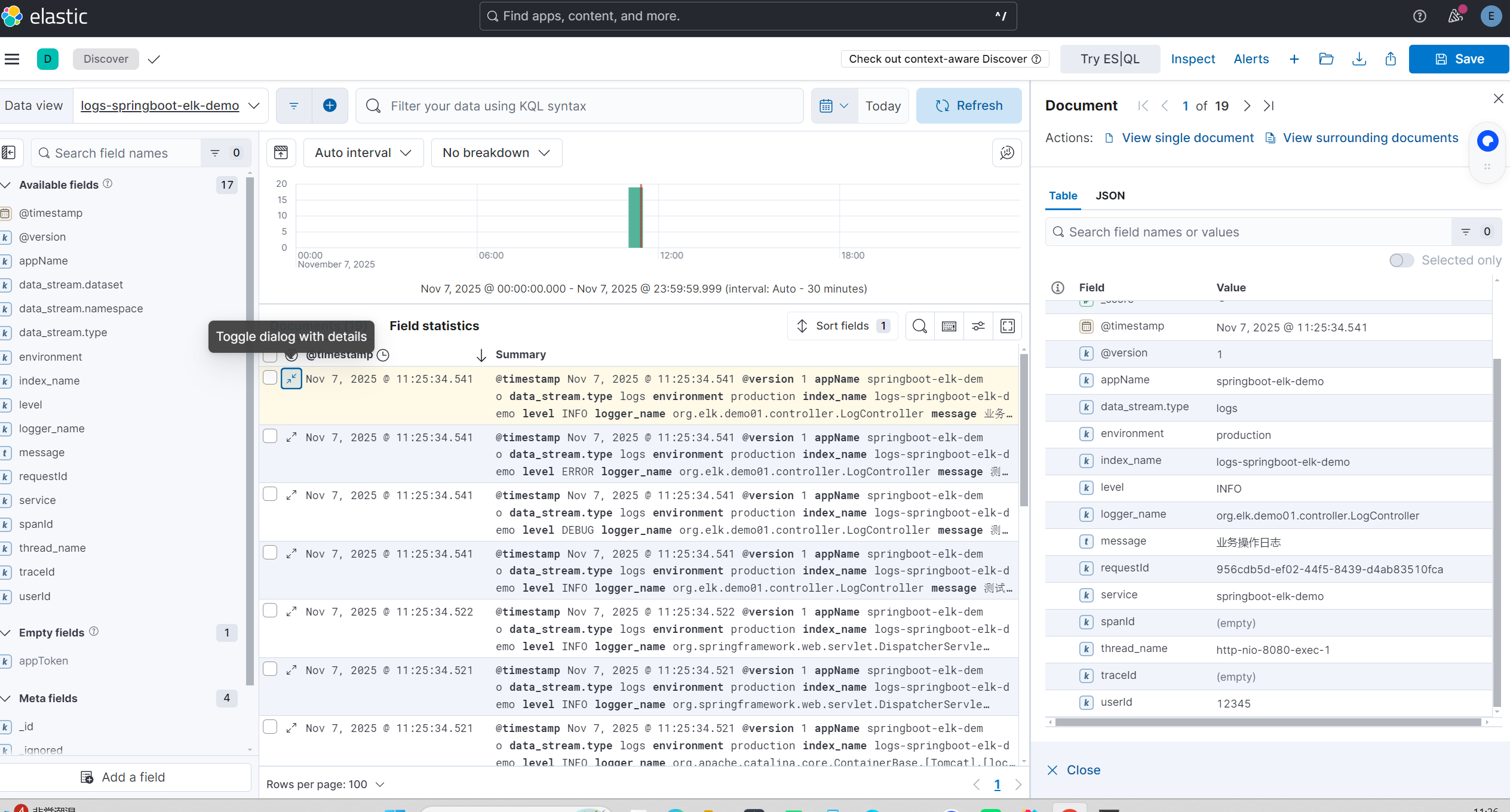

4. View the logs generated by the test

Global trace propagation in Spring Boot 3.x:

Option 1: Micrometer Tracing

Environment: JDK 17, Spring Boot 3.3.11, Spring Cloud 2023.0.5

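1. Dependency configuration

<modelVersion>4.0.0</modelVersion>
<parent>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-parent</artifactId>
    <version>3.3.11-SNAPSHOT</version>
</parent>
<groupId>org.example</groupId>
<artifactId>elk-demo-02</artifactId>
<version>0.0.1-SNAPSHOT</version>
<name>elk-demo-02</name>
<description>elk-demo-02</description>
<properties>
    <java.version>17</java.version>
</properties>

<dependencyManagement>
    <dependencies>
        <dependency>
            <groupId>org.springframework.cloud</groupId>
            <artifactId>spring-cloud-dependencies</artifactId>
            <version>2023.0.5</version>
            <type>pom</type>
            <scope>import</scope>
        </dependency>
        <dependency>
            <groupId>com.alibaba.cloud</groupId>
            <artifactId>spring-cloud-alibaba-dependencies</artifactId>
            <version>2023.0.1.0</version>
            <type>pom</type>
            <scope>import</scope>
        </dependency>
    </dependencies>
</dependencyManagement>

<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <dependency>
        <groupId>org.projectlombok</groupId>
        <artifactId>lombok</artifactId>
        <optional>true</optional>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-test</artifactId>
        <scope>test</scope>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-validation</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-starter-bootstrap</artifactId>
    </dependency>
    <dependency>
        <groupId>com.alibaba.cloud</groupId>
        <artifactId>spring-cloud-starter-alibaba-nacos-config</artifactId>
    </dependency>
    <dependency>
        <groupId>com.alibaba.cloud</groupId>
        <artifactId>spring-cloud-starter-alibaba-nacos-discovery</artifactId>
    </dependency>
    <dependency>
        <groupId>net.logstash.logback</groupId>
        <artifactId>logstash-logback-encoder</artifactId>
        <version>7.4</version>
    </dependency>
    <dependency>
        <groupId>io.micrometer</groupId>
        <artifactId>micrometer-tracing</artifactId>
    </dependency>
    <dependency>
        <groupId>io.micrometer</groupId>
        <artifactId>micrometer-tracing-bridge-brave</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-actuator</artifactId>
    </dependency>
    <dependency>
        <groupId>io.github.openfeign</groupId>
        <artifactId>feign-micrometer</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-starter-openfeign</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-starter-loadbalancer</artifactId>
    </dependency>
</dependencies>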

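Build plugins (the XML markup of this part is missing; the surviving values are listed in order):

org.projectlombok:lombok-maven-plugin:1.18.20.0, with the values compile, delombok, src/main/java, target/generated-sources/delombok, true
org.apache.maven.plugins:maven-compiler-plugin:3.8.1, with the values 1.8, 1.8, target/generated-sources/delombok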

2. Application configuration

application.yml

spring:
  application:
    name: springboot-elk-demo02
  profiles:
    active: dev # use dev for development, switch to prod for production

# ELK logging is enabled; to turn it off, change logging.config to logback-spring.xml
app:
  logging:
    token: "AAEAAWVsYXN0aWMva2liYW5hL2tpYmFuYS10b2tlbjpqMU1ZUUM1blFMU3NGOERhQ2xNaXpR"

# Logging configuration
logging:
  pattern:
    # Show the trace ID and span ID in every log line
    level: "%5p [${spring.application.name:},%X{traceId:-},%X{spanId:-}]"
  level:
    io.micrometer.tracing: DEBUG
    org.springframework.cloud: INFO
  config: classpath:logback-elk-spring.xml

management:
  tracing:
    sampling:
      probability: 1.0 # sampling rate; 0.1 is an option for production
  endpoints:
    web:
      exposure:
        include: health,metrics,info

# No zipkin properties are configured

# Custom tracing configuration
tracing:
  enabled: true
  log-level: INFO

server:
  port: 8082

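logback-elk-spring.xml

The XML markup of this listing is missing; the values that remain are, in order:

Logstash destination: ip:5044 (replace ip with the Logstash host)
Timestamp time zone: UTC
JSON pattern for each event:
{
  "service": "${appName}",
  "token": "${appToken}",
  "traceId": "%mdc{traceId}",
  "spanId": "%mdc{spanId}",
  "environment": "production"
}
Remaining Logstash appender values: 5 minutes, 5 minutes, 1024, 0, true
Console output: pattern ${CONSOLE_PATTERN}, charset UTF-8
INFO file output: file ${LOG_PATH}/${APP_NAME}-info.log, rolling file name ${LOG_PATH}/${APP_NAME}-info.%d{yyyy-MM-dd}.%i.log.gz, values 100MB, 30, 1GB, pattern ${FILE_PATTERN}, charset UTF-8, level filter INFO with ACCEPT and DENY
ERROR file output: file ${LOG_PATH}/${APP_NAME}-error.log, rolling file name ${LOG_PATH}/${APP_NAME}-error.%d{yyyy-MM-dd}.%i.log.gz, values 100MB, 30, 1GB, pattern ${FILE_PATTERN}, charset UTF-8, level filter ERROR with ACCEPT and DENY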

3. Tracing configuration classes

import org.springframework.boot.context.properties.EnableConfigurationProperties;
import org.springframework.cloud.openfeign.EnableFeignClients;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.web.client.RestTemplate;

@Configuration
@EnableConfigurationProperties(TracingProperties.class)
@EnableFeignClients
public class TracingConfig {

    @Bean
    public RestTemplate restTemplate() {
        return new RestTemplate();
    }

    // Custom Brave configuration
    @Bean
    public brave.Tracing braveTracing(TracingProperties properties) {
        return brave.Tracing.newBuilder()
                .localServiceName(properties.getServiceName())
                .sampler(brave.sampler.Sampler.create(properties.getSamplingProbability()))
                .build();
    }
}

import lombok.Data;
import org.springframework.boot.context.properties.ConfigurationProperties;

// Tracing configuration properties (bound from the "tracing" prefix in application.yml)
@ConfigurationProperties(prefix = "tracing")
@Data
public class TracingProperties {
    private boolean enabled = true;
    private String serviceName = "default-service";
    private float samplingProbability = 1.0f;
    private String logLevel = "INFO";
}

4. Business code examples

The configuration from the first three steps must be applied in both services (demo02 and demo03).

demo02 business code:

AsynService

import lombok.extern.slf4j.Slf4j;
import org.springframework.stereotype.Service;

@Service
@Slf4j
public class AsynService {

    public void test() {
        log.info("Async task started");
    }
}

TestServiceClient

import org.springframework.cloud.openfeign.FeignClient;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestParam;

import java.util.List;

@FeignClient(name = "springboot-elk-demo03", path = "/demo03")
public interface TestServiceClient {

    // The parameter name is given explicitly so Feign does not depend on compiler flags to find it
    @GetMapping("/test1")
    List<Long> demoTest1(@RequestParam("userId") Long userId);

    @PostMapping("/test2")
    List<Long> demoTest2();
}

Controller

import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.cloud.context.config.annotation.RefreshScope;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

import java.util.ArrayList;
import java.util.List;

@RefreshScope
@RestController
@Slf4j
public class Controller {

    @Autowired
    private TestServiceClient testServiceClient;

    @Autowired
    private AsynService asynService;

    @GetMapping("/test2")
    public List<Long> index2() {
        log.info("Calling demoTest1 on the demo03 service");
        List<Long> list1 = testServiceClient.demoTest1(1L);
        asynService.test();
        log.info("Calling demoTest2 on the demo03 service");
        List<Long> list2 = testServiceClient.demoTest2();
        // Merge into a new list rather than mutating the list returned by the client
        List<Long> result = new ArrayList<>(list1);
        result.addAll(list2);
        return result;
    }
}

demo03 business code:

Controller

import lombok.extern.slf4j.Slf4j;
import org.springframework.cloud.context.config.annotation.RefreshScope;
import org.springframework.web.bind.annotation.*;

import java.util.Arrays;
import java.util.List;

@RefreshScope
@RestController
@Slf4j
public class Controller {

    @GetMapping("demo03/test1")
    List<Long> demoTest1(@RequestParam Long userId) {
        log.info("userId:{}", userId);
        return Arrays.asList(userId);
    }

    @PostMapping("demo03/test2")
    List<Long> demoTest2() {
        try {
            log.info("test2");
            int a = 1 / 0;
        } catch (Exception e) {
            // Pass the exception as the last argument so the stack trace is logged
            log.error("test2 error", e);
        }
        return Arrays.asList(11L, 12L, 13L);
    }
}

5.调用测试接口

输出:[1,11,12,13]

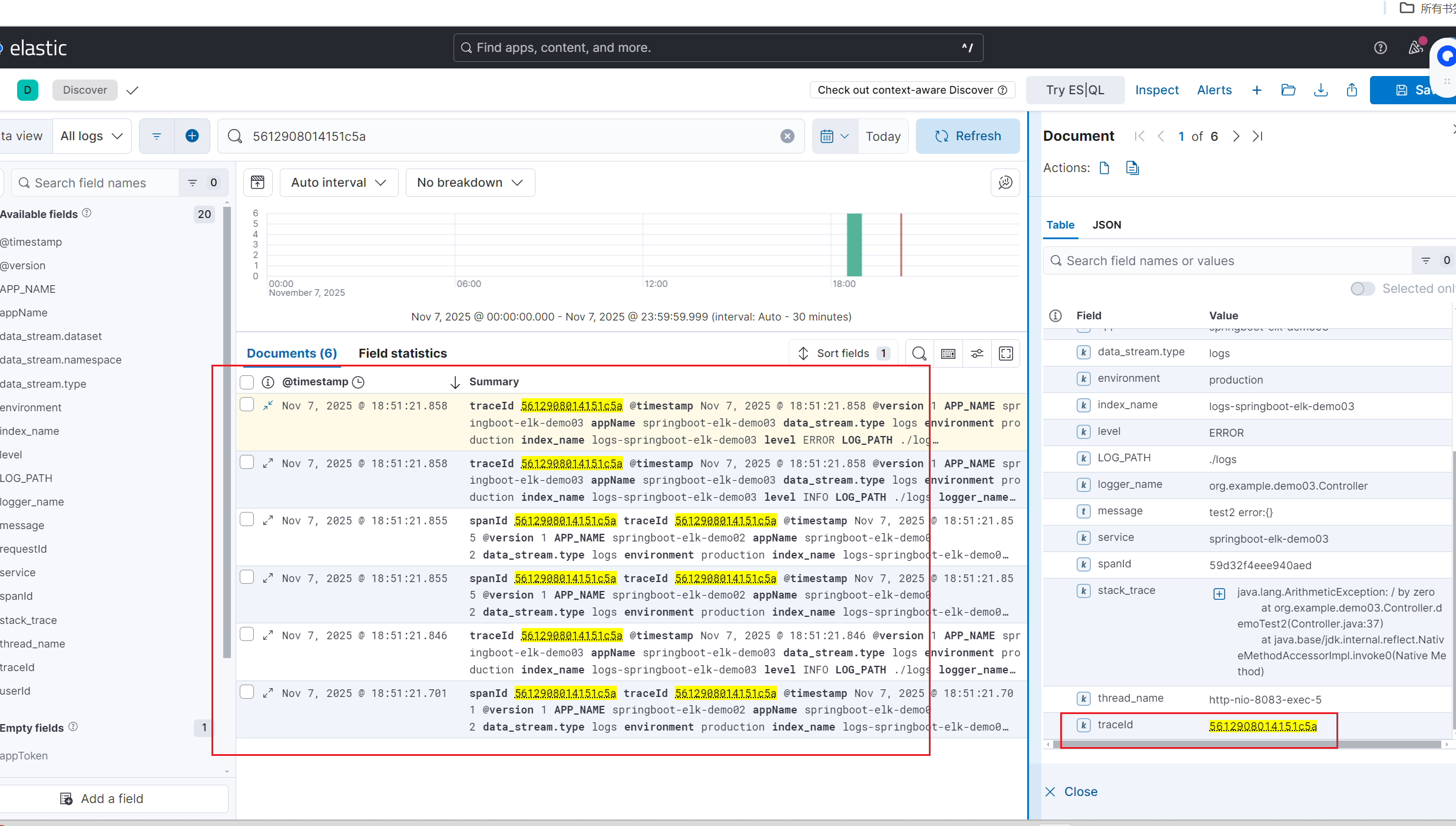

查看es上的日志:

从结果可以看到,demo02和demo03的日志,而且链路id是从demo02生成然后流转到demo03,这样子就可以解决接口调用各个微服务后,可以通过统一的链路id查看同一请求不同服务之间的日志了
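Following one request across services then comes down to searching for its trace ID. The sketch below builds a search body for that, using the `traceId` and `@timestamp` field names from the pipeline and logback pattern above. The `size` of 100 is an arbitrary choice, and `trace_query` is a hypothetical helper name.

```shell
# trace_query TRACE_ID: prints an Elasticsearch search body that matches one
# trace ID and sorts the hits by time, oldest first.
trace_query() {
  printf '{"query":{"match":{"traceId":"%s"}},"sort":[{"@timestamp":"asc"}],"size":100}\n' "$1"
}

trace_query "abc123"
# prints: {"query":{"match":{"traceId":"abc123"}},"sort":[{"@timestamp":"asc"}],"size":100}
```

With a trace ID copied from any log line, the search could be run as `curl -s -u elastic:165656656 -H 'Content-Type: application/json' 'http://localhost:9200/logs-*/_search' -d "$(trace_query <trace-id>)"`, which returns the entries of demo02 and demo03 for that request in chronological order.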
