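Kubernetes

1. Kubernetes Concepts

Official site: https://kubernetes.io/zh/docs/home/

Commonly abbreviated as K8s.

Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services that facilitates both declarative configuration and automation. It has a large, rapidly growing ecosystem, and Kubernetes services, support, and tools are widely available.

It manages containerized applications across multiple hosts in a cloud platform. Its goal is to make deploying containerized applications simple and powerful, and it provides mechanisms for application deployment, scheduling, updating, and maintenance.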

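Kubernetes provides you with:

- Service discovery and load balancing: Kubernetes can expose a container using a DNS name or its own IP address. If traffic to a container is high, Kubernetes can load balance and distribute the network traffic so that the deployment stays stable.

- Storage orchestration: Kubernetes lets you automatically mount a storage system of your choice, such as local storage or a public cloud provider.

- Automated rollouts and rollbacks: You describe the desired state of your deployed containers, and Kubernetes changes the actual state to the desired state at a controlled rate. For example, you can have Kubernetes create new containers for your deployment, remove existing containers, and adopt all their resources for the new containers.

- Automatic bin packing: Kubernetes lets you specify how much CPU and memory (RAM) each container needs. When containers declare resource requests, Kubernetes can make better decisions about managing their resources.

- Self-healing: Kubernetes restarts containers that fail, replaces containers, kills containers that do not respond to your user-defined health check, and does not advertise them to clients until they are ready to serve.

- Secret and configuration management: Kubernetes lets you store and manage sensitive information such as passwords, OAuth tokens, and SSH keys. You can deploy and update secrets and application configuration without rebuilding container images and without exposing secrets in your stack configuration.

Kubernetes gives you a framework to run distributed systems resiliently. It takes care of scaling and failover for your application, provides deployment patterns, and more. For example, Kubernetes can easily manage a canary deployment for your system.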

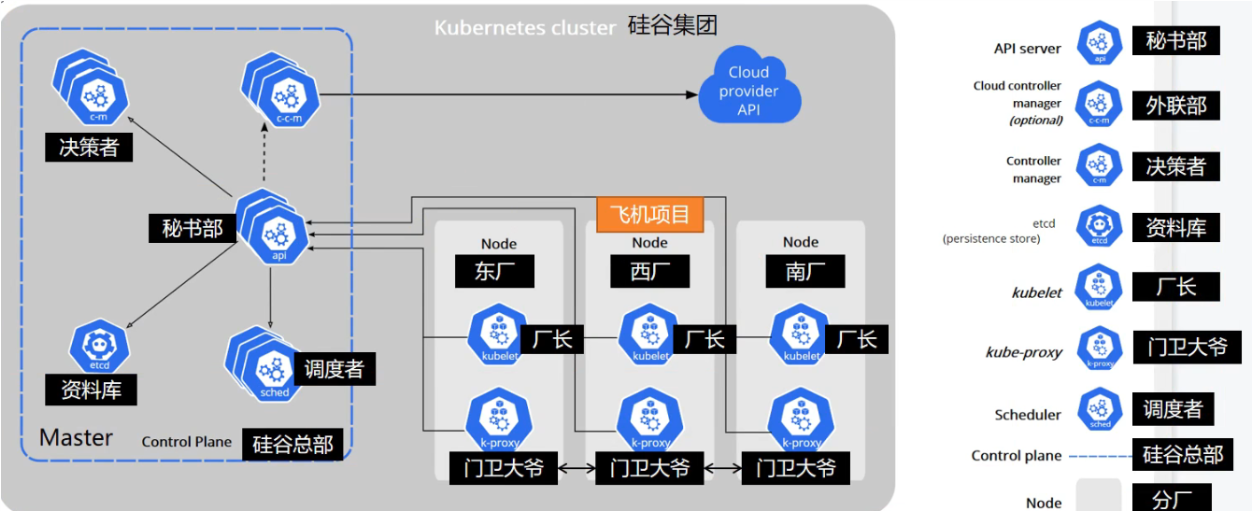

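1. Architecture

1. How it works:

Kubernetes Cluster = N Master Nodes + N Worker Nodes; N >= 1

2. Component architecture

The components break down as follows:

Control Plane Components

The control plane's components make global decisions about the cluster (for example, scheduling), and detect and respond to cluster events (for example, starting a new Pod when a Deployment's replicas field is unsatisfied).

Control plane components can run on any machine in the cluster. For simplicity, however, setup scripts typically start all control plane components on the same machine and do not run user containers on it. See "Creating Highly Available Clusters with kubeadm" in the official documentation for an example control plane setup that spans multiple machines.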

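kube-apiserver

The API server is the control plane component that exposes the Kubernetes API. It is the front end of the Kubernetes control plane.

The main implementation of the Kubernetes API server is kube-apiserver. It is designed to scale horizontally, that is, by deploying more instances. You can run several instances of kube-apiserver and balance traffic between them.

etcd

etcd is a consistent and highly available key-value store used as the backing store for all Kubernetes cluster data.

If your cluster uses etcd as its backing store, make sure you have a backup plan for that data.

For in-depth information about etcd, see the official etcd documentation.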

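kube-scheduler

The control plane component that watches for newly created Pods with no assigned node and selects a node for them to run on.

Factors taken into account for scheduling decisions include individual and collective resource requirements, hardware/software/policy constraints, affinity and anti-affinity specifications, data locality, inter-workload interference, and deadlines.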
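As a rough illustration of the filter-then-score idea behind these scheduling decisions, the sketch below picks a node for a Pod from a plain-text list. The `pick_node` function, its input format, and the "most free CPU wins" rule are assumptions made for this example only; the real kube-scheduler weighs many more factors.

```shell
# Toy scheduler sketch (hypothetical helper, not part of Kubernetes).
# Usage: pick_node <cpu-request-millicores> <memory-request-Mi> < nodes.txt
# Each input line: <node-name> <free-cpu-millicores> <free-memory-Mi>
pick_node() {
  awk -v cpu="$1" -v mem="$2" '
    # Filter: the node must have room for both requests.
    $2 >= cpu && $3 >= mem {
      # Score: prefer the feasible node with the most free CPU.
      if (best == "" || $2 > best_cpu) { best = $1; best_cpu = $2 }
    }
    END { if (best != "") print best; else exit 1 }
  '
}
```

For example, `printf 'jk1 500 1024\njk2 1500 2048\n' | pick_node 1000 512` selects `jk2`, and the function returns a non-zero status when no node fits.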

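kube-controller-manager

The control plane component that runs controller processes.

Logically, each controller is a separate process, but to reduce complexity they are all compiled into a single binary and run in a single process.

These controllers include:

- Node controller: responsible for noticing and responding when nodes go down.

- Job controller: watches for Job objects that represent one-off tasks, then creates Pods to run those tasks to completion.

- Endpoints controller: populates the Endpoints object (that is, joins Services and Pods).

- Service Account and Token controllers: create default accounts and API access tokens for new namespaces.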

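cloud-controller-manager

The cloud controller manager is a control plane component that embeds cloud-specific control logic.

cloud-controller-manager runs only the control loops that are specific to your cloud provider. If you run Kubernetes on your own premises, or in a learning environment on your own PC, the cluster does not need a cloud controller manager.

Like kube-controller-manager, cloud-controller-manager combines several logically independent control loops into a single binary that you run as a single process. You can scale it horizontally (run more than one copy) to improve performance or to help tolerate failures.

The following controllers can have cloud provider dependencies:

- Node controller: checks the cloud provider to determine whether a node has been deleted in the cloud after it stops responding.

- Route controller: sets up routes in the underlying cloud infrastructure.

- Service controller: creates, updates, and deletes cloud provider load balancers.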

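Node Components

Node components run on every node, maintaining running Pods and providing the Kubernetes runtime environment.

kubelet

An agent that runs on each node in the cluster. It makes sure that containers are running in a Pod.

The kubelet takes a set of PodSpecs provided through various mechanisms and ensures that the containers described in those PodSpecs are running and healthy. It does not manage containers that were not created by Kubernetes.

kube-proxy

kube-proxy is a network proxy that runs on each node in the cluster and implements part of the Kubernetes Service concept.

kube-proxy maintains network rules on nodes. These rules allow network communication with your Pods from network sessions inside or outside the cluster.

kube-proxy uses the operating system's packet filtering layer if there is one and it is available. Otherwise, kube-proxy forwards the traffic itself.

Container Runtime

The container runtime is the software responsible for running containers.

Kubernetes supports container runtimes such as Docker, containerd, CRI-O, and any other implementation of the Kubernetes CRI (Container Runtime Interface).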

2、Kubernetes创建集群

参考Docker安装,先提前为所有机器安装Docker

官方安装文档:https://kubernetes.io/zh/docs/setup/production-environment/tools/kubeadm/install-kubeadm/

1、安装Kubeadm

1、基础环境

使用 kubeadm 工具来 创建和管理 Kubernetes 集群。 该工具能够执行必要的动作并用一种用户友好的方式启动一个可用的、安全的集群。

- 一台兼容的 Linux 主机。Kubernetes 项目为基于 Debian 和 Red Hat 的 Linux 发行版以及一些不提供包管理器的发行版提供通用的指令

- 每台机器 2 GB 或更多的 RAM (如果少于这个数字将会影响你应用的运行内存)

- 2 CPU 核或更多

- 集群中的所有机器的网络彼此均能相互连接(公网和内网都可以)

- 设置防火墙放行规则

- 节点之中不可以有重复的主机名、MAC 地址或 product_uuid。请参见这里了解更多详细信息。

- 设置不同hostname

- 开启机器上的某些端口。请参见这里 了解更多详细信息。

- 内网互信

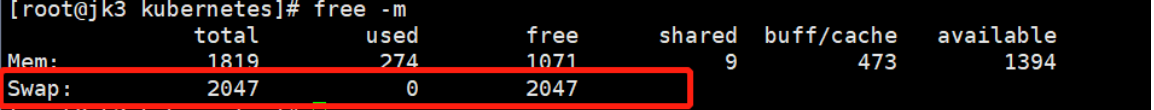

- 禁用交换分区。为了保证 kubelet 正常工作,你 必须 禁用交换分区。

- 永久关闭

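# Check memory and swap usage

free -l

Swap must be turned off.

Then do the following:

- Prepare three servers (virtual machines are fine) that can ping each other over the internal network.

- Pick one as the Master node; the other two become Worker nodes.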

# Set a distinct hostname on each machine
hostnamectl set-hostname xxx

# Set SELinux to permissive mode (effectively disabling it)
sudo setenforce 0
sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

# Turn swap off now, and comment out the swap entries in /etc/fstab so it stays off after a reboot
swapoff -a
sed -ri 's/.*swap.*/#&/' /etc/fstab

# Let iptables see bridged traffic
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

sudo sysctl --system
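To confirm that the swap step took effect, one option is to inspect the kernel's swap table. The sketch below is a minimal check under the assumption that the table has the usual `/proc/swaps` layout (one header line, then one line per active swap area); `swap_is_active` is a hypothetical helper name.

```shell
# Return 0 when the given swaps table (default: /proc/swaps) lists an active swap area.
swap_is_active() {
  # Line 1 is the header; any later non-empty line is an active swap area.
  awk 'NR > 1 && NF { found = 1 } END { exit !found }' "${1:-/proc/swaps}"
}
```

A possible use on each node: `if swap_is_active; then echo "swap is still on"; fi`.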

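2. Installing kubelet, kubeadm, and kubectl

kubeadm: the command that bootstraps the cluster. kubelet: the component that runs on every machine in the cluster and starts Pods and containers. kubectl: the command-line tool for talking to the cluster.

kubeadm will not install or manage kubelet or kubectl for you, so you need to make sure they match the version of the control plane that kubeadm installs. If they do not, there is a risk of version skew that can lead to unexpected, buggy behaviour. One minor version of skew between the control plane and the kubelet is supported, but the kubelet version may never exceed the API server version. For example, a 1.7.0 kubelet is fully compatible with a 1.8.0 API server, but not the other way round.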
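The version rule above can be expressed as a small check. This is a sketch under the rule as stated in this section (same major version, kubelet not newer than the API server, at most one minor version behind); `kubelet_skew_ok` and its optional third argument are hypothetical, and the upstream version-skew policy for a given release may allow a wider gap.

```shell
# Return 0 when the kubelet version is acceptable for the given API server version.
# Usage: kubelet_skew_ok <apiserver-version> <kubelet-version> [max-minor-skew]
kubelet_skew_ok() {
  local api="${1#v}" kubelet="${2#v}" max_skew="${3:-1}"
  local api_major api_minor kl_major kl_minor rest
  api_major="${api%%.*}"; rest="${api#*.}"; api_minor="${rest%%.*}"
  kl_major="${kubelet%%.*}"; rest="${kubelet#*.}"; kl_minor="${rest%%.*}"
  # Major versions must match.
  [ "$api_major" = "$kl_major" ] || return 1
  # The kubelet must not be newer than the API server.
  [ "$kl_minor" -le "$api_minor" ] || return 1
  # The kubelet must not lag by more than the allowed number of minor versions.
  [ $((api_minor - kl_minor)) -le "$max_skew" ]
}
```

With the example from the text, `kubelet_skew_ok 1.8.0 1.7.0` succeeds while `kubelet_skew_ok 1.7.0 1.8.0` fails.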

Red Hat-based distributions

# Configure the Kubernetes package repository, using the Aliyun mirror
cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
   http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
exclude=kubelet kubeadm kubectl
EOF

# Install kubelet, kubeadm, and kubectl
sudo yum install -y kubelet-1.20.9 kubeadm-1.20.9 kubectl-1.20.9 --disableexcludes=kubernetes

# Enable kubelet at boot; --now also starts it immediately
sudo systemctl enable --now kubelet

# Check its status
systemctl status kubelet

The kubelet now restarts every few seconds, because it sits in a crash loop waiting for kubeadm to tell it what to do.

2. Bootstrapping the cluster with kubeadm

1. Pulling the images each machine needs

# Create the image download script
sudo tee ./images.sh <<-'EOF'
#!/bin/bash
images=(
kube-apiserver:v1.20.9
kube-proxy:v1.20.9
kube-controller-manager:v1.20.9
kube-scheduler:v1.20.9
coredns:1.7.0
etcd:3.4.13-0
pause:3.2
)
for imageName in "${images[@]}" ; do
docker pull registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/$imageName
done
EOF

# Make the script executable and run it
chmod +x ./images.sh && ./images.sh

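2. Initializing the master node

# On every machine, map the master's IP to a hostname (replace the IP with your own master's)
echo "192.168.1.11 cluster-endpoint" >> /etc/hosts

# Run on the master node only. The node, Service, and Pod networks must not overlap.
# Note: the example node IP 192.168.1.11 lies inside the Pod CIDR 192.168.0.0/16 used below.
# On such a network, pick a different --pod-network-cidr and set the same range as CALICO_IPV4POOL_CIDR in calico.yaml.
kubeadm init \
--apiserver-advertise-address=192.168.1.11 \
--control-plane-endpoint=cluster-endpoint \
--image-repository registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images \
--kubernetes-version v1.20.9 \
--service-cidr=10.96.0.0/16 \
--pod-network-cidr=192.168.0.0/16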
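Because the requirement here is that the network ranges do not overlap, it can help to check candidate values before running `kubeadm init`. The following is a minimal IPv4-only sketch; the helper names `ip_to_int`, `cidr_range`, and `cidrs_overlap` are hypothetical, and a single host address is passed as a /32.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  # Split the address on dots into $1..$4.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Print "first last" (as integers) for an IPv4 CIDR such as 10.96.0.0/16.
cidr_range() {
  local ip="${1%/*}" prefix="${1#*/}"
  local base mask
  base=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  echo "$(( base & mask )) $(( (base & mask) | (~mask & 0xFFFFFFFF) ))"
}

# Return 0 when the two CIDRs share at least one address.
cidrs_overlap() {
  # $1/$2 become the first range, $3/$4 the second.
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}
```

For instance, `cidrs_overlap 10.96.0.0/16 192.168.0.0/16` fails (no overlap), while `cidrs_overlap 192.168.1.11/32 192.168.0.0/16` succeeds, which flags a node address that sits inside the Pod range.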

3. Continuing as instructed by the output

Output on the master when initialization succeeds:

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join cluster-endpoint:6443 --token 2cyxyc.lkl1zp63bssbrpna \

--discovery-token-ca-cert-hash sha256:c54722b5ad898e72e5662799a6303373a7a3e81c0916b5e7776e9d882bce706d \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join cluster-endpoint:6443 --token 2cyxyc.lkl1zp63bssbrpna \

--discovery-token-ca-cert-hash sha256:c54722b5ad898e72e5662799a6303373a7a3e81c0916b5e7776e9d882bce706d

1、设置.kube/config

# 执行以下命令

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# 查看集群部署了那些应用 -w 表示一直监听状态变化

kubectl get pods -A === docker ps

# 在docker中运行的应用叫容器,在k8s中运行的应用叫pod

kubectl get pods -A

# 查看集群所有节点

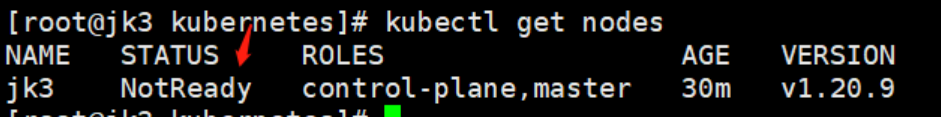

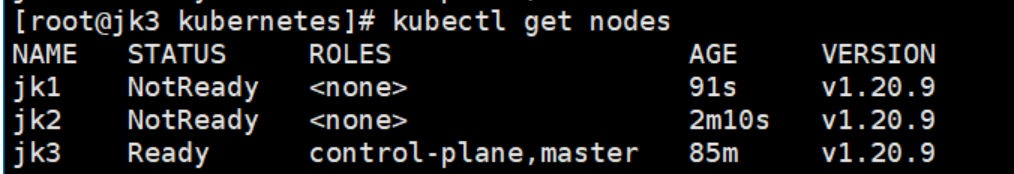

kubectl get nodes

当前状态为NotReady,还需要安装一个网络组件,将各个节点串起来

2、安装网络组件

插件地址:https://kubernetes.io/docs/concepts/cluster-administration/addons/

# 下载calico的配置文件(主节点下执行)

curl https://docs.projectcalico.org/manifests/calico.yaml -O

# 根据配置文件,给集群创建资源 **.yaml

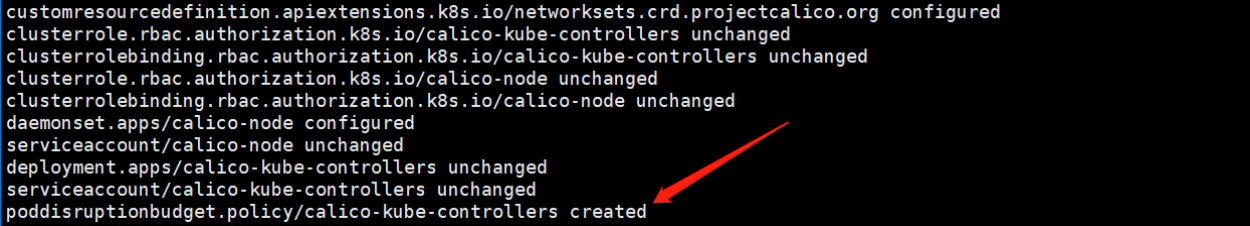

kubectl apply -f calico.yaml

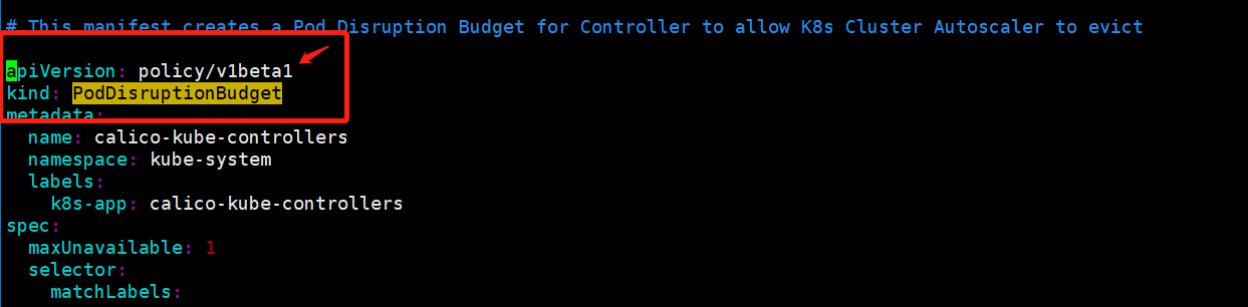

附加报错:

报错:error: unable to recognize "alertmanager/alertmanager-podDisruptionBudget.yaml": no matches for kind "PodDisruptionBudget" in version "policy/v1"

经确认,k8s版本是1.20.11,PodDisruptionBudget 看在1.20中还是v1beta1,修改为policy/v1beta1

vim calico.yaml

再次执行 kubectl apply -f calico.yaml
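Instead of editing the file by hand in vim, the same substitution can be scripted. This is a sketch that assumes GNU sed (for `-i`) and that the manifest writes the field exactly as `apiVersion: policy/v1` at the start of a line; `use_pdb_v1beta1` is a hypothetical helper name.

```shell
# Rewrite "apiVersion: policy/v1" lines to "apiVersion: policy/v1beta1" in place.
# Other API versions (apps/v1, policy/v1beta1, ...) are left untouched.
use_pdb_v1beta1() {
  sed -i 's|^apiVersion: policy/v1[[:space:]]*$|apiVersion: policy/v1beta1|' "$1"
}
```

Usage would be `use_pdb_v1beta1 calico.yaml`, followed by the `kubectl apply` shown above.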

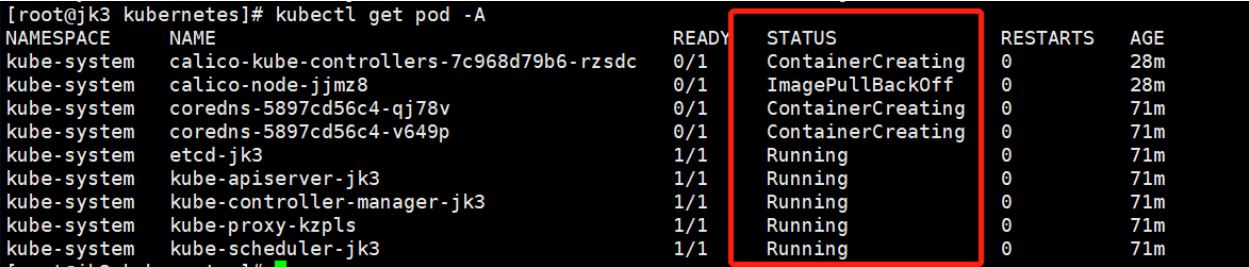

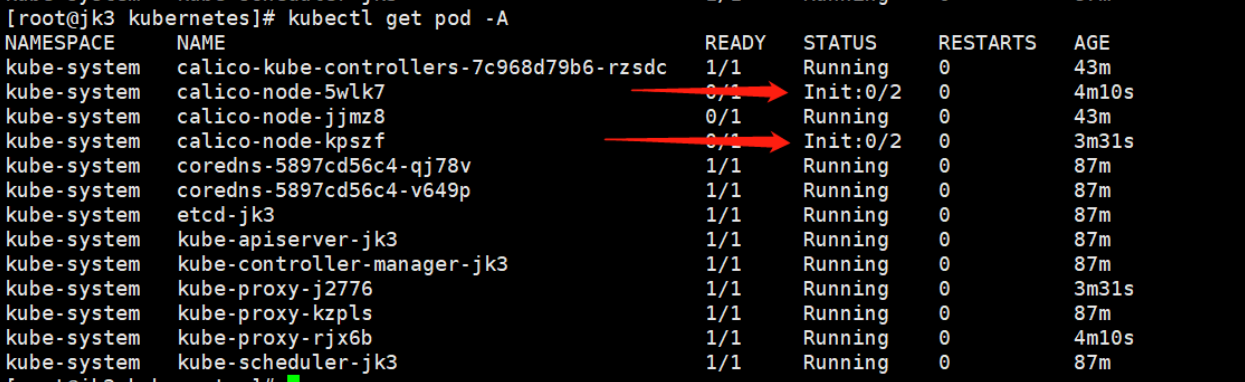

查看部署了那些应用

kubectl get pod -A

等待全部都变成Running(需要一会,请耐心等待)

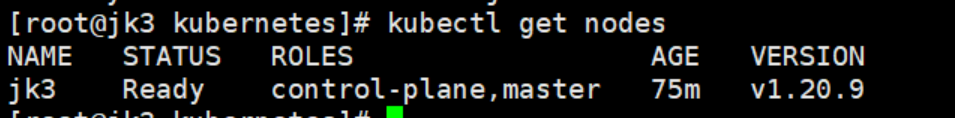

查看Master节点状态

kubectl get nodes

4、节点类型更改

注意:此令牌24小时内有效

将节点变为主节点(Master)

# 在节点主机下执行

kubeadm join cluster-endpoint:6443 --token 2cyxyc.lkl1zp63bssbrpna \

--discovery-token-ca-cert-hash sha256:c54722b5ad898e72e5662799a6303373a7a3e81c0916b5e7776e9d882bce706d \

--control-plane

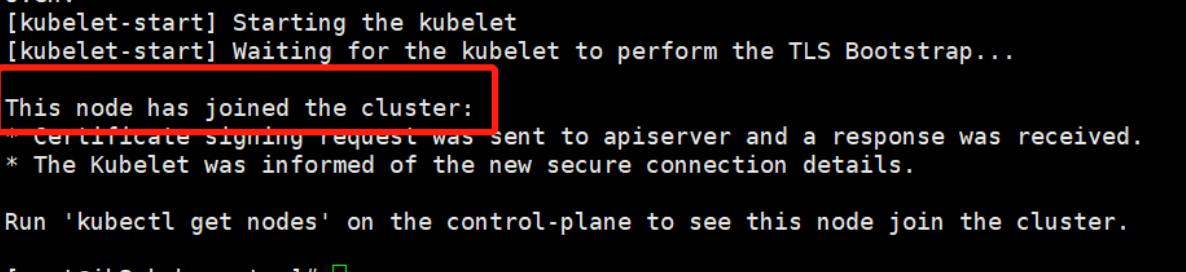

将节点变为从节点(Worker)

# 在该节点主机下执行

kubeadm join cluster-endpoint:6443 --token 2cyxyc.lkl1zp63bssbrpna \

--discovery-token-ca-cert-hash sha256:c54722b5ad898e72e5662799a6303373a7a3e81c0916b5e7776e9d882bce706d

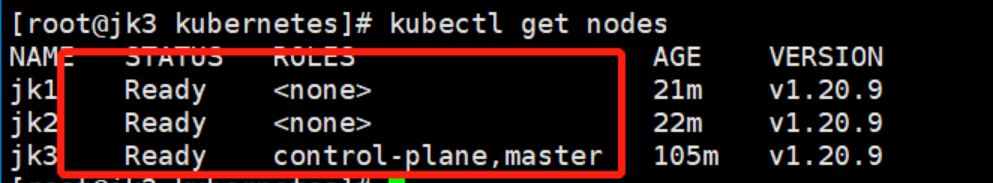

将另外两个节点更改为从节点:

执行之后返回如下,表示添加成功

在Master节点当中查看

kubectl get nodes

稍等片刻(可能会很久哈,视机器性能和网路而定),Worker节点正在初始化

kubectl get pod -A

再次查看节点状态:

至此,Kubernetes集群就搭建完成了

1. Getting a new token

Run the following on the master node:

kubeadm token create --print-join-command

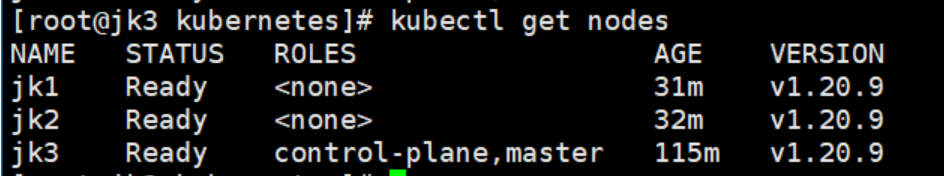

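5. Self-healing test

# Reboot a machine
reboot

# Check node status
kubectl get nodes

The node comes back on its own after booting, and the workloads on it recover automatically (give it a moment), so no manual start-up or checking is needed.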

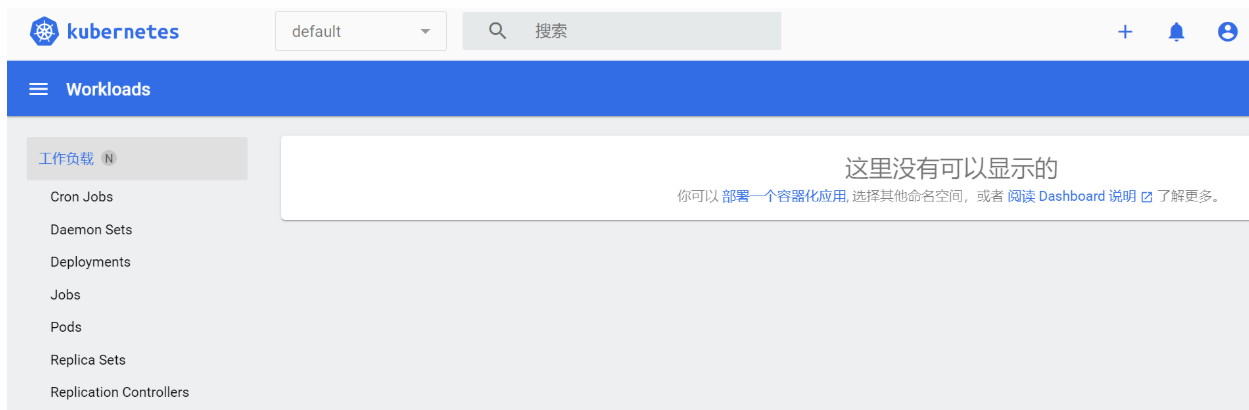

6. Adding the Dashboard (Master)

1. Deployment

The web UI officially provided by the Kubernetes project.

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

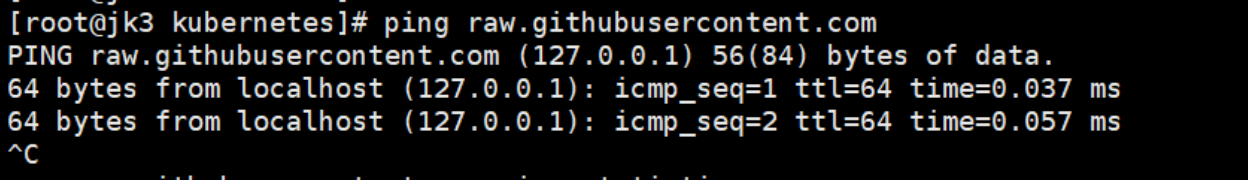

Error:

The connection to the server raw.githubusercontent.com was refused - did you specify the right host or port?

DNS resolves the name, but the host still cannot be reached.

Cause: the external site is not reachable from this network.

Fix:

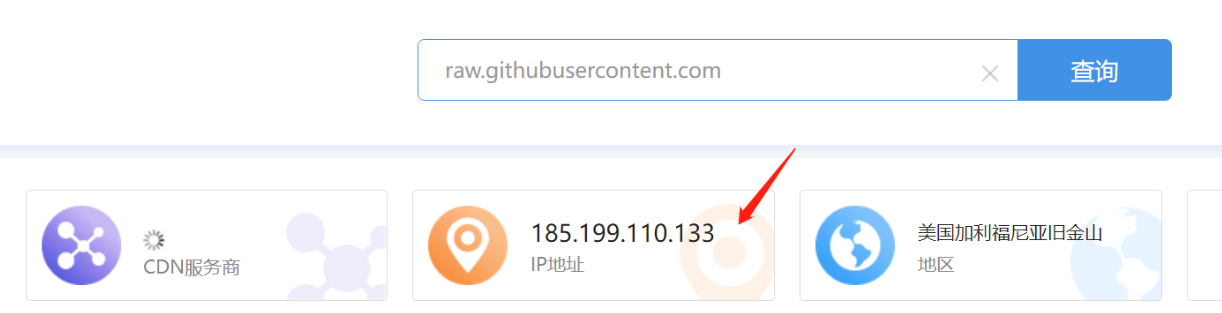

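1. Find the IP address behind the domain name

IP lookup sites: http://mip.chinaz.com/ and http://stool.chinaz.com/same

Open http://stool.chinaz.com/same, enter raw.githubusercontent.com, and note the IP address it returns.

IP found for the domain: 185.199.110.133

# Option 1: add the mapping by editing /etc/hosts in vim
vim /etc/hosts

# Append this line at the end of the file, then press Esc and type :wq to save and quit
185.199.110.133 raw.githubusercontent.com

# Option 2: append the line directly (use either option, not both)
echo "185.199.110.133 raw.githubusercontent.com" >> /etc/hosts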
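Appending with `>>` adds a duplicate line every time the command is repeated. A guarded variant is sketched below; `add_host_entry` is a hypothetical helper, and the optional third argument (the file to edit, defaulting to `/etc/hosts`) exists only so the function can be tried on a scratch file.

```shell
# Append "<ip> <name>" to a hosts file unless the name is already mapped there.
# Usage: add_host_entry <ip> <name> [hosts-file]
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # Look for the name as a whole field on any non-comment line.
  if awk -v n="$name" '
       !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
       END { exit !found }
     ' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Run as root, `add_host_entry 185.199.110.133 raw.githubusercontent.com` has the same effect as the `echo` above the first time and does nothing on later runs.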

# Deploy again

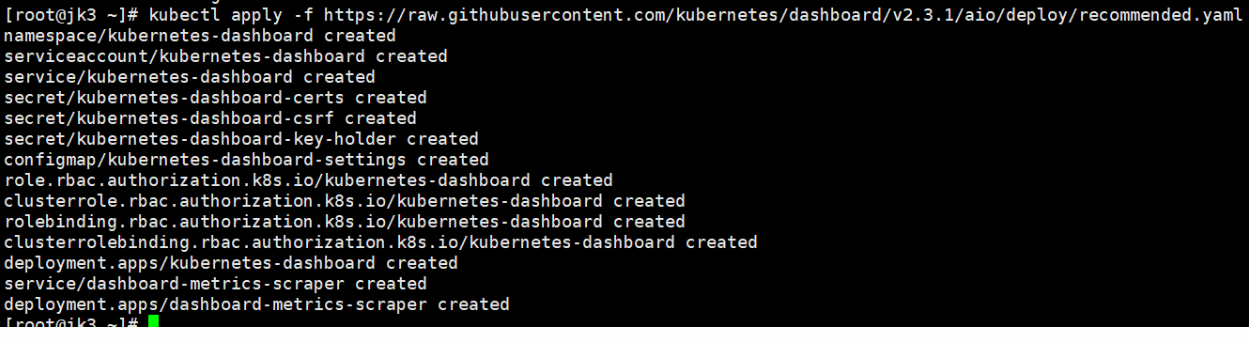

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

Output on success:

[root@jk3 ~]# kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

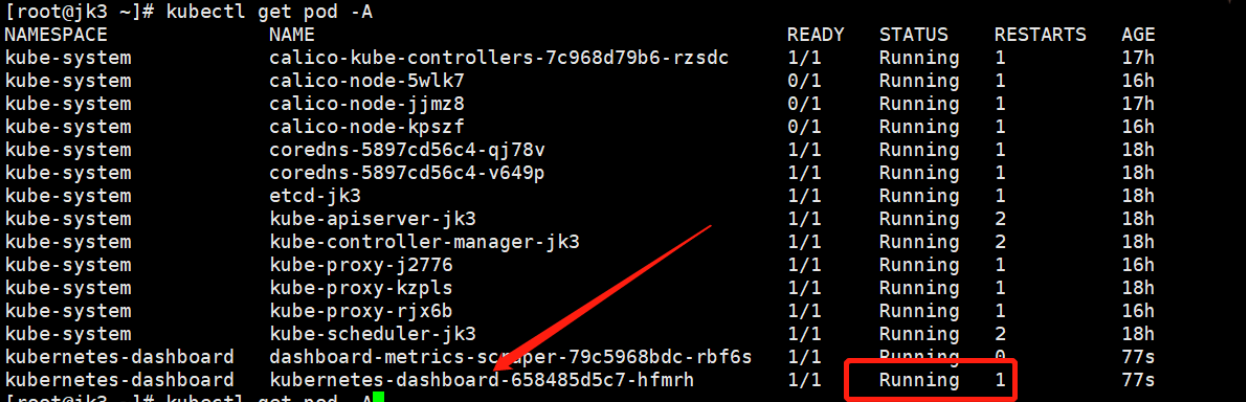

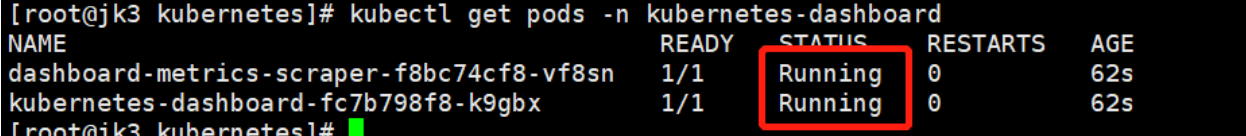

# Check that the resources were deployed (give it a moment); add -n kubernetes-dashboard to narrow the list
kubectl get pod -A

# Check the kubernetes-dashboard resources
kubectl -n kubernetes-dashboard get pods

2. Setting the access port

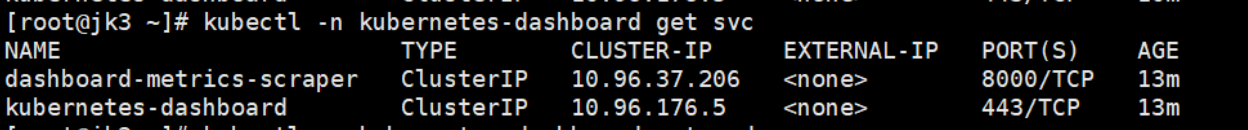

查看service

kubectl -n kubernetes-dashboard get svc

由于默认的service是 ClusterIP类型,为了能在外部访问,这里将其改为Nodeport方式。

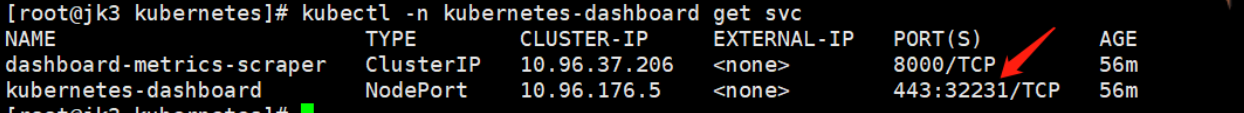

1、修改方式

目前我知道的有三种修改方式,以下三种可任选一种方式进行修改(推荐3)

1、编辑kubernetes-dashboard资源文件

# 编辑kubernetes-dashboard资源文件

kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

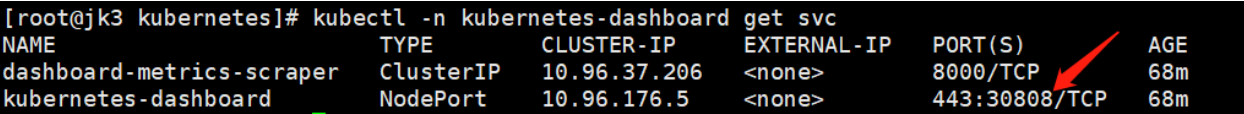

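Under ports, add nodePort: 30808 as the externally reachable port (any free value in the NodePort range; if omitted, one is assigned at random).

Change type: ClusterIP to type: NodePort.

spec:
  clusterIP: 10.96.176.5
  clusterIPs:
  - 10.96.176.5
  ports:
  - port: 443
    protocol: TCP
    targetPort: 8443
    nodePort: 30808
  selector:
    k8s-app: kubernetes-dashboard
  sessionAffinity: None
  type: NodePort

Without nodePort set, the port is assigned at random.

With nodePort set, the custom port is used.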

2. Apply a patch:

kubectl patch svc kubernetes-dashboard -n kubernetes-dashboard \
-p '{"spec":{"type":"NodePort","ports":[{"port":443,"targetPort":8443,"nodePort":30808}]}}'

Output:

service/kubernetes-dashboard patched

3. Download the YAML file and edit its Service section by hand (recommended):

# Download recommended.yaml into the current directory
wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

[root@jk3 kubernetes]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml
--2022-05-13 11:30:26-- https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml
Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.110.133
Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.110.133|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 7552 (7.4K) [text/plain]
Saving to: “recommended.yaml”

100%[=================================================================================================================================>] 7,552 --.-K/s in 0.009s

2022-05-13 11:30:27 (781 KB/s) - “recommended.yaml” saved [7552/7552]

Edit the Service section:

# Edit recommended.yaml
vim recommended.yaml

Under ports, add nodePort: 30808 as the externally reachable port (any free value in the NodePort range; if omitted, one is assigned at random).

Set type: NodePort (a Service without a type defaults to ClusterIP).

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30808
  selector:
    k8s-app: kubernetes-dashboard

# Apply the updated manifest
kubectl apply -f recommended.yaml

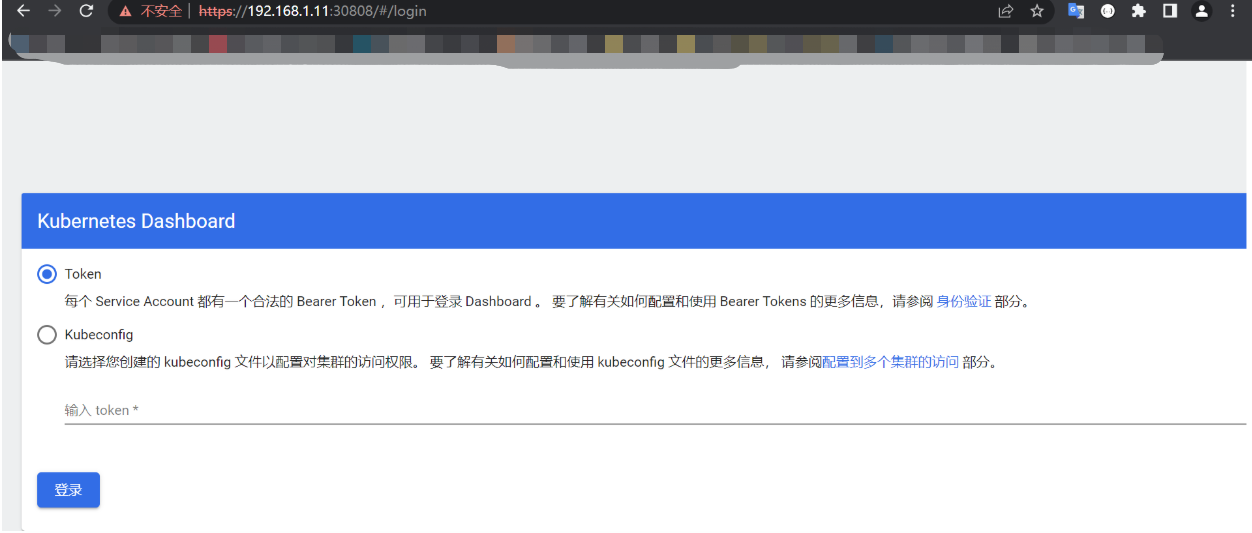

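3. Logging in to the Dashboard

Wait for the resources to be scheduled:

kubectl get pods -A -w

Wait until the Pods are Running.

Login URL: https://192.168.1.11:30808

Note: the Dashboard is reachable at the IP of any node in the cluster on this port (provided the machines can reach each other on the internal network).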

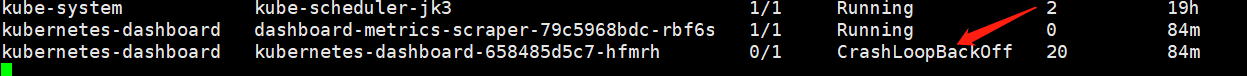

1. Fixing connection failures

If the address and port cannot be reached and the Dashboard Pod keeps restarting, look at its logs:

kubectl logs kubernetes-dashboard-658485d5c7-hfmrh -n kubernetes-dashboard

The log reads:

2022/05/13 03:18:15 Starting overwatch

2022/05/13 03:18:15 Using namespace: kubernetes-dashboard

2022/05/13 03:18:15 Using in-cluster config to connect to apiserver

2022/05/13 03:18:15 Using secret token for csrf signing

2022/05/13 03:18:15 Initializing csrf token from kubernetes-dashboard-csrf secret

panic: Get "https://10.96.0.1:443/api/v1/namespaces/kubernetes-dashboard/secrets/kubernetes-dashboard-csrf": dial tcp 10.96.0.1:443: i/o timeout

goroutine 1 [running]:

github.com/kubernetes/dashboard/src/app/backend/client/csrf.(*csrfTokenManager).init(0xc0003c0aa0)

/home/runner/work/dashboard/dashboard/src/app/backend/client/csrf/manager.go:41 +0x413

github.com/kubernetes/dashboard/src/app/backend/client/csrf.NewCsrfTokenManager(...)

/home/runner/work/dashboard/dashboard/src/app/backend/client/csrf/manager.go:66

github.com/kubernetes/dashboard/src/app/backend/client.(*clientManager).initCSRFKey(0xc0004a1400)

/home/runner/work/dashboard/dashboard/src/app/backend/client/manager.go:502 +0xc6

github.com/kubernetes/dashboard/src/app/backend/client.(*clientManager).init(0xc0004a1400)

/home/runner/work/dashboard/dashboard/src/app/backend/client/manager.go:470 +0x47

github.com/kubernetes/dashboard/src/app/backend/client.NewClientManager(...)

/home/runner/work/dashboard/dashboard/src/app/backend/client/manager.go:551

main.main()

/home/runner/work/dashboard/dashboard/src/app/backend/dashboard.go:95 +0x21c

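The log shows a timeout while contacting the API server:

panic: Get "https://10.96.0.1:443/api/v1/namespaces/kubernetes-dashboard/secrets/kubernetes-dashboard-csrf": dial tcp 10.96.0.1:443: i/o timeout

In a multi-node cluster, problems can appear when the Dashboard is scheduled onto a node other than the master. The Dashboard connects to the API server over HTTPS, and these notes attribute the resulting dial tcp 10.96.0.1:443: i/o timeout to a certificate problem; a timeout of this kind can also mean that the Pod network on that node cannot reach the Service IP.

Download recommended.yaml and change a few settings:

# Download recommended.yaml into the current directory
wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

Changes:

Add nodeName so that the Dashboard is scheduled onto the master node. jk3 is the master's node name.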

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      # Added: nodeName pins the Pod to the master node; jk3 is the master's node name (hostname)
      nodeName: jk3
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.3.1
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port

Comment out the following settings (the tolerations, in both places where they appear):

      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      #tolerations:
      #  - key: node-role.kubernetes.io/master
      #    effect: NoSchedule

      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      #tolerations:
      #  - key: node-role.kubernetes.io/master
      #    effect: NoSchedule
      volumes:

Apply the updated manifest:

kubectl apply -f recommended.yaml

Then check the Pods: kubectl get pods -n kubernetes-dashboard

Login URL: https://192.168.1.11:30808

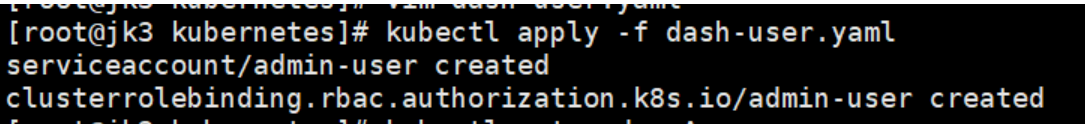

2. Creating a login account

# Prepare a YAML file
vim dash-user.yaml

# In vim, turn on paste mode first so that the pasted YAML is not re-indented
:set paste

# Restore the default afterwards
:set nopaste

# Contents of the file: the login account and its role binding
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

Apply the file:

kubectl apply -f dash-user.yaml

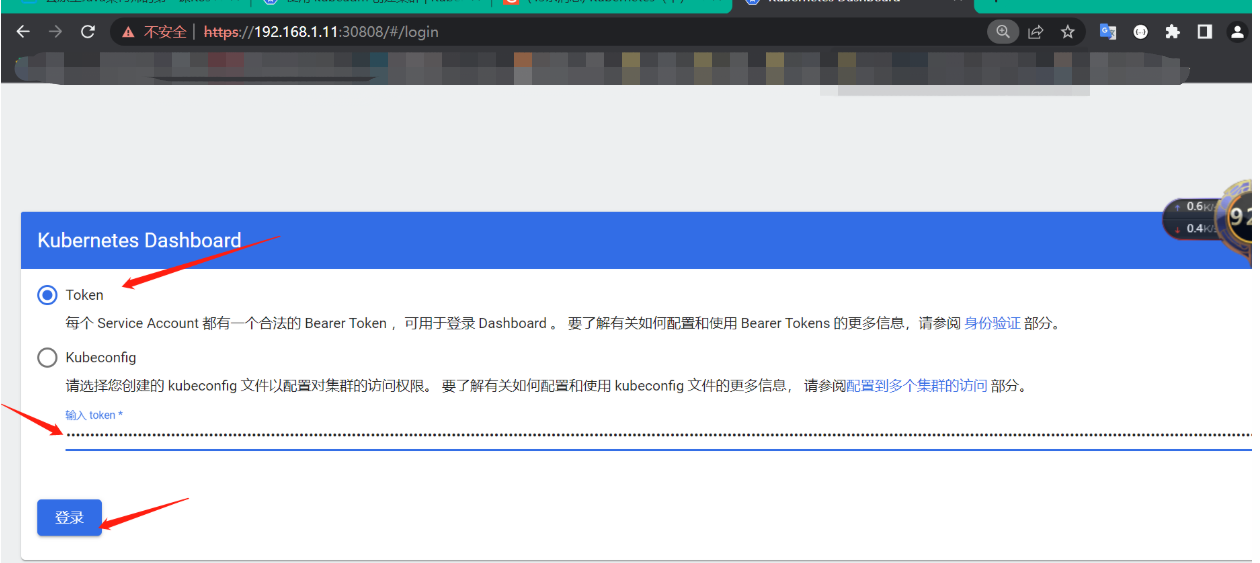

3. Logging in with a token

# Fetch the token

kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"

eyJhbGciOiJSUzI1NiIsImtpZCI6IkRFMUpVSF9oXzJfbUxhbzdCd2NrZ3dNQ3JmRHB1WUg3SVlIREIyLW00bTAifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLW1rZG1xIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJkMWQxZjgwNS0xMmEzLTQ3OGItYTM5YS05ZTAzNGIwZGM2ZjgiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.cCZqX0vwJWZIZ5WlEeVNTZtwHIO32wBHDkRs7Xsar98SDOximAl3X4wubsXv4mEd2nBsV6cTG_QDt-nusntby-9_NX8wnjKxwEEII--Ub1Gt3zH80eTyqhAj_ppYNSl23nsDcAnOF2wFnYYlwIhGiioHDa6uS7L9eKfNTXM5T1HRvKpmHyrsr-UqPu6oR7AsumYV1jgQuNIAU9zCGVjOLzkLF4777bXLWd1cUyc623stFTjbWBWH-s7T3lAWi1vNjCMsnuDXHj0vsBISfPjdpk6EdUo19u3RDc5sj06it47UUMBnDMD8RgNBJw9yvNiHvsaoE0cxdmoHJmBXdk7hyw
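The token is a JWT: three base64url-encoded parts separated by dots, the second of which carries the claims (namespace, service account name, and so on). To see which account a token belongs to before pasting it into the login page, the payload can be decoded locally. The sketch below assumes GNU coreutils `base64`; `jwt_payload` is a hypothetical helper and does not verify the signature.

```shell
# Print the decoded JSON payload (second field) of a JWT.
jwt_payload() {
  local payload
  # Take the second dot-separated field and map base64url characters back to base64.
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # Restore the '=' padding that base64url encoding strips.
  case $(( ${#payload} % 4 )) in
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac
  printf '%s' "$payload" | base64 -d
}
```

Passing the token printed above to `jwt_payload` shows its claims as JSON.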

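3. Kubernetes Core Concepts

1. Ways to create resources

- The command line

- YAML manifest files

2. Namespace

Namespaces isolate resources (by default they isolate resources only, not the network).

From the command line:

# Example: create a namespace (ns is short for namespace)
kubectl create ns hello

# Delete the hello namespace. Use with care: this deletes every resource deployed in it.
kubectl delete ns hello

# Or, if the namespace was created from a file, delete it through that file
kubectl delete -f xxx.yaml

As a YAML manifest:

apiVersion: v1
kind: Namespace
metadata:
  name: hello

# Apply the manifest
kubectl apply -f xxx.yaml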

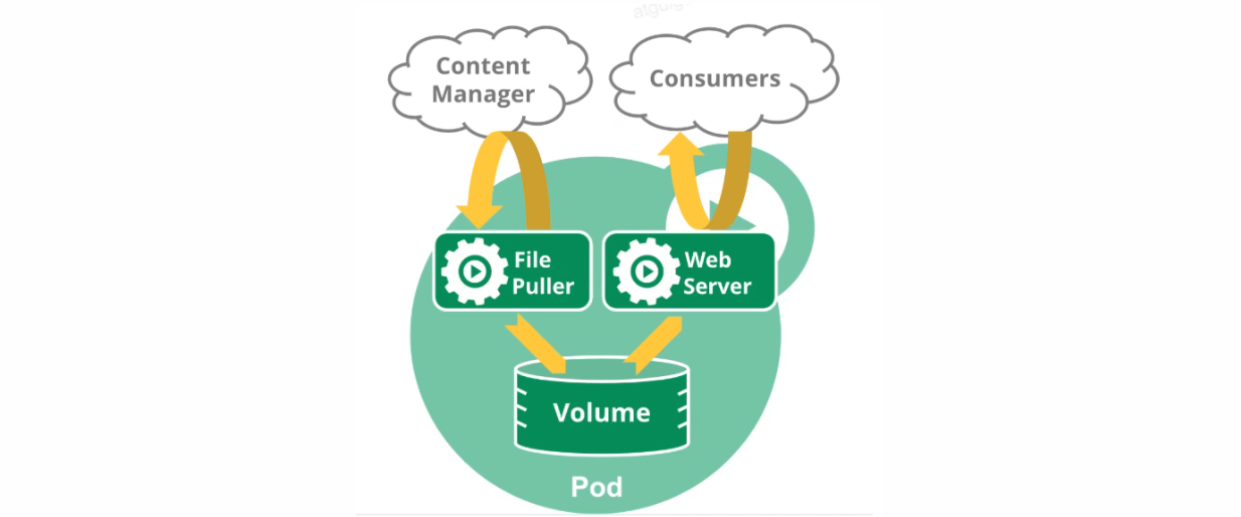

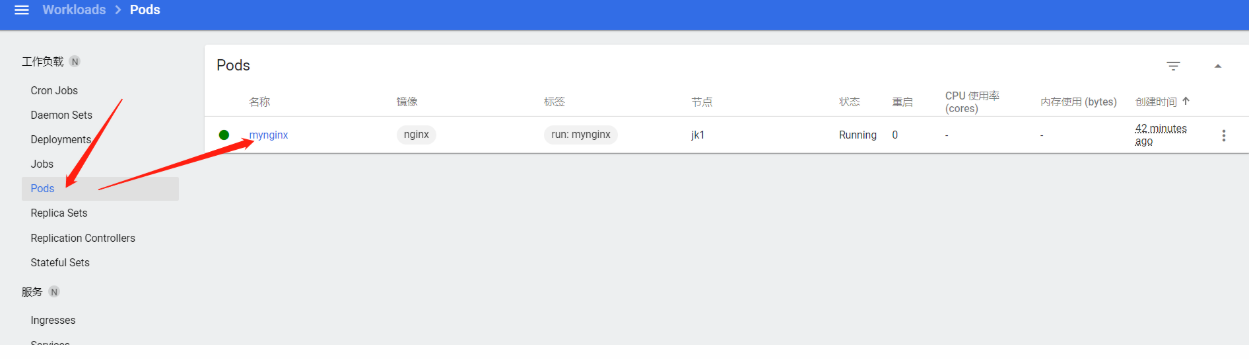

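3. Pod

A Pod is a group of running containers and the smallest deployable unit in Kubernetes.

# Create a Pod from the nginx image; the namespace defaults to default
kubectl run mynginx --image=nginx

# List Pods in the default namespace
kubectl get pod

# Show a Pod's logs
kubectl logs podName

# Show a Pod's description
kubectl describe pod mynginx

# Delete a Pod; -n selects the namespace (default if omitted)
kubectl delete pod mynginx -n default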

The Events section at the end of the description looks like this:

Events:

Type Reason Age From Message

Normal Scheduled 4m59s default-scheduler Successfully assigned default/mynginx to jk1

Normal Pulling 4m55s kubelet Pulling image "nginx"

Normal Pulled 4m40s kubelet Successfully pulled image "nginx" in 15.597824808s

Normal Created 4m39s kubelet Created container mynginx

Normal Started 4m39s kubelet Started container mynginx

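Reading the events:

- The scheduler assigned the mynginx Pod to node jk1.

- The kubelet started pulling the nginx image.

- The kubelet finished pulling the nginx image, which took about 15 s.

- The kubelet created the mynginx container.

- The kubelet started the mynginx container.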

# A Pod with a single container
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: mynginx
  name: mynginx
  namespace: default
spec:
  containers:
  - image: nginx
    name: mynginx

# A Pod with two containers
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: myapp
  name: myapp
  namespace: default
spec:
  containers:
  - image: nginx
    name: mynginx
  - image: tomcat:8.5.68
    name: tomcat

# Delete whatever was created from a YAML file
kubectl delete -f xxx.yaml

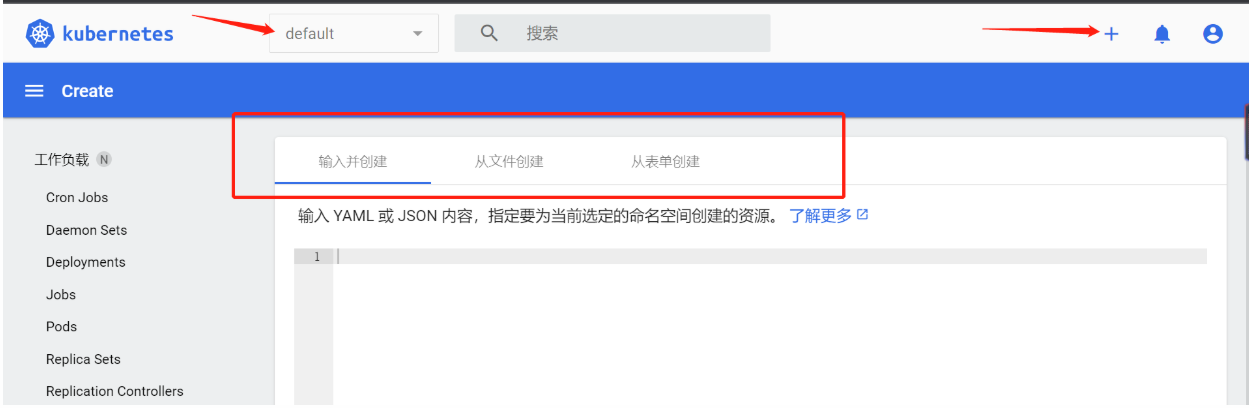

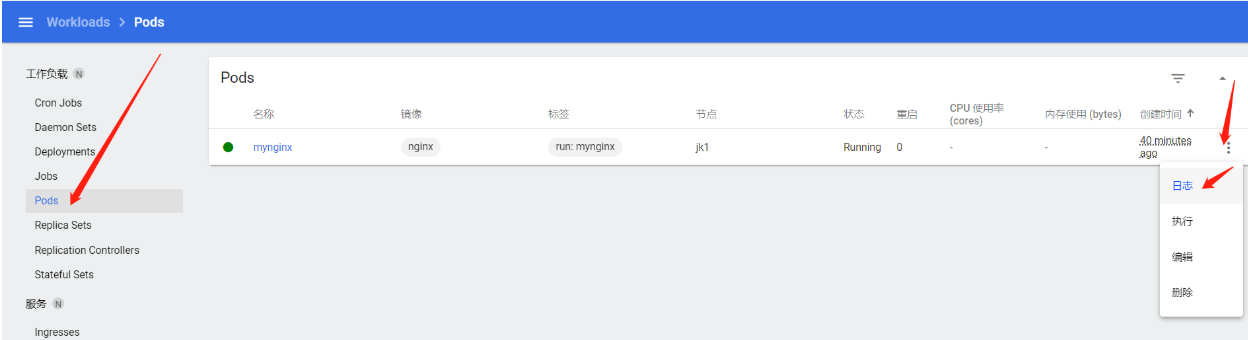

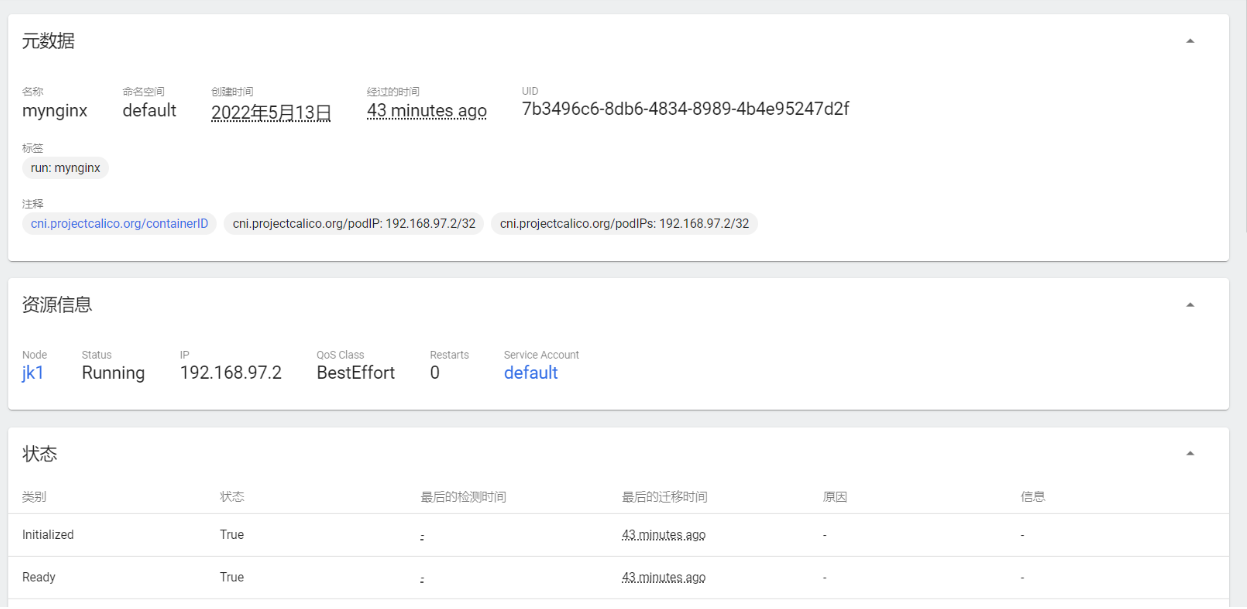

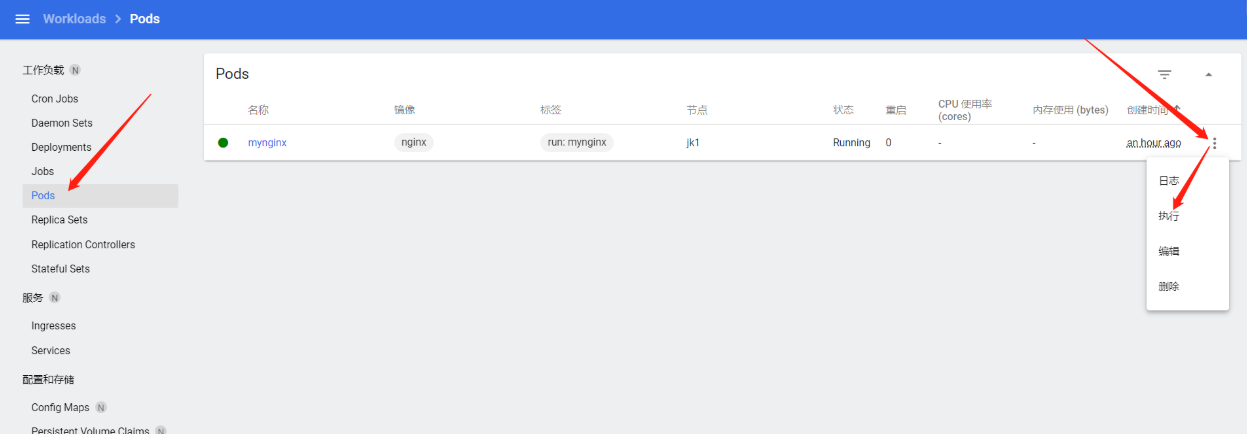

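1. Dashboard operations

1. Create a Pod

2. View Pod logs

3. View Pod details

2. Accessing a Pod

# Kubernetes assigns every Pod an IP address; the IP column shows it
kubectl get pod -owide

# Access test: use the Pod IP from the previous command (curl localhost works only from inside the Pod itself)
curl <Pod-IP>

- Each Pod runs on a worker node, and _every Pod has an IP address that is unique across the whole cluster_. This is a virtual IP managed inside the cluster, so it cannot be pinged from outside networks.

- A Pod can hold one or more containers, but _the ports opened by containers within one Pod must not collide_, because those containers share a network namespace and reach each other via localhost. The Pod is also reachable from its own node and from any other machine in the cluster (provided the internal network allows it).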

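1. Opening a shell in a Pod's container

# Similar to the docker command
kubectl exec -it mynginx -- /bin/bash

The Dashboard can also open a shell in a Pod's container.

3. Further notes

- Two containers that listen on the same port cannot run in one Pod (for example two nginx or two tomcat containers): the second one fails with a port conflict.

- When one of several containers in a Pod fails to start, Kubernetes keeps trying to restart it, and the Pod does not become fully ready in the meantime.

The description or the logs show the details:

# Description
kubectl describe pod podName

# Logs
kubectl logs podName

At this point the application is still not reachable from outside the cluster.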

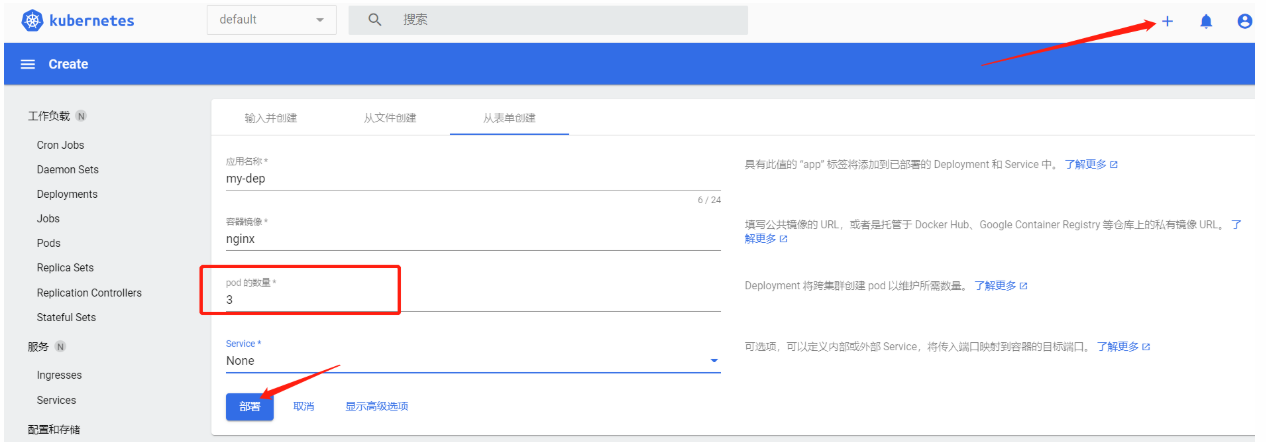

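4. Deployment

A Deployment manages Pods and gives them replicas, self-healing, scaling, and similar capabilities.

# Remove the Pod created earlier
kubectl delete pod mynginx -n default

# List Pods in the default namespace
kubectl get pod

# Compare the following two commands
# A bare Pod: once deleted, it is gone
kubectl run mynginx --image=nginx

# A Deployment: self-healing; if one of its Pods is deleted, Kubernetes starts a replacement automatically
kubectl create deployment mytomcat --image=tomcat:8.5.68

# List Deployments
kubectl get deploy

# Delete the Deployment for good (its Pods are removed with it)
kubectl delete deploy mytomcat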

1. Multiple replicas

From the command line

# Create a Deployment with three replicas: --replicas=3
kubectl create deployment my-dep --image=nginx --replicas=3

As YAML

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: my-dep
  name: my-dep
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-dep
  template:
    metadata:
      labels:
        app: my-dep
    spec:
      containers:
      - image: nginx
        name: mynginx

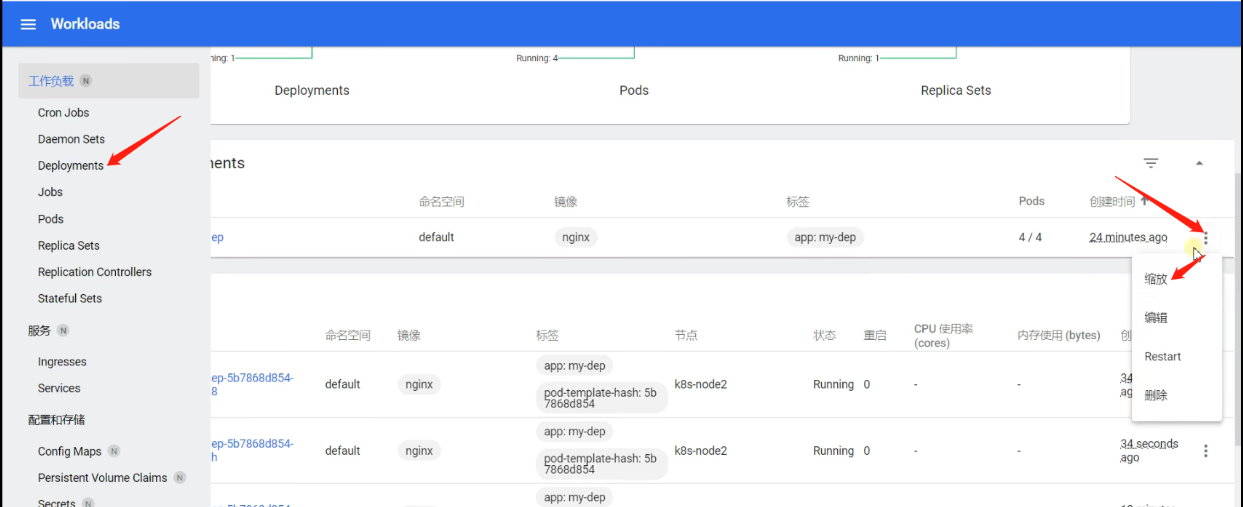

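Via the Dashboard UI

2. Scaling

From the command line

# Scale the my-dep Deployment up to 5 replicas
kubectl scale --replicas=5 deployment/my-dep -n default

# Scale the my-dep Deployment down to 2 replicas
kubectl scale --replicas=2 deployment/my-dep -n default

By editing the YAML

# Edit the my-dep resource and change the replicas value
kubectl edit deploy my-dep

Via the Dashboard UI

3. Self-healing and failover

- A machine goes down

- A Pod is deleted

- A container crashes

- ......

In each of these cases the Deployment restores the declared number of replicas.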
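Conceptually, keeping the replica count comes down to repeatedly comparing the desired number with the number actually running and acting on the difference. The sketch below illustrates only that comparison; `reconcile` and its output wording are made up for this example and are not the controller's real code.

```shell
# Print the action needed to move from the running replica count to the desired one.
# Usage: reconcile <desired> <running>
reconcile() {
  local desired="$1" running="$2"
  if [ "$running" -lt "$desired" ]; then
    # Too few Pods (for example after a crash or a deleted Pod): start replacements.
    echo "create $((desired - running))"
  elif [ "$running" -gt "$desired" ]; then
    # Too many Pods (for example after scaling down): remove the surplus.
    echo "delete $((running - desired))"
  else
    echo "in-sync"
  fi
}
```

With three replicas declared and one Pod lost, `reconcile 3 2` reports that one Pod has to be created.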

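4. Rolling updates

Similar to a canary (gray) release.

When a new version has to go out, the application can be updated online without any downtime.

The new version is started first; once it is up and serving correctly, requests are switched over to it and the old version's Pods are terminated.

Procedure:

From the command line

# The part before "=" is the container name inside the Deployment (nginx when created with kubectl create deployment --image=nginx, mynginx with the YAML above)
# --record stores the command in the rollout history
kubectl set image deployment/my-dep nginx=nginx:1.16.1 --record

# Watch the rollout status
kubectl rollout status deployment/my-dep

By editing the YAML

# Edit the my-dep resource and change the image
kubectl edit deployment/my-dep -n default

Via the Dashboard UI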

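5. Rolling back

# Show the rollout history
kubectl rollout history deployment/my-dep

# Show the details of one revision
kubectl rollout history deployment/my-dep --revision=2

# Roll back to the previous revision
kubectl rollout undo deployment/my-dep

# Roll back to a specific revision
kubectl rollout undo deployment/my-dep --to-revision=2

More:

Besides Deployment, Kubernetes has further resource types such as StatefulSet, DaemonSet, and Job. Together they are called workloads. Stateful applications are deployed with a StatefulSet, stateless applications with a Deployment.

https://kubernetes.io/zh/docs/concepts/workloads/controllers/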

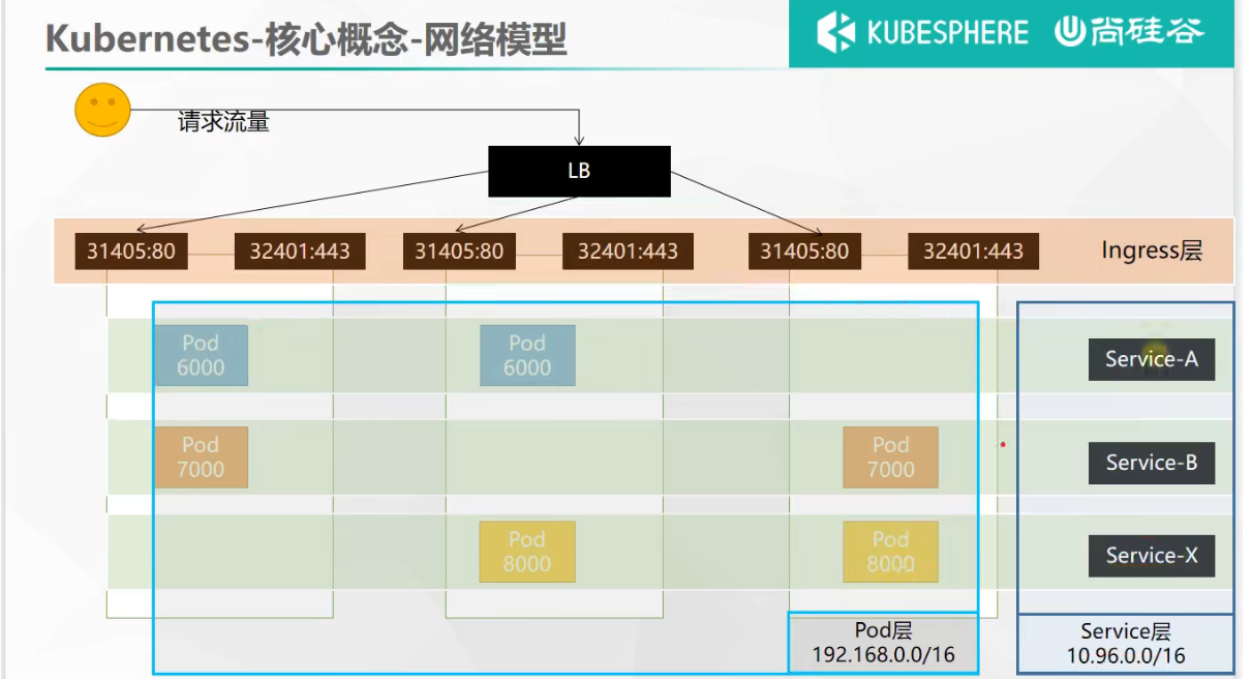

5、Service

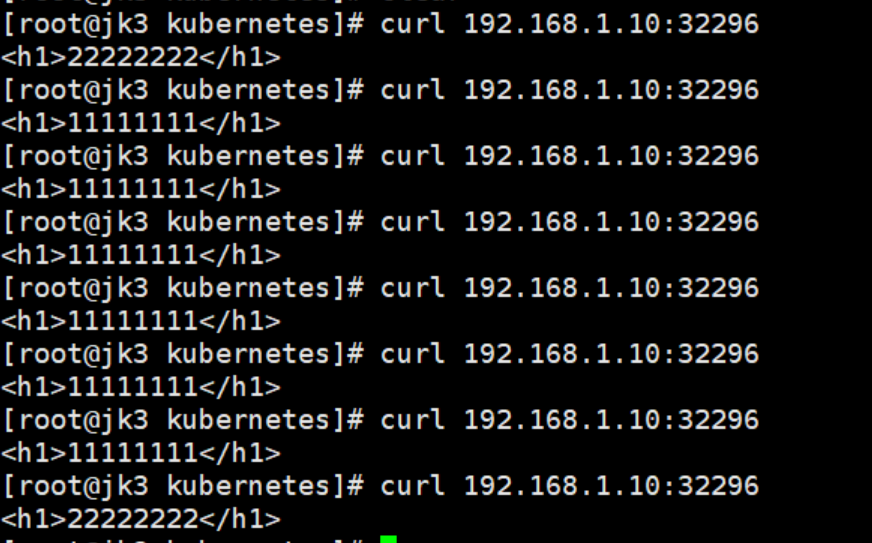

A Service is an abstract way to expose a group of Pods as a network service.

Service: service discovery and load balancing for Pods.

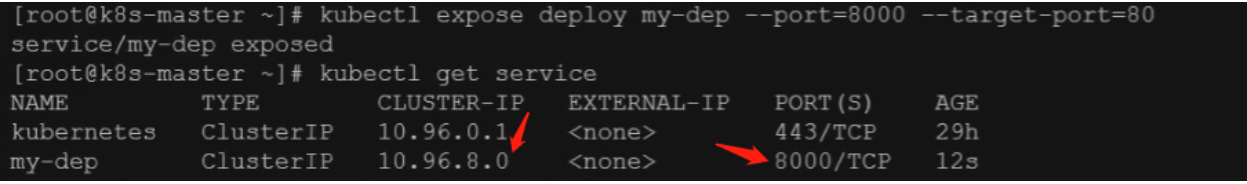

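From the command line

# Expose the Deployment: --port=8000 is the port the Service listens on, --target-port=80 is the container port it forwards to
# --type=ClusterIP (reachable inside the cluster only) or NodePort (also reachable from outside through a port on every node)
kubectl expose deployment my-dep --port=8000 --target-port=80 --type=ClusterIP

# Select Pods by label
kubectl get pod -l app=my-dep

# Show the Service IP and port (svc is short for service)
kubectl get svc

# Delete the Service
kubectl delete svc my-dep

Access it at the Service's cluster IP, for example http://10.96.8.0:8000:

curl 10.96.8.0:8000

The Service can also be reached by domain name. The name is resolved by the cluster DNS, so it works from inside Pods, not from a node's own shell.

Default naming rule: <service-name>.<namespace>.svc

Example:

curl my-dep.default.svc:8000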
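The naming rule can be wrapped in a small helper so that scripts running inside a Pod build the host name consistently. This is a sketch; `service_host` is a hypothetical function, and it produces only the short `<service-name>.<namespace>.svc` form described above.

```shell
# Print the in-cluster DNS name of a Service.
# Usage: service_host <service-name> [namespace]   (namespace defaults to "default")
service_host() {
  local name="$1" namespace="${2:-default}"
  printf '%s.%s.svc\n' "$name" "$namespace"
}
```

Inside a Pod this could be used as `curl "$(service_host my-dep):8000"`.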

Note: with type ClusterIP the Service is reachable only from within the cluster. To reach it from outside, change the type from ClusterIP to NodePort.

For the details, see the Dashboard access-port step earlier in this guide, where the same ClusterIP-to-NodePort change is made.

As a YAML manifest

apiVersion: v1
kind: Service
metadata:
  labels:
    app: my-dep
  name: my-dep
spec:
  selector:
    app: my-dep
  type: NodePort
  ports:
  - port: 8000
    protocol: TCP
    targetPort: 80

Whenever a Pod behind the Service goes offline or comes online, the Service notices and removes it from or adds it to its set of backends. This is called service discovery.

NodePort values lie in the range 30000-32767.
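When choosing a custom nodePort, a quick range check avoids a rejected manifest. The sketch below assumes the 30000-32767 range stated above; `valid_node_port` is a hypothetical helper.

```shell
# Return 0 when the argument is a whole number inside the NodePort range.
valid_node_port() {
  case "$1" in
    # Reject empty input and anything containing a non-digit.
    ''|*[!0-9]*) return 1 ;;
  esac
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}
```

The port 30808 used for the Dashboard passes this check, while a value such as 8080 does not.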

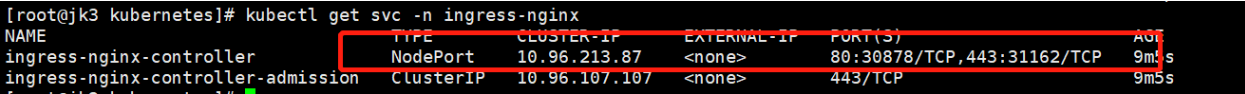

6、Ingress

类似于网关:Pod的访问统一入口

1、安装

# 下载deploy.yaml配置文件

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.48.1/deploy/static/provider/baremetal/deploy.yaml

# 任选其一

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.48.1/deploy/static/provider/cloud/deploy.yaml

# 修改镜像

mv deploy.yaml ingress-nginx.yaml

vim ingress-nginx.yaml

# 将image的值改为如下值:

registry.cn-hangzhou.aliyuncs.com/kubernetes-fan/ingress-nginx:v0.48.1

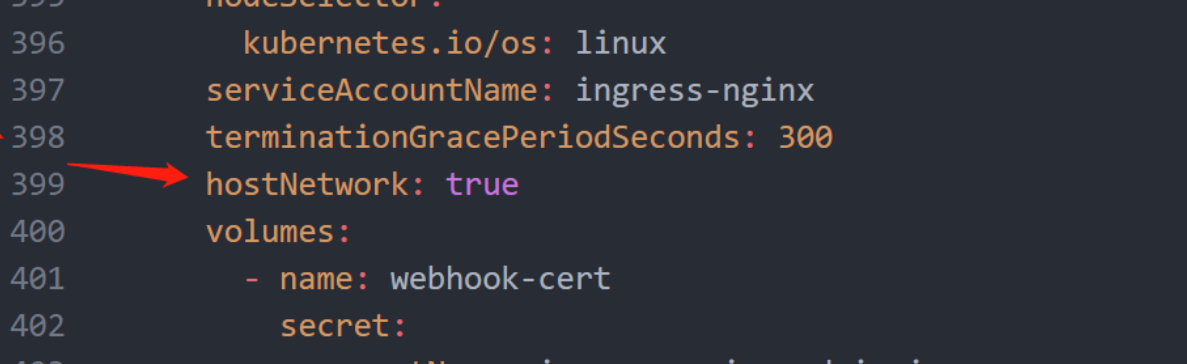

# 添加 hostNetwork: true

# 安装

kubectl apply -f ingress-nginx.yaml

# 检查安装的结果

kubectl get pod,svc -n ingress-nginx

# 最后别忘记把svc暴露的端口要放行

1、修改配置文件

一共需要修改2个地方的image信息,示例:

修改镜像

添加 hostNetwork: true

如果无法下载,使用以下文件即可:

apiVersion: v1

kind: Namespace

metadata:

name: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

---

# Source: ingress-nginx/templates/controller-serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

helm.sh/chart: ingress-nginx-3.34.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.48.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx

namespace: ingress-nginx

automountServiceAccountToken: true

---

# Source: ingress-nginx/templates/controller-configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:

labels:

helm.sh/chart: ingress-nginx-3.34.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.48.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx-controller

namespace: ingress-nginx

data:

---

# Source: ingress-nginx/templates/clusterrole.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

helm.sh/chart: ingress-nginx-3.34.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.48.1

app.kubernetes.io/managed-by: Helm

name: ingress-nginx

rules:

- apiGroups:

- ''

resources:

- configmaps

- endpoints

- nodes

- pods

- secrets

verbs:

- list

- watch

- apiGroups:

- ''

resources:

- nodes

verbs:

- get

- apiGroups:

- ''

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- extensions

- networking.k8s.io # k8s 1.14+

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- ''

resources:

- events

verbs:

- create

- patch

- apiGroups:

- extensions

- networking.k8s.io # k8s 1.14+

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io # k8s 1.14+

resources:

- ingressclasses

verbs:

- get

- list

- watch

---

# Source: ingress-nginx/templates/clusterrolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

helm.sh/chart: ingress-nginx-3.34.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.48.1

app.kubernetes.io/managed-by: Helm

name: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-role.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

labels:

helm.sh/chart: ingress-nginx-3.34.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.48.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx

namespace: ingress-nginx

rules:

- apiGroups:

- ''

resources:

- namespaces

verbs:

- get

- apiGroups:

- ''

resources:

- configmaps

- pods

- secrets

- endpoints

verbs:

- get

- list

- watch

- apiGroups:

- ''

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- extensions

- networking.k8s.io # k8s 1.14+

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- extensions

- networking.k8s.io # k8s 1.14+

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io # k8s 1.14+

resources:

- ingressclasses

verbs:

- get

- list

- watch

- apiGroups:

- ''

resources:

- configmaps

resourceNames:

- ingress-controller-leader-nginx

verbs:

- get

- update

- apiGroups:

- ''

resources:

- configmaps

verbs:

- create

- apiGroups:

- ''

resources:

- events

verbs:

- create

- patch

---

# Source: ingress-nginx/templates/controller-rolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

helm.sh/chart: ingress-nginx-3.34.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.48.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-service-webhook.yaml

apiVersion: v1

kind: Service

metadata:

labels:

helm.sh/chart: ingress-nginx-3.34.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.48.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx-controller-admission

namespace: ingress-nginx

spec:

type: ClusterIP

ports:

- name: https-webhook

port: 443

targetPort: webhook

selector:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/component: controller

---

# Source: ingress-nginx/templates/controller-service.yaml

apiVersion: v1

kind: Service

metadata:

annotations:

labels:

helm.sh/chart: ingress-nginx-3.34.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.48.1

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

type: NodePort

ipFamilyPolicy: PreferDualStack

ports:

- name: http

port: 80

protocol: TCP

targetPort: http

- name: https

port: 443

protocol: TCP

targetPort: https

selector:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/component: controller

---

# Source: ingress-nginx/templates/controller-deployment.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.34.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.48.1
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/component: controller
  revisionHistoryLimit: 10
  minReadySeconds: 0
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/component: controller
    spec:
      dnsPolicy: ClusterFirst
      containers:
        - name: controller
          image: registry.cn-hangzhou.aliyuncs.com/kubernetes-fan/ingress-nginx:v0.48.1
          imagePullPolicy: IfNotPresent
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
          args:
            - /nginx-ingress-controller
            - --election-id=ingress-controller-leader
            - --ingress-class=nginx
            - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
            - --validating-webhook=:8443
            - --validating-webhook-certificate=/usr/local/certificates/cert
            - --validating-webhook-key=/usr/local/certificates/key
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            runAsUser: 101
            allowPrivilegeEscalation: true
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: LD_PRELOAD
              value: /usr/local/lib/libmimalloc.so
          livenessProbe:
            failureThreshold: 5
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
            - name: webhook
              containerPort: 8443
              protocol: TCP
          volumeMounts:
            - name: webhook-cert
              mountPath: /usr/local/certificates/
              readOnly: true
          resources:
            requests:
              cpu: 100m
              memory: 90Mi
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      hostNetwork: true
      volumes:
        - name: webhook-cert
          secret:
            secretName: ingress-nginx-admission

---

# Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml
# before changing this value, check the required kubernetes version
# https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#prerequisites
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.34.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.48.1
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  name: ingress-nginx-admission
webhooks:
  - name: validate.nginx.ingress.kubernetes.io
    matchPolicy: Equivalent
    rules:
      - apiGroups:
          - networking.k8s.io
        apiVersions:
          - v1beta1
        operations:
          - CREATE
          - UPDATE
        resources:
          - ingresses
    failurePolicy: Fail
    sideEffects: None
    admissionReviewVersions:
      - v1
      - v1beta1
    clientConfig:
      service:
        namespace: ingress-nginx
        name: ingress-nginx-controller-admission
        path: /networking/v1beta1/ingresses

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ingress-nginx-admission
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.34.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.48.1
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.34.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.48.1
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
rules:
  - apiGroups:
      - admissionregistration.k8s.io
    resources:
      - validatingwebhookconfigurations
    verbs:
      - get
      - update
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.34.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.48.1
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: ingress-nginx-admission
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.34.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.48.1
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
rules:
  - apiGroups:
      - ''
    resources:
      - secrets
    verbs:
      - get
      - create
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ingress-nginx-admission
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.34.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.48.1
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-create
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.34.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.48.1
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
spec:
  template:
    metadata:
      name: ingress-nginx-admission-create
      labels:
        helm.sh/chart: ingress-nginx-3.34.0
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 0.48.1
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: create
          image: jettech/kube-webhook-certgen:v1.5.1
          imagePullPolicy: IfNotPresent
          args:
            - create
            - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc
            - --namespace=$(POD_NAMESPACE)
            - --secret-name=ingress-nginx-admission
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-patch
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.34.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.48.1
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
spec:
  template:
    metadata:
      name: ingress-nginx-admission-patch
      labels:
        helm.sh/chart: ingress-nginx-3.34.0
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 0.48.1
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: patch
          image: docker.io/jettech/kube-webhook-certgen:v1.5.1
          imagePullPolicy: IfNotPresent
          args:
            - patch
            - --webhook-name=ingress-nginx-admission
            - --namespace=$(POD_NAMESPACE)
            - --patch-mutating=false
            - --secret-name=ingress-nginx-admission
            - --patch-failure-policy=Fail
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000

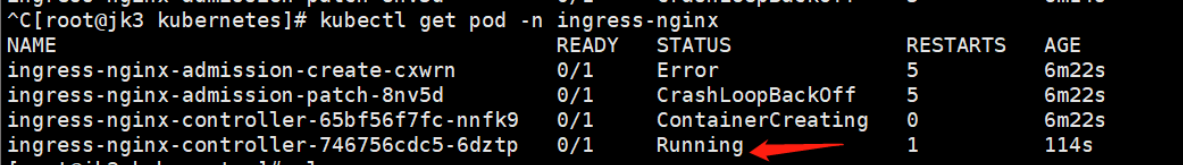

Check the installation result

# Watch the Pod status in real time
kubectl get pod -n ingress-nginx -w

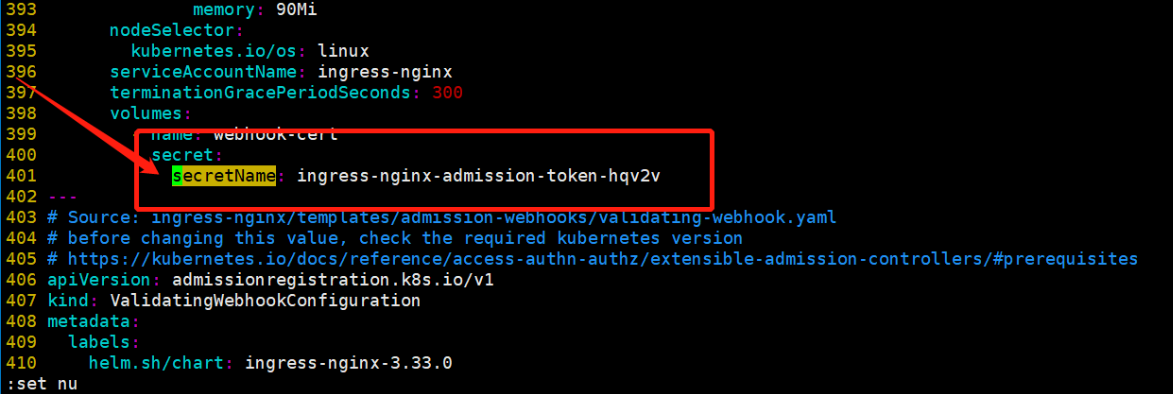

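1. Warning: secret "ingress-nginx-admission" not found

The ingress-nginx-controller Pod stays in the ContainerCreating state.

- Describe the Pod (replace the Pod name with your own):

kubectl describe pod -n ingress-nginx ingress-nginx-controller-746756cdc5-nqwhn

The events contain the following warning:

MountVolume.SetUp failed for volume "webhook-cert" : secret "ingress-nginx-admission" not found

- List the Secrets:

kubectl get secret -A | grep ingress

- In ingress-nginx.yaml, change secretName: ingress-nginx-admission to the Secret name found above (here ingress-nginx-admission-token-hqv2v), then press ESC and type :wq to save and exit.

Note: the ingress-nginx-admission Secret is created by the ingress-nginx-admission-create Job in the manifest above (see its --secret-name=ingress-nginx-admission argument), so it is worth checking first whether that Job has completed. The token Secret used in this workaround is a ServiceAccount token, not the webhook certificate, which is probably why the webhook call fails with a TLS error later in this section.

- Re-apply the manifest:

kubectl apply -f ingress-nginx.yaml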

2. Usage

Official site: https://kubernetes.github.io/ingress-nginx/

Under the hood, ingress-nginx is also Nginx.

Test environment

# Create and edit test.yaml
vim test.yaml

# Paste the following YAML into test.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-server
spec:
  replicas: 2
  selector:
    matchLabels:
      app: hello-server
  template:
    metadata:
      labels:
        app: hello-server
    spec:
      containers:
        - name: hello-server
          image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/hello-server
          ports:
            - containerPort: 9000
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-demo
  name: nginx-demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-demo
  template:
    metadata:
      labels:
        app: nginx-demo
    spec:
      containers:
        - image: nginx
          name: nginx
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-demo
  name: nginx-demo
spec:
  selector:
    app: nginx-demo
  ports:
    - port: 8000
      protocol: TCP
      targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: hello-server
  name: hello-server
spec:
  selector:
    app: hello-server
  ports:
    - port: 8000
      protocol: TCP
      targetPort: 9000

# 部署test.yaml配置文件资源

kubectl apply -f test.yaml

# 查看资源是否部署完毕

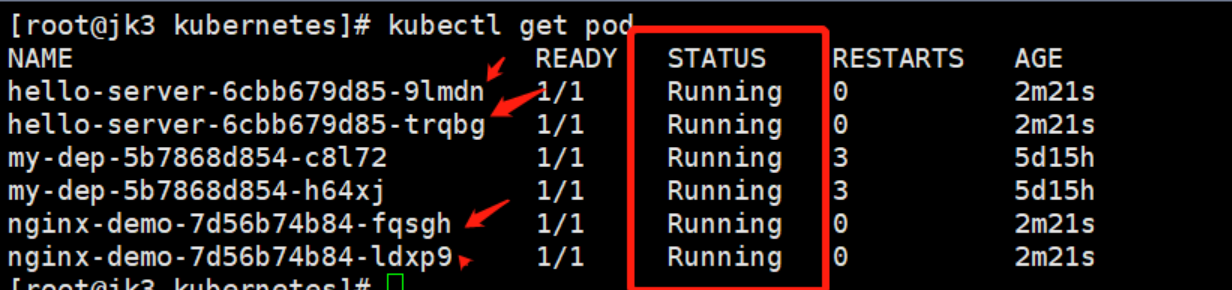

kubectl get pod

访问测试:

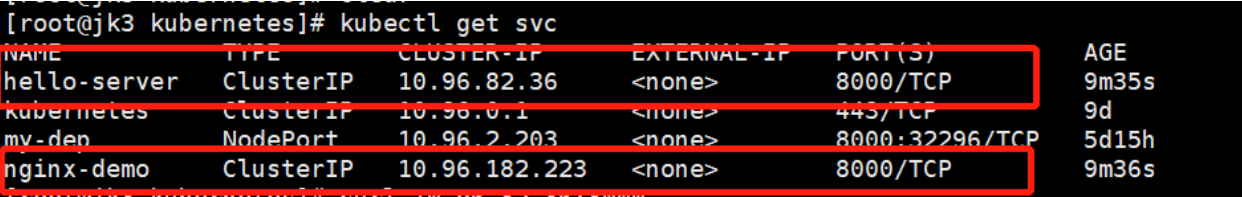

# 查询service的Ip和端口

kubectl get svc

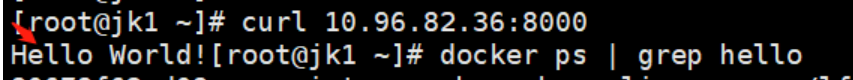

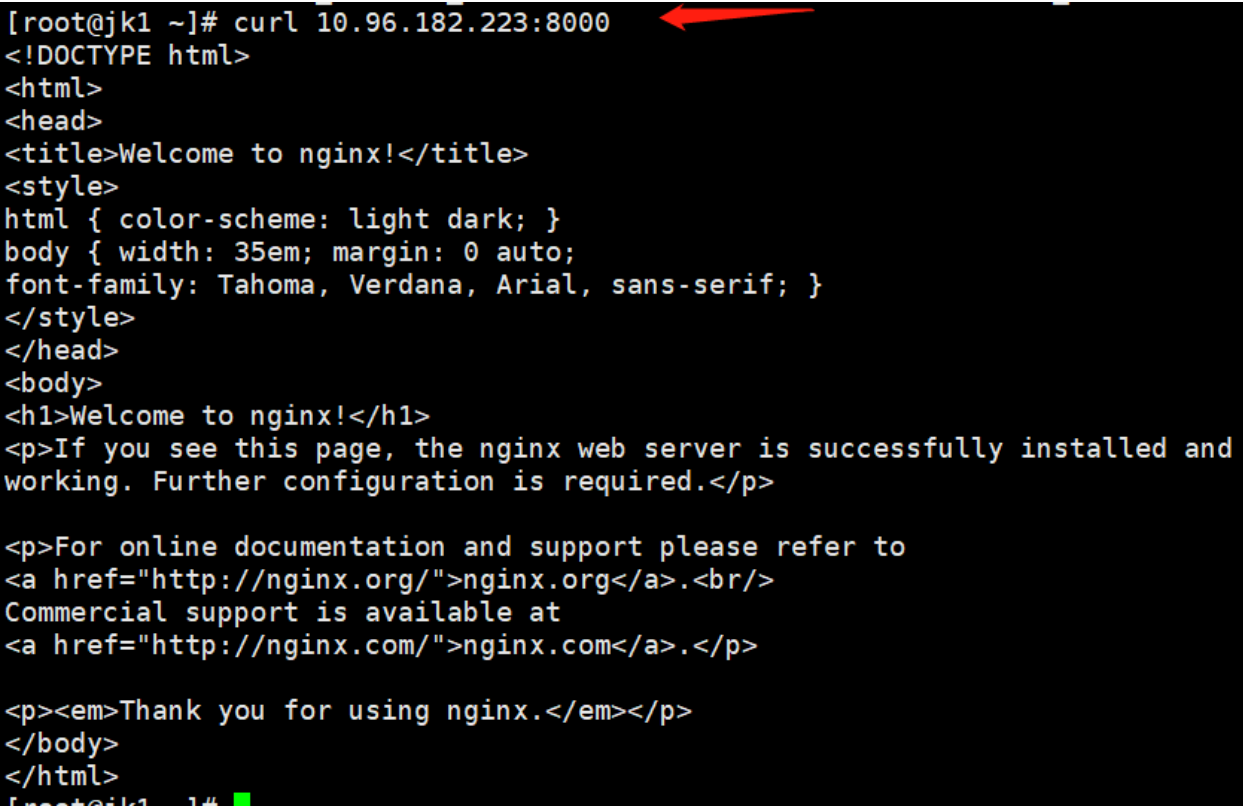

访问测试

curl 10.96.82.36:8000

curl 10.96.182.223:8000

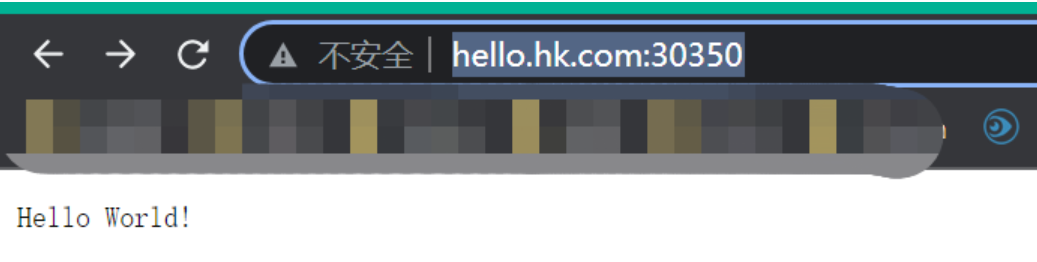

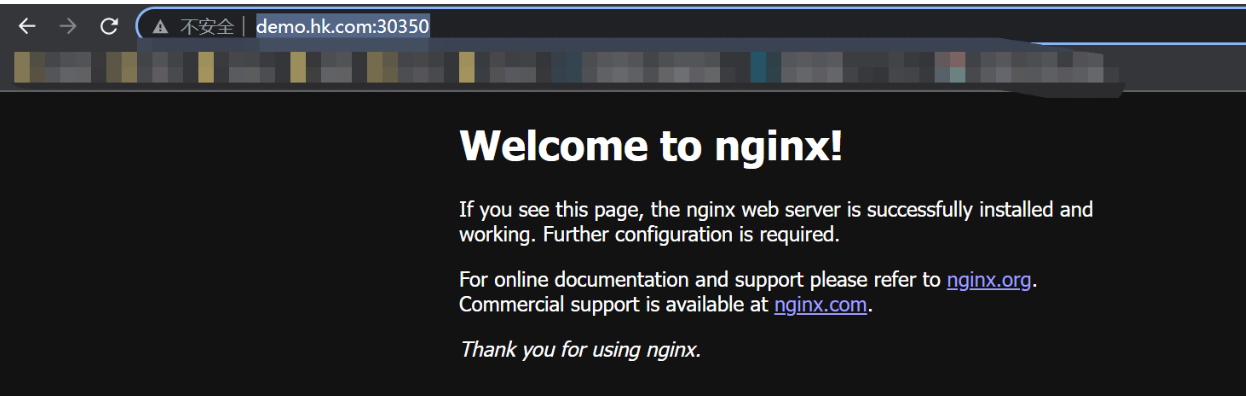

1. Access by domain name

# Create and edit the file
vim ingress-rule.yaml

# Paste the following YAML
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-host-bar
spec:
  ingressClassName: nginx
  rules:
    - host: "hello.hk.com"
      http:
        paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: hello-server
                port:
                  number: 8000
    - host: "demo.hk.com"
      http:
        paths:
          - pathType: Prefix
            path: "/" # with a path such as /nginx, the request is forwarded to the service below with the path unchanged; the service must be able to handle that path, otherwise it returns 404
            backend:
              service:
                name: nginx-demo # for a backend such as a Java service, path rewriting can strip the /nginx prefix
                port:
                  number: 8000

# Apply the resource
kubectl apply -f ingress-rule.yaml

The apply fails with the following error:

Error from server (InternalError): error when creating "ingress-rule.yaml": Internal error occurred: failed calling webhook "validate.nginx.ingress.kubernetes.io": Post "https://ingress-nginx-controller-admission.ingress-nginx.svc:443/networking/v1beta1/ingresses?timeout=10s": remote error: tls: internal error

# List the validating webhooks
kubectl get validatingwebhookconfigurations

# Delete ingress-nginx-admission (this turns off admission validation of Ingress objects; the resource is cluster-scoped, so no namespace flag is needed)
kubectl delete validatingwebhookconfiguration ingress-nginx-admission

# Re-apply the resource
kubectl apply -f ingress-rule.yaml

# List the Ingress rules in the cluster
kubectl get ingress

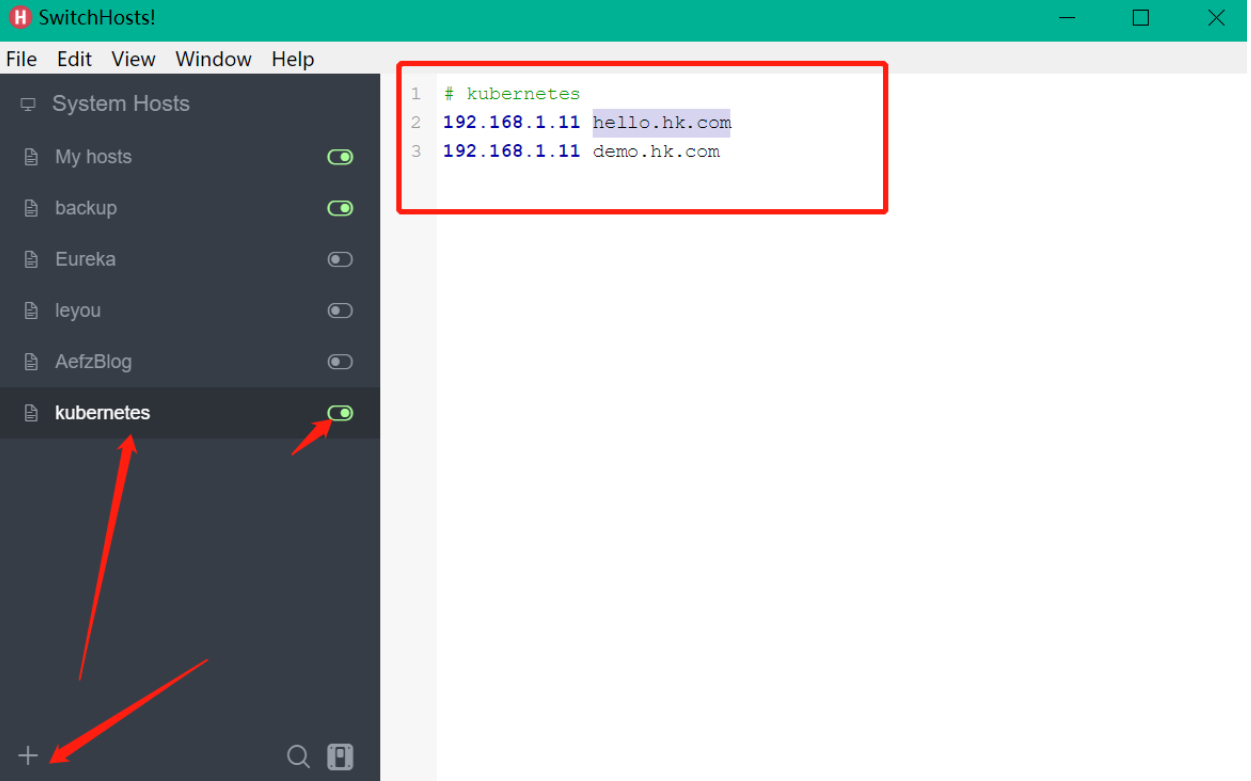
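The host-based routing that the ingress-host-bar rules describe can be sketched in a few lines of Python. This is only an illustrative model, not the controller's implementation: the `RULES` table, `prefix_matches`, and `route` are hypothetical names introduced here, and the sketch assumes Prefix paths are compared element by element, with the longest matching prefix winning.

```python
from typing import Optional, Tuple

# Rules copied from ingress-host-bar: (host, path prefix, service name, service port).
RULES = [
    ("hello.hk.com", "/", "hello-server", 8000),
    ("demo.hk.com", "/", "nginx-demo", 8000),
]


def prefix_matches(rule_path: str, request_path: str) -> bool:
    """Prefix pathType: compare path elements split on '/', not raw characters."""
    rule_parts = [part for part in rule_path.split("/") if part]
    request_parts = [part for part in request_path.split("/") if part]
    return request_parts[: len(rule_parts)] == rule_parts


def route(host: str, path: str) -> Optional[Tuple[str, int]]:
    """Return (service, port) for the longest matching prefix, or None."""
    candidates = [
        (len(rule_path), service, port)
        for rule_host, rule_path, service, port in RULES
        if rule_host == host and prefix_matches(rule_path, path)
    ]
    if not candidates:
        return None  # no rule matched this host and path
    _, service, port = max(candidates)
    return service, port


print(route("hello.hk.com", "/"))
print(route("demo.hk.com", "/index.html"))
print(route("other.hk.com", "/"))
```

A host that appears in no rule yields `None` here; in a real cluster such a request would fall through to the controller's default backend.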

修改本地的Host文件:

软件安装: https://aerfazhe.lanzouw.com/iaKzn058zx1i

# kubernetes

192.168.1.11 hello.hk.com

192.168.1.11 demo.hk.com

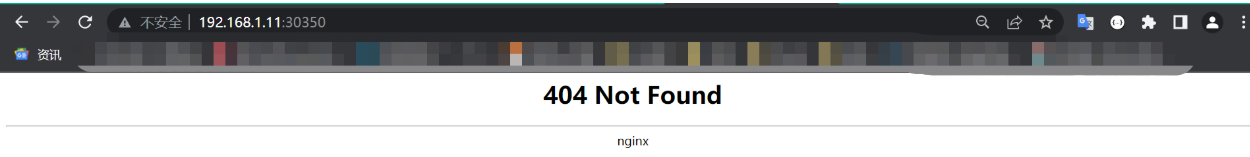

访问地址:

2. Path rewriting

Goal: a request to http://demo.hk.com:30350/nginx should reach the backend as if it had been sent to http://demo.hk.com:30350/

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2
  name: ingress-host-bar
spec:
  ingressClassName: nginx
  rules:
    - host: "hello.hk.com"
      http:
        paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: hello-server
                port:
                  number: 8000
    - host: "demo.hk.com"
      http:
        paths:
          - pathType: Prefix # prefix match
            path: "/nginx(/|$)(.*)" # the second capture group (everything after /nginx) is what rewrite-target /$2 forwards to the service below; the service must be able to handle that path, otherwise it returns 404
            backend:
              service:
                name: nginx-demo # the /nginx prefix is stripped before the request reaches this service
                port:
                  number: 8000

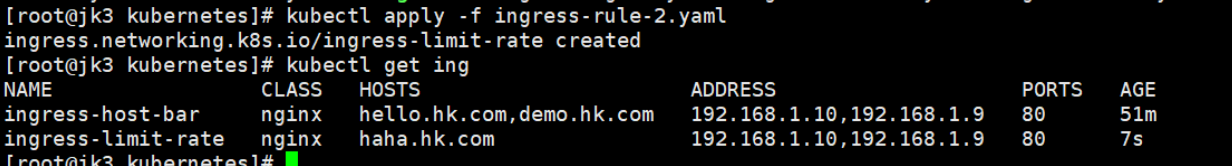
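To see what the `rewrite-target: /$2` annotation does with the path pattern `/nginx(/|$)(.*)`, the same regular expression can be tried out in Python. This is a sketch only: `rewrite` is a hypothetical helper, and the case-insensitive flag reflects my understanding of how ingress-nginx matches regex paths rather than something stated in this article.

```python
import re
from typing import Optional

# Same pattern as the Ingress path; group 2 is everything after "/nginx" or "/nginx/".
PATH_PATTERN = re.compile(r"^/nginx(/|$)(.*)", re.IGNORECASE)


def rewrite(path: str) -> Optional[str]:
    """Apply rewrite-target '/$2' to a path matched by '/nginx(/|$)(.*)'."""
    match = PATH_PATTERN.match(path)
    if match is None:
        return None  # the rule does not apply to this path
    return "/" + match.group(2)


for original in ["/nginx", "/nginx/", "/nginx/index.html", "/nginxfoo"]:
    print(original, "->", rewrite(original))
```

`/nginx` and `/nginx/` both become `/`, `/nginx/index.html` becomes `/index.html`, and `/nginxfoo` does not match because `/nginx` must be followed by a slash or the end of the path.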

3. Rate limiting

vim ingress-rule-2.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-limit-rate
  annotations:
    nginx.ingress.kubernetes.io/limit-rps: "1"
spec:
  ingressClassName: nginx
  rules:
    - host: "haha.hk.com"
      http:
        paths:
          - pathType: Exact # exact match
            path: "/"
            backend:
              service:
                name: nginx-demo
                port:
                  number: 8000

kubectl apply -f ingress-rule-2.yaml

Add haha.hk.com to the hosts file as well, then refresh the page rapidly: once the limit is exceeded, ingress-nginx answers with 503 Service Temporarily Unavailable.
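The effect of `limit-rps: "1"` can be approximated with a small leaky-bucket simulation. This is a simplified model written for illustration, not the nginx source: `simulate` is a hypothetical function, and it assumes the burst size is the limit multiplied by 5 (the default burst multiplier in ingress-nginx) and that rejected requests receive status 503.

```python
def simulate(arrival_times, rate_per_second=1.0, burst_multiplier=5):
    """Return an HTTP status (200 or 503) for each request arrival time in seconds."""
    burst = rate_per_second * burst_multiplier
    excess = 0.0       # requests currently counted above the sustained rate
    last_time = None   # arrival time of the last accepted request
    statuses = []
    for now in arrival_times:
        if last_time is None:
            candidate = 0.0  # the first request always passes
        else:
            drained = rate_per_second * (now - last_time)
            candidate = max(excess - drained + 1.0, 0.0)
        if candidate > burst:
            statuses.append(503)  # rejected; the bucket state is left unchanged
        else:
            excess, last_time = candidate, now
            statuses.append(200)
    return statuses


# Ten requests arriving at the same instant, like a burst of rapid refreshes.
print(simulate([0.0] * 10))
# One request per second stays within the limit.
print(simulate([0.0, 1.0, 2.0, 3.0]))
```

In this model the burst allowance lets the first few simultaneous requests through before 503 responses start, which is why a single slow refresh never triggers the limit but holding down the refresh key does.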

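Network model

7. Storage abstraction

1. Environment preparation

1. All nodes

# Install on every machine
yum install -y nfs-utils

2. Master node

# On the NFS server (the master node)
echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports
mkdir -p /nfs/data
systemctl enable rpcbind --now
systemctl enable nfs-server --now

# Make the export configuration take effect
exportfs -r

3. Worker nodes

# List the directories exported by the NFS server (use the master node's IP)
showmount -e 192.168.1.11

# Create the local mount point /nfs/data for the shared directory
mkdir -p /nfs/data

# Mount the share
mount -t nfs 192.168.1.11:/nfs/data /nfs/data

# Write a test file
echo "hello nfs server" > /nfs/data/test.txt

4. Mounting data the native way

# Create the directory to be mounted
mkdir -p /nfs/data/nginx-pv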

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-pv-demo
  name: nginx-pv-demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-pv-demo
  template:
    metadata:
      labels:
        app: nginx-pv-demo
    spec:
      containers:
        - image: nginx
          name: nginx
          volumeMounts:
            - name: html
              mountPath: /usr/share/nginx/html
      volumes:
        - name: html
          nfs:
            server: 192.168.1.11
            path: /nfs/data/nginx-pv

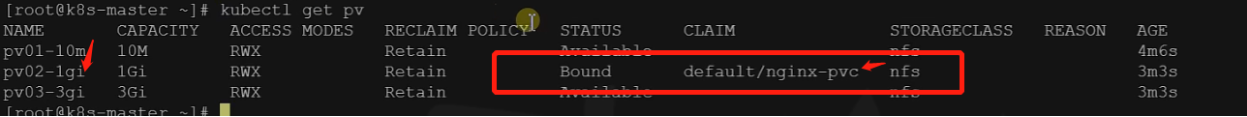

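2. PV & PVC

PV: Persistent Volume, which stores the data an application needs to persist at a specified location.

PVC: Persistent Volume Claim, which declares the specification of the persistent volume the application needs.

1. Create the PV pool

Static provisioning

# On the NFS server (the master node)
mkdir -p /nfs/data/01
mkdir -p /nfs/data/02
mkdir -p /nfs/data/03

Create the PVs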

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv01-10m
spec:
  capacity:
    storage: 10M
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/01
    server: 192.168.1.11
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv02-1gi
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/02
    server: 192.168.1.11
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv03-3gi
spec:
  capacity:
    storage: 3Gi
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/03
    server: 192.168.1.11

2. Creating and binding a PVC

Create the PVC

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 200Mi
  storageClassName: nfs
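Which of the three PVs this claim ends up bound to follows from the requested size. The sketch below models the selection under the assumption that the binder picks the smallest PV whose storage class and access modes match and whose capacity covers the request; `to_bytes` and `pick_volume` are hypothetical helpers written for this illustration, not Kubernetes APIs.

```python
# Binary (Ki, Mi, ...) and decimal (k, M, ...) quantity suffixes.
SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
}


def to_bytes(quantity: str) -> int:
    """Convert a quantity such as '200Mi' or '10M' to a number of bytes."""
    # Try two-letter suffixes first so that 'Mi' is not mistaken for 'M'.
    for suffix in sorted(SUFFIXES, key=len, reverse=True):
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * SUFFIXES[suffix]
    return int(quantity)


# The three PVs and the claim from this section.
PVS = [
    {"name": "pv01-10m", "storage": "10M", "class": "nfs", "modes": {"ReadWriteMany"}},
    {"name": "pv02-1gi", "storage": "1Gi", "class": "nfs", "modes": {"ReadWriteMany"}},
    {"name": "pv03-3gi", "storage": "3Gi", "class": "nfs", "modes": {"ReadWriteMany"}},
]
CLAIM = {"storage": "200Mi", "class": "nfs", "modes": {"ReadWriteMany"}}


def pick_volume(claim, volumes):
    """Return the name of the smallest volume that satisfies the claim, or None."""
    needed = to_bytes(claim["storage"])
    fits = [
        pv for pv in volumes
        if pv["class"] == claim["class"]
        and claim["modes"] <= pv["modes"]
        and to_bytes(pv["storage"]) >= needed
    ]
    if not fits:
        return None
    return min(fits, key=lambda pv: to_bytes(pv["storage"]))["name"]


print(pick_volume(CLAIM, PVS))
```

pv01-10m is too small for a 200Mi request (10M is 10,000,000 bytes, while 200Mi is 209,715,200 bytes), so under this model the claim lands on pv02-1gi and pv03-3gi stays available.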

Create a Deployment whose Pods use the PVC

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-deploy-pvc
  name: nginx-deploy-pvc
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-deploy-pvc
  template:
    metadata:
      labels:
        app: nginx-deploy-pvc
    spec:
      containers:
        - image: nginx
          name: nginx
          volumeMounts:
            - name: html
              mountPath: /usr/share/nginx/html
      volumes:
        - name: html
          persistentVolumeClaim:
            claimName: nginx-pvc

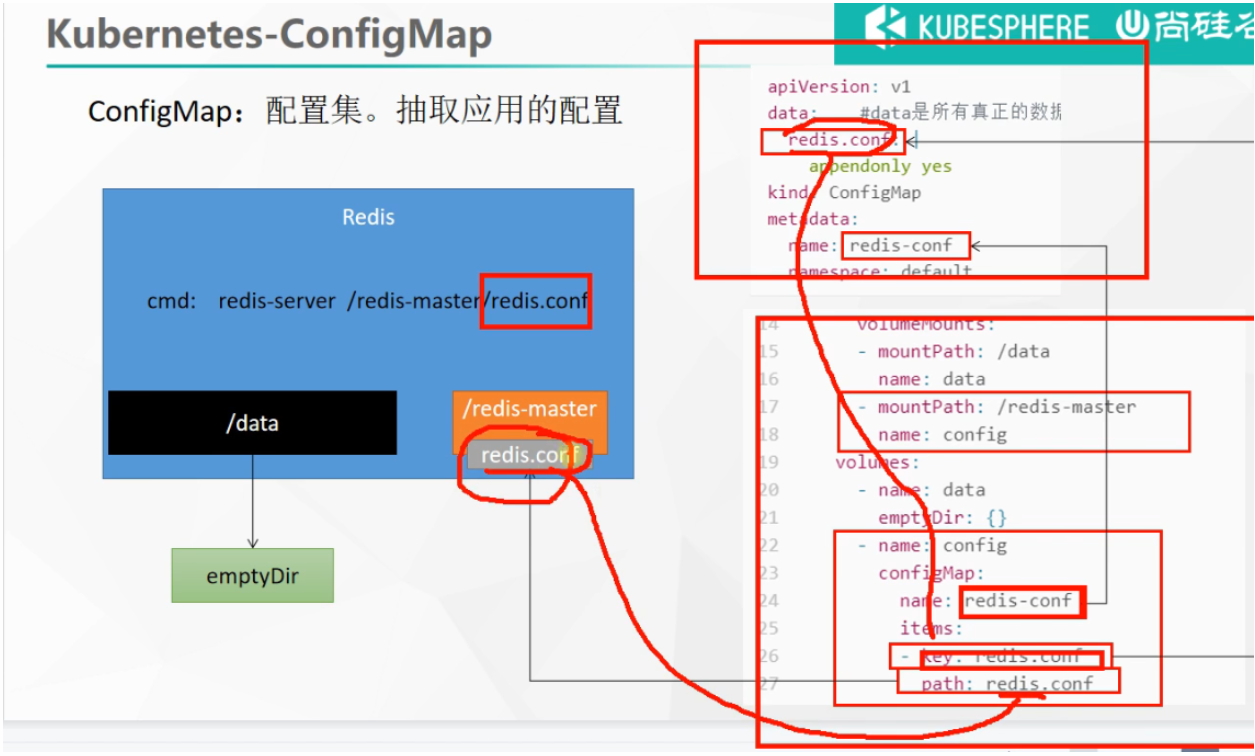

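3. ConfigMap

A ConfigMap extracts application configuration out of the image, and the mounted configuration is updated automatically.

1. Redis example

1. Create a ConfigMap from the existing configuration file

# Create the ConfigMap; the contents of redis.conf are stored in the cluster's etcd
kubectl create cm redis-conf --from-file=redis.conf

# View the ConfigMap as YAML
kubectl get cm redis-conf -oyaml

apiVersion: v1
data: # data holds the actual content; each key defaults to the file name and each value is the content of that file
  redis.conf: |
    appendonly yes
kind: ConfigMap
metadata:
  name: redis-conf
  namespace: default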

2. Create the Pod

apiVersion: v1
kind: Pod
metadata:
  name: redis
spec:
  containers:
    - name: redis
      image: redis
      command:
        - redis-server
        - "/redis-master/redis.conf" # path inside the redis container
      ports:
        - containerPort: 6379
      volumeMounts:
        - mountPath: /data
          name: data
        - mountPath: /redis-master
          name: config
  volumes:
    - name: data
      emptyDir: {}
    - name: config
      configMap:
        name: redis-conf
        items:
          - key: redis.conf
            path: redis.conf

How it works: the config volume exposes the redis.conf key of the redis-conf ConfigMap as the file /redis-master/redis.conf, and redis-server loads that file at startup.
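The mapping from ConfigMap keys to files in the container can be sketched as follows. `projected_files` is a hypothetical helper written for this illustration; it only models the naming rule (mountPath joined with each item's path) and does not talk to a cluster.

```python
import posixpath


def projected_files(data, mount_path, items=None):
    """Map ConfigMap keys to the file paths a configMap volume would expose."""
    if items is None:
        # Without an items list, every key becomes a file named after the key.
        items = [{"key": key, "path": key} for key in data]
    return {
        posixpath.join(mount_path, item["path"]): data[item["key"]]
        for item in items
    }


files = projected_files(
    data={"redis.conf": "appendonly yes\n"},
    mount_path="/redis-master",
    items=[{"key": "redis.conf", "path": "redis.conf"}],
)
for path, content in files.items():
    print(path, "=>", repr(content))
```

The resulting path, /redis-master/redis.conf, is the one passed to redis-server in the Pod's command.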

3. Check the default configuration

# Open the Redis client
kubectl exec -it redis -- redis-cli

# Query configuration parameters
127.0.0.1:6379> CONFIG GET appendonly
127.0.0.1:6379> CONFIG GET requirepass

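4. Modify the ConfigMap

Edit the redis-conf ConfigMap that the Pod mounts (for example with kubectl edit cm redis-conf) so that it reads:

apiVersion: v1
kind: ConfigMap
metadata:
  name: redis-conf
data:
  redis.conf: |
    maxmemory 2mb
    maxmemory-policy allkeys-lru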

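5. Check whether the configuration was updated

kubectl exec -it redis -- redis-cli

127.0.0.1:6379> CONFIG GET maxmemory
127.0.0.1:6379> CONFIG GET maxmemory-policy

Check whether the mounted file has been updated: after the ConfigMap is modified, the configuration file inside the Pod changes with it (after a short sync delay).

The values reported by CONFIG GET do not change, however, because the Pod must be restarted before Redis picks up the updated values from the associated ConfigMap.

Reason: the middleware deployed in the Pod has no hot-reload capability of its own.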
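The difference between the file changing on disk and the running process keeping its old values can be reproduced without a cluster. The sketch below uses a temporary file in place of the mounted ConfigMap; `StartupOnlyApp` is a hypothetical stand-in for a process that, like the Redis server here, reads its configuration only once at startup.

```python
import os
import tempfile


class StartupOnlyApp:
    """Reads its config file once, when the process starts."""

    def __init__(self, config_path):
        with open(config_path) as handle:
            lines = [line for line in handle.read().splitlines() if line.strip()]
        # Each line has the form "<name> <value>".
        self.settings = dict(line.split(None, 1) for line in lines)


with tempfile.TemporaryDirectory() as directory:
    path = os.path.join(directory, "redis.conf")

    with open(path, "w") as handle:
        handle.write("appendonly yes\n")
    app = StartupOnlyApp(path)  # the Pod starts and loads the file

    with open(path, "w") as handle:  # the updated ConfigMap reaches the mounted file
        handle.write("maxmemory 2mb\nmaxmemory-policy allkeys-lru\n")
    before_restart = app.settings.get("maxmemory")

    app = StartupOnlyApp(path)  # the Pod is recreated and reads the file again
    after_restart = app.settings.get("maxmemory")

print("before restart:", before_restart)
print("after restart:", after_restart)
```

Before the restart the running instance still has no maxmemory setting even though the file already contains it; only the new instance sees the value.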

4. Secret

The Secret object type stores sensitive information such as passwords, OAuth tokens, and SSH keys. Keeping this information in a Secret is safer and more flexible than putting it in a Pod definition or a container image.

kubectl create secret docker-registry dockerhub123456wk-docker \
  --docker-username=dockerhub123456wk \
  --docker-password=wk123456 \
  --docker-email=2427785116@qq.com

## Command format
kubectl create secret docker-registry regcred \
  --docker-server=<your registry server> \
  --docker-username=<your username> \
  --docker-password=<your password> \
  --docker-email=<your email address>

Reference the Secret from a Pod through imagePullSecrets so that the private image can be pulled:

apiVersion: v1
kind: Pod
metadata:
  name: private-nginx
spec:
  containers:
    - name: private-nginx
      image: dockerhub123456wk/redis:v1.0
  imagePullSecrets:
    - name: dockerhub123456wk-docker
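What a docker-registry Secret actually stores can be sketched in Python. The functions `docker_config_json` and `secret_manifest`, and the placeholder credentials, are hypothetical and exist only for this illustration; the sketch assumes the usual layout of a `kubernetes.io/dockerconfigjson` Secret, where the `.dockerconfigjson` key holds a base64-encoded Docker config file.

```python
import base64
import json


def docker_config_json(server, username, password, email):
    """Build the Docker config JSON that a registry Secret wraps."""
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    config = {
        "auths": {
            server: {
                "username": username,
                "password": password,
                "email": email,
                "auth": auth,
            }
        }
    }
    return json.dumps(config)


def secret_manifest(name, server, username, password, email):
    """Return a Secret manifest (as a dict) of type kubernetes.io/dockerconfigjson."""
    payload = docker_config_json(server, username, password, email)
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "kubernetes.io/dockerconfigjson",
        "data": {".dockerconfigjson": base64.b64encode(payload.encode()).decode()},
    }


# Placeholder values only; never commit real credentials.
manifest = secret_manifest(
    name="regcred",
    server="https://index.docker.io/v1/",
    username="example-user",
    password="example-password",
    email="user@example.com",
)
decoded = json.loads(base64.b64decode(manifest["data"][".dockerconfigjson"]))
print(json.dumps(decoded, indent=2))
```

Decoding the value takes a single call, which is a reminder that base64 is an encoding rather than encryption: anyone who can read the Secret can recover the password.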

3. Uninstalling Kubernetes

Run the following commands in order:

sudo kubeadm reset -f
sudo rm -rvf $HOME/.kube
sudo rm -rvf /etc/kubernetes/
sudo rm -rvf /etc/systemd/system/kubelet.service.d
sudo rm -rvf /etc/systemd/system/kubelet.service
sudo rm -rvf /usr/bin/kube*
sudo rm -rvf /etc/cni
sudo rm -rvf /opt/cni
sudo rm -rvf /var/lib/etcd
sudo rm -rvf /var/etcd

Verify the uninstall

docker ps

Check whether any Kubernetes-related containers are still running.

If there are none, the uninstall succeeded.

Posted on 2025-10-21 15:14 by chuchengzhi
