spark-3.3.2-bin-hadoop3-scala2.13 Local Mode 01

Goals

| Step | Target | Status |
| --- | --- | --- |
| 1 | Set up a single-machine development environment and run PySpark programs. Installers: Anaconda3-2022.10-Linux-x86_64.sh, pycharm-community-2022.3.2.tar.gz, VS Code: code_1.75.1-1675893397_amd64.deb | done |
| 2 | hadoop | todo |
| 3 | scala | |
| 4 | hive | |

Environment

OS: Ubuntu 22.04

Base package installation

####################################################################################################

## root

####################################################################################################

# Some of these packages may not be needed; install them anyway.

# https://github.com/apache/hadoop/blob/rel/release-3.2.1/dev-support/docker/Dockerfile

apt-get install -y apt-utils

apt-get install -y build-essential

apt-get install -y bzip2

apt-get install -y clang

apt-get install -y curl

apt-get install -y doxygen

apt-get install -y fuse

apt-get install -y g++

apt-get install -y gcc

apt-get install -y git

apt-get install -y gnupg-agent

apt-get install -y libbz2-dev

apt-get install -y libcurl4-openssl-dev

apt-get install -y libfuse-dev

apt-get install -y libprotobuf-dev

apt-get install -y libprotoc-dev

apt-get install -y libsasl2-dev

apt-get install -y libsnappy-dev

apt-get install -y libssl-dev

apt-get install -y libtool

apt-get install -y libzstd1-dev

apt-get install -y locales

apt-get install -y make

apt-get install -y pinentry-curses

apt-get install -y pkg-config

apt-get install -y python3

apt-get install -y python3-pip

apt-get install -y python3-pkg-resources

apt-get install -y python3-setuptools

apt-get install -y python3-wheel

apt-get install -y rsync

apt-get install -y snappy

apt-get install -y sudo

apt-get install -y valgrind

apt-get install -y zlib1g-dev

ln -s /usr/bin/python3 /usr/bin/python

# Install OpenJDK

root@apollo-virtualbox:~# java -version

openjdk version "1.8.0_352"

OpenJDK Runtime Environment (build 1.8.0_352-8u352-ga-1~22.04-b08)

OpenJDK 64-Bit Server VM (build 25.352-b08, mixed mode)

root@apollo-virtualbox:~#

root@apollo-virtualbox:~# ll /opt/bigdata/

total 16

drwxr-xr-x 4 hadoop hadoop 4096 2023-02-20 21:32 .

drwxr-xr-x 4 root root 4096 2023-02-20 21:16 ..

drwxr-xr-x 3 hadoop hadoop 4096 2023-02-20 21:32 backup

drwxr-xr-x 14 hadoop hadoop 4096 2023-02-20 21:48 spark-3.3.2-bin-hadoop3-scala2.13

root@apollo-virtualbox:~#

####################################################################################################

## hadoop

####################################################################################################

cd

ssh-keygen -t rsa

hadoop@apollo-virtualbox:~$ find .ssh

.ssh

.ssh/id_rsa.pub

.ssh/id_rsa

.ssh/known_hosts

hadoop@apollo-virtualbox:~$

Append the generated public key to the authorized_keys file:

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

# edit .bashrc

alias ll='ls -al -v --group-directories-first --color=auto --time-style=long-iso'

export SPARK_HOME=/opt/bigdata/spark-3.3.2-bin-hadoop3-scala2.13

export PATH=$SPARK_HOME/bin:$PATH

$ source .bashrc
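After sourcing .bashrc it can be useful to confirm that the two exports took effect. The following is a minimal sketch in Python using only the standard library; the function name `spark_bin_on_path` is hypothetical and not part of Spark. It only checks that `$SPARK_HOME/bin` appears as an entry of `PATH`.

```python
import os


def spark_bin_on_path(env: dict) -> bool:
    """Return True if $SPARK_HOME/bin is one of the PATH entries in env.

    `env` is a mapping of environment variables (for example dict(os.environ)).
    The helper name is hypothetical; it is not provided by Spark.
    """
    spark_home = env.get("SPARK_HOME")
    if not spark_home:
        # SPARK_HOME is unset or empty, so nothing can match.
        return False
    expected = os.path.normpath(os.path.join(spark_home, "bin"))
    entries = env.get("PATH", "").split(os.pathsep)
    # Normalize each entry so a trailing slash does not cause a mismatch.
    return any(os.path.normpath(entry) == expected for entry in entries if entry)


if __name__ == "__main__":
    print("SPARK_HOME:", os.environ.get("SPARK_HOME"))
    print("SPARK_HOME/bin on PATH:", spark_bin_on_path(dict(os.environ)))
```

Run it from a new shell (or after `source .bashrc`); if the second line prints False, the exports were probably not picked up by the current shell.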

hadoop@apollo-virtualbox:/opt/bigdata/spark-3.3.2-bin-hadoop3-scala2.13$ ./sbin/start-master.sh

starting org.apache.spark.deploy.master.Master, logging to /opt/bigdata/spark-3.3.2-bin-hadoop3-scala2.13/logs/spark-hadoop-org.apache.spark.deploy.master.Master-1-apollo-virtualbox.out

hadoop@apollo-virtualbox:/opt/bigdata/spark-3.3.2-bin-hadoop3-scala2.13$

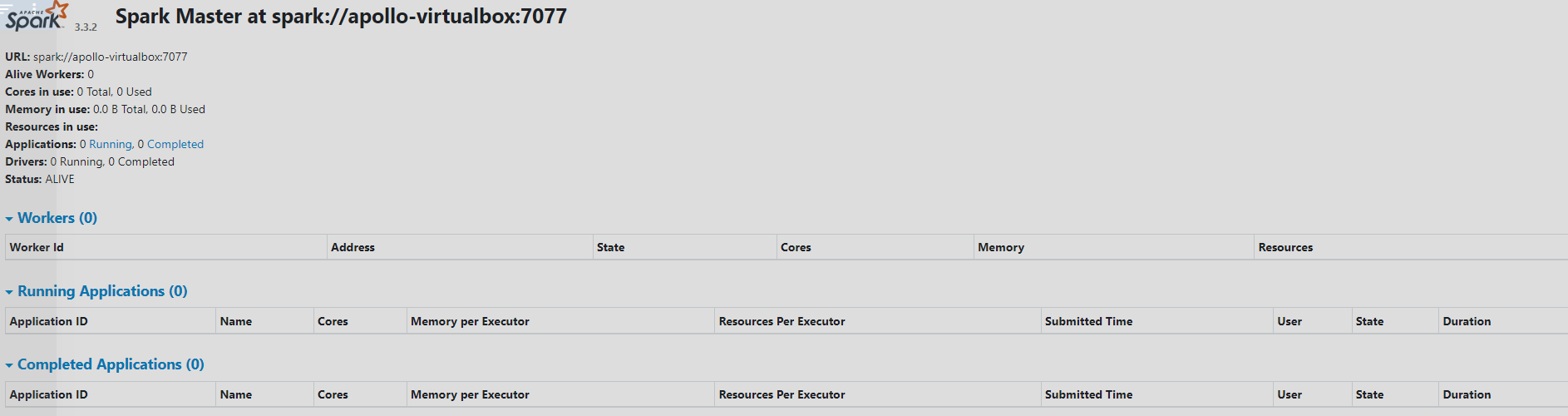

http://192.168.56.100:8080/

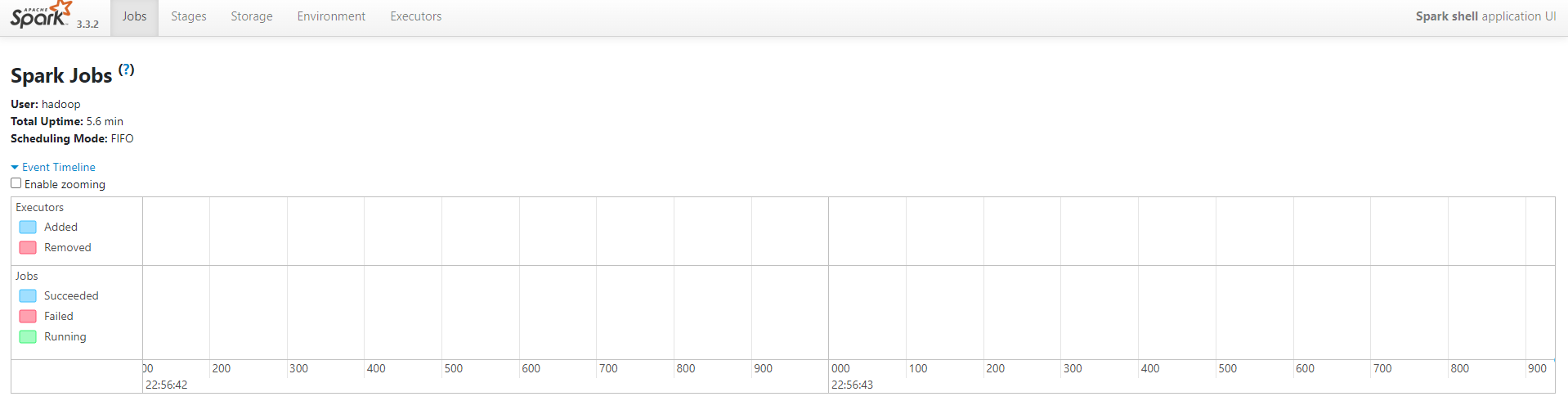

Test

hadoop@apollo-virtualbox:/opt/bigdata/spark-3.3.2-bin-hadoop3-scala2.13$ ./bin/run-example SparkPi 10

23/02/20 22:06:21 WARN Utils: Your hostname, apollo-virtualbox resolves to a loopback address: 127.0.1.1; using 10.0.2.15 instead (on interface enp0s3)

23/02/20 22:06:21 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

23/02/20 22:06:21 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

23/02/20 22:06:21 INFO SparkContext: Running Spark version 3.3.2

23/02/20 22:06:21 INFO ResourceUtils: ==============================================================

23/02/20 22:06:21 INFO ResourceUtils: No custom resources configured for spark.driver.

23/02/20 22:06:21 INFO ResourceUtils: ==============================================================

23/02/20 22:06:21 INFO SparkContext: Submitted application: Spark Pi

23/02/20 22:06:21 INFO ResourceProfile: Default ResourceProfile created, executor resources: Map(cores -> name: cores, amount: 1, script: , vendor: , memory -> name: memory, amount: 1024, script: , vendor: , offHeap -> name: offHeap, amount: 0, script: , vendor: ), task resources: Map(cpus -> name: cpus, amount: 1.0)

23/02/20 22:06:21 INFO ResourceProfile: Limiting resource is cpu

23/02/20 22:06:21 INFO ResourceProfileManager: Added ResourceProfile id: 0

23/02/20 22:06:21 INFO SecurityManager: Changing view acls to: hadoop

23/02/20 22:06:21 INFO SecurityManager: Changing modify acls to: hadoop

23/02/20 22:06:21 INFO SecurityManager: Changing view acls groups to:

23/02/20 22:06:21 INFO SecurityManager: Changing modify acls groups to:

23/02/20 22:06:21 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); groups with view permissions: Set(); users with modify permissions: Set(hadoop); groups with modify permissions: Set()

23/02/20 22:06:21 INFO Utils: Successfully started service 'sparkDriver' on port 36917.

23/02/20 22:06:21 INFO SparkEnv: Registering MapOutputTracker

23/02/20 22:06:22 INFO SparkEnv: Registering BlockManagerMaster

23/02/20 22:06:22 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

23/02/20 22:06:22 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

23/02/20 22:06:22 INFO SparkEnv: Registering BlockManagerMasterHeartbeat

23/02/20 22:06:22 INFO DiskBlockManager: Created local directory at /tmp/blockmgr-7e2bbdbb-30e4-40ff-8c1d-30a1ee12e1e6

23/02/20 22:06:22 INFO MemoryStore: MemoryStore started with capacity 366.3 MiB

23/02/20 22:06:22 INFO SparkEnv: Registering OutputCommitCoordinator

23/02/20 22:06:22 INFO Utils: Successfully started service 'SparkUI' on port 4040.

23/02/20 22:06:22 INFO SparkContext: Added JAR file:///opt/bigdata/spark-3.3.2-bin-hadoop3-scala2.13/examples/jars/scopt_2.13-3.7.1.jar at spark://10.0.2.15:36917/jars/scopt_2.13-3.7.1.jar with timestamp 1676901981632

23/02/20 22:06:22 INFO SparkContext: Added JAR file:///opt/bigdata/spark-3.3.2-bin-hadoop3-scala2.13/examples/jars/spark-examples_2.13-3.3.2.jar at spark://10.0.2.15:36917/jars/spark-examples_2.13-3.3.2.jar with timestamp 1676901981632

23/02/20 22:06:22 INFO SparkContext: The JAR file:/opt/bigdata/spark-3.3.2-bin-hadoop3-scala2.13/examples/jars/spark-examples_2.13-3.3.2.jar at spark://10.0.2.15:36917/jars/spark-examples_2.13-3.3.2.jar has been added already. Overwriting of added jar is not supported in the current version.

23/02/20 22:06:22 INFO Executor: Starting executor ID driver on host 10.0.2.15

23/02/20 22:06:22 INFO Executor: Starting executor with user classpath (userClassPathFirst = false): ''

23/02/20 22:06:22 INFO Executor: Fetching spark://10.0.2.15:36917/jars/scopt_2.13-3.7.1.jar with timestamp 1676901981632

23/02/20 22:06:22 INFO TransportClientFactory: Successfully created connection to /10.0.2.15:36917 after 21 ms (0 ms spent in bootstraps)

23/02/20 22:06:22 INFO Utils: Fetching spark://10.0.2.15:36917/jars/scopt_2.13-3.7.1.jar to /tmp/spark-3c59b0eb-bbc2-4c51-b5b2-235b4bceab08/userFiles-ed7b0492-12db-463d-852d-7c7f4943c304/fetchFileTemp5538590310162219393.tmp

23/02/20 22:06:22 INFO Executor: Adding file:/tmp/spark-3c59b0eb-bbc2-4c51-b5b2-235b4bceab08/userFiles-ed7b0492-12db-463d-852d-7c7f4943c304/scopt_2.13-3.7.1.jar to class loader

23/02/20 22:06:22 INFO Executor: Fetching spark://10.0.2.15:36917/jars/spark-examples_2.13-3.3.2.jar with timestamp 1676901981632

23/02/20 22:06:22 INFO Utils: Fetching spark://10.0.2.15:36917/jars/spark-examples_2.13-3.3.2.jar to /tmp/spark-3c59b0eb-bbc2-4c51-b5b2-235b4bceab08/userFiles-ed7b0492-12db-463d-852d-7c7f4943c304/fetchFileTemp7949452791611007828.tmp

23/02/20 22:06:22 INFO Executor: Adding file:/tmp/spark-3c59b0eb-bbc2-4c51-b5b2-235b4bceab08/userFiles-ed7b0492-12db-463d-852d-7c7f4943c304/spark-examples_2.13-3.3.2.jar to class loader

23/02/20 22:06:22 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 35815.

23/02/20 22:06:22 INFO NettyBlockTransferService: Server created on 10.0.2.15:35815

23/02/20 22:06:22 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

23/02/20 22:06:22 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 10.0.2.15, 35815, None)

23/02/20 22:06:22 INFO BlockManagerMasterEndpoint: Registering block manager 10.0.2.15:35815 with 366.3 MiB RAM, BlockManagerId(driver, 10.0.2.15, 35815, None)

23/02/20 22:06:22 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, 10.0.2.15, 35815, None)

23/02/20 22:06:22 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, 10.0.2.15, 35815, None)

23/02/20 22:06:23 INFO SparkContext: Starting job: reduce at SparkPi.scala:38

23/02/20 22:06:23 INFO DAGScheduler: Got job 0 (reduce at SparkPi.scala:38) with 10 output partitions

23/02/20 22:06:23 INFO DAGScheduler: Final stage: ResultStage 0 (reduce at SparkPi.scala:38)

23/02/20 22:06:23 INFO DAGScheduler: Parents of final stage: List()

23/02/20 22:06:23 INFO DAGScheduler: Missing parents: List()

23/02/20 22:06:23 INFO DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34), which has no missing parents

23/02/20 22:06:23 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 4.0 KiB, free 366.3 MiB)

23/02/20 22:06:23 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 2.2 KiB, free 366.3 MiB)

23/02/20 22:06:23 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 10.0.2.15:35815 (size: 2.2 KiB, free: 366.3 MiB)

23/02/20 22:06:23 INFO SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1513

23/02/20 22:06:23 INFO DAGScheduler: Submitting 10 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34) (first 15 tasks are for partitions Vector(0, 1, 2, 3, 4, 5, 6, 7, 8, 9))

23/02/20 22:06:23 INFO TaskSchedulerImpl: Adding task set 0.0 with 10 tasks resource profile 0

23/02/20 22:06:23 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0) (10.0.2.15, executor driver, partition 0, PROCESS_LOCAL, 7534 bytes) taskResourceAssignments Map()

23/02/20 22:06:23 INFO TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1) (10.0.2.15, executor driver, partition 1, PROCESS_LOCAL, 7534 bytes) taskResourceAssignments Map()

23/02/20 22:06:23 INFO Executor: Running task 0.0 in stage 0.0 (TID 0)

23/02/20 22:06:23 INFO Executor: Running task 1.0 in stage 0.0 (TID 1)

23/02/20 22:06:23 INFO Executor: Finished task 1.0 in stage 0.0 (TID 1). 1097 bytes result sent to driver

23/02/20 22:06:23 INFO Executor: Finished task 0.0 in stage 0.0 (TID 0). 1097 bytes result sent to driver

23/02/20 22:06:23 INFO TaskSetManager: Starting task 2.0 in stage 0.0 (TID 2) (10.0.2.15, executor driver, partition 2, PROCESS_LOCAL, 7534 bytes) taskResourceAssignments Map()

23/02/20 22:06:23 INFO Executor: Running task 2.0 in stage 0.0 (TID 2)

23/02/20 22:06:23 INFO TaskSetManager: Starting task 3.0 in stage 0.0 (TID 3) (10.0.2.15, executor driver, partition 3, PROCESS_LOCAL, 7534 bytes) taskResourceAssignments Map()

23/02/20 22:06:23 INFO Executor: Running task 3.0 in stage 0.0 (TID 3)

23/02/20 22:06:23 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 401 ms on 10.0.2.15 (executor driver) (1/10)

23/02/20 22:06:23 INFO TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 406 ms on 10.0.2.15 (executor driver) (2/10)

23/02/20 22:06:23 INFO Executor: Finished task 2.0 in stage 0.0 (TID 2). 1054 bytes result sent to driver

23/02/20 22:06:23 INFO Executor: Finished task 3.0 in stage 0.0 (TID 3). 1054 bytes result sent to driver

23/02/20 22:06:23 INFO TaskSetManager: Starting task 4.0 in stage 0.0 (TID 4) (10.0.2.15, executor driver, partition 4, PROCESS_LOCAL, 7534 bytes) taskResourceAssignments Map()

23/02/20 22:06:23 INFO Executor: Running task 4.0 in stage 0.0 (TID 4)

23/02/20 22:06:23 INFO TaskSetManager: Starting task 5.0 in stage 0.0 (TID 5) (10.0.2.15, executor driver, partition 5, PROCESS_LOCAL, 7534 bytes) taskResourceAssignments Map()

23/02/20 22:06:23 INFO Executor: Running task 5.0 in stage 0.0 (TID 5)

23/02/20 22:06:23 INFO TaskSetManager: Finished task 2.0 in stage 0.0 (TID 2) in 70 ms on 10.0.2.15 (executor driver) (3/10)

23/02/20 22:06:23 INFO TaskSetManager: Finished task 3.0 in stage 0.0 (TID 3) in 68 ms on 10.0.2.15 (executor driver) (4/10)

23/02/20 22:06:23 INFO Executor: Finished task 4.0 in stage 0.0 (TID 4). 1054 bytes result sent to driver

23/02/20 22:06:23 INFO TaskSetManager: Starting task 6.0 in stage 0.0 (TID 6) (10.0.2.15, executor driver, partition 6, PROCESS_LOCAL, 7534 bytes) taskResourceAssignments Map()

23/02/20 22:06:23 INFO TaskSetManager: Finished task 4.0 in stage 0.0 (TID 4) in 32 ms on 10.0.2.15 (executor driver) (5/10)

23/02/20 22:06:23 INFO Executor: Finished task 5.0 in stage 0.0 (TID 5). 1054 bytes result sent to driver

23/02/20 22:06:23 INFO TaskSetManager: Starting task 7.0 in stage 0.0 (TID 7) (10.0.2.15, executor driver, partition 7, PROCESS_LOCAL, 7534 bytes) taskResourceAssignments Map()

23/02/20 22:06:23 INFO TaskSetManager: Finished task 5.0 in stage 0.0 (TID 5) in 25 ms on 10.0.2.15 (executor driver) (6/10)

23/02/20 22:06:23 INFO Executor: Running task 6.0 in stage 0.0 (TID 6)

23/02/20 22:06:23 INFO Executor: Running task 7.0 in stage 0.0 (TID 7)

23/02/20 22:06:23 INFO Executor: Finished task 6.0 in stage 0.0 (TID 6). 1054 bytes result sent to driver

23/02/20 22:06:23 INFO TaskSetManager: Starting task 8.0 in stage 0.0 (TID 8) (10.0.2.15, executor driver, partition 8, PROCESS_LOCAL, 7534 bytes) taskResourceAssignments Map()

23/02/20 22:06:23 INFO Executor: Running task 8.0 in stage 0.0 (TID 8)

23/02/20 22:06:23 INFO TaskSetManager: Finished task 6.0 in stage 0.0 (TID 6) in 26 ms on 10.0.2.15 (executor driver) (7/10)

23/02/20 22:06:23 INFO Executor: Finished task 8.0 in stage 0.0 (TID 8). 1054 bytes result sent to driver

23/02/20 22:06:23 INFO TaskSetManager: Starting task 9.0 in stage 0.0 (TID 9) (10.0.2.15, executor driver, partition 9, PROCESS_LOCAL, 7534 bytes) taskResourceAssignments Map()

23/02/20 22:06:23 INFO TaskSetManager: Finished task 8.0 in stage 0.0 (TID 8) in 16 ms on 10.0.2.15 (executor driver) (8/10)

23/02/20 22:06:23 INFO Executor: Running task 9.0 in stage 0.0 (TID 9)

23/02/20 22:06:23 INFO Executor: Finished task 9.0 in stage 0.0 (TID 9). 1054 bytes result sent to driver

23/02/20 22:06:23 INFO TaskSetManager: Finished task 9.0 in stage 0.0 (TID 9) in 19 ms on 10.0.2.15 (executor driver) (9/10)

23/02/20 22:06:23 INFO Executor: Finished task 7.0 in stage 0.0 (TID 7). 1054 bytes result sent to driver

23/02/20 22:06:23 INFO TaskSetManager: Finished task 7.0 in stage 0.0 (TID 7) in 58 ms on 10.0.2.15 (executor driver) (10/10)

23/02/20 22:06:23 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

23/02/20 22:06:23 INFO DAGScheduler: ResultStage 0 (reduce at SparkPi.scala:38) finished in 0.677 s

23/02/20 22:06:23 INFO DAGScheduler: Job 0 is finished. Cancelling potential speculative or zombie tasks for this job

23/02/20 22:06:23 INFO TaskSchedulerImpl: Killing all running tasks in stage 0: Stage finished

23/02/20 22:06:23 INFO DAGScheduler: Job 0 finished: reduce at SparkPi.scala:38, took 0.746729 s

Pi is roughly 3.13993113993114

23/02/20 22:06:23 INFO SparkUI: Stopped Spark web UI at http://10.0.2.15:4040

23/02/20 22:06:23 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

23/02/20 22:06:23 INFO MemoryStore: MemoryStore cleared

23/02/20 22:06:23 INFO BlockManager: BlockManager stopped

23/02/20 22:06:23 INFO BlockManagerMaster: BlockManagerMaster stopped

23/02/20 22:06:23 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

23/02/20 22:06:23 INFO SparkContext: Successfully stopped SparkContext

23/02/20 22:06:23 INFO ShutdownHookManager: Shutdown hook called

23/02/20 22:06:23 INFO ShutdownHookManager: Deleting directory /tmp/spark-3c59b0eb-bbc2-4c51-b5b2-235b4bceab08

23/02/20 22:06:23 INFO ShutdownHookManager: Deleting directory /tmp/spark-b99bef20-2c34-4558-892d-a13ba2a38524

hadoop@apollo-virtualbox:/opt/bigdata/spark-3.3.2-bin-hadoop3-scala2.13$
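The SparkPi run above reports "Pi is roughly 3.1399...". As far as I understand the bundled example, it is a Monte Carlo estimate: sample random points in the square [-1, 1] x [-1, 1], count how many land inside the unit circle, and multiply the fraction by 4. The sketch below shows that idea in plain Python with no Spark involved; the function name `estimate_pi` and its parameters are my own, and the sample count is arbitrary.

```python
import random


def estimate_pi(num_samples: int, seed: int = 0) -> float:
    """Monte Carlo estimate of pi (hypothetical helper, not the Spark code).

    Samples points uniformly in the square [-1, 1] x [-1, 1] and returns
    4 * (fraction of points that fall inside the unit circle).
    """
    rng = random.Random(seed)  # seeded so repeated runs give the same estimate
    inside = 0
    for _ in range(num_samples):
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        if x * x + y * y <= 1.0:
            inside += 1
    return 4.0 * inside / num_samples


if __name__ == "__main__":
    print(f"Pi is roughly {estimate_pi(1_000_000)}")
```

In the Spark version the sampling is split across partitions (10 in the run above, matching the 10 tasks in the log) and the per-partition counts are summed with a reduce; the estimate is noisy for the same reason this one is.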

hadoop@apollo-virtualbox:/opt/bigdata/spark-3.3.2-bin-hadoop3-scala2.13$ ./bin/spark-shell

23/02/20 22:09:10 WARN Utils: Your hostname, apollo-virtualbox resolves to a loopback address: 127.0.1.1; using 10.0.2.15 instead (on interface enp0s3)

23/02/20 22:09:10 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 3.3.2

/_/

Using Scala version 2.13.8 (OpenJDK 64-Bit Server VM, Java 1.8.0_352)

Type in expressions to have them evaluated.

Type :help for more information.

23/02/20 22:09:14 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Spark context Web UI available at http://10.0.2.15:4040

Spark context available as 'sc' (master = local[*], app id = local-1676902155804).

Spark session available as 'spark'.

scala>

Install Anaconda (Anaconda3-2022.10-Linux-x86_64.sh)

(base) hadoop@apollo-virtualbox:~$ which pyspark

/opt/bigdata/spark-3.3.2-bin-hadoop3-scala2.13/bin/pyspark

(base) hadoop@apollo-virtualbox:~$

(base) hadoop@apollo-virtualbox:~$ pyspark

Python 3.9.13 (main, Aug 25 2022, 23:26:10)

[GCC 11.2.0] :: Anaconda, Inc. on linux

Type "help", "copyright", "credits" or "license" for more information.

23/02/20 23:25:19 WARN Utils: Your hostname, apollo-virtualbox resolves to a loopback address: 127.0.1.1; using 10.0.2.15 instead (on interface enp0s3)

23/02/20 23:25:19 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

23/02/20 23:25:21 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/__ / .__/\_,_/_/ /_/\_\ version 3.3.2

/_/

Using Python version 3.9.13 (main, Aug 25 2022 23:26:10)

Spark context Web UI available at http://10.0.2.15:4040

Spark context available as 'sc' (master = local[*], app id = local-1676906723183).

SparkSession available as 'spark'.

>>>

(base) hadoop@apollo-virtualbox:~$

(base) hadoop@apollo-virtualbox:~$ cd

(base) hadoop@apollo-virtualbox:~$ cat pycharm.sh

nohup /opt/bigdata/pycharm/bin/pycharm.sh &

(base) hadoop@apollo-virtualbox:~$ chmod +x pycharm.sh

(base) hadoop@apollo-virtualbox:~$

(base) hadoop@apollo-virtualbox:~$

(base) hadoop@apollo-virtualbox:~$ which conda

/opt/bigdata/anaconda3/bin/conda

(base) hadoop@apollo-virtualbox:~$ conda

usage: conda [-h] [-V] command ...

conda is a tool for managing and deploying applications, environments and packages.

Options:

positional arguments:

command

clean Remove unused packages and caches.

compare Compare packages between conda environments.

config Modify configuration values in .condarc. This is modeled after the git config command. Writes to the user .condarc file (/home/hadoop/.condarc) by

default. Use the --show-sources flag to display all identified configuration locations on your computer.

create Create a new conda environment from a list of specified packages.

info Display information about current conda install.

init Initialize conda for shell interaction.

install Installs a list of packages into a specified conda environment.

list List installed packages in a conda environment.

package Low-level conda package utility. (EXPERIMENTAL)

remove Remove a list of packages from a specified conda environment.

rename Renames an existing environment.

run Run an executable in a conda environment.

search Search for packages and display associated information.The input is a MatchSpec, a query language for conda packages. See examples below.

uninstall Alias for conda remove.

update Updates conda packages to the latest compatible version.

upgrade Alias for conda update.

notices Retrieves latest channel notifications.

optional arguments:

-h, --help Show this help message and exit.

-V, --version Show the conda version number and exit.

conda commands available from other packages:

build

content-trust

convert

debug

develop

env

index

inspect

metapackage

pack

render

repo

server

skeleton

token

verify

(base) hadoop@apollo-virtualbox:~$ conda info

active environment : base

active env location : /opt/bigdata/anaconda3

shell level : 1

user config file : /home/hadoop/.condarc

populated config files :

conda version : 22.9.0

conda-build version : 3.22.0

python version : 3.9.13.final.0

virtual packages : __linux=5.19.0=0

__glibc=2.35=0

__unix=0=0

__archspec=1=x86_64

base environment : /opt/bigdata/anaconda3 (writable)

conda av data dir : /opt/bigdata/anaconda3/etc/conda

conda av metadata url : None

channel URLs : https://repo.anaconda.com/pkgs/main/linux-64

https://repo.anaconda.com/pkgs/main/noarch

https://repo.anaconda.com/pkgs/r/linux-64

https://repo.anaconda.com/pkgs/r/noarch

package cache : /opt/bigdata/anaconda3/pkgs

/home/hadoop/.conda/pkgs

envs directories : /opt/bigdata/anaconda3/envs

/home/hadoop/.conda/envs

platform : linux-64

user-agent : conda/22.9.0 requests/2.28.1 CPython/3.9.13 Linux/5.19.0-32-generic ubuntu/22.04.1 glibc/2.35

UID:GID : 1002:1002

netrc file : None

offline mode : False

(base) hadoop@apollo-virtualbox:~$

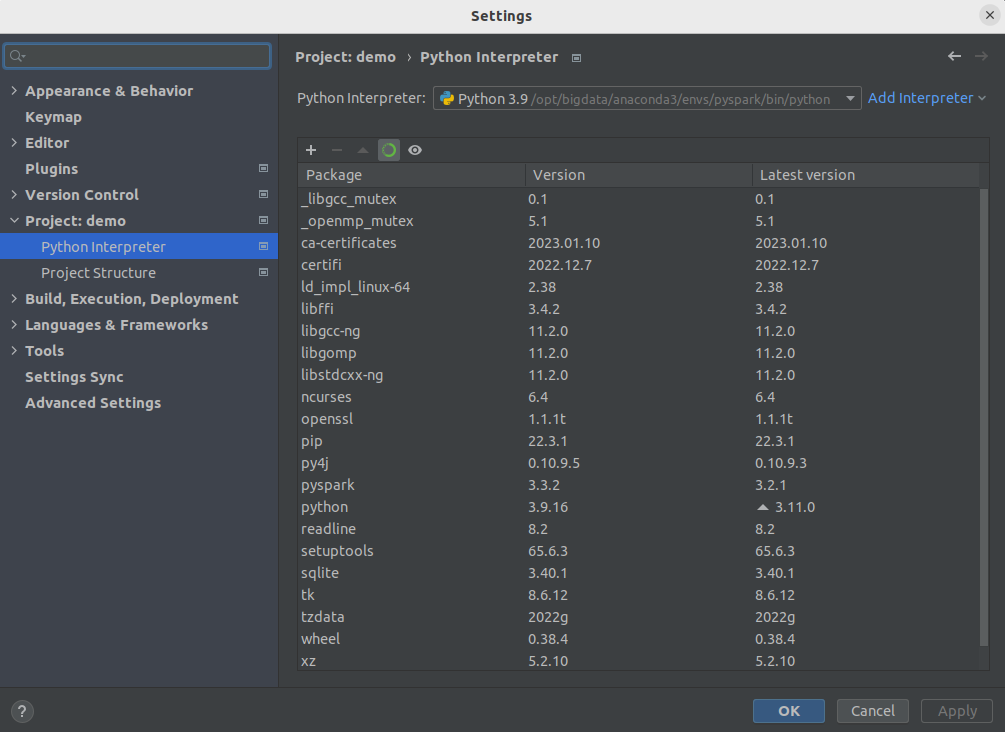

(base) hadoop@apollo-virtualbox:~$ conda create -n pyspark python=3.9

Collecting package metadata (current_repodata.json): done

Solving environment: done

==> WARNING: A newer version of conda exists. <==

current version: 22.9.0

latest version: 23.1.0

Please update conda by running

$ conda update -n base -c defaults conda

## Package Plan ##

environment location: /opt/bigdata/anaconda3/envs/pyspark

added / updated specs:

- python=3.9

The following packages will be downloaded:

package | build

---------------------------|-----------------

ca-certificates-2023.01.10 | h06a4308_0 120 KB

certifi-2022.12.7 | py39h06a4308_0 150 KB

libffi-3.4.2 | h6a678d5_6 136 KB

ncurses-6.4 | h6a678d5_0 914 KB

openssl-1.1.1t | h7f8727e_0 3.7 MB

pip-22.3.1 | py39h06a4308_0 2.7 MB

python-3.9.16 | h7a1cb2a_0 25.0 MB

readline-8.2 | h5eee18b_0 357 KB

setuptools-65.6.3 | py39h06a4308_0 1.1 MB

sqlite-3.40.1 | h5082296_0 1.2 MB

tzdata-2022g | h04d1e81_0 114 KB

wheel-0.38.4 | py39h06a4308_0 64 KB

xz-5.2.10 | h5eee18b_1 429 KB

zlib-1.2.13 | h5eee18b_0 103 KB

------------------------------------------------------------

Total: 36.1 MB

The following NEW packages will be INSTALLED:

_libgcc_mutex pkgs/main/linux-64::_libgcc_mutex-0.1-main None

_openmp_mutex pkgs/main/linux-64::_openmp_mutex-5.1-1_gnu None

ca-certificates pkgs/main/linux-64::ca-certificates-2023.01.10-h06a4308_0 None

certifi pkgs/main/linux-64::certifi-2022.12.7-py39h06a4308_0 None

ld_impl_linux-64 pkgs/main/linux-64::ld_impl_linux-64-2.38-h1181459_1 None

libffi pkgs/main/linux-64::libffi-3.4.2-h6a678d5_6 None

libgcc-ng pkgs/main/linux-64::libgcc-ng-11.2.0-h1234567_1 None

libgomp pkgs/main/linux-64::libgomp-11.2.0-h1234567_1 None

libstdcxx-ng pkgs/main/linux-64::libstdcxx-ng-11.2.0-h1234567_1 None

ncurses pkgs/main/linux-64::ncurses-6.4-h6a678d5_0 None

openssl pkgs/main/linux-64::openssl-1.1.1t-h7f8727e_0 None

pip pkgs/main/linux-64::pip-22.3.1-py39h06a4308_0 None

python pkgs/main/linux-64::python-3.9.16-h7a1cb2a_0 None

readline pkgs/main/linux-64::readline-8.2-h5eee18b_0 None

setuptools pkgs/main/linux-64::setuptools-65.6.3-py39h06a4308_0 None

sqlite pkgs/main/linux-64::sqlite-3.40.1-h5082296_0 None

tk pkgs/main/linux-64::tk-8.6.12-h1ccaba5_0 None

tzdata pkgs/main/noarch::tzdata-2022g-h04d1e81_0 None

wheel pkgs/main/linux-64::wheel-0.38.4-py39h06a4308_0 None

xz pkgs/main/linux-64::xz-5.2.10-h5eee18b_1 None

zlib pkgs/main/linux-64::zlib-1.2.13-h5eee18b_0 None

Proceed ([y]/n)? y

Downloading and Extracting Packages

pip-22.3.1 | 2.7 MB | ########################################################################################################################## | 100%

readline-8.2 | 357 KB | ########################################################################################################################## | 100%

certifi-2022.12.7 | 150 KB | ########################################################################################################################## | 100%

ncurses-6.4 | 914 KB | ########################################################################################################################## | 100%

tzdata-2022g | 114 KB | ########################################################################################################################## | 100%

openssl-1.1.1t | 3.7 MB | ########################################################################################################################## | 100%

sqlite-3.40.1 | 1.2 MB | ########################################################################################################################## | 100%

python-3.9.16 | 25.0 MB | ########################################################################################################################## | 100%

xz-5.2.10 | 429 KB | ########################################################################################################################## | 100%

zlib-1.2.13 | 103 KB | ########################################################################################################################## | 100%

wheel-0.38.4 | 64 KB | ########################################################################################################################## | 100%

libffi-3.4.2 | 136 KB | ########################################################################################################################## | 100%

setuptools-65.6.3 | 1.1 MB | ########################################################################################################################## | 100%

ca-certificates-2023 | 120 KB | ########################################################################################################################## | 100%

Preparing transaction: done

Verifying transaction: done

Executing transaction: done

#

# To activate this environment, use

#

# $ conda activate pyspark

#

# To deactivate an active environment, use

#

# $ conda deactivate

Retrieving notices: ...working... done

(base) hadoop@apollo-virtualbox:~$

(base) hadoop@apollo-virtualbox:~$

(base) hadoop@apollo-virtualbox:~$ conda activate pyspark

(pyspark) hadoop@apollo-virtualbox:~$ python -V

Python 3.9.16

(pyspark) hadoop@apollo-virtualbox:~$ pip install pyspark

Collecting pyspark

Downloading pyspark-3.3.2.tar.gz (281.4 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 281.4/281.4 MB 4.6 MB/s eta 0:00:00

Preparing metadata (setup.py) ... done

Collecting py4j==0.10.9.5

Downloading py4j-0.10.9.5-py2.py3-none-any.whl (199 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 199.7/199.7 kB 2.2 MB/s eta 0:00:00

Building wheels for collected packages: pyspark

Building wheel for pyspark (setup.py) ... done

Created wheel for pyspark: filename=pyspark-3.3.2-py2.py3-none-any.whl size=281824010 sha256=066c2fe155b381e915821a492c048d32502640ba71121d8cf070f0b3c5c99458

Stored in directory: /home/hadoop/.cache/pip/wheels/8e/1f/b7/ed748602b39b0e85d8d322ca481856faea8d5360900ee84b00

Successfully built pyspark

Installing collected packages: py4j, pyspark

Successfully installed py4j-0.10.9.5 pyspark-3.3.2

(pyspark) hadoop@apollo-virtualbox:~$

(pyspark) hadoop@apollo-virtualbox:~$ conda deactivate

(base) hadoop@apollo-virtualbox:~$ conda activate pyspark

(pyspark) hadoop@apollo-virtualbox:~$
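With the pyspark environment activated, a quick way to confirm that the interpreter and the pip-installed package are the ones from this environment is to ask Python itself. This is a minimal sketch using only the standard library; the function name `describe_environment` is hypothetical. It does not start Spark, it only reports where `pyspark` would be imported from.

```python
import importlib.util
import sys


def describe_environment() -> dict:
    """Report the running interpreter and whether pyspark can be imported.

    Hypothetical helper: it only locates the module, it does not import it
    or start a Spark session.
    """
    spec = importlib.util.find_spec("pyspark")
    return {
        "python_executable": sys.executable,
        "python_version": ".".join(str(part) for part in sys.version_info[:3]),
        "pyspark_importable": spec is not None,
        "pyspark_location": spec.origin if spec is not None else None,
    }


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key}: {value}")
```

Inside the activated environment, the interpreter path should sit under /opt/bigdata/anaconda3/envs/pyspark (the environment location printed by conda create above). If `pyspark_importable` is False, pip most likely installed into a different environment.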

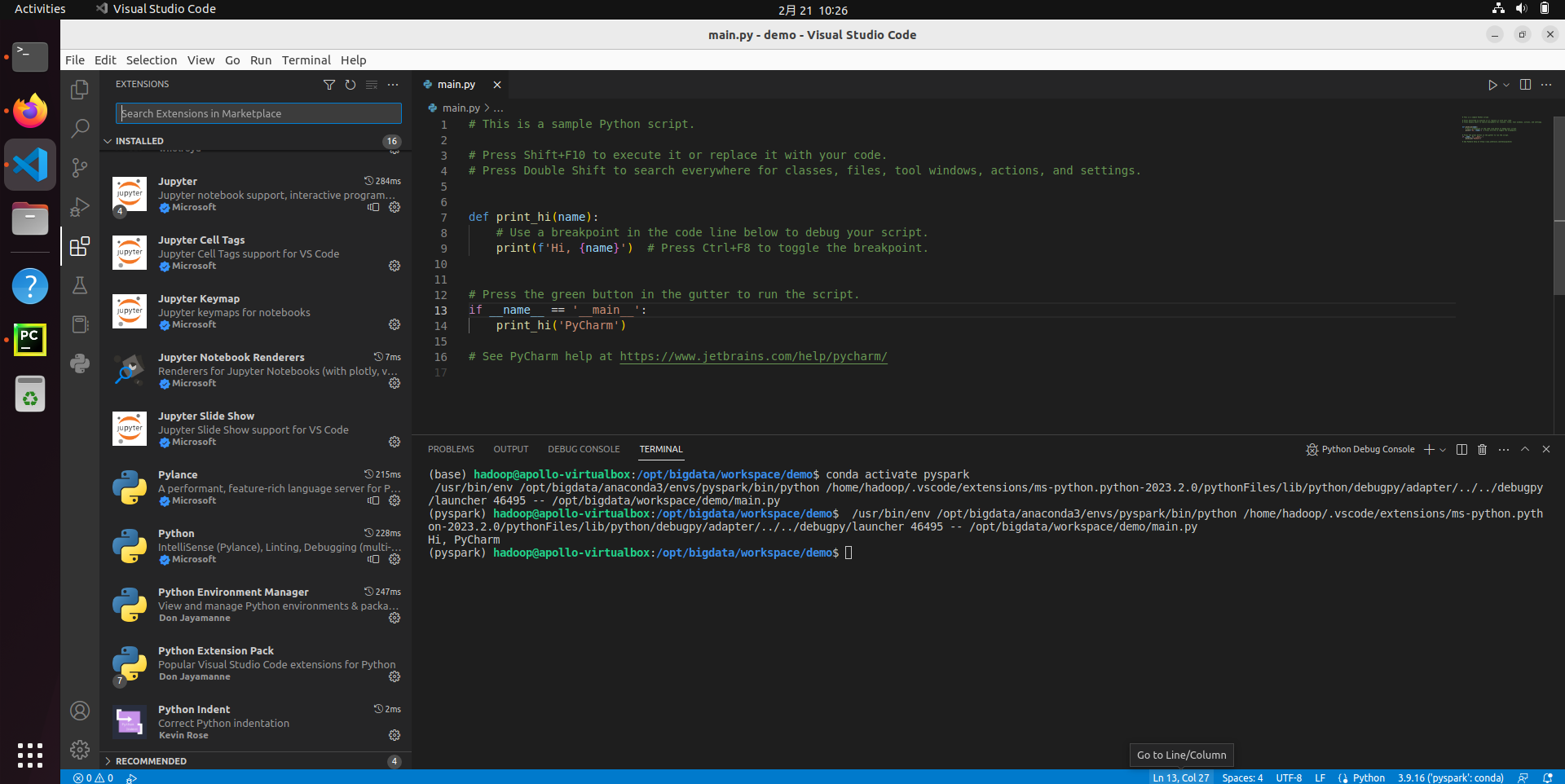

VS Code installation

root@apollo-virtualbox:/opt/bigdata/_backup# apt install ./code_1.75.1-1675893397_amd64.deb

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

Note, selecting 'code' instead of './code_1.75.1-1675893397_amd64.deb'

The following packages were automatically installed and are no longer required:

ethtool libfreerdp-server2-2 libgnome-bg-4-1 libmspack0 libntfs-3g89 libvncserver1 libxmlsec1-openssl open-vm-tools systemd-hwe-hwdb zerofree

Use 'apt autoremove' to remove them.

The following NEW packages will be installed:

code

0 upgraded, 1 newly installed, 0 to remove and 177 not upgraded.

Need to get 0 B/98.2 MB of archives.

After this operation, 406 MB of additional disk space will be used.

Get:1 /opt/bigdata/_backup/code_1.75.1-1675893397_amd64.deb code amd64 1.75.1-1675893397 [98.2 MB]

Selecting previously unselected package code.

(Reading database ... 216140 files and directories currently installed.)

Preparing to unpack .../code_1.75.1-1675893397_amd64.deb ...

Unpacking code (1.75.1-1675893397) ...

Setting up code (1.75.1-1675893397) ...

Processing triggers for gnome-menus (3.36.0-1ubuntu3) ...

Processing triggers for shared-mime-info (2.1-2) ...

Processing triggers for mailcap (3.70+nmu1ubuntu1) ...

Processing triggers for desktop-file-utils (0.26-1ubuntu3) ...

root@apollo-virtualbox:/opt/bigdata/_backup#

Install the Python Environment Manager extension.
