Problems Encountered When Moving Microservices onto k8s

1 Problems Encountered When Moving Microservices onto k8s

1.1 Elasticsearch cannot create the index

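While collecting logs with log-pilot, the deployment YAML used uppercase letters in the log name (petApi), and Elasticsearch could not create the index, because Elasticsearch index names must be all lowercase. After changing the name in the YAML to lowercase and re-running `kubectl apply -f` on the file, the index was created. Repeated queries show it appearing and its document count growing: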

```
[root@e4dce53fcdf9 logs]# curl localhost:9200/_cat/indices?v|grep pet
[root@e4dce53fcdf9 logs]# curl localhost:9200/_cat/indices?v|grep pet
[root@e4dce53fcdf9 logs]# curl localhost:9200/_cat/indices?v|grep pet
[root@e4dce53fcdf9 logs]# curl localhost:9200/_cat/indices?v|grep pet
[root@e4dce53fcdf9 logs]# curl localhost:9200/_cat/indices?v|grep pet
yellow open petapi-catalina-2020.11.05 XwaBYiIKSvOxRDCiUUyZ8Q 5 1 0 0 460b 460b
[root@e4dce53fcdf9 logs]# curl localhost:9200/_cat/indices?v|grep pet
yellow open petapi-catalina-2020.11.05 XwaBYiIKSvOxRDCiUUyZ8Q 5 1 26 0 460b 460b
[root@e4dce53fcdf9 logs]# curl localhost:9200/_cat/indices?v -s |grep pet
yellow open petapi-catalina-2020.11.05 XwaBYiIKSvOxRDCiUUyZ8Q 5 1 32 0 86.2kb 86.2kb
```
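Elasticsearch rejects index names that contain uppercase letters, which is what happened with petApi. Below is a Python sketch of a pre-deployment check based on Elasticsearch's documented index-name rules: lowercase only, none of `\ / * ? " < > | , #` or spaces, no leading `-`, `_` or `+`, not `.` or `..`, at most 255 bytes. The daily `<name>-YYYY.MM.DD` pattern is inferred from the index names above, and both helper names are hypothetical.

```python
from datetime import date
from typing import List

# Characters Elasticsearch does not allow anywhere in an index name.
FORBIDDEN_CHARS = set('\\/*?"<>| ,#')


def index_name_problems(name: str) -> List[str]:
    """Return the reasons Elasticsearch would reject `name` (empty list if none)."""
    problems = []
    if name != name.lower():
        problems.append("contains uppercase letters")
    bad = sorted({c for c in name if c in FORBIDDEN_CHARS})
    if bad:
        problems.append("contains forbidden characters: " + repr("".join(bad)))
    if name[:1] in ("-", "_", "+"):
        problems.append("starts with '-', '_' or '+'")
    if name in (".", ".."):
        problems.append("is '.' or '..'")
    if len(name.encode("utf-8")) > 255:
        problems.append("is longer than 255 bytes")
    return problems


def daily_index(prefix: str, day: date) -> str:
    """Daily index name shaped like petapi-catalina-2020.11.05 (assumed pattern)."""
    return f"{prefix}-{day:%Y.%m.%d}"


if __name__ == "__main__":
    for prefix in ("petApi-catalina", "petapi-catalina"):
        name = daily_index(prefix, date(2020, 11, 5))
        print(name, index_name_problems(name) or "OK")
```

Running a check like this in CI against the names in each deployment YAML would flag `petApi` before it ever reaches the cluster.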

1.2 How a RollingUpdate proceeds

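With a readinessProbe on the pod, a rolling update first creates a new pod and deletes the old one only after requests to the probe path you defined succeed, so the service is not interrupted during a release. In the snapshots below, the new pod app-57898c8d76-96l5f stays at 0/1 READY while the old pod app-bdbcfb5b9-qvbxl keeps serving; once the new pod reports 1/1, the old one moves to Terminating.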

```
[root@htbb-k8s-master-b-test-01 app]# kubectl get pods -o wide
NAME                              READY   STATUS        RESTARTS   AGE     IP            NODE                        NOMINATED NODE   READINESS GATES
app-57898c8d76-96l5f              0/1     Running       0          2m45s   10.244.3.62   htbb-k8s-master-b-test-02   <none>           <none>
app-bdbcfb5b9-qvbxl               1/1     Running       0          66m     10.244.1.60   htbb-k8s-node-b-test-02     <none>           <none>
htbb-admin-855568f4d-vz6sx        1/1     Running       0          22h     10.244.3.59   htbb-k8s-master-b-test-02   <none>           <none>
htbb-api-556df66b7-x2rpc          1/1     Running       0          23m     10.244.1.61   htbb-k8s-node-b-test-02     <none>           <none>
htbb-interface-6ccb4db757-4dwl7   1/1     Running       0          31m     10.244.2.48   htbb-k8s-node-b-test-01     <none>           <none>
htbb-wechat-6b95b6fbcc-r59xq      1/1     Running       0          61m     10.244.0.60   htbb-k8s-master-b-test-01   <none>           <none>
htbbpay-75749466f5-5nvj8          1/1     Running       0          20m     10.244.4.77   htbb-k8s-node-d-test-04     <none>           <none>
htbbtask-d778d8565-m2tqp          1/1     Running       0          17m     10.244.3.61   htbb-k8s-master-b-test-02   <none>           <none>
jx-d8bb958f9-4x4hb                1/1     Running       0          4h29m   10.244.2.47   htbb-k8s-node-b-test-01     <none>           <none>
jx-wechat-678bfb58c-j6qk5         1/1     Running       0          15m     10.244.5.82   htbb-k8s-node-d-test-03     <none>           <none>
mdrive-68557dfbb4-4q2n5           1/1     Running       0          107d    10.244.5.60   htbb-k8s-node-d-test-03     <none>           <none>
petapi-5d45c8f9f8-qkwr2           1/1     Running       0          18m     10.244.0.61   htbb-k8s-master-b-test-01   <none>           <none>
upload-566c6f6688-qkdm6           1/1     Running       0          26m     10.244.5.81   htbb-k8s-node-d-test-03     <none>           <none>
[root@htbb-k8s-master-b-test-01 app]# kubectl get pods -o wide
NAME                              READY   STATUS        RESTARTS   AGE     IP            NODE                        NOMINATED NODE   READINESS GATES
app-57898c8d76-96l5f              0/1     Running       0          2m47s   10.244.3.62   htbb-k8s-master-b-test-02   <none>           <none>
app-bdbcfb5b9-qvbxl               1/1     Running       0          66m     10.244.1.60   htbb-k8s-node-b-test-02     <none>           <none>
htbb-admin-855568f4d-vz6sx        1/1     Running       0          22h     10.244.3.59   htbb-k8s-master-b-test-02   <none>           <none>
htbb-api-556df66b7-x2rpc          1/1     Running       0          23m     10.244.1.61   htbb-k8s-node-b-test-02     <none>           <none>
htbb-interface-6ccb4db757-4dwl7   1/1     Running       0          31m     10.244.2.48   htbb-k8s-node-b-test-01     <none>           <none>
htbb-wechat-6b95b6fbcc-r59xq      1/1     Running       0          62m     10.244.0.60   htbb-k8s-master-b-test-01   <none>           <none>
htbbpay-75749466f5-5nvj8          1/1     Running       0          20m     10.244.4.77   htbb-k8s-node-d-test-04     <none>           <none>
htbbtask-d778d8565-m2tqp          1/1     Running       0          17m     10.244.3.61   htbb-k8s-master-b-test-02   <none>           <none>
jx-d8bb958f9-4x4hb                1/1     Running       0          4h29m   10.244.2.47   htbb-k8s-node-b-test-01     <none>           <none>
jx-wechat-678bfb58c-j6qk5         1/1     Running       0          15m     10.244.5.82   htbb-k8s-node-d-test-03     <none>           <none>
mdrive-68557dfbb4-4q2n5           1/1     Running       0          107d    10.244.5.60   htbb-k8s-node-d-test-03     <none>           <none>
petapi-5d45c8f9f8-qkwr2           1/1     Running       0          18m     10.244.0.61   htbb-k8s-master-b-test-01   <none>           <none>
upload-566c6f6688-qkdm6           1/1     Running       0          26m     10.244.5.81   htbb-k8s-node-d-test-03     <none>           <none>
[root@htbb-k8s-master-b-test-01 app]# kubectl get pods -o wide
NAME                              READY   STATUS        RESTARTS   AGE     IP            NODE                        NOMINATED NODE   READINESS GATES
app-57898c8d76-96l5f              1/1     Running       0          2m51s   10.244.3.62   htbb-k8s-master-b-test-02   <none>           <none>
app-bdbcfb5b9-qvbxl               1/1     Terminating   0          66m     10.244.1.60   htbb-k8s-node-b-test-02     <none>           <none>
htbb-admin-855568f4d-vz6sx        1/1     Running       0          22h     10.244.3.59   htbb-k8s-master-b-test-02   <none>           <none>
htbb-api-556df66b7-x2rpc          1/1     Running       0          23m     10.244.1.61   htbb-k8s-node-b-test-02     <none>           <none>
htbb-interface-6ccb4db757-4dwl7   1/1     Running       0          31m     10.244.2.48   htbb-k8s-node-b-test-01     <none>           <none>
htbb-wechat-6b95b6fbcc-r59xq      1/1     Running       0          62m     10.244.0.60   htbb-k8s-master-b-test-01   <none>           <none>
htbbpay-75749466f5-5nvj8          1/1     Running       0          20m     10.244.4.77   htbb-k8s-node-d-test-04     <none>           <none>
htbbtask-d778d8565-m2tqp          1/1     Running       0          17m     10.244.3.61   htbb-k8s-master-b-test-02   <none>           <none>
jx-d8bb958f9-4x4hb                1/1     Running       0          4h29m   10.244.2.47   htbb-k8s-node-b-test-01     <none>           <none>
jx-wechat-678bfb58c-j6qk5         1/1     Running       0          15m     10.244.5.82   htbb-k8s-node-d-test-03     <none>           <none>
mdrive-68557dfbb4-4q2n5           1/1     Running       0          107d    10.244.5.60   htbb-k8s-node-d-test-03     <none>           <none>
petapi-5d45c8f9f8-qkwr2           1/1     Running       0          19m     10.244.0.61   htbb-k8s-master-b-test-01   <none>           <none>
upload-566c6f6688-qkdm6           1/1     Running       0          27m     10.244.5.81   htbb-k8s-node-d-test-03     <none>           <none>
```
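Why is the new pod created before the old one is removed? The Deployment in app-deploy-svc.yaml only sets `type: RollingUpdate`, so the Kubernetes defaults `maxSurge: 25%` and `maxUnavailable: 25%` apply. Kubernetes rounds the surge up and the unavailable count down, so with `replicas: 1` the rollout may run one extra pod but may not take a pod away until a replacement is Ready. A Python sketch of that arithmetic (the function name is hypothetical):

```python
from typing import Tuple


def rolling_update_bounds(replicas: int, max_surge_pct: int = 25,
                          max_unavailable_pct: int = 25) -> Tuple[int, int]:
    """Resolve percentage maxSurge/maxUnavailable into pod counts.

    maxSurge is rounded up and maxUnavailable is rounded down; if both end up
    as 0, maxUnavailable is bumped to 1 so that the rollout can still proceed.
    """
    surge = -(-(replicas * max_surge_pct) // 100)        # ceiling division
    unavailable = (replicas * max_unavailable_pct) // 100  # floor division
    if surge == 0 and unavailable == 0:
        unavailable = 1
    return surge, unavailable


if __name__ == "__main__":
    for n in (1, 4, 10):
        surge, unavailable = rolling_update_bounds(n)
        print(f"replicas={n}: up to {n + surge} pods during the rollout, "
              f"at least {n - unavailable} available")
```

For `replicas: 1` this yields (1, 0): at most two pods exist during the rollout and at least one stays available, which is the behavior visible in the snapshots above.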

```
[root@htbb-k8s-master-b-test-01 app]# cat app-deploy-svc.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: app
  name: app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: app
  strategy:
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: app
    spec:
      volumes:
      - name: tomcat-log
        emptyDir: {}
      - name: pub-configs
        hostPath:
          path: /nas/pub_configs
      - name: htbb-share
        hostPath:
          path: /nas/htbb_share
      imagePullSecrets:
      - name: aliyun-registry
      containers:
      - image: registry.cn-shenzhen.aliyuncs.com/htbb-test/app:master_2020-11-05_14-37
        name: app
        env:
        - name: aliyun_logs_app-catalina
          value: "stdout"
        - name: aliyun_logs_app-project-log
          value: "/data/logs/tomcat_log/project_log/htagent-api/*.log"
        resources: {}
        imagePullPolicy: Always
        volumeMounts:
        - name: pub-configs
          mountPath: /nas/pub_configs
        - name: htbb-share
          mountPath: /nas/htbb_share
        - name: tomcat-log
          mountPath: /data/logs/tomcat_log
        livenessProbe:
          tcpSocket:
            port: 8080
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 5
          failureThreshold: 3
        readinessProbe:
          httpGet:
            path: /app/learn/getMediaList?pageIndex=1&pageSize=10&userId=1&type=1
            port: 8080
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 3
          failureThreshold: 3
---
apiVersion: v1
kind: Service
metadata:
  name: app
  labels:
    app: app
  namespace: default
spec:
  selector:
    app: app
  ports:
  - name: app
    port: 8080
    targetPort: 8080
```
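The readinessProbe settings in this manifest (initialDelaySeconds 10, periodSeconds 10, successThreshold 1, failureThreshold 3) decide how soon the new pod counts as Ready and the old one may be removed. Below is a simplified Python model of the consecutive-threshold rule documented for Kubernetes probes: a pod starts NotReady, becomes Ready after `successThreshold` consecutive successes, and drops out after `failureThreshold` consecutive failures. The class and function are hypothetical and ignore probe timeouts and timing jitter, so they approximate the kubelet's behavior rather than reproduce it.

```python
from typing import Iterable, Optional


class ReadinessTracker:
    """Consecutive-threshold logic of a readinessProbe. Starts NotReady."""

    def __init__(self, success_threshold: int = 1, failure_threshold: int = 3):
        self.success_threshold = success_threshold
        self.failure_threshold = failure_threshold
        self.ready = False
        self._successes = 0
        self._failures = 0

    def record(self, ok: bool) -> bool:
        """Feed one probe result and return the resulting readiness."""
        if ok:
            self._successes += 1
            self._failures = 0
            if self._successes >= self.success_threshold:
                self.ready = True
        else:
            self._failures += 1
            self._successes = 0
            if self._failures >= self.failure_threshold:
                self.ready = False
        return self.ready


def seconds_until_ready(results: Iterable[bool], initial_delay: int = 10,
                        period: int = 10, **thresholds) -> Optional[int]:
    """Approximate seconds after container start at which the pod turns Ready,
    given successive probe outcomes; None if it never does."""
    tracker = ReadinessTracker(**thresholds)
    for i, ok in enumerate(results):
        if tracker.record(ok):
            return initial_delay + i * period
    return None


if __name__ == "__main__":
    # e.g. Tomcat still starting for the first five probes
    print(seconds_until_ready([False] * 5 + [True]))
```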

1.3 jx_wechat logs cannot be collected after deployment

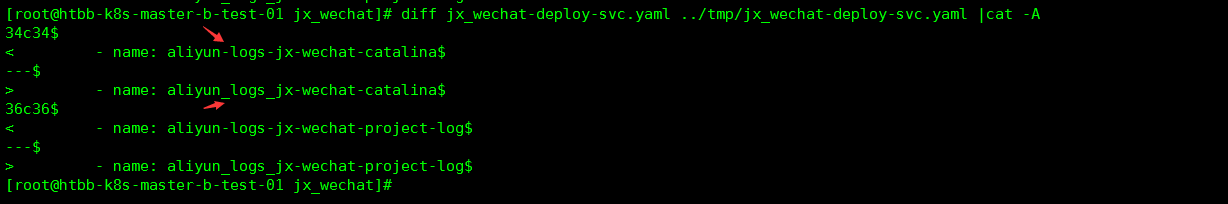

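After jx_wechat was deployed, its readiness probe passed and the service worked normally, but log-pilot collected none of its logs, neither the catalina log nor the project logs. Elasticsearch had no index for it, and the logs of the log-pilot pod on the node running jx_wechat showed collection messages for other projects but nothing for jx_wechat. In the end I copied the deployment YAML of a project whose logs were collected correctly, changed it to jx_wechat's settings, and the index appeared in Elasticsearch. Running diff against jx_wechat's original YAML revealed the cause: the log-pilot part of the YAML must use underscores exactly, and the jx_wechat file had hyphens there instead.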

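Comparison of the configuration file before and after the change

Before the change, no logs were collected (there is no jx-wechat index):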

```
[root@e4dce53fcdf9 logs]# curl localhost:9200/_cat/indices?v -s|grep -Ev "kibana|tasks"
health status index                                 uuid                   pri rep docs.count docs.deleted store.size pri.store.size
yellow open   htbb-api-catalina-2020.11.05          ayy6NGJKSXGtEjB-u0jrkA 5   1   46         0            298.6kb    298.6kb
yellow open   htbb-admin-catalina-2020.11.05        po_LC8FBRoOt3N5o-KKZjA 5   1   186        0            628.4kb    628.4kb
yellow open   htbb-interface-catalina-2020.11.05    Pv0CeRbPT8GNvTRzqB43EQ 5   1   41         0            337.2kb    337.2kb
yellow open   petapi-catalina-2020.11.05            XwaBYiIKSvOxRDCiUUyZ8Q 5   1   47         0            299.9kb    299.9kb
yellow open   app-project-log-2020.11.05            sd62CrLLRTaa-1-ZXct_UQ 5   1   22003      0            11.3mb     11.3mb
yellow open   htbbtask-project-log-2020.11.05       Mbsl3P-5ToawC4empR7BBQ 5   1   10086      0            4.7mb      4.7mb
yellow open   htbb-wechat-project-log-2020.11.05    ftOqrZrTQd-UqhPfZjseCw 5   1   75         0            244.8kb    244.8kb
yellow open   app-catalina-2020.11.05               jl1g43nnR8SHDZtC1ZfsNw 5   1   575        0            5mb        5mb
yellow open   jx-catalina-2020.11.05                tqU9S-muQzOBxtHVGAhFjQ 5   1   1153       0            1.4mb      1.4mb
yellow open   jx-project-log-2020.11.05             1d0UGKLrQ9OBA-bAHPmMfA 5   1   1111       0            1.4mb      1.4mb
yellow open   htbb-api-project-log-2020.11.05       Pl9XresLTcaWOsQdsYU_kw 5   1   1          0            79.9kb     79.9kb
yellow open   htbbtask-catalina-2020.11.05          EAUiuLa-RTOkCF2vHR3GCA 5   1   41         0            462.9kb    462.9kb
yellow open   upload-project-log-2020.11.05         ce-e9OjaRbWarDp4wj4gkw 5   1   44         0            252.8kb    252.8kb
yellow open   htbb-wechat-catalina-2020.11.05       86zipBjKSsatcuWiasCdcw 5   1   84         0            456.3kb    456.3kb
yellow open   upload-catalina-2020.11.05            H-Ee8ebrS2WXxz64tOu9fw 5   1   41         0            216.7kb    216.7kb
yellow open   htbbpay-project-log-2020.11.05        3yaFAe_YTcuWBndBRzRKYg 5   1   89         0            209.9kb    209.9kb
yellow open   htbbpay-catalina-2020.11.05           utIUsSdOSmOTCC2cTTgJqw 5   1   41         0            328.4kb    328.4kb
yellow open   htbb-admin-project-log-2020.11.05     gL2bV5XpQl-pI5UzIm-jLw 5   1   483        0            678.9kb    678.9kb
yellow open   htbb-interface-project-log-2020.11.05 x9xMMFXbRJ-lrnj6UKjjeA 5   1   190        0            396.3kb    396.3kb
yellow open   petapi-project-log-2020.11.05         nTC7QnZeRzWfge-E1Ikgyw 5   1   5          0            121.4kb    121.4kb
```

After the change, the jx-wechat-* indices appear:

```
[root@htbb-k8s-node-d-test-03 ~]# curl localhost:9200/_cat/indices?v -s|grep -Ev "kibana|tasks"
health status index                                 uuid                   pri rep docs.count docs.deleted store.size pri.store.size
yellow open   htbb-api-catalina-2020.11.05          ayy6NGJKSXGtEjB-u0jrkA 5   1   46         0            298.6kb    298.6kb
yellow open   htbb-admin-catalina-2020.11.05        po_LC8FBRoOt3N5o-KKZjA 5   1   209        0            585.2kb    585.2kb
yellow open   htbb-interface-catalina-2020.11.05    Pv0CeRbPT8GNvTRzqB43EQ 5   1   41         0            337.2kb    337.2kb
yellow open   htbbtask-project-log-2020.11.05       Mbsl3P-5ToawC4empR7BBQ 5   1   15675      0            6.7mb      6.7mb
yellow open   app-project-log-2020.11.05            sd62CrLLRTaa-1-ZXct_UQ 5   1   27074      0            13.9mb     13.9mb
yellow open   jx-catalina-2020.11.05                tqU9S-muQzOBxtHVGAhFjQ 5   1   1384       0            1.6mb      1.6mb
yellow open   jx-project-log-2020.11.05             1d0UGKLrQ9OBA-bAHPmMfA 5   1   1342       0            1.2mb      1.2mb
yellow open   htbb-api-project-log-2020.11.05       Pl9XresLTcaWOsQdsYU_kw 5   1   1          0            79.9kb     79.9kb
yellow open   upload-project-log-2020.11.05         ce-e9OjaRbWarDp4wj4gkw 5   1   44         0            252.8kb    252.8kb
yellow open   htbb-wechat-catalina-2020.11.05       86zipBjKSsatcuWiasCdcw 5   1   84         0            456.3kb    456.3kb
yellow open   jx-wechat-project-log-2020.11.05      e4UWyIBcQQmqhyCVaIbkcw 5   1   82         0            364.3kb    364.3kb
yellow open   upload-catalina-2020.11.05            H-Ee8ebrS2WXxz64tOu9fw 5   1   41         0            216.7kb    216.7kb
yellow open   htbbpay-catalina-2020.11.05           utIUsSdOSmOTCC2cTTgJqw 5   1   41         0            328.4kb    328.4kb
yellow open   petapi-catalina-2020.11.05            XwaBYiIKSvOxRDCiUUyZ8Q 5   1   47         0            299.9kb    299.9kb
yellow open   htbb-wechat-project-log-2020.11.05    ftOqrZrTQd-UqhPfZjseCw 5   1   75         0            244.8kb    244.8kb
yellow open   app-catalina-2020.11.05               jl1g43nnR8SHDZtC1ZfsNw 5   1   805        0            6mb        6mb
yellow open   htbbtask-catalina-2020.11.05          EAUiuLa-RTOkCF2vHR3GCA 5   1   41         0            462.9kb    462.9kb
yellow open   htbbpay-project-log-2020.11.05        3yaFAe_YTcuWBndBRzRKYg 5   1   89         0            209.9kb    209.9kb
yellow open   jx-wechat-catalina-2020.11.05         C6YCdzSOQN6HXBWO12oLMQ 5   1   41         0            215.4kb    215.4kb
yellow open   htbb-admin-project-log-2020.11.05     gL2bV5XpQl-pI5UzIm-jLw 5   1   529        0            967kb      967kb
yellow open   petapi-project-log-2020.11.05         nTC7QnZeRzWfge-E1Ikgyw 5   1   5          0            121.4kb    121.4kb
yellow open   htbb-interface-project-log-2020.11.05 x9xMMFXbRJ-lrnj6UKjjeA 5   1   190        0            396.3kb    396.3kb
[root@htbb-k8s-node-d-test-03 ~]# curl localhost:9200/_cat/indices?v -s|grep -Ev "kibana|tasks"|grep jx-wechat
yellow open   jx-wechat-project-log-2020.11.05      e4UWyIBcQQmqhyCVaIbkcw 5   1   82         0            364.3kb    364.3kb
yellow open   jx-wechat-catalina-2020.11.05         C6YCdzSOQN6HXBWO12oLMQ 5   1   41         0            215.4kb    215.4kb
[root@htbb-k8s-node-d-test-03 ~]#
```
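The diff is not reproduced in the text, so exactly which hyphen was wrong is not shown. log-pilot reads its settings from container environment variables named `aliyun_logs_<name>` (as in app-deploy-svc.yaml), so one plausible culprit is a prefix typed with hyphens. Below is a Python linting sketch under that assumption; `lint_logpilot_env` is hypothetical and is not part of log-pilot.

```python
import re
from typing import Dict, List

# log-pilot settings are env vars of the form aliyun_logs_<name>=<stdout|path>.
PREFIX = "aliyun_logs_"
# Anything that looks like that prefix but with '-' and '_' mixed up, in any case.
NEAR_MISS = re.compile(r"^aliyun[-_]logs[-_]", re.IGNORECASE)


def lint_logpilot_env(env: Dict[str, str]) -> List[str]:
    """Warn about env var names that resemble log-pilot settings but do not
    use the exact aliyun_logs_ prefix (and so would likely not be picked up)."""
    warnings = []
    for name in env:
        if NEAR_MISS.match(name) and not name.startswith(PREFIX):
            warnings.append(f"{name}: expected prefix {PREFIX!r}")
    return warnings


if __name__ == "__main__":
    env = {
        "aliyun-logs-jx-wechat-catalina": "stdout",          # hypothetical typo
        "aliyun_logs_jx-wechat-project-log": "/path/to/*.log",  # hypothetical path
    }
    print(lint_logpilot_env(env))
```

Note that only the prefix is checked: the `<name>` part may legitimately contain hyphens, as the working `jx-wechat-catalina` index shows.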

1.4 Logs under project_log are not collected

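log-pilot could collect the stdout log (that is, catalina.out) but not the logs in the project_log directory. To troubleshoot, check whether the path configured in the YAML matches the log path the project actually uses inside the pod; that mismatch was the cause here. Another example: the statistic-service project's WAR file is named statistic-service, but its directory under project_log has an extra "s" (statistics-service).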
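One way to catch this kind of mismatch before looking at Elasticsearch is to check, from inside the pod (for example via `kubectl exec`), whether the configured pattern matches any file, and to suggest similarly named directories when it does not. This Python sketch assumes Python 3 is available in the container and that patterns have the `.../project_log/<project>/*.log` shape used in app-deploy-svc.yaml; `check_log_glob` is a hypothetical helper.

```python
import difflib
import glob
import os
from typing import List


def check_log_glob(pattern: str) -> List[str]:
    """Return problems for a log file pattern such as
    /data/logs/tomcat_log/project_log/<project>/*.log; [] if files match."""
    if glob.glob(pattern):
        return []
    project_dir = os.path.dirname(pattern)   # .../project_log/<project>
    parent = os.path.dirname(project_dir)    # .../project_log
    wanted = os.path.basename(project_dir)   # <project>
    if not os.path.isdir(parent):
        return [f"{parent} does not exist"]
    # Directory names that differ only slightly, e.g. by one extra letter.
    close = difflib.get_close_matches(wanted, sorted(os.listdir(parent)), n=3, cutoff=0.8)
    hint = f"; similar directories: {', '.join(close)}" if close else ""
    return [f"no files match {pattern}{hint}"]


if __name__ == "__main__":
    print(check_log_glob("/data/logs/tomcat_log/project_log/statistic-service/*.log"))
```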

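Summary:

Almost all of the four problems above came down to inconsistent naming: mixed letter case, or a project name that differs from the name used in the log configuration. Consistent naming standards save a great deal of time in later maintenance and troubleshooting.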

1.5 readinessProbe cannot pass when probing through the external domain

Probe URL: http://testadmin.hthu.com.cn/htbb-admin/healthcheck?serverName=htbb-api

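The company uses a microservice architecture split into an application layer and a service layer. Applications in the application layer can each expose a monitoring/probe endpoint directly, parameterized through query parameters, but a service-layer service can only be checked by calling it through an application. The company therefore has a single, unified health-check endpoint implemented in the admin backend (htbb-admin), which calls the other services to determine whether each application or service is healthy. For an application-layer app, this endpoint sends a request to the app's full domain-name URL; for a service, it calls the service over Dubbo RPC. One drawback is that, because every check goes through the admin backend, all health checks stop working whenever the admin backend's health-check endpoint itself has a problem.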

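In k8s, however, this approach breaks down. After htbb-api is deployed, it only receives user requests once its readinessProbe succeeds. Assume the admin backend's health-check endpoint is working. The readinessProbe requests http://testadmin.hthu.com.cn/htbb-admin/healthcheck?serverName=htbb-api; htbb-admin in turn requests http://testdata.hthu.com.cn/htbb-api/healthcheck. But htbb-api has not yet passed its readiness check, and a pod that is not Ready is left out of its Service's endpoints, so that request cannot reach htbb-api. The result is a circular dependency: htbb-admin can never get a response from that URL, so htbb-api never passes its readinessProbe.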

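So the root cause is where the health-check request comes from: here it is not sent by Kubernetes' own probing component but relayed through the external domain. Probes are executed by the kubelet on the pod's node, and an httpGet probe with only a path and port (no host) is sent straight to the pod IP. That is why htbb-admin's own readinessProbe, written as /htbb-admin/healthcheck?serverName=htbb-admin, succeeds. Even for htbb-admin, though, the probe must not use the external address: the request would then travel through the domain and the Service, which (with a single replica) have no Ready pod to route to yet, so the probe would also hang and never pass. This was verified by testing.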
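The circular dependency can be reduced to a toy model: a request routed through a domain name only reaches pods that are already Ready, while a path-only probe reaches the pod IP directly. This Python sketch illustrates that reasoning and is not Kubernetes code; the function name and the "rounds" loop are invented for the example.

```python
def becomes_ready(rounds: int, probe_through_admin: bool) -> bool:
    """Toy model of section 1.5: can htbb-api pass its readinessProbe?

    Assumptions: a domain/Service only routes to Ready pods; a probe that
    targets the pod IP reaches the container directly; htbb-api's own
    health check succeeds as soon as the container is running.
    """
    ready = {"htbb-admin"}  # the admin backend is already up and Ready
    for _ in range(rounds):
        if probe_through_admin:
            # kubelet -> htbb-admin (external domain) -> htbb-api (external domain):
            # the second hop can only succeed if htbb-api is Ready already.
            ok = "htbb-admin" in ready and "htbb-api" in ready
        else:
            # kubelet -> htbb-api pod IP:8080/<health-check path>
            ok = True
        if ok:
            ready.add("htbb-api")
            return True
    return False


if __name__ == "__main__":
    print("probe via admin URL:", becomes_ready(1000, probe_through_admin=True))
    print("probe on pod itself:", becomes_ready(1000, probe_through_admin=False))
```

The practical takeaway, consistent with the test described above, is to give each application a readinessProbe whose `httpGet` has only `path` and `port`, pointing at an endpoint that the application serves itself, as app-deploy-svc.yaml does.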

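2 Letting pods inside k8s resolve external domain names and hostnames

The cluster runs Kubernetes 1.18; versions newer than 1.12 use CoreDNS by default. CoreDNS alone, however, cannot resolve the company's other internal names. For example, application config files refer to hostnames defined in the hosts files of the cloud servers. Because the ZooKeeper cluster runs on cloud hosts, and the microservices in pods read the ZooKeeper address from their config files in order to register, pods inside the cluster must be able to resolve names that are defined outside it.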

```
[root@htbb-k8s-master-b-test-01 k8s]# kubectl exec -it htbb-order-service-9dd69c8f8-8c8dq -- bash
[root@htbb-order-service-9dd69c8f8-8c8dq /]# ping htbb-k8s-node-d-test-04
ping: htbb-k8s-node-d-test-04: Name or service not known
[root@htbb-order-service-9dd69c8f8-8c8dq /]# cat /etc/hosts
# Kubernetes-managed hosts file.
127.0.0.1       localhost
::1             localhost ip6-localhost ip6-loopback
fe00::0         ip6-localnet
fe00::0         ip6-mcastprefix
fe00::1         ip6-allnodes
fe00::2         ip6-allrouters
10.244.0.65     htbb-order-service-9dd69c8f8-8c8dq
```

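According to the official Kubernetes documentation: "If a Pod's dnsPolicy is set to default, it inherits the name resolution configuration from the node that the Pod runs on. The Pod's DNS resolution should behave the same as the node." Pods use `ClusterFirst` unless configured otherwise; in that mode, any query that does not match the cluster domain suffix is forwarded to an upstream nameserver inherited from the node.

The lookup above fails because a name that the cluster DNS cannot answer is forwarded to the DNS servers inherited from the node (the node's /etc/resolv.conf). htbb-k8s-node-d-test-04 is a company-internal hostname, so those external resolvers cannot resolve it.

The solution is based on the following references: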
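Why does a bare hostname like htbb-k8s-node-d-test-04 end up at the upstream servers? A ClusterFirst pod's /etc/resolv.conf normally lists the namespace search domains together with `options ndots:5`, so the stub resolver first tries the name with each cluster suffix (CoreDNS's kubernetes plugin answers those with NXDOMAIN) and only then tries it as-is, which CoreDNS forwards. A small Python sketch of that ordering; the search list is the usual one for the `default` namespace and is an assumption about this cluster, and the helper names are hypothetical.

```python
from typing import List

# Search list the kubelet typically writes for a ClusterFirst pod in the
# "default" namespace (node-inherited search domains, if any, omitted here).
SEARCH = ["default.svc.cluster.local", "svc.cluster.local", "cluster.local"]


def candidate_queries(name: str, search: List[str], ndots: int = 5) -> List[str]:
    """Order in which a glibc-style stub resolver tries fully qualified names."""
    if name.endswith("."):
        return [name]                      # already absolute: no search list
    expanded = [f"{name}.{suffix}." for suffix in search]
    if name.count(".") >= ndots:
        return [name + "."] + expanded     # enough dots: absolute name first
    return expanded + [name + "."]         # otherwise search suffixes first


def handled_by(fqdn: str, cluster_domain: str = "cluster.local.") -> str:
    """Which Corefile plugin answers: kubernetes owns the cluster domain,
    everything else goes to forward."""
    return "kubernetes" if fqdn.endswith("." + cluster_domain) else "forward"


if __name__ == "__main__":
    for q in candidate_queries("htbb-k8s-node-d-test-04", SEARCH):
        print(f"{q:55} -> {handled_by(q)}")
```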

https://www.cnblogs.com/cuishuai/p/9856843.html

https://kubernetes.io/docs/tasks/administer-cluster/dns-custom-nameservers/

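2.1 Approach

To resolve the company's internal applications that do not run on k8s, we need a DNS server that knows about them, and CoreDNS is configured to forward any name it cannot resolve (anything outside the cluster) to that DNS server as its upstream. dnsmasq is used for this, and its own upstream is the DNS server listed in the cloud server's default /etc/resolv.conf. With this chain, k8s-internal names, private company hostnames and public Internet names all resolve.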
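The resolution chain can be modeled in a few lines of Python. This sketch uses made-up lookup tables (the addresses are the ones seen in section 2.4) and only shows which component answers which kind of name; it is not how CoreDNS or dnsmasq are implemented, and all names in it are hypothetical.

```python
from typing import Dict, Optional, Tuple

CLUSTER_DOMAIN = "cluster.local"
# Illustrative data only: the addresses are the ones observed in section 2.4.
CLUSTER_RECORDS = {"htbb-order-service.default.svc.cluster.local": "10.0.0.151"}
NODE_HOSTS_FILE = "127.0.0.1 localhost\n172.16.51.205 htbb-k8s-node-d-test-04\n"
UPSTREAM_RECORDS = {"baidu.com": "39.156.69.79"}


def parse_hosts(text: str) -> Dict[str, str]:
    """Parse /etc/hosts-style lines (the format dnsmasq's addn-hosts reads)."""
    records: Dict[str, str] = {}
    for line in text.splitlines():
        fields = line.split("#", 1)[0].split()
        if len(fields) < 2:
            continue
        ip, *names = fields
        for host in names:
            records.setdefault(host, ip)
    return records


def resolve(name: str, hosts: Dict[str, str]) -> Optional[Tuple[str, str]]:
    """Return (who answered, address) following CoreDNS -> dnsmasq -> upstream."""
    if name.endswith("." + CLUSTER_DOMAIN):
        # kubernetes plugin is authoritative here; no fallthrough for these names
        ip = CLUSTER_RECORDS.get(name)
        return ("coredns kubernetes plugin", ip) if ip else None
    if name in hosts:                  # forward . <dnsmasq>, answered from hosts
        return ("dnsmasq hosts file", hosts[name])
    if name in UPSTREAM_RECORDS:       # dnsmasq -> servers in node's resolv.conf
        return ("dnsmasq upstream", UPSTREAM_RECORDS[name])
    return None


if __name__ == "__main__":
    hosts = parse_hosts(NODE_HOSTS_FILE)
    for n in ("htbb-order-service.default.svc.cluster.local",
              "htbb-k8s-node-d-test-04", "baidu.com"):
        print(n, resolve(n, hosts))
```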

2.2 Deploying dnsmasq

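```
# Install
yum install -y dnsmasq
# List the files installed by the package
rpm -ql dnsmasq
# Edit the configuration
vim /etc/dnsmasq.conf
```

The effective configuration (comments and blank lines filtered out):

```
[root@htbb-k8s-master-b-test-01 ~]# egrep -v '^#|^$' /etc/dnsmasq.conf
port=53
strict-order
listen-address=127.0.0.1,172.16.1.178
no-dhcp-interface=eth0
addn-hosts=/etc/hosts
conf-dir=/etc/dnsmasq.d,.rpmnew,.rpmsave,.rpmorig
```

What each option does:

```
# Port dnsmasq listens on
port=53
# Query the upstream servers strictly in the order they appear in the resolv file
strict-order
# Addresses to listen on; 127.0.0.1 must be listed explicitly,
# otherwise dnsmasq does not listen on the loopback interface
listen-address=127.0.0.1,172.16.1.178
# Interface on which DHCP is not served (DNS still is); optional,
# since DHCP is not enabled by default
no-dhcp-interface=eth0
# Additional hosts file used as the source of DNS records
# (dnsmasq also reads /etc/hosts by default unless no-hosts is set)
addn-hosts=/etc/hosts
# Change this line if you want dns to get its upstream servers from
# somewhere other that /etc/resolv.conf
#resolv-file=
# Left at the default, so dnsmasq's upstream DNS servers are the node's own
```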
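Before pointing CoreDNS at dnsmasq, it can help to confirm that dnsmasq answers for a name from /etc/hosts on its listen address 172.16.1.178. Below is a dependency-free Python sketch that sends one DNS A query over UDP in RFC 1035 wire format. The helper names are hypothetical, and the sketch deliberately skips what a real client needs (checking the response ID and RCODE, retries, TCP fallback for truncated answers); `dig @172.16.1.178 <name>` does the same job where bind-utils is installed.

```python
import socket
import struct
from typing import List


def build_query(name: str, qid: int = 0x1234) -> bytes:
    """Build a minimal DNS query for an A record, with recursion desired."""
    header = struct.pack("!HHHHHH", qid, 0x0100, 1, 0, 0, 0)
    qname = b"".join(bytes([len(label)]) + label.encode("ascii")
                     for label in name.rstrip(".").split(".")) + b"\x00"
    return header + qname + struct.pack("!HH", 1, 1)  # QTYPE=A, QCLASS=IN


def _skip_name(msg: bytes, pos: int) -> int:
    """Return the offset just past a (possibly compressed) domain name."""
    while True:
        length = msg[pos]
        if length & 0xC0 == 0xC0:   # compression pointer: 2 bytes, ends the name
            return pos + 2
        if length == 0:
            return pos + 1
        pos += 1 + length


def parse_a_records(msg: bytes) -> List[str]:
    """Extract IPv4 addresses from the answer section of a DNS response."""
    _qid, _flags, qdcount, ancount, _ns, _ar = struct.unpack("!HHHHHH", msg[:12])
    pos = 12
    for _ in range(qdcount):
        pos = _skip_name(msg, pos) + 4  # skip QTYPE and QCLASS
    addresses = []
    for _ in range(ancount):
        pos = _skip_name(msg, pos)
        rtype, _rclass, _ttl, rdlength = struct.unpack("!HHIH", msg[pos:pos + 10])
        pos += 10
        if rtype == 1 and rdlength == 4:
            addresses.append(socket.inet_ntoa(msg[pos:pos + 4]))
        pos += rdlength
    return addresses


def query(server: str, name: str, timeout: float = 2.0) -> List[str]:
    """Send one UDP query to server:53 and return the A records in the reply."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_query(name), (server, 53))
        response, _ = sock.recvfrom(512)
    return parse_a_records(response)


if __name__ == "__main__":
    # 172.16.1.178 is the dnsmasq listen-address configured above
    print(query("172.16.1.178", "htbb-k8s-node-d-test-04"))
```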

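2.3 Changing the CoreDNS ConfigMap

Change the CoreDNS ConfigMap so that, for names it cannot answer, CoreDNS forwards to the custom dnsmasq server instead of the DNS servers in the node's /etc/resolv.conf; if dnsmasq has no record either, it forwards to the node's default DNS.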

```
# Please edit the object below. Lines beginning with a '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: v1
data:
  Corefile: |
    .:53 {
        log
        errors
        health {
           lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
           pods insecure
           fallthrough in-addr.arpa ip6.arpa
           ttl 30
        }
        prometheus :9153
        forward . 172.16.1.178   # forward directly to dnsmasq for resolution
        cache 30
        loop
        reload
        loadbalance
    }
```
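The only functional change to the stock Corefile is the `forward` line: kubeadm clusters of this era typically ship `forward . /etc/resolv.conf`, which is replaced here by `forward . 172.16.1.178`. If you prefer to script the edit, below is a Python sketch of that text substitution, assuming the Corefile is fetched from the usual `coredns` ConfigMap in `kube-system` (for example with `kubectl -n kube-system get configmap coredns -o jsonpath='{.data.Corefile}'`); `point_forward_at` is a hypothetical helper.

```python
import re

# A root-zone forward directive, e.g. "forward . /etc/resolv.conf" or
# "forward . /etc/resolv.conf {" (with an options block). Group 1 keeps the
# indentation, group 2 keeps an opening brace if there is one.
FORWARD_ROOT = re.compile(r"^([ \t]*)forward[ \t]+\.[ \t]+[^{\n]+?([ \t]*\{)?[ \t]*$",
                          re.MULTILINE)


def point_forward_at(corefile: str, upstream: str) -> str:
    """Return `corefile` with the upstream list of `forward .` replaced by `upstream`."""
    new, count = FORWARD_ROOT.subn(
        lambda m: f"{m.group(1)}forward . {upstream}{m.group(2) or ''}", corefile)
    if count != 1:
        raise ValueError(f"expected exactly one 'forward .' directive, found {count}")
    return new


if __name__ == "__main__":
    stock = ".:53 {\n    errors\n    forward . /etc/resolv.conf\n    cache 30\n}\n"
    print(point_forward_at(stock, "172.16.1.178"))
```

Because the Corefile already enables the `reload` plugin, CoreDNS should pick up the new configuration by itself once the updated ConfigMap has propagated to its pods.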

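Tip: without changing CoreDNS's forwarding, resolving internal applications would require changing the DNS server in each cloud host's /etc/resolv.conf to the dnsmasq address, and setting dnsmasq's upstream to the cloud host's original DNS or to a public resolver such as 223.5.5.5 or 8.8.8.8. That is more cumbersome: because CoreDNS by default forwards to the DNS servers in the node's /etc/resolv.conf, every node's DNS setting would have to point at dnsmasq. It is also best to change Alibaba Cloud's default settings as little as possible.

2.4 Verifying resolution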

```
[root@htbb-k8s-master-b-test-01 ~]# kubectl exec -it htbb-order-service-9dd69c8f8-8c8dq -- bash
[root@htbb-order-service-9dd69c8f8-8c8dq /]# ping htbb-k8s-node-d-test-04
PING htbb-k8s-node-d-test-04 (172.16.51.205) 56(84) bytes of data.
64 bytes from htbb-k8s-node-d-test-04 (172.16.51.205): icmp_seq=1 ttl=63 time=1.13 ms
64 bytes from htbb-k8s-node-d-test-04 (172.16.51.205): icmp_seq=2 ttl=63 time=1.10 ms
64 bytes from htbb-k8s-node-d-test-04 (172.16.51.205): icmp_seq=3 ttl=63 time=1.15 ms
64 bytes from htbb-k8s-node-d-test-04 (172.16.51.205): icmp_seq=4 ttl=63 time=1.13 ms
^C
--- htbb-k8s-node-d-test-04 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 3002ms
rtt min/avg/max/mdev = 1.103/1.132/1.155/0.018 ms
[root@htbb-order-service-9dd69c8f8-8c8dq /]# nslookup htbb-k8s-node-d-test-04
bash: nslookup: command not found
[root@htbb-order-service-9dd69c8f8-8c8dq /]# ping baidu.com
PING baidu.com (39.156.69.79) 56(84) bytes of data.
64 bytes from 39.156.69.79 (39.156.69.79): icmp_seq=1 ttl=48 time=39.0 ms
64 bytes from 39.156.69.79 (39.156.69.79): icmp_seq=2 ttl=48 time=38.9 ms
64 bytes from 39.156.69.79 (39.156.69.79): icmp_seq=3 ttl=48 time=38.9 ms
64 bytes from 39.156.69.79 (39.156.69.79): icmp_seq=4 ttl=48 time=43.7 ms
^C
--- baidu.com ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 3002ms
rtt min/avg/max/mdev = 38.951/40.179/43.760/2.072 ms
[root@htbb-order-service-9dd69c8f8-8c8dq /]# ping htbb-order-service.default.svc.cluster.local
PING htbb-order-service.default.svc.cluster.local (10.0.0.151) 56(84) bytes of data.
^C
--- htbb-order-service.default.svc.cluster.local ping statistics ---
4 packets transmitted, 0 received, 100% packet loss, time 2999ms
[root@htbb-order-service-9dd69c8f8-8c8dq /]# telnet htbb-k8s-node-d-test-04 22
Trying 172.16.51.205...
Connected to htbb-k8s-node-d-test-04.
Escape character is '^]'.
SSH-2.0-OpenSSH_7.4
^C^C
Connection closed by foreign host.
[root@htbb-order-service-9dd69c8f8-8c8dq /]#
```

The internal node hostname and baidu.com now both resolve. The Service name also resolves, to its ClusterIP 10.0.0.151; the 100% packet loss there is expected, because in kube-proxy's default iptables mode a ClusterIP is a virtual address that does not answer ICMP.
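The pod image has no `nslookup`, so `ping` doubles as the resolution test in the session. If the image happens to include Python 3 (an assumption, not shown in the session), a resolution-only check through the pod's normal resolver could look like the sketch below; `resolve_ipv4` is a hypothetical helper.

```python
import socket
from typing import Dict, Iterable, Optional


def resolve_ipv4(names: Iterable[str]) -> Dict[str, Optional[str]]:
    """Resolve each name through the system resolver (hosts file, then the
    nameserver in /etc/resolv.conf, i.e. CoreDNS inside a pod).
    None means resolution failed."""
    results: Dict[str, Optional[str]] = {}
    for name in names:
        try:
            infos = socket.getaddrinfo(name, None, socket.AF_INET, socket.SOCK_STREAM)
            results[name] = infos[0][4][0]  # sockaddr is (ip, port)
        except socket.gaierror:
            results[name] = None
    return results


if __name__ == "__main__":
    checks = ["htbb-k8s-node-d-test-04", "baidu.com",
              "htbb-order-service.default.svc.cluster.local"]
    for name, ip in resolve_ipv4(checks).items():
        print(f"{name:50} {ip or 'NOT RESOLVED'}")
```

Unlike `ping`, this sends no traffic to the resolved address, so a ClusterIP shows up as resolved without any misleading packet loss.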

浙公网安备 33010602011771号
