Packaging with Maven and Ant in IDEA on Mac (MR import into HBase)

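I've really come to like IDEA. The Eclipse I used on my Mac kept throwing errors for no apparent reason, so I switched to IDEA. A new tool has a learning curve, though, and my current project ran into quite a few problems. Here they are: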

Background

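In Hadoop development, the cleaned data is often imported into HBase during the MR stage. That means compiling, building a jar, uploading it to the server and running `hadoop jar *.jar`: four manual steps after every cleaning run. Being someone who naturally likes shortcuts, I spent the last few days working out how to simplify this.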

Approach

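1. My earlier projects used Ant to package and upload automatically, but they were developed in Eclipse on Windows. In IDEA on the Mac the same approach failed every time, always with this error: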

```text
Exception in thread "main" java.lang.SecurityException: Invalid signature file digest for Manifest main attributes
	at sun.security.util.SignatureFileVerifier.processImpl(SignatureFileVerifier.java:287)
	at sun.security.util.SignatureFileVerifier.process(SignatureFileVerifier.java:240)
	at java.util.jar.JarVerifier.processEntry(JarVerifier.java:317)
	at java.util.jar.JarVerifier.update(JarVerifier.java:228)
	at java.util.jar.JarFile.initializeVerifier(JarFile.java:348)
	at java.util.jar.JarFile.getInputStream(JarFile.java:415)
	at org.apache.hadoop.util.RunJar.unJar(RunJar.java:101)
	at org.apache.hadoop.util.RunJar.unJar(RunJar.java:81)
	at org.apache.hadoop.util.RunJar.run(RunJar.java:209)
	at org.apache.hadoop.util.RunJar.main(RunJar.java:136)
```

I searched extensively online and none of the suggested fixes worked. The cause is the signatures of jars the project depends on. When signed dependency jars are merged into one jar, their signature files (`META-INF/*.SF`, `*.DSA`, `*.RSA`) come along but no longer match the merged jar's manifest. The JVM then rejects the jar when `hadoop jar` opens it. (Exporting jars from Eclipse never caused this.)
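Because the error comes from leftover signature files, one workaround is to strip them from the jar before running it. (The Maven Shade plugin can do the same at build time with filters that exclude `META-INF/*.SF`, `META-INF/*.DSA` and `META-INF/*.RSA`.) Below is a minimal sketch of the stripping idea using only `java.util.jar`. The class name `StripJarSignatures` and its command-line usage are hypothetical and not part of the original project. The sketch assumes the input jar is otherwise valid.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Enumeration;
import java.util.Locale;
import java.util.jar.JarEntry;
import java.util.jar.JarFile;
import java.util.jar.JarOutputStream;

// Hypothetical helper: copies a jar while dropping JAR signature files
// (META-INF/*.SF, *.DSA, *.RSA, *.EC) inherited from signed dependencies.
class StripJarSignatures {

    static boolean isSignatureFile(String name) {
        String upper = name.toUpperCase(Locale.ROOT);
        String prefix = "META-INF/";
        // Signature files live directly under META-INF/, not in subdirectories.
        if (!upper.startsWith(prefix) || upper.indexOf('/', prefix.length()) >= 0) {
            return false;
        }
        return upper.endsWith(".SF") || upper.endsWith(".DSA")
                || upper.endsWith(".RSA") || upper.endsWith(".EC");
    }

    static void strip(Path in, Path out) throws IOException {
        // verify=false: open the jar without checking the (broken) signatures.
        try (JarFile jar = new JarFile(in.toFile(), false);
             OutputStream os = Files.newOutputStream(out);
             JarOutputStream jos = new JarOutputStream(os)) {
            byte[] buf = new byte[8192];
            Enumeration<JarEntry> entries = jar.entries();
            while (entries.hasMoreElements()) {
                JarEntry entry = entries.nextElement();
                if (isSignatureFile(entry.getName())) {
                    continue; // skip the signature files
                }
                // Fresh entry (name only) so size and CRC are recomputed on write.
                jos.putNextEntry(new JarEntry(entry.getName()));
                try (InputStream is = jar.getInputStream(entry)) {
                    int n;
                    while ((n = is.read(buf)) != -1) {
                        jos.write(buf, 0, n);
                    }
                }
                jos.closeEntry();
            }
        }
    }

    public static void main(String[] args) throws IOException {
        // Usage: java StripJarSignatures input.jar output.jar
        strip(Paths.get(args[0]), Paths.get(args[1]));
    }
}
```

The stripped copy is then treated as an unsigned jar, so `RunJar` no longer tries to verify it.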

2. Exporting a jar from IDEA takes the following steps:

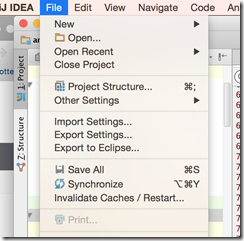

File -> Project Structure

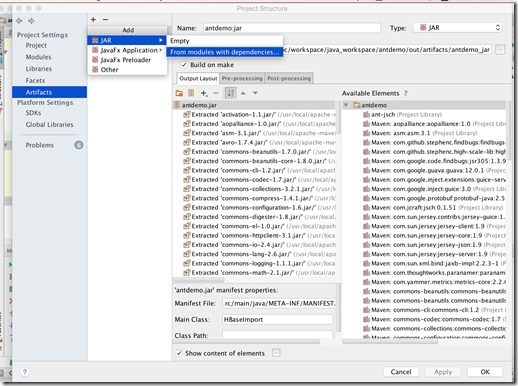

The following dialog then appears:

Choose the Manifest File and the Main Class.

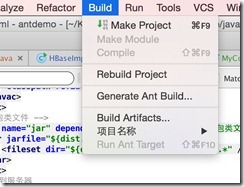

Then Build -> Build Artifacts compiles and packages the jar. The result is under the project's `out` directory.

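However, a jar built this way fails at runtime because the HBase lib jars are not included in it. A clumsy workaround is to copy HBase's lib jars into the lib directory on every Hadoop node. That has drawbacks: every node has to be updated and kept in sync. So I didn't go that way. Instead I packaged with Maven, uploaded the jar and ran it, and it worked.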
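To check whether a given jar actually bundles the HBase classes (the problem with the IDEA artifact), a small check like the following can help. This is a hypothetical sketch. The class `JarClassCheck` is not from the original project. The two probed classes are assumptions, picked because the MR job uses `Put` and `TableOutputFormat`.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;
import java.util.jar.JarFile;

// Hypothetical helper: reports which of the given classes a jar does NOT contain.
class JarClassCheck {

    /** Binary class name to jar entry path, e.g. a.b.C -> a/b/C.class. */
    static String toEntryName(String className) {
        return className.replace('.', '/') + ".class";
    }

    static List<String> missingClasses(String jarPath, String... classNames) throws IOException {
        List<String> missing = new ArrayList<>();
        try (JarFile jar = new JarFile(jarPath, false)) { // no signature verification
            for (String cn : classNames) {
                if (jar.getJarEntry(toEntryName(cn)) == null) {
                    missing.add(cn);
                }
            }
        }
        return missing;
    }

    public static void main(String[] args) throws IOException {
        // args[0]: the jar to inspect (IDEA artifact or Maven fat jar)
        List<String> missing = missingClasses(args[0],
                "org.apache.hadoop.hbase.client.Put",
                "org.apache.hadoop.hbase.mapreduce.TableOutputFormat");
        System.out.println(missing.isEmpty() ? "HBase classes bundled" : "Missing: " + missing);
    }
}
```

From the shell, `jar tf` gives the same answer. An example is `jar tf target/antdemo-1-jar-with-dependencies.jar | grep hbase/client/Put`.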

Final solution:

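Maven packages the jar correctly, so how do I automate uploading and running it? I package with Maven and use Ant to upload and run. `mvn package` builds `target/antdemo-1-jar-with-dependencies.jar`. Ant's default target, `sshexec`, then copies that jar to the server with `scp` and runs `hadoop jar` on it over SSH.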

The Maven pom file:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>groupId</groupId>
    <artifactId>antdemo</artifactId>
    <version>1</version>

    <dependencies>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-client</artifactId>
            <version>0.98.15-hadoop2</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>2.6.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-mapreduce-client-core</artifactId>
            <version>2.6.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-common</artifactId>
            <version>0.98.15-hadoop2</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-server</artifactId>
            <version>0.98.15-hadoop2</version>
        </dependency>
        <dependency>
            <groupId>com.jcraft</groupId>
            <artifactId>jsch</artifactId>
            <version>0.1.51</version>
        </dependency>
        <dependency>
            <groupId>org.apache.ant</groupId>
            <artifactId>ant-jsch</artifactId>
            <version>1.9.5</version>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <artifactId>maven-assembly-plugin</artifactId>
                <configuration>
                    <archive>
                        <manifest>
                            <!-- Replace with the fully qualified class that holds the jar's main method -->
                            <mainClass>demo.HBaseImport</mainClass>
                        </manifest>
                    </archive>
                    <descriptorRefs>
                        <descriptorRef>jar-with-dependencies</descriptorRef>
                    </descriptorRefs>
                </configuration>
                <executions>
                    <execution>
                        <id>make-assembly</id> <!-- this is used for inheritance merges -->
                        <phase>package</phase> <!-- merge the dependency jars during the package phase -->
                        <goals>
                            <goal>single</goal>
                        </goals>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>
</project>
```

The Ant build.xml:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project name="ProjectName" basedir="." default="sshexec">
    <description>Build file used by Ant to compile the project, run unit tests automatically, package and deploy.</description>
    <description>Default action (command: ant): compile the sources, then deploy and run.</description>

    <!-- Properties -->
    <property environment="env" />
    <!--<property file="build.xml" />-->
    <property name="build.dir" location="build.xml"/>
    <property name="src.dir" value="${basedir}/src" />
    <!--<property name="java.lib.dir" location="lib"/>-->
    <property name="java.lib.dir" value="/Library/Java/JavaVirtualMachines/jdk1.7.0_79.jdk/Contents/Home/lib" />
    <property name="classes.dir" value="${basedir}/classes" />
    <property name="dist.dir" value="${basedir}/dist" />
    <property name="third.lib.dir" value="/Users/zzy/Kclouds/01_mac/doc/workspace/other_workspace/hbase-0.98.8-hadoop2/lib" />
    <!--<property name="third.lib.dir" value="${basedir}/lib" />-->
    <property name="localpath.dir" value="${basedir}" />
    <property name="remote.host" value="192.168.122.211"/>
    <property name="remote.username" value="root"/>
    <property name="remote.password" value="lg"/>
    <property name="remote.home" value="/test"/>
    <!-- Fully qualified main class to run; set it here each time -->
    <property name="main.class" value="demo.HBaseImport"/>

    <!-- Compile classpath -->
    <path id="compile.classpath">
        <fileset dir="${java.lib.dir}">
            <include name="tools.jar" />
        </fileset>
        <fileset dir="${third.lib.dir}">
            <include name="*.jar"/>
        </fileset>
    </path>

    <!-- Runtime classpath -->
    <path id="run.classpath">
        <path refid="compile.classpath" />
        <pathelement location="${classes.dir}" />
    </path>

    <!-- Clean: delete temporary directories -->
    <target name="clean" description="Clean: delete temporary directories">
        <!--delete dir="${build.dir}" /-->
        <delete dir="${dist.dir}" />
        <delete dir="${classes.dir}" />
        <echo level="info">Clean finished</echo>
    </target>

    <!-- Init: create directories, copy files -->
    <target name="init" depends="clean" description="Init: create directories, copy files">
        <mkdir dir="${classes.dir}" />
        <mkdir dir="${dist.dir}" />
    </target>

    <!-- Compile sources -->
    <target name="compile" depends="init" description="Compile sources">
        <javac srcdir="${src.dir}" destdir="${classes.dir}" source="1.7"
               target="1.7" includeAntRuntime="false" debug="false" verbose="false">
            <compilerarg line="-encoding UTF-8 "/>
            <classpath refid="compile.classpath" />
        </javac>
    </target>

    <!-- Package class files -->
    <target name="jar" depends="compile" description="Package class files">
        <jar jarfile="${dist.dir}/jar.jar">
            <fileset dir="${classes.dir}" includes="**/*.*" />
        </jar>
    </target>

    <!-- Upload to the server. **Copy jsch-0.1.51 from the lib directory into $ANT_HOME/lib.
         With Eclipse's Ant, add jsch-0.1.51 under Window->Preferences->Ant->Runtime->Classpath instead. -->
    <!--<target name="ssh" depends="jar">-->
    <!--<scp file="${dist.dir}/jar.jar" todir="${remote.username}@${remote.host}:${remote.home}" password="${remote.password}" trust="true"/>-->
    <!--</target>-->
    <!-- -->
    <!--<target name="sshexec" depends="ssh">-->
    <!--<sshexec host="${remote.host}" username="${remote.username}" password="${remote.password}" trust="true" command="source /etc/profile;hadoop jar ${remote.home}/jar.jar ${main.class}"/>-->
    <!--<!–<sshexec host="${remote.host}" username="${remote.username}" password="${remote.password}" trust="true" command="source /etc/profile;touch what.text ${main.class}"/>–>-->
    <!--</target>-->

    <target name="ssh" depends="jar">
        <scp file="${basedir}/target/antdemo-1-jar-with-dependencies.jar"
             todir="${remote.username}@${remote.host}:${remote.home}"
             password="${remote.password}" trust="true"/>
    </target>

    <target name="sshexec" depends="ssh">
        <sshexec host="${remote.host}" username="${remote.username}" password="${remote.password}" trust="true"
                 command="source /etc/profile;hadoop jar ${remote.home}/antdemo-1-jar-with-dependencies.jar ${main.class}"/>
        <!--<sshexec host="${remote.host}" username="${remote.username}" password="${remote.password}" trust="true" command="source /etc/profile;touch what.text ${main.class}"/>-->
    </target>
</project>
```

The key part is:

```xml
<target name="ssh" depends="jar">
    <scp file="${basedir}/target/antdemo-1-jar-with-dependencies.jar"
         todir="${remote.username}@${remote.host}:${remote.home}"
         password="${remote.password}" trust="true"/>
</target>

<target name="sshexec" depends="ssh">
    <sshexec host="${remote.host}" username="${remote.username}" password="${remote.password}" trust="true"
             command="source /etc/profile;hadoop jar ${remote.home}/antdemo-1-jar-with-dependencies.jar ${main.class}"/>
    <!--<sshexec host="${remote.host}" username="${remote.username}" password="${remote.password}" trust="true" command="source /etc/profile;touch what.text ${main.class}"/>-->
</target>
```

These two targets upload the jar and run it. They are worth studying closely.
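The same two steps can also be driven from plain Java. This sketch is not the author's setup. It shells out to the OpenSSH `scp` and `ssh` commands instead of using Ant's JSch-based `scp`/`sshexec` tasks. It assumes key-based login, because OpenSSH does not accept a password on the command line. `DeployAndRun` and its helper methods are hypothetical names. Host, user, remote directory and jar name are taken from build.xml. The main-class argument `demo.HBaseImport` assumes the `demo` package used by the MR code.

```java
import java.io.IOException;
import java.util.Arrays;
import java.util.List;

// Hypothetical launcher mirroring the Ant "ssh" (upload) and "sshexec" (run) targets.
class DeployAndRun {

    static List<String> scpCommand(String localJar, String user, String host, String remoteDir) {
        return Arrays.asList("scp", localJar, user + "@" + host + ":" + remoteDir);
    }

    // Same remote command string as the Ant sshexec target.
    static String remoteCommand(String remoteDir, String jarName, String mainClass) {
        return "source /etc/profile;hadoop jar " + remoteDir + "/" + jarName + " " + mainClass;
    }

    static List<String> sshCommand(String user, String host, String command) {
        return Arrays.asList("ssh", user + "@" + host, command);
    }

    // Runs a command with inherited stdin/stdout/stderr; fails on a non-zero exit code.
    static void run(List<String> cmd) throws IOException, InterruptedException {
        int code = new ProcessBuilder(cmd).inheritIO().start().waitFor();
        if (code != 0) {
            throw new IOException("Command failed (" + code + "): " + cmd);
        }
    }

    public static void main(String[] args) throws Exception {
        String jarName = "antdemo-1-jar-with-dependencies.jar";
        String user = "root", host = "192.168.122.211", remoteDir = "/test";
        run(scpCommand("target/" + jarName, user, host, remoteDir));                       // upload
        run(sshCommand(user, host, remoteCommand(remoteDir, jarName, "demo.HBaseImport"))); // execute
    }
}
```

Run it from the project root after `mvn package`, so that `target/` already contains the fat jar.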

The MR job that cleans the data and imports it into HBase:

```java
package demo;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;
import org.apache.hadoop.hbase.mapreduce.TableOutputFormat;
import org.apache.hadoop.hbase.mapreduce.TableReducer;
import org.apache.hadoop.hbase.util.Bytes;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import java.io.IOException;
import java.text.SimpleDateFormat;
import java.util.Date;

/**
 * Created by zzy on 15/11/17.
 */

/**
 * Writing to HBase from MapReduce.
 */
public class HBaseImport {

    static class BatchImportMapper extends Mapper<LongWritable, Text, LongWritable, Text> {
        private final SimpleDateFormat sdf = new SimpleDateFormat("yyyyMMddHHmmss");

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Do not call super.map(): the default implementation would also
            // write the raw (key, value) pair to the output.
            String line = value.toString();
            String[] splited = line.split("\t");
            // Field 0 is an epoch timestamp in milliseconds.
            String time = sdf.format(new Date(Long.parseLong(splited[0].trim())));
            // Row key: field 1 + "_" + formatted time.
            String rowkey = splited[1] + "_" + time;
            context.write(key, new Text(rowkey + "\t" + line));
        }
    }

    static class BatchImportReducer extends TableReducer<LongWritable, Text, NullWritable> {
        private final byte[] family = Bytes.toBytes("cf");

        @Override
        protected void reduce(LongWritable key, Iterable<Text> v2s, Context context)
                throws IOException, InterruptedException {
            for (Text v2 : v2s) {
                String[] splited = v2.toString().split("\t");
                String rowKey = splited[0];
                Put put = new Put(Bytes.toBytes(rowKey));
                put.add(family, Bytes.toBytes("raw"), Bytes.toBytes(v2.toString()));
                put.add(family, Bytes.toBytes("reportTime"), Bytes.toBytes(splited[1]));
                put.add(family, Bytes.toBytes("msisdn"), Bytes.toBytes(splited[2]));
                put.add(family, Bytes.toBytes("apmac"), Bytes.toBytes(splited[3]));
                put.add(family, Bytes.toBytes("acmac"), Bytes.toBytes(splited[4]));
                // Without this write nothing reaches TableOutputFormat, i.e. HBase.
                context.write(NullWritable.get(), put);
            }
        }
    }

    private static final String tableName = "logs";

    // main must be public, otherwise `hadoop jar` cannot invoke it.
    public static void main(String[] args)
            throws IOException, ClassNotFoundException, InterruptedException {
        // HBaseConfiguration.create() also loads hbase-default.xml / hbase-site.xml.
        Configuration conf = HBaseConfiguration.create();
        conf.set("hbase.zookeeper.quorum", "192.168.122.213:2181");
        conf.set("hbase.rootdir", "hdfs://192.168.122.211:9000/hbase");
        conf.set(TableOutputFormat.OUTPUT_TABLE, tableName);

        Job job = Job.getInstance(conf, HBaseImport.class.getSimpleName());
        job.setJarByClass(HBaseImport.class);
        job.setMapperClass(BatchImportMapper.class);
        job.setReducerClass(BatchImportReducer.class);
        job.setMapOutputKeyClass(LongWritable.class);
        job.setMapOutputValueClass(Text.class);
        job.setInputFormatClass(TextInputFormat.class);
        job.setOutputFormatClass(TableOutputFormat.class);
        // Ship the jars for the classes configured above (HBase, ZooKeeper, ...).
        TableMapReduceUtil.addDependencyJars(job);
        FileInputFormat.setInputPaths(job, "hdfs://192.168.122.211:9000/user/hbase");

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```
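The parsing inside the mapper and reducer can be checked without a cluster. The sketch below is a hypothetical `LogRowParser` class that reproduces the job's row-key and column layout in plain Java. The row key is field 1, then `_`, then field 0 formatted as `yyyyMMddHHmmss`. The columns under `cf` are `raw`, `reportTime`, `msisdn`, `apmac` and `acmac`. The sample record is made up. The time zone is passed in explicitly so the output is deterministic; the job itself uses the JVM's default time zone.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.TimeZone;

// Hypothetical plain-Java mirror of BatchImportMapper / BatchImportReducer parsing.
class LogRowParser {

    // Row key = field[1] + "_" + field[0] (epoch millis) formatted as yyyyMMddHHmmss.
    static String rowKey(String line, TimeZone tz) {
        String[] f = line.split("\t");
        SimpleDateFormat sdf = new SimpleDateFormat("yyyyMMddHHmmss");
        sdf.setTimeZone(tz);
        return f[1] + "_" + sdf.format(new Date(Long.parseLong(f[0].trim())));
    }

    // Qualifier -> value for column family "cf", in the order the reducer writes them.
    static Map<String, String> columns(String rowKey, String line) {
        String[] f = line.split("\t");
        Map<String, String> cols = new LinkedHashMap<>();
        cols.put("raw", rowKey + "\t" + line); // the reducer stores the whole mapper value
        cols.put("reportTime", f[0]);
        cols.put("msisdn", f[1]);
        cols.put("apmac", f[2]);
        cols.put("acmac", f[3]);
        return cols;
    }

    public static void main(String[] args) {
        String line = "86400000\t13800000000\tAP-MAC\tAC-MAC"; // made-up sample record
        String key = rowKey(line, TimeZone.getTimeZone("UTC"));
        System.out.println(key); // 13800000000_19700102000000
        System.out.println(columns(key, line));
    }
}
```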

If this article helped you, please give it a thumbs-up to show your support.

Zhejiang Public Security Network Filing No. 33010602011771