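```python
import os

# Import the jieba segmenter; a custom word list could be added to its dictionary here with jieba.load_userdict
import jieba

path = 'F:\\jj147'  # corpus root: one sub-folder per news category

# Load the stopword list; a set gives O(1) membership tests
with open(r'F:\stopsCN.txt', encoding='utf-8') as f:
    stopwords = {line.strip() for line in f if line.strip()}

def processing(tokens):
    # Remove every character that is neither a letter nor a Chinese character (str.isalpha() is True for both)
    tokens = "".join([char for char in tokens if char.isalpha()])
    # Segment with jieba in full mode and keep words of at least two characters
    tokens = [token for token in jieba.cut(tokens, cut_all=True) if len(token) >= 2]
    # Remove stopwords and join with spaces so TfidfVectorizer can split the words again
    tokens = " ".join([token for token in tokens if token not in stopwords])
    return tokens
```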
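The character filter and the stopword filter in `processing` can be tried without jieba or the stopword file. The sketch below uses only the standard library. The helper names `keep_letters` and `filter_tokens` are hypothetical. The token list is a hand-written stand-in for segmenter output, not real `jieba.cut` output.

```python
def keep_letters(text):
    """Keep only characters for which str.isalpha() is True (Latin letters and CJK ideographs)."""
    return "".join(ch for ch in text if ch.isalpha())


def filter_tokens(tokens, stopwords):
    """Drop one-character tokens and stopwords, then join with spaces."""
    stop = set(stopwords)  # set lookup instead of scanning a list for every token
    return " ".join(t for t in tokens if len(t) >= 2 and t not in stop)


raw = "2019年，中国队赢得了比赛!"
print(keep_letters(raw))  # 年中国队赢得了比赛

# Hypothetical segmenter output for illustration only
tokens = ["中国队", "赢得", "了", "比赛", "我们"]
print(filter_tokens(tokens, ["我们"]))  # 中国队 赢得 比赛
```

Digits and both full-width and ASCII punctuation are dropped, while Chinese characters survive, because Python treats CJK ideographs as alphabetic.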

```python
tokenList = []
targetList = []

# Walk the corpus with os.walk, build each file path and read the file
for root, dirs, files in os.walk(path):
    for fileName in files:
        filePath = os.path.join(root, fileName)
        with open(filePath, encoding='utf-8') as fh:
            content = fh.read()
        # The category label is the name of the folder that holds the file; then preprocess the article
        target = os.path.basename(root)
        targetList.append(target)
        tokenList.append(processing(content))
```
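The loop above can be tested on a throwaway directory. This sketch assumes the layout `<path>/<category>/<file>.txt`, which the original `filePath.split('\\')[-2]` implies. `os.path.basename(root)` returns the same folder name on any OS. `load_corpus` is a hypothetical name, and the two sample articles are made up.

```python
import os
import tempfile


def load_corpus(path):
    """Return (texts, labels); each label is the name of the folder holding the file."""
    texts, labels = [], []
    for root, _dirs, files in os.walk(path):
        for name in sorted(files):
            file_path = os.path.join(root, name)
            with open(file_path, encoding="utf-8") as fh:
                texts.append(fh.read())
            labels.append(os.path.basename(root))
    return texts, labels


with tempfile.TemporaryDirectory() as tmp:
    # Build a tiny two-category corpus (sample data, for illustration only)
    samples = {"体育": "中国队赢得比赛", "财经": "股市今日上涨"}
    for label, text in samples.items():
        os.makedirs(os.path.join(tmp, label))
        with open(os.path.join(tmp, label, "0001.txt"), "w", encoding="utf-8") as fh:
            fh.write(text)
    texts, labels = load_corpus(tmp)
    # os.walk does not promise a directory order, so sort before printing
    print(sorted(zip(labels, texts)))
```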

```python
# Split into training and test sets, then weight the words with TF-IDF
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import train_test_split

vectorizer = TfidfVectorizer()
x_train, x_test, y_train, y_test = train_test_split(tokenList, targetList, test_size=0.2)
# Learn the vocabulary and IDF weights on the training set only, then apply them to the test set
X_train = vectorizer.fit_transform(x_train)
X_test = vectorizer.transform(x_test)
```
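The next sketch spells out what `TfidfVectorizer` computes with its defaults: raw term counts, `smooth_idf=True` (idf = ln((1 + n) / (1 + df)) + 1) and `norm='l2'`. It assumes each document is already a space-joined string of tokens with two or more characters, which is what `processing` returns. For that input, splitting on whitespace should give the same tokens as the default `token_pattern`, which keeps runs of two or more word characters. The function `tfidf` and the sample documents are illustrative only.

```python
import math
from collections import Counter


def tfidf(docs):
    """TF-IDF with sklearn-style defaults: raw counts, smoothed idf, L2-normalized rows."""
    tokenized = [doc.split() for doc in docs]
    n_docs = len(tokenized)
    vocab = sorted({tok for toks in tokenized for tok in toks})
    # Document frequency: in how many documents each term appears
    df = {t: sum(t in toks for toks in tokenized) for t in vocab}
    # Smoothed inverse document frequency
    idf = {t: math.log((1 + n_docs) / (1 + df[t])) + 1 for t in vocab}
    vectors = []
    for toks in tokenized:
        counts = Counter(toks)
        row = [counts[t] * idf[t] for t in vocab]
        # Scale each document vector to unit Euclidean length
        norm = math.sqrt(sum(v * v for v in row)) or 1.0
        vectors.append([v / norm for v in row])
    return vocab, idf, vectors


docs = ["中国队 赢得 比赛", "股市 上涨", "中国队 比赛 比赛"]
vocab, idf, vectors = tfidf(docs)
for doc, vec in zip(docs, vectors):
    print(doc, [round(v, 3) for v in vec])
```

A term that appears in fewer documents gets a larger idf, so words common to many categories carry less weight. Because idf comes from the documents passed to `fit`, the script fits only on `x_train` and reuses those weights for `x_test`.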

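```python
# Build the naive Bayes model
# MultinomialNB targets discrete features such as word occurrence counts;
# in practice fractional TF-IDF weights also work
from sklearn.naive_bayes import MultinomialNB

mulp = MultinomialNB()
mulp.fit(X_train, y_train)

# Predict the labels of the test set
y_predict = mulp.predict(X_test)
```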
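The following is a minimal pure-Python sketch of the multinomial naive Bayes decision rule, assuming dense feature rows (counts or TF-IDF weights). For each class c it adds the log prior log P(c) to the sum of x_i · log θ_ci, where θ_ci = (N_ci + α) / (N_c + α·n). Here N_ci is the total of feature i over class c, N_c is the class total, and n is the number of features. This follows sklearn's `MultinomialNB` with its defaults `alpha=1.0` and `fit_prior=True`. `train_mnb` and `predict_mnb` are hypothetical helpers, not part of sklearn, and the toy data is made up.

```python
import math


def train_mnb(X, y, alpha=1.0):
    """Fit class log-priors and additively smoothed per-class feature log-probabilities."""
    n_features = len(X[0])
    classes = sorted(set(y))
    log_prior, log_theta = {}, {}
    for c in classes:
        rows = [x for x, label in zip(X, y) if label == c]
        log_prior[c] = math.log(len(rows) / len(X))
        totals = [sum(col) for col in zip(*rows)]  # per-feature totals within class c
        denom = sum(totals) + alpha * n_features
        log_theta[c] = [math.log((t + alpha) / denom) for t in totals]
    return classes, log_prior, log_theta


def predict_mnb(model, x):
    """Return the class with the highest log posterior (up to a shared constant)."""
    classes, log_prior, log_theta = model
    scores = {c: log_prior[c] + sum(v * lt for v, lt in zip(x, log_theta[c])) for c in classes}
    return max(scores, key=scores.get)


X = [[2, 1, 0], [3, 0, 0], [0, 1, 3], [0, 0, 2]]
y = ["体育", "体育", "财经", "财经"]
model = train_mnb(X, y)
print(predict_mnb(model, [1, 0, 0]), predict_mnb(model, [0, 0, 1]))  # 体育 财经
```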

```python
# Import classification_report from sklearn.metrics to get a per-class performance report
from sklearn.metrics import classification_report

print('Model accuracy:', mulp.score(X_test, y_test))
print('classification_report:\n', classification_report(y_test, y_predict))
```
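`mulp.score` returns plain accuracy, and `classification_report` adds precision, recall and F1 for each category. The sketch below computes those numbers by hand from two label lists so each column is easy to read. `per_class_scores` is a hypothetical helper, and the labels are made up. It reports 0 where a ratio is undefined. sklearn's default `zero_division='warn'` also yields 0 in that case, but it raises a warning as well.

```python
def per_class_scores(y_true, y_pred):
    """Return ({label: (precision, recall, f1)}, accuracy)."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    scores = {}
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)  # correctly predicted as label
        fp = sum(t != label and p == label for t, p in pairs)  # wrongly predicted as label
        fn = sum(t == label and p != label for t, p in pairs)  # label missed
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores[label] = (precision, recall, f1)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    return scores, accuracy


y_true = ["体育", "体育", "财经", "财经", "娱乐"]
y_pred = ["体育", "财经", "财经", "财经", "体育"]
scores, accuracy = per_class_scores(y_true, y_pred)
print(scores)
print(accuracy)  # 0.6
```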


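```python
# Compare the predicted results with the actual results
import collections

import matplotlib.pyplot as plt

# Count the news articles per category in the test set and in the predictions
testCount = collections.Counter(y_test)
predCount = collections.Counter(y_predict)
```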

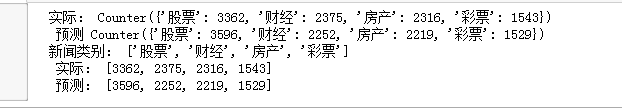

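```python
print('Actual:', testCount, '\n', 'Predicted:', predCount)

# Build the label list, the actual-count list and the predicted-count list
nameList = list(testCount.keys())
testList = list(testCount.values())
# Look up predicted counts by label so they line up with nameList (a Counter returns 0 for a missing label)
predictList = [predCount[name] for name in nameList]
x = list(range(len(nameList)))  # x positions, e.g. for a bar chart

print("News categories:", nameList, '\n', "Actual:", testList, '\n', "Predicted:", predictList)
```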
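Pairing the two counters by position, as `list(predCount.values())` does, only works when both counters list the categories in the same order. A `Counter` keeps labels in first-seen order, and that order usually differs between the actual and predicted labels. The toy labels below are made up to show the mismatch.

```python
from collections import Counter

actual = ["体育", "财经", "财经", "娱乐"]
predicted = ["财经", "体育", "财经", "娱乐"]

test_count = Counter(actual)
pred_count = Counter(predicted)
names = list(test_count)  # first-seen order in actual: 体育, 财经, 娱乐

by_position = list(pred_count.values())   # ordered 财经, 体育, 娱乐, so misaligned with names
by_label = [pred_count[n] for n in names]  # aligned with names
print(names, by_position, by_label)  # ['体育', '财经', '娱乐'] [2, 1, 1] [1, 2, 1]
```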


浙公网安备 33010602011771号