Installing Hadoop on a Tencent Cloud Server

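- Change the hostname. The default hostname contains underscores (`_`). If you leave it unchanged, a remote HDFS client that connects by hostname fails with `java.lang.IllegalArgumentException: Does not contain a valid host:port`.

Check the current hostname, set it to `master`, then reboot:

    hostname                          # 1. show the current hostname
    hostnamectl set-hostname master   # 2. set the hostname to master
    reboot                            # 3. reboot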
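You can catch the underscore problem before installing anything. Java's URI parser does not accept `_` in host names, and Hadoop builds `hdfs://host:port` URIs from the hostname. The sketch below assumes the RFC 1123 rules: letters, digits and hyphens only, no leading or trailing hyphen, and at most 63 characters per label. `is_valid_hostname` is a hypothetical helper, not part of any tool, and `VM_0_7_centos` in the test is only an illustrative default-style name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only if $1 is a hostname that follows the
# RFC 1123 label rules (assumption): [A-Za-z0-9-], no leading/trailing
# hyphen, 1-63 chars per label, 253 chars in total. Underscores are rejected.
is_valid_hostname() {
  local name="$1"
  local label='[A-Za-z0-9]([A-Za-z0-9-]{0,61}[A-Za-z0-9])?'
  local re="^${label}(\\.${label})*\$"
  [ "${#name}" -le 253 ] && [[ $name =~ $re ]]
}
```

You can check the current machine with `is_valid_hostname "$(hostname)" || echo "rename this host first"`.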

- Edit the hosts file

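Open the hosts mapping with `vim /etc/hosts` and append one line per host at the end, with the IP address first and the hostname second. For this machine, use the cloud server's internal (private) IP. For the other machines in the cluster, use their public IPs. For example:

    172.17.0.7 master
    122.255.255.255 slave1

Then reload the configuration:

    /etc/init.d/network restart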

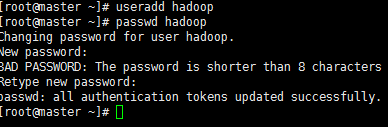
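When you append mappings by hand, it is easy to add the same line twice. Below is a minimal sketch of an idempotent append. It assumes the standard hosts layout (IP first, then one or more names). `add_host_mapping` is a hypothetical helper, and the file path is a parameter so you can try it on a scratch copy before touching `/etc/hosts`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts-format file unless NAME is
# already mapped on some non-comment line (assumed layout: "IP name [alias...]").
add_host_mapping() {
  local file="$1" ip="$2" name="$3"
  # awk exits 0 if any non-comment line lists NAME after the IP field.
  if [ -f "$file" ] && awk -v n="$name" '
      $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file"; then
    return 0   # already mapped; leave the file untouched
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

For example, as root: `add_host_mapping /etc/hosts 172.17.0.7 master`. If a name is already mapped to a different IP, the helper leaves it alone, so that case still needs a manual edit.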

- Add a hadoop user


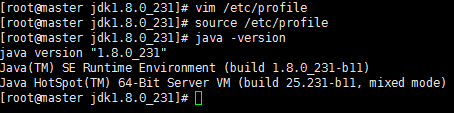
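Creating the account takes only a few standard commands. Below is a minimal sketch of the usual CentOS steps, to be run as root. Adding the user to the `wheel` group (which grants sudo on a default CentOS install) is an optional assumption, not something the original shows. `create_hadoop_user`, `run` and the `DRY_RUN` switch are hypothetical helpers for previewing the commands.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create the "hadoop" account on CentOS (run as root).
# Set DRY_RUN=1 to print the commands instead of running them.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

create_hadoop_user() {
  local user="${1:-hadoop}"
  run useradd -m "$user"          # create the user and its home directory
  run passwd "$user"              # set a password (interactive)
  run usermod -aG wheel "$user"   # optional assumption: sudo via the wheel group
}
```

Preview the commands with `DRY_RUN=1 create_hadoop_user`, then run `create_hadoop_user` as root and switch to the account with `su - hadoop`.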

- Install the JDK

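Unpack the JDK, then configure the Java environment variables in `/etc/profile`:

    tar -zxvf jdk-1.8.0_231.tar.gz    # unpack the JDK
    vim /etc/profile                  # configure the Java environment variables

Add the following lines to `/etc/profile`, then save and quit:

    export JAVA_HOME={JDK path}
    export PATH=$PATH:$JAVA_HOME/bin

Reload the environment variables and check the Java version to confirm the configuration works:

    source /etc/profile
    java -version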
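You can also script the profile edit. The sketch below assumes the JDK was unpacked to the directory passed as the first argument. The test uses `/opt/jdk/jdk1.8.0_231`, the path that appears later in `hadoop-env.sh`. `write_java_profile` is a hypothetical helper, and the target file is a parameter so you can check it on a scratch file first.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append JAVA_HOME/PATH exports to a profile file.
# Assumes $1 is the unpacked JDK directory and $2 the profile (e.g. /etc/profile).
write_java_profile() {
  local jdk_dir="$1" profile="$2"
  # Skip if this exact JAVA_HOME line is already present.
  if grep -qxF "export JAVA_HOME=$jdk_dir" "$profile" 2>/dev/null; then
    return 0
  fi
  # $jdk_dir is expanded now; \$PATH and \$JAVA_HOME stay literal for login shells.
  cat >> "$profile" <<EOF
export JAVA_HOME=$jdk_dir
export PATH=\$PATH:\$JAVA_HOME/bin
EOF
}
```

As root, run `write_java_profile /opt/jdk/jdk1.8.0_231 /etc/profile`, then `source /etc/profile && java -version`.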


- Switch to the hadoop user and install Hadoop

Upload the Hadoop archive and unpack it:

tar -zxvf hadoop-3.2.1.tar.gz

Run `hadoop version` to check that the installation succeeded:

    [hadoop@master ~]$ cd hadoop-3.2.1/
    [hadoop@master hadoop-3.2.1]$ bin/hadoop version
    Hadoop 3.2.1
    Source code repository https://gitbox.apache.org/repos/asf/hadoop.git -r b3cbbb467e22ea829b3808f4b7b01d07e0bf3842
    Compiled by rohithsharmaks on 2019-09-10T15:56Z
    Compiled with protoc 2.5.0
    From source with checksum 776eaf9eee9c0ffc370bcbc1888737
    This command was run using /home/hadoop/hadoop-3.2.1/share/hadoop/common/hadoop-common-3.2.1.jar
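When you script the install, the first line of `bin/hadoop version` is enough to confirm which release is in use. The sketch below assumes that line has the form `Hadoop <version>`, as in the output above. `parse_hadoop_version` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `hadoop version` output on stdin and print the
# version number. Assumes the first line looks like "Hadoop 3.2.1";
# exits non-zero if it does not.
parse_hadoop_version() {
  awk 'NR == 1 && $1 == "Hadoop" { print $2; found = 1 }
       END { exit !found }'
}
```

For this install, `bin/hadoop version | parse_hadoop_version` prints `3.2.1`.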

Test whether the MapReduce example runs successfully in standalone mode:

    [hadoop@master hadoop-3.2.1]$ mkdir input
    [hadoop@master hadoop-3.2.1]$ cp etc/hadoop/*.xml input
    [hadoop@master hadoop-3.2.1]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar grep input output 'dfs[a-z.]+'
    # wait for the job to finish ...
    [hadoop@master hadoop-3.2.1]$ cat output/*
    1       dfsadmin
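The bundled `grep` example counts how often each match of the regular expression occurs across the input files. It writes `<count>` and `<match>` pairs, most frequent first, to `output/`. Below is a rough plain-shell approximation, useful only for cross-checking that result. It does not use Hadoop, and `local_grep_count` is a hypothetical name. `grep -E` and Java regular expressions agree on a simple pattern like `dfs[a-z.]+`, but they can differ on more complex ones.

```bash
#!/usr/bin/env bash
# Hypothetical helper: approximate the Hadoop "grep" example locally.
# Prints "<count>\t<match>" lines, highest count first. LC_ALL=C keeps the
# sort order independent of the current locale.
local_grep_count() {
  local dir="$1" regex="$2"
  grep -ohE "$regex" "$dir"/* |
    LC_ALL=C sort |
    uniq -c |
    LC_ALL=C sort -k1,1nr -k2,2 |
    awk '{ print $1 "\t" $2 }'
}
```

From the Hadoop directory, `local_grep_count input 'dfs[a-z.]+'` should normally agree with `cat output/*`.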

- Set up a pseudo-distributed environment: configure passwordless SSH

The configuration steps are as follows:

    [hadoop@master ~]$ ssh localhost
    [hadoop@master ~]$ exit                                   # leave the "ssh localhost" session
    [hadoop@master ~]$ cd ~/.ssh/                             # if this directory does not exist, run "ssh localhost" once first
    [hadoop@master .ssh]$ ssh-keygen -t rsa                   # press Enter at every prompt
    [hadoop@master .ssh]$ cat id_rsa.pub >> authorized_keys   # authorize the key
    [hadoop@master .ssh]$ chmod 600 ./authorized_keys         # restrict the file's permissions
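You can run the same key setup non-interactively. The sketch below assumes OpenSSH's `ssh-keygen` is installed and takes the `.ssh` directory as a parameter (normally `~/.ssh`). It reuses an existing `id_rsa` instead of overwriting it. `setup_passwordless_ssh` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: generate an RSA key with an empty passphrase (if none
# exists yet) and authorize it for logins to this same account.
setup_passwordless_ssh() {
  local ssh_dir="${1:-$HOME/.ssh}"
  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"
  if [ ! -f "$ssh_dir/id_rsa" ]; then
    # -N "" = empty passphrase, -f = key file, -q = no progress output
    ssh-keygen -t rsa -N "" -f "$ssh_dir/id_rsa" -q
  fi
  touch "$ssh_dir/authorized_keys"
  # Append the public key only if that exact line is not already authorized.
  if ! grep -qxF "$(cat "$ssh_dir/id_rsa.pub")" "$ssh_dir/authorized_keys"; then
    cat "$ssh_dir/id_rsa.pub" >> "$ssh_dir/authorized_keys"
  fi
  chmod 600 "$ssh_dir/authorized_keys"
}
```

Run it as the hadoop user, then confirm that `ssh localhost` no longer asks for a password.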

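Why SSH is needed: the Hadoop start scripts run on the NameNode host and must start the Hadoop daemons on every machine in the cluster. They do this by logging in over SSH (to localhost, in the pseudo-distributed case). Hadoop does not set this up for you, so you have to configure passwordless login yourself.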

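SSH (Secure Shell) is an application-layer security protocol that runs on top of the transport layer. It is currently one of the more reliable protocols for securing remote login sessions and other network services.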

SSH clients: `ssh`, `scp` (remote copy), `slogin` (remote login), `sftp` (secure file transfer), and so on.

SSH server: a daemon (`sshd`) that runs in the background and responds to connection requests from clients.

- Modify the Hadoop configuration

`etc/hadoop/core-site.xml`:

    <configuration>
        <property>
            <name>hadoop.tmp.dir</name>
            <value>file:/home/hadoop/tmp</value>
            <description>A base for other temporary directories.</description>
        </property>
        <property>
            <name>fs.defaultFS</name>
            <value>hdfs://master:9000</value> <!-- master is the hostname -->
        </property>
    </configuration>

`hadoop.tmp.dir`: the directory for temporary data, including both NameNode and DataNode data.

`fs.defaultFS`: the URI of the default file system, i.e. the logical name that HDFS paths resolve against.
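For repeatable installs, you can generate the file instead of editing it by hand. The sketch below writes the same two properties. It assumes the first argument is the Hadoop config directory (`etc/hadoop`) and defaults the host, port and temp directory to the values used in this walkthrough. `write_core_site` is a hypothetical helper, and it overwrites any existing `core-site.xml`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: (over)write core-site.xml in the given config directory.
# Defaults follow this walkthrough: master, 9000, /home/hadoop/tmp.
write_core_site() {
  local conf_dir="$1" host="${2:-master}" port="${3:-9000}" tmp_dir="${4:-/home/hadoop/tmp}"
  cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:$tmp_dir</value>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://$host:$port</value>
  </property>
</configuration>
EOF
}
```

Afterwards, `bin/hdfs getconf -confKey fs.defaultFS` prints the value that Hadoop actually resolves.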

`etc/hadoop/hdfs-site.xml`:

    <configuration>
        <property>
            <name>dfs.replication</name>
            <value>1</value>
        </property>
        <property>
            <name>dfs.namenode.name.dir</name>
            <value>file:/home/hadoop/tmp/dfs/name</value>
        </property>
        <property>
            <name>dfs.datanode.data.dir</name>
            <value>file:/home/hadoop/tmp/dfs/data</value>
        </property>
    </configuration>

`dfs.replication`: the number of replicas kept for each block. Set it to 1 for a pseudo-distributed setup, because there is only one DataNode.

`dfs.namenode.name.dir`: the local directory where the NameNode stores its FsImage files.

`dfs.datanode.data.dir`: the local directory where the DataNode stores HDFS data blocks.
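To double-check a value without starting a JVM, a small text lookup is enough. This is a rough sketch, not an XML parser. It assumes the layout used above, with at most one `<name>` and one `<value>` tag per line, and it does not trim whitespace inside tags. `get_hadoop_prop` is a hypothetical helper. `bin/hdfs getconf -confKey <key>` remains the authoritative check, because it also applies Hadoop's defaults.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the <value> of property KEY from a Hadoop XML
# config file. Assumes one <name>/<value> tag per line, as in the files above.
# Prints nothing if KEY is absent.
get_hadoop_prop() {
  local file="$1" key="$2"
  awk -v key="$key" '
    {
      line = $0
      # Remember the most recent <name>...</name> (6 = len("<name>"), 13 = 6 + 7).
      if (match(line, /<name>[^<]*<\/name>/)) {
        name = substr(line, RSTART + 6, RLENGTH - 13)
        line = substr(line, RSTART + RLENGTH)
      }
      # The first <value> belonging to KEY ends the search.
      if (name == key && match(line, /<value>[^<]*<\/value>/)) {
        print substr(line, RSTART + 7, RLENGTH - 15)
        exit
      }
      # Forget the name once its <property> block closes.
      if ($0 ~ /<\/property>/) name = ""
    }' "$file"
}
```

For the file above, `get_hadoop_prop etc/hadoop/hdfs-site.xml dfs.replication` prints `1`.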

`etc/hadoop/hadoop-env.sh`, with the `JAVA_HOME` line added below the commented template:

    # The java implementation to use. By default, this environment
    # variable is REQUIRED on ALL platforms except OS X!
    # export JAVA_HOME=
    export JAVA_HOME=/opt/jdk/jdk1.8.0_231
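Setting `JAVA_HOME` here matters because daemons started over SSH may not see the value exported in `/etc/profile`. The edit can be scripted as well. Below is a minimal sketch, assuming the JDK path contains no `#` characters. It replaces an existing uncommented `export JAVA_HOME=` line or appends one, and leaves the commented template line alone. `set_hadoop_java_home` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: make hadoop-env.sh contain exactly one active
# "export JAVA_HOME=<jdk_dir>" line. Assumes jdk_dir contains no "#".
set_hadoop_java_home() {
  local env_file="$1" jdk_dir="$2"
  if grep -q '^export JAVA_HOME=' "$env_file"; then
    # Rewrite via a temp file (avoids GNU-only "sed -i"); cat keeps the
    # original file's permissions.
    sed "s#^export JAVA_HOME=.*#export JAVA_HOME=${jdk_dir}#" "$env_file" > "$env_file.tmp" &&
      cat "$env_file.tmp" > "$env_file" &&
      rm -f "$env_file.tmp"
  else
    # No active setting yet: append one.
    printf 'export JAVA_HOME=%s\n' "$jdk_dir" >> "$env_file"
  fi
}
```

From the Hadoop directory, run `set_hadoop_java_home etc/hadoop/hadoop-env.sh /opt/jdk/jdk1.8.0_231`.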

- Format the NameNode

[hadoop@master hadoop-3.2.1]$ bin/hdfs namenode -format
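Formatting is meant to run once. Re-formatting assigns a new cluster ID, after which an existing DataNode refuses to register (its log reports "Incompatible clusterIDs"). Below is a minimal guard, assuming the name directory configured in `hdfs-site.xml` above (`/home/hadoop/tmp/dfs/name`). It relies on the fact that a formatted directory contains `current/VERSION`. `namenode_needs_format` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit 0) only if the NameNode storage directory
# has not been formatted yet, judged by the absence of current/VERSION.
namenode_needs_format() {
  local name_dir="${1:-/home/hadoop/tmp/dfs/name}"
  [ ! -f "$name_dir/current/VERSION" ]
}
```

Usage: `namenode_needs_format && bin/hdfs namenode -format`.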

- Start HDFS

From the Hadoop directory, run the start script:

    [hadoop@master hadoop-3.2.1]$ sbin/start-dfs.sh
    Starting namenodes on [master]
    Starting datanodes
    Starting secondary namenodes [master]

- Check whether the daemons started. If one failed to start, look for the cause in its log, `logs/hadoop-hadoop-{service}-{hostname}.log`.

Run `jps`. NameNode, DataNode and SecondaryNameNode should all be listed:

    [hadoop@master hadoop-3.2.1]$ jps
    16596 NameNode
    17111 Jps
    16938 SecondaryNameNode
    16718 DataNode
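A script can do the same check as reading the `jps` list by eye. Below is a minimal sketch, assuming `jps` prints one `<pid> <MainClass>` pair per line, as in the output above. `check_hdfs_daemons` is a hypothetical helper that reports which of the three HDFS daemons are missing.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `jps` output on stdin; print "missing: ..." and
# return 1 if NameNode, DataNode or SecondaryNameNode is not running.
check_hdfs_daemons() {
  local jps_out missing="" d
  jps_out=$(cat)
  for d in NameNode DataNode SecondaryNameNode; do
    # Compare the class-name column exactly, so "NameNode" does not
    # match "SecondaryNameNode".
    if ! printf '%s\n' "$jps_out" | awk -v d="$d" '$2 == d { found = 1 } END { exit !found }'; then
      missing="$missing $d"
    fi
  done
  if [ -n "$missing" ]; then
    echo "missing:$missing"
    return 1
  fi
}
```

Usage: `jps | check_hdfs_daemons || ls logs/`, then open the log of whichever daemon is reported missing.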


浙公网安备 33010602011771号
