[Hadoop Learning Notes, Part 2] Hadoop Pseudo-Distributed Installation

Environment

Virtual machine: VMware 10

Linux distribution: CentOS-6.5-x86_64

SSH client: Xshell4

FTP client: Xftp4

jdk8

hadoop-3.1.1

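Pseudo-distributed mode uses a single machine: the master and worker nodes run on the same host. Here that host is node1, 192.168.230.11.

I. Platform and Software

Platform: GNU/Linux

Software: JDK + SSH + rsync + hadoop-3.1.1

Edit /etc/hosts and /etc/sysconfig/network on the host (do not skip this):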

192.168.230.11 node1
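Below is a minimal sketch of both edits as shell functions. It assumes you are root on CentOS 6 and uses the node1 / 192.168.230.11 mapping above. On CentOS 6 the hostname is read at boot from the HOSTNAME= line in /etc/sysconfig/network. ensure_host_entry and set_hostname are hypothetical helpers, not part of any tool. The file path is a parameter, so you can try them on a copy first.

```shell
#!/bin/bash
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already mapped.
ensure_host_entry() {
  local file="$1" ip="$2" name="$3"
  # Look for NAME as a whole word after the address column (comment lines are skipped).
  if ! grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Hypothetical helper: set HOSTNAME=NAME in a CentOS 6 style network file.
set_hostname() {
  local file="$1" name="$2"
  if grep -q '^HOSTNAME=' "$file"; then
    sed -i "s/^HOSTNAME=.*/HOSTNAME=${name}/" "$file"
  else
    printf 'HOSTNAME=%s\n' "$name" >> "$file"
  fi
}

# Usage on node1 (as root):
#   ensure_host_entry /etc/hosts 192.168.230.11 node1
#   set_hostname /etc/sysconfig/network node1
#   hostname node1   # apply now; the network file is read at next boot
```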

Reference: https://www.cnblogs.com/heruiguo/p/7943006.html

1. Install the JDK

Reference: https://www.cnblogs.com/cac2020/p/9683212.html

2. Install SSH

(1) Check whether it is already installed

[root@node1 bin]# type ssh
ssh is hashed (/usr/bin/ssh)

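(2) If it is not installed, install it (on CentOS the SSH packages are openssh-clients and openssh-server)

yum install -y openssh-clients openssh-server

3. Install rsync (remote synchronization)

(1) Check whether it is already installed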

[root@node1 bin]# type rsync

-bash: type: rsync: not found

(2) If it is not installed, install it

yum install -y rsync
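The two type checks above can be combined into one loop. This is a minimal sketch. missing_cmds and pkg_for are hypothetical helpers, and the package names assume CentOS 6, where ssh and ssh-copy-id come from openssh-clients.

```shell
#!/bin/bash
# Print each command name from the argument list that is not found on PATH.
missing_cmds() {
  local c
  for c in "$@"; do
    command -v "$c" >/dev/null 2>&1 || echo "$c"
  done
}

# Hypothetical mapping from a command to the CentOS 6 package that provides it.
pkg_for() {
  case "$1" in
    ssh|ssh-copy-id) echo openssh-clients ;;
    sshd)            echo openssh-server ;;
    *)               echo "$1" ;;
  esac
}

# Usage (as root): install only what is missing.
#   for c in $(missing_cmds ssh ssh-copy-id rsync); do
#     yum install -y "$(pkg_for "$c")"
#   done
```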

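4. Passwordless SSH login (mainly needed in fully distributed mode, but start-dfs.sh also uses SSH to start the daemons on node1 itself)

(1) Passwordless login to localhost

#1) Generate a public/private key pair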

[root@node1 ~]# ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa
Generating public/private rsa key pair.
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
18:e5:c1:eb:55:be:9c:27:3a:bc:c1:51:dd:8e:2c:f3 root@node1
The key's randomart image is:
+--[ RSA 2048]----+
| .o |
| o.. .. . |
| . .. o. . .|
| o. .... o |
| ..S...ooo .|
| .. .=+. |
| .o. oE |
| +. |
| .o |
+-----------------+

#2) Append the generated public key id_rsa.pub to the authorized_keys file in the hidden ~/.ssh directory

[root@node1 ~]# cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

#3) Set the permissions of ~/.ssh to 700

[root@node1 ~]# chmod 0700 ~/.ssh

#4) Set the permissions of authorized_keys to 0600

[root@node1 ~]# chmod 0600 ~/.ssh/authorized_keys

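#5) Log in to localhost. No password is requested, and echo $$ (the PID of the current shell) changes from 1209 to 1234, which shows that ssh opened a new login shell.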

[root@node1 ~]# echo $$
1209
[root@node1 ~]# ssh localhost
Last login: Tue Jan 1 16:17:35 2019 from 192.168.230.1
[root@node1 ~]# echo $$
1234
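If sshd rejects authorized_keys, key-based login quietly falls back to a password prompt. Permissions that are too open are one common cause, because of sshd's StrictModes check. This minimal sketch checks for exactly the modes set in steps #3 and #4. check_ssh_perms is a hypothetical helper, and stat -c assumes GNU coreutils.

```shell
#!/bin/bash
# Hypothetical helper: return 0 when DIR is mode 700 and DIR/authorized_keys is mode 600.
check_ssh_perms() {
  local dir="${1:-$HOME/.ssh}"
  [ -f "$dir/authorized_keys" ] || { echo "missing $dir/authorized_keys" >&2; return 1; }
  [ "$(stat -c %a "$dir")" = "700" ] || { echo "$dir is not mode 700" >&2; return 1; }
  [ "$(stat -c %a "$dir/authorized_keys")" = "600" ] || { echo "authorized_keys is not mode 600" >&2; return 1; }
}

# Usage: run on each node after copying keys.
#   check_ssh_perms ~/.ssh && echo "permissions ok"
```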

(2) Passwordless login from node1 to node2 (192.168.230.12)

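#1) Use ssh-copy-id to append node1's public key to node2's ~/.ssh/authorized_keys. It is run here from /root/.ssh, but because -i gives the full path of the key, the current directory should not matter.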

[root@node1 .ssh]# ssh-copy-id -i ~/.ssh/id_rsa.pub 192.168.230.12
root@192.168.230.12's password:
Now try logging into the machine, with "ssh '192.168.230.12'", and check in:
.ssh/authorized_keys
to make sure we haven't added extra keys that you weren't expecting.

# If it reports "-bash: ssh-copy-id: command not found", the command is not installed

# Install it with: yum -y install openssh-clients

#2) Fix the permissions on node2

# Set ~/.ssh to 700

[root@node2 ~]# chmod 0700 ~/.ssh

#3) Set authorized_keys to 0600

[root@node2 ~]# chmod 0600 ~/.ssh/authorized_keys

#4) Log in without a password

[root@node1 ~]# ssh 192.168.230.12
Last login: Tue Jan 1 16:57:09 2019 from 192.168.230.11
[root@node2 ~]#
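You can confirm passwordless login without risking a password prompt by running ssh in batch mode, which makes it fail instead of asking. This is a minimal sketch, and can_login_nopass is a hypothetical helper. The host's key must already be in known_hosts (accepted once interactively). Otherwise batch mode also fails on the host-key question.

```shell
#!/bin/bash
# Hypothetical helper: return 0 if "ssh HOST true" succeeds without any prompt.
# BatchMode=yes disables password prompts; ConnectTimeout bounds the wait.
can_login_nopass() {
  ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" true >/dev/null 2>&1
}

# Usage: check every host that node1 must reach (host list is an example).
#   for h in localhost node1 192.168.230.12; do
#     if can_login_nopass "$h"; then echo "$h ok"; else echo "$h needs ssh-copy-id"; fi
#   done
```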

5. Install Hadoop

[root@node1 src]# tar -xf hadoop-3.1.1.tar.gz -C /usr/local
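This step is optional. The rest of this article calls Hadoop by its full path, so it is not required. The minimal sketch below adds HADOOP_HOME and its bin and sbin directories to PATH through a profile file. add_hadoop_env is a hypothetical helper, and /etc/profile is an assumed target.

```shell
#!/bin/bash
# Hypothetical helper: append HADOOP_HOME and PATH exports to a profile file,
# unless HADOOP_HOME is already exported there.
add_hadoop_env() {
  local profile="$1" home="${2:-/usr/local/hadoop-3.1.1}"
  grep -q '^export HADOOP_HOME=' "$profile" 2>/dev/null && return 0
  # $home expands now; \$PATH and \$HADOOP_HOME are written literally.
  cat >> "$profile" <<EOF
export HADOOP_HOME=$home
export PATH=\$PATH:\$HADOOP_HOME/bin:\$HADOOP_HOME/sbin
EOF
}

# Usage (as root), then reload the profile and check the install:
#   add_hadoop_env /etc/profile
#   source /etc/profile && hadoop version
```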

II. Configure Hadoop

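1. Edit /usr/local/hadoop-3.1.1/etc/hadoop/hadoop-env.sh to set the Java environment variable and the user for each HDFS role. Hadoop 3 refuses to start HDFS daemons as root unless these user variables are defined.

Append the following at the end: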

export JAVA_HOME=/usr/local/jdk1.8.0_65
export HDFS_NAMENODE_USER=root
export HDFS_DATANODE_USER=root
export HDFS_SECONDARYNAMENODE_USER=root
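Before saving hadoop-env.sh, check that JAVA_HOME really points at a JDK. Daemons started over SSH may not inherit the login shell's environment, so they rely on the value set here. This is a minimal sketch, and check_java_home is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: return 0 if DIR looks like a usable JAVA_HOME,
# i.e. DIR/bin/java exists and is executable.
check_java_home() {
  [ -x "$1/bin/java" ] || { echo "no executable $1/bin/java" >&2; return 1; }
}

# Usage: verify the path before writing it into hadoop-env.sh.
#   check_java_home /usr/local/jdk1.8.0_65 && /usr/local/jdk1.8.0_65/bin/java -version
```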

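2. Edit /usr/local/hadoop-3.1.1/etc/hadoop/core-site.xml to configure the master node

(1) fs.defaultFS: the NameNode address that clients and DataNodes connect to. Hadoop 3.0.0 changed the default NameNode RPC port from 8020 to 9820, and later 3.x releases reverted the default to 8020, so the port is given explicitly here.

(2) hadoop.tmp.dir: the base directory for Hadoop's data. By default the NameNode metadata (dfs/name) and the DataNode blocks (dfs/data) are stored under it.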

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://node1:9820</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/data/hadoop</value>
  </property>
</configuration>
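Here is the same core-site.xml written from a script, as a minimal sketch. write_core_site is a hypothetical helper whose defaults are this article's paths and values. It overwrites the file, so back up the shipped core-site.xml first.

```shell
#!/bin/bash
# Hypothetical helper: write core-site.xml with fs.defaultFS and hadoop.tmp.dir.
write_core_site() {
  local conf_dir="${1:-/usr/local/hadoop-3.1.1/etc/hadoop}"
  local fs="${2:-hdfs://node1:9820}" tmp_dir="${3:-/data/hadoop}"
  # Unquoted EOF so $fs and $tmp_dir are substituted.
  cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>$fs</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>$tmp_dir</value>
  </property>
</configuration>
EOF
}

# Usage (as root):
#   cp /usr/local/hadoop-3.1.1/etc/hadoop/core-site.xml{,.bak}
#   write_core_site
```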

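3. Edit /usr/local/hadoop-3.1.1/etc/hadoop/hdfs-site.xml to configure HDFS

(1) dfs.replication: the number of replicas per block. It is 1 here because there is only one DataNode.

(2) dfs.namenode.secondary.http-address: the HTTP address of the SecondaryNameNode. Hadoop 3 changed its default port from 50090 to 9868.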

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>node1:9868</value>
  </property>
</configuration>
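To check which value a *-site.xml file holds, a crude text scan is enough for files laid out like the snippets above, with one tag per line. This is a minimal sketch. get_prop is a hypothetical helper and not an XML parser. On a working install, hdfs getconf -confKey dfs.replication reports the value Hadoop actually resolves.

```shell
#!/bin/bash
# Hypothetical helper: print the <value> of property NAME from a Hadoop *-site.xml.
# Assumes <name> and <value> sit on their own lines; this is a text scan, not XML parsing.
get_prop() {
  awk -v key="$2" '
    /<name>/ {
      line = $0
      gsub(/.*<name>[ \t]*|[ \t]*<\/name>.*/, "", line)
      found = (line == key)
      next
    }
    found && /<value>/ {
      gsub(/.*<value>[ \t]*|[ \t]*<\/value>.*/, "")
      print
      exit
    }
  ' "$1"
}

# Usage:
#   get_prop /usr/local/hadoop-3.1.1/etc/hadoop/hdfs-site.xml dfs.replication
```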

4. Edit workers (called slaves in Hadoop 2.x) to list the worker (DataNode) hosts

Content: node1
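Here is the workers file written from a script, as a minimal sketch. write_workers is a hypothetical helper that replaces the whole file with one host per line. The file shipped with Hadoop 3 contains localhost.

```shell
#!/bin/bash
# Hypothetical helper: overwrite CONF_DIR/workers with the given hosts, one per line.
write_workers() {
  local conf_dir="$1"
  shift
  printf '%s\n' "$@" > "$conf_dir/workers"
}

# Usage:
#   write_workers /usr/local/hadoop-3.1.1/etc/hadoop node1
```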

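III. Start Hadoop

1. Format the NameNode

1) It formats the metadata directory under hadoop.tmp.dir, creating it if it does not exist, and saves the initial metadata image (fsimage) into it.

2) It generates a globally unique cluster ID, so run it only once when setting up the cluster.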

[root@node1 /]# /usr/local/hadoop-3.1.1/bin/hdfs namenode -format
2019-01-02 10:33:06,541 INFO namenode.NameNode: STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting NameNode
STARTUP_MSG: host = node1/192.168.230.11
STARTUP_MSG: args = [-format]
STARTUP_MSG: version = 3.1.1
STARTUP_MSG: classpath = /usr/local/hadoop-3.1.1/etc/hadoop:/usr/local/hadoop-3.1.1/share/hadoop/common/lib/jackson-core-2.7.8.jar:...
STARTUP_MSG: build = https://github.com/apache/hadoop -r 2b9a8c1d3a2caf1e733d57f346af3ff0d5ba529c; compiled by 'leftnoteasy' on 2018-08-02T04:26Z
STARTUP_MSG: java = 1.8.0_65
************************************************************/
2019-01-02 10:33:06,647 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]
2019-01-02 10:33:06,681 INFO namenode.NameNode: createNameNode [-format]
2019-01-02 10:33:17,758 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Formatting using clusterid: CID-501237ab-3212-4483-a36e-a48f31345438
...
2019-01-02 10:33:23,925 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)
...
2019-01-02 10:33:24,622 INFO blockmanagement.BlockManager: defaultReplication = 1
...
2019-01-02 10:33:25,285 INFO namenode.FSImage: Allocated new BlockPoolId: BP-600017715-192.168.230.11-1546396405239
2019-01-02 10:33:25,421 INFO common.Storage: Storage directory /data/hadoop/dfs/name has been successfully formatted.
2019-01-02 10:33:25,584 INFO namenode.FSImageFormatProtobuf: Saving image file /data/hadoop/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression
2019-01-02 10:33:26,036 INFO namenode.FSImageFormatProtobuf: Image file /data/hadoop/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 386 bytes saved in 0 seconds .
2019-01-02 10:33:26,198 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
2019-01-02 10:33:26,297 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at node1/192.168.230.11
************************************************************/
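Formatting again generates a new clusterID, but an existing DataNode directory keeps the old one. The DataNode then fails to register and logs an Incompatible clusterIDs error. Below is a minimal guard. It assumes the metadata path shown in the log above (/data/hadoop/dfs/name), whose current/VERSION file records the clusterID. needs_format is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: return 0 if the NameNode metadata directory is not formatted yet,
# i.e. it has no current/VERSION file.
needs_format() {
  [ ! -f "${1:-/data/hadoop/dfs/name}/current/VERSION" ]
}

# Usage: format only on first setup so the clusterID stays stable.
#   needs_format /data/hadoop/dfs/name && /usr/local/hadoop-3.1.1/bin/hdfs namenode -format
```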

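2. Start Hadoop (HDFS only). start-dfs.sh launches the NameNode, DataNode and SecondaryNameNode over SSH, and jps and ss confirm that they are running. The NativeCodeLoader WARN only means that Hadoop is using its built-in Java classes instead of native libraries.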

[root@node1 sbin]# /usr/local/hadoop-3.1.1/sbin/start-dfs.sh
Starting namenodes on [node1]
node1: Warning: Permanently added 'node1,192.168.230.11' (RSA) to the list of known hosts.
Starting datanodes
Starting secondary namenodes [node1]
2019-01-02 10:52:31,822 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
[root@node1 sbin]# jps
1344 NameNode
1616 SecondaryNameNode
1450 DataNode
1803 Jps
[root@node1 sbin]# ss -nal
State    Recv-Q  Send-Q  Local Address:Port    Peer Address:Port
LISTEN   0       128     *:9867                *:*
LISTEN   0       128     192.168.230.11:9868   *:*
LISTEN   0       128     *:9870                *:*
LISTEN   0       128     127.0.0.1:33840       *:*
LISTEN   0       128     :::22                 :::*
LISTEN   0       128     *:22                  *:*
LISTEN   0       100     ::1:25                :::*
LISTEN   0       100     127.0.0.1:25          *:*
LISTEN   0       128     192.168.230.11:9820   *:*
LISTEN   0       128     *:9864                *:*
LISTEN   0       128     *:9866                *:*
[root@node1 sbin]#
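The jps check above can be scripted. This minimal sketch reads jps output on stdin and reports which of the three HDFS daemons is missing. check_daemons is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: read "jps" output on stdin; return 0 only if
# NameNode, DataNode and SecondaryNameNode all appear.
check_daemons() {
  local out d missing=""
  out=$(cat)
  for d in NameNode DataNode SecondaryNameNode; do
    # jps prints "<pid> <MainClass>"; compare the class name exactly,
    # so SecondaryNameNode does not count as NameNode.
    printf '%s\n' "$out" | awk -v d="$d" '$2 == d { f = 1 } END { exit !f }' \
      || missing="$missing $d"
  done
  [ -z "$missing" ] || { echo "missing:$missing" >&2; return 1; }
}

# Usage on node1:
#   jps | check_daemons && echo "HDFS daemons are up"
```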

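Default ports in Hadoop 2 and Hadoop 3. The listeners in the ss output above are: 9820 NameNode RPC, 9870 NameNode web UI, 9868 SecondaryNameNode HTTP, 9864 DataNode HTTP, 9866 DataNode data transfer, 9867 DataNode IPC.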

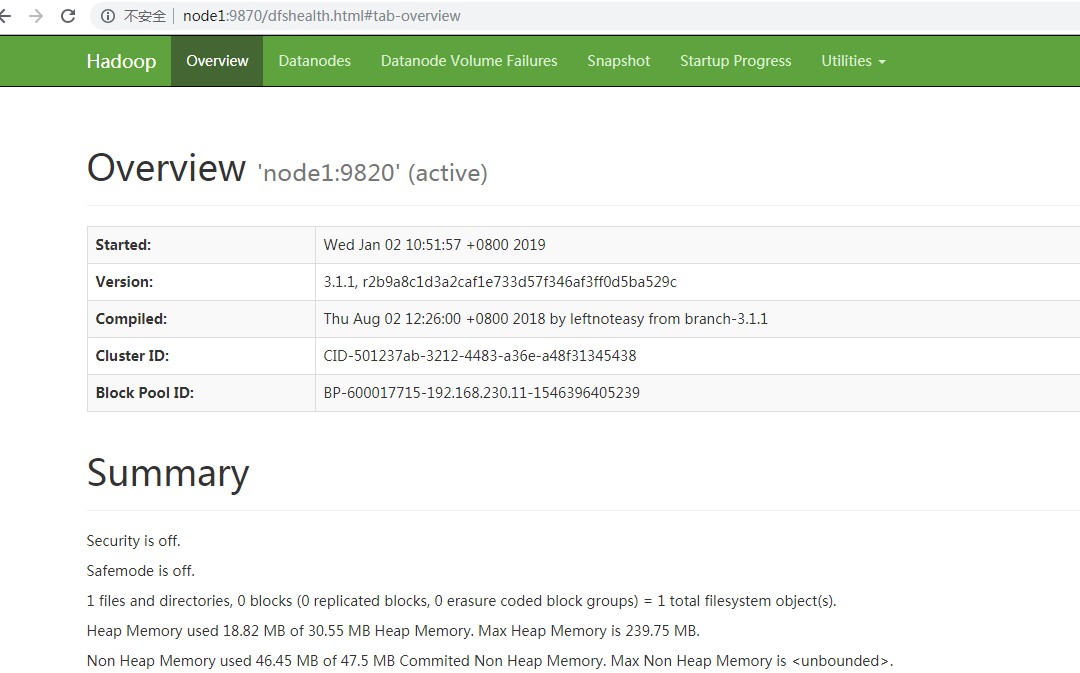

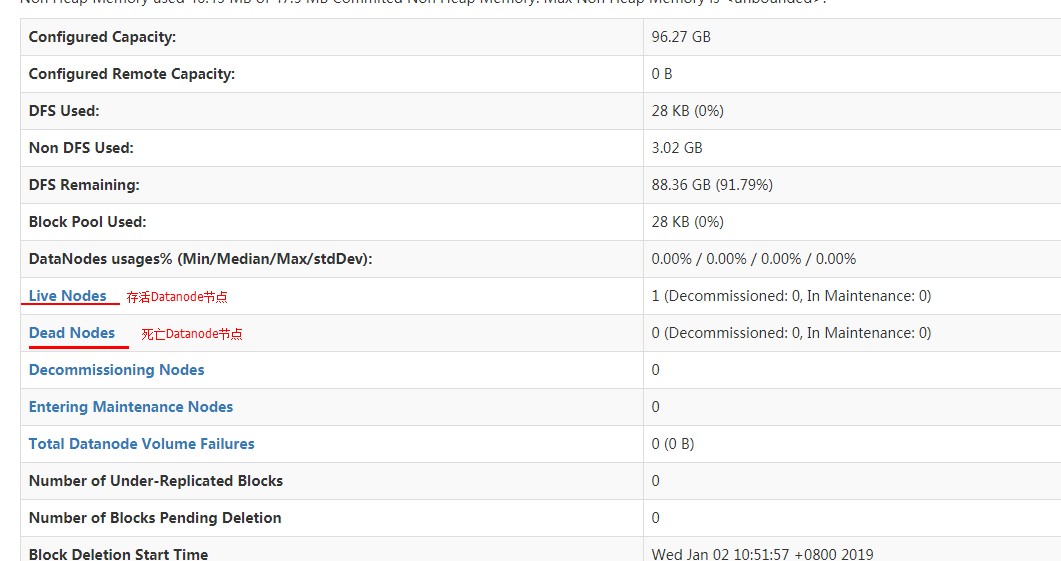

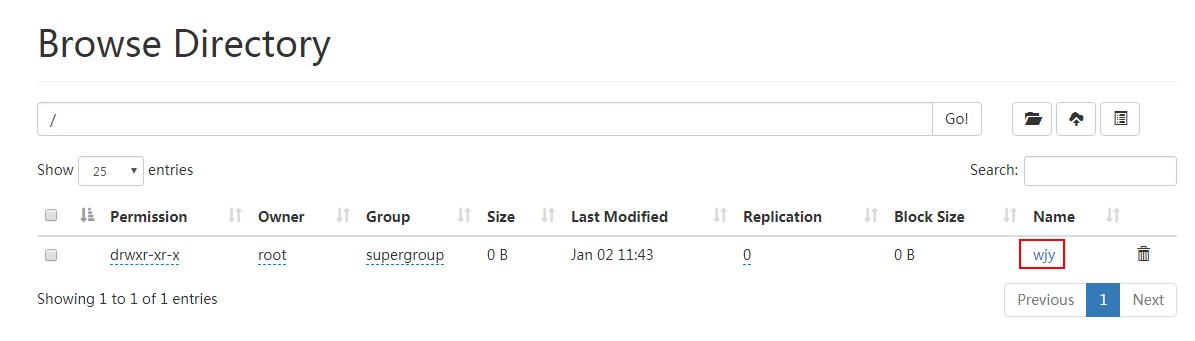

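3. Web UI

Open http://node1:9870 in Chrome or Firefox. This is the NameNode web UI, which used port 50070 in Hadoop 2. The machine running the browser must be able to resolve node1, for example through its own hosts file. Otherwise, use http://192.168.230.11:9870.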

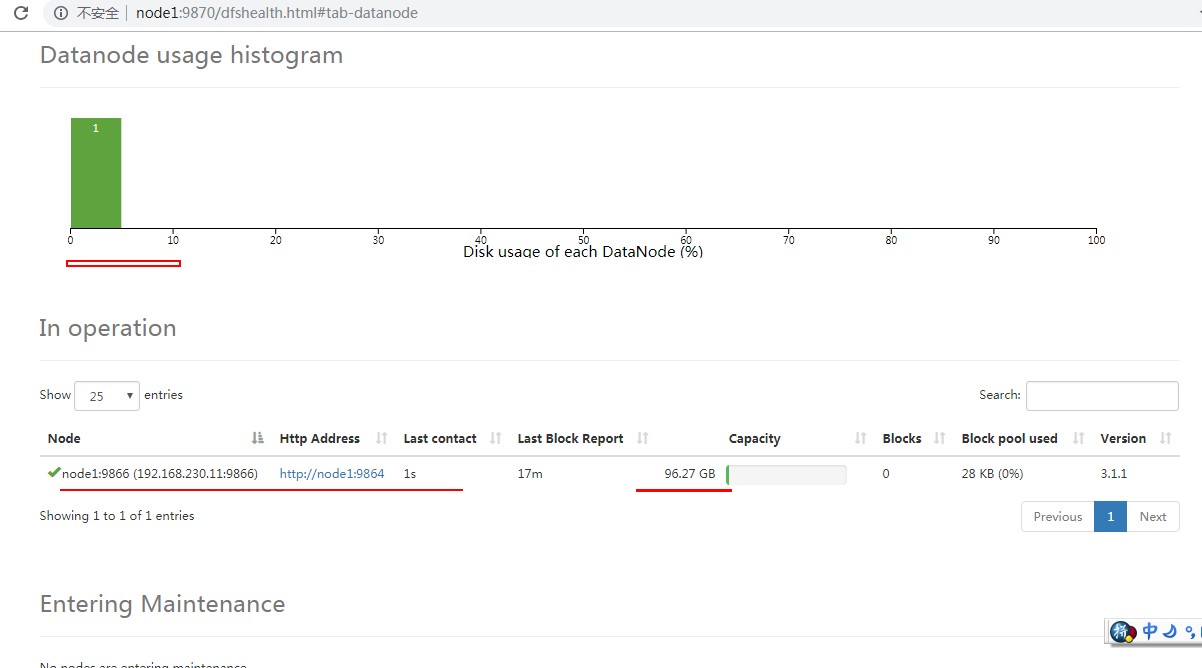

4. Upload files

Files can be uploaded, downloaded and viewed from the UI.

Uploading files from the command line:

Available commands:

[root@node1 bin]# /usr/local/hadoop-3.1.1/bin/hdfs dfs
2019-01-02 11:42:06,609 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Usage: hadoop fs [generic options]
    [-appendToFile <localsrc> ... <dst>]
    [-cat [-ignoreCrc] <src> ...]
    [-checksum <src> ...]
    [-chgrp [-R] GROUP PATH...]
    [-chmod [-R] <MODE[,MODE]... | OCTALMODE> PATH...]
    [-chown [-R] [OWNER][:[GROUP]] PATH...]
    [-copyFromLocal [-f] [-p] [-l] [-d] [-t <thread count>] <localsrc> ... <dst>]
    [-copyToLocal [-f] [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]
    [-count [-q] [-h] [-v] [-t [<storage type>]] [-u] [-x] [-e] <path> ...]
    [-cp [-f] [-p | -p[topax]] [-d] <src> ... <dst>]
    [-createSnapshot <snapshotDir> [<snapshotName>]]
    [-deleteSnapshot <snapshotDir> <snapshotName>]
    [-df [-h] [<path> ...]]
    [-du [-s] [-h] [-v] [-x] <path> ...]
    [-expunge]
    [-find <path> ... <expression> ...]
    [-get [-f] [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]
    [-getfacl [-R] <path>]
    [-getfattr [-R] {-n name | -d} [-e en] <path>]
    [-getmerge [-nl] [-skip-empty-file] <src> <localdst>]
    [-head <file>]
    [-help [cmd ...]]
    [-ls [-C] [-d] [-h] [-q] [-R] [-t] [-S] [-r] [-u] [-e] [<path> ...]]
    [-mkdir [-p] <path> ...]
    [-moveFromLocal <localsrc> ... <dst>]
    [-moveToLocal <src> <localdst>]
    [-mv <src> ... <dst>]
    [-put [-f] [-p] [-l] [-d] <localsrc> ... <dst>]
    [-renameSnapshot <snapshotDir> <oldName> <newName>]
    [-rm [-f] [-r|-R] [-skipTrash] [-safely] <src> ...]
    [-rmdir [--ignore-fail-on-non-empty] <dir> ...]
    [-setfacl [-R] [{-b|-k} {-m|-x <acl_spec>} <path>]|[--set <acl_spec> <path>]]
    [-setfattr {-n name [-v value] | -x name} <path>]
    [-setrep [-R] [-w] <rep> <path> ...]
    [-stat [format] <path> ...]
    [-tail [-f] <file>]
    [-test -[defsz] <path>]
    [-text [-ignoreCrc] <src> ...]
    [-touchz <path> ...]
    [-truncate [-w] <length> <path> ...]
    [-usage [cmd ...]]

Generic options supported are:
-conf <configuration file>           specify an application configuration file
-D <property=value>                  define a value for a given property
-fs <file:///|hdfs://namenode:port>  specify default filesystem URL to use, overrides 'fs.defaultFS' property from configurations.
-jt <local|resourcemanager:port>     specify a ResourceManager
-files <file1,...>                   specify a comma-separated list of files to be copied to the map reduce cluster
-libjars <jar1,...>                  specify a comma-separated list of jar files to be included in the classpath
-archives <archive1,...>             specify a comma-separated list of archives to be unarchived on the compute machines

The general command line syntax is:
command [genericOptions] [commandOptions]
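The mkdir and put steps below can be wrapped into one function. This is a minimal sketch. hdfs_upload is a hypothetical helper, and mkdir -p, put -f and ls are options from the usage listing above. The HDFS variable is an assumption that lets you print the commands (HDFS=echo) instead of executing them.

```shell
#!/bin/bash
# Path to the hdfs launcher; override with HDFS=echo for a dry run.
HDFS="${HDFS:-/usr/local/hadoop-3.1.1/bin/hdfs}"

# Hypothetical helper: create DST_DIR (no error if it exists), upload SRC
# (overwriting an existing copy), then list the directory.
hdfs_upload() {
  local src="$1" dst_dir="$2"
  "$HDFS" dfs -mkdir -p "$dst_dir" &&
  "$HDFS" dfs -put -f "$src" "$dst_dir" &&
  "$HDFS" dfs -ls "$dst_dir"
}

# Usage (same file and directory as below):
#   hdfs_upload /usr/local/src/hadoop-3.1.1.tar.gz /wjy
```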

(1) Create a directory

[root@node1 bin]# /usr/local/hadoop-3.1.1/bin/hdfs dfs -mkdir /wjy
2019-01-02 11:43:46,198 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

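(2) Upload a file. On this VM the transfer was slow. The client logged a series of Slow ReadProcessor INFO messages because DataNode acknowledgements took longer than the 30000 ms threshold, but each ack still reports reply: SUCCESS.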

[root@node1 bin]# /usr/local/hadoop-3.1.1/bin/hdfs dfs -put /usr/local/src/hadoop-3.1.1.tar.gz /wjy
2019-01-02 12:38:52,177 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
2019-01-02 12:41:48,612 INFO hdfs.DataStreamer: Slow ReadProcessor read fields for block BP-600017715-192.168.230.11-1546396405239:blk_1073741825_1001 took 47006ms (threshold=30000ms); ack: seqno: 1834 reply: SUCCESS downstreamAckTimeNanos: 0 flag: 0, targets: [DatanodeInfoWithStorage[192.168.230.11:9866,DS-8bc75a4e-7bac-46ea-8bbd-1e30d1daf6e2,DISK]]
... (24 similar "Slow ReadProcessor" INFO messages for seqno 1835 to 1858, each between 47743ms and 56503ms, omitted) ...
2019-01-02 12:41:49,189 INFO hdfs.DataStreamer: Slow ReadProcessor read fields for block BP-600017715-192.168.230.11-1546396405239:blk_1073741825_1001 took 47749ms (threshold=30000ms); ack: seqno: 1859 reply: SUCCESS downstreamAckTimeNanos: 0 flag: 0, targets: [DatanodeInfoWithStorage[192.168.230.11:9866,DS-8bc75a4e-7bac-46ea-8bbd-1e30d1daf6e2,DISK]]

These INFO messages only report slow acknowledgements. Each ack took longer than the 30000 ms threshold but still returned "reply: SUCCESS", so the upload itself completed.
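Instead of scrolling through such a flood of messages, you can count the slow acks and find the worst delay with grep and awk. This is a minimal sketch. It assumes the client output was saved to a file, for example with `hdfs dfs -put ... > put.log 2>&1`, and `summarize_slow_acks` is a hypothetical helper name.

```shell
# Hypothetical helper: count "Slow ReadProcessor" messages in saved client output
# and report the largest "took NNNNNms" value among them.
summarize_slow_acks() {
  grep 'Slow ReadProcessor' "$1" |
    grep -o 'took [0-9]*ms' |
    awk 'BEGIN { n = 0; max = 0 }
      {
        # $2 looks like "56503ms": strip the unit and compare numerically.
        ms = $2; sub(/ms$/, "", ms); ms += 0
        n++; if (ms > max) max = ms
      }
      END { printf "slow acks: %d, max: %d ms\n", n, max }'
}
```

Applied to the messages for seqno 1834 to 1859 in the upload above, `summarize_slow_acks put.log` would report 26 slow acks with a maximum of 56503 ms.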

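During the upload, the file is listed under a temporary name ending in ._COPYING_:

When the upload finishes:

Viewing the file's blocks: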

(3) List the uploaded file

[root@node1 bin]# /usr/local/hadoop-3.1.1/bin/hdfs dfs -ls /
2019-01-02 12:48:55,881 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Found 1 items
drwxr-xr-x - root supergroup 0 2019-01-02 12:42 /wjy
[root@node1 bin]# /usr/local/hadoop-3.1.1/bin/hdfs dfs -ls /wjy
2019-01-02 12:49:05,657 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Found 1 items
-rw-r--r-- 1 root supergroup 334559382 2019-01-02 12:42 /wjy/hadoop-3.1.1.tar.gz
[root@node1 bin]#
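To confirm the upload is complete, compare the size column of the `-ls` listing (the fifth field, 334559382 bytes here) with the size of the local file. This is a minimal sketch. It assumes the default `-ls` layout (permissions, replication, owner, group, size, date, time, path), GNU `stat`, and paths without spaces. Both function names are hypothetical.

```shell
# Hypothetical helper: read `hdfs dfs -ls` output on stdin and print the size
# (5th column) of the entry whose path (8th column) equals $1.
hdfs_ls_size() {
  awk -v p="$1" 'NF >= 8 && $8 == p { print $5 }'
}

# Hypothetical helper: read `hdfs dfs -ls` output on stdin and succeed only if
# the listed size of $2 equals the size of local file $1 (GNU stat assumed).
sizes_match() {
  local local_file=$1 hdfs_path=$2
  local local_size hdfs_size
  local_size=$(stat -c %s "$local_file") || return 1
  hdfs_size=$(hdfs_ls_size "$hdfs_path")
  [ -n "$hdfs_size" ] && [ "$local_size" = "$hdfs_size" ]
}
```

For the upload above: `/usr/local/hadoop-3.1.1/bin/hdfs dfs -ls /wjy | sizes_match /usr/local/src/hadoop-3.1.1.tar.gz /wjy/hadoop-3.1.1.tar.gz && echo OK`.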

You can also look at the blocks directly in the DataNode's data directory:

[root@node1 subdir0]# cd /data/hadoop/dfs/data/current/BP-600017715-192.168.230.11-1546396405239/current/finalized/subdir0/subdir0
[root@node1 subdir0]# ll
total 329284
-rw-r--r--. 1 root root 134217728 Jan 2 12:42 blk_1073741825
-rw-r--r--. 1 root root 1048583 Jan 2 12:42 blk_1073741825_1001.meta
-rw-r--r--. 1 root root 134217728 Jan 2 12:42 blk_1073741826
-rw-r--r--. 1 root root 1048583 Jan 2 12:42 blk_1073741826_1002.meta
-rw-r--r--. 1 root root 66123926 Jan 2 12:42 blk_1073741827
-rw-r--r--. 1 root root 516603 Jan 2 12:42 blk_1073741827_1003.meta
[root@node1 subdir0]#
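The listing matches the default 128 MB block size (dfs.blocksize = 134217728 bytes). The 334559382-byte archive is split into two full blocks and a 66123926-byte tail. Each .meta file stores the block's checksums. Assuming the defaults of one 4-byte CRC per 512 bytes (dfs.bytes-per-checksum) plus a 7-byte header, the .meta sizes follow as well. This shell-arithmetic sketch reproduces the numbers under those assumptions. The function name `block_layout` is hypothetical.

```shell
# Sketch: reproduce the blk_* and .meta sizes listed above from the file size.
# Assumed defaults: dfs.blocksize = 134217728 (128 MB), dfs.bytes-per-checksum = 512,
# a 4-byte CRC per 512-byte chunk, and a 7-byte header at the start of each .meta file.
block_layout() {
  local remaining=$1
  local block_size=${2:-134217728}
  local bpc=512 crc=4 header=7
  local i=0 len chunks

  while [ "$remaining" -gt 0 ]; do
    # A block holds at most block_size bytes; the last block holds whatever is left.
    if [ "$remaining" -gt "$block_size" ]; then len=$block_size; else len=$remaining; fi
    # One checksum per started 512-byte chunk (ceiling division).
    chunks=$(( (len + bpc - 1) / bpc ))
    echo "block $i: data=$len meta=$(( header + chunks * crc ))"
    remaining=$(( remaining - len ))
    i=$(( i + 1 ))
  done
}
```

`block_layout 334559382` prints three lines: data=134217728 meta=1048583 twice, then data=66123926 meta=516603. These match the three blk_* files and their .meta companions above.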

5. Stop Hadoop

[root@node1 sbin]# /usr/local/hadoop-3.1.1/sbin/stop-dfs.sh
Stopping namenodes on [node1]
Stopping datanodes
Stopping secondary namenodes [node1]
2019-01-02 12:56:34,554 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
[root@node1 sbin]# jps
3025 Jps
[root@node1 sbin]# ss -nal
State Recv-Q Send-Q Local Address:Port Peer Address:Port
LISTEN 0 128 :::22 :::*
LISTEN 0 128 *:22 *:*
LISTEN 0 100 ::1:25 :::*
LISTEN 0 100 127.0.0.1:25 *:*
[root@node1 sbin]#
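After shutdown, jps lists only Jps itself, and ss -nal shows no HDFS port still listening. The port check can be automated with a small filter over the ss output. This is a minimal sketch. The default port list is an assumption based on Hadoop 3.x defaults. It does not include the NameNode RPC port, which depends on fs.defaultFS in core-site.xml, so pass the full list including that port when it matters. `hdfs_ports_closed` is a hypothetical helper name.

```shell
# Hypothetical helper: read `ss -nal` output on stdin, print every listening
# port that is in the given list, and succeed only if none of them is found.
# Default list (assumed Hadoop 3.x defaults): 9870 NameNode web UI,
# 9864/9866/9867 DataNode HTTP/data transfer/IPC, 9868 SecondaryNameNode web UI.
hdfs_ports_closed() {
  local ports="${*:-9870 9864 9866 9867 9868}"
  awk -v ports="$ports" '
    BEGIN { n = split(ports, p, " "); for (i = 1; i <= n; i++) want[p[i]] = 1; found = 0 }
    {
      # Old ss (as on CentOS 6): State is column 1, local address column 4.
      # Newer ss with a leading Netid column: State is column 2, address column 5.
      if ($1 == "LISTEN") addr = $4
      else if ($2 == "LISTEN") addr = $5
      else next
      port = addr; sub(/.*:/, "", port)   # keep what follows the last colon
      if (port in want) { print port; found = 1 }
    }
    END { exit found }'
}
```

Usage: `ss -nal | hdfs_ports_closed && echo "HDFS ports closed"`. On the output above, only ports 22 and 25 are listening, so the check succeeds.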
