1. pom.xml code

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.hduser</groupId>
    <artifactId>hduser</artifactId>
    <version>1.0-SNAPSHOT</version>

    <dependencies>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>3.2.1</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>3.2.1</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-hdfs</artifactId>
            <version>3.2.1</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/org.apache.logging.log4j/log4j-core -->
        <dependency>
            <groupId>org.apache.logging.log4j</groupId>
            <artifactId>log4j-core</artifactId>
            <version>2.17.1</version>
        </dependency>
    </dependencies>
</project>

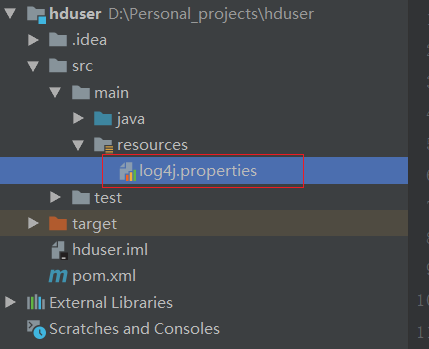

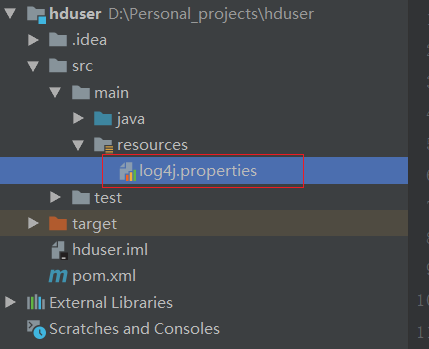

2. log4j.properties file contents

# Set root logger level to DEBUG and its only appender to A1.
log4j.rootLogger=DEBUG, A1

# A1 is set to be a ConsoleAppender.
log4j.appender.A1=org.apache.log4j.ConsoleAppender

# A1 uses PatternLayout.
log4j.appender.A1.layout=org.apache.log4j.PatternLayout
log4j.appender.A1.layout.ConversionPattern=%-4r [%t] %-5p %c %x - %m%n

3. WordCountMapper code

package com.example;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

public class WordCountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

    @Override
    protected void map(LongWritable key, Text value, Mapper<LongWritable, Text, Text, IntWritable>.Context context) throws IOException, InterruptedException {
        // Receive one line of input text
        String line = value.toString();
        // Split the line on whitespace (a run of spaces or tabs counts as one separator)
        String[] words = line.split("\\s+");
        // Iterate over the words and emit a count of 1 for each occurrence
        for (String word : words) {
            // A line that starts with whitespace yields an empty first token; skip it
            if (word.isEmpty()) {
                continue;
            }
            // Use the context to pass the map output to the reduce phase as its input
            context.write(new Text(word), new IntWritable(1));
        }
    }
}
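
Tokenizing is the one step in the mapper where whitespace handling changes the result: splitting on a single space produces an empty token whenever two spaces sit next to each other, and that empty token would then be counted as a word. The following is a minimal plain-Java sketch of the difference, with no Hadoop dependency. The class name `TokenizeSketch` and the helper `tokenize` are hypothetical and exist only for this illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java sketch of the tokenizing step; not part of the Hadoop job itself.
class TokenizeSketch {

    // Splits a line on runs of whitespace and drops empty tokens,
    // so repeated spaces or tabs never produce an empty "word".
    static List<String> tokenize(String line) {
        List<String> words = new ArrayList<>();
        for (String word : line.trim().split("\\s+")) {
            if (!word.isEmpty()) {
                words.add(word);
            }
        }
        return words;
    }

    public static void main(String[] args) {
        String line = "hello  hadoop hello"; // note the double space

        // Splitting on a single space keeps an empty string between the two spaces.
        System.out.println(line.split(" ").length); // prints 4

        // Splitting on whitespace runs yields only the real words.
        System.out.println(tokenize(line)); // prints [hello, hadoop, hello]
    }
}
```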

4. WordCountReducer code

package com.example;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

public class WordCountReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Reducer<Text, IntWritable, Text, IntWritable>.Context context) throws IOException, InterruptedException {
        System.out.println("reduce called key: " + key.toString());
        // Define a counter
        int count = 0;
        // Iterate over the values and add up each count to get the total for this word
        for (IntWritable iw : values) {
            System.out.println(iw.get());
            count += iw.get();
        }
        context.write(key, new IntWritable(count));
    }
}
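
Between the map and reduce phases, the framework groups every value emitted for the same key and hands that group to a single `reduce()` call, with keys arriving in sorted order. The sketch below imitates that grouping in plain Java, using a `TreeMap` as a stand-in for the shuffle and assuming no combiner has run, so every value is still 1. The names `ShuffleReduceSketch`, `group`, and `reduce` are hypothetical and none of this is Hadoop API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java imitation of shuffle + reduce for word count; not Hadoop code.
class ShuffleReduceSketch {

    // Stand-in for the shuffle: collect a 1 under each emitted word, keys kept sorted.
    static Map<String, List<Integer>> group(List<String> emittedWords) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String word : emittedWords) {
            grouped.computeIfAbsent(word, k -> new ArrayList<>()).add(1);
        }
        return grouped;
    }

    // Stand-in for reduce(): sum the values that belong to one key.
    static int reduce(List<Integer> values) {
        int count = 0;
        for (int v : values) {
            count += v;
        }
        return count;
    }

    public static void main(String[] args) {
        // Words as a mapper might emit them, in input order.
        List<String> emitted = Arrays.asList("hello", "hadoop", "hello");
        for (Map.Entry<String, List<Integer>> entry : group(emitted).entrySet()) {
            System.out.println(entry.getKey() + "\t" + reduce(entry.getValue()));
        }
    }
}
```

When a combiner is enabled, the values reaching the reducer may already be partial sums rather than individual 1s, which is why the reducer adds the values instead of counting them.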

5. WordCountCombiner code

package com.example;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

public class WordCountCombiner extends Reducer<Text, IntWritable, Text, IntWritable> {

    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Reducer<Text, IntWritable, Text, IntWritable>.Context context) throws IOException, InterruptedException {
        // Local aggregation: pre-sum the counts on the map side
        int count = 0;
        for (IntWritable v : values) {
            count += v.get();
        }
        context.write(key, new IntWritable(count));
    }
}
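
A combiner is safe for word count because addition is associative and commutative: summing partial sums gives the same total as summing the raw 1s. That matters because Hadoop may run the combiner zero, one, or several times, so the final result must not depend on it. The sketch below checks this with plain Java. The class name `CombinerSketch`, the helper `sum`, and the two map tasks are hypothetical.

```java
import java.util.Arrays;
import java.util.List;

// Plain-Java check that local pre-summing does not change the final count.
class CombinerSketch {

    // Shared by the combiner and reducer stand-ins: add up the values.
    static int sum(List<Integer> values) {
        int total = 0;
        for (int v : values) {
            total += v;
        }
        return total;
    }

    public static void main(String[] args) {
        // Counts for the word "hello" emitted by two hypothetical map tasks.
        List<Integer> fromMapTask1 = Arrays.asList(1, 1, 1);
        List<Integer> fromMapTask2 = Arrays.asList(1, 1);

        // Without a combiner, the reducer receives all five 1s.
        int withoutCombiner = sum(Arrays.asList(1, 1, 1, 1, 1));

        // With a combiner, each map task pre-sums locally and the reducer receives 3 and 2.
        int withCombiner = sum(Arrays.asList(sum(fromMapTask1), sum(fromMapTask2)));

        System.out.println(withoutCombiner + " " + withCombiner); // prints 5 5
    }
}
```

The same reasoning would not hold for an operation such as an average, where combining partial results changes the answer.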

6. WordCountDriver code

package com.example;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.GenericOptionsParser;

import java.io.IOException;

public class WordCountDriver {

    public static void main(String[] args) throws IOException, InterruptedException, ClassNotFoundException {
        // Set the local Hadoop home directory here if it is not already configured
        //System.setProperty("hadoop.home.dir", "D:/hadoop/hadoop-3.2.1");

        // The Job wraps all settings for this MR run
        Configuration conf = new Configuration();

        // Parse the command-line arguments, separating generic Hadoop options from the job's own
        String[] otherArgs = (new GenericOptionsParser(conf, args)).getRemainingArgs();
        if (otherArgs.length < 2) {
            System.err.println("Usage: wordcount <in> [<in>...] <out>");
            System.exit(2);
        }

        // Set the MR execution mode; "local" means local mode, and this line may be omitted
        conf.set("mapreduce.framework.name", "local");
        Job wcjob = Job.getInstance(conf);

        // Specify the main class of the MR job jar
        wcjob.setJarByClass(WordCountDriver.class);

        // Specify the Mapper and Reducer classes for this job
        wcjob.setMapperClass(WordCountMapper.class);
        wcjob.setReducerClass(WordCountReducer.class);

        // The Combiner pre-aggregates map output; it is optional here
        wcjob.setCombinerClass(WordCountCombiner.class);

        // Set the key and value types of the Mapper output
        wcjob.setMapOutputKeyClass(Text.class);
        wcjob.setMapOutputValueClass(IntWritable.class);

        // Set the key and value types of the Reducer output
        wcjob.setOutputKeyClass(Text.class);
        wcjob.setOutputValueClass(IntWritable.class);

        // Every argument except the last is an input path, matching the usage message
        for (int i = 0; i < otherArgs.length - 1; i++) {
            FileInputFormat.addInputPath(wcjob, new Path(otherArgs[i]));
        }
        // The last argument is the output path
        FileOutputFormat.setOutputPath(wcjob, new Path(otherArgs[otherArgs.length - 1]));

        // Submit the job, print its progress, and wait for it to finish
        boolean res = wcjob.waitForCompletion(true);
        System.exit(res ? 0 : 1);
    }
}

