Offline Deployment of a Three-Node ClickHouse Cluster on Kylin OS (银河麒麟)

在国产化环境下部署clickhouse集群,个人建议先查看cpu的内核信息,可能有的人会说,

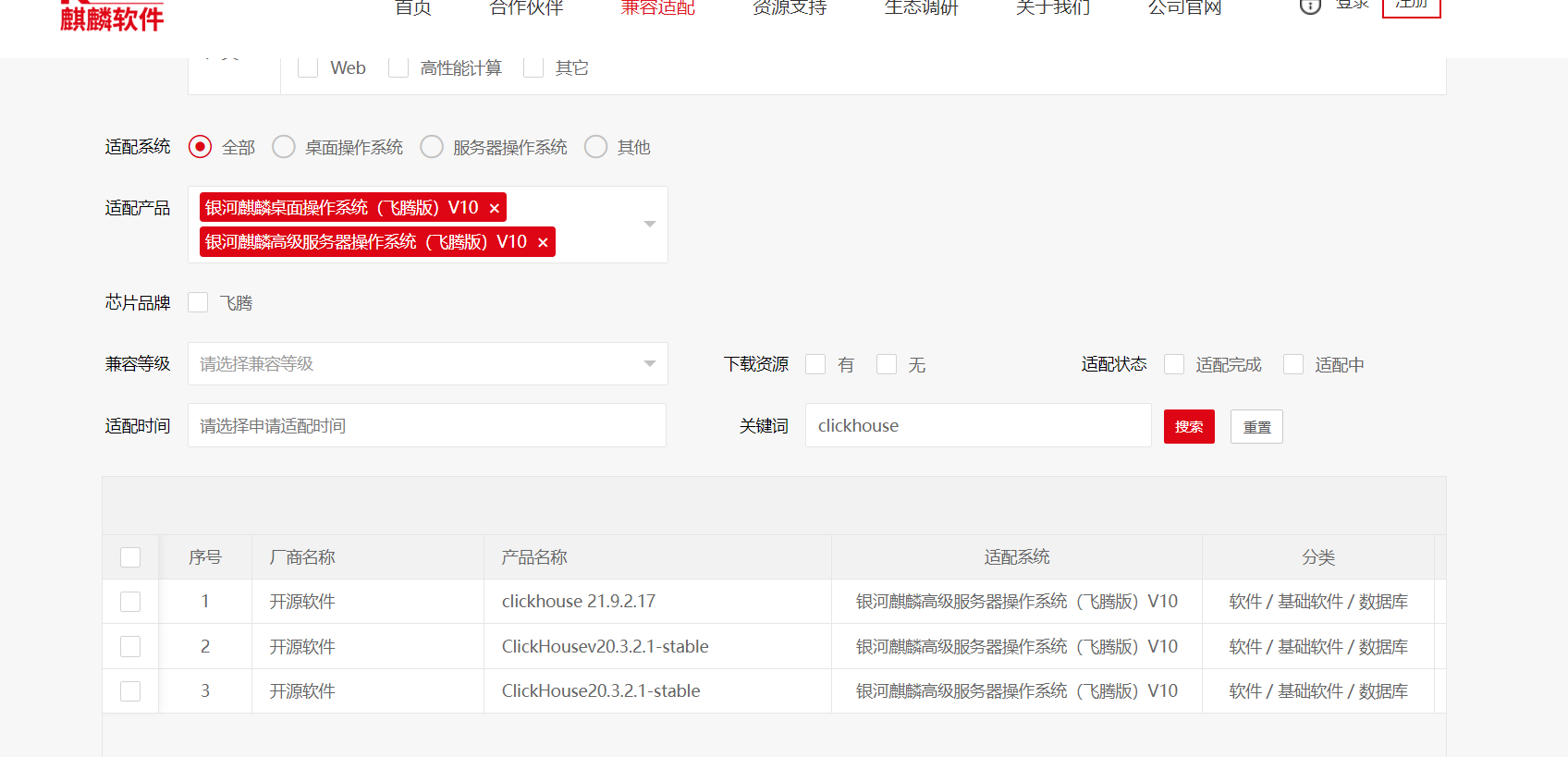

去银河麒麟的官网查看适配的版本信息,实践证明,官网的未必就是真的正确

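For example, this is how the Kylin website describes it.

When I installed that version, it failed with an error saying the binary file is not supported, so I do not recommend installing the version the website suggests. Compatibility on domestic platforms is still far from complete.

The rest of this post walks through deploying a three-node ClickHouse cluster in such an environment.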

1. Check the CPU architecture

cat /proc/cpuinfo

The output shows an ARM-based CPU, so the ClickHouse packages we download must also be the ARM builds.

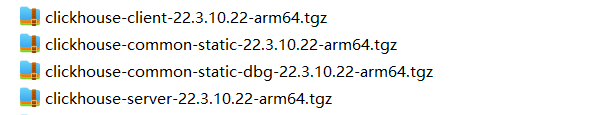

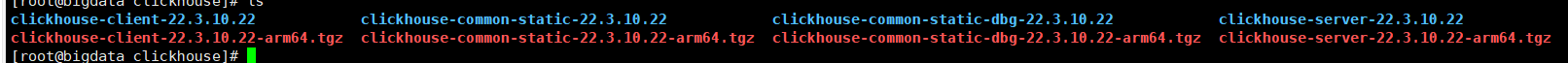
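The same check can be scripted with `uname -m`, which prints the machine architecture directly. This is only a minimal sketch: the helper name `pkg_arch` is hypothetical, and the `arm64`/`amd64` labels are an assumption about how the builds are named, so compare them against the actual file names in the download directory.

```shell
#!/bin/sh
# Map the kernel's machine name to a package architecture label.
# The labels (arm64/amd64) are assumed, not taken from this article.
pkg_arch() {
    case "$1" in
        aarch64|arm64) echo "arm64" ;;
        x86_64|amd64)  echo "amd64" ;;
        *)             echo "unknown" ;;
    esac
}

# Report the architecture of the current machine.
echo "machine: $(uname -m) -> package arch: $(pkg_arch "$(uname -m)")"
```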

2. Download the ClickHouse packages. They are available here: https://packages.clickhouse.com/tgz/stable/

These are the packages I downloaded locally.

3. Raise the Linux file and process limits (on all three servers)

vim /etc/security/limits.conf

Add the following lines:

* soft nofile 65536

* hard nofile 65536

* soft nproc 131072

* hard nproc 131072
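Entries in limits.conf are applied when a session logs in, so the new values only show up in a fresh login. A small sketch for checking them afterwards with bash's `ulimit` builtin follows; the helper names `limit_status` and `report_limit` are hypothetical.

```shell
#!/bin/bash
# Print "ok" when the actual limit ($1) meets the required minimum ($2).
# A value of "unlimited" always satisfies the requirement.
limit_status() {
    if [ "$1" = "unlimited" ] || [ "$1" -ge "$2" ]; then
        echo "ok"
    else
        echo "low"
    fi
}

# Show the current soft limit for one ulimit flag and compare it.
report_limit() {
    current=$(ulimit "-$1")
    echo "ulimit -$1 = $current ($(limit_status "$current" "$2"), want >= $2)"
}

report_limit n 65536    # max open files (nofile)
report_limit u 131072   # max user processes (nproc)
```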

4. Disable the firewall (on all three servers)

systemctl status firewalld.service   # check the firewall status

systemctl stop firewalld.service     # stop the firewall now

systemctl disable firewalld.service  # keep it from starting at boot

5. Set up passwordless SSH

On every server, edit the hosts file:

vim /etc/hosts

Add the following entries:

176.28.40.25 bigdata.exchange01

176.28.40.26 bigdata.exchange02

176.28.40.27 bigdata.exchange03

每台服务器都进行以下操作:

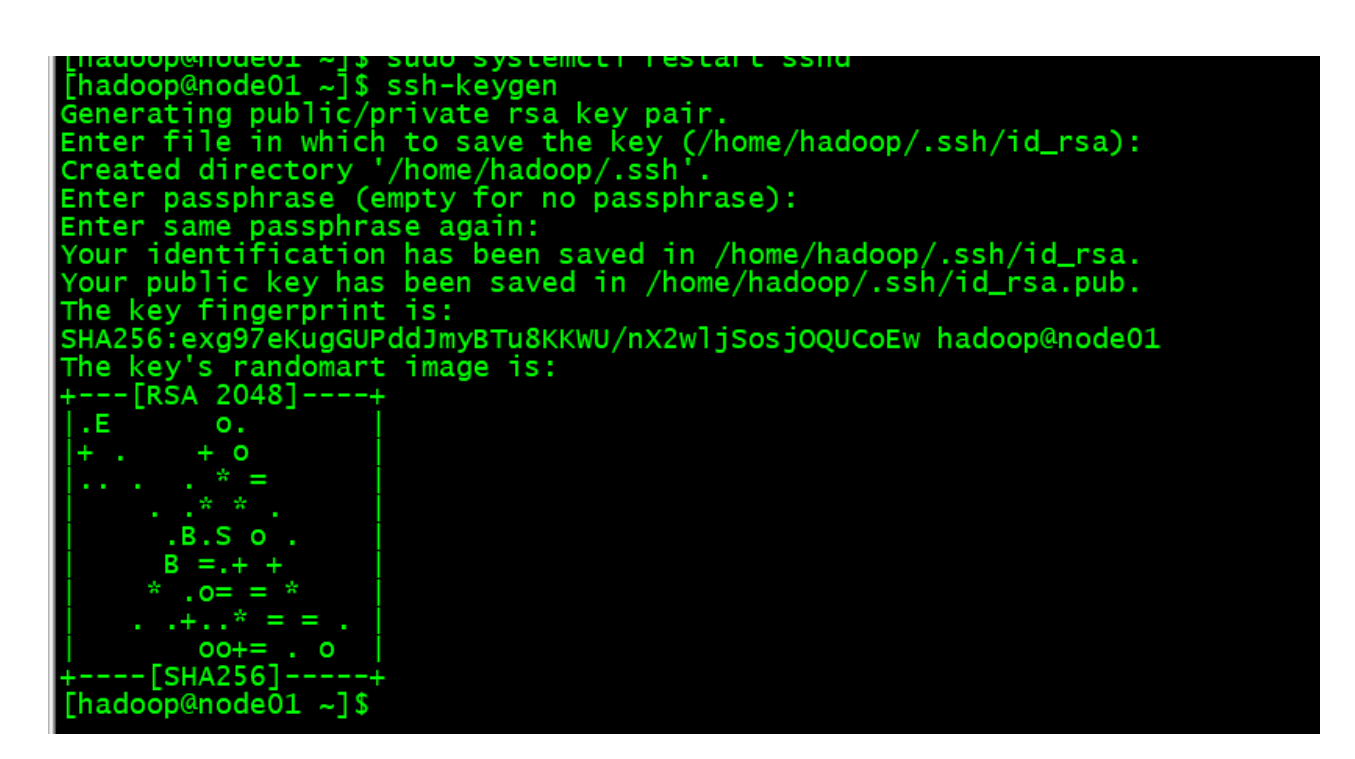

ssh-keygen cd .ssh cat id_rsa.pub >> authorized_keys chmod 700 ~/.ssh chmod 600 ~/.ssh/authorized_keys

以其中一台服务器为例

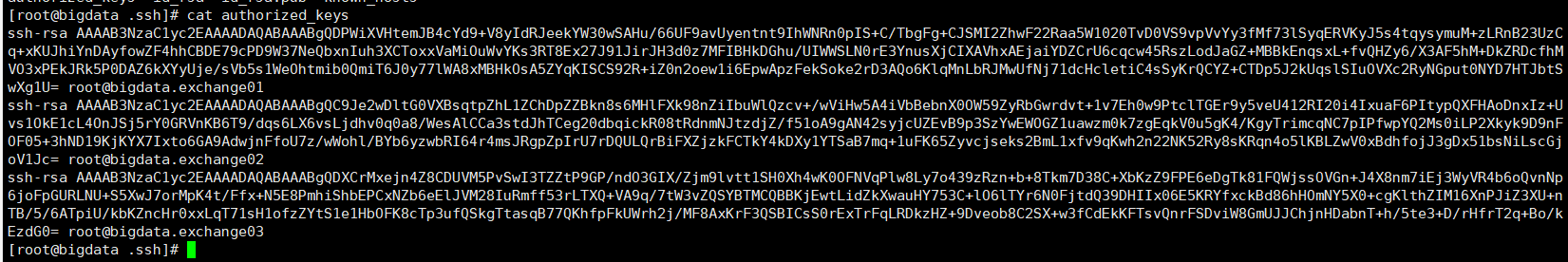

这个时候每台服务器就会生成自己的公钥,如果需要能相互之间免密访问,就需要活得别人的公钥,我这里用简单粗暴的方法,就是把别的机器的公钥内容都复制到所在服务器的

authorized_keys 文件里面

好比这样,每台服务器里面的 authorized_keys 文件里面都存放着另外两台机器的公钥,第一台服务器就把第二第三台的公钥复制过来,第二台服务器就把第一第三台台服务器的公钥

复制过来,同样的道理,第三台就把第一第二的公钥复制过来,authorized_keys 文件所在目录/root/.ssh
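Pasting keys by hand works. `ssh-copy-id`, which ships with OpenSSH, appends a public key to the remote authorized_keys file and is a common alternative. The sketch below only prints the commands a node would run; it assumes the root user and the hostnames added to /etc/hosts earlier in this step, and the helper name `peer_copy_cmds` is hypothetical. Each printed command asks for the remote password once when executed.

```shell
#!/bin/sh
# Hostnames as added to /etc/hosts in this step.
NODES="bigdata.exchange01 bigdata.exchange02 bigdata.exchange03"

# Print one ssh-copy-id command per peer, skipping the local node ($1).
peer_copy_cmds() {
    self=$1
    for node in $NODES; do
        if [ "$node" != "$self" ]; then
            echo "ssh-copy-id -i ~/.ssh/id_rsa.pub root@$node"
        fi
    done
}

# Commands for the first server; review them, then pipe to sh to run them.
peer_copy_cmds "bigdata.exchange01"
```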

6. Install ZooKeeper. The package can be downloaded from the official site, so I won't go into detail here.

Upload the package and extract it (on all three servers):

tar -zxf zookeeper-3.4.5.tar.gz

In ZooKeeper's conf directory, copy zoo_sample.cfg to zoo.cfg.

Edit the configuration file:

vim zoo.cfg

tickTime=10000

initLimit=10

syncLimit=5

dataDir=/opt/softwares/zookeeper/data

dataLogDir=/opt/softwares/zookeeper/logs

autopurge.purgeInterval=0

globalOutstandingLimit=200

clientPort=2181

server.1=176.28.40.25:2888:3888

server.2=176.28.40.26:2888:3888

server.3=176.28.40.27:2888:3888

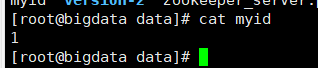

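Next, on each server create a file named myid in the dataDir directory (/opt/softwares/zookeeper/data). Write 1 into it on the first server; the other two servers get 2 and 3. The number must match that server's server.N entry in zoo.cfg.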

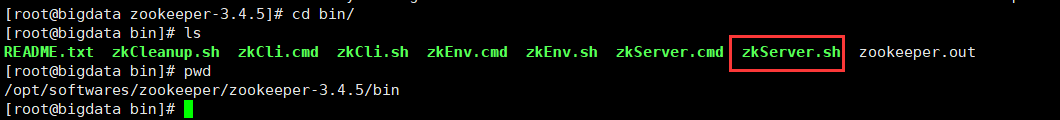
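Because the myid value has to agree with the server.N lines in zoo.cfg, it can be derived from that file instead of typed by hand. A minimal sketch, assuming zoo.cfg has one setting per line and lives in /opt/softwares/zookeeper/conf (that conf path is an assumption, as is the helper name `myid_for`):

```shell
#!/bin/sh
# Print the N of the "server.N=<ip>:..." line in a zoo.cfg ($2) for an IP ($1).
myid_for() {
    ip_re=$(printf '%s' "$1" | sed 's/\./\\./g')   # escape dots for the regex
    sed -n "s/^server\.\([0-9][0-9]*\)=${ip_re}:.*/\1/p" "$2"
}

# Example for the first server; change the IP on the other two.
CFG=/opt/softwares/zookeeper/conf/zoo.cfg     # assumed location of zoo.cfg
DATA_DIR=/opt/softwares/zookeeper/data        # dataDir from zoo.cfg
if [ -f "$CFG" ]; then
    myid_for "176.28.40.25" "$CFG" > "$DATA_DIR/myid"
fi
```

The write is skipped when the config file is not found, so running the snippet on the wrong machine does nothing.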

Start ZooKeeper on all three servers with the zkServer.sh script:

Start: ./zkServer.sh start

Check status: ./zkServer.sh status

7. Install ClickHouse (on all three servers)

Upload the packages to all three servers and extract them:

tar -zxf <archive name>

Install in the following order (the same on all three servers):

tar -xzvf clickhouse-common-static-22.3.10.22.tgz   # the compiled ClickHouse binary

clickhouse-common-static-22.3.10.22/install/doinst.sh

tar -xzvf clickhouse-common-static-dbg-22.3.10.22.tgz   # the ClickHouse binary with debug information

clickhouse-common-static-dbg-22.3.10.22/install/doinst.sh

tar -xzvf clickhouse-server-22.3.10.22.tgz   # creates the clickhouse-server symlink and installs the default server configuration

clickhouse-server-22.3.10.22/install/doinst.sh

tar -xzvf clickhouse-client-22.3.10.22.tgz   # creates the clickhouse-client symlink and installs the client configuration files

clickhouse-client-22.3.10.22/install/doinst.sh

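Note that while running clickhouse-server-22.3.10.22/install/doinst.sh you will be prompted to set a password for the default user. Remember what you type, because you only get one chance to enter it. I set the password to clickhouse.

The installation log looks like this: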

    ClickHouse binary is already located at /usr/bin/clickhouse
    Symlink /usr/bin/clickhouse-extract-from-config already exists but it points to /opt/softwares/clickhouse/clickhouse-server-22.3.10.22/install/clickhouse. Will replace the old symlink to /usr/bin/clickhouse.
    Creating symlink /usr/bin/clickhouse-extract-from-config to /usr/bin/clickhouse.
    Creating clickhouse group if it does not exist.
    groupadd -r clickhouse
    groupadd:“clickhouse”组已存在
    Creating clickhouse user if it does not exist.
    useradd -r --shell /bin/false --home-dir /nonexistent -g clickhouse clickhouse
    useradd:用户“clickhouse”已存在
    Will set ulimits for clickhouse user in /etc/security/limits.d/clickhouse.conf.
    Config file /etc/clickhouse-server/config.xml already exists, will keep it and extract path info from it.
    /etc/clickhouse-server/config.xml has /var/lib/clickhouse as data path.
    /etc/clickhouse-server/config.xml has /var/log/clickhouse-server/ as log path.
    Users config file /etc/clickhouse-server/users.xml already exists, will keep it and extract users info from it.
    Log directory /var/log/clickhouse-server/ already exists.
    Data directory /var/lib/clickhouse already exists.
    Pid directory /var/run/clickhouse-server already exists.
    chown -R clickhouse:clickhouse '/var/log/clickhouse-server/'
    chown -R clickhouse:clickhouse '/var/run/clickhouse-server'
    chown clickhouse:clickhouse '/var/lib/clickhouse'
    groupadd -r clickhouse-bridge
    groupadd:“clickhouse-bridge”组已存在
    useradd -r --shell /bin/false --home-dir /nonexistent -g clickhouse-bridge clickhouse-bridge
    useradd:用户“clickhouse-bridge”已存在
    chown -R clickhouse-bridge:clickhouse-bridge '/usr/bin/clickhouse-odbc-bridge'
    chown -R clickhouse-bridge:clickhouse-bridge '/usr/bin/clickhouse-library-bridge'
    Password for default user is already specified. To remind or reset, see /etc/clickhouse-server/users.xml and /etc/clickhouse-server/users.d.
    Setting capabilities for clickhouse binary. This is optional.
    Cannot set 'net_admin' or 'ipc_lock' or 'sys_nice' capability for clickhouse binary. This is optional. Taskstats accounting will be disabled. To enable taskstats accounting you may add the required capability later manually.
    Allow server to accept connections from the network (default is localhost only), [y/N]: y
    The choice is saved in file /etc/clickhouse-server/config.d/listen.xml.
    chown -R clickhouse:clickhouse '/etc/clickhouse-server'
    ClickHouse has been successfully installed.
    Start clickhouse-server with:
    sudo clickhouse start
    Start clickhouse-client with:
    clickhouse-client --password

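If you fumbled the prompt during installation and no longer know the password, here is a trick: go to the directory shown below, delete the users.xml file, and reinstall clickhouse-server. You will then be prompted to enter a new password.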

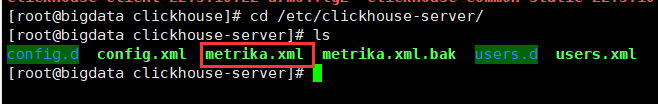

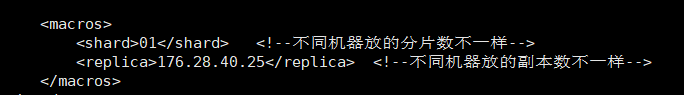

Configure the metrika.xml file:

    [root@bigdata clickhouse-server]# cat metrika.xml
    <yandex>
        <clickhouse_remote_servers>
            <gmall_cluster> <!-- cluster name -->
                <shard> <!-- first shard of the cluster -->
                    <internal_replication>true</internal_replication>
                    <!-- first replica of this shard -->
                    <replica>
                        <host>176.28.40.25</host>
                        <port>9000</port>
                    </replica>
                </shard>
                <shard> <!-- second shard of the cluster -->
                    <internal_replication>true</internal_replication>
                    <replica> <!-- first replica of this shard -->
                        <host>176.28.40.26</host>
                        <port>9000</port>
                    </replica>
                </shard>
                <shard> <!-- third shard of the cluster -->
                    <internal_replication>true</internal_replication>
                    <replica> <!-- first replica of this shard -->
                        <host>176.28.40.27</host>
                        <port>9000</port>
                    </replica>
                </shard>
            </gmall_cluster>
        </clickhouse_remote_servers>
        <zookeeper>
            <node>
                <host>bigdata.exchange01</host>
                <port>2181</port>
            </node>
            <node>
                <host>bigdata.exchange02</host>
                <port>2181</port>
            </node>
            <node>
                <host>bigdata.exchange03</host>
                <port>2181</port>
            </node>
        </zookeeper>
        <macros>
            <shard>01</shard> <!-- the shard number differs on each machine -->
            <replica>176.28.40.25</replica> <!-- the replica name differs on each machine -->
        </macros>
    </yandex>

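Copy metrika.xml to the other two servers in the same way, and on each one change the values in the <macros> tag to that server's own shard number and IP address.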

Edit the config.xml file, adding or changing the following elements:

    <listen_host>::</listen_host>
    <include_from>/etc/clickhouse-server/metrika.xml</include_from>
    <zookeeper>
        <node>
            <host>176.28.40.25</host>
            <port>2181</port>
        </node>
        <node>
            <host>176.28.40.26</host>
            <port>2181</port>
        </node>
        <node>
            <host>176.28.40.27</host>
            <port>2181</port>
        </node>
    </zookeeper>
    <remote_servers>
        <!-- The cluster name is up to you; here it is tj_dev -->
        <tj_dev>
            <shard>
                <!-- replica -->
                <replica>
                    <host>176.28.40.25</host>
                    <port>9000</port>
                    <user>default</user>
                    <password>clickhouse</password>
                </replica>
            </shard>
            <shard>
                <replica>
                    <host>176.28.40.26</host>
                    <port>9000</port>
                    <user>default</user>
                    <password>clickhouse</password>
                </replica>
            </shard>
            <shard>
                <replica>
                    <host>176.28.40.27</host>
                    <port>9000</port>
                    <user>default</user>
                    <password>clickhouse</password>
                </replica>
            </shard>
        </tj_dev>
    </remote_servers>
    <!-- On the other servers, change this to the server's own IP and use shard numbers 02 and 03 -->
    <macros>
        <shard>01</shard>
        <replica>176.28.40.25</replica>
    </macros>

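Once that is done, copy the configuration file to the other two servers (scp works), then adjust the <macros> values on each of them. The configuration is now essentially complete.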
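Since only the macros differ between servers, one option is to keep config.xml identical everywhere and generate the per-node part separately. The sketch below prints such a fragment. It assumes the `<macros>` block is moved out of config.xml into a drop-in file under /etc/clickhouse-server/config.d/ (the directory where the installer saved listen.xml in the log above); the file name macros.xml and the helper name `macros_xml` are hypothetical.

```shell
#!/bin/sh
# Print a macros fragment for node number $1 (1..3) with address $2.
# The <yandex> root element matches the config files in this article.
macros_xml() {
    shard=$(printf '%02d' "$1")   # 1 -> 01, 2 -> 02, 3 -> 03
    cat <<EOF
<yandex>
    <macros>
        <shard>$shard</shard>
        <replica>$2</replica>
    </macros>
</yandex>
EOF
}

# Fragment for the second server. On that host, redirect the output to
# /etc/clickhouse-server/config.d/macros.xml (assumed file name).
macros_xml 2 176.28.40.26
```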

8. Start the ClickHouse cluster

Run the start command on every server:

sudo clickhouse start

Check the ClickHouse status with:

sudo clickhouse status

Log in to ClickHouse; here we log in as the default user:

clickhouse-client -u default --password clickhouse

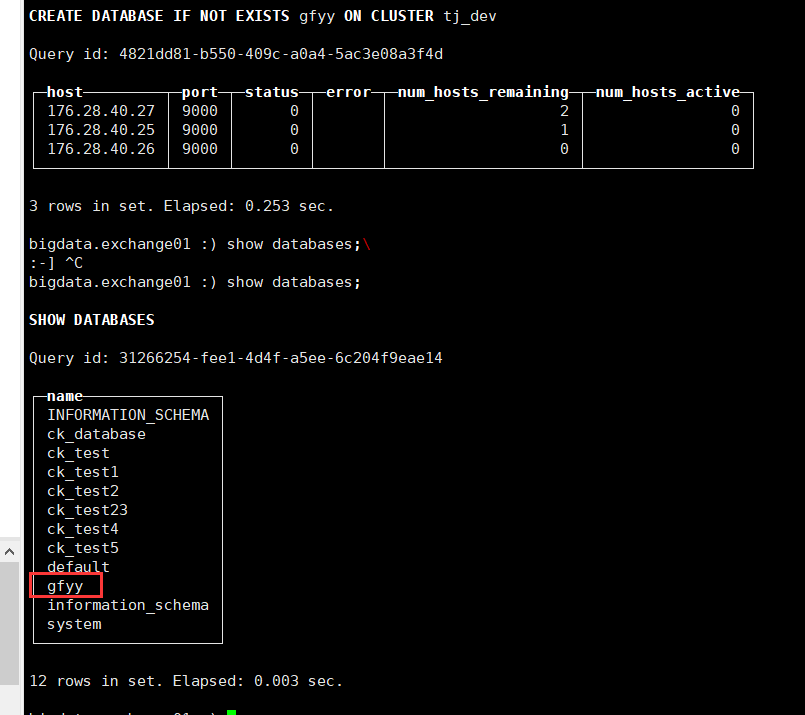

One thing to note about ClickHouse: if a database or table should exist on all three servers, specify the cluster when creating it.

Create a database on the cluster:

CREATE DATABASE IF NOT EXISTS gfyy ON CLUSTER tj_dev;

Create a table in that database:

    USE gfyy;

    CREATE TABLE IF NOT EXISTS exp_person_info ON CLUSTER tj_dev
    (
        PERSON_NO Int32,
        PERSON_NM String,
        ID_NUMBER String,
        SEX String,
        BIRTH_DATE Date,
        DRIVER_LCS_NUMBER String,
        PASSPORT_NO String,
        HVPS_NO String,
        VEHICLE_NO String,
        PERMIT_NO String,
        TEL_CO String,
        ORIGIN String,
        HOME_ADDRESS String,
        ETPS_SCCD String,
        RECORD_TIME Date,
        RECORD_EXP_TIME Date,
        RFID_NO String,
        MASTER_CUSCD String,
        AREA_CODE String,
        DCL_TYPECD String
    )
    ENGINE = MergeTree()
    ORDER BY PERSON_NO
    PARTITION BY PERSON_NO
    PRIMARY KEY PERSON_NO;

Use the following query to view the cluster information and confirm that every node is running normally:

select * from system.clusters
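To run the same check against every node without logging in to each one, the query can be passed to clickhouse-client on the command line. The sketch below only prints the commands; it assumes the tj_dev cluster name, the default user, and the password chosen in step 7, and the helper name `check_cmd` is hypothetical.

```shell
#!/bin/sh
NODES="176.28.40.25 176.28.40.26 176.28.40.27"
QUERY="SELECT cluster, shard_num, replica_num, host_address FROM system.clusters WHERE cluster = 'tj_dev'"

# Print the clickhouse-client command that runs the query on host $1.
check_cmd() {
    printf 'clickhouse-client --host %s --user default --password clickhouse --query "%s"\n' "$1" "$QUERY"
}

# One command per node; review the output, then pipe it to sh to run it.
for node in $NODES; do
    check_cmd "$node"
done
```

If every node is configured consistently, each one should list the same three tj_dev shards.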

