HBase-2.2.4 (Snappy): Benchmarking Steps with YCSB

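As of 2020-08-03, HBase 2.2.4 is the latest official stable release. We have enabled Snappy compression on it but have not yet rolled it out to production, so the test environment has to validate both the compression effect and the read/write performance first.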

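HBase is benchmarked here with YCSB 0.17.0. The tool needs little introduction: it was open-sourced by Yahoo specifically for benchmarking NoSQL databases.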

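Download and unpack ycsb-0.17.0. The package is about 670 MB because it bundles the bindings for every NoSQL database the tool supports. If you do not need the others, you can download the source and build only the binding you want, which gives a much smaller package.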

hadoop@hadoop1:~$ wget https://github.com/brianfrankcooper/YCSB/releases/download/0.17.0/ycsb-0.17.0.tar.gz

hadoop@hadoop1:~$ tar zxvf ycsb-0.17.0.tar.gz && cd ycsb-0.17.0

The directory looks like this:

hadoop@hadoop1:~/ycsb-0.17.0$ ll
total 232
drwxrwxr-x 54 hadoop hadoop  4096 Aug  3 05:49 ./
drwxr-xr-x 23 hadoop hadoop  4096 Aug  3 05:48 ../
drwxrwxr-x  4 hadoop hadoop  4096 Aug  3 05:48 accumulo1.6-binding/
drwxrwxr-x  4 hadoop hadoop  4096 Aug  3 05:48 accumulo1.7-binding/
drwxrwxr-x  4 hadoop hadoop  4096 Aug  3 05:48 accumulo1.8-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:48 aerospike-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:48 arangodb-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:48 asynchbase-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:48 azurecosmos-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:48 azuretablestorage-binding/
drwxrwxr-x  2 hadoop hadoop  4096 Aug  3 05:48 bin/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:48 cassandra-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:48 cloudspanner-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:48 couchbase2-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:48 couchbase-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:48 crail-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:48 dynamodb-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:48 elasticsearch5-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:48 elasticsearch-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:48 foundationdb-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:48 geode-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:48 googlebigtable-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:48 googledatastore-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:48 griddb-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:48 hbase098-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:48 hbase10-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:48 hbase12-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:49 hbase14-binding/
drwxrwxr-x  4 hadoop hadoop  4096 Aug  3 05:50 hbase20-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:49 hypertable-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:49 ignite-binding/
drwxrwxr-x  4 hadoop hadoop  4096 Aug  3 05:49 infinispan-binding/
drwxrwxr-x  4 hadoop hadoop  4096 Aug  3 05:49 jdbc-binding/
drwxrwxr-x  4 hadoop hadoop  4096 Aug  3 05:49 kudu-binding/
drwxrwxr-x  2 hadoop hadoop  4096 Aug  3 05:49 lib/
-rw-r--r--  1 hadoop hadoop 11358 May 19  2018 LICENSE.txt
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:49 maprdb-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:49 maprjsondb-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:49 memcached-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:49 mongodb-binding/
drwxrwxr-x  4 hadoop hadoop  4096 Aug  3 05:49 nosqldb-binding/
-rw-r--r--  1 hadoop hadoop   615 May 19  2018 NOTICE.txt
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:49 orientdb-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:49 postgrenosql-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:49 rados-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:49 redis-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:49 rest-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:49 riak-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:49 rocksdb-binding/
drwxrwxr-x  4 hadoop hadoop  4096 Aug  3 05:49 s3-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:49 solr6-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:49 solr-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:49 tablestore-binding/
drwxrwxr-x  4 hadoop hadoop  4096 Aug  3 05:49 tarantool-binding/
drwxrwxr-x  3 hadoop hadoop  4096 Aug  3 05:49 voltdb-binding/
drwxr-xr-x  2 hadoop hadoop  4096 Sep 25  2019 workloads/
hadoop@hadoop1:~/ycsb-0.17.0$

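The hbase20-binding/ directory in the listing above is the one the HBase benchmark reads its configuration from. By default it does not contain my cluster's configuration, so $HBASE_HOME/conf/hbase-site.xml has to be copied into it: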

hadoop@hadoop1:~/ycsb-0.17.0/hbase20-binding$ tree
.
├── conf (must be created manually)
│   └── hbase-site.xml (copied from the production configuration)
├── lib
│   ├── audience-annotations-0.5.0.jar
│   ├── commons-logging-1.1.3.jar
│   ├── findbugs-annotations-1.3.9-1.jar
│   ├── hbase10-binding-0.17.0.jar
│   ├── hbase20-binding-0.17.0.jar
│   ├── hbase-shaded-client-2.0.0.jar
│   ├── htrace-core4-4.1.0-incubating.jar
│   ├── log4j-1.2.17.jar
│   └── slf4j-api-1.7.25.jar
└── README.md

2 directories, 11 files
hadoop@hadoop1:~/ycsb-0.17.0/hbase20-binding$
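The copy step can be sketched as a small shell function. This is only an illustration: the function name `install_hbase_conf` and its two arguments are made up for this example, and it assumes the HBase home contains `conf/hbase-site.xml` as described above.

```shell
# Copy the cluster's hbase-site.xml into the hbase20 binding's conf/ directory.
# $1 = YCSB install directory, $2 = HBase home (both are assumptions of this sketch).
install_hbase_conf() {
  ycsb_dir=$1
  hbase_home=$2
  # conf/ does not exist in the unpacked package, so create it first.
  mkdir -p "$ycsb_dir/hbase20-binding/conf" &&
    cp "$hbase_home/conf/hbase-site.xml" "$ycsb_dir/hbase20-binding/conf/"
}

# Example: install_hbase_conf "$HOME/ycsb-0.17.0" "$HBASE_HOME"
```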

That completes the preparation. First, take a look at the command usage:

hadoop@hadoop1:~/ycsb-0.17.0$ ./bin/ycsb
usage: ./bin/ycsb command database [options]

Commands:
    load           Execute the load phase
    run            Execute the transaction phase
    shell          Interactive mode

Databases:
    ...
    hbase098       https://github.com/brianfrankcooper/YCSB/tree/master/hbase098
    hbase10        https://github.com/brianfrankcooper/YCSB/tree/master/hbase10
    hbase12        https://github.com/brianfrankcooper/YCSB/tree/master/hbase12
    hbase14        https://github.com/brianfrankcooper/YCSB/tree/master/hbase14
    hbase20        https://github.com/brianfrankcooper/YCSB/tree/master/hbase20
    ...

Options:
    -P file        Specify workload file
    -cp path       Additional Java classpath entries
    -jvm-args args Additional arguments to the JVM
    -p key=value   Override workload property
    -s             Print status to stderr
    -target n      Target ops/sec (default: unthrottled)
    -threads n     Number of client threads (default: 1)

Workload Files:
    There are various predefined workloads under workloads/ directory.
    See https://github.com/brianfrankcooper/YCSB/wiki/Core-Properties
    for the list of workload properties.
ycsb: error: too few arguments
hadoop@hadoop1:~/ycsb-0.17.0$

You also need to choose a suitable workload. The workloads directory contains the following:

hadoop@hadoop1:~/ycsb-0.17.0$ tree workloads/
workloads/
├── tsworkloada
├── tsworkload_template
├── workloada          // update heavy: 50% reads, 50% writes
├── workloadb          // read mostly: 95% reads, 5% writes
├── workloadc          // read only: 100% reads
├── workloadd          // read latest: inserts new records; the newer a record, the more likely it is to be read
├── workloade          // short ranges: queries small ranges of records instead of single records
├── workloadf          // read-modify-write: reads a record, modifies it, then writes it back
└── workload_template  // benchmark configuration (discussed at the end)

0 directories, 9 files
hadoop@hadoop-backup-01:~/ycsb-0.17.0$

Meaning of the command parameters:

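hbase20: use the database binding for HBase 2.x
-P: path to the workload file (Workload A is used here)
-p: set a single property, overriding the value from the workload file
table=, columnfamily=: the HBase table name and column family
recordcount=, operationcount=: the number of records and the number of operations
-threads: the number of client threads
-s: print status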
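The table named by `table=` and its column family must already exist in HBase before data is loaded. A minimal sketch of creating the test tables follows. It only prints HBase shell `create` statements; the helper name `ycsb_table_ddl` and the table name `snappy` are hypothetical, while `nosnappy` and the `data` family are the names used in this post.

```shell
# Print an HBase shell `create` statement for a YCSB test table.
# $1 = table name, $2 = column family, $3 = compression (NONE or SNAPPY).
ycsb_table_ddl() {
  printf "create '%s', {NAME => '%s', COMPRESSION => '%s'}\n" "$1" "$2" "$3"
}

# Example (needs a running cluster and `hbase` on PATH):
#   ycsb_table_ddl nosnappy data NONE   | hbase shell -n
#   ycsb_table_ddl snappy   data SNAPPY | hbase shell -n   # hypothetical table name
```

Printing the statement first makes it easy to review before piping it into the HBase shell.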

Load data into the specified table:

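hadoop@hadoop1:~/ycsb-0.17.0$ bin/ycsb load hbase20 -P workloads/workloada -p threads=10 -p table=nosnappy -p columnfamily=data -p recordcount=100000 -s

Note that `-p threads=10` does not set the thread count: YCSB takes it from the `-threads` option (or the `threadcount` property). This run therefore appears to have used the default single client thread, which matches the throughput below (about 744 ops/sec at an average insert latency of about 1.3 ms). Use `-threads 10` to load with 10 threads.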

...
Loading workload...
log4j:WARN No appenders could be found for logger (org.apache.htrace.core.Tracer).
log4j:WARN Please initialize the log4j system properly.
log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.
Starting test.
SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".
SLF4J: Defaulting to no-operation (NOP) logger implementation
SLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.
2020-08-03 06:53:01:837 0 sec: 0 operations; est completion in 0 second
DBWrapper: report latency for each error is false and specific error codes to track for latency are: []
2020-08-03 06:53:11:817 10 sec: 6648 operations; 664.8 current ops/sec; est completion in 2 minutes [INSERT: Count=6648, Max=69503, Min=805, Avg=1428.28, 90=2289, 99=3209, 99.9=6047, 99.99=14895]
...
(the final results follow)
[OVERALL], RunTime(ms), 134495                              # time taken to load the data
[OVERALL], Throughput(ops/sec), 743.5220640172497           # throughput; round to a whole number of records
[TOTAL_GCS_PS_Scavenge], Count, 23
[TOTAL_GC_TIME_PS_Scavenge], Time(ms), 34
[TOTAL_GC_TIME_%_PS_Scavenge], Time(%), 0.02527975017658649
[TOTAL_GCS_PS_MarkSweep], Count, 1
[TOTAL_GC_TIME_PS_MarkSweep], Time(ms), 19
[TOTAL_GC_TIME_%_PS_MarkSweep], Time(%), 0.014126919216327744
[TOTAL_GCs], Count, 24
[TOTAL_GC_TIME], Time(ms), 53
[TOTAL_GC_TIME_%], Time(%), 0.039406669392914234
[CLEANUP], Operations, 2
[CLEANUP], AverageLatency(us), 781.5
[CLEANUP], MinLatency(us), 5
[CLEANUP], MaxLatency(us), 1558
[CLEANUP], 95thPercentileLatency(us), 1558
[CLEANUP], 99thPercentileLatency(us), 1558
[INSERT], Operations, 100000                                # total number of insert operations
[INSERT], AverageLatency(us), 1336.92224                    # average latency per insert
[INSERT], MinLatency(us), 514                               # minimum latency over all inserts
[INSERT], MaxLatency(us), 672255                            # maximum latency over all inserts
[INSERT], 95thPercentileLatency(us), 2455                   # 95% of inserts finished within 2455 us
[INSERT], 99thPercentileLatency(us), 4399                   # 99% of inserts finished within 4399 us
[INSERT], Return=OK, 100000                                 # number of successful operations
hadoop@hadoop1:~/ycsb-0.17.0$
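When comparing several runs, it helps to pull individual numbers out of a saved summary. The following is a minimal sketch, assuming the benchmark's standard output was saved to a file and that summary lines have the `[SECTION], Metric, value` shape shown above; the function name `ycsb_metric` is made up for this example.

```shell
# Print the value of one metric from a saved YCSB summary.
# $1 = section (e.g. INSERT), $2 = metric name, $3 = log file.
ycsb_metric() {
  awk -F', ' -v sec="[$1]" -v name="$2" \
    '$1 == sec && $2 == name { print $3; exit }' "$3"
}

# Example: ycsb_metric OVERALL 'Throughput(ops/sec)' load_nosnappy.log
```

The overall throughput is simply the operation count divided by the run time: 100000 operations / 134.495 s is about 743.5 ops/sec, which matches the `[OVERALL]` line.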

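To run the benchmark itself, just replace load with run in the command above. Results are written to standard output by default; to keep them, append a redirect (>>) to a file at the end of the command.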
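As a sketch of that pattern, the function below runs one phase and appends the results to a log file. It assumes it is called from the YCSB directory and reuses the `data` column family and record count from the load command; the name `ycsb_phase` and the `YCSB_CMD` override are hypothetical helpers for this example, not part of YCSB.

```shell
# Run one YCSB phase against the hbase20 binding and append stdout to a log.
# $1 = phase (load or run), $2 = table name, $3 = log file; extra args pass through.
# YCSB_CMD is a hypothetical override: set YCSB_CMD=echo for a dry run
# that only records the command line instead of starting a benchmark.
ycsb_phase() {
  phase=$1; table=$2; log=$3
  shift 3
  "${YCSB_CMD:-bin/ycsb}" "$phase" hbase20 -P workloads/workloada \
    -threads 10 -p table="$table" -p columnfamily=data \
    -p recordcount=100000 -p operationcount=100000 -s "$@" >> "$log"
}

# Example: ycsb_phase run nosnappy run_nosnappy.log
```

Because `-s` prints status to stderr, the progress lines stay on the terminal while the summary goes to the log.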

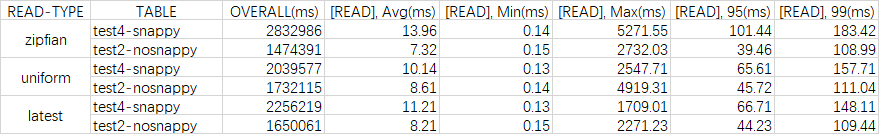

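That covers the basic benchmark run. The benchmark configuration file mentioned earlier is workloads/workload_template. It includes settings for time-unit conversion and for the request distribution type (in plain terms: whether the data you access should be uniformly distributed, follow Zipf's law, or favor the most recent records). I won't paste the file here; its comments are very detailed and, although they are in English, readable with a little effort. My results come from three fairly low-end Dell servers and are for reference only.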
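The request distribution can also be overridden per run with `-p requestdistribution=...`, so the three access patterns can be compared without editing the workload file. A minimal sketch, assuming it is run from the YCSB directory; the function name `sweep_distributions`, the `YCSB_CMD` dry-run override, and the log file names are hypothetical.

```shell
# Run the same workload once per request distribution, one log per run.
# $1 = table name, $2 = directory for the logs.
# YCSB_CMD is a hypothetical override (YCSB_CMD=echo gives a dry run).
sweep_distributions() {
  table=$1
  outdir=$2
  for dist in uniform zipfian latest; do
    "${YCSB_CMD:-bin/ycsb}" run hbase20 -P workloads/workloada \
      -p table="$table" -p columnfamily=data \
      -p requestdistribution="$dist" -s > "$outdir/run_${dist}.log"
  done
}

# Example: sweep_distributions nosnappy ./results
```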

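Benchmark results depend heavily on the hardware of the actual environment (memory frequency, number of CPU cores, and so on), so other people's numbers can only ever be "for reference". You have to run the benchmark yourself to reach a conclusion.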
