OpenStack Train High-Availability Deployment on VMware (Updated)

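In this article, ZeroTier is installed on each virtual machine to create a virtual NIC, so that VMs running in different VMware instances can communicate with each other. Look up the ZeroTier installation procedure yourself.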

Reference: ① OpenStack高可用集群部署方案(train版)—基础配置 (OpenStack HA cluster deployment, Train release: basic configuration), Jianshu (jianshu.com)

I. Node Planning

controller01

Configuration:

6 GB RAM, 4 CPUs, 30 GB disk, 1 NAT NIC, 1 ZeroTier virtual NIC

Network:

NAT:192.168.200.10

ZeroTier:192.168.100.10

controller02

Configuration:

6 GB RAM, 4 CPUs, 30 GB disk, 1 NAT NIC, 1 ZeroTier virtual NIC

Network:

NAT:192.168.200.11

ZeroTier:192.168.100.11

compute01

Configuration:

4 GB RAM, 4 CPUs, 30 GB disk, 1 NAT NIC, 1 ZeroTier virtual NIC

Network:

NAT:192.168.200.20

ZeroTier:192.168.100.20

compute02

Configuration:

4 GB RAM, 4 CPUs, 30 GB disk, 1 NAT NIC, 1 ZeroTier virtual NIC

Network:

NAT:192.168.200.21

ZeroTier:192.168.100.21

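High availability is provided by Pacemaker + HAProxy: Pacemaker handles resource management and the VIP (virtual IP), and HAProxy provides reverse proxying and load balancing.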

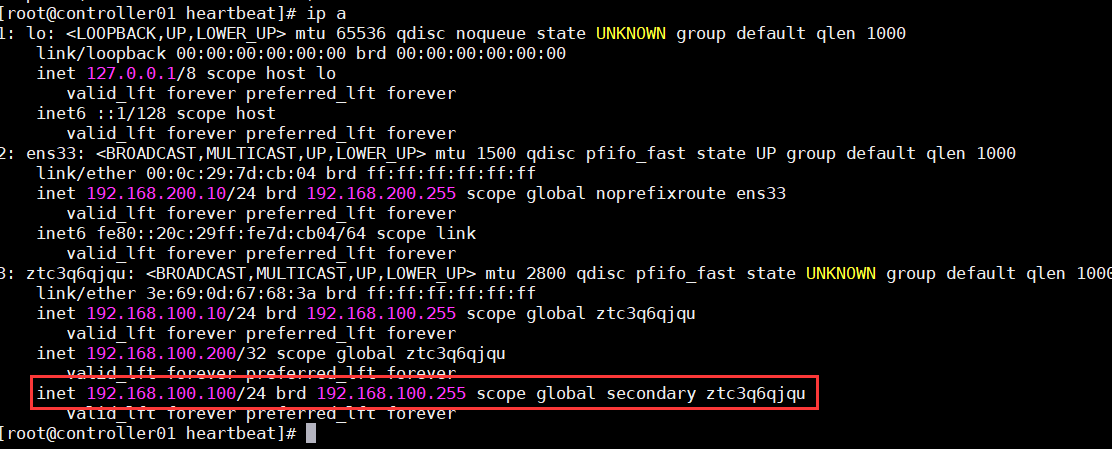

Pacemaker HA VIP: 192.168.100.100

| Node | controller01 | controller02 | compute01 | compute02 |
| --- | --- | --- | --- | --- |
| Components | mysql | mysql | libvirtd.service, openstack-nova-compute | libvirtd.service, openstack-nova-compute |
| | Keepalived | Keepalived | neutron-linuxbridge-agent, neutron-dhcp-agent, neutron-metadata-agent, neutron-l3-agent | neutron-linuxbridge-agent, neutron-dhcp-agent, neutron-metadata-agent, neutron-l3-agent |
| | RabbitMQ | RabbitMQ | | |
| | memcached | memcached | | |
| | Etcd | Etcd | | |
| | pacemaker | pacemaker | | |
| | haproxy | haproxy | | |
| | keystone | keystone | | |
| | glance | glance | | |
| | placement | placement | | |
| | openstack-nova-api, openstack-nova-scheduler, openstack-nova-conductor, openstack-nova-novncproxy | openstack-nova-api, openstack-nova-scheduler, openstack-nova-conductor, openstack-nova-novncproxy | | |
| | neutron-server | neutron-server | | |
| | dashboard | dashboard | | |

| Service | User | Password |
| --- | --- | --- |
| MySQL database | root | 000000 |
| | backup | backup |
| | keystone | KEYSTONE_DBPASS |
| | glance | GLANCE_DBPASS |
| | placement | PLACEMENT_DBPASS |
| | neutron | NEUTRON_DBPASS |
| Keystone | admin | admin |
| | keystone | keystone |
| | glance | glance |
| | placement | placement |
| | nova | nova |
| RabbitMQ | openstack | 000000 |
| Pacemaker web UI https://192.168.100.10:2224/ | hacluster | 000000 |
| HAProxy web UI http://192.168.100.100:1080/ | admin | admin |

II. Basic HA Environment Configuration

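1. Environment initialization

First configure the NAT NIC address on each of the four nodes and make sure the nodes can reach the Internet, then carry out the following steps.

#####All nodes#####

#Set the hostname (run the matching command on each node)

hostnamectl set-hostname controller01

hostnamectl set-hostname controller02

hostnamectl set-hostname compute01

hostnamectl set-hostname compute02

Disable the firewall and SELinux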

systemctl stop firewalld

systemctl disable firewalld

sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

setenforce 0

Install basic tools

yum install vim wget net-tools lsof -y

Install and configure ZeroTier

curl -s https://install.zerotier.com | sudo bash

zerotier-cli join a0cbf4b62a1c903e

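//Replace this with your own network ID (created on the ZeroTier website)

//After joining, approve the node in the ZeroTier network management console, otherwise it will not get an IP address

Configure a moon to improve speed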

mkdir /var/lib/zerotier-one/moons.d

cd /var/lib/zerotier-one/moons.d

wget --no-check-certificate https://baimafeima1.coding.net/p/linux-openstack-jiaoben/d/openstack-T/git/raw/master/000000986a8a957f.moon

systemctl restart zerotier-one.service

systemctl enable zerotier-one.service

Configure hosts

cat >> /etc/hosts <<EOF

192.168.100.10 controller01

192.168.100.11 controller02

192.168.100.20 compute01

192.168.100.21 compute02

EOF

Configure passwordless SSH between the nodes (later steps copy files with scp)
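The original lists this step without commands. A minimal sketch, assuming root logins and the four hostnames configured in /etc/hosts above. NODES and peer_nodes are hypothetical names introduced for illustration:

```shell
#!/usr/bin/env bash
# Node names from the planning section; override NODES if yours differ.
NODES="${NODES:-controller01 controller02 compute01 compute02}"

# Print every node except the given one (default: this host's short name).
peer_nodes() {
  local self="${1:-$(hostname -s)}" n
  for n in $NODES; do
    [ "$n" = "$self" ] || printf '%s\n' "$n"
  done
}

# Usage on a node (ssh-copy-id asks once for each peer's root password):
#   [ -f ~/.ssh/id_rsa ] || ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa
#   for n in $(peer_nodes); do ssh-copy-id "root@$n"; done
```

ssh-keygen and ssh-copy-id are the standard OpenSSH tools. Running the loop on controller01 is enough for the scp commands in this guide. Run it on other nodes as well if they also need to reach their peers.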

Set up time synchronization, with controller01 as the time server

On controller01:

yum install chrony -y

sed -i '3,6d' /etc/chrony.conf

sed -i '3a\server ntp3.aliyun.com iburst' /etc/chrony.conf

sed -i 's@#allow 192.168.0.0/16@allow all@' /etc/chrony.conf

sed -i 's/#local stratum 10/local stratum 10/' /etc/chrony.conf

systemctl enable chronyd.service

systemctl restart chronyd.service

On the other nodes:

yum install chrony -y

sed -i '3,6d' /etc/chrony.conf

sed -i '3a\server controller01 iburst' /etc/chrony.conf

systemctl restart chronyd.service

systemctl enable chronyd.service

chronyc sources -v

Kernel parameter tuning on the controller nodes: allow binding to non-local IPs so that a running HAProxy instance can bind to the VIP

modprobe br_netfilter

echo 'net.ipv4.ip_forward = 1' >>/etc/sysctl.conf

echo 'net.bridge.bridge-nf-call-iptables = 1' >>/etc/sysctl.conf

echo 'net.bridge.bridge-nf-call-ip6tables = 1' >>/etc/sysctl.conf

echo 'net.ipv4.ip_nonlocal_bind = 1' >>/etc/sysctl.conf
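Appending with echo >> adds duplicate lines whenever the step is re-run. The HAProxy section later appends two of these keys again. Below is a sketch that writes the same four keys to a drop-in file instead. The file names under /etc/sysctl.d/ and /etc/modules-load.d/, and the SYSCTL_FILE / MODULES_FILE overrides, are assumptions and are not part of the original guide:

```shell
#!/usr/bin/env bash
# Assumed drop-in paths; override SYSCTL_FILE / MODULES_FILE to write elsewhere.
write_sysctl_dropin() {
  local file="${SYSCTL_FILE:-/etc/sysctl.d/99-openstack-ha.conf}"
  # Overwrite (not append), so running this twice leaves one copy of each key.
  cat > "$file" <<'EOF'
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_nonlocal_bind = 1
EOF
}

# The bridge-nf-call keys only exist once br_netfilter is loaded; listing the
# module here makes systemd-modules-load load it again after a reboot.
write_modules_load() {
  local file="${MODULES_FILE:-/etc/modules-load.d/br_netfilter.conf}"
  printf 'br_netfilter\n' > "$file"
}

# Usage (as root):
#   modprobe br_netfilter && write_modules_load && write_sysctl_dropin && sysctl --system
```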

sysctl -p

Install the Train release packages

yum install centos-release-openstack-train -y

yum upgrade -y

yum clean all

yum makecache

yum install python-openstackclient -y

yum install openstack-utils -y

openstack-selinux (not installed for now)

yum install openstack-selinux -y

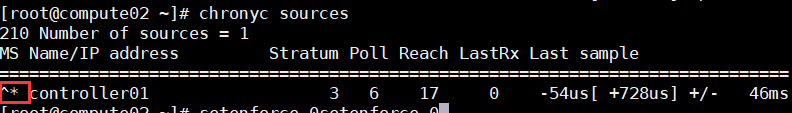

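In the chronyc sources -v output shown in the figure, an asterisk (*) marks the source in use, which means time synchronization succeeded.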

2. MariaDB dual-master HA configuration (MySQL-HA)

Dual master + Keepalived

2.1 Install the database

#####controller#####

Install the database on all controllers

yum install mariadb mariadb-server python2-PyMySQL -y

systemctl restart mariadb.service

systemctl enable mariadb.service

#Install some required build packages

yum -y install gcc gcc-c++ gcc-g77 ncurses-devel bison libaio-devel cmake libnl* libpopt* popt-static openssl-devel

2.2 Initialize MariaDB: set the database root password on all controller nodes

######All controllers#####

mysql_secure_installation

Enter current password for root (enter for none): #press Enter, no root password is set yet

Set root password? [Y/n] y

New password: #000000 in this guide

Re-enter new password:

Remove anonymous users? [Y/n] y

Disallow root login remotely? [Y/n] n

Remove test database and access to it? [Y/n] y

Reload privilege tables now? [Y/n] y

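2.3 Edit the MariaDB configuration file

Edit /etc/my.cnf on all controller nodes

######controller01######

Make sure /etc/my.cnf contains the following parameters (add them manually if they are missing), then restart the MariaDB service.

[mysqld]

log-bin=mysql-bin #enable the binary log

server-id=1 #server ID (must be different on the two nodes)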
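The replication fragment can also be generated per node. This sketch prints the two parameters above plus auto_increment_increment and auto_increment_offset. The original does not set those two. They are a common precaution for dual-master MariaDB, so that the two nodes never generate the same AUTO_INCREMENT value. The helper name mariadb_master_fragment is hypothetical. Using the server-id as the offset only works for IDs 1 and 2:

```shell
#!/usr/bin/env bash
# Print the [mysqld] replication fragment for one dual-master node.
# Argument: the node's server-id (1 on controller01, 2 on controller02).
mariadb_master_fragment() {
  local server_id="$1"
  cat <<EOF
[mysqld]
log-bin=mysql-bin
server-id=${server_id}
# Two writable masters: node 1 generates 1,3,5,... and node 2 generates 2,4,6,...
auto_increment_increment=2
auto_increment_offset=${server_id}
EOF
}

# Usage on controller01 (assumes the stock /etc/my.cnf includes /etc/my.cnf.d;
# the file name replication.cnf is arbitrary):
#   mariadb_master_fragment 1 > /etc/my.cnf.d/replication.cnf && systemctl restart mariadb
```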

systemctl restart mariadb #restart the database

mysql -uroot -p000000 #log in to the database

grant replication slave on *.* to 'backup'@'%' identified by 'backup';

flush privileges; #create a user for replication

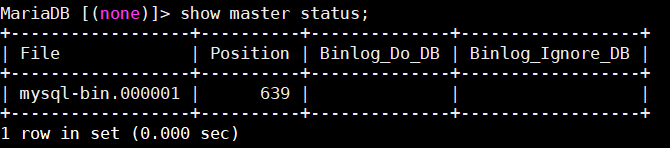

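show master status; #check the master status and note File and Position; they are needed when configuring controller02 to replicate from controller01

######controller02######

Make sure /etc/my.cnf contains the following parameters (add them manually if they are missing), then restart the MariaDB service.

[mysqld]

log-bin=mysql-bin #enable the binary log

server-id=2 #server ID (must be different on the two nodes)

systemctl restart mariadb #restart the database

mysql -uroot -p000000 #log in to the database

grant replication slave on *.* to 'backup'@'%' identified by 'backup';

flush privileges; #create a user for replication

show master status; #check controller02's master status; its File and Position are needed later when configuring controller01 to replicate from controller02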

change master to master_host='192.168.100.10',master_user='backup',master_password='backup',master_log_file='mysql-bin.000001',master_log_pos=639;

#make controller02 replicate from controller01; master_log_file='mysql-bin.000001' and master_log_pos=639 are the values shown in the figure above (use the values from your own output)
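The statement can be built from the output of SHOW MASTER STATUS instead of copying File and Position from the screenshot. Below is a sketch with a hypothetical helper. It assumes the tab-separated output that mysql -N -B prints (File, Position, Binlog_Do_DB, Binlog_Ignore_DB) and the backup/backup replication account from this guide:

```shell
#!/usr/bin/env bash
# Read one line of `SHOW MASTER STATUS` (tab-separated) on stdin and print the
# matching CHANGE MASTER statement for a slave of that master.
# Arguments: master host, replication user (default backup), password (default backup).
change_master_sql() {
  local host="$1" user="${2:-backup}" pass="${3:-backup}"
  local file pos
  IFS=$'\t' read -r file pos _ || return 1
  [ -n "$file" ] && [ -n "$pos" ] || return 1
  printf "CHANGE MASTER TO master_host='%s',master_user='%s',master_password='%s',master_log_file='%s',master_log_pos=%s;\n" \
    "$host" "$user" "$pass" "$file" "$pos"
}

# Usage: run on controller01 to print the statement, then execute it on controller02:
#   mysql -uroot -p000000 -N -B -e 'SHOW MASTER STATUS' | change_master_sql 192.168.100.10
```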

exit; #leave the database

systemctl restart mariadb #restart the database

mysql -uroot -p000000

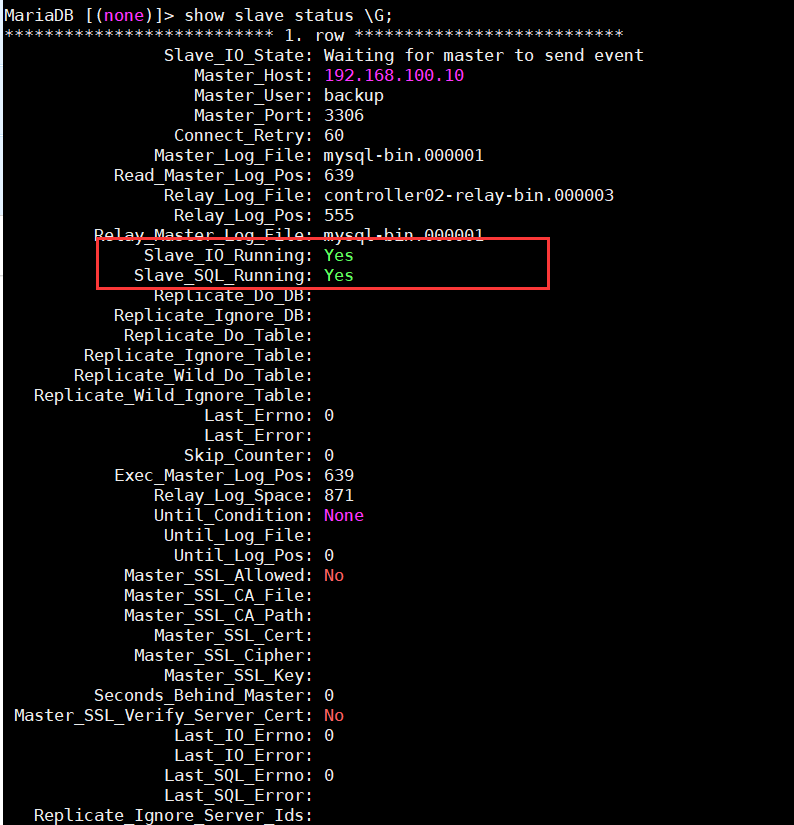

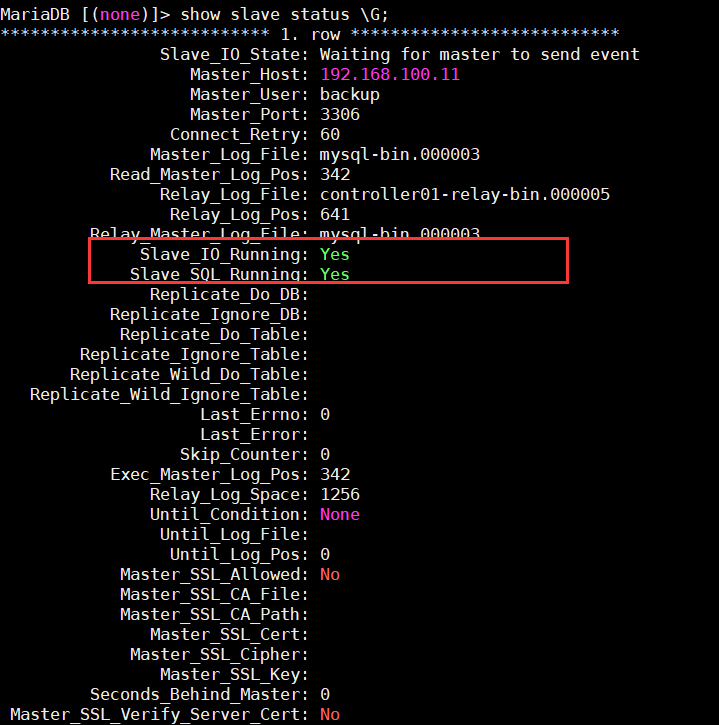

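show slave status\G #check the replication status

//Slave_IO_Running: Yes

//Slave_SQL_Running: Yes

//When both show Yes, controller02 is replicating from controller01 successfully.

//The master-slave setup, with controller01 as master and controller02 as slave, is now complete.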
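The two Yes checks can be scripted, for example to re-run them after a restart. Below is a sketch with a hypothetical helper name that reads the vertical \G output on stdin:

```shell
#!/usr/bin/env bash
# Exit 0 only if Slave_IO_Running and Slave_SQL_Running are both "Yes" in the
# `SHOW SLAVE STATUS\G` output on stdin. The anchored field match keeps the
# Slave_SQL_Running_State line from being mistaken for Slave_SQL_Running.
replication_ok() {
  awk -F': ' '
    $1 ~ /^[[:space:]]*Slave_IO_Running$/  { io  = $2 }
    $1 ~ /^[[:space:]]*Slave_SQL_Running$/ { sql = $2 }
    END { exit !(io == "Yes" && sql == "Yes") }'
}

# Usage:
#   mysql -uroot -p000000 -e 'SHOW SLAVE STATUS\G' | replication_ok && echo "replication OK"
```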

######controller02######

Log in to the database and check its master status

mysql -uroot -p000000

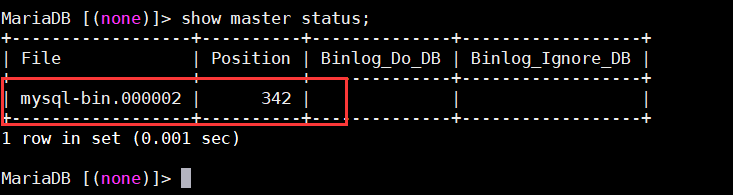

show master status;

######controller01######

#make controller01 and controller02 replicate from each other (dual master)

mysql -uroot -p000000

change master to master_host='192.168.100.11',master_user='backup',master_password='backup',master_log_file='mysql-bin.000002',master_log_pos=342;

#fill in master_log_file and master_log_pos with the values shown in the figure above

exit;

######controller01#######

systemctl restart mariadb #restart the database

mysql -uroot -p000000

show slave status\G #check the replication status

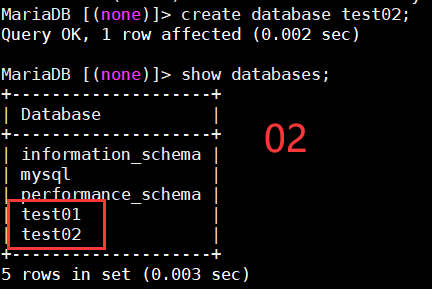

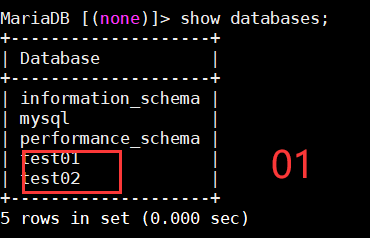

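2.4 Test replication (do this yourself)

Create a database on controller01 and check that it appears on controller02. Then create one on controller02 and check that it appears on controller01.

Replication works.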

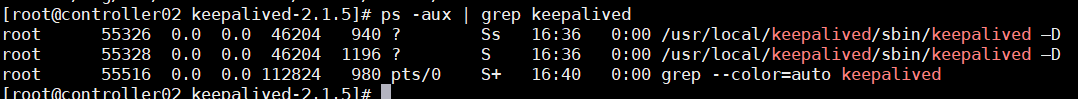

2.5 Test HA with Keepalived

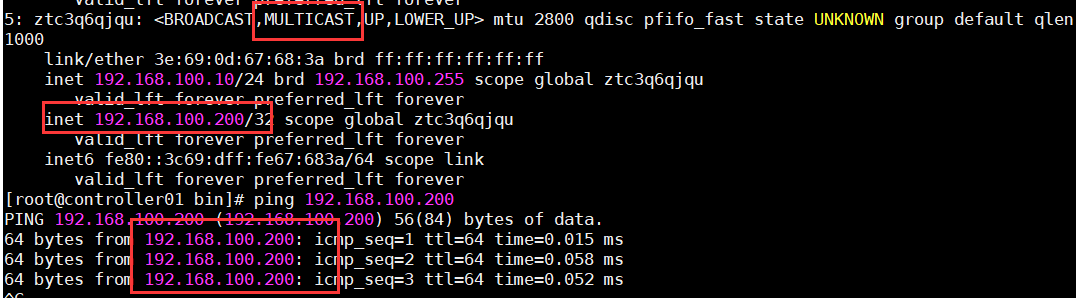

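#####controller01######

ip link set multicast on dev ztc3q6qjqu #enable multicast on the ZeroTier virtual NIC (use your own interface name)

wget http://www.keepalived.org/software/keepalived-2.1.5.tar.gz

tar -zxvf keepalived-2.1.5.tar.gz

cd keepalived-2.1.5

./configure --prefix=/usr/local/keepalived #install under /usr/local/keepalived

make && make install

Edit the configuration file

Note: Keepalived uses a single configuration file, keepalived.conf, which mainly consists of the following sections:

global_defs, vrrp_instance and virtual_server.

global_defs: the notification targets for failures and the machine identifier.

vrrp_instance: the VIP offered to clients and its related properties.

virtual_server: the virtual server (LVS) definition.

mkdir -p /etc/keepalived/

vim /etc/keepalived/keepalived.conf

Write the following configuration: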

! Configuration File for keepalived

global_defs {

router_id MySQL-ha

}

vrrp_instance VI_1 {

state BACKUP #BACKUP on both nodes

interface ztc3q6qjqu

virtual_router_id 51

priority 100 #priority; set 90 on the other node

advert_int 1

nopreempt #do not preempt; set this only on the higher-priority node

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.100.200

}

}

virtual_server 192.168.100.200 3306 {

delay_loop 2 #check the real_server status every 2 seconds

lb_algo wrr #LVS scheduling algorithm

lb_kind DR #LVS forwarding mode

persistence_timeout 60 #session persistence time in seconds

protocol TCP

real_server 192.168.100.10 3306 {

weight 3

notify_down /usr/local/MySQL/bin/MySQL.sh #script run when the service is detected as down

TCP_CHECK {

connect_timeout 10 #connection timeout

nb_get_retry 3 #number of retries

delay_before_retry 3 #interval between retries

connect_port 3306 #health-check port

}

}

#####controller01#####

#write the check script

mkdir -p /usr/local/MySQL/bin/

vi /usr/local/MySQL/bin/MySQL.sh

#with the following content:

#!/bin/sh

pkill keepalived

#make it executable

chmod +x /usr/local/MySQL/bin/MySQL.sh

#start keepalived

/usr/local/keepalived/sbin/keepalived -D

systemctl enable keepalived.service #start at boot

#####controller02#####

#install keepalived-2.1.5

ip link set multicast on dev ztc3q6qjqu #enable multicast on the ZeroTier virtual NIC (use your own interface name)

wget http://www.keepalived.org/software/keepalived-2.1.5.tar.gz

tar -zxvf keepalived-2.1.5.tar.gz

cd keepalived-2.1.5

./configure --prefix=/usr/local/keepalived #install under /usr/local/keepalived

make && make install

mkdir -p /etc/keepalived/

vim /etc/keepalived/keepalived.conf

Write the following configuration:

! Configuration File for keepalived

global_defs {

router_id MySQL-ha

}

vrrp_instance VI_1 {

state BACKUP

interface ztc3q6qjqu

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.100.200

}

}

virtual_server 192.168.100.200 3306 {

delay_loop 2

lb_algo wrr

lb_kind DR

persistence_timeout 60

protocol TCP

real_server 192.168.100.11 3306 {

weight 3

notify_down /usr/local/MySQL/bin/MySQL.sh

TCP_CHECK {

connect_timeout 10

nb_get_retry 3

delay_before_retry 3

connect_port 3306

}

}

#####controller02#####

#write the check script

mkdir -p /usr/local/MySQL/bin/

vi /usr/local/MySQL/bin/MySQL.sh

#with the following content:

#!/bin/sh

pkill keepalived

#make it executable

chmod +x /usr/local/MySQL/bin/MySQL.sh

#start keepalived

/usr/local/keepalived/sbin/keepalived -D

systemctl enable keepalived.service #start at boot

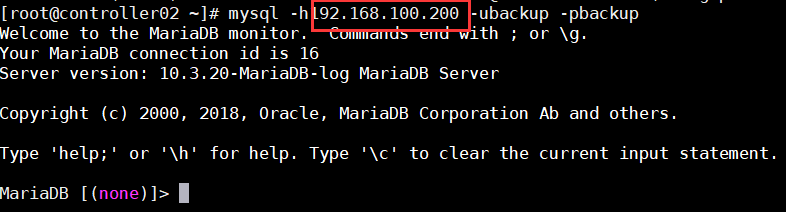

2.6 Test logging in to MySQL through the VIP

mysql -h192.168.100.200 -ubackup -pbackup

Stop Keepalived

systemctl stop keepalived

systemctl disable keepalived

Database HA works.

This was only a test of whether HA can be built over ZeroTier. All later components use Pacemaker for the VIP, and Keepalived is no longer used.

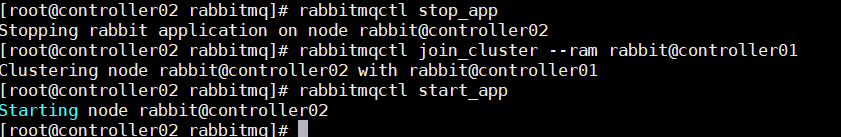

3. RabbitMQ cluster (controller nodes)

#####All controller nodes######

yum install erlang rabbitmq-server python-memcached -y

systemctl enable rabbitmq-server.service

#####controller01#####

systemctl start rabbitmq-server.service

rabbitmqctl cluster_status

scp /var/lib/rabbitmq/.erlang.cookie controller02:/var/lib/rabbitmq/

#####controller02######

chown rabbitmq:rabbitmq /var/lib/rabbitmq/.erlang.cookie #set the owner/group of .erlang.cookie on controller02
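Clustering fails if the two nodes do not share the same Erlang cookie, so it is worth confirming the copy before starting RabbitMQ on controller02. Below is a sketch with a hypothetical helper. Fetching controller01's cookie with scp assumes the passwordless SSH configured earlier:

```shell
#!/usr/bin/env bash
# Succeed only if both cookie files are readable and have identical contents.
cookies_match() {
  local a b
  [ -r "$1" ] && [ -r "$2" ] || return 1
  a="$(md5sum < "$1")"
  b="$(md5sum < "$2")"
  [ "$a" = "$b" ]
}

# Usage on controller02:
#   scp controller01:/var/lib/rabbitmq/.erlang.cookie /tmp/cookie.controller01
#   cookies_match /tmp/cookie.controller01 /var/lib/rabbitmq/.erlang.cookie && echo "cookies match"
```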

systemctl start rabbitmq-server #start RabbitMQ on controller02

Build the cluster, with controller02 joining as a RAM node

rabbitmqctl stop_app

rabbitmqctl join_cluster --ram rabbit@controller01

rabbitmqctl start_app

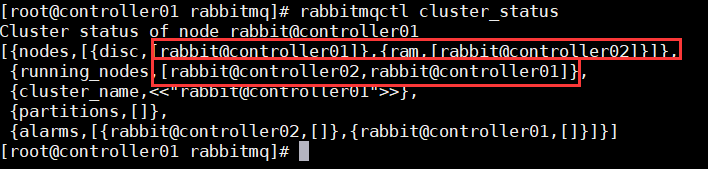

#check the cluster status

rabbitmqctl cluster_status

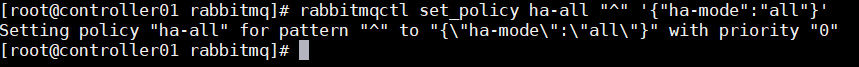

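#####controller01#####

#create the account and set its password on any node; controller01 is used here

rabbitmqctl add_user openstack 000000

rabbitmqctl set_user_tags openstack administrator

rabbitmqctl set_permissions -p "/" openstack ".*" ".*" ".*"

Make the message queues highly available (mirror all queues to all nodes)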

rabbitmqctl set_policy ha-all "^" '{"ha-mode":"all"}'

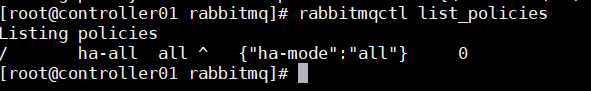

Check the queue policies

rabbitmqctl list_policies

4. Memcached (controller nodes)

#####All controller nodes#####

#install the memcached packages

yum install memcached python-memcached -y

Configure the listen addresses

//controller01

sed -i 's/OPTIONS="-l 127.0.0.1,::1"/OPTIONS="-l 127.0.0.1,::1,controller01"/' /etc/sysconfig/memcached

sed -i 's/CACHESIZE="64"/CACHESIZE="1024"/' /etc/sysconfig/memcached

//controller02

sed -i 's/OPTIONS="-l 127.0.0.1,::1"/OPTIONS="-l 127.0.0.1,::1,controller02"/' /etc/sysconfig/memcached

sed -i 's/CACHESIZE="64"/CACHESIZE="1024"/' /etc/sysconfig/memcached

Enable at boot (all controller nodes)

systemctl enable memcached.service

systemctl start memcached.service

systemctl status memcached.service
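The sed commands above only match the stock default values, so they silently do nothing if the file was already edited. Below is a sketch that sets the OPTIONS and CACHESIZE lines whatever their current values are. configure_memcached and the MEMCACHED_CONF override are hypothetical:

```shell
#!/usr/bin/env bash
# Set the listen addresses and cache size in /etc/sysconfig/memcached.
# Arguments: this node's hostname, cache size in MB (default 1024).
configure_memcached() {
  local host="$1" cache_mb="${2:-1024}"
  local file="${MEMCACHED_CONF:-/etc/sysconfig/memcached}"
  # Replace the whole OPTIONS / CACHESIZE lines, not just the default values.
  sed -i \
    -e "s/^OPTIONS=.*/OPTIONS=\"-l 127.0.0.1,::1,${host}\"/" \
    -e "s/^CACHESIZE=.*/CACHESIZE=\"${cache_mb}\"/" \
    "$file"
}

# Usage: configure_memcached controller01   (on controller02: configure_memcached controller02)
#        systemctl restart memcached.service
```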

5. Etcd cluster (controller nodes)

#####All controller nodes#####

yum install -y etcd

cp -a /etc/etcd/etcd.conf{,.bak} #back up the configuration file

#####controller01#####

cat > /etc/etcd/etcd.conf <<EOF

#[Member]

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.100.10:2379,http://127.0.0.1:2379"

ETCD_NAME="controller01"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.100.10:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.100.10:2379"

ETCD_INITIAL_CLUSTER="controller01=http://192.168.100.10:2380,controller02=http://192.168.100.11:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF

#####controller02#####

cat > /etc/etcd/etcd.conf <<EOF

#[Member]

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.100.11:2379,http://127.0.0.1:2379"

ETCD_NAME="controller02"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.100.11:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.100.11:2379"

ETCD_INITIAL_CLUSTER="controller01=http://192.168.100.10:2380,controller02=http://192.168.100.11:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF
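The two etcd.conf files differ only in the node name and IP, while ETCD_INITIAL_CLUSTER must be identical on both. Below is a sketch that builds that value from name=ip pairs. It assumes the peer port 2380 used above. etcd_initial_cluster is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Build the ETCD_INITIAL_CLUSTER value from "name=ip" arguments,
# e.g. controller01=192.168.100.10 -> controller01=http://192.168.100.10:2380
etcd_initial_cluster() {
  local out="" pair name ip
  for pair in "$@"; do
    name="${pair%%=*}"
    ip="${pair#*=}"
    # Add a comma separator only after the first member.
    out="${out:+${out},}${name}=http://${ip}:2380"
  done
  printf '%s\n' "$out"
}

# Usage:
#   echo "ETCD_INITIAL_CLUSTER=\"$(etcd_initial_cluster controller01=192.168.100.10 controller02=192.168.100.11)\""
```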

#####controller01######

#edit etcd.service

vim /usr/lib/systemd/system/etcd.service

#replace the [Service] section with the following

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd/

EnvironmentFile=-/etc/etcd/etcd.conf

User=etcd

# set GOMAXPROCS to number of processors

ExecStart=/bin/bash -c "GOMAXPROCS=$(nproc) /usr/bin/etcd \

--name=\"${ETCD_NAME}\" \

--data-dir=\"${ETCD_DATA_DIR}\" \

--listen-peer-urls=\"${ETCD_LISTEN_PEER_URLS}\" \

--listen-client-urls=\"${ETCD_LISTEN_CLIENT_URLS}\" \

--initial-advertise-peer-urls=\"${ETCD_INITIAL_ADVERTISE_PEER_URLS}\" \

--advertise-client-urls=\"${ETCD_ADVERTISE_CLIENT_URLS}\" \

--initial-cluster=\"${ETCD_INITIAL_CLUSTER}\" \

--initial-cluster-token=\"${ETCD_INITIAL_CLUSTER_TOKEN}\" \

--initial-cluster-state=\"${ETCD_INITIAL_CLUSTER_STATE}\""

Restart=on-failure

LimitNOFILE=65536

#copy the unit file to controller02

scp -rp /usr/lib/systemd/system/etcd.service controller02:/usr/lib/systemd/system/

#####All controllers#####

#reload systemd (the unit file was edited), then enable at boot and start

systemctl daemon-reload

systemctl enable etcd

systemctl restart etcd

systemctl status etcd

#verify

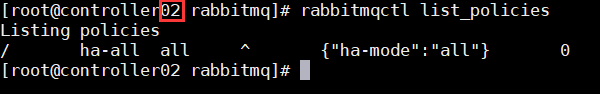

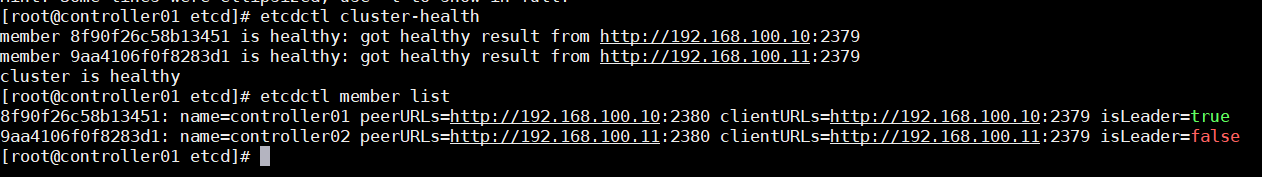

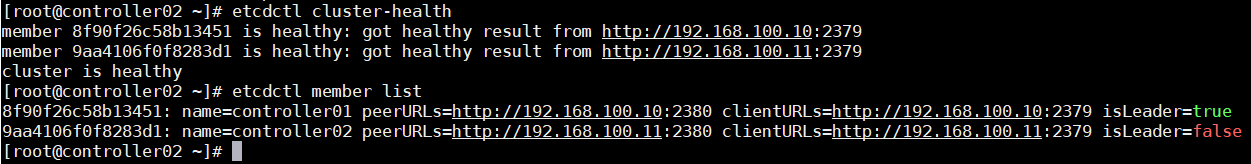

etcdctl cluster-health

etcdctl member list

Both members are healthy, and controller01 has become the leader.

6. Use the open-source Pacemaker cluster stack as the cluster HA resource manager

#####All controllers#####

yum install pacemaker pcs corosync fence-agents resource-agents -y

Start the pcsd service

systemctl enable pcsd

systemctl start pcsd

Set the password of the cluster administrator account hacluster

echo 000000 | passwd --stdin hacluster

Authentication (controller01)

Authenticate the nodes and form the cluster, using the password set in the previous step

pcs cluster auth controller01 controller02 -u hacluster -p 000000 --force

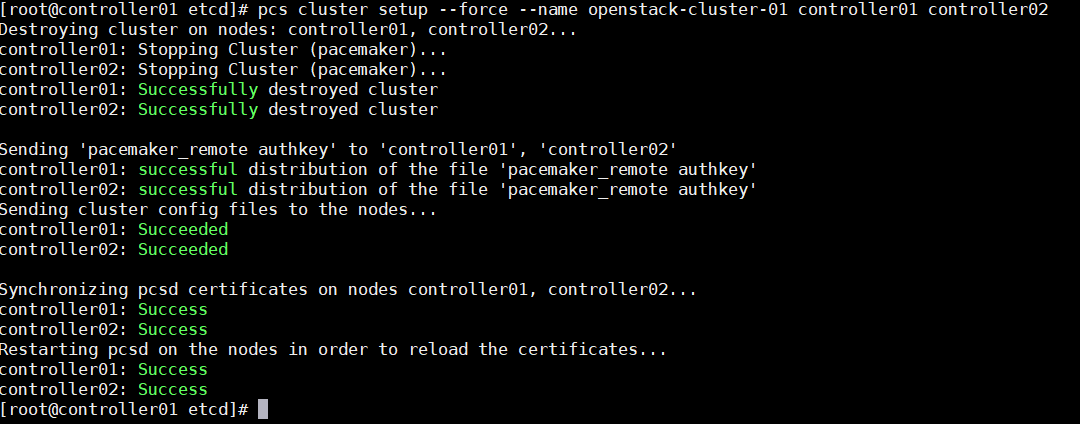

Create and name the cluster:

pcs cluster setup --force --name openstack-cluster-01 controller01 controller02

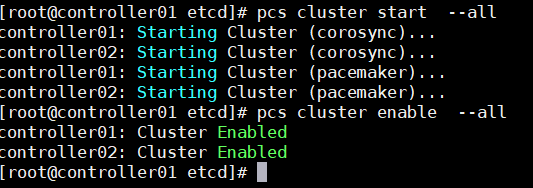

Start the Pacemaker cluster

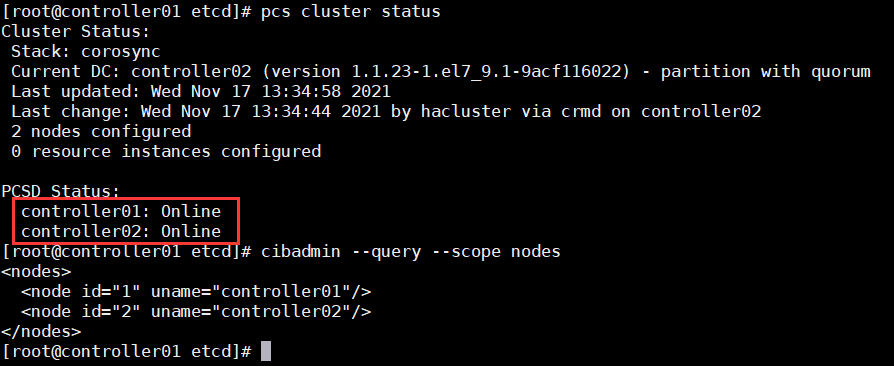

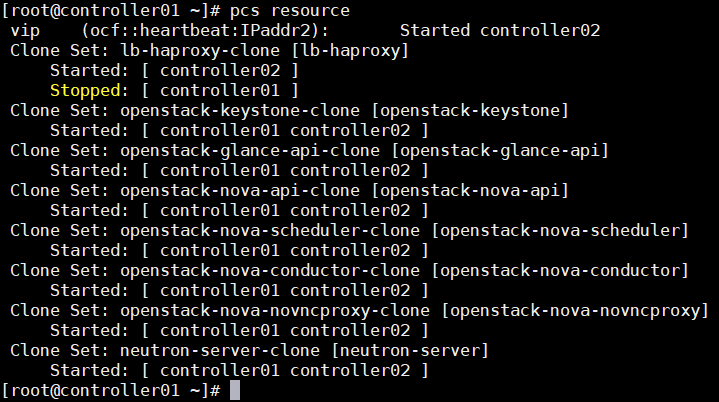

#####controller01#####

pcs cluster start --all

pcs cluster enable --all

Useful commands

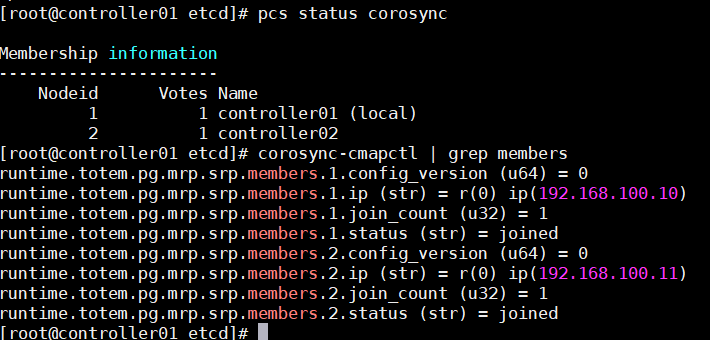

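pcs cluster status #show the cluster status

pcs status corosync #show Corosync status (Corosync is the underlying layer that synchronizes membership and state information)

corosync-cmapctl | grep members #list the member nodes

pcs resource #list the resources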

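Set HA properties

#####controller01#####

#keep a suitable history of policy engine inputs, errors and warnings

pcs property set pe-warn-series-max=1000 pe-input-series-max=1000 pe-error-series-max=1000

cluster-recheck-interval is the interval at which Pacemaker re-checks the cluster state. The default is 15min; 5min or 3min is recommended. Give the unit explicitly, because a bare number is read as seconds.

pcs property set cluster-recheck-interval=5min

pcs property set stonith-enabled=false

Because of limited resources only two controller nodes are used here, so the cluster cannot reach quorum and the quorum policy must be ignored

pcs property set no-quorum-policy=ignore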

Configure the VIP

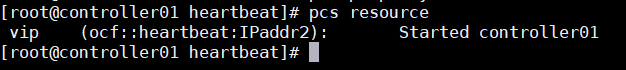

#####controller01#####

pcs resource create vip ocf:heartbeat:IPaddr2 ip=192.168.100.100 cidr_netmask=24 op monitor interval=30s

Check the cluster resources and the VIP that was created

pcs resource

ip a

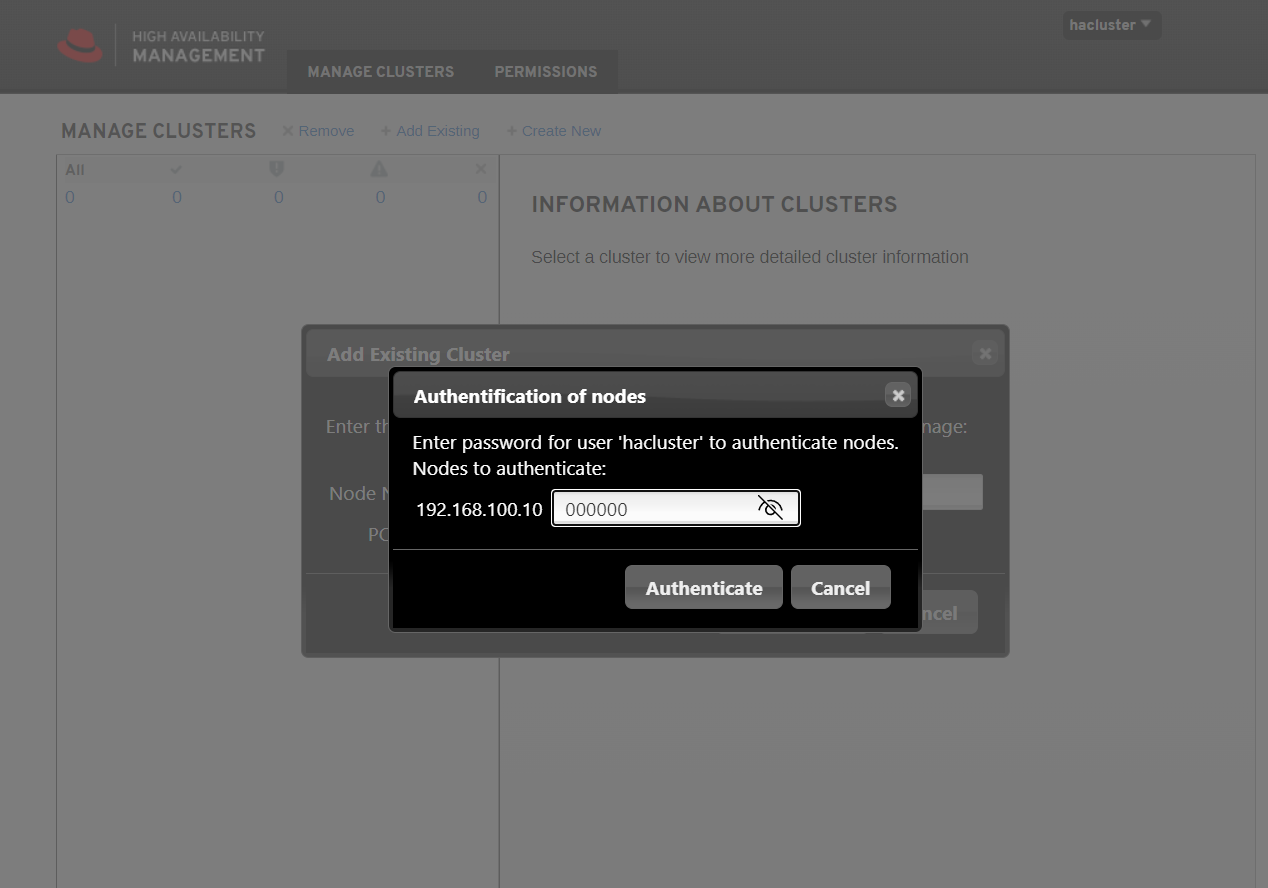

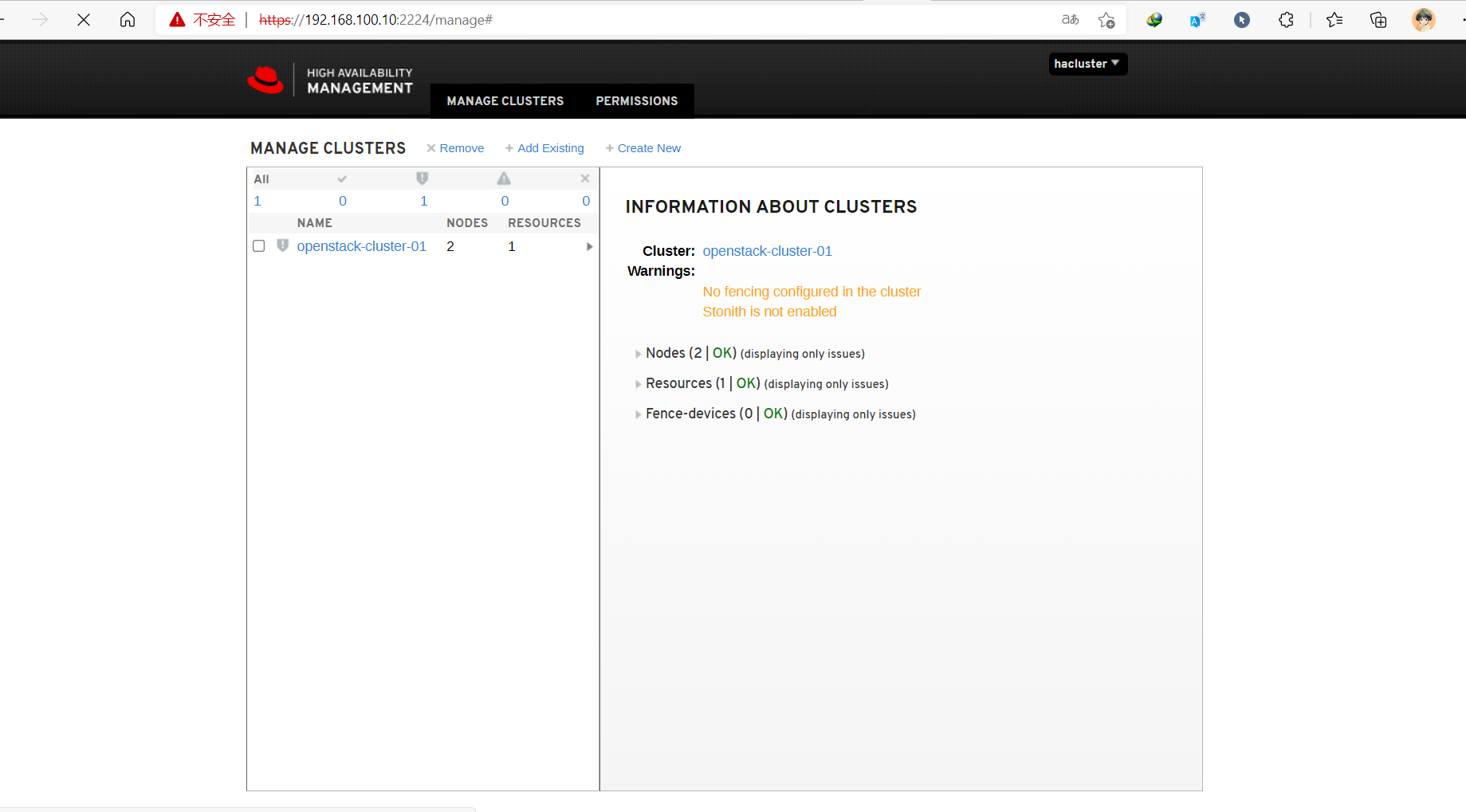

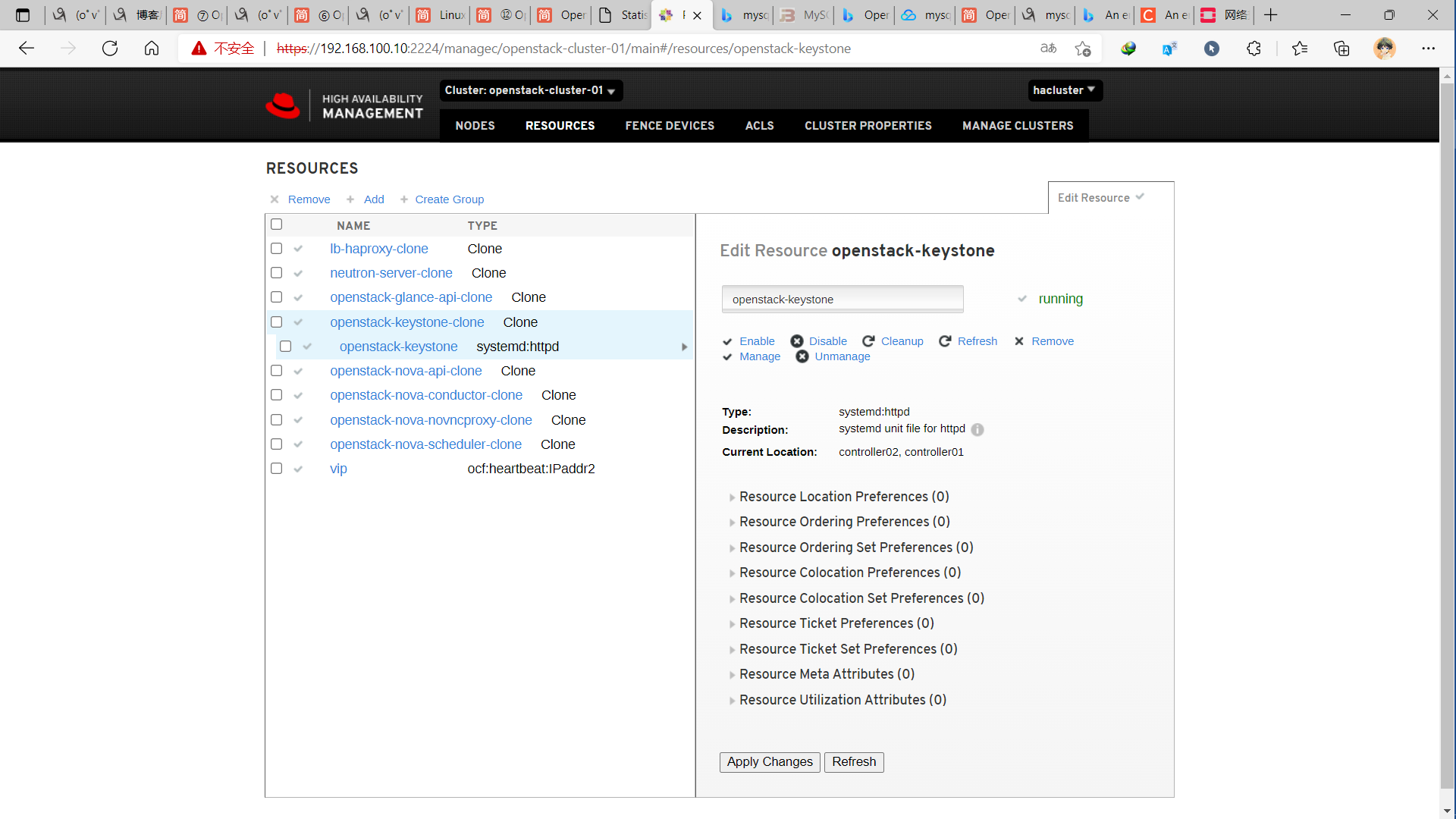

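HA management

Open the web UI of any controller node, e.g. https://192.168.100.10:2224, and log in as hacluster/000000 (the password set when building the cluster). The cluster was set up on the command line, so the web UI does not show it by default and it has to be added manually. Adding any one node of the existing cluster is enough.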

7. Deploy HAProxy

#####All controllers#####

yum install haproxy -y

#enable logging

mkdir /var/log/haproxy

chmod a+w /var/log/haproxy

Edit the rsyslog configuration

vim /etc/rsyslog.conf

Uncomment the following lines (lines 15, 16, 19 and 20 of the stock file):

$ModLoad imudp

$UDPServerRun 514

$ModLoad imtcp

$InputTCPServerRun 514

Append at the end:

local0.=info -/var/log/haproxy/haproxy-info.log

local0.=err -/var/log/haproxy/haproxy-err.log

local0.notice;local0.!=err -/var/log/haproxy/haproxy-notice.log

Restart rsyslog

systemctl restart rsyslog

Configure HAProxy for all components (all controller nodes)

VIP:192.168.100.100

#####All controller nodes#####

cp /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg.bak

vim /etc/haproxy/haproxy.cfg

global

log 127.0.0.1 local0

chroot /var/lib/haproxy

daemon

group haproxy

user haproxy

maxconn 4000

pidfile /var/run/haproxy.pid

stats socket /var/lib/haproxy/stats

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

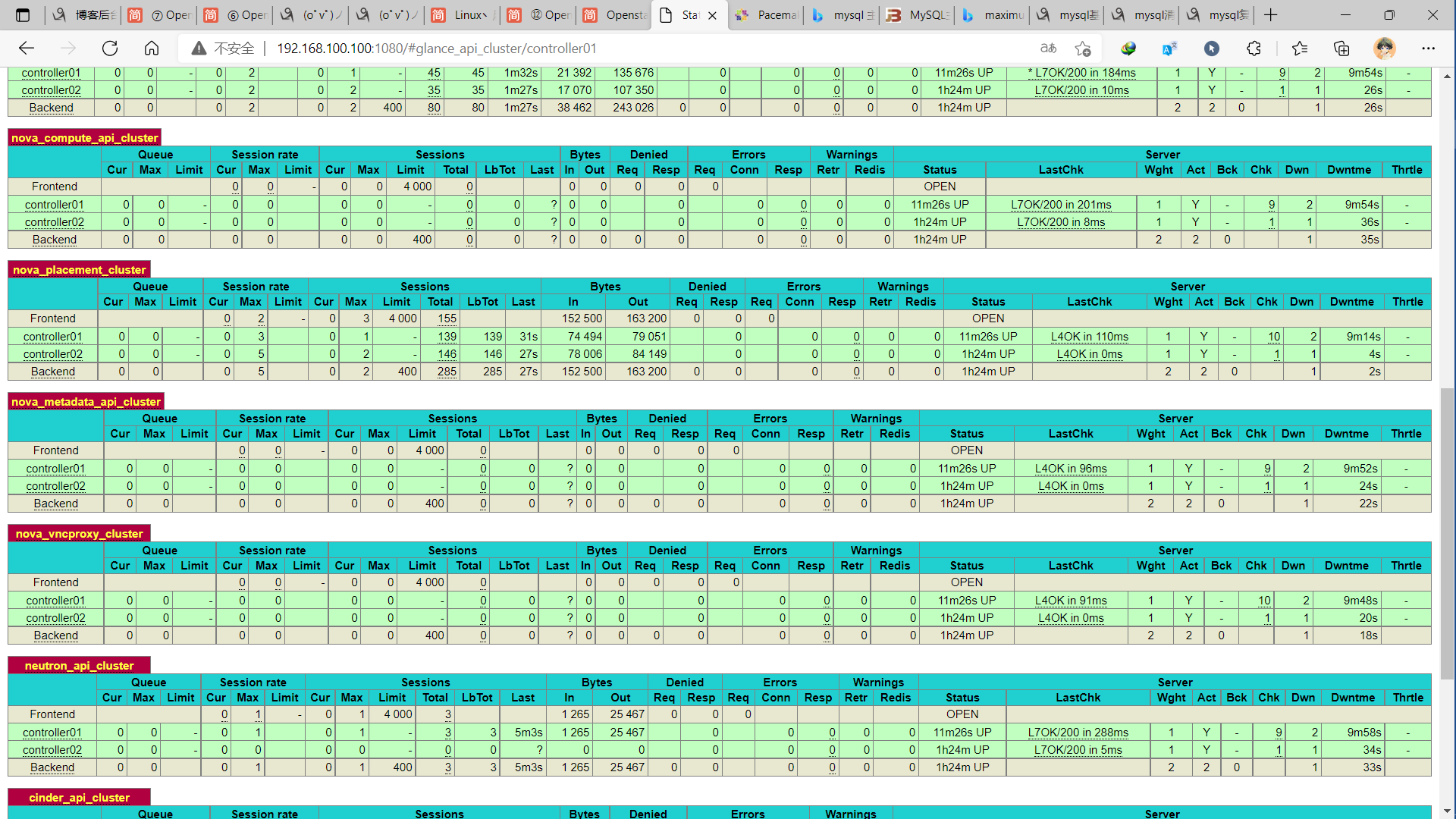

maxconn 4000

#HAProxy stats page

listen stats

bind 0.0.0.0:1080

mode http

stats enable

stats uri /

stats realm OpenStack\ Haproxy

stats auth admin:admin

stats refresh 30s

stats show-node

stats show-legends

stats hide-version

#Horizon service

listen dashboard_cluster

bind 192.168.100.100:80

balance source

option tcpka

option httpchk

option tcplog

server controller01 192.168.100.10:80 check inter 2000 rise 2 fall 5

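server controller02 192.168.100.11:80 check inter 2000 rise 2 fall 5

#HA access port for the RabbitMQ cluster, used by the OpenStack services;

#if the OpenStack services connect to the RabbitMQ cluster directly, this load-balancing section can be omitted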

listen rabbitmq_cluster

bind 192.168.100.100:5673

mode tcp

option tcpka

balance roundrobin

timeout client 3h

timeout server 3h

option clitcpka

server controller01 192.168.100.10:5672 check inter 10s rise 2 fall 5

server controller02 192.168.100.11:5672 check inter 10s rise 2 fall 5

#glance_api service

listen glance_api_cluster

bind 192.168.100.100:9292

balance source

option tcpka

option httpchk

option tcplog

timeout client 3h

timeout server 3h

server controller01 192.168.100.10:9292 check inter 2000 rise 2 fall 5

server controller02 192.168.100.11:9292 check inter 2000 rise 2 fall 5

#keystone_public_api service

listen keystone_public_cluster

bind 192.168.100.100:5000

balance source

option tcpka

option httpchk

option tcplog

server controller01 192.168.100.10:5000 check inter 2000 rise 2 fall 5

server controller02 192.168.100.11:5000 check inter 2000 rise 2 fall 5

listen nova_compute_api_cluster

bind 192.168.100.100:8774

balance source

option tcpka

option httpchk

option tcplog

server controller01 192.168.100.10:8774 check inter 2000 rise 2 fall 5

server controller02 192.168.100.11:8774 check inter 2000 rise 2 fall 5

listen nova_placement_cluster

bind 192.168.100.100:8778

balance source

option tcpka

option tcplog

server controller01 192.168.100.10:8778 check inter 2000 rise 2 fall 5

server controller02 192.168.100.11:8778 check inter 2000 rise 2 fall 5

listen nova_metadata_api_cluster

bind 192.168.100.100:8775

balance source

option tcpka

option tcplog

server controller01 192.168.100.10:8775 check inter 2000 rise 2 fall 5

server controller02 192.168.100.11:8775 check inter 2000 rise 2 fall 5

listen nova_vncproxy_cluster

bind 192.168.100.100:6080

balance source

option tcpka

option tcplog

server controller01 192.168.100.10:6080 check inter 2000 rise 2 fall 5

server controller02 192.168.100.11:6080 check inter 2000 rise 2 fall 5

listen neutron_api_cluster

bind 192.168.100.100:9696

balance source

option tcpka

option httpchk

option tcplog

server controller01 192.168.100.10:9696 check inter 2000 rise 2 fall 5

server controller02 192.168.100.11:9696 check inter 2000 rise 2 fall 5

listen cinder_api_cluster

bind 192.168.100.100:8776

balance source

option tcpka

option httpchk

option tcplog

server controller01 192.168.100.10:8776 check inter 2000 rise 2 fall 5

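server controller02 192.168.100.11:8776 check inter 2000 rise 2 fall 5

#MariaDB service;

#controller01 is the active server and controller02 the backup; an active/backup layout avoids data inconsistency;

#the official example checks port 9200 (heartbeat), but in testing, after MariaDB went down the /usr/bin/clustercheck script no longer detected the service while port 9200 (managed by xinetd) still answered, so HAProxy kept forwarding requests to the failed node; the check has been switched to port 3306 for now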

listen galera_cluster

bind 192.168.100.100:3306

balance source

mode tcp

server controller01 192.168.100.10:3306 check inter 2000 rise 2 fall 5

server controller02 192.168.100.11:3306 backup check inter 2000 rise 2 fall 5
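Most listen sections above differ only in name and port. Below is a sketch of a hypothetical generator for the HTTP API sections that use option httpchk. The placement, metadata and VNC sections above omit httpchk, and the rabbitmq and galera sections use mode tcp, so those are best written by hand. haproxy -c validates the file before a restart:

```shell
#!/usr/bin/env bash
# VIP from this guide; override VIP if yours differs.
VIP="${VIP:-192.168.100.100}"

# Print one listen section in the same shape as the API sections above.
# Arguments: section name, port (the same port is used on VIP and backends).
haproxy_listen() {
  local name="$1" port="$2"
  printf 'listen %s\n' "$name"
  printf '  bind %s:%s\n' "$VIP" "$port"
  printf '  balance source\n  option tcpka\n  option httpchk\n  option tcplog\n'
  printf '  server controller01 192.168.100.10:%s check inter 2000 rise 2 fall 5\n' "$port"
  printf '  server controller02 192.168.100.11:%s check inter 2000 rise 2 fall 5\n' "$port"
}

# Usage:
#   { haproxy_listen keystone_public_cluster 5000; haproxy_listen neutron_api_cluster 9696; } >> /etc/haproxy/haproxy.cfg
#   haproxy -c -f /etc/haproxy/haproxy.cfg
```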


#copy the configuration to controller02

scp /etc/haproxy/haproxy.cfg controller02:/etc/haproxy/haproxy.cfg

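Configure kernel parameters (these two keys were already appended in step 1 of this part; skip the echo lines if they are present, to avoid duplicates)

#####All controllers#####

#net.ipv4.ip_nonlocal_bind = 1: allow binding to non-local IPs; this determines whether the HAProxy instance can bind to the VIP and fail over

#net.ipv4.ip_forward: allow IP forwarding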

echo 'net.ipv4.ip_nonlocal_bind = 1' >>/etc/sysctl.conf

echo "net.ipv4.ip_forward = 1" >>/etc/sysctl.conf

sysctl -p

Start the service

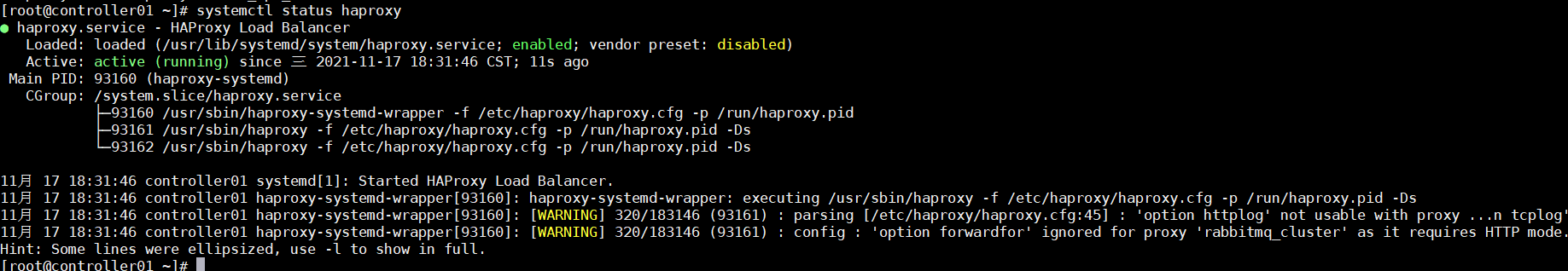

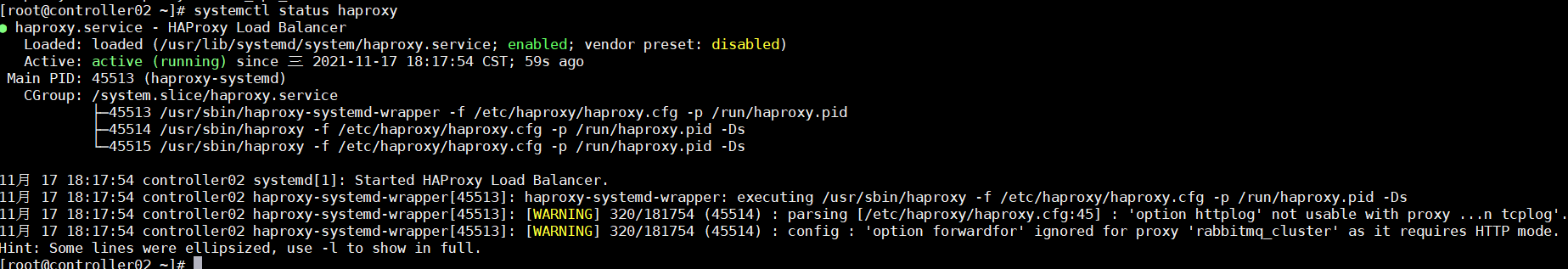

#####All controllers######

systemctl enable haproxy

systemctl restart haproxy

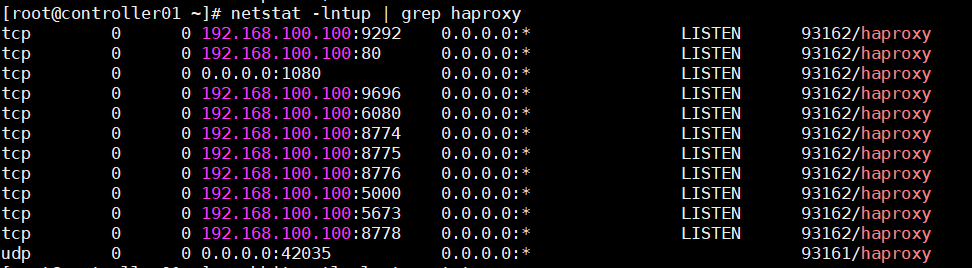

systemctl status haproxy

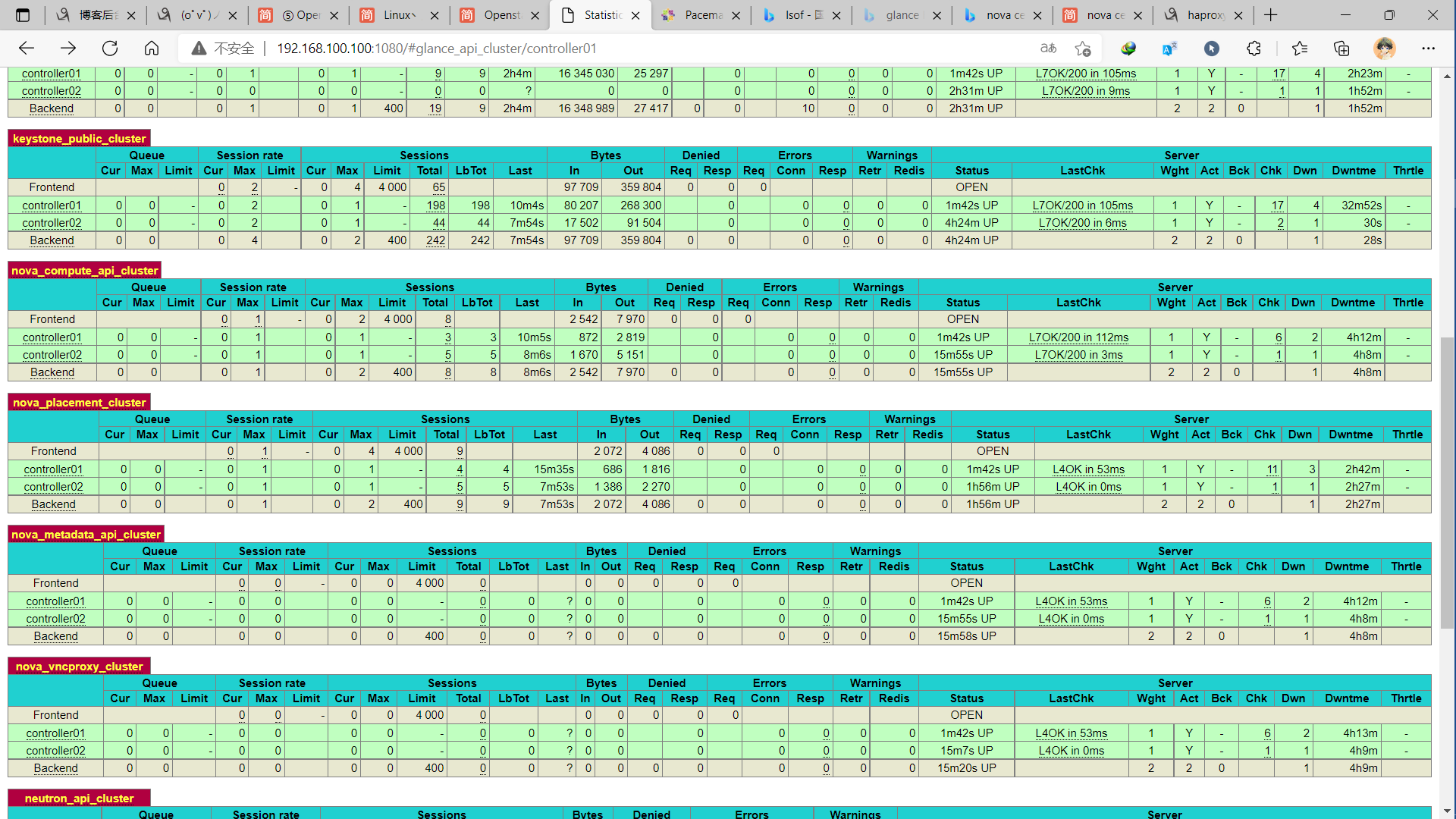

netstat -lntup | grep haproxy

All VIP ports should be in the LISTEN state.
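The expected VIP ports can be checked in one go instead of scanning the netstat output by eye. Below is a sketch with a hypothetical helper. It reads netstat -lntp or ss -lnt output on stdin; both print the local address in column 4. It prints each port from the haproxy.cfg above that is not listening on the VIP:

```shell
#!/usr/bin/env bash
# Print every expected port that has no LISTEN entry on the VIP.
# Argument: VIP (default 192.168.100.100). Input: netstat -lntp / ss -lnt output.
missing_vip_ports() {
  local vip="${1:-192.168.100.100}" listing port
  listing="$(cat)"
  for port in 80 5673 9292 5000 8774 8778 8775 6080 9696 8776 3306; do
    awk -v want="${vip}:${port}" '$4 == want { found = 1 } END { exit !found }' \
      <<<"$listing" || echo "$port"
  done
}

# Usage: netstat -lntp | missing_vip_ports   (no output means all ports are bound)
```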

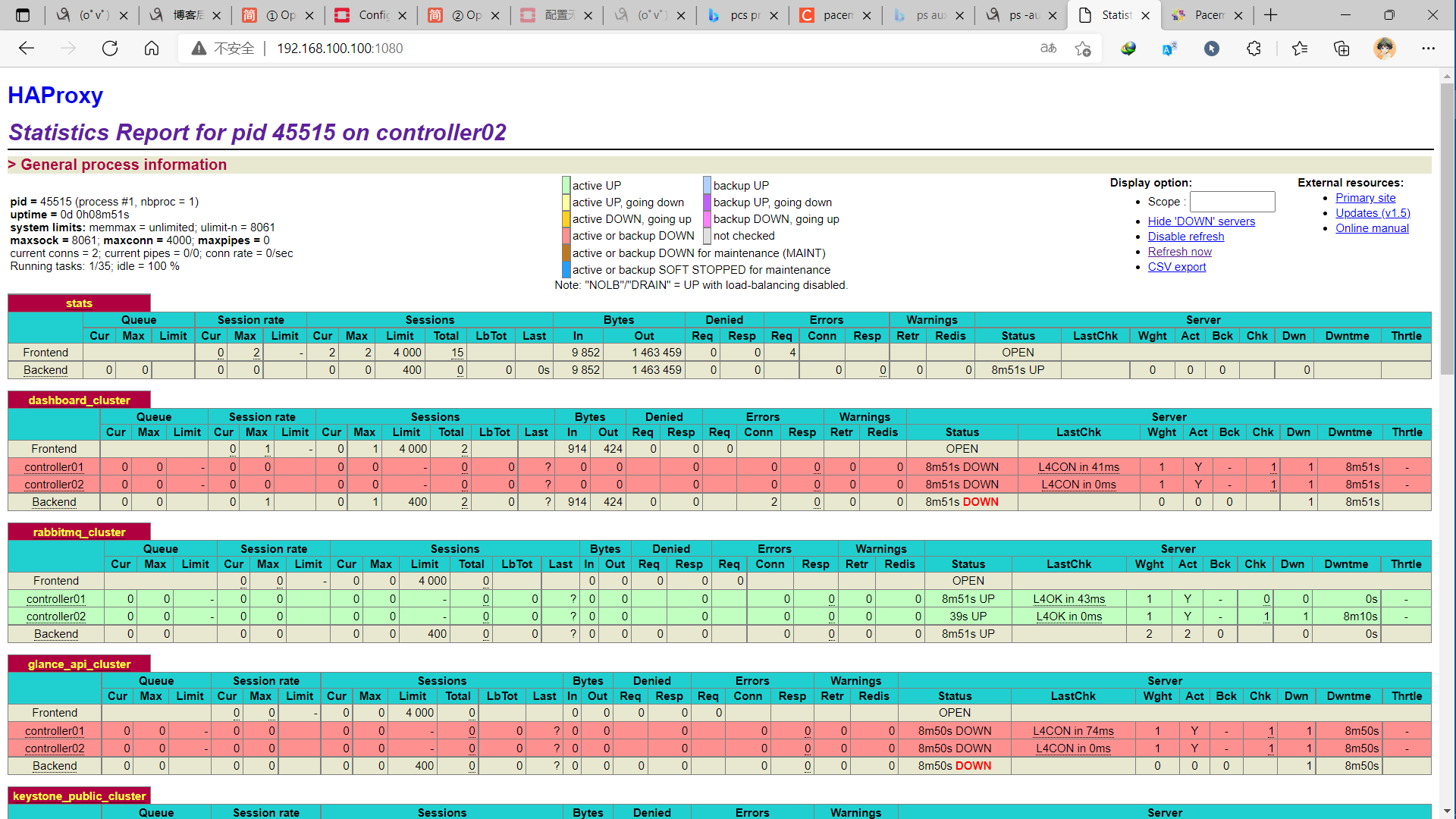

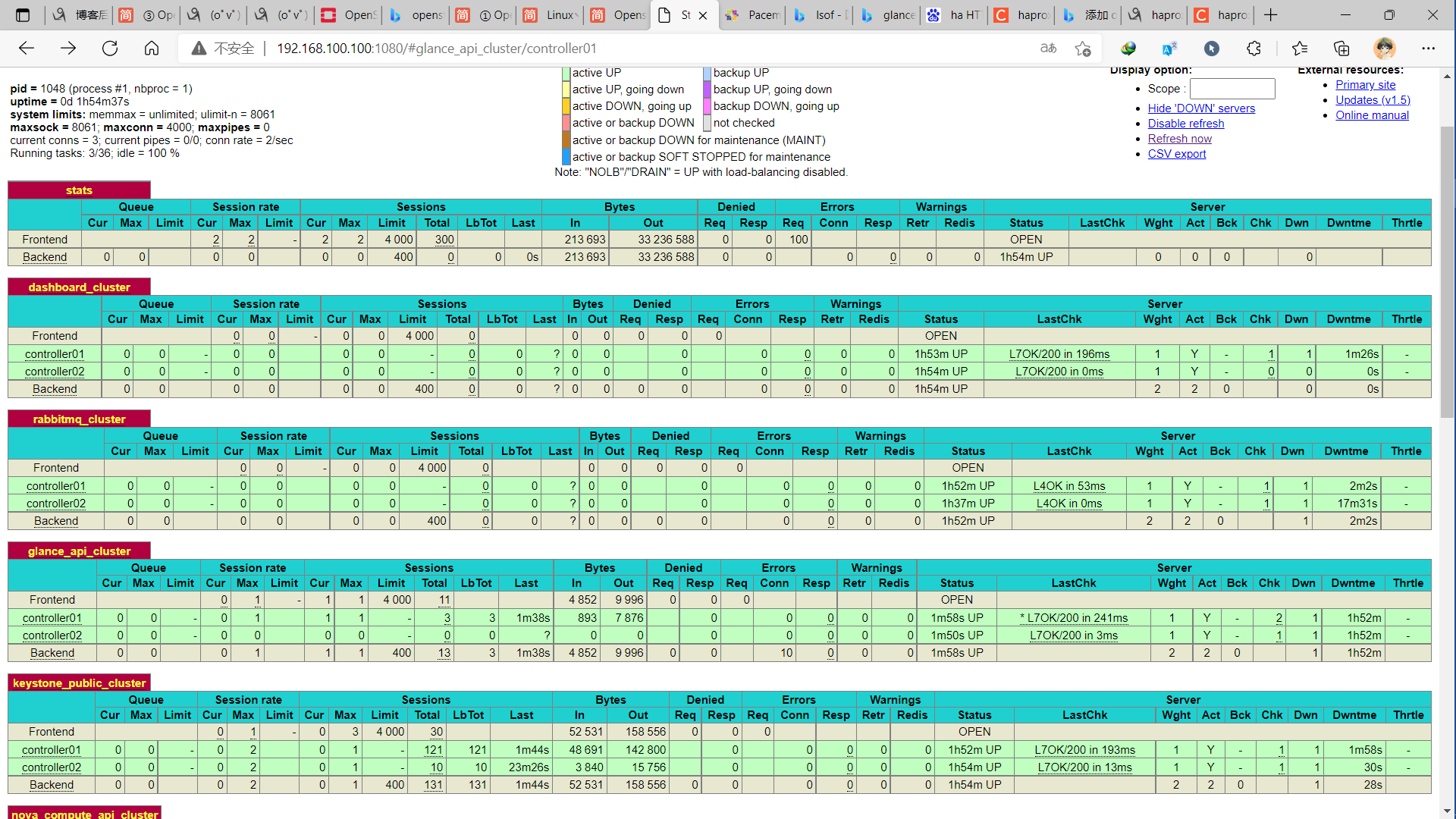

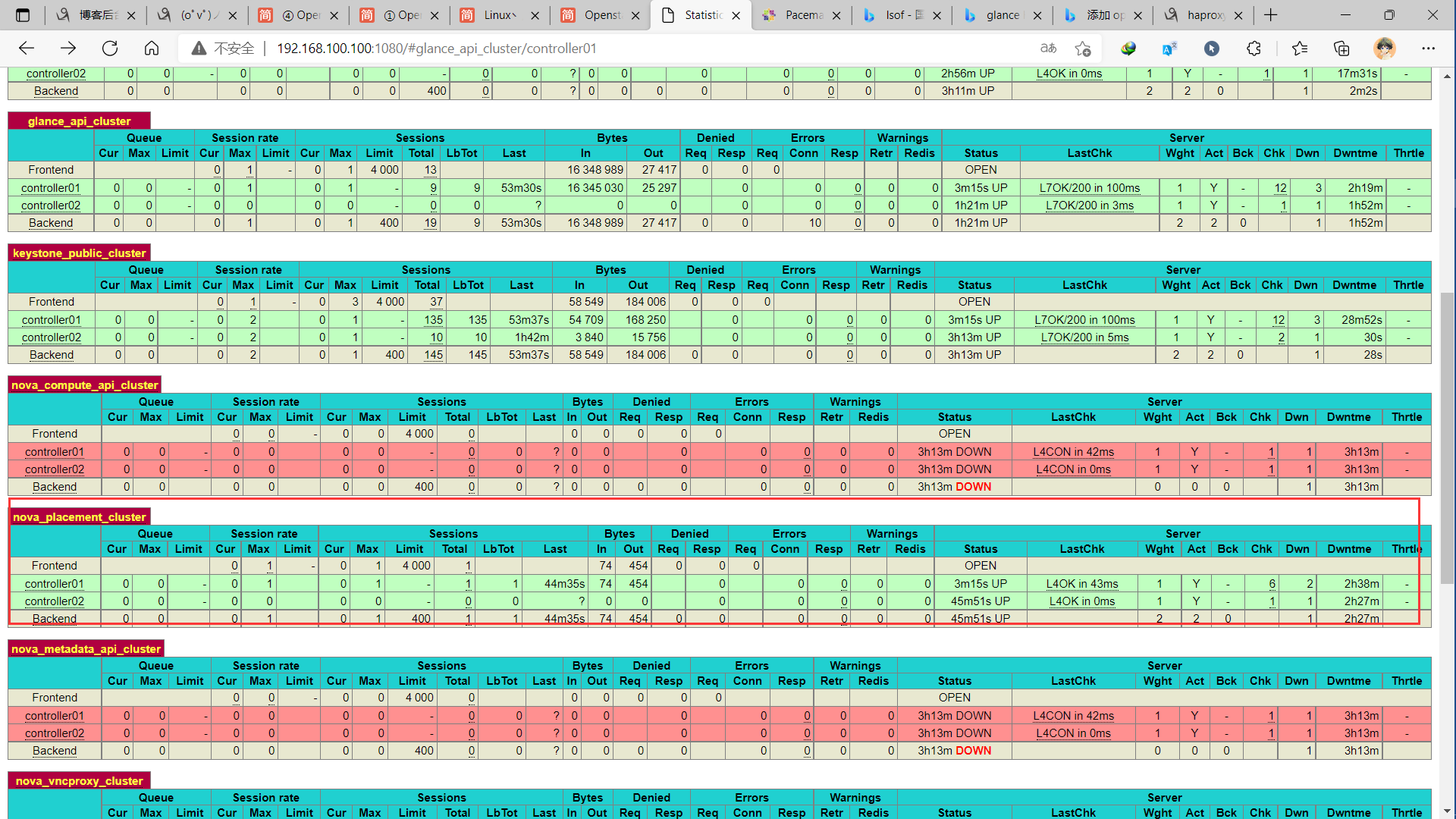

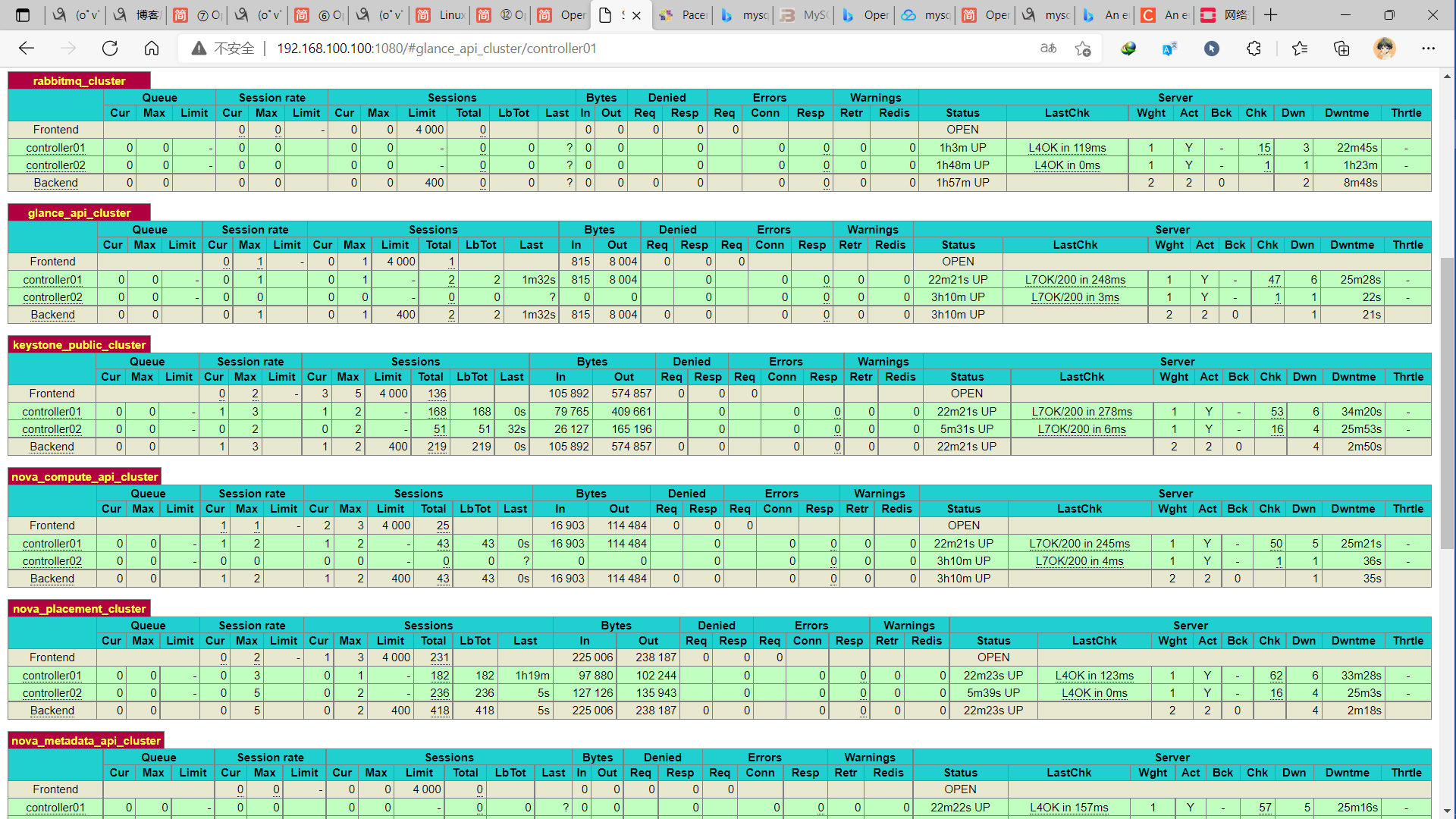

Open http://192.168.100.100:1080 (username/password: admin/admin).

RabbitMQ is installed, so it shows green. The other services are not installed yet, so they show red at this stage.

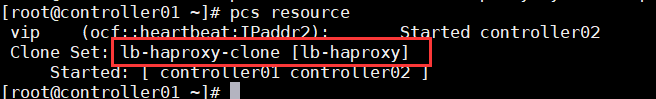

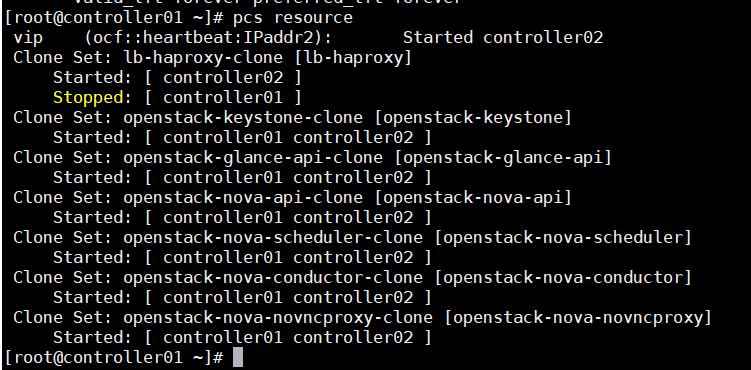

Configure the pcs resources

#####controller01#####

#add the lb-haproxy-clone resource

pcs resource create lb-haproxy systemd:haproxy clone

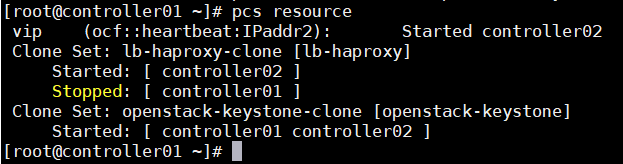

pcs resource

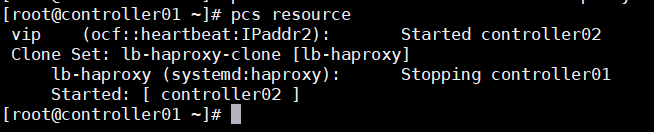

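#####controller01#####

#set the start order: vip first, then lb-haproxy-clone

pcs constraint order start vip then lb-haproxy-clone kind=Optional

The official recommendation is to run the VIP on a node where HAProxy is active. Colocating lb-haproxy-clone with the vip resource constrains the two resources to one node. Once the constraint is in place, pcs stops HAProxy on the nodes that do not currently hold the VIP.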

pcs constraint colocation add lb-haproxy-clone with vip

pcs resource

Check the resource settings in the Pacemaker web UI

#####controller01#####

#add name resolution for the VIP (havip) to /etc/hosts

sed -i '$a 192.168.100.100 havip' /etc/hosts
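Appending with sed '$a' or cat >> adds the same line again every time the step is repeated. Below is a sketch of an idempotent alternative that only adds a mapping if it is missing. add_host_entry, add_cluster_hosts and the HOSTS_FILE override are hypothetical names:

```shell
#!/usr/bin/env bash
# Append "IP name" to the hosts file unless a line already maps IP to name.
add_host_entry() {
  local ip="$1" name="$2" file="${HOSTS_FILE:-/etc/hosts}"
  if awk -v ip="$ip" -v name="$name" \
       '$1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# All mappings used in this guide, including the VIP alias havip.
add_cluster_hosts() {
  add_host_entry 192.168.100.10 controller01
  add_host_entry 192.168.100.11 controller02
  add_host_entry 192.168.100.20 compute01
  add_host_entry 192.168.100.21 compute02
  add_host_entry 192.168.100.100 havip
}

# Usage: add_cluster_hosts   (safe to run again on every node)
```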

scp /etc/hosts controller02:/etc/hosts

scp /etc/hosts compute01:/etc/hosts

scp /etc/hosts compute02:/etc/hosts

III. Deploying the OpenStack Train Components

1. Keystone

1.1 Configure the Keystone database

#####Any controller node (e.g. controller01)#####

mysql -u root -p000000

CREATE DATABASE keystone;

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'KEYSTONE_DBPASS';

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'KEYSTONE_DBPASS';

flush privileges;

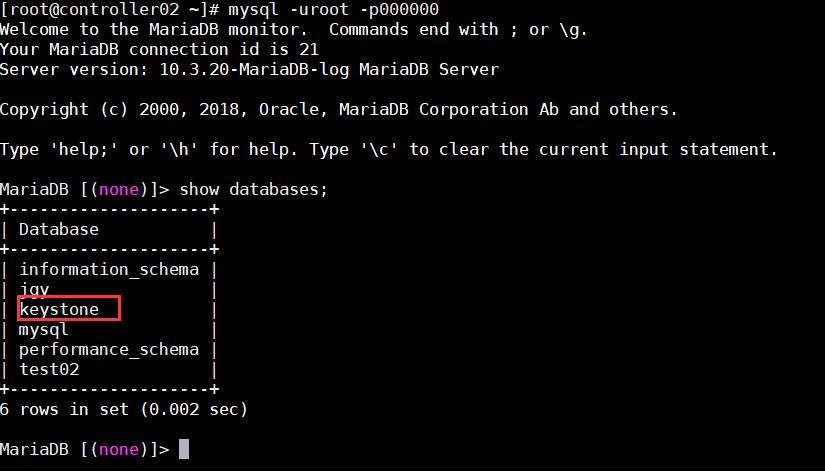

exit

On controller02, check that the database has been replicated.

Replication succeeded.
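Every service in this part repeats the same CREATE DATABASE and GRANT pattern. Below is a sketch of a hypothetical helper that prints those statements for one database, user and password, so they can be piped into mysql. It uses CREATE DATABASE IF NOT EXISTS, so re-running it is harmless:

```shell
#!/usr/bin/env bash
# Print the SQL that creates one service database and grants its user access
# from localhost and from any host. Arguments: database, user, password.
db_grant_sql() {
  local db="$1" user="$2" pass="$3"
  printf 'CREATE DATABASE IF NOT EXISTS %s;\n' "$db"
  printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'localhost' IDENTIFIED BY '%s';\n" "$db" "$user" "$pass"
  # %% prints a literal % (the "any host" wildcard).
  printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%%' IDENTIFIED BY '%s';\n" "$db" "$user" "$pass"
  printf 'FLUSH PRIVILEGES;\n'
}

# Usage:
#   db_grant_sql glance glance GLANCE_DBPASS | mysql -uroot -p000000
#   for db in nova_api nova nova_cell0; do db_grant_sql "$db" nova NOVA_DBPASS; done | mysql -uroot -p000000
```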

#####All controller nodes#####

yum install openstack-keystone httpd mod_wsgi -y

yum install openstack-utils -y

yum install python-openstackclient -y

cp /etc/keystone/keystone.conf{,.bak}

egrep -v '^$|^#' /etc/keystone/keystone.conf.bak >/etc/keystone/keystone.conf

openstack-config --set /etc/keystone/keystone.conf cache backend oslo_cache.memcache_pool

openstack-config --set /etc/keystone/keystone.conf cache enabled true

openstack-config --set /etc/keystone/keystone.conf cache memcache_servers controller01:11211,controller02:11211

openstack-config --set /etc/keystone/keystone.conf database connection mysql+pymysql://keystone:KEYSTONE_DBPASS@havip/keystone

openstack-config --set /etc/keystone/keystone.conf token provider fernet

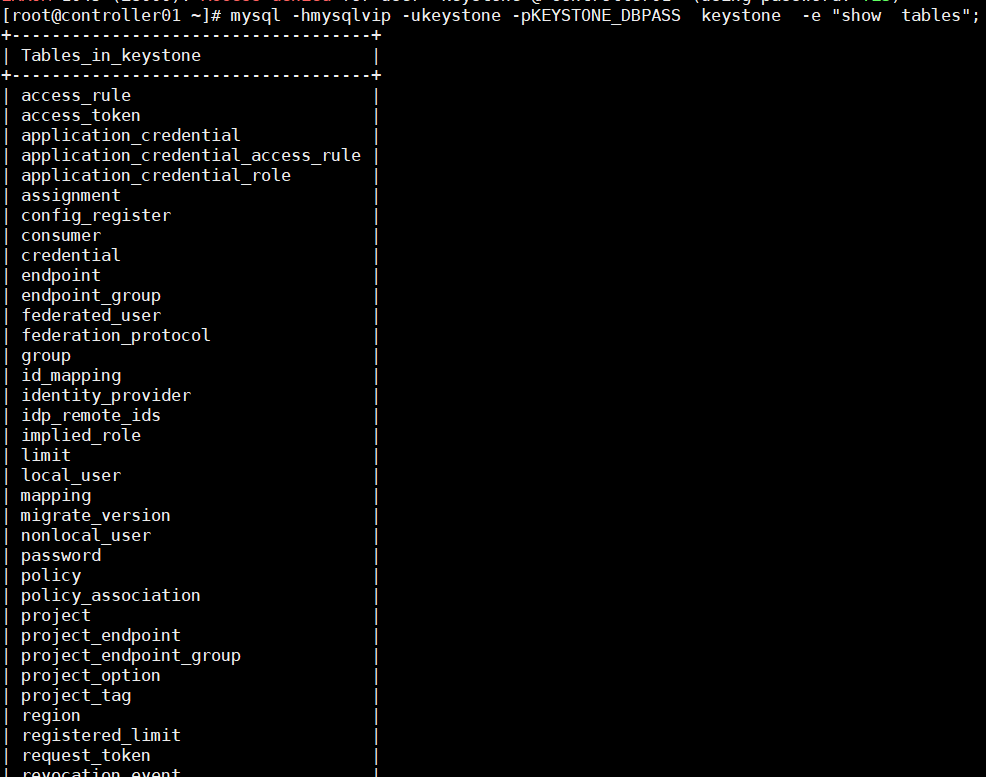

1.2 Sync the database

#####controller01#####

#sync the database

su -s /bin/sh -c "keystone-manage db_sync" keystone

#check that the sync succeeded

mysql -hhavip -ukeystone -pKEYSTONE_DBPASS keystone -e "show tables";

#####controller01#####

#generate the keys and their directories under /etc/keystone/

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

#copy the initialized keys to the other controller node

scp -rp /etc/keystone/fernet-keys /etc/keystone/credential-keys controller02:/etc/keystone/

#####controller02#####

After copying, fix the ownership of the key directories on controller02

chown -R keystone:keystone /etc/keystone/credential-keys/

chown -R keystone:keystone /etc/keystone/fernet-keys/

1.3 Bootstrap the Identity service

#####controller01#####

keystone-manage bootstrap --bootstrap-password admin \

--bootstrap-admin-url http://havip:5000/v3/ \

--bootstrap-internal-url http://havip:5000/v3/ \

--bootstrap-public-url http://havip:5000/v3/ \

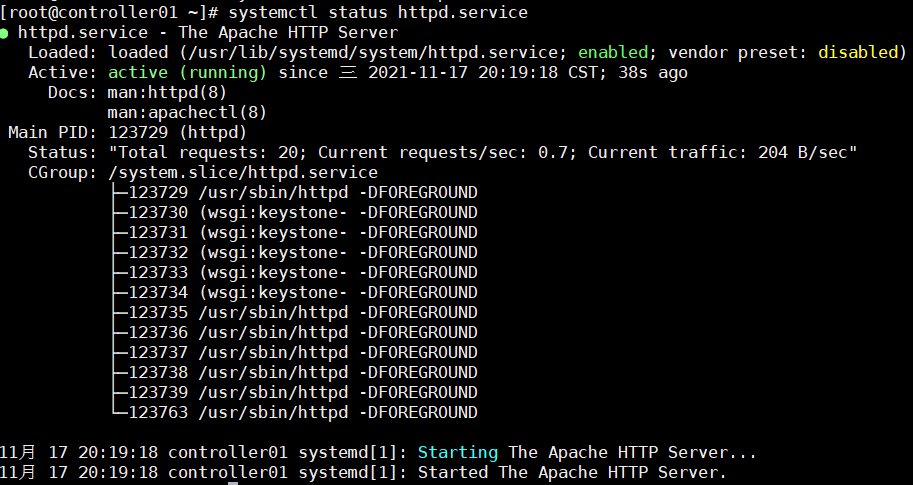

--bootstrap-region-id RegionOne1.4、配置Http Server

#####controller01#####

cp /etc/httpd/conf/httpd.conf{,.bak}

sed -i "s/#ServerName www.example.com:80/ServerName ${HOSTNAME}/" /etc/httpd/conf/httpd.conf

sed -i "s/Listen\ 80/Listen\ 192.168.100.10:80/g" /etc/httpd/conf/httpd.conf

ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

sed -i "s/Listen\ 5000/Listen\ 192.168.100.10:5000/g" /etc/httpd/conf.d/wsgi-keystone.conf

sed -i "s#*:5000#192.168.100.10:5000#g" /etc/httpd/conf.d/wsgi-keystone.conf

systemctl enable httpd.service

systemctl restart httpd.service

systemctl status httpd.service

#####controller02#####

cp /etc/httpd/conf/httpd.conf{,.bak}

sed -i "s/#ServerName www.example.com:80/ServerName ${HOSTNAME}/" /etc/httpd/conf/httpd.conf

sed -i "s/Listen\ 80/Listen\ 192.168.100.11:80/g" /etc/httpd/conf/httpd.conf

ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

sed -i "s/Listen\ 5000/Listen\ 192.168.100.11:5000/g" /etc/httpd/conf.d/wsgi-keystone.conf

sed -i "s#*:5000#192.168.100.11:5000#g" /etc/httpd/conf.d/wsgi-keystone.conf

systemctl enable httpd.service

systemctl restart httpd.service

systemctl status httpd.service

1.5 Write the environment variable script

#####controller01#####

touch ~/admin-openrc.sh

cat >> ~/admin-openrc.sh<< EOF

#admin-openrc

export OS_USERNAME=admin

export OS_PASSWORD=admin

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://havip:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

EOF

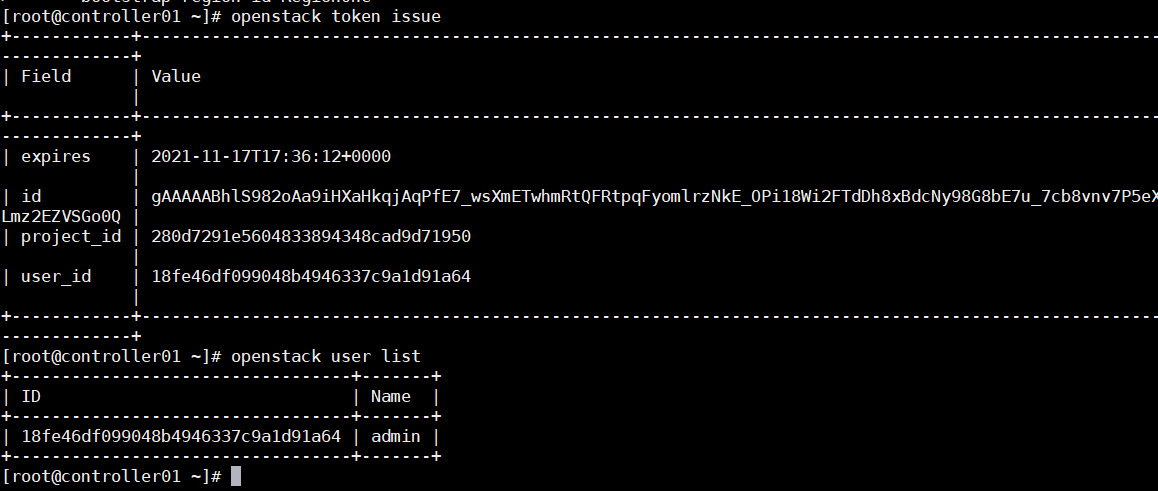

source ~/admin-openrc.sh

scp -rp ~/admin-openrc.sh controller02:~/

scp -rp ~/admin-openrc.sh compute01:~/

scp -rp ~/admin-openrc.sh compute02:~/

#verify

openstack token issue
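If openstack token issue complains about missing authentication options, a likely cause is that admin-openrc.sh has not been sourced in the current shell. Below is a small check with a hypothetical helper name. It prints any of the script's authentication variables that are unset. OS_IMAGE_API_VERSION is left out because it is not needed to obtain a token:

```shell
#!/usr/bin/env bash
# Print the name of every admin-openrc.sh authentication variable that is
# empty or unset in the current shell (uses bash indirect expansion).
missing_os_vars() {
  local v
  for v in OS_USERNAME OS_PASSWORD OS_PROJECT_NAME OS_USER_DOMAIN_NAME \
           OS_PROJECT_DOMAIN_NAME OS_AUTH_URL OS_IDENTITY_API_VERSION; do
    [ -n "${!v}" ] || echo "$v"
  done
}

# Usage: source ~/admin-openrc.sh; [ -z "$(missing_os_vars)" ] && openstack token issue
```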

1.6、创建新域、项目、用户和角色

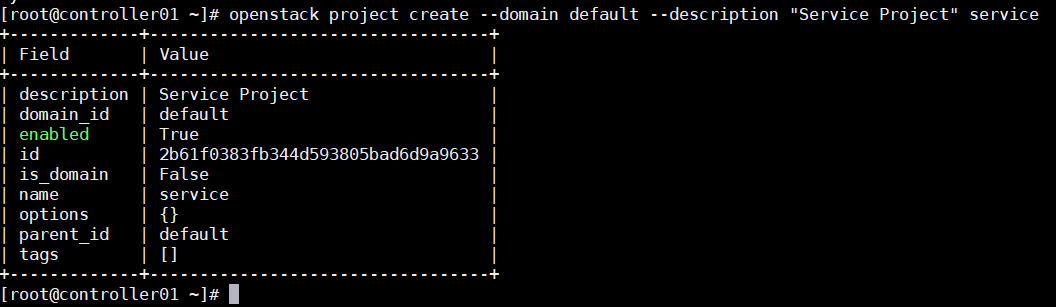

openstack project create --domain default --description "Service Project" service //使用默认域创建service项目

openstack project create --domain default --description "demo Project" demo //使用默认域创建myproject项目(no-admin使用)

openstack user create --domain default --password demo demo //创建myuser用户,需要设置密码,可设置为myuser

openstack role create user //创建myrole角色

openstack role add --project demo --user demo user

1.7 Configure the pcs resource

#####Any controller node#####

pcs resource create openstack-keystone systemd:httpd clone interleave=true

pcs resource

2. Glance

2.1 Create the database, user, role and endpoints

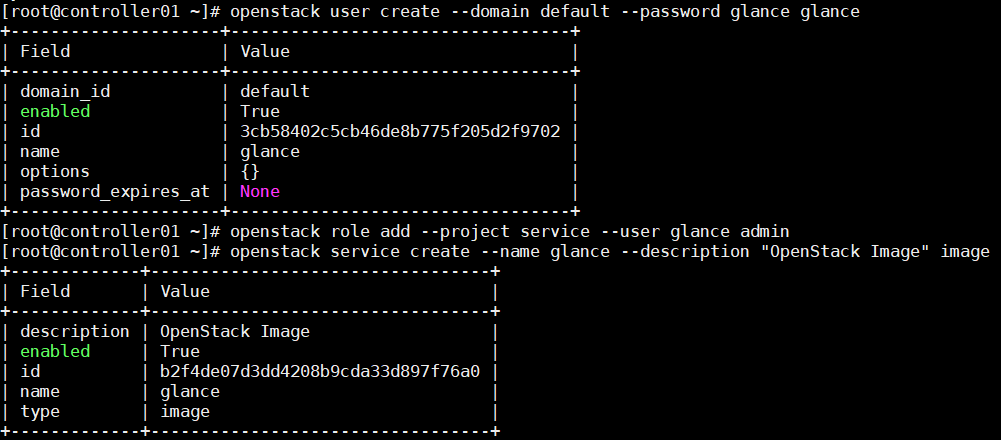

#####Any controller node#####

#create the database

mysql -u root -p000000

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'GLANCE_DBPASS';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'GLANCE_DBPASS';

flush privileges;

exit;

Create the user and role

openstack user create --domain default --password glance glance #create the glance user with password glance

openstack role add --project service --user glance admin

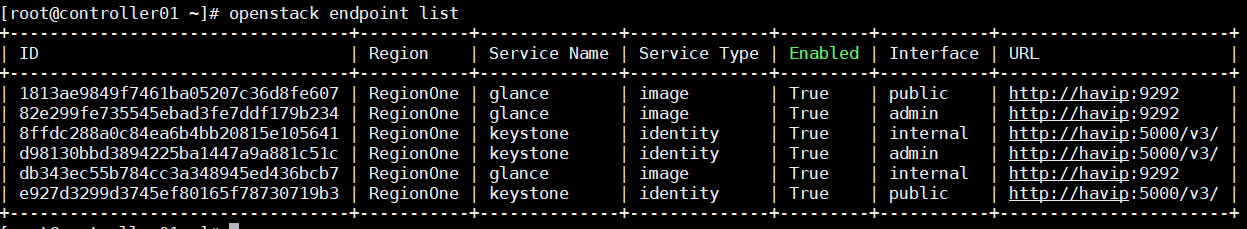

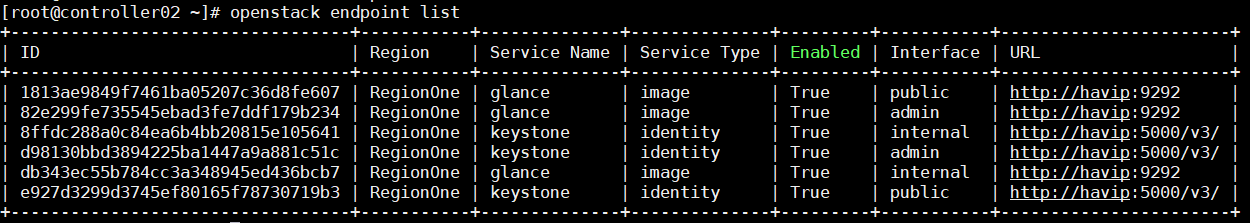

openstack service create --name glance --description "OpenStack Image" image #create the glance service entity

Create the glance endpoints

openstack endpoint create --region RegionOne image public http://havip:9292

openstack endpoint create --region RegionOne image internal http://havip:9292

openstack endpoint create --region RegionOne image admin http://havip:9292
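The three endpoint commands differ only in the interface name. Below is a sketch of a hypothetical helper that prints them for one service, so they can be reviewed and then run with admin-openrc.sh sourced. The same helper also fits the Placement endpoints on port 8778:

```shell
#!/usr/bin/env bash
# Print the public/internal/admin endpoint commands for one service.
# Arguments: service type (e.g. image), endpoint URL.
endpoint_cmds() {
  local service="$1" url="$2" iface
  for iface in public internal admin; do
    printf 'openstack endpoint create --region RegionOne %s %s %s\n' "$service" "$iface" "$url"
  done
}

# Usage (review the output first, then pipe it to a shell):
#   endpoint_cmds image http://havip:9292 | sh
#   endpoint_cmds placement http://havip:8778 | sh
```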

2.2 Install and configure Glance

#####All controller nodes#####

yum install openstack-glance -y

mkdir /var/lib/glance/images/

chown glance:nobody /var/lib/glance/images

Back up the Glance configuration file

cp /etc/glance/glance-api.conf{,.bak}

egrep -v '^$|^#' /etc/glance/glance-api.conf.bak >/etc/glance/glance-api.conf

controller01 parameters

openstack-config --set /etc/glance/glance-api.conf DEFAULT bind_host 192.168.100.10

openstack-config --set /etc/glance/glance-api.conf database connection mysql+pymysql://glance:GLANCE_DBPASS@havip/glance

openstack-config --set /etc/glance/glance-api.conf glance_store stores file,http

openstack-config --set /etc/glance/glance-api.conf glance_store default_store file

openstack-config --set /etc/glance/glance-api.conf glance_store filesystem_store_datadir /var/lib/glance/images/

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken www_authenticate_uri http://havip:5000

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_url http://havip:5000

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken memcached_servers controller01:11211,controller02:11211

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_type password

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_name service

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken username glance

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken password glance

openstack-config --set /etc/glance/glance-api.conf paste_deploy flavor keystone

controller02 configuration: copy the file from controller01, then change bind_host on controller02

scp -rp /etc/glance/glance-api.conf controller02:/etc/glance/glance-api.conf

openstack-config --set /etc/glance/glance-api.conf DEFAULT bind_host 192.168.100.11

2.3 Sync the database

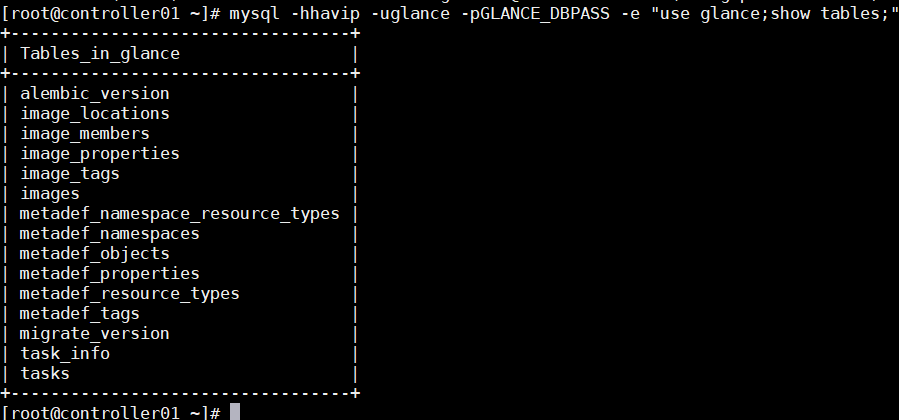

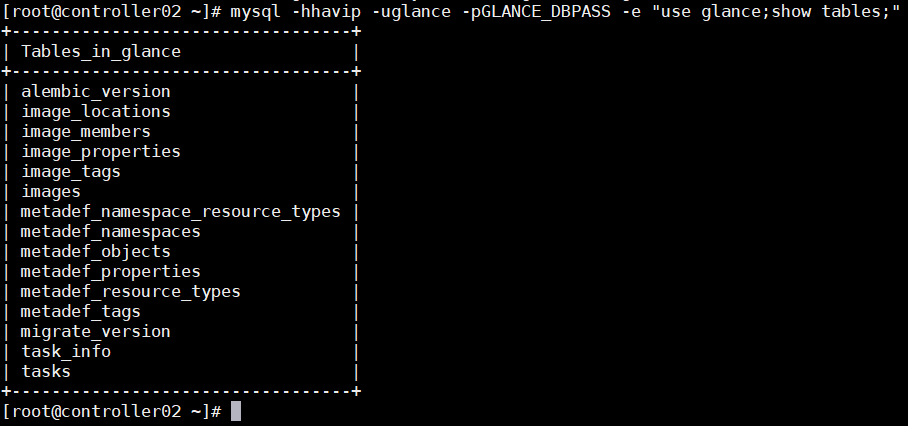

#####Any controller#####

su -s /bin/sh -c "glance-manage db_sync" glance

Check the tables

mysql -hhavip -uglance -pGLANCE_DBPASS -e "use glance;show tables;"

2.4 Start the service

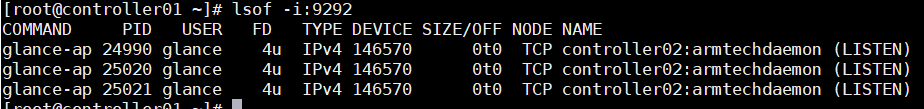

#####All controller nodes#####

systemctl enable openstack-glance-api.service

systemctl restart openstack-glance-api.service

systemctl status openstack-glance-api.service

lsof -i:9292

2.5 Download a CirrOS image to verify Glance

######Any controller node#####

cd ~ && wget -c http://download.cirros-cloud.net/0.5.1/cirros-0.5.1-x86_64-disk.img

openstack image create --file ~/cirros-0.5.1-x86_64-disk.img --disk-format qcow2 --container-format bare --public cirros-qcow2

openstack image list

2.6 Add the pcs resource

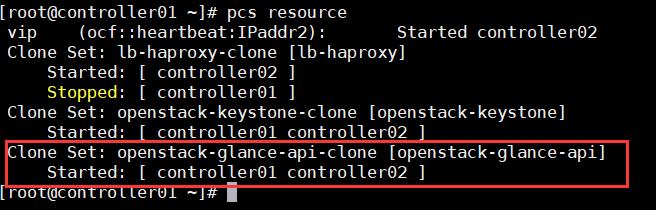

#####Any controller#####

pcs resource create openstack-glance-api systemd:openstack-glance-api clone interleave=true

pcs resource

3. Placement

3.1 Configure the Placement database

#####Any controller#####

mysql -u root -p000000

CREATE DATABASE placement;

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' IDENTIFIED BY 'PLACEMENT_DBPASS';

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'PLACEMENT_DBPASS';

flush privileges;

exit;

Create the placement user and add the role

openstack user create --domain default --password placement placement #password: placement

openstack role add --project service --user placement admin

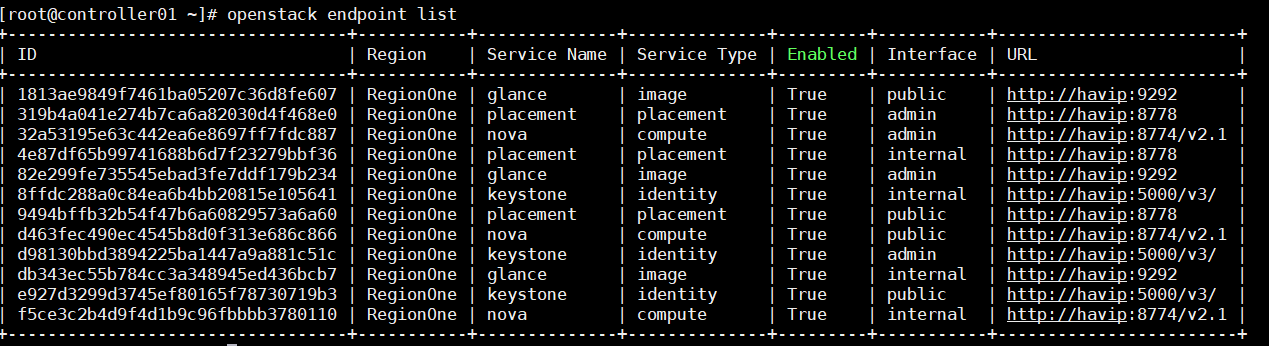

openstack service create --name placement --description "Placement API" placement

Create the endpoints

openstack endpoint create --region RegionOne placement public http://havip:8778

openstack endpoint create --region RegionOne placement internal http://havip:8778

openstack endpoint create --region RegionOne placement admin http://havip:8778

3.2 Install and configure the Placement package

######All controllers#####

yum install openstack-placement-api -y

Back up the Placement configuration

cp /etc/placement/placement.conf /etc/placement/placement.conf.bak

grep -Ev '^$|^#' /etc/placement/placement.conf.bak > /etc/placement/placement.conf

Edit the configuration file

openstack-config --set /etc/placement/placement.conf placement_database connection mysql+pymysql://placement:PLACEMENT_DBPASS@havip/placement

openstack-config --set /etc/placement/placement.conf api auth_strategy keystone

openstack-config --set /etc/placement/placement.conf keystone_authtoken auth_url http://havip:5000/v3

openstack-config --set /etc/placement/placement.conf keystone_authtoken memcached_servers controller01:11211,controller02:11211

openstack-config --set /etc/placement/placement.conf keystone_authtoken auth_type password

openstack-config --set /etc/placement/placement.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/placement/placement.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/placement/placement.conf keystone_authtoken project_name service

openstack-config --set /etc/placement/placement.conf keystone_authtoken username placement

openstack-config --set /etc/placement/placement.conf keystone_authtoken password placement

scp /etc/placement/placement.conf controller02:/etc/placement/

3.3. Synchronize the database

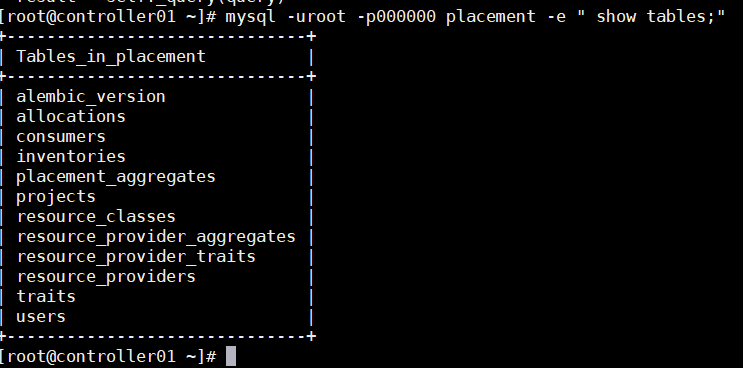

#####Any controller#####

su -s /bin/sh -c "placement-manage db sync" placement   # the output can be ignored

mysql -uroot -p000000 placement -e " show tables;"

3.4. Modify the Apache configuration file of Placement

#####All controllers#####

# Back up the 00-placement-api configuration

## On controller01

cp /etc/httpd/conf.d/00-placement-api.conf{,.bak}

sed -i "s/Listen\ 8778/Listen\ 192.168.100.10:8778/g" /etc/httpd/conf.d/00-placement-api.conf

sed -i "s/*:8778/192.168.100.10:8778/g" /etc/httpd/conf.d/00-placement-api.conf

## On controller02

cp /etc/httpd/conf.d/00-placement-api.conf{,.bak}

sed -i "s/Listen\ 8778/Listen\ 192.168.100.11:8778/g" /etc/httpd/conf.d/00-placement-api.conf

sed -i "s/*:8778/192.168.100.11:8778/g" /etc/httpd/conf.d/00-placement-api.conf

Append the following to 00-placement-api.conf:

vim /etc/httpd/conf.d/00-placement-api.conf

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

Restart httpd:

systemctl restart httpd.service

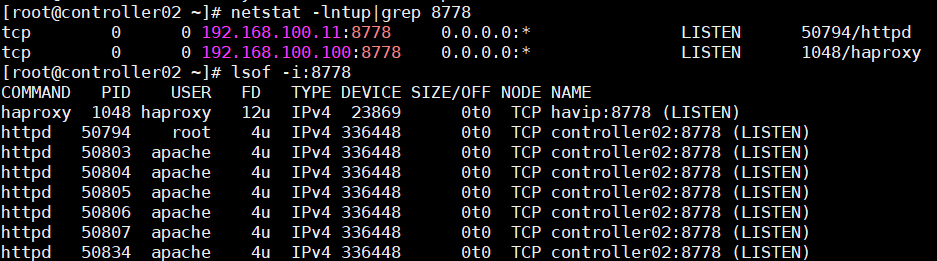

netstat -lntup|grep 8778

lsof -i:8778

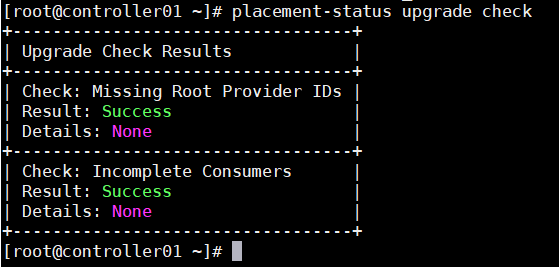
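The Listen rewrite above can also be scripted per node, together with a quick check of how port 8778 ends up bound. This is a sketch under assumptions: `bind_placement_listen` and `port_binding` are hypothetical helpers; the stock file is assumed to contain the lines `Listen 8778` and `<VirtualHost *:8778>`; and the reason for binding each node's own address is presumably that haproxy listens on the VIP with the same port, which a wildcard bind by httpd would typically prevent.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (sketch, not from the original article).

# bind_placement_listen FILE ADDR: rewrite the stock "Listen 8778" and
# "<VirtualHost *:8778>" lines so httpd binds only ADDR:8778. The first
# backup is kept as FILE.bak and is not overwritten on re-runs.
bind_placement_listen() {
  local file="$1" addr="$2"
  [ -e "$file.bak" ] || cp -p "$file" "$file.bak"
  sed -i \
    -e "s/^Listen 8778\$/Listen ${addr}:8778/" \
    -e "s/<VirtualHost \\*:8778>/<VirtualHost ${addr}:8778>/" \
    "$file"
}

# port_binding PORT: read "ss -lnt" or "netstat -lnt" output on stdin (column 4
# is the local address in both) and print "wildcard", "specific: ADDR..." or "none".
port_binding() {
  awk -v port="$1" '
    $4 ~ (":" port "$") {
      addr = substr($4, 1, length($4) - length(port) - 1)
      if (addr == "0.0.0.0" || addr == "*" || addr == "[::]" || addr == "::")
        wild = 1
      else
        specific = specific " " addr
    }
    END {
      if (wild) print "wildcard"
      else if (specific != "") print "specific:" specific
      else print "none"
    }
  '
}
```

On controller01, for example: `bind_placement_listen /etc/httpd/conf.d/00-placement-api.conf 192.168.100.10`, then after restarting httpd, `ss -lnt | port_binding 8778` should report `specific:` with the node address (plus the VIP once haproxy is running), not `wildcard`.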

3.5. Verify the health of Placement

placement-status upgrade check

3.6. Set up the pcs resource

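The httpd service was already added as a pcs resource in the Keystone section. Because Placement also runs under httpd, it does not need to be added again; log in to the haproxy web interface to confirm that Placement has been added successfully.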

4. Nova controller-node cluster deployment

4.1. Create and configure the Nova databases

#####Any controller node#####

# Create the nova_api, nova and nova_cell0 databases and grant privileges

mysql -u root -p000000

CREATE DATABASE nova_api;

CREATE DATABASE nova;

CREATE DATABASE nova_cell0;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

flush privileges;

4.2. Create the service credentials

#####Any controller node#####

# Create the nova user

openstack user create --domain default --password nova nova   # password: nova

Grant the admin role to nova:

openstack role add --project service --user nova admin

Create the compute service:

openstack service create --name nova --description "OpenStack Compute" compute

Create the endpoints:

openstack endpoint create --region RegionOne compute public http://havip:8774/v2.1

openstack endpoint create --region RegionOne compute internal http://havip:8774/v2.1

openstack endpoint create --region RegionOne compute admin http://havip:8774/v2.1

4.3. Install and configure the Nova packages

#####All controller nodes#####

yum install openstack-nova-api openstack-nova-conductor openstack-nova-novncproxy openstack-nova-scheduler -y

# nova-api (main Nova API service)
# nova-scheduler (Nova scheduling service)
# nova-conductor (Nova database service, provides database access)
# nova-novncproxy (Nova VNC proxy, provides the instance console)

#####controller01#####

Back up the configuration file /etc/nova/nova.conf:

cp -a /etc/nova/nova.conf{,.bak}

grep -Ev '^$|#' /etc/nova/nova.conf.bak > /etc/nova/nova.conf

openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 192.168.100.10

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron true

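openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

The RabbitMQ VIP port configured in haproxy is 5673, but the haproxy-fronted RabbitMQ is not used for now; Nova connects directly to the RabbitMQ cluster. The haproxy variant below uses an address and password that do not belong to this environment and is kept only as a commented-out example:

#openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:Zx*****@10.15.253.88:5673

openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:000000@controller01:5672,openstack:000000@controller02:5672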
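The RabbitMQ URL and the memcached server list recur in several files in this guide, always built from the same two controllers. A small sketch that derives both strings from one host list; `join_hosts` and `rabbit_url` are hypothetical helper names, and the RabbitMQ port is assumed to be the default 5672 used above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: build the comma-separated strings used in the configs
# from one list of controller host names.

# join_hosts PORT HOST... -> "host1:PORT,host2:PORT"
join_hosts() {
  local port="$1" out="" h
  shift
  for h in "$@"; do
    out="${out:+$out,}${h}:${port}"
  done
  printf '%s\n' "$out"
}

# rabbit_url USER PASS HOST... -> "rabbit://USER:PASS@host1:5672,USER:PASS@host2:5672"
rabbit_url() {
  local user="$1" pass="$2" out="" h
  shift 2
  for h in "$@"; do
    out="${out:+$out,}${user}:${pass}@${h}:5672"
  done
  printf 'rabbit://%s\n' "$out"
}
```

For example: `openstack-config --set /etc/nova/nova.conf DEFAULT transport_url "$(rabbit_url openstack 000000 controller01 controller02)"`. A password containing characters such as `@`, `:` or `/` would need URL encoding, which this sketch does not do.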

openstack-config --set /etc/nova/nova.conf DEFAULT osapi_compute_listen_port 8774

openstack-config --set /etc/nova/nova.conf DEFAULT metadata_listen_port 8775

openstack-config --set /etc/nova/nova.conf DEFAULT metadata_listen 192.168.100.10

openstack-config --set /etc/nova/nova.conf DEFAULT osapi_compute_listen 192.168.100.10

openstack-config --set /etc/nova/nova.conf api auth_strategy keystone

openstack-config --set /etc/nova/nova.conf api_database connection mysql+pymysql://nova:NOVA_DBPASS@havip/nova_api

openstack-config --set /etc/nova/nova.conf cache backend oslo_cache.memcache_pool

openstack-config --set /etc/nova/nova.conf cache enabled True

openstack-config --set /etc/nova/nova.conf cache memcache_servers controller01:11211,controller02:11211

openstack-config --set /etc/nova/nova.conf database connection mysql+pymysql://nova:NOVA_DBPASS@havip/nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken www_authenticate_uri http://havip:5000/v3

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://havip:5000/v3

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers controller01:11211,controller02:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password nova

openstack-config --set /etc/nova/nova.conf vnc enabled true

openstack-config --set /etc/nova/nova.conf vnc server_listen 192.168.100.10

openstack-config --set /etc/nova/nova.conf vnc server_proxyclient_address 192.168.100.10

openstack-config --set /etc/nova/nova.conf vnc novncproxy_host 192.168.100.10

openstack-config --set /etc/nova/nova.conf vnc novncproxy_port 6080

openstack-config --set /etc/nova/nova.conf glance api_servers http://havip:9292

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

openstack-config --set /etc/nova/nova.conf placement region_name RegionOne

openstack-config --set /etc/nova/nova.conf placement project_domain_name Default

openstack-config --set /etc/nova/nova.conf placement project_name service

openstack-config --set /etc/nova/nova.conf placement auth_type password

openstack-config --set /etc/nova/nova.conf placement user_domain_name Default

openstack-config --set /etc/nova/nova.conf placement auth_url http://havip:5000/v3

openstack-config --set /etc/nova/nova.conf placement username placement

openstack-config --set /etc/nova/nova.conf placement password placement

#####controller01#####

# Copy the configuration file

scp -rp /etc/nova/nova.conf controller02:/etc/nova/

#####controller02#####

sed -i "s#192.168.100.10#192.168.100.11#g" /etc/nova/nova.conf
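The substitution above treats the dots in the address as regex wildcards and matches substrings, so 192.168.100.10 would also match inside 192.168.100.100 (the VIP) if that address ever appeared literally in the file. A more defensive variant, as a sketch: `retarget_ip` is a hypothetical helper, and it relies on GNU sed (`-i` and `\b` are GNU extensions).

```shell
#!/usr/bin/env bash
# Hypothetical helper: retarget_ip OLD NEW FILE
# Replaces the IPv4 address OLD with NEW in FILE (GNU sed: -i and \b are GNU extensions).
retarget_ip() {
  local old="$1" new="$2" file="$3" old_re
  # Escape the dots so they only match literal dots: 192.168.100.10 -> 192\.168\.100\.10
  old_re="$(printf '%s' "$old" | sed 's/\./\\./g')"
  # \b requires a word boundary on both sides, so .10 is not matched inside .100 or .101
  sed -i "s/\\b${old_re}\\b/${new}/g" "$file"
}
```

On controller02 this would be `retarget_ip 192.168.100.10 192.168.100.11 /etc/nova/nova.conf`; the same call shape fits the later neutron.conf and compute-node copies.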

4.4. Synchronize and verify the databases

#####Any controller#####

# Synchronize the nova-api database

su -s /bin/sh -c "nova-manage api_db sync" nova

Register the cell0 database:

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

Create the cell1 cell:

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

Synchronize the nova database:

su -s /bin/sh -c "nova-manage db sync" nova

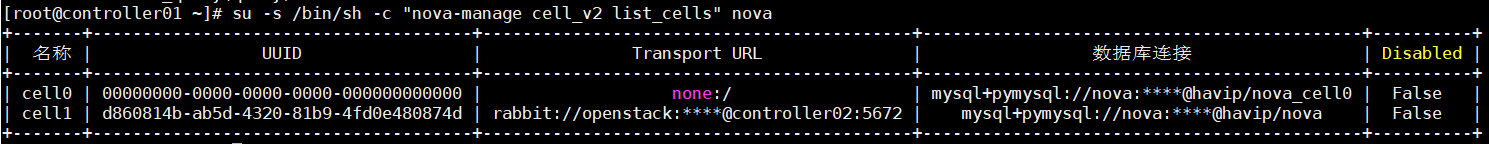

Verify that nova cell0 and cell1 are registered correctly:

su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

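Cells are a Nova deployment scheme that partitions compute nodes to relieve database and message-queue bottlenecks. Cells v2 was introduced in the Newton release and became a mandatory component in Ocata.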

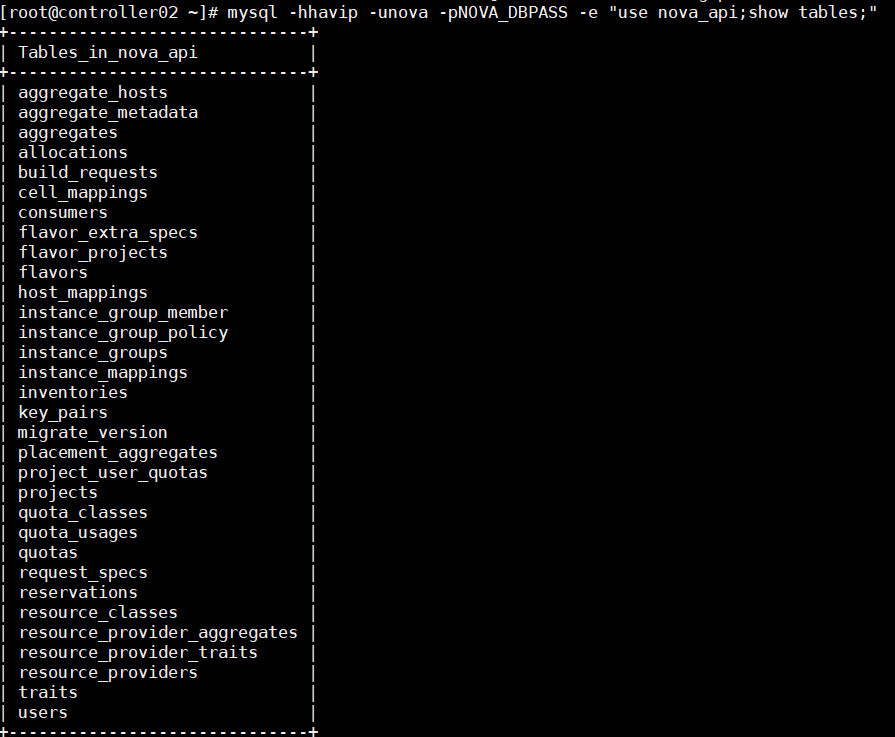

# Verify the databases

mysql -hhavip -unova -pNOVA_DBPASS -e "use nova_api;show tables;"

mysql -hhavip -unova -pNOVA_DBPASS -e "use nova;show tables;"

mysql -hhavip -unova -pNOVA_DBPASS -e "use nova_cell0;show tables;"

4.5. Start the Nova services and enable them at boot

#####All controller nodes#####

systemctl enable openstack-nova-api.service

systemctl enable openstack-nova-scheduler.service

systemctl enable openstack-nova-conductor.service

systemctl enable openstack-nova-novncproxy.service

systemctl restart openstack-nova-api.service

systemctl restart openstack-nova-scheduler.service

systemctl restart openstack-nova-conductor.service

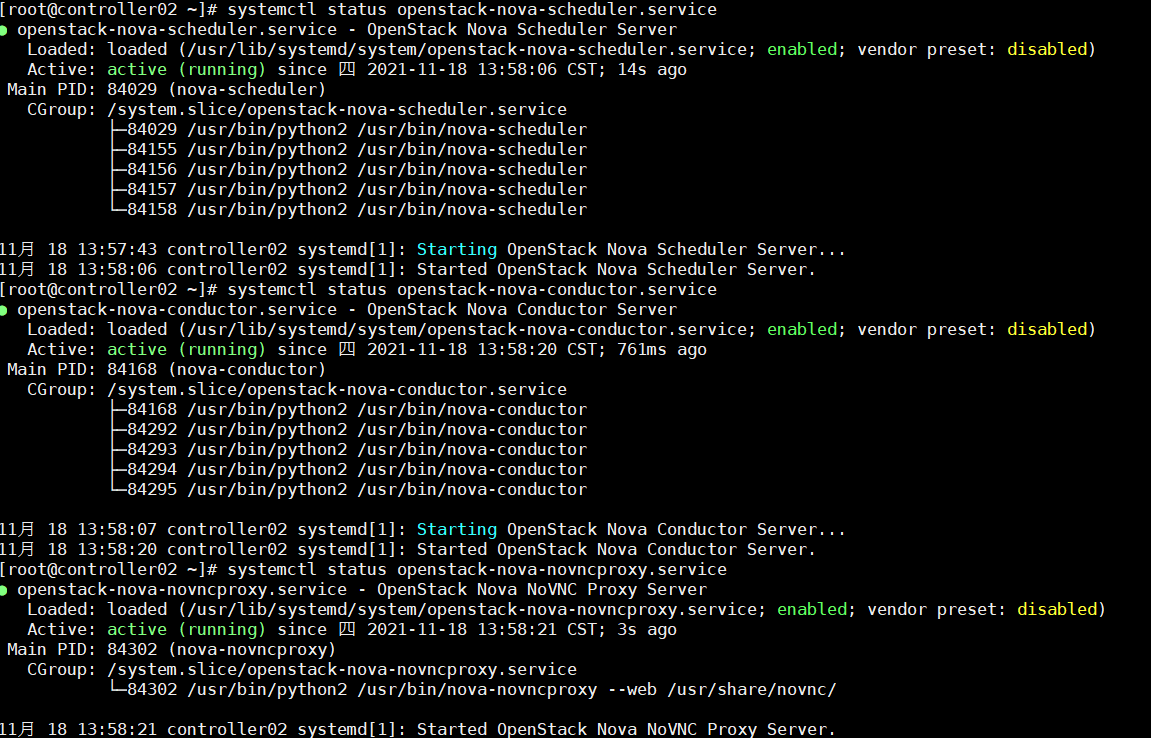

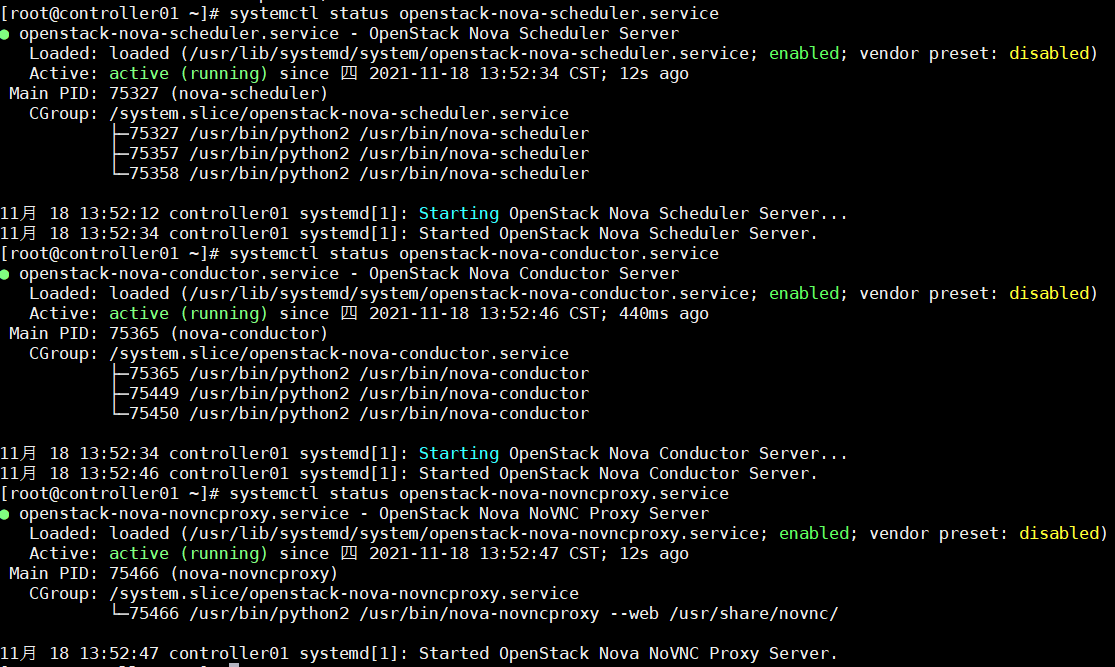

systemctl restart openstack-nova-novncproxy.service

systemctl status openstack-nova-api.service

systemctl status openstack-nova-scheduler.service

systemctl status openstack-nova-conductor.service

systemctl status openstack-nova-novncproxy.service

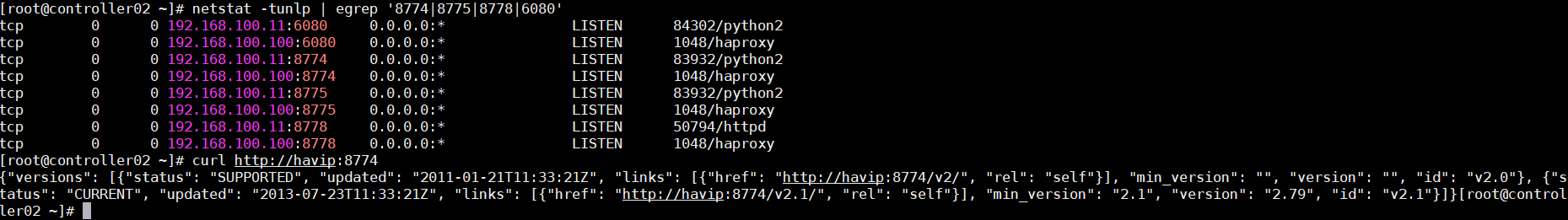

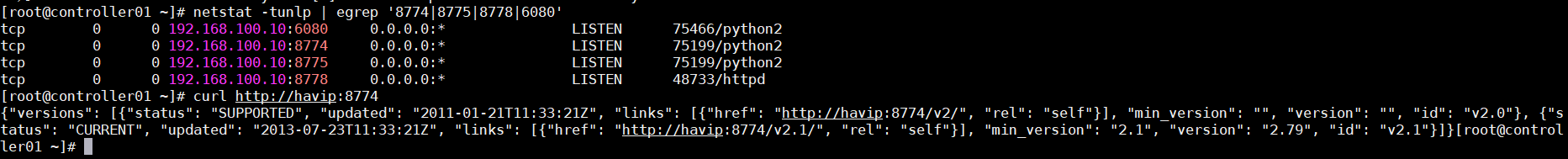

netstat -tunlp | egrep '8774|8775|8778|6080'

curl http://havip:8774
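Right after a restart, the API behind the VIP may not answer yet, so a single curl can fail spuriously. A generic retry sketch; the helper name `retry` is hypothetical and not part of the original steps.

```shell
#!/usr/bin/env bash
# Hypothetical helper: retry ATTEMPTS DELAY COMMAND [ARGS...]
# Runs COMMAND until it succeeds or ATTEMPTS runs are used up, sleeping DELAY
# seconds between runs. Returns 0 on the first success, 1 otherwise.
retry() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    "$@" && return 0
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}
```

For example, `retry 10 3 curl -sf http://havip:8774` keeps trying for roughly half a minute; `-f` makes curl return an error status on HTTP error responses instead of printing the error page.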

4.6. Verification

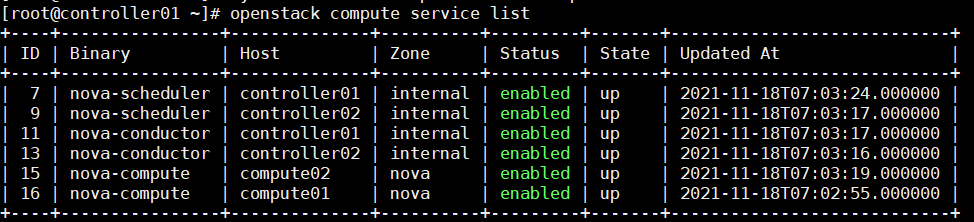

#####controller#####

# List the Nova service components and check their state

openstack compute service list

Show the API endpoints:

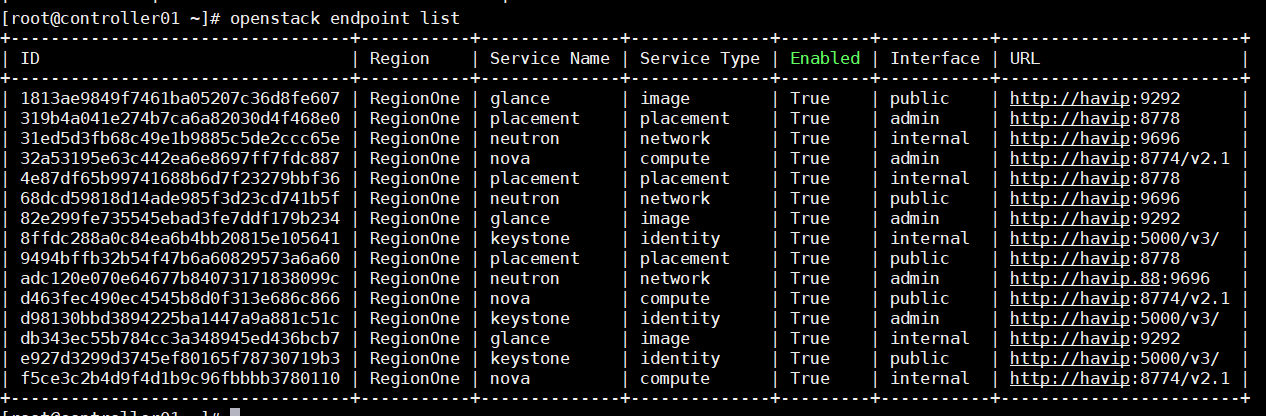

openstack catalog list

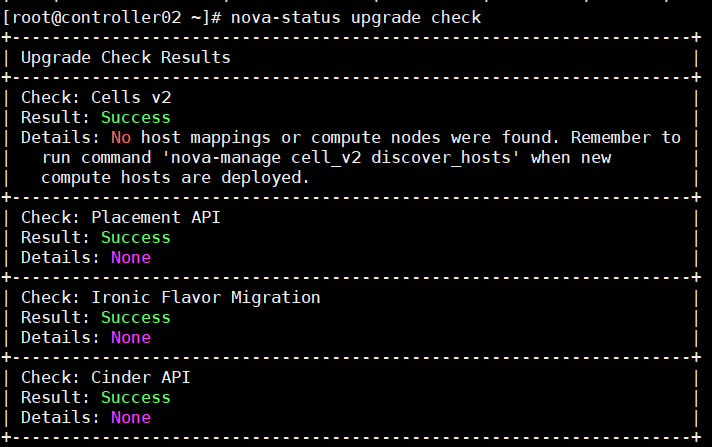

Check the cells and the Placement API; every check should report Success:

nova-status upgrade check

4.7. Set up the pcs resources

#####Any controller node#####

pcs resource create openstack-nova-api systemd:openstack-nova-api clone interleave=true

pcs resource create openstack-nova-scheduler systemd:openstack-nova-scheduler clone interleave=true

pcs resource create openstack-nova-conductor systemd:openstack-nova-conductor clone interleave=true

pcs resource create openstack-nova-novncproxy systemd:openstack-nova-novncproxy clone interleave=true

It is recommended to run stateless services such as openstack-nova-api, openstack-nova-conductor and openstack-nova-novncproxy in active/active mode, and services such as openstack-nova-scheduler in active/passive mode. Note that the commands above create all four services, including openstack-nova-scheduler, as clones (active/active); a resource created without "clone" runs on only one node at a time.
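The pcs commands follow one pattern, and the recommendation above distinguishes cloned (active/active) from single-instance (active/passive) resources. A minimal sketch with hypothetical helpers `create_clones` and `create_single`; setting `DRY_RUN=1` prints the pcs commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (sketch). If DRY_RUN is set, pcs commands are only printed.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Cloned resource: by default Pacemaker starts one copy per node (active/active).
create_clones() {
  local svc
  for svc in "$@"; do
    run pcs resource create "$svc" "systemd:$svc" clone interleave=true || return 1
  done
}

# Plain resource: Pacemaker runs it on one node at a time (active/passive).
create_single() {
  local svc
  for svc in "$@"; do
    run pcs resource create "$svc" "systemd:$svc" || return 1
  done
}
```

To follow the recommendation literally, one would run `create_clones openstack-nova-api openstack-nova-conductor openstack-nova-novncproxy` and `create_single openstack-nova-scheduler` instead of the four commands above.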

5. Nova compute-node cluster deployment

5.1. Install Nova

compute01:192.168.100.20

compute02:192.168.100.21

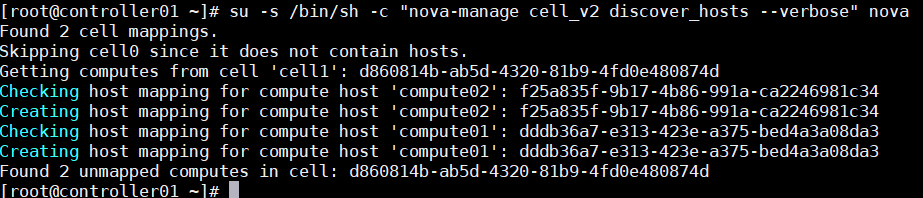

#####All compute nodes#####

yum install openstack-nova-compute -y

yum install openstack-utils -y

Back up the configuration file /etc/nova/nova.conf:

cp /etc/nova/nova.conf{,.bak}

grep -Ev '^$|#' /etc/nova/nova.conf.bak > /etc/nova/nova.conf

Check whether the compute node supports hardware acceleration for virtual machines:

egrep -c '(vmx|svm)' /proc/cpuinfo

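Output in this environment:

0

If the command returns a non-zero value, the compute node supports hardware acceleration and the configuration below is not needed.

If it returns 0, the compute node does not support hardware acceleration, and libvirt must be configured to use QEMU instead of KVM.

This is set in the [libvirt] section of /etc/nova/nova.conf; because this test environment runs on virtual machines, virt_type is set to qemu.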
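The check and the resulting choice of virt_type can be combined. A sketch using the hypothetical helper name `pick_virt_type`: it reads /proc/cpuinfo by default and prints kvm when the vmx (Intel VT-x) or svm (AMD-V) flag is present, otherwise qemu.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the libvirt virt_type to use on this node.
# CPUINFO defaults to /proc/cpuinfo; a file path can be passed for testing.
pick_virt_type() {
  local cpuinfo="${1:-/proc/cpuinfo}" n
  # grep -c prints 0 (and exits 1) when nothing matches; "|| true" keeps set -e happy.
  n="$(grep -Ec '(vmx|svm)' "$cpuinfo" || true)"
  if [ "${n:-0}" -gt 0 ]; then
    echo kvm
  else
    echo qemu
  fi
}
```

`openstack-config --set /etc/nova/nova.conf libvirt virt_type "$(pick_virt_type)"` would then replace the fixed qemu value set in 5.2.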

5.2. Deployment and configuration

#####compute01#####

openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

openstack-config --set /etc/nova/nova.conf DEFAULT transport_url rabbit://openstack:000000@controller01:5672,openstack:000000@controller02:5672

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 192.168.100.20

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron true

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf api auth_strategy keystone

openstack-config --set /etc/nova/nova.conf keystone_authtoken www_authenticate_uri http://havip:5000

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://havip:5000

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers controller01:11211,controller02:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name Default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password nova

openstack-config --set /etc/nova/nova.conf libvirt virt_type qemu

openstack-config --set /etc/nova/nova.conf vnc enabled true

openstack-config --set /etc/nova/nova.conf vnc server_listen 0.0.0.0

openstack-config --set /etc/nova/nova.conf vnc server_proxyclient_address 192.168.100.20

openstack-config --set /etc/nova/nova.conf vnc novncproxy_base_url http://havip:6080/vnc_auto.html

openstack-config --set /etc/nova/nova.conf glance api_servers http://havip:9292

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

openstack-config --set /etc/nova/nova.conf placement region_name RegionOne

openstack-config --set /etc/nova/nova.conf placement project_domain_name Default

openstack-config --set /etc/nova/nova.conf placement project_name service

openstack-config --set /etc/nova/nova.conf placement auth_type password

openstack-config --set /etc/nova/nova.conf placement user_domain_name Default

openstack-config --set /etc/nova/nova.conf placement auth_url http://havip:5000/v3

openstack-config --set /etc/nova/nova.conf placement username placement

openstack-config --set /etc/nova/nova.conf placement password placement

Copy the configuration file:

scp -rp /etc/nova/nova.conf compute02:/etc/nova/

#####compute02#####

sed -i "s#192.168.100.20#192.168.100.21#g" /etc/nova/nova.conf

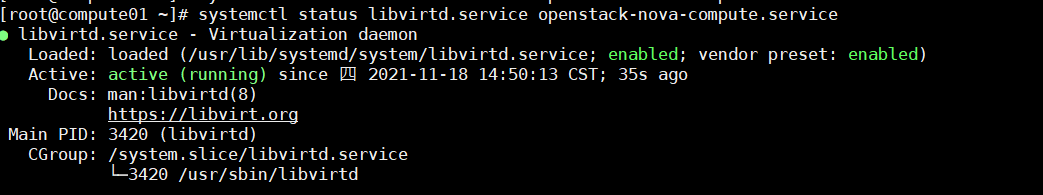

5.3. Start Nova

#####All compute nodes#####

systemctl restart libvirtd.service openstack-nova-compute.service

systemctl enable libvirtd.service openstack-nova-compute.service

systemctl status libvirtd.service openstack-nova-compute.service

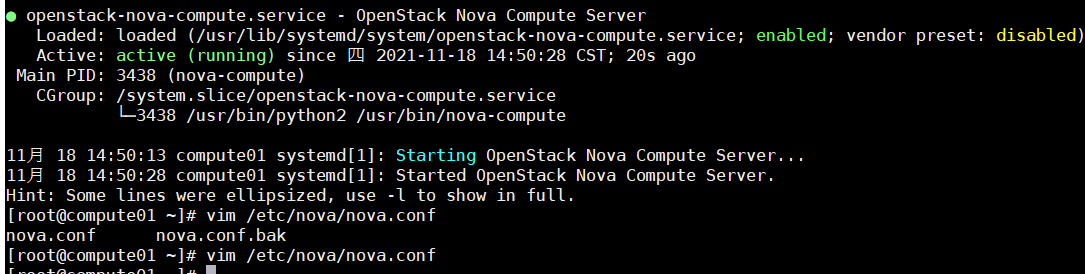

# Run on any controller node: list the compute nodes

openstack compute service list --service nova-compute

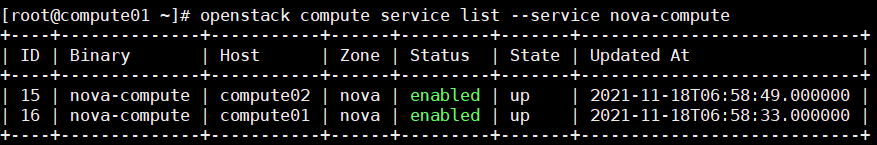

5.4. Discover the compute hosts from a controller node

#####Run on a controller node#####

su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

#####All controller nodes#####

# On all controller nodes: set the automatic host discovery interval to 10 minutes (600 s); adjust it to your environment

openstack-config --set /etc/nova/nova.conf scheduler discover_hosts_in_cells_interval 600

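systemctl restart openstack-nova-api.service openstack-nova-scheduler.service   # discover_hosts_in_cells_interval is applied by nova-scheduler

5.5. Verification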

openstack compute service list
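To spot failed services without reading the whole table, the listing can be reduced to three columns and filtered. A sketch with a hypothetical `report_down_services` function; it assumes the `-f value -c Binary -c Host -c State` output format, in which State is either up or down.

```shell
#!/usr/bin/env bash
# Hypothetical check: read "Binary Host State" lines on stdin, as produced by
#   openstack compute service list -f value -c Binary -c Host -c State
# print every service whose state is not "up", and return 1 if there was any.
report_down_services() {
  awk '
    NF >= 3 && $3 != "up" { print "DOWN: " $1 " on " $2; bad = 1 }
    END { exit bad }
  '
}
```

`openstack compute service list -f value -c Binary -c Host -c State | report_down_services` prints nothing and exits 0 when every service is up.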

6. Neutron controller-node cluster deployment

6.1. Create the Neutron database and credentials (controller nodes)

# Create the database and grant privileges

mysql -u root -p000000

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'NEUTRON_DBPASS';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'NEUTRON_DBPASS';

flush privileges;

exit;

Create the user, the role assignment and the service:

openstack user create --domain default --password neutron neutron

openstack role add --project service --user neutron admin

openstack service create --name neutron --description "OpenStack Networking" network

Create the endpoints:

openstack endpoint create --region RegionOne network public http://havip:9696

openstack endpoint create --region RegionOne network internal http://havip:9696

openstack endpoint create --region RegionOne network admin http://havip:9696

6.2. Install the Neutron server (controller nodes)

6.2.1. Configure neutron.conf

#####All controllers#####

yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables -y

yum install conntrack-tools -y

#####controller01#####

Configure the parameter files

Back up the configuration file /etc/neutron/neutron.conf:

cp -a /etc/neutron/neutron.conf{,.bak}

grep -Ev '^$|#' /etc/neutron/neutron.conf.bak > /etc/neutron/neutron.conf

Configure neutron.conf:

openstack-config --set /etc/neutron/neutron.conf DEFAULT bind_host 192.168.100.10

openstack-config --set /etc/neutron/neutron.conf DEFAULT core_plugin ml2

openstack-config --set /etc/neutron/neutron.conf DEFAULT service_plugins router

openstack-config --set /etc/neutron/neutron.conf DEFAULT allow_overlapping_ips true

Connect directly to the RabbitMQ cluster:

openstack-config --set /etc/neutron/neutron.conf DEFAULT transport_url rabbit://openstack:000000@controller01:5672,openstack:000000@controller02:5672

openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_status_changes true

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_data_changes true

Enable the L3 HA feature:

openstack-config --set /etc/neutron/neutron.conf DEFAULT l3_ha True

Maximum number of L3 agents on which an HA router is created:

openstack-config --set /etc/neutron/neutron.conf DEFAULT max_l3_agents_per_router 3

Minimum number of running L3 agents required to create an HA router:

openstack-config --set /etc/neutron/neutron.conf DEFAULT min_l3_agents_per_router 1

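DHCP HA: each network is served by 2 DHCP agents, i.e. one DHCP server on each of the two network nodes (compute01 and compute02) in this deployment: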

openstack-config --set /etc/neutron/neutron.conf DEFAULT dhcp_agents_per_network 2

openstack-config --set /etc/neutron/neutron.conf database connection mysql+pymysql://neutron:NEUTRON_DBPASS@havip/neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken www_authenticate_uri http://havip:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://havip:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers controller01:11211,controller02:11211

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password neutron

openstack-config --set /etc/neutron/neutron.conf nova auth_url http://havip:5000

openstack-config --set /etc/neutron/neutron.conf nova auth_type password

openstack-config --set /etc/neutron/neutron.conf nova project_domain_name default

openstack-config --set /etc/neutron/neutron.conf nova user_domain_name default

openstack-config --set /etc/neutron/neutron.conf nova region_name RegionOne

openstack-config --set /etc/neutron/neutron.conf nova project_name service

openstack-config --set /etc/neutron/neutron.conf nova username nova

openstack-config --set /etc/neutron/neutron.conf nova password nova

openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp

Copy the configuration file to controller02:

scp -rp /etc/neutron/neutron.conf controller02:/etc/neutron/

#####controller02#####

sed -i "s#192.168.100.10#192.168.100.11#g" /etc/neutron/neutron.conf

6.2.2. Configure ml2_conf.ini

#####All controllers#####

# Back up the configuration file

cp -a /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/plugins/ml2/ml2_conf.ini.bak > /etc/neutron/plugins/ml2/ml2_conf.ini

#####controller01#####

Edit the configuration file:

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 type_drivers flat,vlan,vxlan

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 tenant_network_types vxlan

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 mechanism_drivers linuxbridge,l2population

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 extension_drivers port_security

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_flat flat_networks provider

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_vxlan vni_ranges 1:1000

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup enable_ipset true

scp -rp /etc/neutron/plugins/ml2/ml2_conf.ini controller02:/etc/neutron/plugins/ml2/ml2_conf.ini

6.2.3. Configure Nova to interact with Neutron

#####All controllers#####

# Modify the configuration file /etc/nova/nova.conf

# On all controller nodes, configure Nova to interact with the networking service

openstack-config --set /etc/nova/nova.conf neutron url http://havip:9696

openstack-config --set /etc/nova/nova.conf neutron auth_url http://havip:5000

openstack-config --set /etc/nova/nova.conf neutron auth_type password

openstack-config --set /etc/nova/nova.conf neutron project_domain_name default

openstack-config --set /etc/nova/nova.conf neutron user_domain_name default

openstack-config --set /etc/nova/nova.conf neutron region_name RegionOne

openstack-config --set /etc/nova/nova.conf neutron project_name service

openstack-config --set /etc/nova/nova.conf neutron username neutron

openstack-config --set /etc/nova/nova.conf neutron password neutron

openstack-config --set /etc/nova/nova.conf neutron service_metadata_proxy true

openstack-config --set /etc/nova/nova.conf neutron metadata_proxy_shared_secret neutron

6.3. Synchronize the database

#####controller01#####

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

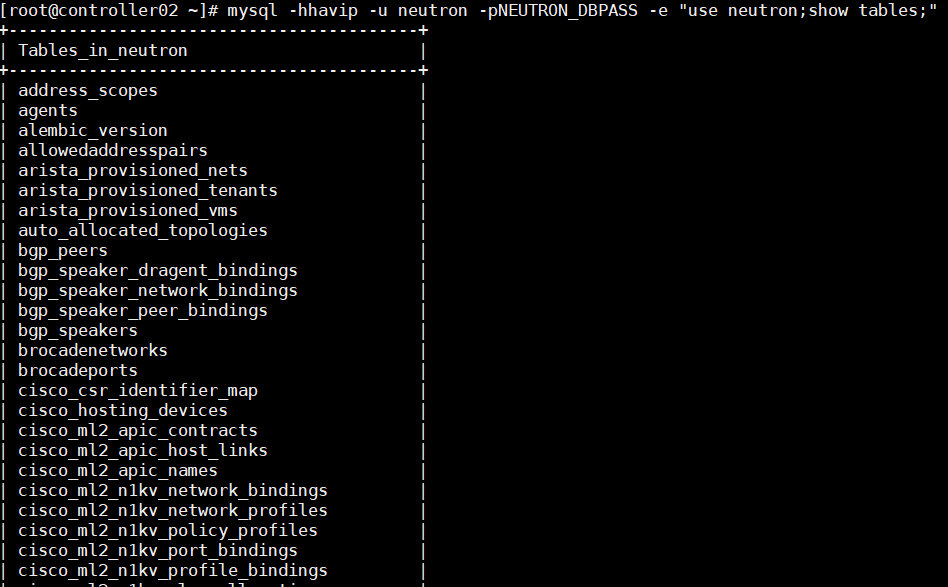

mysql -hhavip -u neutron -pNEUTRON_DBPASS -e "use neutron;show tables;"

#####All controller nodes#####

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

systemctl restart openstack-nova-api.service

systemctl status openstack-nova-api.service

systemctl enable neutron-server.service

systemctl restart neutron-server.service

systemctl status neutron-server.service

7. Neutron compute-node cluster deployment

7.1. Install and configure the Neutron agents (the compute nodes also act as network nodes)

#####All compute nodes#####

yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables -y

Back up the configuration file /etc/neutron/neutron.conf:

cp -a /etc/neutron/neutron.conf{,.bak}

grep -Ev '^$|#' /etc/neutron/neutron.conf.bak > /etc/neutron/neutron.conf

#####compute01#####

openstack-config --set /etc/neutron/neutron.conf DEFAULT bind_host 192.168.100.20

openstack-config --set /etc/neutron/neutron.conf DEFAULT transport_url rabbit://openstack:000000@controller01:5672,openstack:000000@controller02:5672

openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone

Set the RPC response timeout. The default of 60 s can cause timeout errors, so it is raised to 180 s:

openstack-config --set /etc/neutron/neutron.conf DEFAULT rpc_response_timeout 180

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken www_authenticate_uri http://havip:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://havip:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers controller01:11211,controller02:11211

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password neutron

openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp

scp -rp /etc/neutron/neutron.conf compute02:/etc/neutron/neutron.conf

#####compute02#####

sed -i "s#192.168.100.20#192.168.100.21#g" /etc/neutron/neutron.conf

7.2. Deployment and configuration (compute nodes)

7.2.1. Configure nova.conf

#####All compute nodes#####

openstack-config --set /etc/nova/nova.conf neutron url http://havip:9696

openstack-config --set /etc/nova/nova.conf neutron auth_url http://havip:5000

openstack-config --set /etc/nova/nova.conf neutron auth_type password

openstack-config --set /etc/nova/nova.conf neutron project_domain_name default

openstack-config --set /etc/nova/nova.conf neutron user_domain_name default

openstack-config --set /etc/nova/nova.conf neutron region_name RegionOne

openstack-config --set /etc/nova/nova.conf neutron project_name service

openstack-config --set /etc/nova/nova.conf neutron username neutron

openstack-config --set /etc/nova/nova.conf neutron password neutron

7.2.2. Configure ml2_conf.ini

#####compute01#####

# Back up the configuration file

cp -a /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/plugins/ml2/ml2_conf.ini.bak > /etc/neutron/plugins/ml2/ml2_conf.ini

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 type_drivers flat,vlan,vxlan

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 tenant_network_types vxlan

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 mechanism_drivers linuxbridge,l2population

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 extension_drivers port_security

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_flat flat_networks provider

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_vxlan vni_ranges 1:1000

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup enable_ipset true

scp -rp /etc/neutron/plugins/ml2/ml2_conf.ini compute02:/etc/neutron/plugins/ml2/ml2_conf.ini

7.2.3. Configure linuxbridge_agent.ini

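#####compute01#####

# Back up the configuration file

cp -a /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak > /etc/neutron/plugins/ml2/linuxbridge_agent.ini

This environment cannot provide four NICs; in production, it is advisable to put each type of network on its own interface.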

The provider network maps to the planned interface ens33:

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini linux_bridge physical_interface_mappings provider:ens33

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan true

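VTEP endpoint for the tunnel (VXLAN) tenant network. Use the node's planned 192.168.100.x address here (192.168.100.20 on compute01) and adjust it per node: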

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan local_ip 192.168.100.20

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan l2_population true

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group true

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

scp -rp /etc/neutron/plugins/ml2/linuxbridge_agent.ini compute02:/etc/neutron/plugins/ml2/

#####On compute02#####

sed -i "s#192.168.100.20#192.168.100.21#g" /etc/neutron/plugins/ml2/linuxbridge_agent.ini
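Before restarting the agent, it can help to confirm that local_ip is really assigned on the node and to see which interface carries it; with the ZeroTier setup described at the beginning, that is presumably a zt* interface rather than ens33. A sketch with a hypothetical `iface_for_ip` function that parses `ip -o -4 addr show` output from stdin.

```shell
#!/usr/bin/env bash
# Hypothetical check: read "ip -o -4 addr show" output on stdin and print the
# interface that carries IP. Returns 1 if no interface has that address.
iface_for_ip() {
  awk -v ip="$1" '
    # $2 is the interface name, $4 the address with its prefix length (x.x.x.x/24).
    { split($4, a, "/"); if (a[1] == ip) { print $2; found = 1; exit } }
    END { exit !found }
  '
}
```

On compute01, `ip -o -4 addr show | iface_for_ip 192.168.100.20` prints the interface name, or exits with status 1 if the address is not configured on the node.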

7.2.4. Configure l3_agent.ini

- The L3 agent provides routing and NAT services for tenant virtual networks

#####All compute nodes#####

# Back up the configuration file

cp -a /etc/neutron/l3_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/l3_agent.ini.bak > /etc/neutron/l3_agent.ini

openstack-config --set /etc/neutron/l3_agent.ini DEFAULT interface_driver linuxbridge

7.2.5. Configure dhcp_agent.ini

#####All compute nodes#####

# Back up the configuration file

cp -a /etc/neutron/dhcp_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/dhcp_agent.ini.bak > /etc/neutron/dhcp_agent.ini

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT interface_driver linuxbridge

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT dhcp_driver neutron.agent.linux.dhcp.Dnsmasq

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT enable_isolated_metadata true

7.2.6. Configure metadata_agent.ini

- The metadata agent provides configuration information, such as credentials, to instances

The metadata_proxy_shared_secret value must match the one in /etc/nova/nova.conf on the controller nodes.

#####All compute nodes#####

# Back up the configuration file

cp -a /etc/neutron/metadata_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/metadata_agent.ini.bak > /etc/neutron/metadata_agent.ini

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT nova_metadata_host havip

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret neutron

openstack-config --set /etc/neutron/metadata_agent.ini cache memcache_servers controller01:11211,controller02:11211
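A mismatched secret typically makes the Nova metadata service reject instance requests, so it can be worth comparing the two values directly. A sketch with hypothetical helpers `ini_value` and `secrets_match`; it assumes copies of the controller's nova.conf and the compute node's metadata_agent.ini are on the same host, and it only handles plain `key = value` lines like those written by openstack-config.

```shell
#!/usr/bin/env bash
# Hypothetical check (sketch): compare metadata_proxy_shared_secret in nova.conf
# ([neutron] section) with the one in metadata_agent.ini ([DEFAULT] section).

# ini_value FILE SECTION KEY: print the trimmed value of KEY in [SECTION].
ini_value() {
  awk -v sec="$2" -v key="$3" '
    /^[ \t]*\[/ {
      cur = $0
      sub(/^[ \t]*\[/, "", cur)
      sub(/\].*$/, "", cur)
      next
    }
    cur == sec && index($0, "=") > 0 {
      eq = index($0, "=")          # split on the first "=" only
      k = substr($0, 1, eq - 1)
      v = substr($0, eq + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", k)
      gsub(/^[ \t]+|[ \t]+$/, "", v)
      if (k == key) { print v; exit }
    }
  ' "$1"
}

# secrets_match NOVA_CONF METADATA_INI: succeed only if both values exist and match.
secrets_match() {
  local a b
  a="$(ini_value "$1" neutron metadata_proxy_shared_secret)"
  b="$(ini_value "$2" DEFAULT metadata_proxy_shared_secret)"
  [ -n "$a" ] && [ "$a" = "$b" ]
}
```

For example, on compute01: `scp controller01:/etc/nova/nova.conf /tmp/nova.conf && secrets_match /tmp/nova.conf /etc/neutron/metadata_agent.ini && echo match`.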

7.3. Add Linux kernel parameters

- Make sure the Linux kernel supports bridge filtering by verifying that all of the following sysctl values are set to 1

#####All controller and compute nodes#####

# Bridge filtering support requires the br_netfilter kernel module; without it, sysctl reports that the directory does not exist

modprobe br_netfilter

echo 'net.ipv4.ip_nonlocal_bind = 1' >>/etc/sysctl.conf

echo 'net.bridge.bridge-nf-call-iptables=1' >>/etc/sysctl.conf

echo 'net.bridge.bridge-nf-call-ip6tables=1' >>/etc/sysctl.conf
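Appending with `echo >>` adds duplicate lines every time this step is repeated. An idempotent sketch (the helper name `ensure_sysctl` is hypothetical) that drops any existing entry for a key before appending the new one:

```shell
#!/usr/bin/env bash
# Hypothetical helper (sketch): ensure_sysctl FILE KEY=VALUE...
# Removes any existing line for each KEY (with or without spaces around "=")
# and appends KEY=VALUE once, so re-running this step leaves no duplicates.
ensure_sysctl() {
  local file="$1" kv key
  shift
  touch "$file"
  for kv in "$@"; do
    key="${kv%%=*}"
    awk -v key="$key" '
      { k = $0; sub(/[ \t]*=.*/, "", k); sub(/^[ \t]+/, "", k) }
      k != key
    ' "$file" > "$file.tmp"
    printf '%s\n' "$kv" >> "$file.tmp"
    # Copy back instead of mv so the original file keeps its owner and mode.
    cat "$file.tmp" > "$file"
    rm -f "$file.tmp"
  done
}
```

For example: `ensure_sysctl /etc/sysctl.conf net.ipv4.ip_nonlocal_bind=1 net.bridge.bridge-nf-call-iptables=1 net.bridge.bridge-nf-call-ip6tables=1`, followed by `sysctl -p` as below. Note that modprobe does not persist across reboots; on systemd hosts, a file such as /etc/modules-load.d/br_netfilter.conf containing the line `br_netfilter` loads the module at boot.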

sysctl -p

7.4. Restart the nova-compute and neutron agent services

#####All compute nodes#####

systemctl restart openstack-nova-compute.service

systemctl enable neutron-linuxbridge-agent neutron-dhcp-agent neutron-metadata-agent neutron-l3-agent

systemctl restart neutron-linuxbridge-agent neutron-dhcp-agent neutron-metadata-agent neutron-l3-agent

systemctl status neutron-linuxbridge-agent neutron-dhcp-agent neutron-metadata-agent neutron-l3-agent

#####controller01#####

Verify the Neutron services (controller node)

List the loaded extensions to verify that the neutron-server process started successfully:

openstack extension list --network

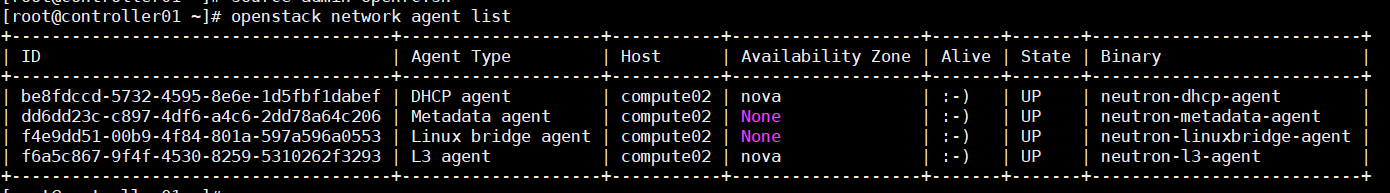

List the agents to verify that they started successfully:

openstack network agent list

7.5. Add the pcs resource

#####controller01#####

pcs resource create neutron-server systemd:neutron-server clone interleave=true

8. Horizon dashboard cluster deployment

8.1. Install and configure the dashboard

#####All controllers#####

yum install openstack-dashboard memcached python3-memcached -y

Back up the configuration file /etc/openstack-dashboard/local_settings:

cp -a /etc/openstack-dashboard/local_settings{,.bak}

grep -Ev '^$|#' /etc/openstack-dashboard/local_settings.bak > /etc/openstack-dashboard/local_settings

#####controller01#####

Configure the file on controller01, then copy it to controller02 with scp.

All of the settings below must be uncommented in the configuration file.

vim /etc/openstack-dashboard/local_settings

Specify the path under which the web server serves the dashboard; add:

WEBROOT = '/dashboard/'

Configure the dashboard to use the OpenStack services on the controller nodes (through the VIP 192.168.100.100):

sed -i 's#OPENSTACK_HOST = "127.0.0.1"#OPENSTACK_HOST = "192.168.100.100"#' /etc/openstack-dashboard/local_settings

Allow hosts to access the dashboard. Accepting all hosts is insecure and should not be used in production:

ALLOWED_HOSTS = ['*']

Configure the memcached session storage service; add:

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': 'controller01:11211,controller02:11211',

}

}

Enable Identity API version 3:

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

Enable support for domains:

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

Configure the API versions:

OPENSTACK_API_VERSIONS = {

"identity": 3,

"image": 2,

"volume": 3,

}

Configure Default as the default domain for users created through the dashboard:

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

Configure user as the default role for users created through the dashboard:

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

If you chose networking option 1, disable support for layer-3 networking services; if you chose networking option 2, they can be enabled:

OPENSTACK_NEUTRON_NETWORK = {

# Automatically allocated network

'enable_auto_allocated_network': False,

# Neutron distributed virtual router (DVR)

'enable_distributed_router': False,

# FIP topology check

'enable_fip_topology_check': False,

# HA router mode

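'enable_ha_router': True,

# IPv6 networks

'enable_ipv6': True,

# Neutron quota feature

'enable_quotas': True,

# RBAC policies

'enable_rbac_policy': True,

# Router menu and floating IP features; can be enabled because this Neutron deployment supports layer 3

'enable_router': True,

# Default DNS name servers

'default_dns_nameservers': [],

# Provider network types offered when creating a network

'supported_provider_types': ['*'],

# Segmentation ID ranges for provider networks; only relevant for VLAN, GRE and VXLAN network types

'segmentation_id_range': {},

# Extra provider network types

'extra_provider_types': {},

# Supported vNIC types, used with the port binding extension

'supported_vnic_types': ['*'],

# Physical networks

'physical_networks': [],

}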

Set the time zone to Asia/Shanghai:

TIME_ZONE = "Asia/Shanghai"

Copy the file to controller02:

scp -rp /etc/openstack-dashboard/local_settings controller02:/etc/openstack-dashboard/

8.2. Configure openstack-dashboard.conf

#####All controllers#####

cp /etc/httpd/conf.d/openstack-dashboard.conf{,.bak}

Create a symbolic link to the policy files (policy.json); otherwise logging in to the dashboard produces permission errors and a garbled display:

ln -s /etc/openstack-dashboard /usr/share/openstack-dashboard/openstack_dashboard/conf

Grant access: insert WSGIApplicationGroup %{GLOBAL} after line 3:

sed -i '3a WSGIApplicationGroup\ %{GLOBAL}' /etc/httpd/conf.d/openstack-dashboard.conf

#####All controller nodes#####

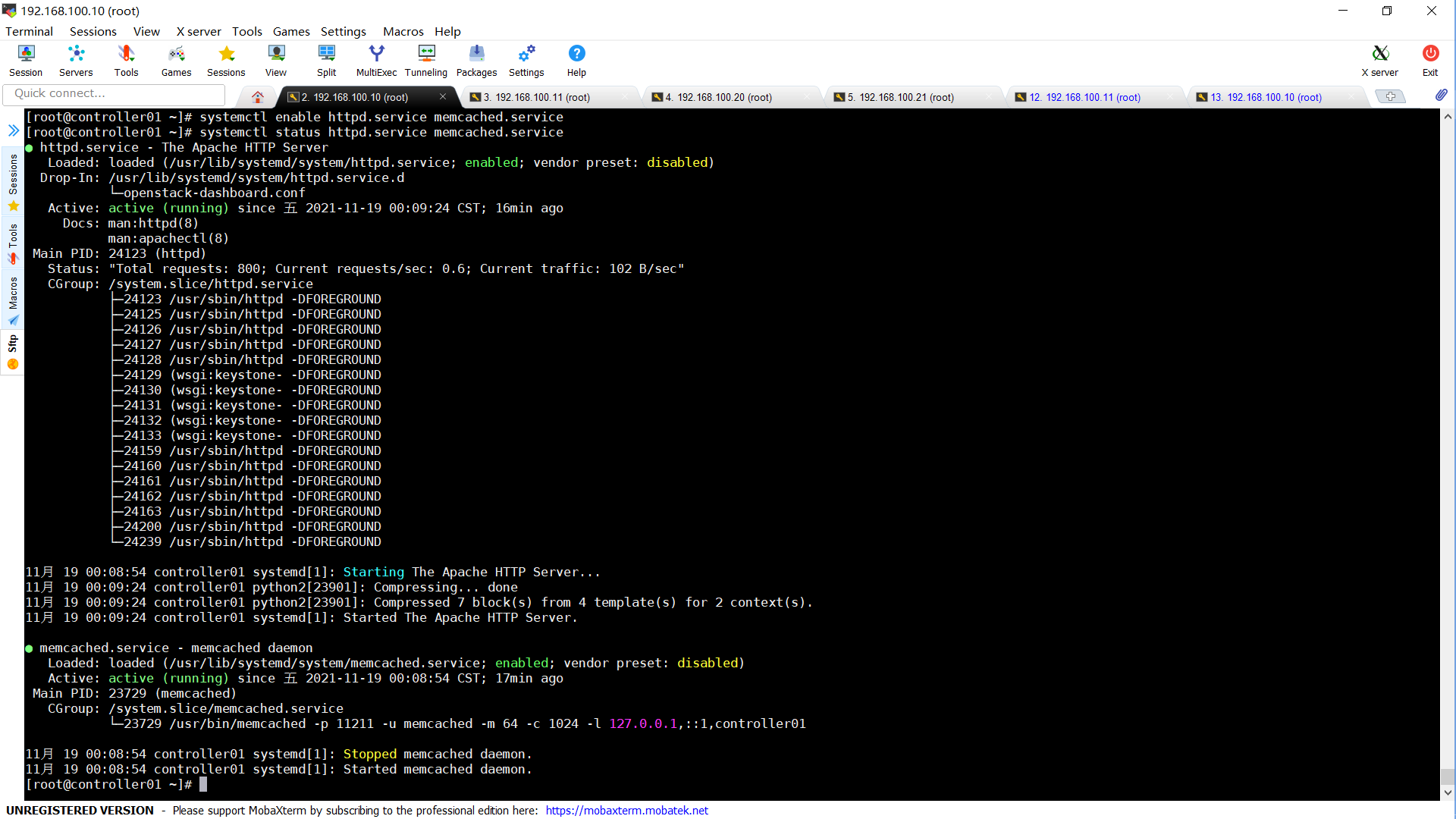

systemctl restart httpd.service memcached.service

systemctl enable httpd.service memcached.service

systemctl status httpd.service memcached.service

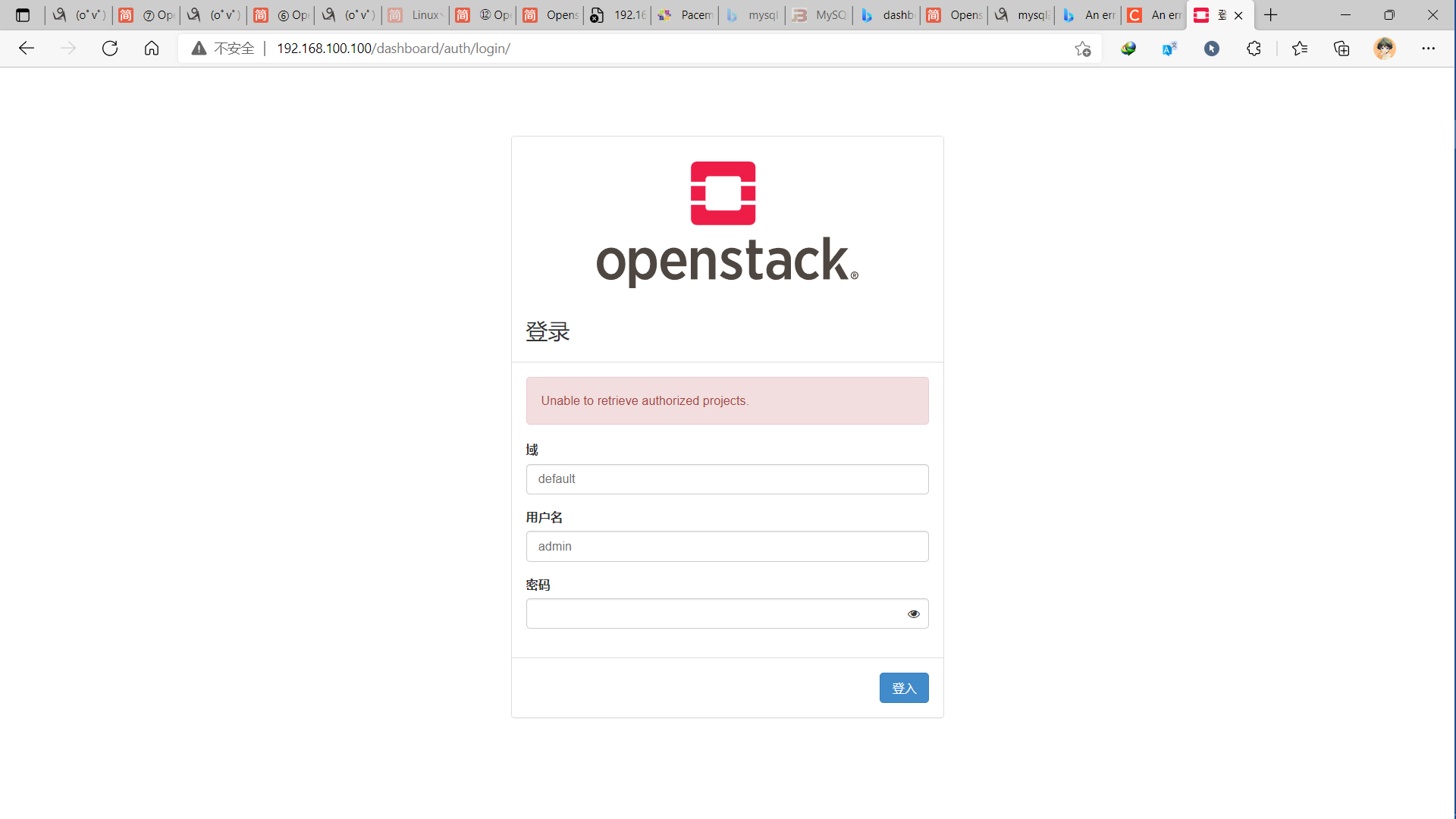

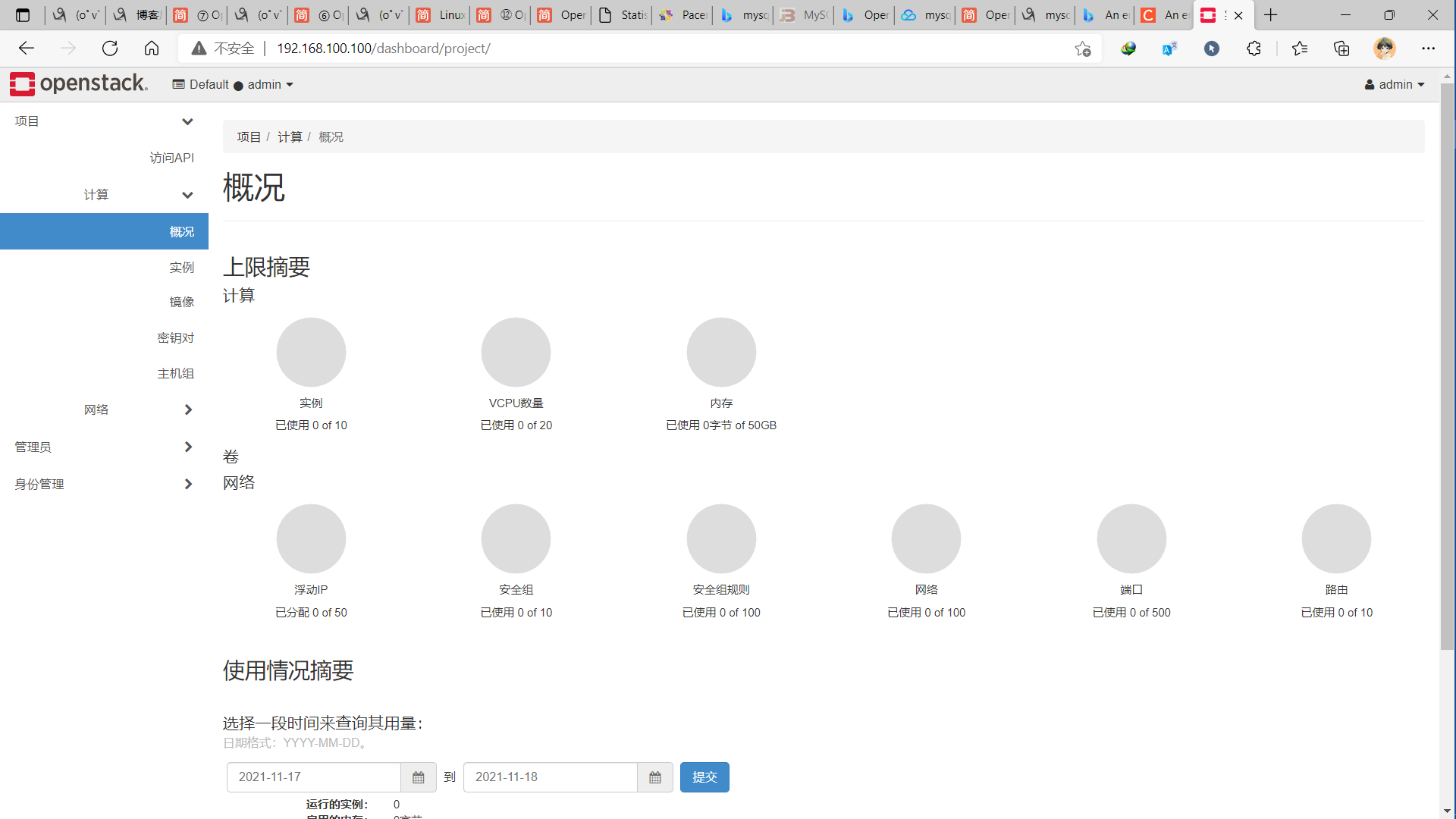

8.3. Test logging in

http://192.168.100.100/dashboard

Zhejiang Public Security Network Registration No. 33010602011771
