数据采集第六次作业

作业①

(1)、要求:

用requests和BeautifulSoup库方法爬取豆瓣电影Top250数据。

每部电影的图片,采用多线程的方法爬取,图片名字为电影名

了解正则的使用方法

编写爬虫程序

import re

from bs4 import BeautifulSoup

from bs4 import UnicodeDammit

import urllib.request

import threading

def spider(start_url):

global threads

global urls

global count

try:

req = urllib.request.Request(start_url, headers=headers)

data = urllib.request.urlopen(req)

data = data.read()

dammit = UnicodeDammit(data, ["utf-8", "gbk"])

data = dammit.unicode_markup

soup = BeautifulSoup(data, 'html.parser')

olTag = soup.find('ol', class_='grid_view')

details = olTag.find_all('li')

for detail in details:

movieRank = detail.find('em').text # 电影排名

movieName = '《' + detail.find('span', class_='title').text + '》' # 电影名称

movieScore = detail.find('span', class_='rating_num').text + '分' # 电影评分

movieCommentNum = detail.find(text=re.compile('\d+人评价')).string # 评价人数

movieReview = '"' + detail.find('span', class_='inq').text + '"' # 电影短评

movieP = detail.find('p').text

movieP1 = movieP.split('\n')[1]

movieP2 = movieP.split('\n')[2]

movieDirector = movieP1.split('\xa0')[0].strip()[4:] # 导演

movieYear = re.findall(r'\d{4}', movieP2)[0] # 上映年份

movieCountry = movieP2.split('\xa0/\xa0')[-2] # 制片国家

movieType = movieP2.split('\xa0/\xa0')[-1].strip() # 电影类型

print(movieRank, movieName, movieDirector, movieYear, movieCountry, movieType, movieScore,

movieCommentNum, movieReview)

# 爬取图片

images = soup.select("img")

for image in images:

try:

url = image["src"] # 图片下载链接

name = image["alt"] # 图片名称

print(url)

if url not in urls:

urls.append(url)

print(url)

T = threading.Thread(target=download, args=(url, name))

T.setDaemon(False)

T.start()

threads.append(T)

except Exception as err:

print(err)

except Exception as err:

print(err)

def download(url, name):

try:

req = urllib.request.Request(url, headers=headers)

data = urllib.request.urlopen(req, timeout=100)

data = data.read()

path = "images_\\" + name + ".png" # 保存为png格式

fobj = open(path, "wb")

fobj.write(data)

fobj.close()

print("download" + name + ".png")

except Exception as err:

print(err)

headers = {

"User-Agent": "Mozilla/5.0(Windows;U;Windows NT 6.0 x64;en-US;rv:1.9pre)Gecko/2008072421 Minefield/3.0.2pre"

}

urls = []

threads = []

# 共10页

for i in range(0, 10):

start_url = "https://movie.douban.com/top250?start=" + str(i * 25) + "&filter="

print("start Spider")

spider(start_url)

for t in threads:

t.join()

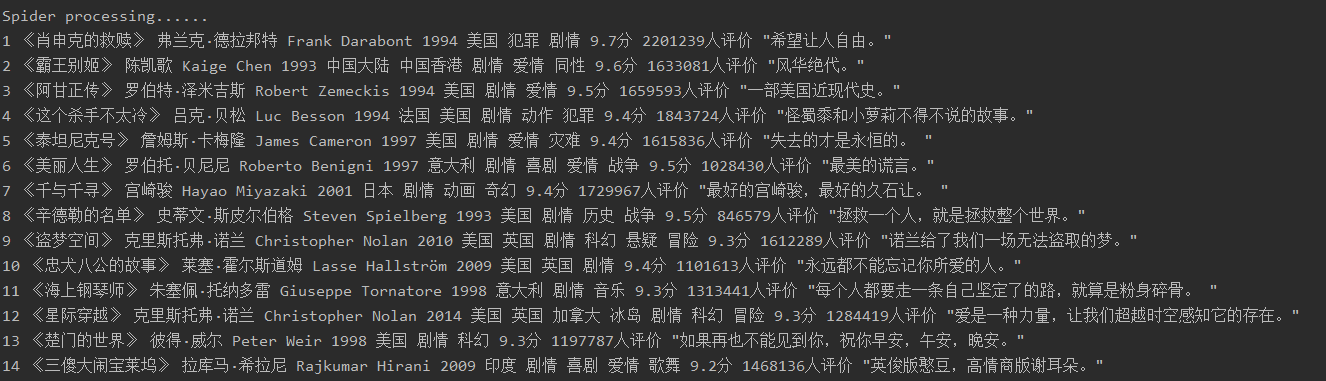

输出信息

(2)、心得体会

复习了多线程和BeautifulSoup的知识,刚开始用了非常复杂的方法但是一直报错,在参考了书上的内容之后终于爬了出来,还有就是定位有的时候会错,要多加注意

作业②

(1)、要求:

熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;Scrapy+Xpath+MySQL数据库存储技术路线爬取科软排名信息

爬取科软学校排名,并获取学校的详细链接,进入下载学校Logo存储、获取官网Url、院校信息等内容。

编写爬虫程序

import urllib

import scrapy

from ..items import RankItem

from bs4 import UnicodeDammit

class MySpider(scrapy.Spider):

name = "mySpider"

start_urls=['https://www.shanghairanking.cn/rankings/bcur/2020']

def parse(self, response):

try:

dammit = UnicodeDammit(response.body, ["utf-8", "gbk"])

data = dammit.unicode_markup

selector = scrapy.Selector(text=data)

tds = selector.xpath("//div[@class='rk-table-box']//tr")

for td in tds[1:]:

sNo = td.xpath("./td[position()=1]/text()").extract_first()

schoolName =td.xpath("./td[position()=2]/a/text()").extract_first()

city = td.xpath("./td[position()=3]/text()").extract_first()

Url =td.xpath("./td[position()=2]/a/@href").extract_first()

url=response.urljoin(Url)

# print(url)

req = urllib.request.Request(url)

html = urllib.request.urlopen(req)

html = html.read()

dammit = UnicodeDammit(html, ["utf-8", "gbk"])

html = dammit.unicode_markup

selector1 = scrapy.Selector(text=html)

officialUrl = selector1.xpath("//div[@class='univ-website']/a/text()").extract_first()

mFile = selector1.xpath("//td[@class='univ-logo']/img/@src").extract_first()

try:

info = selector1.xpath("//div[@class='univ-introduce']/p/text()").extract_first()

except:

info = ""

item = RankItem()

item["sNo"] = sNo.strip() if sNo else ""

sNo1=sNo.strip() if sNo else ""

print(sNo1)

item["schoolName"] =schoolName.strip() if schoolName else ""

item["city"] = city.strip() if city else ""

item["officialUrl"] = officialUrl.strip() if officialUrl else ""

item["info"] = info.strip() if info else ""

item["mFile"] = str(sNo1)+".jpg"

yield item

except Exception as err:

print(err)

pipelines.py

import urllib

import pymysql

class RankPipeline:

def open_spider(self, spider):

print("opened")

try:

self.con = pymysql.connect(host="127.0.0.1", port=3306, user="root",

passwd="root", db="mydb", charset="gbk")

self.cursor = self.con.cursor(pymysql.cursors.DictCursor)

self.cursor.execute("delete from ranks")

self.opened = True

self.count = 0

except Exception as err:

print(err)

self.opened = False

def close_spider(self, spider):

if self.opened:

self.con.commit()

self.con.close()

self.opened = False

print("closed")

def download(self,url, count):

UserAgent = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari" \

"/537.36 Edge/18.18363"

headers = {'User-Agent': UserAgent}

try:

if (url[len(url) - 4] == "."):

ext = url[len(url) - 4:]

else:

ext = ""

req = urllib.request.Request(url,headers=headers)

data = urllib.request.urlopen(req, timeout=100)

data = data.read()

fobj = open("E:\\wangluopachong_6\\images_2\\" + str(count) + ext, "wb")

fobj.write(data)

fobj.close()

print("downloaded " + str(count) + ext)

except Exception as err:

print(err)

def process_item(self, item, spider):

try:

# print(item["sNo"])

# print(item["schoolName"])

# print(item["city"])

# print(item["officialUrl"])

# print(item["info"])

# print()

if self.opened:

self.count += 1

self.cursor.execute(

"insert into ranks(sNo,schoolName,city,officialUrl,info,mFile)values(%s,%s,%s,%s,%s,%s)",

(item["sNo"], item["schoolName"], item["city"],

item["officialUrl"], item["info"], item["mFile"]))

self.download(item["url"], item["sNo"])

except Exception as err:

print(err)

return item

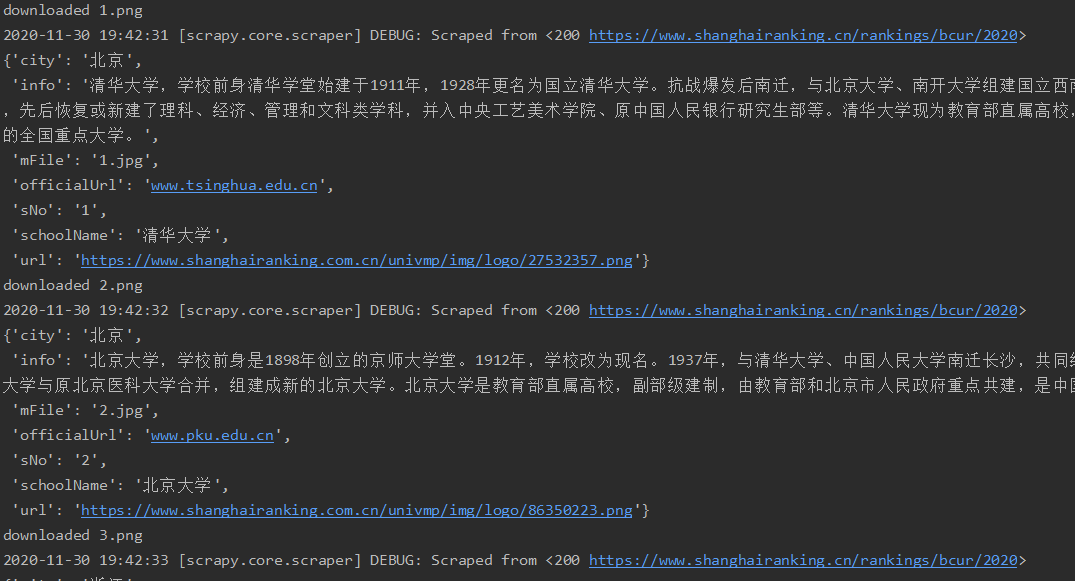

输出信息

(2)、心得体会:

在编写代码的过程中,对scrapy的知识有些许遗忘,也犯了许多幼稚的错误,今后还是要多加练习

作业③

(1)、要求:

熟练掌握 Selenium 查找HTML元素、实现用户模拟登录、爬取Ajax网页数据、等待HTML元素等内容。

使用Selenium框架+MySQL爬取中国mooc网课程资源信息(课程号、课程名称、学校名称、主讲教师、团队成员、参加人数、课程进度、课程简介)

编写爬虫程序

import pymysql

from selenium import webdriver

import datetime

import time

from selenium.webdriver.support import ui

class MySpider:

headers = {

"User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"}

def startUp(self, url):

# Initializing Chrome browser

self.driver = webdriver.Chrome(r'C:\Program Files (x86)\Google\Chrome\Application\chromedriver.exe')

# Initializing variables

self.threads = []

self.count = 0

self.page = 1

# Initializing database

try:

self.con = pymysql.connect(host="127.0.0.1", port=3306, user="root", passwd="root", db="mydb",charset="utf8")

self.cursor = self.con.cursor(pymysql.cursors.DictCursor)

try:

# 如果有表就删除

self.cursor.execute("drop table courses2")

except Exception as err:

print(err)

try:

# 建立新的表

sql = "create table courses2(Id int,cCourse VARCHAR (32),cCollege VARCHAR(32),cTeacher VARCHAR(32),cTeam VARCHAR(32),cCount VARCHAR(32),cProcess VARCHAR(32),cBrief VARCHAR(512))"

self.cursor.execute(sql)

except Exception as err:

print(err)

except Exception as err:

print(err)

# Initializing images folder

# 请求登录页面

try:

wait = ui.WebDriverWait(self.driver, 10)

self.driver.get(url)

time.sleep(2) # 睡眠2秒

self.driver.find_element_by_xpath("//div[@class='_1Y4Ni']//div[@role='button']").click()

time.sleep(0.5) # 休眠0.5秒钟后执行填写用户名和密码操作

self.driver.find_element_by_xpath("//span[@class='ux-login-set-scan-code_ft_back']").click()

time.sleep(0.5)

self.driver.find_elements_by_xpath("//ul[@class='ux-tabs-underline_hd']//li")[1].click()

time.sleep(2) # 找到用户名输入用户名

frame_ = self.driver.find_element_by_xpath("//div[@class='ux-login-set-container']//iframe")

self.driver.switch_to.frame(frame_)

wait.until(lambda driver: driver.find_element_by_xpath("//div[@class='u-input box']//input"))

self.driver.find_element_by_xpath("//div[@class='u-input box']//input").send_keys('15259181235')

wait.until(lambda driver: driver.find_element_by_xpath("//input[@placeholder='请输入密码']"))

self.driver.find_element_by_xpath("//input[@placeholder='请输入密码']").send_keys("123456789blh") # 点击登录按钮实现登录

self.driver.find_element_by_id('submitBtn').click() # 登录成功后跳转首页,进行加载,休眠10秒加载页面

time.sleep(10)

self.driver.find_element_by_xpath("//div[@class='u-navLogin-myCourse-t']//span[@class='nav']").click()

time.sleep(10)

except Exception as err:

print(err)

def closeUp(self):

try:

self.con.commit()

self.con.close()

self.driver.close()

except Exception as err:

print(err);

def insertDB(self, id, ccourse, ccollege, cteacher, cTeam, ccount, cprocess, cbrief):

try:

sql = "insert into courses2(id,ccourse,ccollege,cteacher,cTeam,ccount,cprocess,cbrief)values(%s,%s,%s,%s,%s,%s,%s,%s)"

self.cursor.execute(sql,(id,ccourse,ccollege,cteacher,cTeam,ccount,cprocess,cbrief))

except Exception as err:

print(err)

def processSpider(self):

try:

time.sleep(1)

print(self.driver.current_url)

divs = self.driver.find_elements_by_xpath("//div[@class='course-card-wrapper']")

for div in divs:

try:

ccourse = div.find_element_by_xpath(".//span[@class='text']").text

ccollege = div.find_element_by_xpath(".//div[@class='school']/a").text

except Exception as err:

print(err)

print("爬取失败1")

try:

self.driver.execute_script("arguments[0].click();",div.find_element_by_xpath(".//div[@class='menu']//a[@class='ga-click']"))

# 跳转到新打开的页面

self.driver.switch_to.window(self.driver.window_handles[-1])

time.sleep(2)

ccount=self.driver.find_element_by_xpath("//span[@class='course-enroll-info_course-enroll_price-enroll_enroll-count']").text

cprocess = self.driver.find_element_by_xpath("//div[@class='course-enroll-info_course-info_term-info_term-time']/span[2]").text

cbrief = self.driver.find_element_by_xpath("//div[@class='course-heading-intro_intro']").text

cteacher = self.driver.find_element_by_xpath("//h3[@class='f-fc3']").text

cteam = self.driver.find_elements_by_xpath("//h3[@class='f-fc3']")

cTeam = ""

for t in cteam:

cTeam += " " + t.text

# 关闭新页面,返回原来的页面

self.driver.close()

self.driver.switch_to.window(self.driver.window_handles[0])

self.count += 1

id = self.count

print(ccourse, ccollege,ccount,cprocess,cbrief,cTeam,cteacher)

try:

self.insertDB(id, ccourse, ccollege, cteacher, cTeam, ccount, cprocess, cbrief)

except Exception as err:

print(err)

print("插入失败")

except Exception as err:

print(err)

print("爬取失败2")

except Exception as err:

print(err)

def executeSpider(self, url):

starttime = datetime.datetime.now()

print("Spider starting......")

self.startUp(url)

print("Spider processing......")

self.processSpider()

print("Spider closing......")

self.closeUp()

for t in self.threads:

t.join()

print("Spider completed......")

endtime = datetime.datetime.now()

elapsed = (endtime - starttime).seconds

print("Total ", elapsed, " seconds elapsed")

url = "https://www.icourse163.org/"

spider = MySpider()

while True:

print("1.爬取")

print("2.退出")

s = input("请选择(1,2):")

if s == "1":

spider.executeSpider(url)

elif s == "2":

break

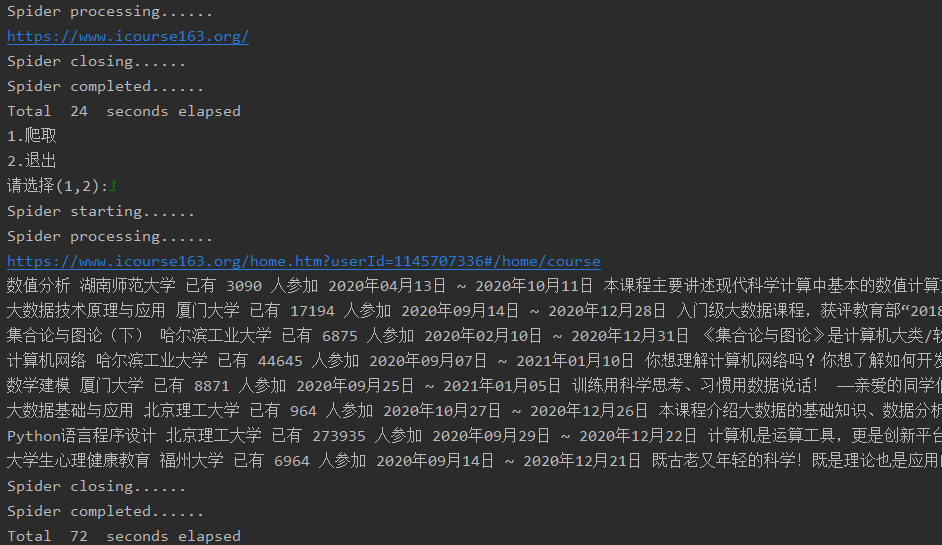

输出信息

浙公网安备 33010602011771号

浙公网安备 33010602011771号