基于Linux-2.6.23的进程调度分析

1.前言

本文基于Linux Kernel 2.6.23的源码,深入分析其进程模型及调度机制

2.进程是什么

在对进程模型分析前让我们先来看看什么是进程,进程(process)是具有一定独立功能的程序关于某个数据集合上的一次运行活动,是系统进行资源(内存、CPU)分配和调度的一个独立单位。而Linux是多任务抢占操作系统,多任务就是指多个进程通过分时切换来并发执行,所以对于这些进程间的切换就要有一套完善的调度机制。

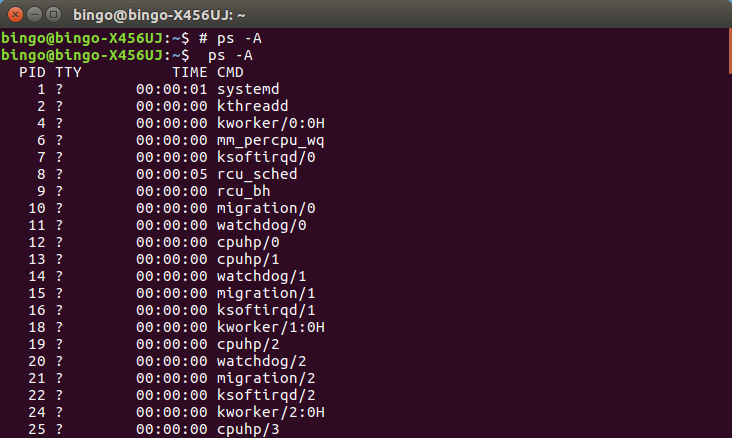

在Linux中,我们可以用 ps 的命令来查询系统当前正在运行的进程(PID:进程号,TTY:控制终端,TIME:进程使用cpu时间,CMD:命令名)

3.进程的组织过程

本节主要深入源码对进程的组织过程进行解析

3.1进程描述符

要组织进程第一步当然就是对进程的定义了,在源码中进程描述符定义在 sched.h 文件下的 task_struct 结构体中,每个进程有且只有一个进程描述符,它里面包含了这个进程相关的所有信息。下面是对 task_struct 结构体的部分截取。

1 struct task_struct { 2 3 ...... 4 5 volatile long state; /* 进程状态 */ 6 void *stack; /* 指向内核栈 */ 7 struct list_head tasks; /* 用于加入进程链表 */ 8 ...... 9 10 struct mm_struct *mm, *active_mm;/* 指向该进程的内存区描述符 */ 11 12 ........ 13 14 pid_t pid;/* 进程ID,每个进程(线程)的PID都不同 */ 15 pid_t tgid; /* 线程组ID,同一个线程组拥有相同的pid,与领头线程(该组中第一个轻量级进程)pid一致,保存在tgid中,线程组领头线程的pid和tgid相同 */ 16 struct pid_link pids[PIDTYPE_MAX]; /* 用于连接到PID、TGID、PGRP、SESSION哈希表 */ 17 18 ........ 19 }

如果你想看整个结构体的代码可以点击这里

1 struct task_struct { 2 volatile long state; /* -1 unrunnable, 0 runnable, >0 stopped */ 3 void *stack; 4 atomic_t usage; 5 unsigned int flags; /* per process flags, defined below */ 6 unsigned int ptrace; 7 8 int lock_depth; /* BKL lock depth */ 9 10 #ifdef CONFIG_SMP 11 #ifdef __ARCH_WANT_UNLOCKED_CTXSW 12 int oncpu; 13 #endif 14 #endif 15 16 int prio, static_prio, normal_prio; 17 struct list_head run_list; 18 struct sched_class *sched_class; 19 struct sched_entity se; 20 21 #ifdef CONFIG_PREEMPT_NOTIFIERS 22 /* list of struct preempt_notifier: */ 23 struct hlist_head preempt_notifiers; 24 #endif 25 26 unsigned short ioprio; 27 #ifdef CONFIG_BLK_DEV_IO_TRACE 28 unsigned int btrace_seq; 29 #endif 30 31 unsigned int policy; 32 cpumask_t cpus_allowed; 33 unsigned int time_slice; 34 35 #if defined(CONFIG_SCHEDSTATS) || defined(CONFIG_TASK_DELAY_ACCT) 36 struct sched_info sched_info; 37 #endif 38 39 struct list_head tasks; 40 /* 41 * ptrace_list/ptrace_children forms the list of my children 42 * that were stolen by a ptracer. 43 */ 44 struct list_head ptrace_children; 45 struct list_head ptrace_list; 46 47 struct mm_struct *mm, *active_mm; 48 49 /* task state */ 50 struct linux_binfmt *binfmt; 51 int exit_state; 52 int exit_code, exit_signal; 53 int pdeath_signal; /* The signal sent when the parent dies */ 54 /* ??? */ 55 unsigned int personality; 56 unsigned did_exec:1; 57 pid_t pid; 58 pid_t tgid; 59 60 #ifdef CONFIG_CC_STACKPROTECTOR 61 /* Canary value for the -fstack-protector gcc feature */ 62 unsigned long stack_canary; 63 #endif 64 /* 65 * pointers to (original) parent process, youngest child, younger sibling, 66 * older sibling, respectively. (p->father can be replaced with 67 * p->parent->pid) 68 */ 69 struct task_struct *real_parent; /* real parent process (when being debugged) */ 70 struct task_struct *parent; /* parent process */ 71 /* 72 * children/sibling forms the list of my children plus the 73 * tasks I'm ptracing. 74 */ 75 struct list_head children; /* list of my children */ 76 struct list_head sibling; /* linkage in my parent's children list */ 77 struct task_struct *group_leader; /* threadgroup leader */ 78 79 /* PID/PID hash table linkage. */ 80 struct pid_link pids[PIDTYPE_MAX]; 81 struct list_head thread_group; 82 83 struct completion *vfork_done; /* for vfork() */ 84 int __user *set_child_tid; /* CLONE_CHILD_SETTID */ 85 int __user *clear_child_tid; /* CLONE_CHILD_CLEARTID */ 86 87 unsigned int rt_priority; 88 cputime_t utime, stime; 89 unsigned long nvcsw, nivcsw; /* context switch counts */ 90 struct timespec start_time; /* monotonic time */ 91 struct timespec real_start_time; /* boot based time */ 92 /* mm fault and swap info: this can arguably be seen as either mm-specific or thread-specific */ 93 unsigned long min_flt, maj_flt; 94 95 cputime_t it_prof_expires, it_virt_expires; 96 unsigned long long it_sched_expires; 97 struct list_head cpu_timers[3]; 98 99 /* process credentials */ 100 uid_t uid,euid,suid,fsuid; 101 gid_t gid,egid,sgid,fsgid; 102 struct group_info *group_info; 103 kernel_cap_t cap_effective, cap_inheritable, cap_permitted; 104 unsigned keep_capabilities:1; 105 struct user_struct *user; 106 #ifdef CONFIG_KEYS 107 struct key *request_key_auth; /* assumed request_key authority */ 108 struct key *thread_keyring; /* keyring private to this thread */ 109 unsigned char jit_keyring; /* default keyring to attach requested keys to */ 110 #endif 111 /* 112 * fpu_counter contains the number of consecutive context switches 113 * that the FPU is used. If this is over a threshold, the lazy fpu 114 * saving becomes unlazy to save the trap. This is an unsigned char 115 * so that after 256 times the counter wraps and the behavior turns 116 * lazy again; this to deal with bursty apps that only use FPU for 117 * a short time 118 */ 119 unsigned char fpu_counter; 120 int oomkilladj; /* OOM kill score adjustment (bit shift). */ 121 char comm[TASK_COMM_LEN]; /* executable name excluding path 122 - access with [gs]et_task_comm (which lock 123 it with task_lock()) 124 - initialized normally by flush_old_exec */ 125 /* file system info */ 126 int link_count, total_link_count; 127 #ifdef CONFIG_SYSVIPC 128 /* ipc stuff */ 129 struct sysv_sem sysvsem; 130 #endif 131 /* CPU-specific state of this task */ 132 struct thread_struct thread; 133 /* filesystem information */ 134 struct fs_struct *fs; 135 /* open file information */ 136 struct files_struct *files; 137 /* namespaces */ 138 struct nsproxy *nsproxy; 139 /* signal handlers */ 140 struct signal_struct *signal; 141 struct sighand_struct *sighand; 142 143 sigset_t blocked, real_blocked; 144 sigset_t saved_sigmask; /* To be restored with TIF_RESTORE_SIGMASK */ 145 struct sigpending pending; 146 147 unsigned long sas_ss_sp; 148 size_t sas_ss_size; 149 int (*notifier)(void *priv); 150 void *notifier_data; 151 sigset_t *notifier_mask; 152 153 void *security; 154 struct audit_context *audit_context; 155 seccomp_t seccomp; 156 157 /* Thread group tracking */ 158 u32 parent_exec_id; 159 u32 self_exec_id; 160 /* Protection of (de-)allocation: mm, files, fs, tty, keyrings */ 161 spinlock_t alloc_lock; 162 163 /* Protection of the PI data structures: */ 164 spinlock_t pi_lock; 165 166 #ifdef CONFIG_RT_MUTEXES 167 /* PI waiters blocked on a rt_mutex held by this task */ 168 struct plist_head pi_waiters; 169 /* Deadlock detection and priority inheritance handling */ 170 struct rt_mutex_waiter *pi_blocked_on; 171 #endif 172 173 #ifdef CONFIG_DEBUG_MUTEXES 174 /* mutex deadlock detection */ 175 struct mutex_waiter *blocked_on; 176 #endif 177 #ifdef CONFIG_TRACE_IRQFLAGS 178 unsigned int irq_events; 179 int hardirqs_enabled; 180 unsigned long hardirq_enable_ip; 181 unsigned int hardirq_enable_event; 182 unsigned long hardirq_disable_ip; 183 unsigned int hardirq_disable_event; 184 int softirqs_enabled; 185 unsigned long softirq_disable_ip; 186 unsigned int softirq_disable_event; 187 unsigned long softirq_enable_ip; 188 unsigned int softirq_enable_event; 189 int hardirq_context; 190 int softirq_context; 191 #endif 192 #ifdef CONFIG_LOCKDEP 193 # define MAX_LOCK_DEPTH 30UL 194 u64 curr_chain_key; 195 int lockdep_depth; 196 struct held_lock held_locks[MAX_LOCK_DEPTH]; 197 unsigned int lockdep_recursion; 198 #endif 199 200 /* journalling filesystem info */ 201 void *journal_info; 202 203 /* stacked block device info */ 204 struct bio *bio_list, **bio_tail; 205 206 /* VM state */ 207 struct reclaim_state *reclaim_state; 208 209 struct backing_dev_info *backing_dev_info; 210 211 struct io_context *io_context; 212 213 unsigned long ptrace_message; 214 siginfo_t *last_siginfo; /* For ptrace use. */ 215 /* 216 * current io wait handle: wait queue entry to use for io waits 217 * If this thread is processing aio, this points at the waitqueue 218 * inside the currently handled kiocb. It may be NULL (i.e. default 219 * to a stack based synchronous wait) if its doing sync IO. 220 */ 221 wait_queue_t *io_wait; 222 #ifdef CONFIG_TASK_XACCT 223 /* i/o counters(bytes read/written, #syscalls */ 224 u64 rchar, wchar, syscr, syscw; 225 #endif 226 struct task_io_accounting ioac; 227 #if defined(CONFIG_TASK_XACCT) 228 u64 acct_rss_mem1; /* accumulated rss usage */ 229 u64 acct_vm_mem1; /* accumulated virtual memory usage */ 230 cputime_t acct_stimexpd;/* stime since last update */ 231 #endif 232 #ifdef CONFIG_NUMA 233 struct mempolicy *mempolicy; 234 short il_next; 235 #endif 236 #ifdef CONFIG_CPUSETS 237 struct cpuset *cpuset; 238 nodemask_t mems_allowed; 239 int cpuset_mems_generation; 240 int cpuset_mem_spread_rotor; 241 #endif 242 struct robust_list_head __user *robust_list; 243 #ifdef CONFIG_COMPAT 244 struct compat_robust_list_head __user *compat_robust_list; 245 #endif 246 struct list_head pi_state_list; 247 struct futex_pi_state *pi_state_cache; 248 249 atomic_t fs_excl; /* holding fs exclusive resources */ 250 struct rcu_head rcu; 251 252 /* 253 * cache last used pipe for splice 254 */ 255 struct pipe_inode_info *splice_pipe; 256 #ifdef CONFIG_TASK_DELAY_ACCT 257 struct task_delay_info *delays; 258 #endif 259 #ifdef CONFIG_FAULT_INJECTION 260 int make_it_fail; 261 #endif 262 };

这当中定义了进程的状态,调度信息,通讯状况,插入进程树时联系父子兄弟的指针,时间信息,内存信息,进程上下文,内核上下文以及处理器上下文等相关信息。

3.2进程标识符

pid_t pid;/* 进程ID,每个进程(线程)的PID都不同 */

pid_t tgid; /* 线程组ID,同一个线程组拥有相同的pid,与领头线程(该组中第一个轻量级进程)pid一致,保存在tgid中,线程组领头线程的pid和tgid相同 */

Unix系统通过pid来标识进程,linux把不同的pid与系统中每个进程或轻量级线程关联,而unix程序员希望同一组线程具有共同的pid,遵照这个标准linux引入线程组的概念。在Linux系统中,一个线程组中的所有线程使用和该线程组的领头线程(该组中的第一个轻量级进程)相同的PID,并被存放在tgid成员中。只有线程组的领头线程的pid成员才会被设置为与tgid相同的值。

#define PID_MAX_DEFAULT (CONFIG_BASE_SMALL ? 0x1000 : 0x8000)

在CONFIG_BASE_SMALL配置为0的情况下,PID的取值范围是0到32767,即系统中的进程数最大为32768个。

3.3进程标记

unsigned int flags; /*定义每个进程标记*/

进程标记用于反应进程状态的信息,但不是运行状态,用于内核识别进程当前的状态,以备下一步操作。

flags成员的可能取值如下,这些宏以PF(ProcessFlag)开头

/* * Per process flags */ #define PF_ALIGNWARN 0x00000001 /* Print alignment warning msgs */ /* Not implemented yet, only for 486*/ #define PF_STARTING 0x00000002 /* being created */ #define PF_EXITING 0x00000004 /* getting shut down */ #define PF_EXITPIDONE 0x00000008 /* pi exit done on shut down */ #define PF_FORKNOEXEC 0x00000040 /* forked but didn't exec */ #define PF_SUPERPRIV 0x00000100 /* used super-user privileges */ #define PF_DUMPCORE 0x00000200 /* dumped core */ #define PF_SIGNALED 0x00000400 /* killed by a signal */ #define PF_MEMALLOC 0x00000800 /* Allocating memory */ #define PF_FLUSHER 0x00001000 /* responsible for disk writeback */ #define PF_USED_MATH 0x00002000 /* if unset the fpu must be initialized before use */ #define PF_NOFREEZE 0x00008000 /* this thread should not be frozen */ #define PF_FROZEN 0x00010000 /* frozen for system suspend */ #define PF_FSTRANS 0x00020000 /* inside a filesystem transaction */ #define PF_KSWAPD 0x00040000 /* I am kswapd */ #define PF_SWAPOFF 0x00080000 /* I am in swapoff */ #define PF_LESS_THROTTLE 0x00100000 /* Throttle me less: I clean memory */ #define PF_BORROWED_MM 0x00200000 /* I am a kthread doing use_mm */ #define PF_RANDOMIZE 0x00400000 /* randomize virtual address space */ #define PF_SWAPWRITE 0x00800000 /* Allowed to write to swap */ #define PF_SPREAD_PAGE 0x01000000 /* Spread page cache over cpuset */ #define PF_SPREAD_SLAB 0x02000000 /* Spread some slab caches over cpuset */ #define PF_MEMPOLICY 0x10000000 /* Non-default NUMA mempolicy */ #define PF_MUTEX_TESTER 0x20000000 /* Thread belongs to the rt mutex tester */ #define PF_FREEZER_SKIP 0x40000000 /* Freezer should not count it as freezeable */

3.4进程的状态

进程状态的定义

volatile long state; /* -1 unrunnable, 0 runnable, >0 stopped */

state成员的可能取值

/*

* Task state bitmask. NOTE! These bits are also

* encoded in fs/proc/array.c: get_task_state().

*

* We have two separate sets of flags: task->state

* is about runnability, while task->exit_state are

* about the task exiting. Confusing, but this way

* modifying one set can't modify the other one by

* mistake.

*/

#define TASK_RUNNING 0

#define TASK_INTERRUPTIBLE 1

#define TASK_UNINTERRUPTIBLE 2

#define TASK_STOPPED 4

#define TASK_TRACED 8

/* in tsk->exit_state */

#define EXIT_ZOMBIE 16

#define EXIT_DEAD 32

/* in tsk->state again */

#define TASK_NONINTERACTIVE 64

#define TASK_DEAD 128

#define __set_task_state(tsk, state_value) \

do { (tsk)->state = (state_value); } while (0)

#define set_task_state(tsk, state_value) \

set_mb((tsk)->state, (state_value))

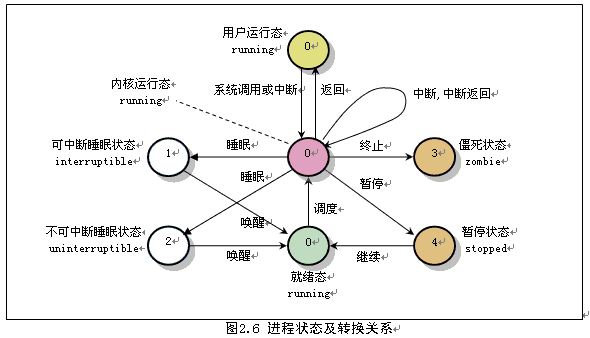

那就让我们来分析一下这些进程状态的具体含义:

1、TASK_RUNNING:(R) 进程当前正在运行,或者正在运行队列中等待调度。只有在该状态的进程才可能在CPU上运行,同一时刻可能有多个进程处于可执行状态。 2、TASK_INTERRUPTIBLE:(S) 进程处于睡眠状态,处于这个状态的进程因为等待某事件的发生(比如等待socket连接、等待信号量),而被挂起。当这些事件发生时,对应的等待队列中的一个或多个进程将被唤醒。一般情况下,

进程列表中的绝大多数进程都处于TASK_INTERRUPTIBLE状态。进程可以被信号中断。接收到信号或被显式的唤醒呼叫唤醒之后,进程将转变为 TASK_RUNNING 状态。 3、TASK_UNINTERRUPTIBLE:(D) 不可中断的睡眠状态,此进程状态类似于 TASK_INTERRUPTIBLE,只是它不会处理信号。不可中断,指的是进程不响应异步信号,无法用kill命令关闭处于TASK_UNINTERRUPTIBLE状态的进程。中

断处于这种状态的进程是不合适的,因为它可能正在完成某些重要的任务。 当它所等待的事件发生时,进程将被显式的唤醒呼叫唤醒。可处理signal, 有延迟 4、TASK_STOPPED: 进程已中止执行,它没有运行,并且不能运行。接收到 SIGSTOP 和 SIGTSTP 等信号时,进程将进入这种状态。接收到 SIGCONT 信号之后,进程将再次变得可运行。 5、TASK_TRACED:(T) 正被调试程序等其他进程监控时,进程将进入这种状态。 6、EXIT_ZOMBIE:(Z) 进程已终止,它正等待其父进程收集关于它的一些统计信息。不可被kill, 即不响应任务信号, 无法用SIGKILL杀死 7、EXIT_DEAD:(X) 最终状态(正如其名)。将进程从系统中删除时,它将进入此状态,因为其父进程已经通过 wait4() 或 waitpid() 调用收集了所有统计信息。EXIT_DEAD状态是非常短暂的,几乎不可能通过ps命

令捕捉到。

在Linux-2.6.25的版本后还引入了 TASK_KILLABLE 这种进程状态,用于将进程置为睡眠状态,它可以替代有效但可能无法终止的 TASK_UNINTERRUPTIBLE 进程状态,以及易于唤醒但更加安全的 TASK_INTERRUPTIBLE 进程状态。

4.进程调度

上文提到了Linux是多任务抢占操作系统,抢占式系统表示即使当前进程没有用完时间片,也没有主动放弃cpu,如果调度系统发现有更高动态优先级的进程,则强制剥夺当前的cpu,选择更高动态优先级的进程执行。

4.1.时间片和优先级进程优先级

时间片是一个数值,它表明进程在被抢占前所能持续运行的时间。I/O消耗型不需要长的时间片,而处理器消耗型的进程则希望越长越好。

#define MAX_USER_RT_PRIO 100 #define MAX_RT_PRIO MAX_USER_RT_PRIO #define MAX_PRIO (MAX_RT_PRIO + 40) #define DEFAULT_PRIO (MAX_RT_PRIO + 20)

Linux进程的静态优先级。静态优先级分为两个范围:0~99是实时进程的静态优先级(值越大优先级越高),100~139是普通进程的静态优先级(通过nice值表示,-20~19,值越大优先级越低)。

4.2.进程调度策略

/* * Scheduling policies */ #define SCHED_NORMAL 0 #define SCHED_FIFO 1 #define SCHED_RR 2 #define SCHED_BATCH 3 /* SCHED_ISO: reserved but not implemented yet */ #define SCHED_IDLE 5

内核2.6的进程调度策略一共有五种: SCHED_NORMAL,SCHED-FIFO,SCHED_RR,SCHED_BATCH,SCHED_IDLE 。其中 SCHED_NORMAL 和 SCHED_BATCH 用于普通进程的调度通过CFS调度器实现,后三种用于实时进程的调度。

4.3.普通进程的调度策略(CFS)

CFS( Completely Fair Scheduler)即完全公平调度器,不再跟踪进程的睡眠时间,也不再企图区分交互式进程,它将所有的进程统一对待,这就是公平的含义。

先简单说一下CFS调度算法的思想:理想状态下每个进程都能获得相同的时间片,并且同时运行在CPU上,但实际上一个CPU同一时刻运行的进程只能有一个。 也就是说,当一个进程占用CPU时,其他进程就必须等待。CFS为了实现公平,必须惩罚当前正在运行的进程,以使那些正在等待的进程下次被调度。

4.3.1.调度实体

/*

* CFS stats for a schedulable entity (task, task-group etc)

*

* Current field usage histogram:

*

* 4 se->block_start

* 4 se->run_node

* 4 se->sleep_start

* 4 se->sleep_start_fair

* 6 se->load.weight

* 7 se->delta_fair

* 15 se->wait_runtime

*/

struct sched_entity {

long wait_runtime;

unsigned long delta_fair_run;

unsigned long delta_fair_sleep;

unsigned long delta_exec;

s64 fair_key;

struct load_weight load; /* for load-balancing */

struct rb_node run_node;

unsigned int on_rq;

u64 exec_start;

u64 sum_exec_runtime;

u64 prev_sum_exec_runtime;

u64 wait_start_fair;

u64 sleep_start_fair;

#ifdef CONFIG_SCHEDSTATS

u64 wait_start;

u64 wait_max;

s64 sum_wait_runtime;

u64 sleep_start;

u64 sleep_max;

s64 sum_sleep_runtime;

u64 block_start;

u64 block_max;

u64 exec_max;

unsigned long wait_runtime_overruns;

unsigned long wait_runtime_underruns;

#endif

运行实体结构为sched_entity,所有的调度器都必须对进程运行时间做记账。CFS不再有时间片的概念,但是它也必须维护每个进程运行的时间记账,因为他需要确保每个进程只在公平分配给他的处理器时间内运行。CFS使用调度器实体结构来最终运行记账。

4.3.2.运行队列

struct cfs_rq {

struct load_weight load;/*运行负载*/

unsigned long nr_running;/*运行进程个数*/

u64 exec_clock;

u64 min_vruntime;/*保存的最小运行时间*/

struct rb_root tasks_timeline;/*运行队列树根*/

struct rb_node *rb_leftmost;/*保存的红黑树最左边的节点,这个为最小运行时间的节点,当进程选择下一个来运行时,直接选择这个*/

struct list_head tasks;

struct list_head *balance_iterator;

/*

* 'curr' points to currently running entity on this cfs_rq.

* It is set to NULL otherwise (i.e when none are currently running).

*/

struct sched_entity *curr, *next, *last;

unsigned int nr_spread_over;

#ifdef CONFIG_FAIR_GROUP_SCHED

struct rq *rq; /* cpu runqueue to which this cfs_rq is attached */

/*

* leaf cfs_rqs are those that hold tasks (lowest schedulable entity in

* a hierarchy). Non-leaf lrqs hold other higher schedulable entities

* (like users, containers etc.)

*

* leaf_cfs_rq_list ties together list of leaf cfs_rq's in a cpu. This

* list is used during load balance.

*/

struct list_head leaf_cfs_rq_list;

struct task_group *tg; /* group that "owns" this runqueue */

#ifdef CONFIG_SMP

/*

* the part of load.weight contributed by tasks

*/

unsigned long task_weight;

/*

* h_load = weight * f(tg)

*

* Where f(tg) is the recursive weight fraction assigned to

* this group.

*/

unsigned long h_load;

/*

* this cpu's part of tg->shares

*/

unsigned long shares;

/*

* load.weight at the time we set shares

*/

unsigned long rq_weight;

#endif

#endif

};

4.3.3.虚拟运行时间(vruntime)

struct sched_entity

{

struct load_weight load; /* for load-balancing负荷权重,这个决定了进程在CPU上的运行时间和被调度次数 */

struct rb_node run_node;

unsigned int on_rq; /* 是否在就绪队列上 */

u64 exec_start; /* 上次启动的时间*/

u64 sum_exec_runtime;

u64 vruntime;

u64 prev_sum_exec_runtime;

/* rq on which this entity is (to be) queued: */

struct cfs_rq *cfs_rq;

...

};

实现CFS算法时,CFS通过每个进程的虚拟运行时间(vruntime)来衡量哪个进程最值得被调度。CFS中的就绪队列是一棵以vruntime为键值的红黑树,虚拟时间越小的进程越靠近整个红黑树的最左端。因此,调度器每次选择位于红黑树最左端的那个进程,该进程的vruntime最小。

虚拟运行时间是通过进程的实际运行时间和进程的权重(weight)计算出来的。在CFS调度器中,将进程优先级这个概念弱化,而是强调进程的权重。一个进程的权重越大,则说明这个进程更需要运行,因此它的虚拟运行时间就越小,这样被调度的机会就越大。

而在内核中,进程的虚拟运行时间是自进程诞生以来进行累加的,每个时钟周期内一个进程的虚拟运行时间是通过下面的方法计算的:

一次调度间隔的虚拟运行时间=实际运行时间*(NICE_0_LOAD/权重)

其中,NICE_0_LOAD是nice为0时的权重。也就是说,nice值为0的进程实际运行时间和虚拟运行时间相同。通过这个公式可以看到,权重越大的进程获得的虚拟运行时间越小,那么它将被调度器所调度的机会就越大。

4.3.4.进程选择

CFS调度算法的核心是选择具有最小vruntine的任务。运行队列采用红黑树方式存放,其中节点的键值便是可运行进程的虚拟运行时间。CFS调度器选取待运行的下一个进程,是所有进程中vruntime最小的那个,他对应的便是在树中最左侧的叶子节点。实现选择的函数为pick_next_task_fair。

static struct task_struct *pick_next_task_fair(struct rq *rq)

{

struct task_struct *p;

struct cfs_rq *cfs_rq = &rq->cfs;

struct sched_entity *se;

if (unlikely(!cfs_rq->nr_running))

return NULL;

do {/*此循环为了考虑组调度*/

se = pick_next_entity(cfs_rq);

set_next_entity(cfs_rq, se);/*设置为当前运行进程*/

cfs_rq = group_cfs_rq(se);

} while (cfs_rq);

p = task_of(se);

hrtick_start_fair(rq, p);

return p;

}

该函数最终调用__pick_next_entity完成实质工作

/*函数本身并不会遍历数找到最左叶子节点(是

所有进程中vruntime最小的那个),因为该值已经缓存

在rb_leftmost字段中*/

static struct sched_entity *__pick_next_entity(struct cfs_rq *cfs_rq)

{

/*rb_leftmost为保存的红黑树的最左边的节点*/

struct rb_node *left = cfs_rq->rb_leftmost;

if (!left)

return NULL;

return rb_entry(left, struct sched_entity, run_node);

}

CFS调度运行队列采用红黑树方式组织,红黑树种的key值以vruntime排序。每次选择下一个进程运行时即是选择最左边的一个进程运行。而对于入队和处队都会更新调度队列、调度实体的相关信息。

5.对操作系统进程模型的看法

操作系统其实就相当于一个大型的软件,是用户和计算机的接口,同时也是计算机硬件和其他软件的接口。操作系统管理着计算机的资源,同时为用户的使用分配着资源,进程的调度就是操作系统在幕后控制的。从一开始的非抢占式单任务运行系统到现在的抢占式多任务并行系统,进程的调度算法一次又一次的优化创新,以此来达到更好的效率,来更好的满足用户的需求。而我认为这个创新是永无止境的,随着硬件以及时间的变化,调度算法也会一次又一次的更新换代。

浙公网安备 33010602011771号

浙公网安备 33010602011771号