Kubernetes (k8s): concepts and cluster installation

1. Kubernetes (k8s) architecture diagram:

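1) etcd is officially positioned as a reliable distributed key-value store. It holds the critical data of a distributed cluster and helps the cluster run correctly. In Kubernetes, etcd is the key-value database that persists all important cluster state.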

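2) etcd serves as the persistence layer, and its storage comes in two versions. v2 is memory-based and keeps data in memory. v3 is database-backed and persists data to local volumes, so a shutdown does not corrupt data. etcd v3 is recommended for Kubernetes clusters; v2 was deprecated as of Kubernetes v1.11.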

3) The master server (the leader) runs three components: the scheduler, the replication controller (RC) and the API server.

4) The scheduler receives tasks and chooses suitable nodes to distribute them across. Note that the scheduler hands its decisions to the API server, which writes them to etcd. The scheduler never talks to etcd directly.

5) The replication controller (RC) maintains the desired state. It keeps the number of Pod replicas at the expected value by creating or deleting Pods as needed.

6) The API server is the single entry point for all access to cluster services. Because it is very busy, each component can keep a local cache to reduce the load on it.

7) A node is the worker that runs kubelet, kube-proxy and the containers. Each node therefore needs three pieces of software: kubelet, kube-proxy and Docker (or another container engine).

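8) kubelet talks to the container runtime (here Docker) through the CRI (Container Runtime Interface). It asks Docker to create the corresponding containers and maintains the Pod lifecycle. In short, it works directly with the container engine to manage container lifecycles.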

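9) kube-proxy handles load balancing. It is what makes Pod-to-Pod access, including load balancing, possible. By default it programs the firewall (iptables) to set up these mappings, and newer versions also support IPVS (the LVS kernel component). In short, it writes iptables or IPVS rules that implement service access.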
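The replication controller's desired-versus-actual loop (item 5 above) can be modeled in a few lines of shell. This is a toy sketch, not Kubernetes code. `reconcile` is a hypothetical helper that prints the action a controller would take when the observed Pod count differs from the desired count.

```shell
#!/usr/bin/env bash
# Toy model of one reconcile step (hypothetical helper, not Kubernetes code):
# compare the desired replica count with the observed one and print the action.
reconcile() {   # $1 = desired replicas, $2 = observed running Pods
  local desired=$1 current=$2
  if [ "$current" -lt "$desired" ]; then
    echo "create $((desired - current))"
  elif [ "$current" -gt "$desired" ]; then
    echo "delete $((current - desired))"
  else
    echo "ok"
  fi
}

reconcile 3 1   # two Pods died: create 2
reconcile 3 5   # surplus Pods appeared: delete 2
reconcile 3 3   # nothing to do: ok
```

A real controller runs this comparison continuously against the state stored in etcd (via the API server). It does not act once per call.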

2. Other add-ons:

1) CoreDNS: maps domain names to IPs for the Services (SVCs) in the cluster.

2) Dashboard: provides a browser/server (B/S) interface to the k8s cluster.

3) Ingress Controller: Services on their own only provide layer-4 proxying; Ingress adds layer-7 proxying.

4) Federation: unified management of multiple k8s clusters across data centers.

5) Prometheus: monitoring for the k8s cluster.

6) ELK: a unified platform for ingesting and analyzing k8s cluster logs.

3. etcd architecture diagram:

4. Pod categories, by whether the Pod is managed by a controller:

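1) Standalone Pods, i.e. Pods not managed by a controller. Once such a Pod dies, it is neither restarted nor recreated. If the replica count drops below the desired value, nothing creates a new Pod to make up the difference. This is the drawback of standalone Pods.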

2) Controller-managed Pods.

5. How do Pods relate to containers?

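Answer: When a Pod is defined, the first container it starts is the pause container, and every running Pod has one. A Pod can contain many containers. If a Pod has two containers, both share the pause container's network stack and its volumes.

Sharing the network stack means the containers have no IP addresses of their own; they all use the Pod's (that is, the pause container's) address. The containers are otherwise isolated but not at the network level, so they can reach each other via localhost. This also means containers in the same Pod must not use conflicting ports.

Sharing the volumes means both containers can access the same storage. Containers in one Pod therefore share both the network and the storage volumes.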
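The sketch below prints a two-container Pod manifest to show the shared network namespace in practice. The Pod name `shared-net-demo`, the container names and the `nginx`/`busybox` images are illustrative assumptions. The `probe` container reaches `nginx` at `localhost:80` because both containers use the Pod's network stack.

```shell
#!/usr/bin/env bash
# Print a two-container Pod manifest (names and images are illustrative assumptions).
# Both containers share the Pod's network namespace, so "probe" reaches "web"
# via localhost:80; the two containers must not bind the same port.
pod_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: shared-net-demo
spec:
  containers:
  - name: web
    image: nginx
    ports:
    - containerPort: 80
  - name: probe
    image: busybox
    command: ["sh", "-c", "while true; do wget -qO- http://localhost:80 >/dev/null && echo reachable; sleep 10; done"]
EOF
}
```

On a running cluster, `pod_manifest | kubectl create -f -` creates the Pod. Once nginx is up, `kubectl logs shared-net-demo -c probe` should show `reachable`.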

6. Pod controller types.

1) ReplicationController, ReplicaSet and Deployment are three kinds of controllers.

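1.1) A ReplicationController ensures that the number of application replicas always matches the user-defined count. If a container exits abnormally, a new Pod is created automatically to replace it, and surplus Pods are reclaimed automatically. Newer Kubernetes versions recommend ReplicaSet instead of ReplicationController.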

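1.2) Apart from the name, ReplicaSet is not fundamentally different from ReplicationController. However, ReplicaSet supports set-based selectors, which makes it more useful, and the latest versions use ReplicaSet throughout.

Although a ReplicaSet can be used on its own, the general recommendation is to let a Deployment manage ReplicaSets automatically. This avoids incompatibilities with other mechanisms; for example, a ReplicaSet does not support rolling updates, but a Deployment does.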

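1.3) A Deployment provides a declarative way to define Pods and ReplicaSets, and it replaces the older ReplicationController for managing applications. Typical uses:
- defining a Deployment to create Pods and a ReplicaSet;
- rolling out and rolling back applications;
- scaling out and in;
- pausing and resuming a Deployment.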

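2) HPA (Horizontal Pod Autoscaling) scales scalable workloads such as Deployments and ReplicaSets. The autoscaling/v1 API scales only on Pod CPU utilization. The alpha API (autoscaling/v2alpha1) adds scaling on memory and on user-defined metrics.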

3) StatefulSet is designed for stateful services, whereas Deployments and ReplicaSets are designed for stateless ones. Its use cases include:

3.1) Stable persistent storage: a rescheduled Pod can still access the same persistent data. This is implemented with PVCs.

3.2) Stable network identity: a rescheduled Pod keeps its PodName and HostName. This is implemented with a Headless Service, i.e. a Service without a Cluster IP.

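3.3) Ordered deployment and ordered scaling: Pods are ordered, and deployment or scale-up follows the defined order, from 0 to N-1. All previous Pods must be Running and Ready before the next Pod starts. The StatefulSet controller itself enforces this order through the default OrderedReady Pod management policy.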

3.4) Ordered scale-down and ordered deletion, from N-1 to 0.

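4) A DaemonSet ensures that all (or some) Nodes run a copy of a Pod. When a Node joins the cluster, a Pod is added for it. When a Node is removed, those Pods are garbage-collected. Deleting a DaemonSet deletes all the Pods it created. Typical uses: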

4.1) Running a cluster storage daemon on every Node, e.g. glusterd or ceph.

4.2) Running a log collection daemon on every Node, e.g. fluentd or logstash.

4.3) Running a monitoring daemon on every Node, e.g. Prometheus Node Exporter.

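5) Job and CronJob. A Job handles batch tasks, i.e. tasks that run only once, and ensures that one or more Pods of the batch task terminate successfully. A CronJob manages time-based Jobs, i.e. Jobs that: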

5.1) run once at a given point in time;

5.2) run periodically at given points in time.
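The Deployment (item 1.3) and HPA (item 2) descriptions above can be made concrete with a short shell sketch.

`deployment_manifest` prints a minimal Deployment. Its name `nginx-deploy`, the label `app: nginx` and the image tag are illustrative assumptions. `apps/v1` needs Kubernetes 1.9 or later, so the 1.5.2 packages installed later in this article predate it.

`hpa_desired` is a hypothetical helper that applies the scaling rule from the Kubernetes HPA documentation, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), in integer arithmetic. The real controller also applies a tolerance and min/max bounds.

```shell
#!/usr/bin/env bash
# Minimal Deployment manifest; name, label and image are illustrative assumptions.
# apps/v1 requires Kubernetes 1.9+.
deployment_manifest() {   # $1 = replica count (default 3)
  local replicas=${1:-3}
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
spec:
  replicas: ${replicas}
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.19
        ports:
        - containerPort: 80
EOF
}

# HPA scaling rule in integer arithmetic: ceil(current * metric / target).
# Ignores the controller's tolerance, min/max replicas and stabilization window.
hpa_desired() {   # $1 = current replicas, $2 = current CPU %, $3 = target CPU %
  local current=$1 metric=$2 target=$3
  echo $(( (current * metric + target - 1) / target ))
}

hpa_desired 3 90 50   # 3 * 90 / 50 = 5.4, rounded up to 6
```

On a cluster with a recent kubectl, the following commands exercise these features:
- `deployment_manifest 3 | kubectl apply -f -` creates the Deployment.
- `kubectl scale deployment nginx-deploy --replicas=5` scales it.
- `kubectl set image deployment/nginx-deploy nginx=nginx:1.20` triggers a rolling update.
- `kubectl rollout undo deployment/nginx-deploy` rolls back.
- `kubectl rollout pause deployment/nginx-deploy` and `kubectl rollout resume deployment/nginx-deploy` pause and resume.
- `kubectl autoscale deployment nginx-deploy --cpu-percent=50 --min=1 --max=10` attaches an HPA.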

7. Service discovery.

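Answer: A client may want to access a group of Pods through a single Service. A Service can only proxy the Pods uniformly if they are related, for example because they were created by the same Deployment or carry the same set of labels. The Service selects its Pods by label.

The Service then has its own IP address and port. The client accesses that IP and port and thereby reaches the corresponding Pods indirectly, with requests load-balanced across them round-robin (RR).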
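A round-robin picker is one way to picture how requests are spread across the Pods a Service has selected. This toy sketch uses made-up endpoint addresses and a hypothetical `pick_endpoint` helper. It is not how kube-proxy is implemented: in iptables mode kube-proxy picks a backend at random, while IPVS mode offers `rr` and other schedulers.

```shell
#!/usr/bin/env bash
# Toy round-robin choice among a Service's endpoints (addresses are made up).
ENDPOINTS="10.1.15.2:80 10.1.15.3:80 10.1.20.3:80"

pick_endpoint() {   # $1 = request number, counting from 0
  # awk splits the list into fields; field (n mod NF) + 1 is the chosen endpoint
  echo "$ENDPOINTS" | awk -v n="$1" '{ print $((n % NF) + 1) }'
}

for n in 0 1 2 3; do pick_endpoint "$n"; done
# 10.1.15.2:80, 10.1.15.3:80, 10.1.20.3:80, then 10.1.15.2:80 again
```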

8. The Kubernetes network communication model.

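Answer: 1) The Kubernetes network model assumes that all Pods live in a flat network space where they can reach each other directly. On GCE (Google Compute Engine) this network already exists, and Kubernetes assumes it is there. In a private cloud you cannot make that assumption, so you have to build the network yourself. First enable Docker containers on different nodes to reach each other, then run Kubernetes.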

2) Between containers in the same Pod: lo. All containers in a Pod share the pause container's network stack. They therefore communicate through its loopback interface (lo), using localhost.

3) Between Pods: an overlay network.

4) Between Pods and Services: iptables rules on each node. Newer versions add LVS (IPVS), which forwards more efficiently and scales higher.

9. Network solution: Kubernetes + Flannel.

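Answer: Flannel is a network planning service designed by the CoreOS team for Kubernetes. Put simply, it gives the Docker containers created on different nodes virtual IP addresses that are unique across the whole cluster. It also builds an overlay network between these IP addresses and uses it to deliver packets unchanged to the target container.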

Explanation:

1) The figure above shows two physical hosts, 192.168.66.11 and 192.168.66.12.

2) Four Pods run on them: Web app1, Web app2, Web app3 and Backend, the component that receives external traffic.

3) External requests reach Backend, which uses its own gateway logic to decide which service each request goes to. So we need to understand how Web app2 and Web app3 communicate with Backend.

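4) First, each real Node server runs a flanneld daemon. It listens on a port that is later used to send and receive forwarded packets. Once started, flanneld creates a flannel0 device dedicated to collecting the packets forwarded out of docker0. You can think of flannel0 as a hook that intercepts those packets.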

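docker0 assigns IP addresses from its subnet to the Pods on its host. When two different Pods on the same host communicate, traffic goes through the docker0 bridge, because both addresses belong to the same subnet under that bridge.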

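5) The hard part is crossing hosts while still reaching the peer directly by its IP. Suppose Web app2 sends a packet to Backend. The source address is 10.1.15.2/24 and the destination address is 10.1.20.3/24.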

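Because source and destination are in different subnets, the packet goes to the gateway, docker0, where the hook captures it into flannel0. flannel0 holds routing entries that were fetched from etcd and written to the local host; they decide which machine to route to. Because flannel0 is opened by flanneld, the packet then reaches flanneld. flanneld encapsulates it in MAC, UDP and payload layers and forwards it to flanneld's port on 192.168.66.12.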

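6) On the receiving host, flanneld intercepts the packet, decapsulates it and passes it to flannel0. From there it goes to docker0, which routes it to Backend. The packet from the source was wrapped in an extra layer, so the destination docker0 never sees that outer layer; it only sees the original inner packet. This is how a flat network across hosts is achieved.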
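The routing decision in steps 4) to 6) boils down to a lookup from destination subnet to host. The sketch below hard-codes the two subnets from the example. It assumes each host owns one /24, so the first three octets of a Pod IP identify the host. Real flanneld reads these subnet leases from etcd, and the helper names here are hypothetical.

```shell
#!/usr/bin/env bash
# Simplified flannel next-hop decision (hypothetical helpers). Assumption: every
# host owns one /24, so the first three octets of a Pod IP identify its host.
# Real flanneld learns these subnet-to-host mappings from etcd.
LOCAL_HOST=192.168.66.11

host_for_subnet() {   # $1 = first three octets, e.g. 10.1.20
  case $1 in
    10.1.15) echo 192.168.66.11 ;;
    10.1.20) echo 192.168.66.12 ;;
  esac
}

next_hop() {   # $1 = destination Pod IP
  local host
  host=$(host_for_subnet "${1%.*}")   # strip the last octet
  if [ -z "$host" ]; then
    echo "unknown subnet"
  elif [ "$host" = "$LOCAL_HOST" ]; then
    echo "local via docker0"
  else
    echo "encapsulate to $host"
  fi
}

next_hop 10.1.15.3   # another Pod on this host: local via docker0
next_hop 10.1.20.3   # Backend on the other host: encapsulate to 192.168.66.12
```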

10. The architecture in the figure above corresponds to the following access process.

11. What etcd provides for Flannel, i.e. how etcd and Flannel are related.

Answer: 1) etcd stores and manages the IP address ranges that Flannel can allocate.

2) Flannel watches the actual address of each Pod in etcd and builds and maintains a Pod-to-node routing table in memory.

12. Network communication in different scenarios.

Answer: 1) Inside a Pod, containers share one network namespace and the same Linux protocol stack, so they can reach each other via localhost.

2) Pod1 to Pod2 has two cases: the Pods are either on different hosts or on the same host.

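a. Case 1, Pod1 and Pod2 are on different hosts. A Pod's address is in the same subnet as docker0, but the docker0 subnet and the host NIC are in completely different IP ranges, and nodes can only communicate through their physical NICs. The solution is to associate each Pod IP with the IP of the Node it runs on; this association lets Pods reach each other.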

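b. Case 2, Pod1 and Pod2 are on the same host. The docker0 bridge forwards the request directly to Pod2 without going through Flannel, so the exchange completes over the local bridge.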

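3) Pod to Service: for performance reasons, iptables currently maintains and forwards all of this traffic. The latest versions can switch to LVS (IPVS) mode, which forwards more efficiently.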

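4) Pod to the Internet: when a Pod sends a request outward, the routing table forwards the packet to the host's NIC. After the host NIC makes the routing decision, iptables performs MASQUERADE, rewriting the source IP to the host NIC's IP. The request then goes to the external server. In other words, containers in a Pod reach the Internet through dynamic SNAT.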

5) Internet to Pod: external clients reach the cluster through a Service of type NodePort.
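A NodePort Service makes item 5) concrete. The sketch prints a manifest; the name `nginx-svc`, the selector `app: nginx` and the port numbers are illustrative assumptions. The range check reflects the apiserver's default `--service-node-port-range` of 30000-32767.

```shell
#!/usr/bin/env bash
# Print a NodePort Service for Pods labelled app=nginx (name, label and ports are
# illustrative assumptions). nodePort must lie in the default range 30000-32767.
nodeport_service() {   # $1 = nodePort
  local port=$1
  if [ "$port" -lt 30000 ] || [ "$port" -gt 32767 ]; then
    echo "nodePort $port is outside the default range 30000-32767" >&2
    return 1
  fi
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
  - port: 80
    targetPort: 80
    nodePort: ${port}
EOF
}
```

For example, `nodeport_service 30080 | kubectl create -f -` would expose the selected Pods on port 30080 of every node, reachable from outside as `http://<node-ip>:30080`.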

13. Component communication diagram:

Answer: k8s has three network layers: the node network, the Pod network and the Service network.

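Note: only the node network is a real physical network. A server needs just one NIC for it, though multiple NICs are also possible. The Pod network and the Service network are virtual and can be thought of as internal networks.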

Access to the Service network goes through the Service, which then reaches the backend Pods through iptables or LVS (IPVS) translation.

14. What is Kubernetes?

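Answer: Kubernetes is a container management platform open-sourced by Google. It distills the best parts of Google's Borg project so that today's developers can use them more simply and directly.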

15. What are the core features of Kubernetes?

Answer: self-healing, service discovery and load balancing, automated rollouts and rollbacks, and elastic scaling.

16. Kubernetes core components.

Answer: 1) etcd stores the state of the entire cluster.

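2) apiserver is the only entry point for resource operations. It provides authentication, authorization, access control, and API registration and discovery.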

3) controller manager maintains cluster state, e.g. failure detection, auto-scaling and rolling updates.

4) scheduler handles resource scheduling, placing Pods on suitable machines according to the configured scheduling policy.

5) kubelet maintains container lifecycles and also manages volumes (CSI) and networking (CNI).

6) Container runtime manages images and actually runs Pods and containers (CRI).

7) kube-proxy provides in-cluster service discovery and load balancing for Services.

17. Kubernetes add-on components.

Answer: 1) kube-dns provides DNS for the whole cluster.

2) Ingress Controller provides external entry points for services.

3) Heapster provides resource monitoring.

4) Dashboard provides a GUI.

5) Federation provides clusters that span availability zones.

6) Fluentd-elasticsearch provides cluster log collection, storage and querying.

18. Kubernetes architecture diagram:

Edit the network configuration with: `vim /etc/sysconfig/network-scripts/ifcfg-ens33`

1. Kubernetes (k8s) cluster installation.

First, check the CentOS 7 kernel and release versions. I am using CentOS Linux release 7.6.1810 (Core) with kernel Linux 3.10.0-957.el7.x86_64.

```
# Check the kernel version, method 1
[root@slaver4 ~]# uname -sr
Linux 3.10.0-957.el7.x86_64
[root@slaver4 ~]#

# Check the kernel version, method 2
[root@slaver4 ~]# uname -a
Linux slaver4 3.10.0-957.el7.x86_64 #1 SMP Thu Nov 8 23:39:32 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux
[root@slaver4 ~]#

# Check the kernel version, method 3
[root@slaver4 ~]# cat /proc/version
Linux version 3.10.0-957.el7.x86_64 (mockbuild@kbuilder.bsys.centos.org) (gcc version 4.8.5 20150623 (Red Hat 4.8.5-36) (GCC) ) #1 SMP Thu Nov 8 23:39:32 UTC 2018
[root@slaver4 ~]#


# Check the release version (works across Linux distributions)
[root@slaver4 ~]# yum install -y redhat-lsb-core
Loaded plugins: fastestmirror, langpacks
Loading mirror speeds from cached hostfile
 * base: mirrors.tuna.tsinghua.edu.cn
 * extras: mirrors.tuna.tsinghua.edu.cn
 * updates: mirrors.tuna.tsinghua.edu.cn
base                                                     | 3.6 kB  00:00:00
extras                                                   | 2.9 kB  00:00:00
updates                                                  | 2.9 kB  00:00:00
updates/7/x86_64/primary_db                              | 2.1 MB  00:00:00
Resolving Dependencies
--> Running transaction check
---> Package redhat-lsb-core.x86_64 0:4.1-27.el7.centos.1 will be installed
--> Processing Dependency: redhat-lsb-submod-security(x86-64) = 4.1-27.el7.centos.1 for package: redhat-lsb-core-4.1-27.el7.centos.1.x86_64
--> Processing Dependency: spax for package: redhat-lsb-core-4.1-27.el7.centos.1.x86_64
--> Processing Dependency: /usr/bin/patch for package: redhat-lsb-core-4.1-27.el7.centos.1.x86_64
--> Processing Dependency: /usr/bin/m4 for package: redhat-lsb-core-4.1-27.el7.centos.1.x86_64
--> Running transaction check
---> Package m4.x86_64 0:1.4.16-10.el7 will be installed
---> Package patch.x86_64 0:2.7.1-12.el7_7 will be installed
---> Package redhat-lsb-submod-security.x86_64 0:4.1-27.el7.centos.1 will be installed
---> Package spax.x86_64 0:1.5.2-13.el7 will be installed
--> Finished Dependency Resolution

Dependencies Resolved

============================================================================================================
 Package                        Arch          Version                        Repository            Size
============================================================================================================
Installing:
 redhat-lsb-core                x86_64        4.1-27.el7.centos.1            base                  38 k
Installing for dependencies:
 m4                             x86_64        1.4.16-10.el7                  base                 256 k
 patch                          x86_64        2.7.1-12.el7_7                 base                 111 k
 redhat-lsb-submod-security     x86_64        4.1-27.el7.centos.1            base                  15 k
 spax                           x86_64        1.5.2-13.el7                   base                 260 k

Transaction Summary
============================================================================================================
Install  1 Package (+4 Dependent packages)

Total download size: 679 k
Installed size: 1.3 M
Downloading packages:
(1/5): patch-2.7.1-12.el7_7.x86_64.rpm                                 | 111 kB  00:00:00
(2/5): redhat-lsb-core-4.1-27.el7.centos.1.x86_64.rpm                  |  38 kB  00:00:00
(3/5): redhat-lsb-submod-security-4.1-27.el7.centos.1.x86_64.rpm       |  15 kB  00:00:00
(4/5): m4-1.4.16-10.el7.x86_64.rpm                                     | 256 kB  00:00:00
(5/5): spax-1.5.2-13.el7.x86_64.rpm                                    | 260 kB  00:00:00
------------------------------------------------------------------------------------------------------------
Total                                                         1.5 MB/s | 679 kB  00:00:00
Running transaction check
Running transaction test
Transaction test succeeded
Running transaction
Warning: RPMDB altered outside of yum.
  Installing : spax-1.5.2-13.el7.x86_64                                        1/5
  Installing : redhat-lsb-submod-security-4.1-27.el7.centos.1.x86_64           2/5
  Installing : m4-1.4.16-10.el7.x86_64                                         3/5
  Installing : patch-2.7.1-12.el7_7.x86_64                                     4/5
  Installing : redhat-lsb-core-4.1-27.el7.centos.1.x86_64                      5/5
  Verifying  : patch-2.7.1-12.el7_7.x86_64                                     1/5
  Verifying  : m4-1.4.16-10.el7.x86_64                                         2/5
  Verifying  : redhat-lsb-submod-security-4.1-27.el7.centos.1.x86_64           3/5
  Verifying  : spax-1.5.2-13.el7.x86_64                                        4/5
  Verifying  : redhat-lsb-core-4.1-27.el7.centos.1.x86_64                      5/5

Installed:
  redhat-lsb-core.x86_64 0:4.1-27.el7.centos.1

Dependency Installed:
  m4.x86_64 0:1.4.16-10.el7    patch.x86_64 0:2.7.1-12.el7_7
  redhat-lsb-submod-security.x86_64 0:4.1-27.el7.centos.1    spax.x86_64 0:1.5.2-13.el7

Complete!
[root@slaver4 ~]# lsb_release -a
LSB Version:    :core-4.1-amd64:core-4.1-noarch
Distributor ID: CentOS
Description:    CentOS Linux release 7.6.1810 (Core)
Release:        7.6.1810
Codename:       Core
[root@slaver4 ~]# cat /etc/redhat-release
CentOS Linux release 7.6.1810 (Core)
[root@slaver4 ~]#


# Only for Red Hat family distributions
[root@slaver4 ~]# cat /etc/redhat-release
CentOS Linux release 7.6.1810 (Core)
[root@slaver4 ~]#
```

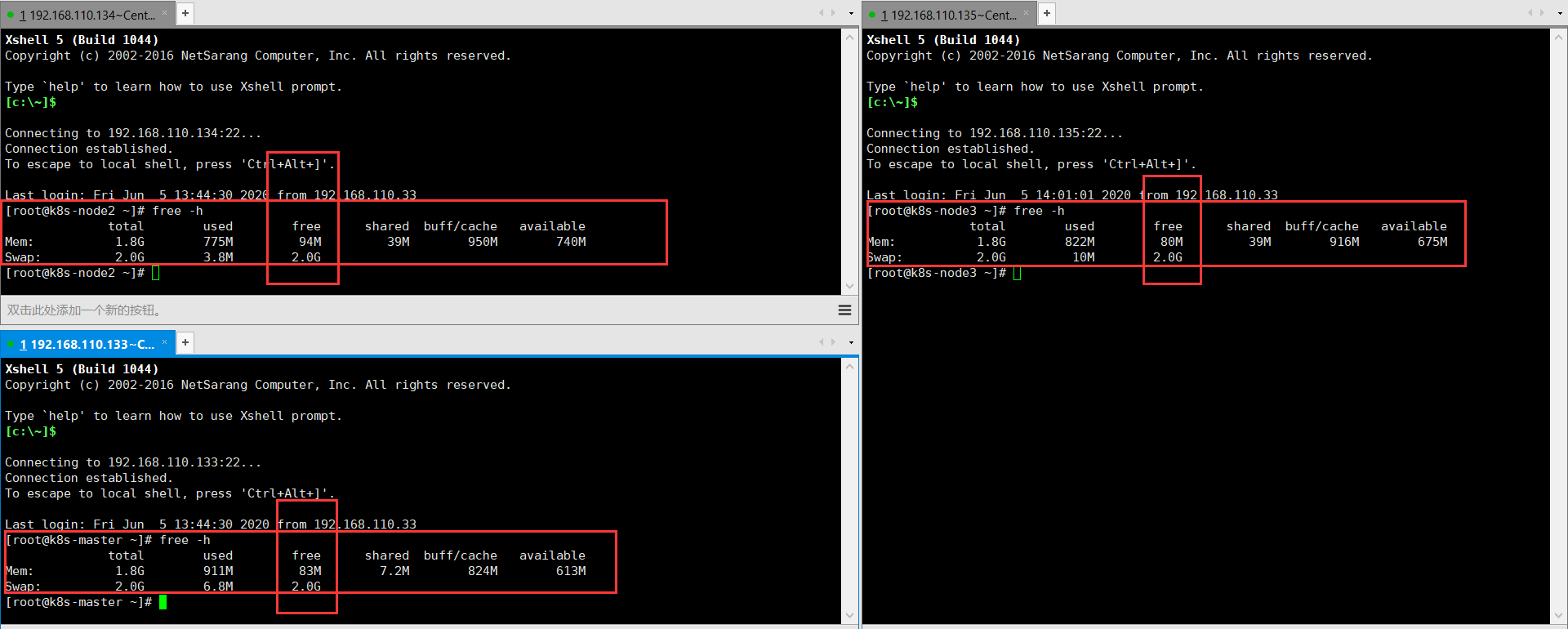

The network adapter uses VMnet8 (NAT mode), and there are three virtual machines:

```
192.168.110.133  2 GB RAM, 1 processor with 2 cores
192.168.110.134  2 GB RAM, 1 processor with 2 cores
192.168.110.135  2 GB RAM, 1 processor with 2 cores
```

My computer's resources are limited, so I use only three machines. One acts as both Master and Node, and the other two are Node-only. The allocation is:

| Hostname | IP address | Role | Components |
| --- | --- | --- | --- |
| k8s-master | 192.168.110.133 | Master, Node | etcd, api-server, controller-manager, scheduler, kubelet, kube-proxy |
| k8s-node2 | 192.168.110.134 | Node | kubelet, kube-proxy, docker |
| k8s-node3 | 192.168.110.135 | Node | kubelet, kube-proxy, docker |

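Note: installing the Node packages pulls in Docker automatically. Because the Master and one Node share a machine, the Master only needs kubelet and kube-proxy installed in addition. The result is one Master node and three Node nodes.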

2. Change the hostnames as planned: k8s-master, k8s-node2 and k8s-node3.

```
[root@slaver4 ~]# vim /etc/hostname
```

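To apply the new name immediately without rebooting, run `hostname k8s-master`, substituting each machine's own name. Reconnect Xshell afterwards to see the change.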

3. Map hostnames to IP addresses with hosts entries:

```
[root@k8s-master ~]# vim /etc/hosts
```

```
192.168.110.133 k8s-master
192.168.110.134 k8s-node2
192.168.110.135 k8s-node3
```

After editing, ping the new hostnames to confirm they resolve and are reachable.

```
[root@k8s-master ~]# ping k8s-node2
[root@k8s-master ~]# ping k8s-node3
```
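A small idempotent script can also add the hosts entries, so re-running it on each machine does not create duplicates. This is a sketch: `HOSTS_FILE`, `add_host` and `add_cluster_hosts` are hypothetical names, and the script only checks the first hostname on each line.

```shell
#!/usr/bin/env bash
# Idempotently add the cluster's hosts entries (sketch; helper names are hypothetical).
# HOSTS_FILE defaults to /etc/hosts, which needs root; point it at a scratch file to test.
HOSTS_FILE=${HOSTS_FILE:-/etc/hosts}

add_host() {   # $1 = IP address, $2 = hostname
  # Append only if no line already maps this IP to this (first) hostname
  if ! awk -v ip="$1" -v name="$2" '$1 == ip && $2 == name { found = 1 } END { exit !found }' "$HOSTS_FILE" 2>/dev/null; then
    printf '%s %s\n' "$1" "$2" >> "$HOSTS_FILE"
  fi
}

add_cluster_hosts() {
  add_host 192.168.110.133 k8s-master
  add_host 192.168.110.134 k8s-node2
  add_host 192.168.110.135 k8s-node3
}
```

On each of the three machines, run `add_cluster_hosts` as root. Then confirm resolution with `for h in k8s-master k8s-node2 k8s-node3; do ping -c 1 "$h"; done`.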

Install the dependency packages on all three machines:

```
[root@master ~]# yum install -y conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git
```

3. Disable the firewall:

```
[root@k8s-master ~]# systemctl stop firewalld
[root@k8s-master ~]# systemctl disable firewalld
```

Alternatively, switch the firewall to iptables with an empty rule set:

```
# Stop firewalld and disable it at boot
[root@master ~]# systemctl stop firewalld && systemctl disable firewalld

# Install the iptables service, start it, enable it at boot, flush the rules and save the (empty) default rules
# [root@master ~]# yum -y install iptables-services && systemctl start iptables && systemctl enable iptables && iptables -F && service iptables save
```

Disable swap and SELinux to avoid errors later:

```
# Turn off swap now and comment it out in /etc/fstab so it stays off. Kubernetes checks during
# initialization (init) that swap is disabled; with swap on, Pod containers may run in swap and perform much worse
[root@master ~]# swapoff -a && sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

# Disable SELinux
[root@master ~]# setenforce 0 && sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
```

Tune kernel parameters for k8s:

```
[root@master ~]# cat > kubernetes.conf <<EOF
> net.bridge.bridge-nf-call-iptables=1
> net.bridge.bridge-nf-call-ip6tables=1
> net.ipv4.ip_forward=1
> net.ipv4.tcp_tw_recycle=0
> vm.swappiness=0 # do not use swap; allow it only when the system is OOM
> vm.overcommit_memory=1 # do not check whether physical memory is sufficient
> vm.panic_on_oom=0 # do not panic on OOM; let the OOM killer act
> fs.inotify.max_user_instances=8192
> fs.inotify.max_user_watches=1048576
> fs.file-max=52706963
> fs.nr_open=52706963
> net.ipv6.conf.all.disable_ipv6=1
> net.netfilter.nf_conntrack_max=2310720
> EOF

[root@master ~]# cp kubernetes.conf /etc/sysctl.d/kubernetes.conf

[root@master ~]# sysctl -p /etc/sysctl.d/kubernetes.conf
sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory
sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory
net.ipv4.ip_forward = 1
net.ipv4.tcp_tw_recycle = 0
vm.swappiness = 0 # do not use swap; allow it only when the system is OOM
vm.overcommit_memory = 1 # do not check whether physical memory is sufficient
vm.panic_on_oom = 0 # do not panic on OOM; let the OOM killer act
fs.inotify.max_user_instances = 8192
fs.inotify.max_user_watches = 1048576
fs.file-max = 52706963
fs.nr_open = 52706963
net.ipv6.conf.all.disable_ipv6 = 1
net.netfilter.nf_conntrack_max = 2310720
[root@master ~]#
```

The two `cannot stat` errors mean the `br_netfilter` kernel module is not loaded yet. Run `modprobe br_netfilter` and then run `sysctl -p` again.

The required parameters are listed below; the rest are optional tuning:

```
# Pass bridged traffic through iptables/ip6tables (bridge mode)
net.bridge.bridge-nf-call-iptables=1
net.bridge.bridge-nf-call-ip6tables=1
# Disable IPv6
net.ipv6.conf.all.disable_ipv6=1

# Copy the file so it is applied at boot
cp kubernetes.conf /etc/sysctl.d/kubernetes.conf

# Reload manually so it takes effect immediately
sysctl -p /etc/sysctl.d/kubernetes.conf
```
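In the transcript above, sysctl echoes the inline `# ...` comments back as part of the values. This happens because sysctl.conf(5) only treats lines that *start* with `#` or `;` as comments. A safer version keeps every comment on its own line. `write_k8s_sysctl` is a hypothetical helper name; the parameter values are the same as above.

```shell
#!/usr/bin/env bash
# Write the k8s sysctl settings with every comment on its own line
# (hypothetical helper; values copied from the configuration above).
write_k8s_sysctl() {   # $1 = output file
  cat > "$1" <<'EOF'
# required: pass bridged traffic through iptables/ip6tables
net.bridge.bridge-nf-call-iptables=1
net.bridge.bridge-nf-call-ip6tables=1
net.ipv4.ip_forward=1
net.ipv4.tcp_tw_recycle=0
# avoid swap unless the system is out of memory
vm.swappiness=0
# do not check whether physical memory is sufficient
vm.overcommit_memory=1
# do not panic on OOM; let the OOM killer act
vm.panic_on_oom=0
fs.inotify.max_user_instances=8192
fs.inotify.max_user_watches=1048576
fs.file-max=52706963
fs.nr_open=52706963
# required: disable IPv6
net.ipv6.conf.all.disable_ipv6=1
net.netfilter.nf_conntrack_max=2310720
EOF
}
```

As root, run `write_k8s_sysctl /etc/sysctl.d/kubernetes.conf && sysctl -p /etc/sysctl.d/kubernetes.conf`.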

Set the system time zone:

```
# Set the time zone to Asia/Shanghai
[root@master ~]# timedatectl set-timezone Asia/Shanghai

# Keep the hardware clock in UTC (write the current UTC time to it)
[root@master ~]# timedatectl set-local-rtc 0

# Restart services that depend on the system time
[root@master ~]# systemctl restart rsyslog && systemctl restart crond
```

Stop services the system does not need:

```
[root@master ~]# systemctl stop postfix && systemctl disable postfix
Removed symlink /etc/systemd/system/multi-user.target.wants/postfix.service.
[root@master ~]#
```

Configure rsyslogd and systemd journald. Since CentOS 7 the system boots with systemd, so two logging systems run at the same time. The configuration below keeps only one of them, journald.

```
# Directory for persistent logs
[root@master ~]# mkdir /var/log/journal
[root@master ~]# mkdir /etc/systemd/journald.conf.d
[root@master ~]# cat > /etc/systemd/journald.conf.d/99-prophet.conf <<EOF
> [Journal]
> # Persist logs to disk
> Storage=persistent
> # Compress historical logs
> Compress=yes
> SyncIntervalSec=5m
> RateLimitInterval=30s
> RateLimitBurst=1000
> # Use at most 10G of disk
> SystemMaxUse=10G
> # Maximum size of a single journal file: 200M
> SystemMaxFileSize=200M
> # Keep logs for 2 weeks
> MaxRetentionSec=2week
> # Do not forward logs to syslog
> ForwardToSyslog=no
> EOF
# Restart journald to apply the configuration
[root@master ~]# systemctl restart systemd-journald
[root@master ~]#
```

Upgrade the kernel to 4.4. The 3.10.x kernel shipped with CentOS 7.x has bugs that make Docker and Kubernetes unstable.

```
# Check the kernel version
[root@master ~]# uname -a
Linux master 3.10.0-1160.el7.x86_64 #1 SMP Mon Oct 19 16:18:59 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux
# Upgrade the kernel
[root@master ~]# rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm
Retrieving http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm
warning: /var/tmp/rpm-tmp.6JHEgq: Header V4 DSA/SHA1 Signature, key ID baadae52: NOKEY
Preparing...                          ################################# [100%]
Updating / installing...
   1:elrepo-release-7.0-3.el7.elrepo  ################################# [100%]
[root@master ~]#

# After installation, check that the kernel's menuentry in /boot/grub2/grub.cfg contains an initrd16 line; if not, install again
[root@master ~]# yum --enablerepo=elrepo-kernel install -y kernel-lt

# Boot from the new kernel by default
[root@master ~]# grub2-set-default "CentOS Linux (4.4.182-1.el7.elrepo.x86_64) 7 (Core)"

# Finally, reboot the VM
[root@master ~]# reboot

# After the reboot, check the kernel version with uname -a
[root@master ~]# uname -a
```
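A version check before and after the reboot confirms the upgrade took effect. This sketch uses GNU `sort -V` to compare versions. `kernel_at_least` is a hypothetical helper that strips the distribution suffix (everything after the first `-`) before comparing.

```shell
#!/usr/bin/env bash
# Return success if a kernel release is at least the required version
# (hypothetical helper; relies on GNU sort -V for version ordering).
kernel_at_least() {   # $1 = required version, $2 = release (default: uname -r)
  local required=$1
  local release=${2:-$(uname -r)}
  local base=${release%%-*}   # "3.10.0-957.el7.x86_64" -> "3.10.0"
  local lowest
  lowest=$(printf '%s\n%s\n' "$required" "$base" | sort -V | head -n 1)
  [ "$lowest" = "$required" ]
}

if kernel_at_least 4.4; then echo "kernel OK"; else echo "kernel older than 4.4"; fi
```

The version that `kernel-lt` installs from ELRepo changes over time. Before running `grub2-set-default`, list the actual boot entries with `grep ^menuentry /boot/grub2/grub.cfg` and use the matching title.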

4. Install the etcd service on the Master node, k8s-master. etcd essentially acts as the cluster's database.

```
[root@k8s-master ~]# yum install etcd -y
Loaded plugins: fastestmirror, langpacks
Loading mirror speeds from cached hostfile
 * base: mirrors.tuna.tsinghua.edu.cn
 * extras: mirrors.tuna.tsinghua.edu.cn
 * updates: mirrors.tuna.tsinghua.edu.cn
base                                                     | 3.6 kB  00:00:00
extras                                                   | 2.9 kB  00:00:00
updates                                                  | 2.9 kB  00:00:00
Resolving Dependencies
--> Running transaction check
---> Package etcd.x86_64 0:3.3.11-2.el7.centos will be installed
--> Finished Dependency Resolution

Dependencies Resolved

============================================================================================================
 Package          Arch              Version                            Repository                Size
============================================================================================================
Installing:
 etcd             x86_64            3.3.11-2.el7.centos                extras                    10 M

Transaction Summary
============================================================================================================
Install  1 Package

Total download size: 10 M
Installed size: 45 M
Downloading packages:
etcd-3.3.11-2.el7.centos.x86_64.rpm                      |  10 MB  00:00:04
Running transaction check
Running transaction test
Transaction test succeeded
Running transaction
  Installing : etcd-3.3.11-2.el7.centos.x86_64                                1/1
  Verifying  : etcd-3.3.11-2.el7.centos.x86_64                                1/1

Installed:
  etcd.x86_64 0:3.3.11-2.el7.centos

Complete!
[root@k8s-master ~]#
```

Next, configure etcd. etcd natively supports clustering, so its configuration file also contains cluster settings.

```
[root@k8s-master ~]# vim /etc/etcd/etcd.conf
```

```
#[Member]
#ETCD_CORS=""
# Directory where the data is stored
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
#ETCD_WAL_DIR=""
#ETCD_LISTEN_PEER_URLS="http://localhost:2380"
# Client listen address. Changing localhost to 0.0.0.0 allows both remote and local access; local clients connect via 127.0.0.1 by default.
ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"
#ETCD_MAX_SNAPSHOTS="5"
#ETCD_MAX_WALS="5"
# Member name. It matters little without a cluster; in a cluster every member needs a different name.
ETCD_NAME="default"
#ETCD_SNAPSHOT_COUNT="100000"
#ETCD_HEARTBEAT_INTERVAL="100"
#ETCD_ELECTION_TIMEOUT="1000"
#ETCD_QUOTA_BACKEND_BYTES="0"
#ETCD_MAX_REQUEST_BYTES="1572864"
#ETCD_GRPC_KEEPALIVE_MIN_TIME="5s"
#ETCD_GRPC_KEEPALIVE_INTERVAL="2h0m0s"
#ETCD_GRPC_KEEPALIVE_TIMEOUT="20s"
#
#[Clustering]
#ETCD_INITIAL_ADVERTISE_PEER_URLS="http://localhost:2380"
# Client URL advertised to the cluster: change localhost to 192.168.110.133.
ETCD_ADVERTISE_CLIENT_URLS="http://192.168.110.133:2379"
#ETCD_DISCOVERY=""
#ETCD_DISCOVERY_FALLBACK="proxy"
#ETCD_DISCOVERY_PROXY=""
#ETCD_DISCOVERY_SRV=""
#ETCD_INITIAL_CLUSTER="default=http://localhost:2380"
#ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
#ETCD_INITIAL_CLUSTER_STATE="new"
#ETCD_STRICT_RECONFIG_CHECK="true"
#ETCD_ENABLE_V2="true"
#
#[Proxy]
#ETCD_PROXY="off"
#ETCD_PROXY_FAILURE_WAIT="5000"
#ETCD_PROXY_REFRESH_INTERVAL="30000"
#ETCD_PROXY_DIAL_TIMEOUT="1000"
#ETCD_PROXY_WRITE_TIMEOUT="5000"
#ETCD_PROXY_READ_TIMEOUT="0"
#
#[Security]
#ETCD_CERT_FILE=""
#ETCD_KEY_FILE=""
#ETCD_CLIENT_CERT_AUTH="false"
#ETCD_TRUSTED_CA_FILE=""
#ETCD_AUTO_TLS="false"
#ETCD_PEER_CERT_FILE=""
#ETCD_PEER_KEY_FILE=""
#ETCD_PEER_CLIENT_CERT_AUTH="false"
#ETCD_PEER_TRUSTED_CA_FILE=""
#ETCD_PEER_AUTO_TLS="false"
#
#[Logging]
#ETCD_DEBUG="false"
#ETCD_LOG_PACKAGE_LEVELS=""
#ETCD_LOG_OUTPUT="default"
#
#[Unsafe]
#ETCD_FORCE_NEW_CLUSTER="false"
#
#[Version]
#ETCD_VERSION="false"
#ETCD_AUTO_COMPACTION_RETENTION="0"
#
#[Profiling]
#ETCD_ENABLE_PPROF="false"
#ETCD_METRICS="basic"
#
#[Auth]
#ETCD_AUTH_TOKEN="simple"
```

Start etcd:

```
[root@k8s-master ~]# systemctl start etcd
[root@k8s-master ~]#
```

Enable etcd at boot:

```
[root@k8s-master ~]# systemctl enable etcd
Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service.
[root@k8s-master ~]#
```

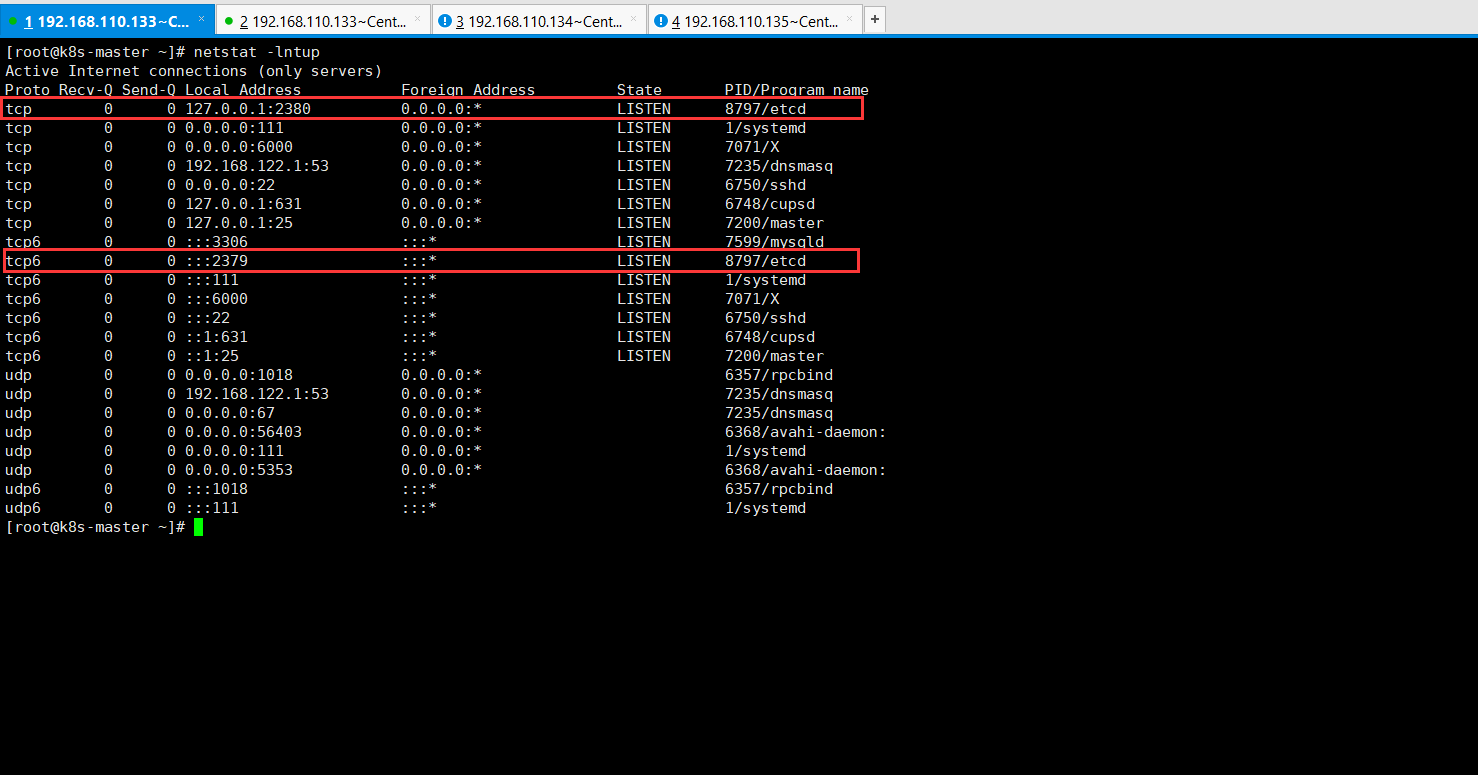

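Use `netstat -lntup` to check the listening ports. Once started, etcd listens on two ports:
- 2379, bound to 0.0.0.0 by the configuration above, so it listens on all addresses. It serves clients; k8s writes data to etcd through this port.
- 2380 on 127.0.0.1. It is used for data synchronization between etcd cluster members.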

For example:

```
[root@k8s-master ~]# netstat -lntup
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
tcp        0      0 127.0.0.1:2380          0.0.0.0:*               LISTEN      8797/etcd
tcp        0      0 0.0.0.0:111             0.0.0.0:*               LISTEN      1/systemd
tcp        0      0 0.0.0.0:6000            0.0.0.0:*               LISTEN      7071/X
tcp        0      0 192.168.122.1:53        0.0.0.0:*               LISTEN      7235/dnsmasq
tcp        0      0 0.0.0.0:22              0.0.0.0:*               LISTEN      6750/sshd
tcp        0      0 127.0.0.1:631           0.0.0.0:*               LISTEN      6748/cupsd
tcp        0      0 127.0.0.1:25            0.0.0.0:*               LISTEN      7200/master
tcp6       0      0 :::3306                 :::*                    LISTEN      7599/mysqld
tcp6       0      0 :::2379                 :::*                    LISTEN      8797/etcd
tcp6       0      0 :::111                  :::*                    LISTEN      1/systemd
tcp6       0      0 :::6000                 :::*                    LISTEN      7071/X
tcp6       0      0 :::22                   :::*                    LISTEN      6750/sshd
tcp6       0      0 ::1:631                 :::*                    LISTEN      6748/cupsd
tcp6       0      0 ::1:25                  :::*                    LISTEN      7200/master
udp        0      0 0.0.0.0:1018            0.0.0.0:*                           6357/rpcbind
udp        0      0 192.168.122.1:53        0.0.0.0:*                           7235/dnsmasq
udp        0      0 0.0.0.0:67              0.0.0.0:*                           7235/dnsmasq
udp        0      0 0.0.0.0:56403           0.0.0.0:*                           6368/avahi-daemon:
udp        0      0 0.0.0.0:111             0.0.0.0:*                           1/systemd
udp        0      0 0.0.0.0:5353            0.0.0.0:*                           6368/avahi-daemon:
udp6       0      0 :::1018                 :::*                                6357/rpcbind
udp6       0      0 :::111                  :::*                                1/systemd
[root@k8s-master ~]#
```
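When the output is long, the etcd rows can be filtered programmatically. `etcd_listen_addrs` is a hypothetical helper. It reads `netstat -lntup` output on stdin and prints the local address of every TCP listening socket owned by a process named `etcd`.

```shell
#!/usr/bin/env bash
# Print etcd's listening addresses from "netstat -lntup" output on stdin
# (hypothetical helper; column 7 is PID/Program name on TCP LISTEN rows).
etcd_listen_addrs() {
  awk '$1 ~ /^tcp/ && $6 == "LISTEN" && $7 ~ /\/etcd$/ { print $4 }'
}
```

On the master, `netstat -lntup | etcd_listen_addrs` should print `127.0.0.1:2380` and `:::2379`, matching the rows above.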

To observe the difference, stop etcd with `systemctl stop etcd.service` and check the listening ports with `netstat -lntup`. Then start etcd again and compare:

```
[root@k8s-master ~]# systemctl stop etcd.service
[root@k8s-master ~]# netstat -lntup
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
tcp        0      0 0.0.0.0:111             0.0.0.0:*               LISTEN      1/systemd
tcp        0      0 0.0.0.0:6000            0.0.0.0:*               LISTEN      7071/X
tcp        0      0 192.168.122.1:53        0.0.0.0:*               LISTEN      7235/dnsmasq
tcp        0      0 0.0.0.0:22              0.0.0.0:*               LISTEN      6750/sshd
tcp        0      0 127.0.0.1:631           0.0.0.0:*               LISTEN      6748/cupsd
tcp        0      0 127.0.0.1:25            0.0.0.0:*               LISTEN      7200/master
tcp6       0      0 :::3306                 :::*                    LISTEN      7599/mysqld
tcp6       0      0 :::111                  :::*                    LISTEN      1/systemd
tcp6       0      0 :::6000                 :::*                    LISTEN      7071/X
tcp6       0      0 :::22                   :::*                    LISTEN      6750/sshd
tcp6       0      0 ::1:631                 :::*                    LISTEN      6748/cupsd
tcp6       0      0 ::1:25                  :::*                    LISTEN      7200/master
udp        0      0 0.0.0.0:1018            0.0.0.0:*                           6357/rpcbind
udp        0      0 192.168.122.1:53        0.0.0.0:*                           7235/dnsmasq
udp        0      0 0.0.0.0:67              0.0.0.0:*                           7235/dnsmasq
udp        0      0 0.0.0.0:56403           0.0.0.0:*                           6368/avahi-daemon:
udp        0      0 0.0.0.0:111             0.0.0.0:*                           1/systemd
udp        0      0 0.0.0.0:5353            0.0.0.0:*                           6368/avahi-daemon:
udp6       0      0 :::1018                 :::*                                6357/rpcbind
udp6       0      0 :::111                  :::*                                1/systemd
[root@k8s-master ~]# systemctl start etcd.service
[root@k8s-master ~]# netstat -lntup
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
tcp        0      0 127.0.0.1:2380          0.0.0.0:*               LISTEN      9055/etcd
tcp        0      0 0.0.0.0:111             0.0.0.0:*               LISTEN      1/systemd
tcp        0      0 0.0.0.0:6000            0.0.0.0:*               LISTEN      7071/X
tcp        0      0 192.168.122.1:53        0.0.0.0:*               LISTEN      7235/dnsmasq
tcp        0      0 0.0.0.0:22              0.0.0.0:*               LISTEN      6750/sshd
tcp        0      0 127.0.0.1:631           0.0.0.0:*               LISTEN      6748/cupsd
tcp        0      0 127.0.0.1:25            0.0.0.0:*               LISTEN      7200/master
tcp6       0      0 :::3306                 :::*                    LISTEN      7599/mysqld
tcp6       0      0 :::2379                 :::*                    LISTEN      9055/etcd
tcp6       0      0 :::111                  :::*                    LISTEN      1/systemd
tcp6       0      0 :::6000                 :::*                    LISTEN      7071/X
tcp6       0      0 :::22                   :::*                    LISTEN      6750/sshd
tcp6       0      0 ::1:631                 :::*                    LISTEN      6748/cupsd
tcp6       0      0 ::1:25                  :::*                    LISTEN      7200/master
udp        0      0 0.0.0.0:1018            0.0.0.0:*                           6357/rpcbind
udp        0      0 192.168.122.1:53        0.0.0.0:*                           7235/dnsmasq
udp        0      0 0.0.0.0:67              0.0.0.0:*                           7235/dnsmasq
udp        0      0 0.0.0.0:56403           0.0.0.0:*                           6368/avahi-daemon:
udp        0      0 0.0.0.0:111             0.0.0.0:*                           1/systemd
udp        0      0 0.0.0.0:5353            0.0.0.0:*                           6368/avahi-daemon:
udp6       0      0 :::1018                 :::*                                6357/rpcbind
udp6       0      0 :::111                  :::*                                1/systemd
[root@k8s-master ~]#
```

Test etcd with a few etcdctl commands, then check the health of the etcd cluster:

```
[root@k8s-master ~]# etcdctl set testdir/testkey0 0
0
[root@k8s-master ~]# etcdctl get testdir/testkey0
0
[root@k8s-master ~]# etcdctl set testdir/testkey1 111
111
[root@k8s-master ~]# etcdctl get testdir/testkey1
111
[root@k8s-master ~]# etcdctl -C http://192.168.110.133:2379 cluster-health
member 8e9e05c52164694d is healthy: got healthy result from http://192.168.110.133:2379
cluster is healthy
[root@k8s-master ~]#
```

5. Install the Kubernetes (k8s) master node.

Installing the master also installs kubernetes-client, which provides the kubectl command.

```
[root@k8s-master ~]# yum install kubernetes
kubernetes-client.x86_64   kubernetes-master.x86_64   kubernetes-node.x86_64   kubernetes.x86_64
[root@k8s-master ~]# yum install kubernetes-master.x86_64 -y
Loaded plugins: fastestmirror, langpacks
Loading mirror speeds from cached hostfile
 * base: mirrors.tuna.tsinghua.edu.cn
 * extras: mirrors.tuna.tsinghua.edu.cn
 * updates: mirrors.tuna.tsinghua.edu.cn
Resolving Dependencies
--> Running transaction check
---> Package kubernetes-master.x86_64 0:1.5.2-0.7.git269f928.el7 will be installed
--> Processing Dependency: kubernetes-client = 1.5.2-0.7.git269f928.el7 for package: kubernetes-master-1.5.2-0.7.git269f928.el7.x86_64
--> Running transaction check
---> Package kubernetes-client.x86_64 0:1.5.2-0.7.git269f928.el7 will be installed
--> Finished Dependency Resolution

Dependencies Resolved

============================================================================================================
 Package                  Arch          Version                            Repository              Size
============================================================================================================
Installing:
 kubernetes-master        x86_64        1.5.2-0.7.git269f928.el7           extras                  25 M
Installing for dependencies:
 kubernetes-client        x86_64        1.5.2-0.7.git269f928.el7           extras                  14 M

Transaction Summary
============================================================================================================
Install  1 Package (+1 Dependent package)

Total download size: 39 M
Installed size: 224 M
Downloading packages:
(1/2): kubernetes-master-1.5.2-0.7.git269f928.el7.x86_64.rpm           |  25 MB  00:00:14
(2/2): kubernetes-client-1.5.2-0.7.git269f928.el7.x86_64.rpm           |  14 MB  00:00:19
------------------------------------------------------------------------------------------------------------
Total                                                         2.0 MB/s |  39 MB  00:00:19
Running transaction check
Running transaction test
Transaction test succeeded
Running transaction
  Installing : kubernetes-client-1.5.2-0.7.git269f928.el7.x86_64                    1/2
  Installing : kubernetes-master-1.5.2-0.7.git269f928.el7.x86_64                    2/2
  Verifying  : kubernetes-client-1.5.2-0.7.git269f928.el7.x86_64                    1/2
  Verifying  : kubernetes-master-1.5.2-0.7.git269f928.el7.x86_64                    2/2

Installed:
  kubernetes-master.x86_64 0:1.5.2-0.7.git269f928.el7

Dependency Installed:
  kubernetes-client.x86_64 0:1.5.2-0.7.git269f928.el7

Complete!
[root@k8s-master ~]#
```

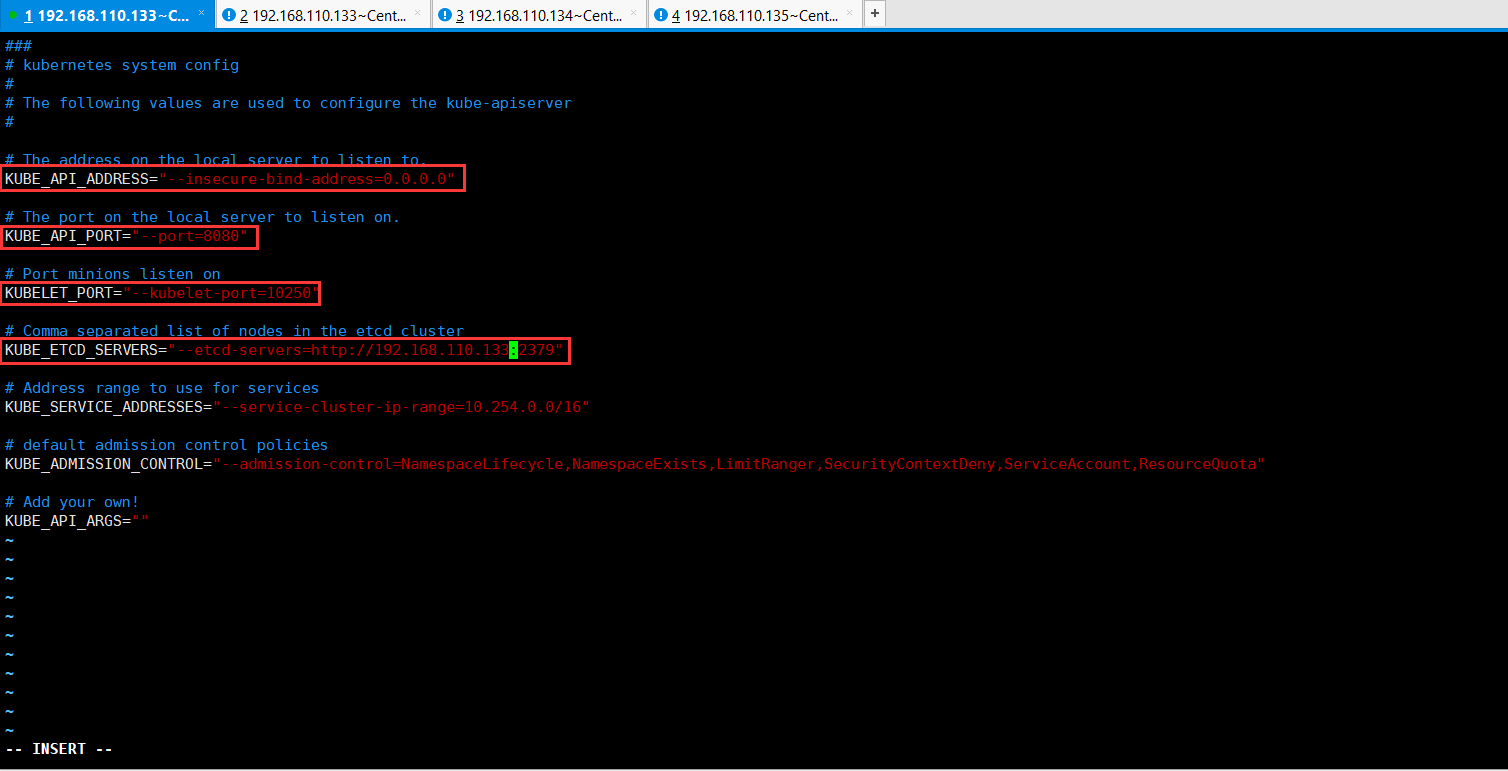

The master runs etcd, api-server, controller-manager and scheduler. etcd is already installed, so configure api-server next:

```
[root@k8s-master ~]# vim /etc/kubernetes/apiserver
```

The configuration:

```
###
# kubernetes system config
#
# The following values are used to configure the kube-apiserver
#

# The address on the local server to listen to.
# api-server listen address: 127.0.0.1 allows local access only; to use a k8s client from other machines, listen on 0.0.0.0.
KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

# The port on the local server to listen on.
# api-server listens on port 8080. Because api-server is the core k8s service, the machine running it is usually called the k8s Master node.
KUBE_API_PORT="--port=8080"

# Port minions listen on
# "minions" (followers) means Nodes; there are three Nodes here. Once kubelet starts on a Node it listens on 10250, and the Master reaches the Node through 10250.
# Both sides must agree on this port. The default is 10250; it can be changed to another port for security.
KUBELET_PORT="--kubelet-port=10250"

# Comma separated list of nodes in the etcd cluster
# Connect to etcd: since etcd runs on the master, change 127.0.0.1 to 192.168.110.133. etcd serves clients on port 2379.
KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.110.133:2379"

# Address range to use for services
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

# default admission control policies
KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"

# Add your own!
KUBE_API_ARGS=""
```

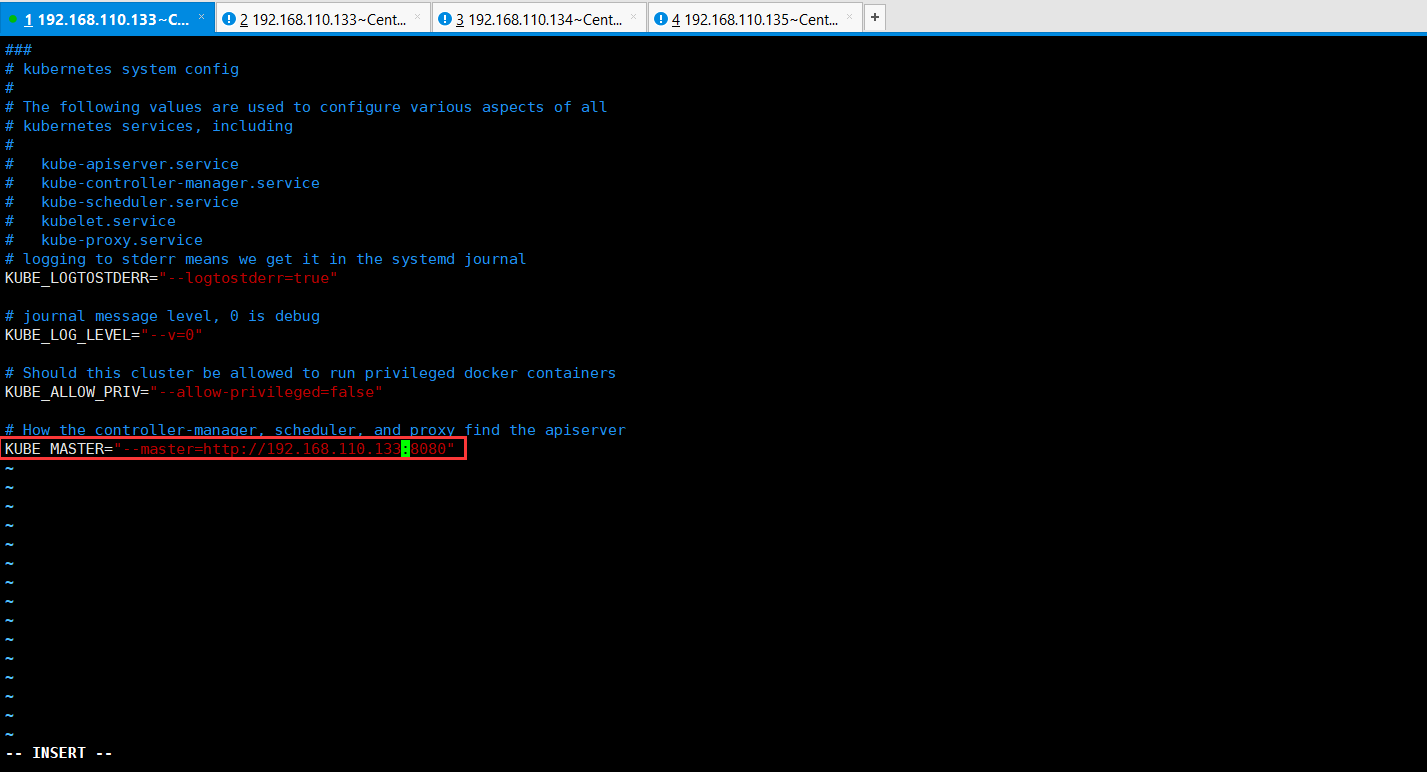

Now configure controller-manager and scheduler. Both read the shared file /etc/kubernetes/config:

```
[root@k8s-master ~]# vim /etc/kubernetes/config
```

The configuration:

```
###
# kubernetes system config
#
# The following values are used to configure various aspects of all
# kubernetes services, including
#
#   kube-apiserver.service
#   kube-controller-manager.service
#   kube-scheduler.service
#   kubelet.service
#   kube-proxy.service
# logging to stderr means we get it in the systemd journal
KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug
KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers
KUBE_ALLOW_PRIV="--allow-privileged=false"

# How the controller-manager, scheduler, and proxy find the apiserver
# Change 127.0.0.1 to 192.168.110.133 so these components can reach the apiserver.
# Note the parameter name KUBE_MASTER: whichever machine runs api-server is the master node.
KUBE_MASTER="--master=http://192.168.110.133:8080"
```
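When repeating the install, the three edits to `/etc/kubernetes/apiserver` and `/etc/kubernetes/config` can be scripted with `sed` instead of made in `vim`. This is a convenience sketch. `configure_master` is a hypothetical name, and the script assumes the `KUBE_API_ADDRESS`, `KUBE_ETCD_SERVERS` and `KUBE_MASTER` lines appear uncommented at the start of a line in the stock files.

```shell
#!/usr/bin/env bash
# Apply the api-server and shared-config edits with sed (convenience sketch;
# assumes the three variables appear uncommented at the start of a line).
configure_master() {   # $1 = master IP, $2 = apiserver file, $3 = shared config file
  local ip=$1
  local apiserver=${2:-/etc/kubernetes/apiserver}
  local config=${3:-/etc/kubernetes/config}
  # Listen on all addresses and point the apiserver at etcd on the master
  sed -i \
    -e 's|^KUBE_API_ADDRESS=.*|KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"|' \
    -e "s|^KUBE_ETCD_SERVERS=.*|KUBE_ETCD_SERVERS=\"--etcd-servers=http://${ip}:2379\"|" \
    "$apiserver"
  # Tell controller-manager, scheduler and proxy where the apiserver is
  sed -i "s|^KUBE_MASTER=.*|KUBE_MASTER=\"--master=http://${ip}:8080\"|" "$config"
}
```

On the master, run `configure_master 192.168.110.133` as root. The default file paths are used when only the IP is given.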

Now start the api-server, controller-manager and scheduler services:

```
[root@k8s-master ~]# systemctl start kube-apiserver.service
[root@k8s-master ~]# systemctl start kube-controller-manager.service
[root@k8s-master ~]# systemctl start kube-scheduler.service
[root@k8s-master ~]#
```

Enable them at boot so the k8s cluster is still usable after an unexpected shutdown or a reboot:

```
[root@k8s-master ~]# systemctl enable kube-apiserver.service
Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.
[root@k8s-master ~]# systemctl enable kube-controller-manager.service
Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.
[root@k8s-master ~]# systemctl enable kube-scheduler.service
Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.
[root@k8s-master ~]#
```

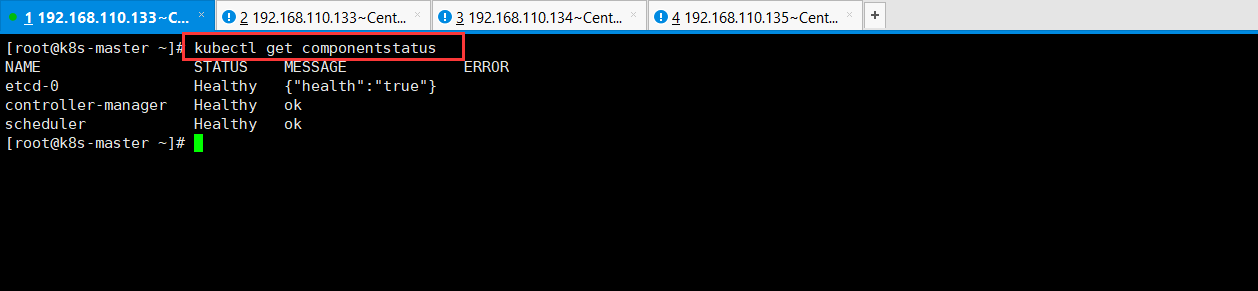
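This guide repeats the same start-then-enable pair for every service, so the pair can be wrapped in a small loop. The following is a minimal sketch. `start_and_enable` and the `SYSTEMCTL` override are hypothetical names, not part of any package; the override allows a dry run with SYSTEMCTL=echo.

```shell
#!/usr/bin/env bash
# start_and_enable UNIT...: start each unit, then enable it at boot.
# Hypothetical helper; SYSTEMCTL (default: systemctl) is an override hook so
# the loop can be dry-run with SYSTEMCTL=echo.
start_and_enable() {
  local ctl="${SYSTEMCTL:-systemctl}" unit
  for unit in "$@"; do
    # Stop at the first unit that fails to start so the error is not buried
    # under the output of later units.
    if ! "$ctl" start "$unit"; then
      echo "failed to start $unit" >&2
      return 1
    fi
    "$ctl" enable "$unit" || return 1
  done
}
```

On the master, the call would be `start_and_enable kube-apiserver kube-controller-manager kube-scheduler`. On each node, it would be `start_and_enable kubelet kube-proxy`.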

6. Once everything has started, check that the components are healthy:

```shell
[root@k8s-master ~]# kubectl get componentstatus
NAME                 STATUS    MESSAGE              ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-0               Healthy   {"health":"true"}
[root@k8s-master ~]#
```
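You can also script this check, for example to run it after a reboot. The sketch below assumes the column layout shown above and treats any STATUS other than Healthy as a failure. `all_healthy` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# all_healthy: read `kubectl get componentstatus` output on stdin and succeed
# only if there is at least one component and every STATUS is "Healthy".
# Hypothetical helper; assumes the NAME STATUS MESSAGE ERROR layout above.
all_healthy() {
  awk '
    NR == 1 { next }   # skip the header line
    NF == 0 { next }   # ignore blank lines
    { rows++; if ($2 != "Healthy") bad++ }
    END { if (rows > 0 && bad == 0) exit 0; exit 1 }
  '
}
```

Usage: `kubectl get componentstatus | all_healthy && echo "control plane OK"`.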

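So far, much of the master's 2 GB of memory is in use. free reports only 282 MB as free, although about 961 MB is still available once reclaimable buff/cache is counted.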

```shell
[root@k8s-master ~]# free -h
              total        used        free      shared  buff/cache   available
Mem:           1.8G        636M        282M         12M        900M        961M
Swap:          2.0G          0B        2.0G
[root@k8s-master ~]#
```
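The used and free columns alone overstate memory pressure, because buff/cache can be reclaimed. The available column gives a better estimate. This sketch extracts it in megabytes. It assumes the procps-ng layout shown above, where available is the seventh field of the Mem: line. `available_mb` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# available_mb: read `free -m` output on stdin and print the "available"
# column of the Mem: line. Hypothetical helper; assumes the procps-ng layout
# shown above (total used free shared buff/cache available), i.e. field 7.
available_mb() {
  awk '/^Mem:/ { print $7; found = 1 } END { if (!found) exit 1 }'
}
```

Usage: `free -m | available_mb`.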

7. Install the Kubernetes (k8s) node components. Installing the node package automatically pulls in docker as a dependency.

```shell
[root@k8s-master ~]# yum install kubernetes-node.x86_64 -y
```

The installation output:

```shell
[root@k8s-master ~]# yum install kubernetes-node.x86_64 -y
Loaded plugins: fastestmirror, langpacks
Loading mirror speeds from cached hostfile
 * base: mirrors.tuna.tsinghua.edu.cn
 * extras: mirrors.tuna.tsinghua.edu.cn
 * updates: mirrors.tuna.tsinghua.edu.cn
Resolving Dependencies
--> Running transaction check
---> Package kubernetes-node.x86_64 0:1.5.2-0.7.git269f928.el7 will be installed
--> Processing Dependency: docker for package: kubernetes-node-1.5.2-0.7.git269f928.el7.x86_64
--> Processing Dependency: conntrack-tools for package: kubernetes-node-1.5.2-0.7.git269f928.el7.x86_64
--> Running transaction check
---> Package conntrack-tools.x86_64 0:1.4.4-7.el7 will be installed
--> Processing Dependency: libnetfilter_cttimeout.so.1(LIBNETFILTER_CTTIMEOUT_1.1)(64bit) for package: conntrack-tools-1.4.4-7.el7.x86_64
--> Processing Dependency: libnetfilter_cttimeout.so.1(LIBNETFILTER_CTTIMEOUT_1.0)(64bit) for package: conntrack-tools-1.4.4-7.el7.x86_64
--> Processing Dependency: libnetfilter_cthelper.so.0(LIBNETFILTER_CTHELPER_1.0)(64bit) for package: conntrack-tools-1.4.4-7.el7.x86_64
--> Processing Dependency: libnetfilter_queue.so.1()(64bit) for package: conntrack-tools-1.4.4-7.el7.x86_64
--> Processing Dependency: libnetfilter_cttimeout.so.1()(64bit) for package: conntrack-tools-1.4.4-7.el7.x86_64
--> Processing Dependency: libnetfilter_cthelper.so.0()(64bit) for package: conntrack-tools-1.4.4-7.el7.x86_64
---> Package docker.x86_64 2:1.13.1-161.git64e9980.el7_8 will be installed
--> Processing Dependency: docker-common = 2:1.13.1-161.git64e9980.el7_8 for package: 2:docker-1.13.1-161.git64e9980.el7_8.x86_64
--> Processing Dependency: docker-client = 2:1.13.1-161.git64e9980.el7_8 for package: 2:docker-1.13.1-161.git64e9980.el7_8.x86_64
--> Processing Dependency: subscription-manager-rhsm-certificates for package: 2:docker-1.13.1-161.git64e9980.el7_8.x86_64
--> Running transaction check
---> Package docker-client.x86_64 2:1.13.1-161.git64e9980.el7_8 will be installed
---> Package docker-common.x86_64 2:1.13.1-161.git64e9980.el7_8 will be installed
--> Processing Dependency: skopeo-containers >= 1:0.1.26-2 for package: 2:docker-common-1.13.1-161.git64e9980.el7_8.x86_64
--> Processing Dependency: oci-umount >= 2:2.3.3-3 for package: 2:docker-common-1.13.1-161.git64e9980.el7_8.x86_64
--> Processing Dependency: oci-systemd-hook >= 1:0.1.4-9 for package: 2:docker-common-1.13.1-161.git64e9980.el7_8.x86_64
--> Processing Dependency: oci-register-machine >= 1:0-5.13 for package: 2:docker-common-1.13.1-161.git64e9980.el7_8.x86_64
--> Processing Dependency: container-storage-setup >= 0.9.0-1 for package: 2:docker-common-1.13.1-161.git64e9980.el7_8.x86_64
--> Processing Dependency: container-selinux >= 2:2.51-1 for package: 2:docker-common-1.13.1-161.git64e9980.el7_8.x86_64
--> Processing Dependency: atomic-registries for package: 2:docker-common-1.13.1-161.git64e9980.el7_8.x86_64
---> Package libnetfilter_cthelper.x86_64 0:1.0.0-11.el7 will be installed
---> Package libnetfilter_cttimeout.x86_64 0:1.0.0-7.el7 will be installed
---> Package libnetfilter_queue.x86_64 0:1.0.2-2.el7_2 will be installed
---> Package subscription-manager-rhsm-certificates.x86_64 0:1.24.26-3.el7.centos will be installed
--> Running transaction check
---> Package atomic-registries.x86_64 1:1.22.1-33.gitb507039.el7_8 will be installed
--> Processing Dependency: python-pytoml for package: 1:atomic-registries-1.22.1-33.gitb507039.el7_8.x86_64
---> Package container-selinux.noarch 2:2.119.1-1.c57a6f9.el7 will be installed
---> Package container-storage-setup.noarch 0:0.11.0-2.git5eaf76c.el7 will be installed
---> Package containers-common.x86_64 1:0.1.40-7.el7_8 will be installed
--> Processing Dependency: subscription-manager for package: 1:containers-common-0.1.40-7.el7_8.x86_64
--> Processing Dependency: slirp4netns for package: 1:containers-common-0.1.40-7.el7_8.x86_64
--> Processing Dependency: fuse-overlayfs for package: 1:containers-common-0.1.40-7.el7_8.x86_64
---> Package oci-register-machine.x86_64 1:0-6.git2b44233.el7 will be installed
---> Package oci-systemd-hook.x86_64 1:0.2.0-1.git05e6923.el7_6 will be installed
---> Package oci-umount.x86_64 2:2.5-3.el7 will be installed
--> Running transaction check
---> Package fuse-overlayfs.x86_64 0:0.7.2-6.el7_8 will be installed
--> Processing Dependency: libfuse3.so.3(FUSE_3.2)(64bit) for package: fuse-overlayfs-0.7.2-6.el7_8.x86_64
--> Processing Dependency: libfuse3.so.3(FUSE_3.0)(64bit) for package: fuse-overlayfs-0.7.2-6.el7_8.x86_64
--> Processing Dependency: libfuse3.so.3()(64bit) for package: fuse-overlayfs-0.7.2-6.el7_8.x86_64
---> Package python-pytoml.noarch 0:0.1.14-1.git7dea353.el7 will be installed
---> Package slirp4netns.x86_64 0:0.4.3-4.el7_8 will be installed
---> Package subscription-manager.x86_64 0:1.24.26-3.el7.centos will be installed
--> Processing Dependency: subscription-manager-rhsm = 1.24.26 for package: subscription-manager-1.24.26-3.el7.centos.x86_64
--> Processing Dependency: python-dmidecode >= 3.12.2-2 for package: subscription-manager-1.24.26-3.el7.centos.x86_64
--> Processing Dependency: python-syspurpose for package: subscription-manager-1.24.26-3.el7.centos.x86_64
--> Processing Dependency: python-dateutil for package: subscription-manager-1.24.26-3.el7.centos.x86_64
--> Running transaction check
---> Package fuse3-libs.x86_64 0:3.6.1-4.el7 will be installed
---> Package python-dateutil.noarch 0:1.5-7.el7 will be installed
---> Package python-dmidecode.x86_64 0:3.12.2-4.el7 will be installed
---> Package python-syspurpose.x86_64 0:1.24.26-3.el7.centos will be installed
---> Package subscription-manager-rhsm.x86_64 0:1.24.26-3.el7.centos will be installed
--> Finished Dependency Resolution

Dependencies Resolved

====================================================================================================
 Package                                  Arch     Version                          Repository  Size
====================================================================================================
Installing:
 kubernetes-node                          x86_64   1.5.2-0.7.git269f928.el7         extras      14 M
Installing for dependencies:
 atomic-registries                        x86_64   1:1.22.1-33.gitb507039.el7_8     extras      36 k
 conntrack-tools                          x86_64   1.4.4-7.el7                      base       187 k
 container-selinux                        noarch   2:2.119.1-1.c57a6f9.el7          extras      40 k
 container-storage-setup                  noarch   0.11.0-2.git5eaf76c.el7          extras      35 k
 containers-common                        x86_64   1:0.1.40-7.el7_8                 extras      42 k
 docker                                   x86_64   2:1.13.1-161.git64e9980.el7_8    extras      18 M
 docker-client                            x86_64   2:1.13.1-161.git64e9980.el7_8    extras     3.9 M
 docker-common                            x86_64   2:1.13.1-161.git64e9980.el7_8    extras      99 k
 fuse-overlayfs                           x86_64   0.7.2-6.el7_8                    extras      54 k
 fuse3-libs                               x86_64   3.6.1-4.el7                      extras      82 k
 libnetfilter_cthelper                    x86_64   1.0.0-11.el7                     base        18 k
 libnetfilter_cttimeout                   x86_64   1.0.0-7.el7                      base        18 k
 libnetfilter_queue                       x86_64   1.0.2-2.el7_2                    base        23 k
 oci-register-machine                     x86_64   1:0-6.git2b44233.el7             extras     1.1 M
 oci-systemd-hook                         x86_64   1:0.2.0-1.git05e6923.el7_6       extras      34 k
 oci-umount                               x86_64   2:2.5-3.el7                      extras      33 k
 python-dateutil                          noarch   1.5-7.el7                        base        85 k
 python-dmidecode                         x86_64   3.12.2-4.el7                     base        83 k
 python-pytoml                            noarch   0.1.14-1.git7dea353.el7          extras      18 k
 python-syspurpose                        x86_64   1.24.26-3.el7.centos             updates    269 k
 slirp4netns                              x86_64   0.4.3-4.el7_8                    extras      81 k
 subscription-manager                     x86_64   1.24.26-3.el7.centos             updates    1.1 M
 subscription-manager-rhsm                x86_64   1.24.26-3.el7.centos             updates    327 k
 subscription-manager-rhsm-certificates   x86_64   1.24.26-3.el7.centos             updates    232 k

Transaction Summary
====================================================================================================
Install  1 Package (+24 Dependent packages)

Total download size: 40 M
Installed size: 166 M
Downloading packages:
(1/25): atomic-registries-1.22.1-33.gitb507039.el7_8.x86_64.rpm | 36 kB 00:00:00
(2/25): container-storage-setup-0.11.0-2.git5eaf76c.el7.noarch.rpm | 35 kB 00:00:00
(3/25): containers-common-0.1.40-7.el7_8.x86_64.rpm | 42 kB 00:00:00
(4/25): container-selinux-2.119.1-1.c57a6f9.el7.noarch.rpm | 40 kB 00:00:00
(5/25): conntrack-tools-1.4.4-7.el7.x86_64.rpm | 187 kB 00:00:00
(6/25): docker-client-1.13.1-161.git64e9980.el7_8.x86_64.rpm | 3.9 MB 00:00:02
(7/25): docker-common-1.13.1-161.git64e9980.el7_8.x86_64.rpm | 99 kB 00:00:00
(8/25): fuse-overlayfs-0.7.2-6.el7_8.x86_64.rpm | 54 kB 00:00:00
(9/25): fuse3-libs-3.6.1-4.el7.x86_64.rpm | 82 kB 00:00:00
(10/25): libnetfilter_cthelper-1.0.0-11.el7.x86_64.rpm | 18 kB 00:00:00
(11/25): libnetfilter_queue-1.0.2-2.el7_2.x86_64.rpm | 23 kB 00:00:00
(12/25): libnetfilter_cttimeout-1.0.0-7.el7.x86_64.rpm | 18 kB 00:00:05
(13/25): kubernetes-node-1.5.2-0.7.git269f928.el7.x86_64.rpm | 14 MB 00:00:10
(14/25): oci-register-machine-0-6.git2b44233.el7.x86_64.rpm | 1.1 MB 00:00:00
(15/25): oci-systemd-hook-0.2.0-1.git05e6923.el7_6.x86_64.rpm | 34 kB 00:00:00
(16/25): oci-umount-2.5-3.el7.x86_64.rpm | 33 kB 00:00:00
(17/25): python-pytoml-0.1.14-1.git7dea353.el7.noarch.rpm | 18 kB 00:00:00
(18/25): python-dateutil-1.5-7.el7.noarch.rpm | 85 kB 00:00:00
(19/25): python-dmidecode-3.12.2-4.el7.x86_64.rpm | 83 kB 00:00:00
(20/25): slirp4netns-0.4.3-4.el7_8.x86_64.rpm | 81 kB 00:00:00
(21/25): subscription-manager-1.24.26-3.el7.centos.x86_64.rpm | 1.1 MB 00:00:00
(22/25): subscription-manager-rhsm-certificates-1.24.26-3.el7.centos.x86_64.rpm | 232 kB 00:00:00
(23/25): docker-1.13.1-161.git64e9980.el7_8.x86_64.rpm | 18 MB 00:00:16
(24/25): subscription-manager-rhsm-1.24.26-3.el7.centos.x86_64.rpm | 327 kB 00:00:05
(25/25): python-syspurpose-1.24.26-3.el7.centos.x86_64.rpm | 269 kB 00:00:10
----------------------------------------------------------------------------------------------------
Total 1.5 MB/s | 40 MB 00:00:26
Running transaction check
Running transaction test
Transaction test succeeded
Running transaction
  Installing : subscription-manager-rhsm-certificates-1.24.26-3.el7.centos.x86_64    1/25
  Installing : python-dateutil-1.5-7.el7.noarch                                      2/25
  Installing : subscription-manager-rhsm-1.24.26-3.el7.centos.x86_64                 3/25
  Installing : libnetfilter_cttimeout-1.0.0-7.el7.x86_64                             4/25
  Installing : libnetfilter_cthelper-1.0.0-11.el7.x86_64                             5/25
  Installing : slirp4netns-0.4.3-4.el7_8.x86_64                                      6/25
  Installing : 1:oci-register-machine-0-6.git2b44233.el7.x86_64                      7/25
  Installing : 1:oci-systemd-hook-0.2.0-1.git05e6923.el7_6.x86_64                    8/25
  Installing : fuse3-libs-3.6.1-4.el7.x86_64                                         9/25
  Installing : fuse-overlayfs-0.7.2-6.el7_8.x86_64                                  10/25
  Installing : python-pytoml-0.1.14-1.git7dea353.el7.noarch                         11/25
  Installing : 1:atomic-registries-1.22.1-33.gitb507039.el7_8.x86_64                12/25
  Installing : 2:oci-umount-2.5-3.el7.x86_64                                        13/25
  Installing : python-dmidecode-3.12.2-4.el7.x86_64                                 14/25
  Installing : python-syspurpose-1.24.26-3.el7.centos.x86_64                        15/25
  Installing : subscription-manager-1.24.26-3.el7.centos.x86_64                     16/25
  Installing : 1:containers-common-0.1.40-7.el7_8.x86_64                            17/25
  Installing : container-storage-setup-0.11.0-2.git5eaf76c.el7.noarch               18/25
  Installing : libnetfilter_queue-1.0.2-2.el7_2.x86_64                              19/25
  Installing : conntrack-tools-1.4.4-7.el7.x86_64                                   20/25
  Installing : 2:container-selinux-2.119.1-1.c57a6f9.el7.noarch                     21/25
  Installing : 2:docker-common-1.13.1-161.git64e9980.el7_8.x86_64                   22/25
  Installing : 2:docker-client-1.13.1-161.git64e9980.el7_8.x86_64                   23/25
  Installing : 2:docker-1.13.1-161.git64e9980.el7_8.x86_64                          24/25
  Installing : kubernetes-node-1.5.2-0.7.git269f928.el7.x86_64                      25/25
  Verifying  : 2:container-selinux-2.119.1-1.c57a6f9.el7.noarch                      1/25
  Verifying  : 1:atomic-registries-1.22.1-33.gitb507039.el7_8.x86_64                 2/25
  Verifying  : libnetfilter_queue-1.0.2-2.el7_2.x86_64                               3/25
  Verifying  : fuse-overlayfs-0.7.2-6.el7_8.x86_64                                   4/25
  Verifying  : container-storage-setup-0.11.0-2.git5eaf76c.el7.noarch                5/25
  Verifying  : subscription-manager-1.24.26-3.el7.centos.x86_64                      6/25
  Verifying  : conntrack-tools-1.4.4-7.el7.x86_64                                    7/25
  Verifying  : python-syspurpose-1.24.26-3.el7.centos.x86_64                         8/25
  Verifying  : python-dateutil-1.5-7.el7.noarch                                      9/25
  Verifying  : subscription-manager-rhsm-1.24.26-3.el7.centos.x86_64                10/25
  Verifying  : python-dmidecode-3.12.2-4.el7.x86_64                                 11/25
  Verifying  : 2:oci-umount-2.5-3.el7.x86_64                                        12/25
  Verifying  : python-pytoml-0.1.14-1.git7dea353.el7.noarch                         13/25
  Verifying  : 2:docker-client-1.13.1-161.git64e9980.el7_8.x86_64                   14/25
  Verifying  : fuse3-libs-3.6.1-4.el7.x86_64                                        15/25
  Verifying  : 1:containers-common-0.1.40-7.el7_8.x86_64                            16/25
  Verifying  : 1:oci-systemd-hook-0.2.0-1.git05e6923.el7_6.x86_64                   17/25
  Verifying  : 1:oci-register-machine-0-6.git2b44233.el7.x86_64                     18/25
  Verifying  : slirp4netns-0.4.3-4.el7_8.x86_64                                     19/25
  Verifying  : libnetfilter_cthelper-1.0.0-11.el7.x86_64                            20/25
  Verifying  : libnetfilter_cttimeout-1.0.0-7.el7.x86_64                            21/25
  Verifying  : 2:docker-common-1.13.1-161.git64e9980.el7_8.x86_64                   22/25
  Verifying  : kubernetes-node-1.5.2-0.7.git269f928.el7.x86_64                      23/25
  Verifying  : subscription-manager-rhsm-certificates-1.24.26-3.el7.centos.x86_64   24/25
  Verifying  : 2:docker-1.13.1-161.git64e9980.el7_8.x86_64                          25/25

Installed:
  kubernetes-node.x86_64 0:1.5.2-0.7.git269f928.el7

Dependency Installed:
  atomic-registries.x86_64 1:1.22.1-33.gitb507039.el7_8  conntrack-tools.x86_64 0:1.4.4-7.el7  container-selinux.noarch 2:2.119.1-1.c57a6f9.el7
  container-storage-setup.noarch 0:0.11.0-2.git5eaf76c.el7  containers-common.x86_64 1:0.1.40-7.el7_8  docker.x86_64 2:1.13.1-161.git64e9980.el7_8
  docker-client.x86_64 2:1.13.1-161.git64e9980.el7_8  docker-common.x86_64 2:1.13.1-161.git64e9980.el7_8  fuse-overlayfs.x86_64 0:0.7.2-6.el7_8
  fuse3-libs.x86_64 0:3.6.1-4.el7  libnetfilter_cthelper.x86_64 0:1.0.0-11.el7  libnetfilter_cttimeout.x86_64 0:1.0.0-7.el7
  libnetfilter_queue.x86_64 0:1.0.2-2.el7_2  oci-register-machine.x86_64 1:0-6.git2b44233.el7  oci-systemd-hook.x86_64 1:0.2.0-1.git05e6923.el7_6
  oci-umount.x86_64 2:2.5-3.el7  python-dateutil.noarch 0:1.5-7.el7  python-dmidecode.x86_64 0:3.12.2-4.el7
  python-pytoml.noarch 0:0.1.14-1.git7dea353.el7  python-syspurpose.x86_64 0:1.24.26-3.el7.centos  slirp4netns.x86_64 0:0.4.3-4.el7_8
  subscription-manager.x86_64 0:1.24.26-3.el7.centos  subscription-manager-rhsm.x86_64 0:1.24.26-3.el7.centos  subscription-manager-rhsm-certificates.x86_64 0:1.24.26-3.el7.centos

Complete!
[root@k8s-master ~]#
```

Install the node package the same way on all three machines: 192.168.110.133, 192.168.110.134 and 192.168.110.135.

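Now configure the node services on the master. /etc/kubernetes/config was already edited above, so that file needs no further changes for the master's node services. Only the kubelet service remains to be configured: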

```shell
[root@k8s-master ~]# vim /etc/kubernetes/kubelet
```

The configuration is as follows:

```shell
###
# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)
# Address kubelet listens on: change 127.0.0.1 to 192.168.110.133 so that it listens on 192.168.110.133.
KUBELET_ADDRESS="--address=192.168.110.133"

# The port for the info server to serve on
# The api-server on the master was configured with --kubelet-port=10250, so kubelet must also use 10250; the two must match.
KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname
# Hostname of this kubelet. With several kubelet nodes, each node needs its own label so that the master can find a specific node when issuing commands.
# Nodes can be distinguished by IP address or by hostname. A hostname must be resolvable (e.g. via /etc/hosts), otherwise the address cannot be found.
KUBELET_HOSTNAME="--hostname-override=k8s-master"

# location of the api-server
# kubelet connects to the api-server: change 127.0.0.1 to 192.168.110.133.
KUBELET_API_SERVER="--api-servers=http://192.168.110.133:8080"

# pod infrastructure container
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

# Add your own!
KUBELET_ARGS=""
```

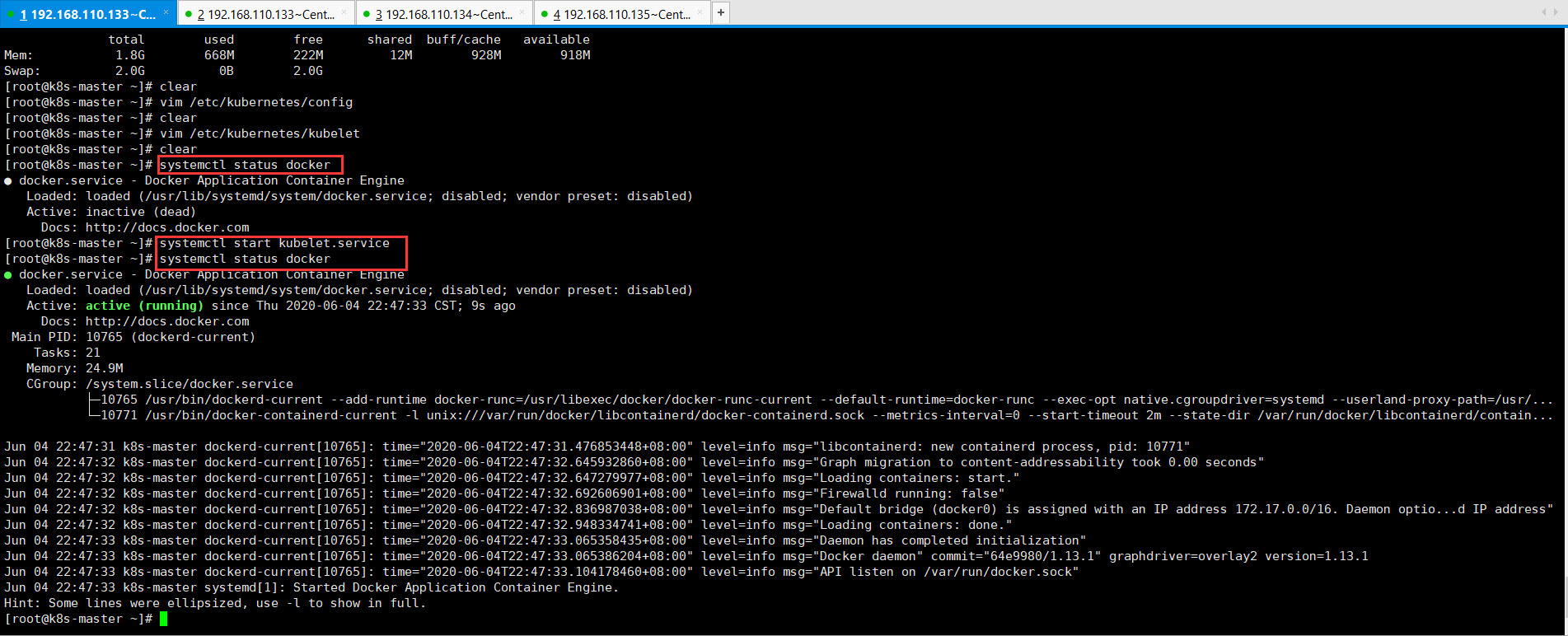

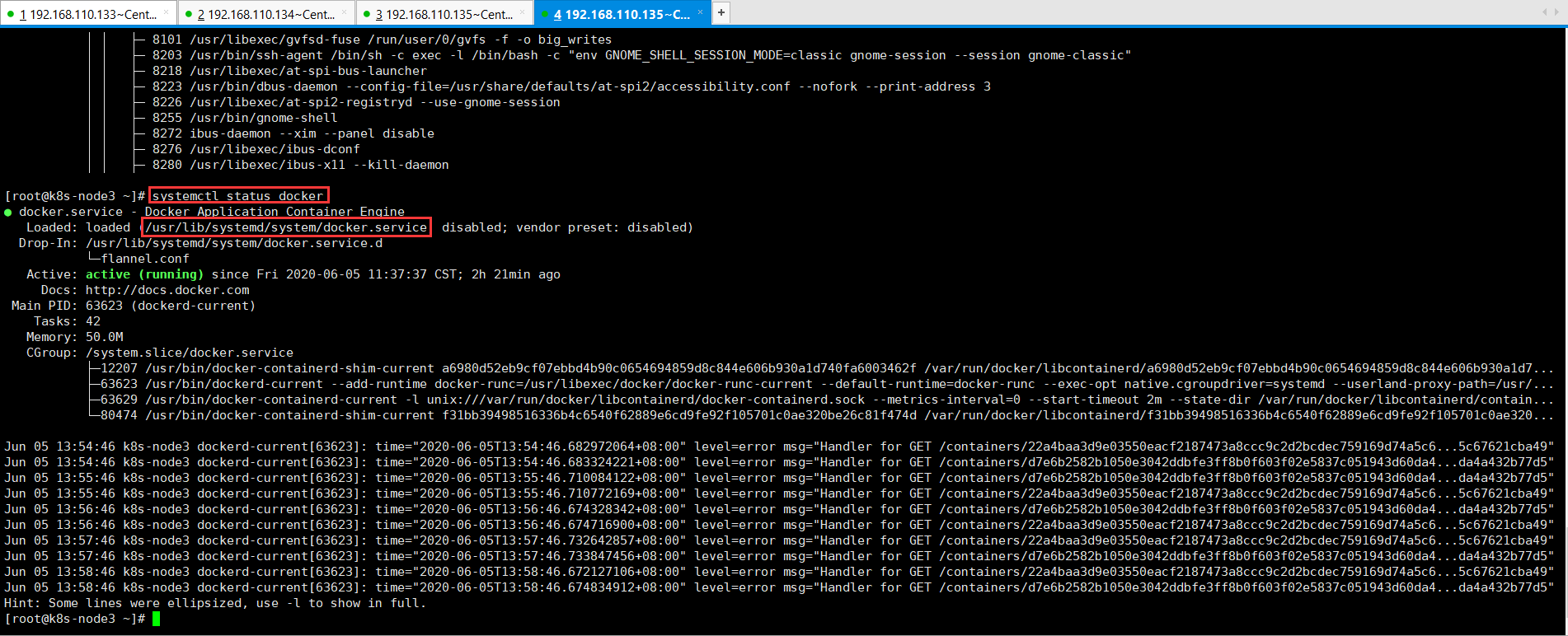
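The comment above requires kubelet's --port to equal the api-server's --kubelet-port. The following is a minimal sketch of a consistency check. `flag_value` and `ports_match` are hypothetical helpers. The sed pattern assumes one quoted --flag=value per line, as in these files, and skips commented lines. The api-server settings are assumed to live in /etc/kubernetes/apiserver on the master, so run the check there.

```shell
#!/usr/bin/env bash
# flag_value FILE FLAG: print the value of the first --FLAG=value found in a
# sysconfig-style file such as /etc/kubernetes/kubelet, ignoring comment lines.
flag_value() {
  local file="$1" flag="$2"
  sed -n -e '/^[[:space:]]*#/d' -e "s/.*--${flag}=\([^\" ]*\).*/\1/p" "$file" | head -n 1
}

# ports_match APISERVER_CONF KUBELET_CONF: succeed if the api-server's
# --kubelet-port equals the kubelet's --port.
ports_match() {
  local want got
  want="$(flag_value "$1" kubelet-port)"
  got="$(flag_value "$2" port)"
  if [[ -n "$want" && "$want" == "$got" ]]; then
    return 0
  fi
  echo "port mismatch: api-server expects '${want}', kubelet uses '${got}'" >&2
  return 1
}
```

Usage: `ports_match /etc/kubernetes/apiserver /etc/kubernetes/kubelet && echo "ports agree"`.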

Once configured, you can start the two node services, kubelet and kube-proxy. Starting kubelet also starts Docker automatically.

First, check Docker's current status:

```shell
[root@k8s-master ~]# systemctl status docker
● docker.service - Docker Application Container Engine
   Loaded: loaded (/usr/lib/systemd/system/docker.service; disabled; vendor preset: disabled)
   Active: inactive (dead)
     Docs: http://docs.docker.com
[root@k8s-master ~]#
```

Now start kubelet and check Docker's status again. The packaged kubelet.service unit requires docker.service, so systemd starts Docker first if it is not already running.

As shown below:

```shell
[root@k8s-master ~]# systemctl start kubelet.service
[root@k8s-master ~]# systemctl status docker
● docker.service - Docker Application Container Engine
   Loaded: loaded (/usr/lib/systemd/system/docker.service; disabled; vendor preset: disabled)
   Active: active (running) since Thu 2020-06-04 22:47:33 CST; 9s ago
     Docs: http://docs.docker.com
 Main PID: 10765 (dockerd-current)
    Tasks: 21
   Memory: 24.9M
   CGroup: /system.slice/docker.service
           ├─10765 /usr/bin/dockerd-current --add-runtime docker-runc=/usr/libexec/docker/docker-runc-current --default-runtime=docker-runc --exec-opt native.cgroupdriver=systemd --userland-proxy-path=/usr/...
           └─10771 /usr/bin/docker-containerd-current -l unix:///var/run/docker/libcontainerd/docker-containerd.sock --metrics-interval=0 --start-timeout 2m --state-dir /var/run/docker/libcontainerd/contain...

Jun 04 22:47:31 k8s-master dockerd-current[10765]: time="2020-06-04T22:47:31.476853448+08:00" level=info msg="libcontainerd: new containerd process, pid: 10771"
Jun 04 22:47:32 k8s-master dockerd-current[10765]: time="2020-06-04T22:47:32.645932860+08:00" level=info msg="Graph migration to content-addressability took 0.00 seconds"
Jun 04 22:47:32 k8s-master dockerd-current[10765]: time="2020-06-04T22:47:32.647279977+08:00" level=info msg="Loading containers: start."
Jun 04 22:47:32 k8s-master dockerd-current[10765]: time="2020-06-04T22:47:32.692606901+08:00" level=info msg="Firewalld running: false"
Jun 04 22:47:32 k8s-master dockerd-current[10765]: time="2020-06-04T22:47:32.836987038+08:00" level=info msg="Default bridge (docker0) is assigned with an IP address 172.17.0.0/16. Daemon optio...d IP address"
Jun 04 22:47:32 k8s-master dockerd-current[10765]: time="2020-06-04T22:47:32.948334741+08:00" level=info msg="Loading containers: done."
Jun 04 22:47:33 k8s-master dockerd-current[10765]: time="2020-06-04T22:47:33.065358435+08:00" level=info msg="Daemon has completed initialization"
Jun 04 22:47:33 k8s-master dockerd-current[10765]: time="2020-06-04T22:47:33.065386204+08:00" level=info msg="Docker daemon" commit="64e9980/1.13.1" graphdriver=overlay2 version=1.13.1
Jun 04 22:47:33 k8s-master dockerd-current[10765]: time="2020-06-04T22:47:33.104178460+08:00" level=info msg="API listen on /var/run/docker.sock"
Jun 04 22:47:33 k8s-master systemd[1]: Started Docker Application Container Engine.
Hint: Some lines were ellipsized, use -l to show in full.
[root@k8s-master ~]#
```

Enable the kubelet service at boot:

```shell
[root@k8s-master ~]# systemctl enable kubelet.service
Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.
[root@k8s-master ~]#
```

Then start the kube-proxy service:

```shell
[root@k8s-master ~]# systemctl start kube-proxy.service
```

Enable the kube-proxy service at boot:

```shell
[root@k8s-master ~]# systemctl enable kube-proxy.service
Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.
[root@k8s-master ~]#
```

With one node set up, run kubectl on the master to list the nodes:

```shell
[root@k8s-master ~]# kubectl get nodes
NAME         STATUS    AGE
k8s-master   Ready     6m
[root@k8s-master ~]#
```

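One node has already registered itself, 6 minutes ago. A node registers automatically the moment its kubelet service starts, and from then on the master manages it. The node name, k8s-master, is the one set in the kubelet configuration file (--hostname-override).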
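A script can check registration by counting the rows whose STATUS is Ready. In this sketch, `count_ready_nodes` is a hypothetical helper that assumes the NAME STATUS AGE layout shown above. Rows such as NotReady are not counted.

```shell
#!/usr/bin/env bash
# count_ready_nodes: read `kubectl get nodes` output on stdin and print how
# many rows have STATUS exactly "Ready" (header skipped). Hypothetical helper.
count_ready_nodes() {
  awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}
```

Once all three nodes have joined, `kubectl get nodes | count_ready_nodes` should print 3.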

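Using the same method, now configure the services on the other two nodes, k8s-node2 and k8s-node3. First edit /etc/kubernetes/config on each of them; kube-proxy is the main consumer of this file: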

```shell
[root@k8s-node2 ~]# vim /etc/kubernetes/config
```

```shell
[root@k8s-node3 ~]# vim /etc/kubernetes/config
```

Set KUBE_MASTER="--master=http://192.168.110.133:8080", as follows:

```shell
###
# kubernetes system config
#
# The following values are used to configure various aspects of all
# kubernetes services, including
#
#   kube-apiserver.service
#   kube-controller-manager.service
#   kube-scheduler.service
#   kubelet.service
#   kube-proxy.service
# logging to stderr means we get it in the systemd journal
KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug
KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers
KUBE_ALLOW_PRIV="--allow-privileged=false"

# How the controller-manager, scheduler, and proxy find the apiserver
KUBE_MASTER="--master=http://192.168.110.133:8080"
```

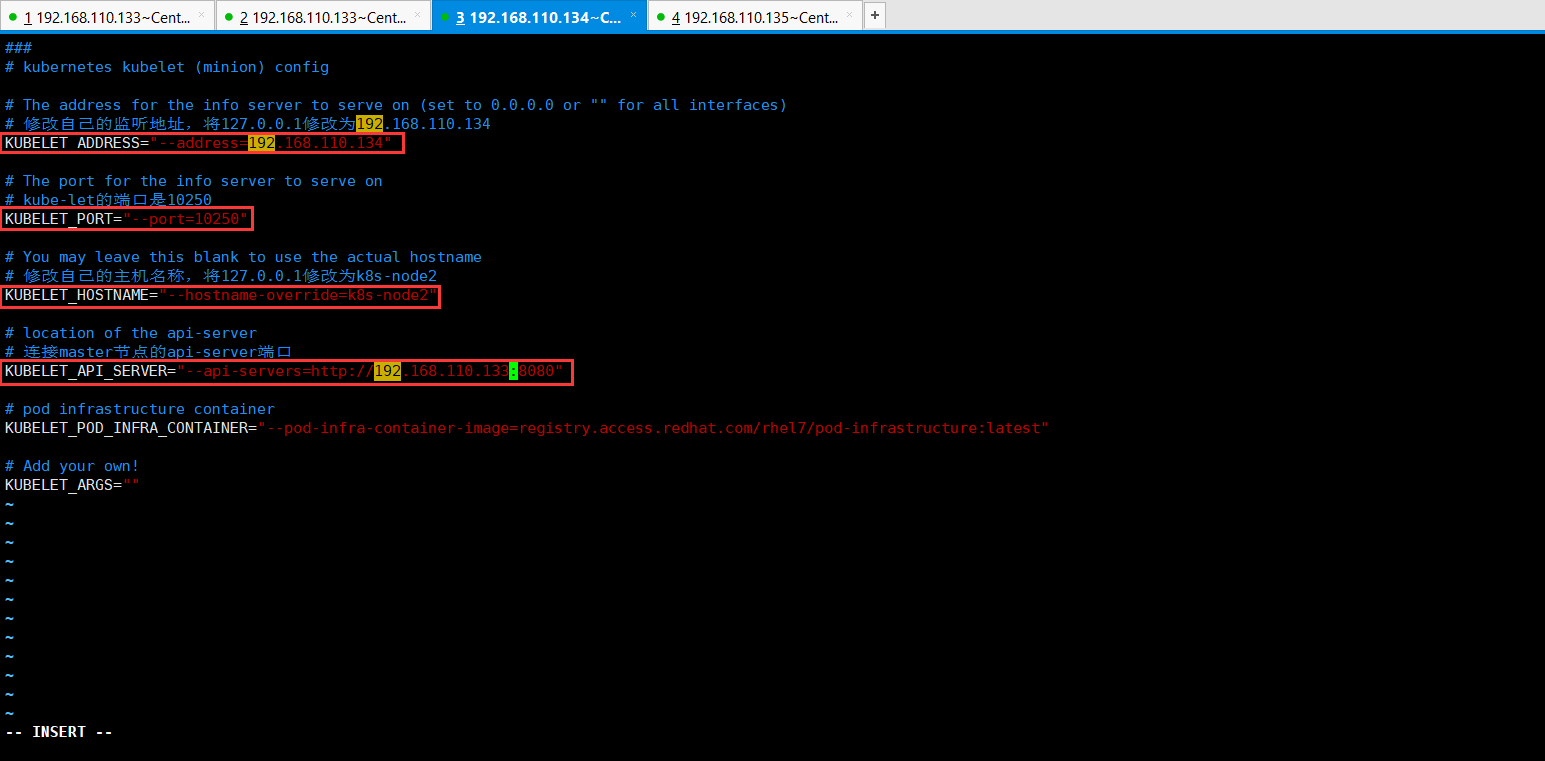
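Every node needs the same one-line change, so you can also make it without an editor. This sketch uses the hypothetical helper name `set_kube_master`. It rewrites the KUBE_MASTER line with GNU sed (as shipped on CentOS 7), keeps a .bak copy, and fails if the file has no such line.

```shell
#!/usr/bin/env bash
# set_kube_master FILE URL: point KUBE_MASTER in FILE at URL, keeping FILE.bak.
# Hypothetical helper replacing the manual vim edit. URL must not contain
# '|' or '&', which are special in the sed command below.
set_kube_master() {
  local file="$1" url="$2"
  # '|' is the sed delimiter because the URL itself contains '/'.
  sed -i.bak "s|^KUBE_MASTER=.*|KUBE_MASTER=\"--master=${url}\"|" "$file" || return 1
  # Fail if the file had no KUBE_MASTER line to rewrite.
  grep -qxF "KUBE_MASTER=\"--master=${url}\"" "$file"
}
```

Usage on each node: `set_kube_master /etc/kubernetes/config http://192.168.110.133:8080`.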

Next, edit /etc/kubernetes/kubelet on k8s-node2. The kubelet service reads this file:

```shell
[root@k8s-node2 ~]# vim /etc/kubernetes/kubelet
```

The configuration is as follows:

```shell
###
# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)
# Set this node's own listen address: change 127.0.0.1 to 192.168.110.134
KUBELET_ADDRESS="--address=192.168.110.134"

# The port for the info server to serve on
# The kubelet port is 10250
KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname
# Set this node's own hostname: change 127.0.0.1 to k8s-node2
KUBELET_HOSTNAME="--hostname-override=k8s-node2"

# location of the api-server
# Connect to the api-server port on the master
KUBELET_API_SERVER="--api-servers=http://192.168.110.133:8080"

# pod infrastructure container
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

# Add your own!
KUBELET_ARGS=""
```
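The three kubelet files differ only in the listen address and the hostname, so you can generate the file per node. The sketch below prints a kubelet config equivalent to the ones in this guide, without the explanatory comments. `gen_kubelet_config` is hypothetical. It hard-codes the api-server port 8080 and the pod-infrastructure image used here.

```shell
#!/usr/bin/env bash
# gen_kubelet_config NAME IP MASTER_IP [PORT]: print an /etc/kubernetes/kubelet
# file for one node. Hypothetical helper; values mirror the files in this guide.
gen_kubelet_config() {
  local name="$1" ip="$2" master_ip="$3" port="${4:-10250}"
  cat <<EOF
###
# kubernetes kubelet (minion) config
KUBELET_ADDRESS="--address=${ip}"
KUBELET_PORT="--port=${port}"
KUBELET_HOSTNAME="--hostname-override=${name}"
KUBELET_API_SERVER="--api-servers=http://${master_ip}:8080"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"
KUBELET_ARGS=""
EOF
}
```

For example, `gen_kubelet_config k8s-node3 192.168.110.135 192.168.110.133 > /etc/kubernetes/kubelet` writes the k8s-node3 file. As noted above, the name must be resolvable if other hosts look the node up by hostname.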

Start the kubelet service on k8s-node2:

```shell
[root@k8s-node2 ~]# systemctl start kubelet.service
```

Then enable the kubelet service at boot on k8s-node2:

```shell
[root@k8s-node2 ~]# systemctl enable kubelet.service
Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.
[root@k8s-node2 ~]#
```

Then start the kube-proxy service on k8s-node2:

```shell
[root@k8s-node2 ~]# systemctl start kube-proxy.service
```

Then enable the kube-proxy service at boot on k8s-node2:

```shell
[root@k8s-node2 ~]# systemctl enable kube-proxy.service
Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.
[root@k8s-node2 ~]#
```

Once k8s-node2 is configured, check the node status from the master:

```shell
[root@k8s-master ~]# kubectl get nodes
NAME         STATUS    AGE
k8s-master   Ready     26m
k8s-node2    Ready     3m
```

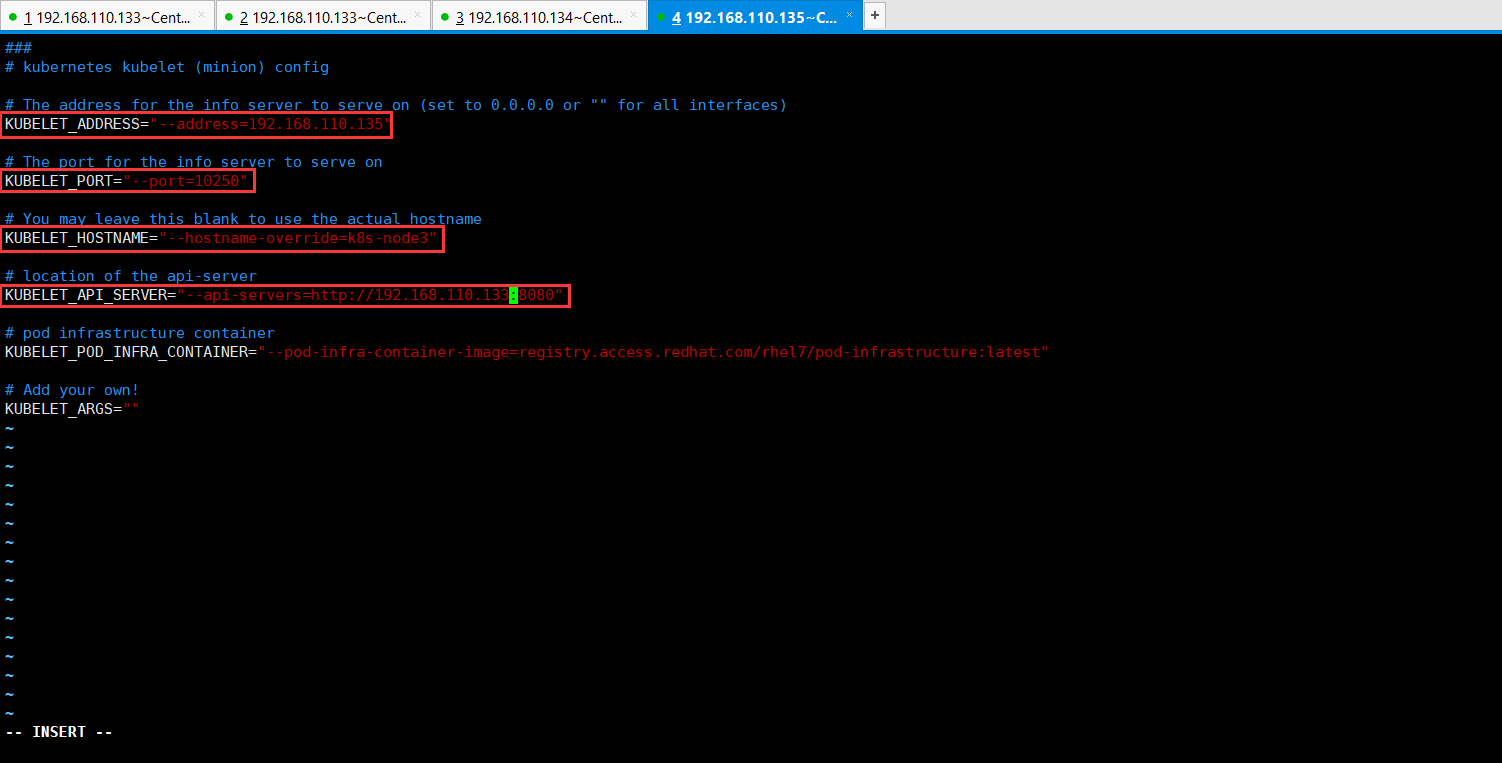

Next, edit /etc/kubernetes/kubelet on k8s-node3 for the kubelet service:

```shell
[root@k8s-node3 ~]# vim /etc/kubernetes/kubelet
```

The configuration is as follows:

```shell
###
# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)
KUBELET_ADDRESS="--address=192.168.110.135"

# The port for the info server to serve on
KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname
KUBELET_HOSTNAME="--hostname-override=k8s-node3"

# location of the api-server
KUBELET_API_SERVER="--api-servers=http://192.168.110.133:8080"

# pod infrastructure container
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

# Add your own!
KUBELET_ARGS=""
```

Then start the kubelet and kube-proxy services on k8s-node3 and enable them at boot:

```shell
[root@k8s-node3 ~]# systemctl start kubelet.service
[root@k8s-node3 ~]# systemctl enable kubelet.service
Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.
[root@k8s-node3 ~]# systemctl start kube-proxy.service
[root@k8s-node3 ~]# systemctl enable kube-proxy.service
Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.
[root@k8s-node3 ~]#
```

Once k8s-node3 is configured, check the node status from the master again:

```shell
[root@k8s-master ~]# kubectl get nodes
NAME         STATUS    AGE
k8s-master   Ready     38m
k8s-node2    Ready     15m
k8s-node3    Ready     17s
[root@k8s-master ~]#
```

To remove a node from the cluster, run kubectl delete node on the master. For example, for k8s-node3:

```shell
[root@k8s-master ~]# kubectl delete node k8s-node3
```

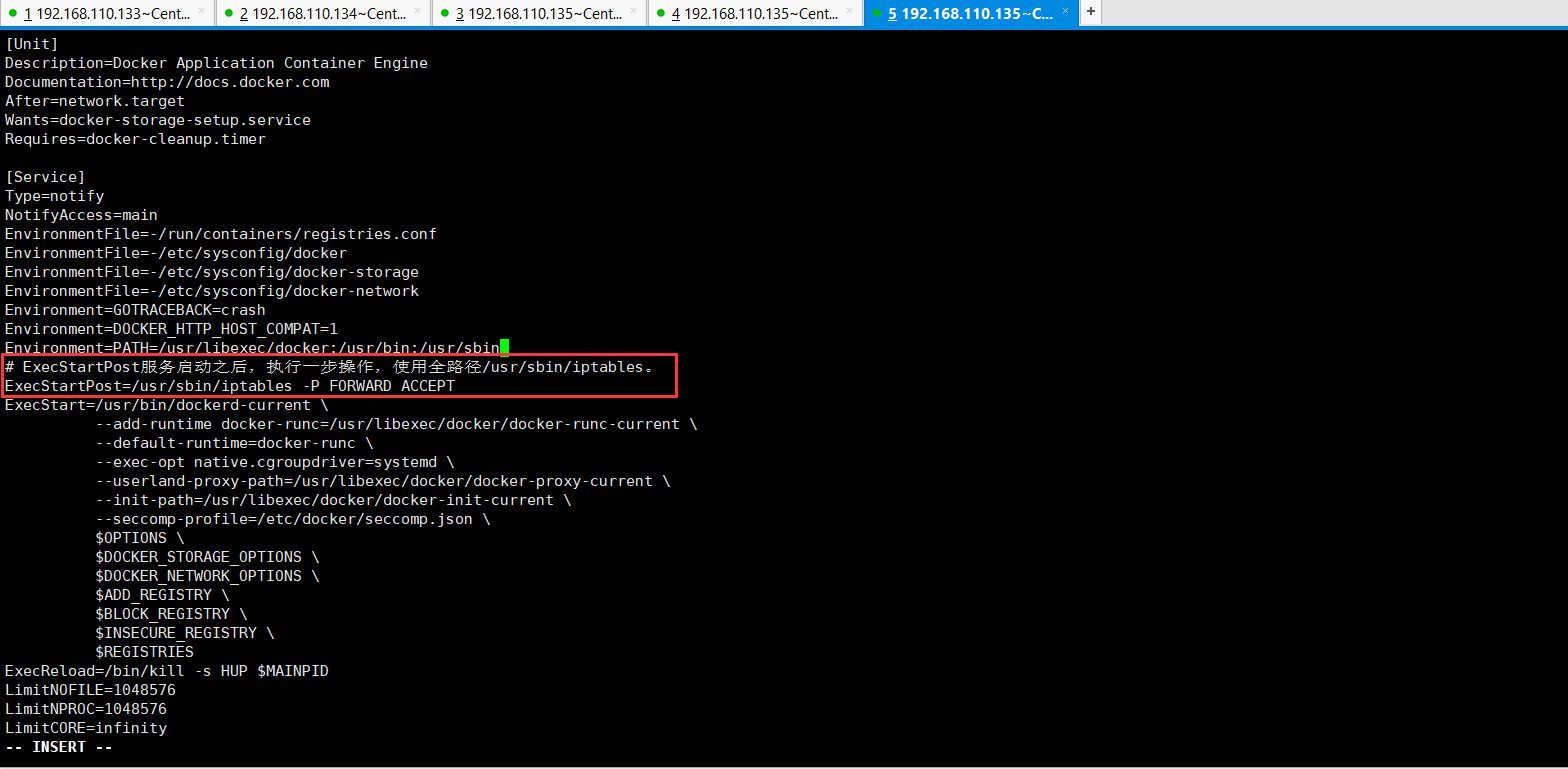

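8. Configure the flannel network plugin on all nodes. The three nodes will run containers, and at scale those containers need to communicate with each other, including containers on different Docker hosts. Cross-host container traffic requires a network plugin that connects the containers on all hosts. This guide uses flannel, which must be installed on all three machines: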

```shell
[root@k8s-master ~]# yum install flannel.x86_64 -y
```

The installation output:

```shell
[root@k8s-master ~]# yum install flannel.x86_64 -y
Loaded plugins: fastestmirror, langpacks, product-id, search-disabled-repos, subscription-manager

This system is not registered with an entitlement server. You can use subscription-manager to register.

Loading mirror speeds from cached hostfile
 * base: mirrors.tuna.tsinghua.edu.cn
 * extras: mirrors.tuna.tsinghua.edu.cn
 * updates: mirrors.tuna.tsinghua.edu.cn
base                                        | 3.6 kB  00:00:00
extras                                      | 2.9 kB  00:00:00
updates                                     | 2.9 kB  00:00:00
updates/7/x86_64/primary_db                 | 2.1 MB  00:00:01
Resolving Dependencies
--> Running transaction check
---> Package flannel.x86_64 0:0.7.1-4.el7 will be installed
--> Finished Dependency Resolution

Dependencies Resolved

=========================================================================
 Package        Arch          Version              Repository       Size
=========================================================================
Installing:
 flannel        x86_64        0.7.1-4.el7          extras          7.5 M

Transaction Summary
=========================================================================
Install  1 Package

Total download size: 7.5 M
Installed size: 41 M
Downloading packages:
flannel-0.7.1-4.el7.x86_64.rpm              | 7.5 MB  00:00:03
Running transaction check
Running transaction test
Transaction test succeeded
Running transaction
  Installing : flannel-0.7.1-4.el7.x86_64                           1/1
  Verifying  : flannel-0.7.1-4.el7.x86_64                           1/1

Installed:
  flannel.x86_64 0:0.7.1-4.el7

Complete!
[root@k8s-master ~]#
```

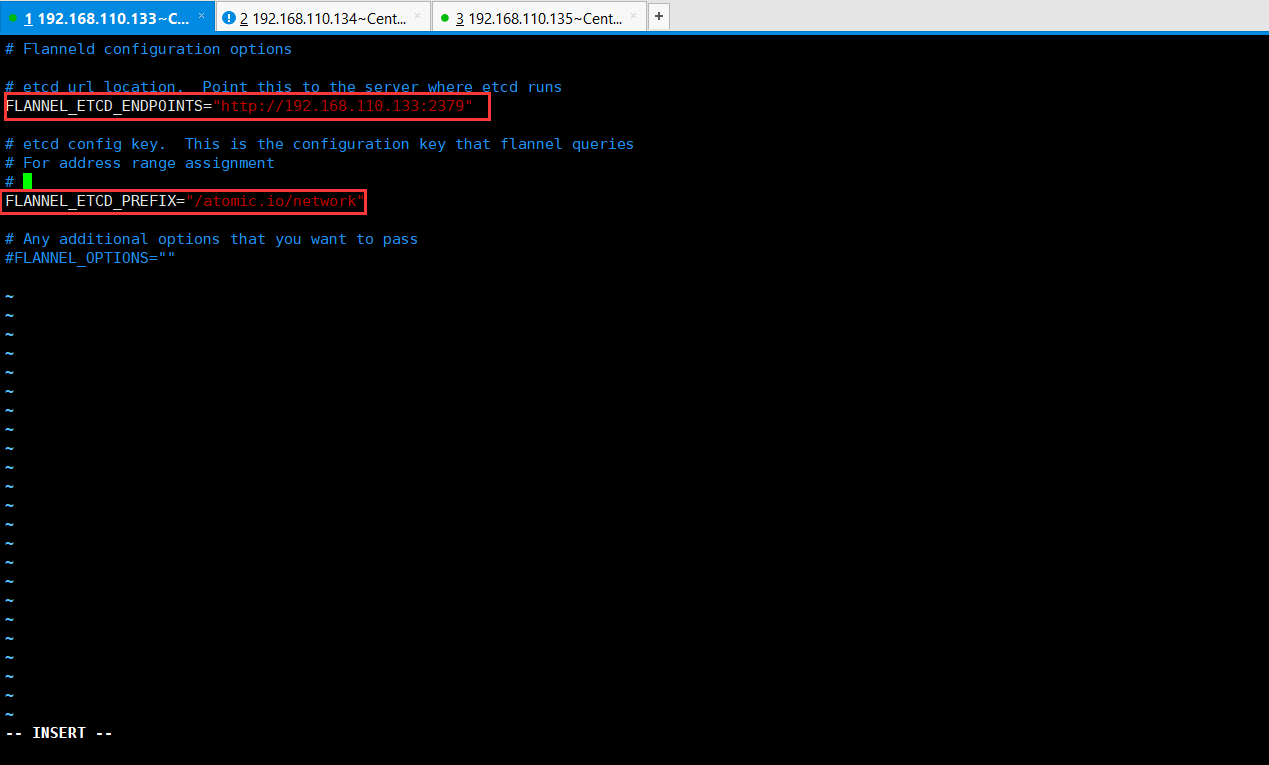

Next, configure flanneld through its configuration file:

```shell
[root@k8s-master ~]# vim /etc/sysconfig/flanneld
```

The configuration is as follows:

```shell
# Flanneld configuration options

# etcd url location.  Point this to the server where etcd runs
# Address flannel uses to reach etcd. Note that both the api-server and flannel use etcd. Change 127.0.0.1 to 192.168.110.133.
FLANNEL_ETCD_ENDPOINTS="http://192.168.110.133:2379"

# etcd config key.  This is the configuration key that flannel queries
# For address range assignment
# The key flannel queries in etcd later on. flannel keeps its network configuration and each host's subnet lease under this prefix in etcd, so the key has to be created; the default is used here.
FLANNEL_ETCD_PREFIX="/atomic.io/network"

# Any additional options that you want to pass
#FLANNEL_OPTIONS=""
```

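Next, create this key in etcd. flannel reads its network configuration from the config key under FLANNEL_ETCD_PREFIX, here /atomic.io/network/config: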

```shell
[root@k8s-master ~]# vim /etc/sysconfig/flanneld
[root@k8s-master ~]# cat /etc/sysconfig/flanneld
# Flanneld configuration options

# etcd url location.  Point this to the server where etcd runs
FLANNEL_ETCD_ENDPOINTS="http://192.168.110.133:2379"

# etcd config key.  This is the configuration key that flannel queries
# For address range assignment
#
FLANNEL_ETCD_PREFIX="/atomic.io/network"

# Any additional options that you want to pass
#FLANNEL_OPTIONS=""

[root@k8s-master ~]# etcdctl set /atomic.io/network/config '{ "Network": "172.16.0.0/16" }'
{ "Network": "172.16.0.0/16" }
[root@k8s-master ~]#
```
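The key written above must be FLANNEL_ETCD_PREFIX followed by /config, or flannel will not find it. The sketch derives the key from the flanneld file so the two cannot drift apart. `flannel_config_key`, `set_flannel_network` and the `ETCDCTL` override are hypothetical; the override allows a dry run with ETCDCTL=echo. etcdctl is assumed to accept the v2 `set` command used above.

```shell
#!/usr/bin/env bash
# flannel_config_key FILE: print <FLANNEL_ETCD_PREFIX>/config from a flanneld
# sysconfig file. Hypothetical helper.
flannel_config_key() {
  (
    # Subshell so the sourced variables stay local to this call.
    # shellcheck disable=SC1090
    . "$1"
    [[ -n "${FLANNEL_ETCD_PREFIX-}" ]] || { echo "FLANNEL_ETCD_PREFIX not set in $1" >&2; exit 1; }
    # Drop a trailing slash so "/atomic.io/network/" and "/atomic.io/network" agree.
    printf '%s/config\n' "${FLANNEL_ETCD_PREFIX%/}"
  )
}

# set_flannel_network FILE CIDR: write flannel's network config into etcd.
# ETCDCTL (default: etcdctl) is a hypothetical dry-run hook.
set_flannel_network() {
  local key
  key="$(flannel_config_key "$1")" || return 1
  "${ETCDCTL:-etcdctl}" set "$key" "{ \"Network\": \"$2\" }"
}
```

Usage on the master: `set_flannel_network /etc/sysconfig/flanneld 172.16.0.0/16`.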

Start flannel and enable it at boot:

```shell
[root@k8s-master ~]# systemctl start flanneld.service
[root@k8s-master ~]# systemctl enable flanneld.service
Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.
Created symlink from /etc/systemd/system/docker.service.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.
[root@k8s-master ~]#
```

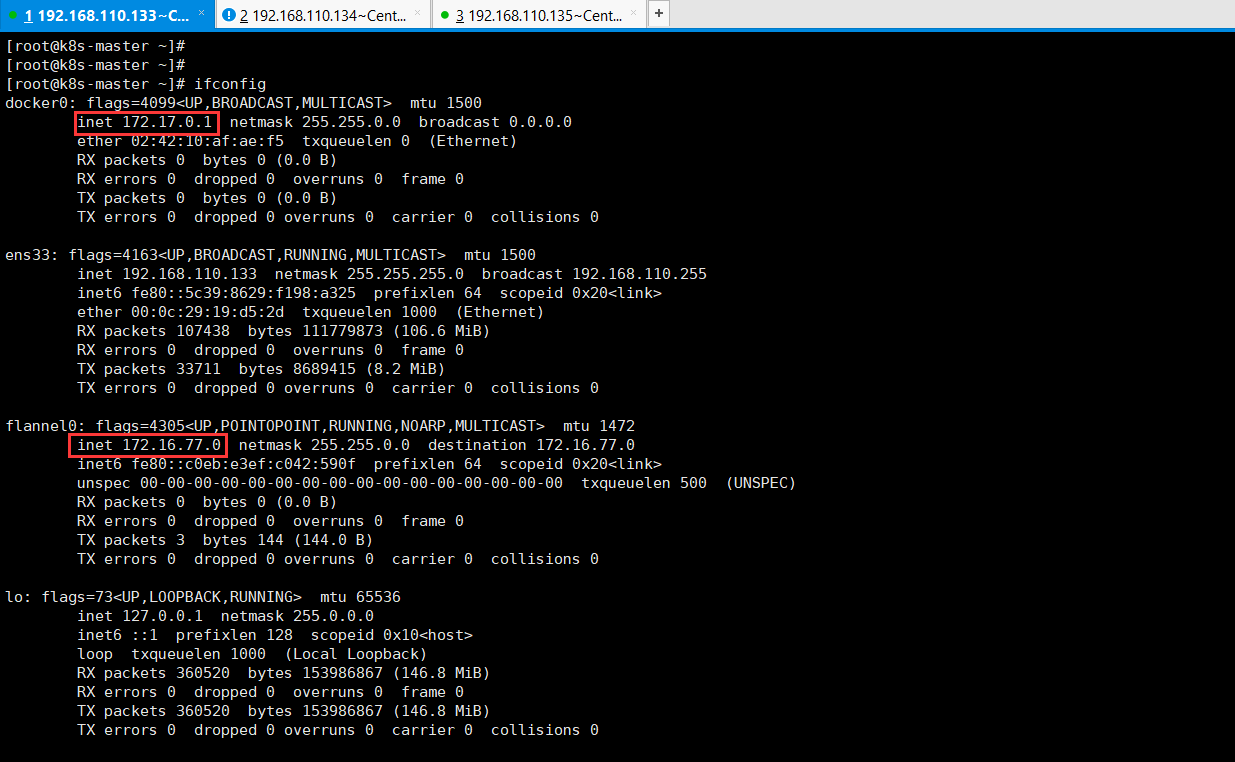

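After flannel starts, ifconfig shows an extra interface, flannel0, with inet 172.16.77.0. flannel leases each host a /24 subnet out of the 172.16.0.0/16 network, and the third octet (77 here) is assigned at random. docker0, however, is still on 172.17.0.1.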

As shown below:

```shell
[root@k8s-master ~]# ifconfig
docker0: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
        inet 172.17.0.1  netmask 255.255.0.0  broadcast 0.0.0.0
        ether 02:42:10:af:ae:f5  txqueuelen 0  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 192.168.110.133  netmask 255.255.255.0  broadcast 192.168.110.255
        inet6 fe80::5c39:8629:f198:a325  prefixlen 64  scopeid 0x20<link>
        ether 00:0c:29:19:d5:2d  txqueuelen 1000  (Ethernet)
        RX packets 107438  bytes 111779873 (106.6 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 33711  bytes 8689415 (8.2 MiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST>  mtu 1472
        inet 172.16.77.0  netmask 255.255.0.0  destination 172.16.77.0
        inet6 fe80::c0eb:e3ef:c042:590f  prefixlen 64  scopeid 0x20<link>
        unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00  txqueuelen 500  (UNSPEC)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 3  bytes 144 (144.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
        inet 127.0.0.1  netmask 255.0.0.0
        inet6 ::1  prefixlen 128  scopeid 0x10<host>
        loop  txqueuelen 1000  (Local Loopback)
        RX packets 360520  bytes 153986867 (146.8 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 360520  bytes 153986867 (146.8 MiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

virbr0: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
        inet 192.168.122.1  netmask 255.255.255.0  broadcast 192.168.122.255
        ether 52:54:00:0f:f4:f2  txqueuelen 1000  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

[root@k8s-master ~]#
```

Docker only moves onto the flannel subnet after it is restarted. Restart the docker service now so that the plugin takes effect:

```shell
[root@k8s-master ~]# systemctl restart docker
[root@k8s-master ~]#
```
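The restart matters because flanneld records this host's subnet lease in an environment file, and Docker reads the bridge options derived from that lease only when the daemon starts. The sketch below imitates that conversion. It assumes a lease file that defines FLANNEL_SUBNET and FLANNEL_MTU (commonly /run/flannel/subnet.env). In the packaged setup, flannel's own helper script (presumably mk-docker-opts.sh) does the real work. `docker_opts_from_subnet_env` is a hypothetical name.

```shell
#!/usr/bin/env bash
# docker_opts_from_subnet_env FILE: print the docker daemon options implied by
# a flannel lease file. Hypothetical helper imitating (not reproducing) what the
# packaged flannel setup does; FILE is assumed to define FLANNEL_SUBNET and
# FLANNEL_MTU, e.g. /run/flannel/subnet.env.
docker_opts_from_subnet_env() {
  (
    # shellcheck disable=SC1090
    . "$1"
    if [[ -z "${FLANNEL_SUBNET-}" || -z "${FLANNEL_MTU-}" ]]; then
      echo "FLANNEL_SUBNET/FLANNEL_MTU missing in $1" >&2
      exit 1
    fi
    # --bip: docker0 takes an address inside this host's flannel subnet.
    # --mtu: container interfaces match flannel0, leaving room for encapsulation.
    printf '%s\n' "--bip=${FLANNEL_SUBNET} --mtu=${FLANNEL_MTU}"
  )
}
```

Suppose the lease file contained FLANNEL_SUBNET=172.16.77.1/24 and FLANNEL_MTU=1472. That lease is consistent with docker0's 172.16.77.1/255.255.255.0 below and flannel0's MTU of 1472. In that case, the function would print `--bip=172.16.77.1/24 --mtu=1472`.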

Run ifconfig again to check Docker's subnet. docker0 now has 172.16.77.1, in the same 172.16.77.0/24 subnet as flannel0 (172.16.77.0).

As shown below:

```shell
[root@k8s-master ~]# ifconfig
docker0: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
        inet 172.16.77.1  netmask 255.255.255.0  broadcast 0.0.0.0
        ether 02:42:10:af:ae:f5  txqueuelen 0  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 192.168.110.133  netmask 255.255.255.0  broadcast 192.168.110.255
        inet6 fe80::5c39:8629:f198:a325  prefixlen 64  scopeid 0x20<link>
        ether 00:0c:29:19:d5:2d  txqueuelen 1000  (Ethernet)
        RX packets 108807  bytes 111980559 (106.7 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 34584  bytes 9157328 (8.7 MiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST>  mtu 1472
        inet 172.16.77.0  netmask 255.255.0.0  destination 172.16.77.0
        inet6 fe80::c0eb:e3ef:c042:590f  prefixlen 64  scopeid 0x20<link>
        unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00  txqueuelen 500  (UNSPEC)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 3  bytes 144 (144.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
        inet 127.0.0.1  netmask 255.0.0.0
        inet6 ::1  prefixlen 128  scopeid 0x10<host>
        loop  txqueuelen 1000  (Local Loopback)
        RX packets 372011  bytes 159660750 (152.2 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 372011  bytes 159660750 (152.2 MiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

virbr0: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
        inet 192.168.122.1  netmask 255.255.255.0  broadcast 192.168.122.255
        ether 52:54:00:0f:f4:f2  txqueuelen 1000  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

[root@k8s-master ~]#
```

The Master node is now configured. Next, configure the two remaining Node nodes.

```
[root@k8s-node2 ~]# vim /etc/sysconfig/flanneld
```

```
[root@k8s-node3 ~]# vim /etc/sysconfig/flanneld
```

Set FLANNEL_ETCD_ENDPOINTS="http://192.168.110.133:2379" so that flannel can find the etcd server. The file contents are as follows:

```
# Flanneld configuration options

# etcd url location. Point this to the server where etcd runs
FLANNEL_ETCD_ENDPOINTS="http://192.168.110.133:2379"

# etcd config key. This is the configuration key that flannel queries
# For address range assignment
FLANNEL_ETCD_PREFIX="/atomic.io/network"

# Any additional options that you want to pass
#FLANNEL_OPTIONS=""
```
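Editing the file with vim on each Node works fine. If you would rather script the change, the same edit can be made with sed. This is a minimal sketch that assumes bash and GNU sed (the default on CentOS 7). The function name `set_flannel_endpoint` is hypothetical and is not something shipped with flannel.

```shell
#!/usr/bin/env bash
# Sketch: set FLANNEL_ETCD_ENDPOINTS in a flanneld sysconfig file without an
# interactive editor. Assumes GNU sed (for -i). set_flannel_endpoint is a
# hypothetical helper, not part of flannel.
set_flannel_endpoint() {
  local file="$1" endpoint="$2"
  if grep -q '^FLANNEL_ETCD_ENDPOINTS=' "$file"; then
    # Rewrite the existing, uncommented assignment in place. "|" is used as
    # the sed delimiter because the URL itself contains "/".
    sed -i "s|^FLANNEL_ETCD_ENDPOINTS=.*|FLANNEL_ETCD_ENDPOINTS=\"${endpoint}\"|" "$file"
  else
    # No assignment yet: append one at the end of the file.
    printf 'FLANNEL_ETCD_ENDPOINTS="%s"\n' "$endpoint" >> "$file"
  fi
}

# Usage on k8s-node2 and k8s-node3 (keep a backup first):
#   cp /etc/sysconfig/flanneld /etc/sysconfig/flanneld.bak
#   set_flannel_endpoint /etc/sysconfig/flanneld "http://192.168.110.133:2379"
```

Only the uncommented `FLANNEL_ETCD_ENDPOINTS=` line is touched, so `FLANNEL_ETCD_PREFIX` and the comments stay as they are.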

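After saving the file, start flannel. Note that this works only because the network key was already written to etcd on the Master node earlier. Without that key, flanneld fails to start on both Node nodes.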
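Before running the start commands, you can confirm from a Node that the key is readable. flanneld looks up its network configuration under `<FLANNEL_ETCD_PREFIX>/config`, which is `/atomic.io/network/config` here. This is a sketch only. The two helper functions are hypothetical. The `etcdctl ... get` line in the usage comment assumes that `etcdctl` is installed where you run it and that the etcd v2 API is in use, which is the API this generation of flannel reads.

```shell
#!/usr/bin/env bash
# Sketch: derive the etcd key and endpoint flanneld will use from its
# sysconfig file. Both function names are hypothetical.

# Print "<FLANNEL_ETCD_PREFIX>/config", the key flanneld reads.
flannel_config_key() {
  (
    # Source in a subshell so the variables do not leak into the caller.
    . "$1"
    printf '%s/config\n' "${FLANNEL_ETCD_PREFIX%/}"
  )
}

# Print the etcd endpoint(s) configured for flanneld.
flannel_etcd_endpoints() {
  (
    . "$1"
    printf '%s\n' "$FLANNEL_ETCD_ENDPOINTS"
  )
}

# Usage on a Node (etcd v2-style etcdctl syntax assumed):
#   cfg=/etc/sysconfig/flanneld
#   etcdctl --endpoints "$(flannel_etcd_endpoints "$cfg")" get "$(flannel_config_key "$cfg")"
```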

```
[root@k8s-node2 ~]# systemctl start flanneld.service
```

```
[root@k8s-node3 ~]# systemctl start flanneld.service
```
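To check that flanneld actually obtained a subnet lease, look at the file it writes after a successful start. This sketch assumes flanneld's default subnet file, `/run/flannel/subnet.env`, which holds variables such as `FLANNEL_NETWORK`, `FLANNEL_SUBNET` and `FLANNEL_MTU`. `show_flannel_lease` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: report the subnet lease flanneld obtained from etcd.
# Assumes flanneld's default subnet file, /run/flannel/subnet.env.
show_flannel_lease() {
  local env_file="${1:-/run/flannel/subnet.env}"
  if [ ! -r "$env_file" ]; then
    # No file usually means flanneld never got a lease (e.g. missing etcd key).
    echo "no lease file at $env_file; check 'journalctl -u flanneld'" >&2
    return 1
  fi
  (
    . "$env_file"
    printf 'network=%s subnet=%s mtu=%s\n' \
      "$FLANNEL_NETWORK" "$FLANNEL_SUBNET" "$FLANNEL_MTU"
  )
}

# Usage on each Node after starting the service:
#   systemctl is-active flanneld.service && show_flannel_lease
```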

Also enable flannel to start at boot on both Node nodes.

```
[root@k8s-node2 ~]# systemctl enable flanneld.service
Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.
Created symlink from /etc/systemd/system/docker.service.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.
[root@k8s-node2 ~]#
```

```
[root@k8s-node3 ~]# systemctl enable flanneld.service
Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.
Created symlink from /etc/systemd/system/docker.service.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.
[root@k8s-node3 ~]#
```

For flannel to take effect, Docker must also be restarted so that it picks up the subnet flannel leased to this host.

```
[root@k8s-node2 ~]# systemctl restart docker
```

```
[root@k8s-node3 ~]# systemctl restart docker
```
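After the restart, docker0 should carry the gateway address of the subnet flannel leased to the host. On node2, for example, that address is `172.16.52.1/24`. If docker0 still shows Docker's default `172.17.0.1/16`, Docker has not picked up the flannel settings. This check is a sketch that assumes the default `/run/flannel/subnet.env` file and the `ip` command from iproute2. `docker0_matches_flannel` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: compare docker0's address with the FLANNEL_SUBNET lease.
# docker0_matches_flannel is a hypothetical helper.
docker0_matches_flannel() {
  local env_file="$1" docker0_cidr="$2" leased
  # Read FLANNEL_SUBNET (e.g. 172.16.52.1/24) without polluting the caller.
  leased=$( . "$env_file"; printf '%s' "$FLANNEL_SUBNET" )
  if [ "$leased" = "$docker0_cidr" ]; then
    echo "OK: docker0 $docker0_cidr matches flannel lease"
  else
    echo "MISMATCH: docker0 $docker0_cidr, flannel lease $leased (restart docker?)"
    return 1
  fi
}

# Usage on each node ("ip -4 -o addr show" prints the CIDR in field 4):
#   docker0_matches_flannel /run/flannel/subnet.env \
#     "$(ip -4 -o addr show docker0 | awk '{print $4}')"
```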

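Now test whether all of the subnets can reach one another. First, note the subnet Docker was randomly assigned on each host. On the master, docker0 is 172.16.77.1. On Node2 it is 172.16.52.1, and on Node3 it is 172.16.13.1. Record the subnets on your own machines.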

```
[root@k8s-master ~]# ifconfig
docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
        inet 172.16.77.1 netmask 255.255.255.0 broadcast 0.0.0.0
        ether 02:42:10:af:ae:f5 txqueuelen 0 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 192.168.110.133 netmask 255.255.255.0 broadcast 192.168.110.255
        inet6 fe80::5c39:8629:f198:a325 prefixlen 64 scopeid 0x20<link>
        ether 00:0c:29:19:d5:2d txqueuelen 1000 (Ethernet)
        RX packets 113455 bytes 112697638 (107.4 MiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 37647 bytes 10843382 (10.3 MiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472
        inet 172.16.77.0 netmask 255.255.0.0 destination 172.16.77.0
        inet6 fe80::c0eb:e3ef:c042:590f prefixlen 64 scopeid 0x20<link>
        unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00 txqueuelen 500 (UNSPEC)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 3 bytes 144 (144.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
        inet 127.0.0.1 netmask 255.0.0.0
        inet6 ::1 prefixlen 128 scopeid 0x10<host>
        loop txqueuelen 1000 (Local Loopback)
        RX packets 413251 bytes 179956183 (171.6 MiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 413251 bytes 179956183 (171.6 MiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

virbr0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
        inet 192.168.122.1 netmask 255.255.255.0 broadcast 192.168.122.255
        ether 52:54:00:0f:f4:f2 txqueuelen 1000 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@k8s-master ~]#
```

```
[root@k8s-node2 ~]# ifconfig
docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
        inet 172.16.52.1 netmask 255.255.255.0 broadcast 0.0.0.0
        ether 02:42:8f:81:1d:f5 txqueuelen 0 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 192.168.110.134 netmask 255.255.255.0 broadcast 192.168.110.255
        inet6 fe80::5c39:8629:f198:a325 prefixlen 64 scopeid 0x20<link>
        inet6 fe80::1dad:6022:4131:5f1c prefixlen 64 scopeid 0x20<link>
        ether 00:0c:29:d3:11:ba txqueuelen 1000 (Ethernet)
        RX packets 78216 bytes 87153782 (83.1 MiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 32399 bytes 5365699 (5.1 MiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472
        inet 172.16.52.0 netmask 255.255.0.0 destination 172.16.52.0
        inet6 fe80::8630:7924:a3c3:a6e7 prefixlen 64 scopeid 0x20<link>
        unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00 txqueuelen 500 (UNSPEC)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 3 bytes 144 (144.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
        inet 127.0.0.1 netmask 255.0.0.0
        inet6 ::1 prefixlen 128 scopeid 0x10<host>
        loop txqueuelen 1000 (Local Loopback)
        RX packets 200 bytes 21936 (21.4 KiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 200 bytes 21936 (21.4 KiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

virbr0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
        inet 192.168.122.1 netmask 255.255.255.0 broadcast 192.168.122.255
        ether 52:54:00:0f:f4:f2 txqueuelen 1000 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@k8s-node2 ~]#
```

```
[root@k8s-node3 ~]# ifconfig
docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
        inet 172.16.13.1 netmask 255.255.255.0 broadcast 0.0.0.0
        ether 02:42:c7:0d:a6:e9 txqueuelen 0 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 192.168.110.135 netmask 255.255.255.0 broadcast 192.168.110.255
        inet6 fe80::f2ac:286a:e885:a442 prefixlen 64 scopeid 0x20<link>
        ether 00:0c:29:b6:cf:68 txqueuelen 1000 (Ethernet)
        RX packets 586163 bytes 827958100 (789.6 MiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 180587 bytes 12919890 (12.3 MiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472
        inet 172.16.13.0 netmask 255.255.0.0 destination 172.16.13.0
        inet6 fe80::d6d3:434e:c643:5f73 prefixlen 64 scopeid 0x20<link>
        unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00 txqueuelen 500 (UNSPEC)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 3 bytes 144 (144.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
        inet 127.0.0.1 netmask 255.0.0.0
        inet6 ::1 prefixlen 128 scopeid 0x10<host>
        loop txqueuelen 1000 (Local Loopback)
        RX packets 25226 bytes 2092968 (1.9 MiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 25226 bytes 2092968 (1.9 MiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

virbr0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
        inet 192.168.122.1 netmask 255.255.255.0 broadcast 192.168.122.255
        ether 52:54:00:d7:6e:e2 txqueuelen 1000 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@k8s-node3 ~]#
```
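You can sanity-check the three docker0 gateways by hand. The rule behind them is that each host gets its own /24 (netmask 255.255.255.0 on docker0) carved out of the flannel network 172.16.0.0/16 (netmask 255.255.0.0 on flannel0), and no two hosts may share a /24. The bash sketch below checks exactly that. The function names are hypothetical. The /24 size comes from the ifconfig output above, not from any flannel setting shown in this document.

```shell
#!/usr/bin/env bash
# Sketch: verify per-host docker0 gateways against the flannel network.
# All function names are hypothetical.

# Convert dotted-quad IPv4 to an integer.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if IP ($1) lies inside CIDR ($2), e.g. 172.16.0.0/16.
ip_in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}" mask
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# check_node_subnets NETWORK GATEWAY...: every gateway inside NETWORK,
# and no two gateways in the same /24.
check_node_subnets() {
  local network="$1"; shift
  local gw prefix seen=" "
  for gw in "$@"; do
    if ! ip_in_cidr "$gw" "$network"; then
      echo "$gw is outside $network"; return 1
    fi
    prefix="${gw%.*}"                 # /24 prefix, e.g. 172.16.77
    case "$seen" in
      *" $prefix "*) echo "duplicate subnet $prefix.0/24"; return 1 ;;
    esac
    seen="$seen$prefix "
  done
  echo "OK: $# node subnets, all distinct and inside $network"
}

# Values from the ifconfig output in this walkthrough:
#   check_node_subnets 172.16.0.0/16 172.16.77.1 172.16.52.1 172.16.13.1
```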

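flannel is now installed. Restart kube-apiserver, kube-controller-manager and kube-scheduler on the Master node, and restart kubelet and kube-proxy on the two Node nodes. This step assumes that flannel is already configured and running on the Master and on both Node nodes, and that Docker has been restarted on all three.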

```
[root@k8s-master ~]# systemctl restart kube-apiserver.service
[root@k8s-master ~]# systemctl restart kube-controller-manager.service
[root@k8s-master ~]# systemctl restart kube-scheduler.service
[root@k8s-master ~]#
```

```
[root@k8s-node2 ~]# systemctl restart kubelet.service
[root@k8s-node2 ~]# systemctl restart kube-proxy.service
[root@k8s-node2 ~]#
```

```
[root@k8s-node3 ~]# systemctl restart kubelet.service
[root@k8s-node3 ~]# systemctl restart kube-proxy.service
[root@k8s-node3 ~]#
```
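The same restarts repeat on every host, so you can wrap them in a small helper. This is a sketch only. The role labels `master` and `node` and both function names are hypothetical. The unit names are the ones restarted above. `systemctl is-active` prints the unit's current state, such as `active` or `failed`.

```shell
#!/usr/bin/env bash
# Sketch: restart the Kubernetes services for one host role and report state.
# Role labels and function names are hypothetical.

# Print the units restarted for a role in this walkthrough.
services_for_role() {
  case "$1" in
    master) echo "kube-apiserver kube-controller-manager kube-scheduler" ;;
    node)   echo "kubelet kube-proxy" ;;
    *)      echo "unknown role: $1" >&2; return 1 ;;
  esac
}

# Restart each unit in order, stopping at the first failure.
restart_role() {
  local svc services
  services=$(services_for_role "$1") || return 1
  for svc in $services; do
    systemctl restart "$svc.service" || return 1
    printf '%s: %s\n' "$svc" "$(systemctl is-active "$svc.service")"
  done
}

# Usage:
#   restart_role master   # on k8s-master
#   restart_role node     # on k8s-node2 and k8s-node3
```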

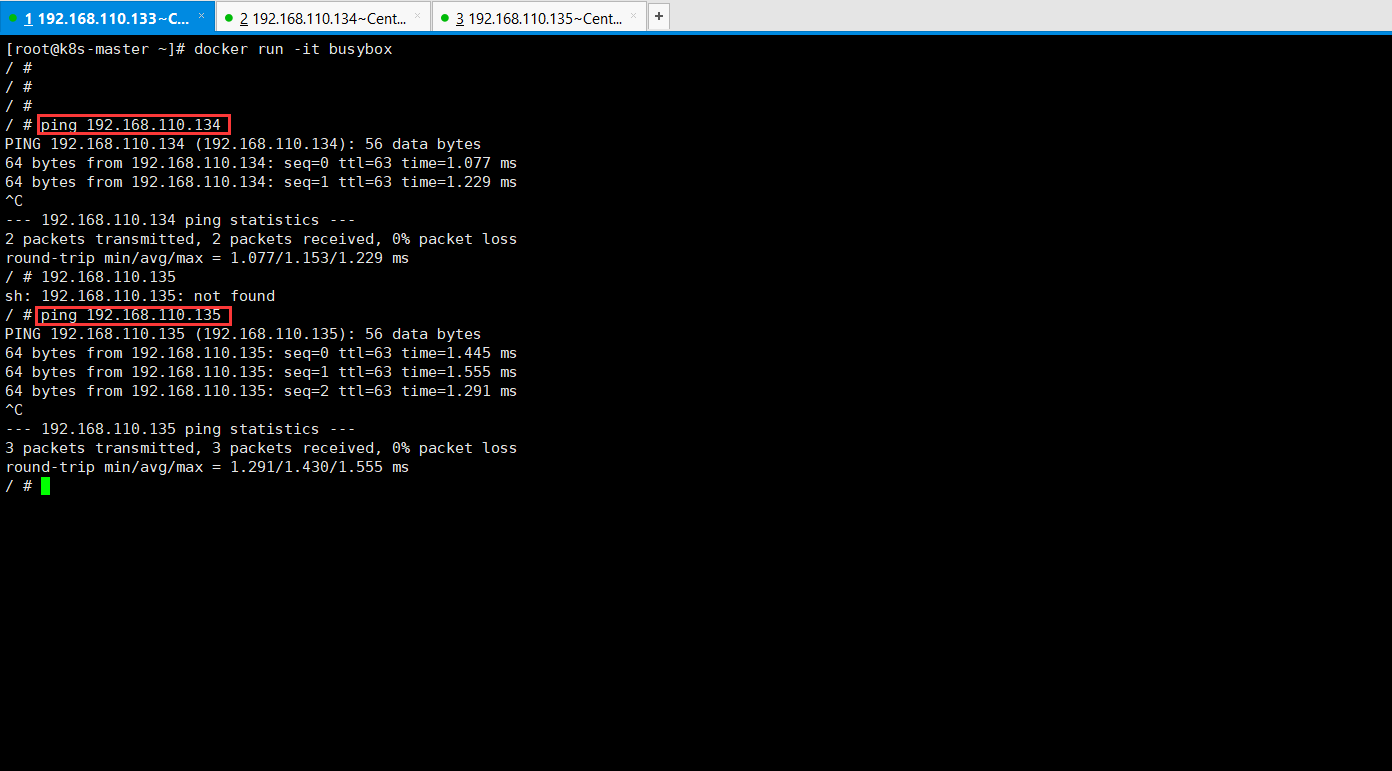

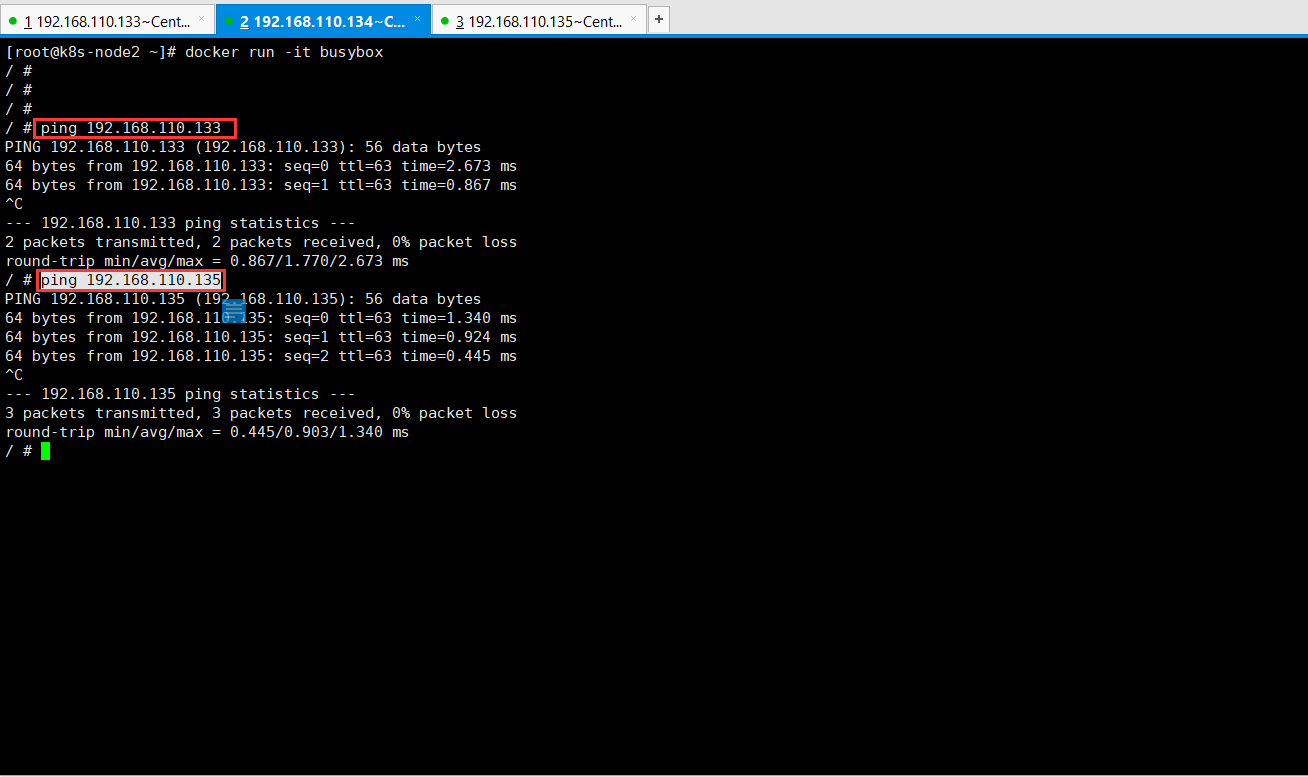

9、Finally, test connectivity between containers on different hosts by running docker run -it busybox on every node.

Here the image is pulled with docker pull. You can also import it from an archive.

```
[root@k8s-master ~]# docker pull busybox
Using default tag: latest
Trying to pull repository docker.io/library/busybox ...
latest: Pulling from docker.io/library/busybox
76df9210b28c: Pull complete
Digest: sha256:95cf004f559831017cdf4628aaf1bb30133677be8702a8c5f2994629f637a209
Status: Downloaded newer image for docker.io/busybox:latest
[root@k8s-master ~]# docker image ls
REPOSITORY          TAG       IMAGE ID       CREATED      SIZE
docker.io/busybox   latest    1c35c4412082   2 days ago   1.22 MB
[root@k8s-master ~]#
```

```
[root@k8s-node2 ~]# docker pull busybox
Using default tag: latest
Trying to pull repository docker.io/library/busybox ...
latest: Pulling from docker.io/library/busybox
76df9210b28c: Pull complete
Digest: sha256:95cf004f559831017cdf4628aaf1bb30133677be8702a8c5f2994629f637a209
Status: Downloaded newer image for docker.io/busybox:latest
[root@k8s-node2 ~]# docker image ls
REPOSITORY          TAG       IMAGE ID       CREATED      SIZE
docker.io/busybox   latest    1c35c4412082   2 days ago   1.22 MB
[root@k8s-node2 ~]#
```

```
[root@k8s-node3 ~]# docker pull busybox
Using default tag: latest
Trying to pull repository docker.io/library/busybox ...
latest: Pulling from docker.io/library/busybox
76df9210b28c: Pull complete
Digest: sha256:95cf004f559831017cdf4628aaf1bb30133677be8702a8c5f2994629f637a209
Status: Downloaded newer image for docker.io/busybox:latest
[root@k8s-node3 ~]# docker image ls
REPOSITORY          TAG       IMAGE ID       CREATED      SIZE
docker.io/busybox   latest    1c35c4412082   2 days ago   1.22 MB
[root@k8s-node3 ~]#
```

Alternatively, upload docker_busybox.tar.gz to each server, import it with docker load -i docker_busybox.tar.gz, and then check the image with docker image ls.

Now start a busybox container on each node.

```
[root@k8s-master ~]# docker run -it busybox
/ #
```

```
[root@k8s-node2 ~]# docker run -it busybox
/ #
```

```
[root@k8s-node3 ~]# docker run -it busybox
/ #
```

Next, check the container's IP address on each node. The container on the master has 172.16.77.2, the one on node2 has 172.16.52.2, and the one on node3 has 172.16.13.2.

```
[root@k8s-master ~]# docker run -it busybox
/ # ifconfig
eth0 Link encap:Ethernet HWaddr 02:42:AC:10:4D:02
          inet addr:172.16.77.2 Bcast:0.0.0.0 Mask:255.255.255.0
          inet6 addr: fe80::42:acff:fe10:4d02/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST MTU:1472 Metric:1
          RX packets:24 errors:0 dropped:0 overruns:0 frame:0
          TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:2650 (2.5 KiB) TX bytes:656 (656.0 B)

lo Link encap:Local Loopback
          inet addr:127.0.0.1 Mask:255.0.0.0
          inet6 addr: ::1/128 Scope:Host
          UP LOOPBACK RUNNING MTU:65536 Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

/ #
```

```
[root@k8s-node2 ~]# docker run -it busybox
/ # ifconfig
eth0 Link encap:Ethernet HWaddr 02:42:AC:10:34:02
          inet addr:172.16.52.2 Bcast:0.0.0.0 Mask:255.255.255.0
          inet6 addr: fe80::42:acff:fe10:3402/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST MTU:1472 Metric:1
          RX packets:53 errors:0 dropped:0 overruns:0 frame:0
          TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:9546 (9.3 KiB) TX bytes:656 (656.0 B)

lo Link encap:Local Loopback
          inet addr:127.0.0.1 Mask:255.0.0.0
          inet6 addr: ::1/128 Scope:Host
          UP LOOPBACK RUNNING MTU:65536 Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

/ #
```

```
[root@k8s-node3 ~]# docker run -it busybox
/ # ifconfig
eth0 Link encap:Ethernet HWaddr 02:42:AC:10:0D:02
          inet addr:172.16.13.2 Bcast:0.0.0.0 Mask:255.255.255.0
          inet6 addr: fe80::42:acff:fe10:d02/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST MTU:1472 Metric:1
          RX packets:24 errors:0 dropped:0 overruns:0 frame:0
          TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:2634 (2.5 KiB) TX bytes:656 (656.0 B)

lo Link encap:Local Loopback
          inet addr:127.0.0.1 Mask:255.0.0.0
          inet6 addr: ::1/128 Scope:Host
          UP LOOPBACK RUNNING MTU:65536 Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

/ #
```

Then, from the container on each node, ping the containers on the other nodes.
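You can script the pings inside each busybox container. Each container runs on a different host, so a reply from a peer shows that traffic crossed the flannel network between hosts. This sketch uses plain `sh`, so it should run in busybox's shell. The addresses are the container IPs reported above. `peer_ips` and `ping_peers` are hypothetical names.

```shell
#!/bin/sh
# Sketch for the busybox shell inside each container. ALL_IPS holds the
# container addresses reported by ifconfig above; replace them with yours.
ALL_IPS="172.16.77.2 172.16.52.2 172.16.13.2"

# Print every address in ALL_IPS except the one passed in (this container).
peer_ips() {
  _self="$1"
  for _ip in $ALL_IPS; do
    [ "$_ip" = "$_self" ] || echo "$_ip"
  done
}

# Send 3 echo requests to each peer; ping exits 0 if any reply arrived.
ping_peers() {
  for _ip in $(peer_ips "$1"); do
    if ping -c 3 "$_ip" >/dev/null 2>&1; then
      echo "$_ip reachable"
    else
      echo "$_ip UNREACHABLE"
    fi
  done
}

# Usage in the busybox container on k8s-master:
#   ping_peers 172.16.77.2
```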