Installing a Greenplum Cluster from Source on CentOS 7

I. System Setup (All Hosts)

1. Operating system environment

CentOS 7.5.1804, 64-bit, with no updates applied.

[root@localhost tools]# cat /etc/redhat-release
CentOS Linux release 7.5.1804 (Core)

2. Stop the firewall

# systemctl stop firewalld.service    (stops firewalld)

3. Stop NetworkManager

# systemctl stop NetworkManager
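systemctl stop only affects the running system, so both services would come back after a reboot. A minimal sketch, assuming it is acceptable to disable them permanently and that networking on these hosts does not depend on NetworkManager; the stop_and_disable helper and the SYSTEMCTL override are hypothetical names introduced here for illustration:

```shell
# Stop each named service now and keep it from starting at boot.
# SYSTEMCTL is a hypothetical override for dry runs,
# e.g. SYSTEMCTL="echo systemctl".
stop_and_disable() {
    local svc
    for svc in "$@"; do
        ${SYSTEMCTL:-systemctl} stop "$svc" || return 1
        ${SYSTEMCTL:-systemctl} disable "$svc" || return 1
    done
}

# Usage (as root):
#   stop_and_disable firewalld.service NetworkManager
```

Running it once with SYSTEMCTL="echo systemctl" prints the commands without executing them, which is a cheap way to review what would change on each host.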

4. Disable SELinux

[root@mdw gpAdminLogs]# cat /etc/selinux/config
# This file controls the state of SELinux on the system.
# SELINUX= can take one of these three values:
#     enforcing - SELinux security policy is enforced.
#     permissive - SELinux prints warnings instead of enforcing.
#     disabled - No SELinux policy is loaded.
SELINUX=disabled
# SELINUXTYPE= can take one of three two values:
#     targeted - Targeted processes are protected,
#     minimum - Modification of targeted policy. Only selected processes are protected.
#     mls - Multi Level Security protection.
SELINUXTYPE=targeted

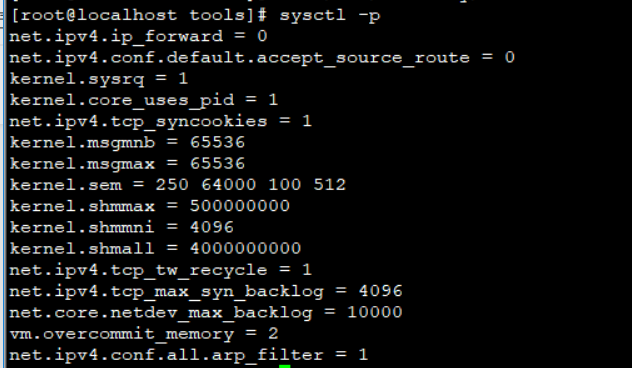
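The file above shows the desired end state but not how to get there. A minimal sketch of one way to make the edit non-interactively; set_selinux_disabled is a hypothetical helper name, and the default path is the standard /etc/selinux/config shown above:

```shell
# Rewrite the SELINUX= line of an SELinux config file to "disabled".
# The pattern requires "=" right after SELINUX, so SELINUXTYPE= is left alone.
set_selinux_disabled() {
    local cfg=${1:-/etc/selinux/config} tmp rc
    tmp=$(mktemp) || return 1
    # Write through a temp file, then copy the result back so the
    # original file keeps its owner and permissions.
    sed 's/^SELINUX=.*/SELINUX=disabled/' "$cfg" > "$tmp" && cat "$tmp" > "$cfg"
    rc=$?
    rm -f "$tmp"
    return "$rc"
}

# Usage (as root):
#   set_selinux_disabled     # edits /etc/selinux/config
#   setenforce 0             # permissive mode until the next reboot
```

The config file is only read at boot, so the disabled state applies after a reboot; setenforce 0 covers the time until then.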

5. Configure /etc/sysctl.conf

Add the following shared-memory and network parameters:

net.ipv4.ip_forward = 0
net.ipv4.conf.default.accept_source_route = 0
kernel.sysrq = 1
kernel.core_uses_pid = 1
net.ipv4.tcp_syncookies = 1
kernel.msgmnb = 65536
kernel.msgmax = 65536
kernel.sem = 250 64000 100 512
kernel.shmmax = 500000000
kernel.shmmni = 4096
kernel.shmall = 4000000000
net.ipv4.tcp_tw_recycle = 1
net.ipv4.tcp_max_syn_backlog = 4096
net.core.netdev_max_backlog = 10000
vm.overcommit_memory = 2
net.ipv4.conf.all.arp_filter = 1

6. Configure /etc/security/limits.conf

Add the following limits:

* soft nofile 65536
* hard nofile 65536
* soft nproc 131072
* hard nproc 131072

7. Apply the kernel parameters manually, or reboot the system (the limits.conf changes apply to new login sessions)

# sysctl -p

8. Edit /etc/hosts to map hostnames to IP addresses

192.168.2.9 mdw
192.168.2.10 sdw1
192.168.2.11 sdw2
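Since the same three entries have to be added on every host, it can help to make the edit repeatable. A minimal sketch, assuming plain "IP NAME" lines as above; add_host_entry is a hypothetical helper name:

```shell
# Append "IP NAME" to a hosts file unless NAME is already mapped there.
add_host_entry() {
    local file=$1 ip=$2 name=$3
    # Look for NAME as a whole word on a line that is not commented out.
    if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage (as root, on every host):
#   add_host_entry /etc/hosts 192.168.2.9  mdw
#   add_host_entry /etc/hosts 192.168.2.10 sdw1
#   add_host_entry /etc/hosts 192.168.2.11 sdw2
```

Running it twice leaves the file unchanged the second time, so it is safe to re-run after adding a new segment host.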

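9. Set the hostname

[root@mdw1 ~]# vim /etc/sysconfig/network
# Created by anaconda
HOSTNAME=mdw    (adjust accordingly on each host)

On CentOS 7 the persistent hostname is normally read from /etc/hostname, so running hostnamectl set-hostname mdw (with the matching name on each host) may also be needed for the change to survive a reboot.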

10. Create the gpadmin user

[root@mdw ~]# groupadd gpadmin
[root@mdw ~]# useradd -g gpadmin gpadmin
[root@mdw1 ~]# passwd gpadmin    (temporary password: 123456)

II. Installation and Distribution

2.1 Install the required packages from the network (all hosts)

[root@mdw ~]# yum -y install rsync coreutils glib2 lrzsz sysstat e4fsprogs xfsprogs ntp readline-devel zlib zlib-devel openssl openssl-devel pam-devel libxml2-devel libxslt-devel python-devel tcl-devel gcc make smartmontools flex bison perl perl-devel perl-ExtUtils* OpenIPMI-tools openldap openldap-devel logrotate gcc-c++ python-py
[root@mdw ~]# yum -y install bzip2-devel libevent-devel apr-devel curl-devel ed python-paramiko python-devel
[root@mdw ~]# wget https://bootstrap.pypa.io/get-pip.py
[root@mdw ~]# python get-pip.py
[root@mdw ~]# pip install lockfile paramiko setuptools epydoc psutil
[root@mdw ~]# pip install --upgrade setuptools

The system Python on CentOS 7 is 2.7. If the current get-pip.py refuses to run on it, the 2.7-specific script at https://bootstrap.pypa.io/pip/2.7/get-pip.py may be needed instead.

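2.2 Unpack, build and install Greenplum on the master

Log in as gpadmin, download the source from https://github.com/greenplum-db/gpdb/archive/5.15.1.zip, and build it in the corresponding directory.

The installation directory should generally live on the application volume. If it ends up on the root filesystem, stress tests can easily fill the disk and cause trouble. This article uses /home/gpadmin as the example.

Unpacking the source zip produces a gpdb-5.15.1 directory, which can be moved to /home/gpadmin.

Create the installation directory gpdb, also under /home, and make sure it is owned by gpadmin. If it was created by root, change the owner afterwards with chown. (The transcripts below actually build in /home/tools/gpdb-5.15.1 and install to /home/gpsql.)

Run configure as gpadmin; --prefix is followed by the installation directory. The options used are shown below. Because the ORCA optimizer tends to fail during initialization, it is usually enough to build without it (--disable-orca):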

##### Configure

[root@mdw1 gpdb-5.15.1]# ./configure --prefix=/home/gpsql --with-gssapi --with-pgport=5432 --with-libedit-preferred --with-perl --with-python --with-openssl --with-pam --with-krb5 --with-ldap --with-libxml --enable-cassert --enable-debug --enable-testutils --enable-debugbreak --enable-depend --disable-orca

A common error:

checking for string.h... yes
checking for memory.h... yes
checking for strings.h... yes
checking for inttypes.h... yes
checking for stdint.h... yes
checking for unistd.h... yes
./configure: line 12043: #include: command not found
checking how to run the C++ preprocessor... g++ -std=c++11 -E
checking gpos/_api.h usability... no
checking gpos/_api.h presence... no
checking for gpos/_api.h... no
configure: error: GPOS header files are required for Pivotal Query Optimizer (orca)

This is resolved by adding --disable-orca to the configure command.

##### make -j8

[root@mdw1 gpdb-5.15.1]# make -j8
make[2]: Leaving directory `/home/tools/gpdb-5.15.1/gpAux/extensions/gpcloud/bin/gpcheckcloud'
make[1]: Leaving directory `/home/tools/gpdb-5.15.1/gpAux/extensions'
make -C gpAux/gpperfmon all
make[1]: Entering directory `/home/tools/gpdb-5.15.1/gpAux/gpperfmon'
Configuration not enabled for gpperfmon.
make[1]: Leaving directory `/home/tools/gpdb-5.15.1/gpAux/gpperfmon'
make -C gpAux/platform all
make[1]: Entering directory `/home/tools/gpdb-5.15.1/gpAux/platform'
make -C gpnetbench all
make[2]: Entering directory `/home/tools/gpdb-5.15.1/gpAux/platform/gpnetbench'
gcc -Werror -Wall -g -O2 -o gpnetbenchServer.o -c gpnetbenchServer.c
gcc -Werror -Wall -g -O2 -o gpnetbenchClient.o -c gpnetbenchClient.c
gcc -o gpnetbenchServer gpnetbenchServer.o
gcc -o gpnetbenchClient gpnetbenchClient.o
make[2]: Leaving directory `/home/tools/gpdb-5.15.1/gpAux/platform/gpnetbench'
make[1]: Leaving directory `/home/tools/gpdb-5.15.1/gpAux/platform'
All of Greenplum Database successfully made. Ready to install.

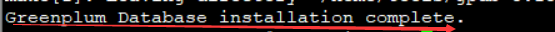

##### make install

[root@mdw1 gpdb-5.15.1]# make install
make -C gpAux/gpperfmon install
make[1]: Entering directory `/home/tools/gpdb-5.15.1/gpAux/gpperfmon'
make[1]: Nothing to be done for `install'.
make[1]: Leaving directory `/home/tools/gpdb-5.15.1/gpAux/gpperfmon'
make -C gpAux/platform install
make[1]: Entering directory `/home/tools/gpdb-5.15.1/gpAux/platform'
make -C gpnetbench all
make[2]: Entering directory `/home/tools/gpdb-5.15.1/gpAux/platform/gpnetbench'
make[2]: Nothing to be done for `all'.
make[2]: Leaving directory `/home/tools/gpdb-5.15.1/gpAux/platform/gpnetbench'
make -C gpnetbench install
make[2]: Entering directory `/home/tools/gpdb-5.15.1/gpAux/platform/gpnetbench'
mkdir -p /home/gpsql/bin/lib
cp -p gpnetbenchServer /home/gpsql/bin/lib
cp -p gpnetbenchClient /home/gpsql/bin/lib
make[2]: Leaving directory `/home/tools/gpdb-5.15.1/gpAux/platform/gpnetbench'
make[1]: Leaving directory `/home/tools/gpdb-5.15.1/gpAux/platform'
Greenplum Database installation complete.

If the output ends with "Greenplum Database installation complete.", the installation succeeded.

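2.3 Distribution

Greenplum has so far been installed only on the master, so the installation now has to be sent to every segment host before the whole cluster can be considered installed.

First create a tar archive of the installation on the master, where /home/gpsql is the installation path.

[root@mdw1 gpsql]# chown -R gpadmin:gpadmin /home/gpsql/
[root@mdw1 gpadmin]# gtar -cvf /home/gpadmin/gp.tar /home/gpsql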

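The following steps connect all the nodes and send the installation archive to each of them.

On the master, as gpadmin, create the following text files. A conf directory can be created under the gpadmin home directory to hold these host lists, which are used for the key exchange.

[root@mdw1 conf]# vim /home/gpadmin/conf/all_hosts
mdw
sdw1
sdw2
(save and quit)
[root@mdw1 conf]# vim /home/gpadmin/conf/seg_hosts
sdw1
sdw2
(save and quit)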

greenplum_path.sh in the installation directory holds the environment variables needed to run Greenplum, including GPHOME and PYTHONHOME.

As gpadmin, source it (source /home/gpsql/greenplum_path.sh) so that it takes effect, then exchange keys with gpssh-exkeys:

[gpadmin@mdw gpsql]$ gpssh-exkeys -f /home/gpadmin/conf/all_hosts
[STEP 1 of 5] create local ID and authorize on local host
[STEP 2 of 5] keyscan all hosts and update known_hosts file
[STEP 3 of 5] authorize current user on remote hosts
  ... send to sdw1
  ***
  *** Enter password for sdw1:
  ... send to sdw2
[STEP 4 of 5] determine common authentication file content
[STEP 5 of 5] copy authentication files to all remote hosts
  ... finished key exchange with sdw1
  ... finished key exchange with sdw2
[INFO] completed successfully

Use gpscp to distribute the archive created earlier to the segment hosts listed in /home/gpadmin/conf/seg_hosts:

[gpadmin@mdw ~]$ gpscp -f /home/gpadmin/conf/seg_hosts /home/gpadmin/gp.tar =:/home/gpadmin/

Check that the archive arrived:

[gpadmin@sdw1 ~]$ ll
total 96780
-rw-r--r--. 1 gpadmin gpadmin 99102720 Apr 26 15:18 gp.tar

[gpadmin@swd2 ~]$ ll
total 96780
-rw-r--r--. 1 gpadmin gpadmin 99102720 Apr 26 15:18 gp.tar

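When connecting to the nodes with gpssh, each command should produce one line of output per host listed in all_hosts:

[gpadmin@mdw ~]$ gpssh -f /home/gpadmin/conf/all_hosts
=> pwd
[ mdw] /home/gpadmin
[sdw2] /home/gpadmin
[sdw1] /home/gpadmin
=>

Unpack the archive distributed earlier:

=> gtar -xvf gp.tar

Note that tar strips the leading / from member names when it creates the archive, so unpacking in /home/gpadmin yields /home/gpadmin/home/gpsql. For greenplum_path.sh to be found at /home/gpsql on every host, as section 3.1 assumes, the tree probably has to be extracted with -C / or moved there afterwards, which needs write access to /home.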

Finally, create the database working directories:

=> pwd
[ mdw] /home/gpadmin
[sdw2] /home/gpadmin
[sdw1] /home/gpadmin
=> mkdir gpdata
[ mdw]
[sdw2]
[sdw1]
=> cd gpdata
[ mdw]
[sdw2]
[sdw1]
=> mkdir gpdatap1 gpdatap2 gpdatam1 gpdatam2 gpmaster
[ mdw]
[sdw2]
[sdw1]
=> ll
[ mdw] total 20
[ mdw] drwxrwxr-x 2 gpadmin gpadmin 4096 Apr 26 15:30 gpdatam1
[ mdw] drwxrwxr-x 2 gpadmin gpadmin 4096 Apr 26 15:30 gpdatam2
[ mdw] drwxrwxr-x 2 gpadmin gpadmin 4096 Apr 26 15:30 gpdatap1
[ mdw] drwxrwxr-x 2 gpadmin gpadmin 4096 Apr 26 15:30 gpdatap2
[ mdw] drwxrwxr-x 2 gpadmin gpadmin 4096 Apr 26 15:30 gpmaster
[sdw2] total 20
[sdw2] drwxrwxr-x. 2 gpadmin gpadmin 4096 Apr 26 15:30 gpdatam1
[sdw2] drwxrwxr-x. 2 gpadmin gpadmin 4096 Apr 26 15:30 gpdatam2
[sdw2] drwxrwxr-x. 2 gpadmin gpadmin 4096 Apr 26 15:30 gpdatap1
[sdw2] drwxrwxr-x. 2 gpadmin gpadmin 4096 Apr 26 15:30 gpdatap2
[sdw2] drwxrwxr-x. 2 gpadmin gpadmin 4096 Apr 26 15:30 gpmaster
[sdw1] total 20
[sdw1] drwxrwxr-x. 2 gpadmin gpadmin 4096 Apr 26 15:30 gpdatam1
[sdw1] drwxrwxr-x. 2 gpadmin gpadmin 4096 Apr 26 15:30 gpdatam2
[sdw1] drwxrwxr-x. 2 gpadmin gpadmin 4096 Apr 26 15:30 gpdatap1
[sdw1] drwxrwxr-x. 2 gpadmin gpadmin 4096 Apr 26 15:30 gpdatap2
[sdw1] drwxrwxr-x. 2 gpadmin gpadmin 4096 Apr 26 15:30 gpmaster
=> exit
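The interactive session above can also be expressed as a repeatable command. A minimal sketch that creates the same five directories; make_gp_dirs is a hypothetical helper name, and the default base path is the gpdata directory used above:

```shell
# Create the primary, mirror and master directories under BASE.
# mkdir -p makes the call safe to repeat.
make_gp_dirs() {
    local base=${1:-/home/gpadmin/gpdata} d
    for d in gpdatap1 gpdatap2 gpdatam1 gpdatam2 gpmaster; do
        mkdir -p "$base/$d" || return 1
    done
}

# Usage on one host (as gpadmin):
#   make_gp_dirs /home/gpadmin/gpdata
# Or on all hosts in a single non-interactive gpssh call:
#   gpssh -f /home/gpadmin/conf/all_hosts 'mkdir -p /home/gpadmin/gpdata/{gpdatap1,gpdatap2,gpdatam1,gpdatam2,gpmaster}'
```

The gpssh variant relies on the remote login shell being bash, since it uses brace expansion.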

III. Initializing and Creating the Database

3.1 Set environment variables in .bash_profile (on every host)

if [ -f ~/.bashrc ]; then
    . ~/.bashrc
fi
source /home/gpsql/greenplum_path.sh
export MASTER_DATA_DIRECTORY=/home/gpadmin/gpdata/gpmaster/gpseg-1
export PGPORT=2346

Apply the environment variables:

[gpadmin@mdw ~]$ . ~/.bash_profile

3.2 Synchronize the clocks

As gpadmin:

Check the clocks:

[gpadmin@mdw ~]$ gpssh -f /home/gpadmin/conf/all_hosts -v date
[WARN] Reference default values as $MASTER_DATA_DIRECTORY/gpssh.conf could not be found
Using delaybeforesend 0.05 and prompt_validation_timeout 1.0
[Reset ...]
[INFO] login mdw
[INFO] login sdw2
[INFO] login sdw1
[ mdw] Fri Apr 26 15:51:13 CST 2019
[sdw2] Fri Apr 26 15:51:13 CST 2019
[sdw1] Fri Apr 26 15:51:16 CST 2019
[INFO] completed successfully
[Cleanup...]

Synchronize:

[gpadmin@mdw ~]$ gpssh -f /home/gpadmin/conf/all_hosts -v ntpd
[WARN] Reference default values as $MASTER_DATA_DIRECTORY/gpssh.conf could not be found
Using delaybeforesend 0.05 and prompt_validation_timeout 1.0
[Reset ...]
[INFO] login mdw
[INFO] login sdw1
[INFO] login sdw2
[ mdw] must be run as root, not uid 1001
[sdw1] must be run as root, not uid 1001
[sdw2] must be run as root, not uid 1001
[INFO] completed successfully

As the output shows, ntpd refuses to start for gpadmin. It has to be started as root on each host, for example with systemctl start ntpd.
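The date output above has to be compared by eye. A rough sketch of an automated check, assuming gpssh prefixes each result line with the host name in brackets as in the output above; max_clock_skew is a hypothetical helper name, and the result includes whatever delay gpssh itself adds between hosts:

```shell
# Read gpssh output of `date +%s` on stdin and print the spread
# (max - min) of the reported epoch seconds across hosts.
# Lines whose last field is not a plain number are ignored.
max_clock_skew() {
    tr -d '\r' | awk '$NF ~ /^[0-9]+$/ {
             t = $NF + 0
             if (n == 0 || t < min) min = t
             if (n == 0 || t > max) max = t
             n++
         }
         END { if (n == 0) exit 1; print max - min }'
}

# Usage (as gpadmin):
#   gpssh -f /home/gpadmin/conf/all_hosts 'date +%s' | max_clock_skew
```

For the three timestamps shown above (15:51:13, 15:51:13 and 15:51:16) the spread is 3 seconds.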

3.3 Write the database initialization parameter file

Copy /home/gpsql/docs/cli_help/gpconfigs/gpinitsystem_config from the installation directory to /home/gpadmin/conf, then edit it, keeping only the following parameters:

# vi /home/gpadmin/conf/gpinitsystem_config

ARRAY_NAME="Greenplum Data Platform"
SEG_PREFIX=gpseg
PORT_BASE=42000
declare -a DATA_DIRECTORY=(/home/gpadmin/gpdata/gpdatap1 /home/gpadmin/gpdata/gpdatap2)
MASTER_HOSTNAME=mdw
MASTER_DIRECTORY=/home/gpadmin/gpdata/gpmaster
MASTER_PORT=2346
TRUSTED_SHELL=/usr/bin/ssh
CHECK_POINT_SEGMENTS=8
ENCODING=UNICODE

#### Optional parameters, for mirrors
MIRROR_PORT_BASE=53000
REPLICATION_PORT_BASE=43000
MIRROR_REPLICATION_PORT_BASE=54000
declare -a MIRROR_DATA_DIRECTORY=(/home/gpadmin/gpdata/gpdatam1 /home/gpadmin/gpdata/gpdatam2)
MACHINE_LIST_FILE=/home/gpadmin/conf/seg_hosts
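A mistyped directory in this file only surfaces late in gpinitsystem. A minimal sketch of a pre-flight check, assuming the config keeps the bash syntax shown above; check_gp_dirs is a hypothetical helper name, it only inspects the local host, and because it sources the file it should only be run on a config you trust:

```shell
# Source a gpinitsystem config under bash (it uses bash array syntax) and
# report every configured directory that is missing or not writable locally.
check_gp_dirs() {
    bash -c '
        . "$1" || exit 2
        rc=0
        for d in "$MASTER_DIRECTORY" "${DATA_DIRECTORY[@]}" "${MIRROR_DATA_DIRECTORY[@]}"; do
            [ -n "$d" ] || continue
            if [ ! -d "$d" ] || [ ! -w "$d" ]; then
                echo "missing or not writable: $d" >&2
                rc=1
            fi
        done
        exit "$rc"
    ' check_gp_dirs "$1"
}

# Usage (as gpadmin, on each host):
#   check_gp_dirs /home/gpadmin/conf/gpinitsystem_config
```

The function returns non-zero if any directory fails the check, so it can gate a script that goes on to call gpinitsystem.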

3.4 Initialization

[gpadmin@mdw ~]$ gpinitsystem -c /home/gpadmin/conf/gpinitsystem_config -a
20190429:15:23:32:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Checking configuration parameters, please wait...
/bin/mv: try to overwrite '/home/gpadmin/conf/gpinitsystem_config', overriding mode 0644 (rw-r--r--)?
20190429:15:23:33:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Reading Greenplum configuration file /home/gpadmin/conf/gpinitsystem_config
20190429:15:23:33:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Locale has not been set in /home/gpadmin/conf/gpinitsystem_config, will set to default value
20190429:15:23:33:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Locale set to en_US.utf8
20190429:15:23:33:096604 gpinitsystem:mdw:gpadmin-[INFO]:-No DATABASE_NAME set, will exit following template1 updates
20190429:15:23:33:096604 gpinitsystem:mdw:gpadmin-[INFO]:-MASTER_MAX_CONNECT not set, will set to default value 250
20190429:15:23:33:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Checking configuration parameters, Completed
20190429:15:23:33:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Commencing multi-home checks, please wait...
...
20190429:15:23:34:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Configuring build for standard array
20190429:15:23:34:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Commencing multi-home checks, Completed
20190429:15:23:34:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Building primary segment instance array, please wait...
......
20190429:15:23:39:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Building group mirror array type , please wait...
......
20190429:15:23:43:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Checking Master host
20190429:15:23:43:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Checking new segment hosts, please wait...
............
20190429:15:24:02:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Checking new segment hosts, Completed
20190429:15:24:02:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Building the Master instance database, please wait...
20190429:15:24:18:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Starting the Master in admin mode
20190429:15:24:27:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Commencing parallel build of primary segment instances
20190429:15:24:27:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Spawning parallel processes batch [1], please wait...
......
20190429:15:24:27:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Waiting for parallel processes batch [1], please wait...
.........................................................
20190429:15:25:27:096604 gpinitsystem:mdw:gpadmin-[INFO]:------------------------------------------------
20190429:15:25:27:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Parallel process exit status
20190429:15:25:27:096604 gpinitsystem:mdw:gpadmin-[INFO]:------------------------------------------------
20190429:15:25:27:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Total processes marked as completed = 6
20190429:15:25:27:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Total processes marked as killed = 0
20190429:15:25:27:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Total processes marked as failed = 0
20190429:15:25:27:096604 gpinitsystem:mdw:gpadmin-[INFO]:------------------------------------------------
20190429:15:25:27:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Commencing parallel build of mirror segment instances
20190429:15:25:28:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Spawning parallel processes batch [1], please wait...
......
20190429:15:25:28:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Waiting for parallel processes batch [1], please wait...
..........................
20190429:15:25:54:096604 gpinitsystem:mdw:gpadmin-[INFO]:------------------------------------------------
20190429:15:25:54:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Parallel process exit status
20190429:15:25:54:096604 gpinitsystem:mdw:gpadmin-[INFO]:------------------------------------------------
20190429:15:25:54:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Total processes marked as completed = 6
20190429:15:25:54:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Total processes marked as killed = 0
20190429:15:25:54:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Total processes marked as failed = 0
20190429:15:25:54:096604 gpinitsystem:mdw:gpadmin-[INFO]:------------------------------------------------
20190429:15:25:55:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Deleting distributed backout files
20190429:15:25:55:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Removing back out file
20190429:15:25:55:096604 gpinitsystem:mdw:gpadmin-[INFO]:-No errors generated from parallel processes
20190429:15:25:55:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Restarting the Greenplum instance in production mode
20190429:15:25:55:106448 gpstop:mdw:gpadmin-[INFO]:-Starting gpstop with args: -a -l /home/gpadmin/gpAdminLogs -i -m -d /home/gpadmin/gpdata/gpmaster/gpseg-1
20190429:15:25:55:106448 gpstop:mdw:gpadmin-[INFO]:-Gathering information and validating the environment...
20190429:15:25:55:106448 gpstop:mdw:gpadmin-[INFO]:-Obtaining Greenplum Master catalog information
20190429:15:25:55:106448 gpstop:mdw:gpadmin-[INFO]:-Obtaining Segment details from master...
20190429:15:25:55:106448 gpstop:mdw:gpadmin-[INFO]:-Greenplum Version: 'postgres (Greenplum Database) 5.0.0 build dev'
20190429:15:25:55:106448 gpstop:mdw:gpadmin-[INFO]:-There are 0 connections to the database
20190429:15:25:55:106448 gpstop:mdw:gpadmin-[INFO]:-Commencing Master instance shutdown with mode='immediate'
20190429:15:25:55:106448 gpstop:mdw:gpadmin-[INFO]:-Master host=mdw
20190429:15:25:55:106448 gpstop:mdw:gpadmin-[INFO]:-Commencing Master instance shutdown with mode=immediate
20190429:15:25:55:106448 gpstop:mdw:gpadmin-[INFO]:-Master segment instance directory=/home/gpadmin/gpdata/gpmaster/gpseg-1
20190429:15:25:56:106448 gpstop:mdw:gpadmin-[INFO]:-Attempting forceful termination of any leftover master process
20190429:15:25:56:106448 gpstop:mdw:gpadmin-[INFO]:-Terminating processes for segment /home/gpadmin/gpdata/gpmaster/gpseg-1
20190429:15:25:57:106476 gpstart:mdw:gpadmin-[INFO]:-Starting gpstart with args: -a -l /home/gpadmin/gpAdminLogs -d /home/gpadmin/gpdata/gpmaster/gpseg-1
20190429:15:25:57:106476 gpstart:mdw:gpadmin-[INFO]:-Gathering information and validating the environment...
20190429:15:25:57:106476 gpstart:mdw:gpadmin-[INFO]:-Greenplum Binary Version: 'postgres (Greenplum Database) 5.0.0 build dev'
20190429:15:25:57:106476 gpstart:mdw:gpadmin-[INFO]:-Greenplum Catalog Version: '301705051'
20190429:15:25:57:106476 gpstart:mdw:gpadmin-[INFO]:-Starting Master instance in admin mode
20190429:15:25:58:106476 gpstart:mdw:gpadmin-[INFO]:-Obtaining Greenplum Master catalog information
20190429:15:25:58:106476 gpstart:mdw:gpadmin-[INFO]:-Obtaining Segment details from master...
20190429:15:25:58:106476 gpstart:mdw:gpadmin-[INFO]:-Setting new master era
20190429:15:25:58:106476 gpstart:mdw:gpadmin-[INFO]:-Master Started...
20190429:15:25:58:106476 gpstart:mdw:gpadmin-[INFO]:-Shutting down master
20190429:15:26:00:106476 gpstart:mdw:gpadmin-[INFO]:-Commencing parallel primary and mirror segment instance startup, please wait...
......
20190429:15:26:06:106476 gpstart:mdw:gpadmin-[INFO]:-Process results...
20190429:15:26:06:106476 gpstart:mdw:gpadmin-[INFO]:-----------------------------------------------------
20190429:15:26:06:106476 gpstart:mdw:gpadmin-[INFO]:- Successful segment starts = 12
20190429:15:26:06:106476 gpstart:mdw:gpadmin-[INFO]:- Failed segment starts = 0
20190429:15:26:06:106476 gpstart:mdw:gpadmin-[INFO]:- Skipped segment starts (segments are marked down in configuration) = 0
20190429:15:26:06:106476 gpstart:mdw:gpadmin-[INFO]:-----------------------------------------------------
20190429:15:26:06:106476 gpstart:mdw:gpadmin-[INFO]:-Successfully started 12 of 12 segment instances
20190429:15:26:06:106476 gpstart:mdw:gpadmin-[INFO]:-----------------------------------------------------
20190429:15:26:06:106476 gpstart:mdw:gpadmin-[INFO]:-Starting Master instance mdw directory /home/gpadmin/gpdata/gpmaster/gpseg-1
20190429:15:26:07:106476 gpstart:mdw:gpadmin-[INFO]:-Command pg_ctl reports Master mdw instance active
20190429:15:26:07:106476 gpstart:mdw:gpadmin-[INFO]:-No standby master configured. skipping...
20190429:15:26:07:106476 gpstart:mdw:gpadmin-[INFO]:-Database successfully started
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Completed restart of Greenplum instance in production mode
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Scanning utility log file for any warning messages
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Log file scan check passed
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Greenplum Database instance successfully created
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:-------------------------------------------------------
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:-To complete the environment configuration, please
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:-update gpadmin .bashrc file with the following
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:-1. Ensure that the greenplum_path.sh file is sourced
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:-2. Add "export MASTER_DATA_DIRECTORY=/home/gpadmin/gpdata/gpmaster/gpseg-1"
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:- to access the Greenplum scripts for this instance:
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:- or, use -d /home/gpadmin/gpdata/gpmaster/gpseg-1 option for the Greenplum scripts
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:- Example gpstate -d /home/gpadmin/gpdata/gpmaster/gpseg-1
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Script log file = /home/gpadmin/gpAdminLogs/gpinitsystem_20190429.log
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:-To remove instance, run gpdeletesystem utility
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:-To initialize a Standby Master Segment for this Greenplum instance
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Review options for gpinitstandby
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:-------------------------------------------------------
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:-The Master /home/gpadmin/gpdata/gpmaster/gpseg-1/pg_hba.conf post gpinitsystem
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:-has been configured to allow all hosts within this new
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:-array to intercommunicate. Any hosts external to this
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:-new array must be explicitly added to this file
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:-Refer to the Greenplum Admin support guide which is
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:-located in the /home/gpsql/docs directory
20190429:15:26:07:096604 gpinitsystem:mdw:gpadmin-[INFO]:-----------------

If everything went well, the segment configuration looks like this (the columns match those of the gp_segment_configuration catalog table, queried from psql):

psql (8.3.23)
Type "help" for help.

 dbid | content | role | preferred_role | mode | status | port  | hostname | address | replication_port
------+---------+------+----------------+------+--------+-------+----------+---------+------------------
    1 |      -1 | p    | p              | s    | u      |  2346 | mdw      | mdw     |
    2 |       0 | p    | p              | s    | u      | 42000 | mdw      | mdw     |            43000
    4 |       2 | p    | p              | s    | u      | 42000 | sdw1     | sdw1    |            43000
    6 |       4 | p    | p              | s    | u      | 42000 | sdw2     | sdw2    |            43000
    3 |       1 | p    | p              | s    | u      | 42001 | mdw      | mdw     |            43001
    5 |       3 | p    | p              | s    | u      | 42001 | sdw1     | sdw1    |            43001
    7 |       5 | p    | p              | s    | u      | 42001 | sdw2     | sdw2    |            43001
    8 |       0 | m    | m              | s    | u      | 53000 | sdw1     | sdw1    |            54000
    9 |       1 | m    | m              | s    | u      | 53001 | sdw1     | sdw1    |            54001
   10 |       2 | m    | m              | s    | u      | 53000 | sdw2     | sdw2    |            54000
   11 |       3 | m    | m              | s    | u      | 53001 | sdw2     | sdw2    |            54001
   12 |       4 | m    | m              | s    | u      | 53000 | mdw      | mdw     |            54000
   13 |       5 | m    | m              | s    | u      | 53001 | mdw      | mdw     |            54001
(13 rows)

The problems encountered during setup are recorded below.

Error 1:

[gpadmin@mdw ~]$ gpinitsystem -c /home/gpadmin/conf/gpinitsystem_config -a
20190426:16:24:59:049694 gpinitsystem:mdw:gpadmin-[INFO]:-Checking configuration parameters, please wait...
/bin/mv: try to overwrite '/home/gpadmin/conf/gpinitsystem_config', overriding mode 0644 (rw-r--r--)?
20190426:16:25:05:049694 gpinitsystem:mdw:gpadmin-[INFO]:-Reading Greenplum configuration file /home/gpadmin/conf/gpinitsystem_config
20190426:16:25:06:049694 gpinitsystem:mdw:gpadmin-[INFO]:-Locale has not been set in /home/gpadmin/conf/gpinitsystem_config, will set to default value
20190426:16:25:06:049694 gpinitsystem:mdw:gpadmin-[INFO]:-Locale set to en_US.utf8
20190426:16:25:06:049694 gpinitsystem:mdw:gpadmin-[INFO]:-No DATABASE_NAME set, will exit following template1 updates
20190426:16:25:06:049694 gpinitsystem:mdw:gpadmin-[INFO]:-MASTER_MAX_CONNECT not set, will set to default value 250
20190426:16:25:06:049694 gpinitsystem:mdw:gpadmin-[INFO]:-Checking configuration parameters, Completed
20190426:16:25:06:049694 gpinitsystem:mdw:gpadmin-[INFO]:-Commencing multi-home checks, please wait...
..
20190426:16:25:06:049694 gpinitsystem:mdw:gpadmin-[INFO]:-Configuring build for standard array
20190426:16:25:06:049694 gpinitsystem:mdw:gpadmin-[INFO]:-Commencing multi-home checks, Completed
20190426:16:25:06:049694 gpinitsystem:mdw:gpadmin-[INFO]:-Building primary segment instance array, please wait...
....
20190426:16:25:10:049694 gpinitsystem:mdw:gpadmin-[INFO]:-Building group mirror array type , please wait...
....
20190426:16:25:14:049694 gpinitsystem:mdw:gpadmin-[INFO]:-Checking Master host
20190426:16:25:14:049694 gpinitsystem:mdw:gpadmin-[INFO]:-Checking new segment hosts, please wait...
........
20190426:16:25:31:049694 gpinitsystem:mdw:gpadmin-[INFO]:-Checking new segment hosts, Completed
20190426:16:25:31:049694 gpinitsystem:mdw:gpadmin-[INFO]:-Building the Master instance database, please wait...
20190426:16:25:46:049694 gpinitsystem:mdw:gpadmin-[INFO]:-Starting the Master in admin mode
20190426:16:25:54:gpinitsystem:mdw:gpadmin-[FATAL]:-Unknown host swd2 Script Exiting!
20190426:16:25:54:049694 gpinitsystem:mdw:gpadmin-[WARN]:-Script has left Greenplum Database in an incomplete state
20190426:16:25:54:049694 gpinitsystem:mdw:gpadmin-[WARN]:-Run command /bin/bash /home/gpadmin/gpAdminLogs/backout_gpinitsystem_gpadmin_20190426_162459 to remove these changes
20190426:16:25:54:049694 gpinitsystem:mdw:gpadmin-[INFO]:-Start Function BACKOUT_COMMAND
20190426:16:25:54:049694 gpinitsystem:mdw:gpadmin-[INFO]:-End Function BACKOUT_COMMAND

Solution:

Check that the hostname of every node is correct. Here the hostname of sdw2 had been written as swd2. After correcting it, run the initialization again.
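A typo like swd2 can be caught before running gpinitsystem by comparing the host list against the hosts file. A minimal sketch, assuming one hostname per line in the list and the "IP NAME" layout used in /etc/hosts earlier; unmapped_hosts is a hypothetical helper name, and it only checks the names in the list, not the hostname each machine reports for itself:

```shell
# Print every name from a host list that has no entry in a hosts file.
unmapped_hosts() {
    local list=$1 hosts=${2:-/etc/hosts} name
    while read -r name; do
        [ -n "$name" ] || continue
        # Succeeds if NAME appears as a hostname field on a non-comment line.
        awk -v n="$name" '
            !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$hosts" || echo "$name"
    done < "$list"
}

# Usage (as gpadmin):
#   unmapped_hosts /home/gpadmin/conf/all_hosts /etc/hosts
```

Empty output means every listed host is mapped; any name printed is worth checking for a spelling mistake.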

Error 2:

[gpadmin@mdw gpmaster]$ gpinitsystem -c /home/gpadmin/conf/gpinitsystem_config -a
20190426:16:35:24:051899 gpinitsystem:mdw:gpadmin-[INFO]:-Checking configuration parameters, please wait...
/bin/mv: try to overwrite '/home/gpadmin/conf/gpinitsystem_config', overriding mode 0644 (rw-r--r--)? y
/bin/mv: cannot move '/tmp/cluster_tmp_file.51899' to '/home/gpadmin/conf/gpinitsystem_config': Permission denied
20190426:16:35:26:051899 gpinitsystem:mdw:gpadmin-[INFO]:-Reading Greenplum configuration file /home/gpadmin/conf/gpinitsystem_config
20190426:16:35:26:051899 gpinitsystem:mdw:gpadmin-[INFO]:-Locale has not been set in /home/gpadmin/conf/gpinitsystem_config, will set to default value
20190426:16:35:26:051899 gpinitsystem:mdw:gpadmin-[INFO]:-Locale set to en_US.utf8
20190426:16:35:26:051899 gpinitsystem:mdw:gpadmin-[INFO]:-No DATABASE_NAME set, will exit following template1 updates
20190426:16:35:26:051899 gpinitsystem:mdw:gpadmin-[INFO]:-MASTER_MAX_CONNECT not set, will set to default value 250
20190426:16:35:26:051899 gpinitsystem:mdw:gpadmin-[INFO]:-Checking configuration parameters, Completed
20190426:16:35:26:051899 gpinitsystem:mdw:gpadmin-[INFO]:-Commencing multi-home checks, please wait...
..
20190426:16:35:27:051899 gpinitsystem:mdw:gpadmin-[INFO]:-Configuring build for standard array
20190426:16:35:27:051899 gpinitsystem:mdw:gpadmin-[INFO]:-Commencing multi-home checks, Completed
20190426:16:35:27:051899 gpinitsystem:mdw:gpadmin-[INFO]:-Building primary segment instance array, please wait...
....
20190426:16:35:30:051899 gpinitsystem:mdw:gpadmin-[INFO]:-Building group mirror array type , please wait...
....
20190426:16:35:34:051899 gpinitsystem:mdw:gpadmin-[INFO]:-Checking Master host
20190426:16:35:34:gpinitsystem:mdw:gpadmin-[FATAL]:-Found indication of postmaster process on port 2346 on Master host Script Exiting!

Solution: kill the process that is occupying port 2346.

First find the process:

[gpadmin@mdw ~]$ lsof -i:2346
COMMAND    PID    USER   FD   TYPE DEVICE SIZE/OFF NODE NAME
postgres 51235 gpadmin    3u  IPv4 111661      0t0  TCP *:redstorm_join (LISTEN)
postgres 51235 gpadmin    4u  IPv6 111662      0t0  TCP *:redstorm_join (LISTEN)

Then kill it:

[gpadmin@mdw ~]$ kill -9 51235

Error 3:

[gpadmin@mdw ~]$ gpinitsystem -c /home/gpadmin/conf/gpinitsystem_config -a
20190426:16:41:38:052895 gpinitsystem:mdw:gpadmin-[INFO]:-Checking configuration parameters, please wait...
/bin/mv: try to overwrite '/home/gpadmin/conf/gpinitsystem_config', overriding mode 0644 (rw-r--r--)? y
/bin/mv: cannot move '/tmp/cluster_tmp_file.52895' to '/home/gpadmin/conf/gpinitsystem_config': Permission denied
20190426:16:41:40:052895 gpinitsystem:mdw:gpadmin-[INFO]:-Reading Greenplum configuration file /home/gpadmin/conf/gpinitsystem_config
20190426:16:41:40:052895 gpinitsystem:mdw:gpadmin-[INFO]:-Locale has not been set in /home/gpadmin/conf/gpinitsystem_config, will set to default value
20190426:16:41:40:052895 gpinitsystem:mdw:gpadmin-[INFO]:-Locale set to en_US.utf8
20190426:16:41:40:052895 gpinitsystem:mdw:gpadmin-[INFO]:-No DATABASE_NAME set, will exit following template1 updates
20190426:16:41:40:052895 gpinitsystem:mdw:gpadmin-[INFO]:-MASTER_MAX_CONNECT not set, will set to default value 250
20190426:16:41:40:052895 gpinitsystem:mdw:gpadmin-[INFO]:-Checking configuration parameters, Completed
20190426:16:41:40:052895 gpinitsystem:mdw:gpadmin-[INFO]:-Commencing multi-home checks, please wait...
..
20190426:16:41:41:052895 gpinitsystem:mdw:gpadmin-[INFO]:-Configuring build for standard array
20190426:16:41:41:052895 gpinitsystem:mdw:gpadmin-[INFO]:-Commencing multi-home checks, Completed
20190426:16:41:41:052895 gpinitsystem:mdw:gpadmin-[INFO]:-Building primary segment instance array, please wait...
....
20190426:16:41:44:052895 gpinitsystem:mdw:gpadmin-[INFO]:-Building group mirror array type , please wait...
....
20190426:16:41:48:052895 gpinitsystem:mdw:gpadmin-[INFO]:-Checking Master host
20190426:16:41:48:052895 gpinitsystem:mdw:gpadmin-[WARN]:-Have lock file /tmp/.s.PGSQL.2346.lock but no process running on port 2346
20190426:16:41:48:gpinitsystem:mdw:gpadmin-[FATAL]:-Found indication of postmaster process on port 2346 on Master host Script Exiting!

Solution: delete the file /tmp/.s.PGSQL.2346.lock
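Deleting the lock file is only safe when nothing is actually listening on the port, which is the distinction between this error and the previous one. A minimal sketch that combines both checks, assuming lsof is available as in the previous error; remove_stale_lock, port_in_use and the LOCK_DIR override are hypothetical names introduced here:

```shell
# Succeeds if some process has a socket open on the given port.
port_in_use() {
    lsof -t -i:"$1" >/dev/null 2>&1
}

# Remove <LOCK_DIR>/.s.PGSQL.<port>.lock only when the port is free.
# LOCK_DIR defaults to /tmp, where the lock file in the log above lives.
remove_stale_lock() {
    local port=$1 lock="${LOCK_DIR:-/tmp}/.s.PGSQL.$1.lock"
    if port_in_use "$port"; then
        echo "port $port is still in use; not touching $lock" >&2
        return 1
    fi
    rm -f -- "$lock"
}

# Usage (as gpadmin, on the master):
#   remove_stale_lock 2346
```

If the function refuses to delete the file, the situation is the one described under Error 2 and the process has to be stopped first.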

Error 4:

20190429:14:58:52:095181 gpstart:mdw:gpadmin-[WARNING]:-Segment instance startup failures reported
20190429:14:58:52:095181 gpstart:mdw:gpadmin-[WARNING]:-Failed start 9 of 12 segment instances <<<<<<<<
20190429:14:58:52:095181 gpstart:mdw:gpadmin-[WARNING]:-Review /home/gpadmin/gpAdminLogs/gpstart_20190429.log
20190429:14:58:52:095181 gpstart:mdw:gpadmin-[INFO]:-----------------------------------------------------
20190429:14:58:52:095181 gpstart:mdw:gpadmin-[INFO]:-Commencing parallel segment instance shutdown, please wait...
...
20190429:14:58:57:095181 gpstart:mdw:gpadmin-[ERROR]:-gpstart error: Do not have enough valid segments to start the array.
20190429:14:58:57:085332 gpinitsystem:mdw:gpadmin-[WARN]:
20190429:14:58:57:085332 gpinitsystem:mdw:gpadmin-[WARN]:-Failed to start Greenplum instance; review gpstart output to
20190429:14:58:57:085332 gpinitsystem:mdw:gpadmin-[WARN]:- determine why gpstart failed and reinitialize cluster after resolving
20190429:14:58:57:085332 gpinitsystem:mdw:gpadmin-[WARN]:- issues. Not all initialization tasks have completed so the cluster
20190429:14:58:57:085332 gpinitsystem:mdw:gpadmin-[WARN]:- should not be used.
20190429:14:58:57:085332 gpinitsystem:mdw:gpadmin-[WARN]:-gpinitsystem will now try to stop the cluster
20190429:14:58:57:085332 gpinitsystem:mdw:gpadmin-[WARN]:
20190429:14:58:58:095823 gpstop:mdw:gpadmin-[INFO]:-Starting gpstop with args: -a -l /home/gpadmin/gpAdminLogs -i -d /home/gpadmin/gpdata/gpmaster/gpseg-1
20190429:14:58:58:095823 gpstop:mdw:gpadmin-[INFO]:-Gathering information and validating the environment...
20190429:14:58:58:095823 gpstop:mdw:gpadmin-[ERROR]:-gpstop error: postmaster.pid file does not exist. is Greenplum instance already stopped?

Error 5:

[gpadmin@gpmdw ~]$ gpstart
20130815:22:28:28:003675 gpstart:gpmaster:gpadmin-[INFO]:-Starting gpstart with args:
20130815:22:28:28:003675 gpstart:gpmaster:gpadmin-[INFO]:-Gathering information and validating the environment...
20130815:22:28:28:003675

gpstart failed. (Reason='[Errno 2] No such file or directory: '/home/gpadmin/gpadata/gpmaster/gpseg-1/postgresql.conf'') exiting...

Solution:

Add the following to .bash_profile. MASTER_DATA_DIRECTORY must point at the master directory that gpinitsystem actually created, which in this setup is /home/gpadmin/gpdata/gpmaster/gpseg-1; the gpadata spelling in the error message above does not exist.

export PATH
source /home/gpsql/greenplum_path.sh
export MASTER_DATA_DIRECTORY=/home/gpadmin/gpdata/gpmaster/gpseg-1
export PGPORT=2346
export PGDATABASE=testDB

Then apply it: . ~/.bash_profile
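The error above comes down to MASTER_DATA_DIRECTORY not containing a postgresql.conf, so the setting can be verified before gpstart is run. A minimal sketch; check_master_dir is a hypothetical helper name:

```shell
# Verify that the master data directory (argument, or the
# MASTER_DATA_DIRECTORY variable) contains a postgresql.conf.
check_master_dir() {
    local dir=${1:-$MASTER_DATA_DIRECTORY}
    if [ -z "$dir" ]; then
        echo "MASTER_DATA_DIRECTORY is not set" >&2
        return 1
    fi
    if [ ! -f "$dir/postgresql.conf" ]; then
        echo "no postgresql.conf under $dir; check the path for typos" >&2
        return 1
    fi
    echo "ok: $dir"
}

# Usage (as gpadmin, after sourcing ~/.bash_profile):
#   check_master_dir && gpstart
```

Chaining it in front of gpstart turns a path typo into a one-line message instead of a startup failure.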

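Note: a common problem is that some nodes simply refuse to start, and the log claims that some directory under gpdata does not exist, when in fact the wrong files were initialized in that directory. Try vi /home/gpadmin/.gphostcache to check whether the cached hosts are correct, and fix them if they are not. If gpssh-exkeys was run before the network file was edited, .gphostcache may have recorded a mapping between the old hostnames and the names in the host list, and Greenplum does not update this file afterwards. The data under gpdata is then initialized for the wrong nodes, so this is a major pitfall.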
