Learning Matminer

Matminer: example

Predicting elastic moduli with machine learning

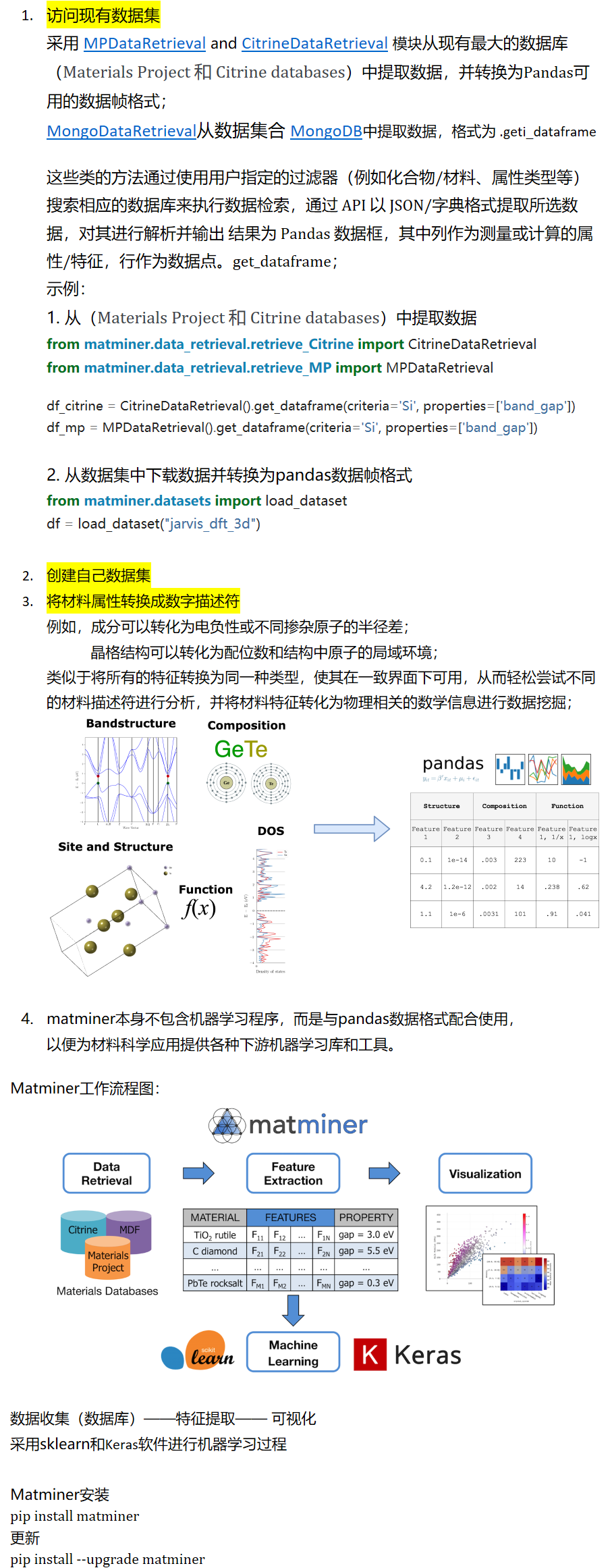

1. Load the elastic-constant dataset from matminer as a pandas DataFrame

from matminer.datasets.convenience_loaders import load_elastic_tensor

df = load_elastic_tensor()  # loads dataset in a pandas DataFrame object

2. Drop the columns that are not needed

unwanted_columns = ["volume", "nsites", "compliance_tensor", "elastic_tensor",
                    "elastic_tensor_original", "K_Voigt", "G_Voigt", "K_Reuss", "G_Reuss"]

df = df.drop(unwanted_columns, axis=1)

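3. Descriptors (featurization)

Decide on the outputs and inputs: the candidate outputs (targets) are K_VRH, G_VRH and elastic_anisotropy; the inputs are descriptors derived from the formula and the structure.

1) Composition features: input features that are not numeric must be converted into numeric descriptors

1. Convert the chemical formula into a pymatgen Composition object (a dict-like mapping from element to amount)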

from matminer.featurizers.conversions import StrToComposition

df = StrToComposition().featurize_dataframe(df, "formula")  # adds a "composition" column
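To make the idea of a composition object concrete, here is a minimal pure-Python sketch. It is not pymatgen's implementation: it only handles flat formulas (no parentheses or charges), and the function name `parse_simple_formula` is hypothetical. It shows roughly the information a Composition holds, namely the amount of each element, from which atomic fractions follow.

```python
import re
from collections import defaultdict


def parse_simple_formula(formula):
    """Parse a flat formula such as 'Fe2O3' into {element: amount}.

    Illustrative only: no parentheses, charges, or validation of symbols.
    """
    amounts = defaultdict(float)
    # An element symbol is one capital letter plus an optional lowercase letter,
    # followed by an optional (possibly fractional) count.
    for symbol, count in re.findall(r"([A-Z][a-z]?)(\d*\.?\d*)", formula):
        amounts[symbol] += float(count) if count else 1.0
    return dict(amounts)


composition = parse_simple_formula("Fe2O3")
total = sum(composition.values())
fractions = {el: amt / total for el, amt in composition.items()}
print(composition)  # {'Fe': 2.0, 'O': 3.0}
print(fractions)    # {'Fe': 0.4, 'O': 0.6}
```

In the real workflow, StrToComposition does this conversion (and much more robustly) for every row of the DataFrame.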

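2. Convert the element information in the formula and the structure into numeric descriptors such as electronegativity, magnetic moment, etc.

# Composition (formula) information

from matminer.featurizers.composition import ElementProperty

ep_feat = ElementProperty.from_preset(preset_name="magpie")

df = ep_feat.featurize_dataframe(df, col_id="composition")  # input the "composition" column to the featurizer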

from matminer.featurizers.conversions import CompositionToOxidComposition

from matminer.featurizers.composition import OxidationStates

df = CompositionToOxidComposition().featurize_dataframe(df, "composition")

os_feat = OxidationStates()

df = os_feat.featurize_dataframe(df, "composition_oxid")
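The ElementProperty featurizer turns per-element properties into statistics over the composition. The sketch below illustrates that idea for a single property, under some assumptions: the small electronegativity table and the function name `element_property_stats` are made up for this example, and only four statistics are computed. matminer's "magpie" preset covers many more properties and statistics and uses its own data source.

```python
# Pauling electronegativities for a few elements (small illustrative table).
ELECTRONEGATIVITY = {"Na": 0.93, "Cl": 3.16, "Fe": 1.83, "O": 3.44}


def element_property_stats(fractions, table):
    """Return min, max, range and fraction-weighted mean of an element property.

    `fractions` maps element symbol to atomic fraction and is assumed to sum to 1.
    """
    values = [table[el] for el in fractions]
    weighted_mean = sum(table[el] * frac for el, frac in fractions.items())
    return {
        "minimum": min(values),
        "maximum": max(values),
        "range": max(values) - min(values),
        "mean": weighted_mean,
    }


# Fe2O3: 40 % Fe and 60 % O by atom count.
stats = element_property_stats({"Fe": 0.4, "O": 0.6}, ELECTRONEGATIVITY)
for name, value in stats.items():
    print(f"{name}: {value:.3f}")
```

Each statistic becomes one numeric column, which is how a formula string ends up as a fixed-length feature vector.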

# Structure information: density, etc.

from matminer.featurizers.structure import DensityFeatures

df_feat = DensityFeatures()

df = df_feat.featurize_dataframe(df, "structure") # input the structure column to the featurizer
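As a rough illustration of what a density-type structural feature measures, the sketch below computes the mass density and the volume per atom by hand for one unit cell. This is not the matminer implementation. The function name `mass_density` is hypothetical, and the NaCl lattice parameter of about 5.64 angstrom is an assumed textbook-style value.

```python
AMU_TO_GRAM = 1.66053906660e-24   # grams per atomic mass unit
ANGSTROM3_TO_CM3 = 1e-24          # cubic centimetres per cubic angstrom


def mass_density(atomic_masses_amu, cell_volume_A3):
    """Density in g/cm^3 from the masses of all atoms in the cell and its volume."""
    mass_g = sum(atomic_masses_amu) * AMU_TO_GRAM
    return mass_g / (cell_volume_A3 * ANGSTROM3_TO_CM3)


# Conventional rock-salt cell of NaCl: 4 Na + 4 Cl atoms, cubic with a = 5.64 A (assumed).
masses = [22.990] * 4 + [35.453] * 4
volume = 5.64 ** 3
density = mass_density(masses, volume)
volume_per_atom = volume / len(masses)
print(f"density = {density:.2f} g/cm^3, volume per atom = {volume_per_atom:.2f} A^3")
```

DensityFeatures derives quantities of this kind directly from the pymatgen Structure stored in the "structure" column.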

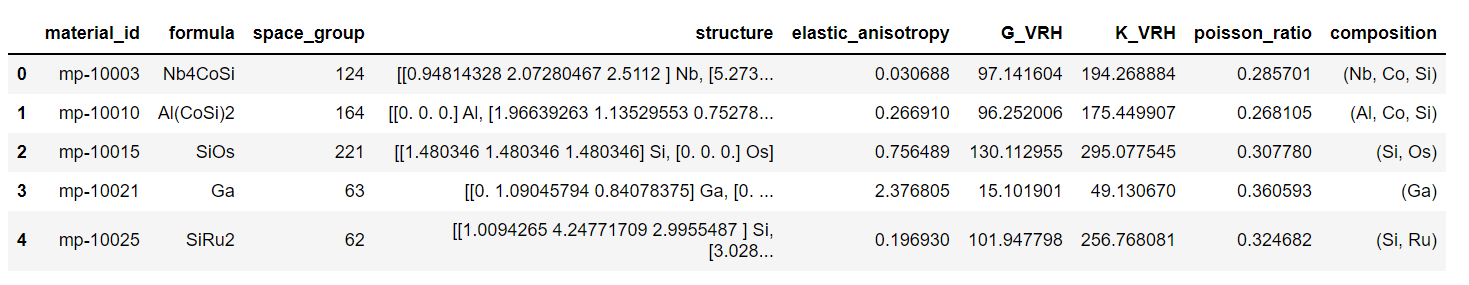

4. Predict the bulk modulus with linear regression and random forest models

1) Define the input and output

y = df['K_VRH'].values

excluded = ["G_VRH", "K_VRH", "elastic_anisotropy", "formula", "material_id",
            "poisson_ratio", "structure", "composition", "composition_oxid"]

X = df.drop(excluded, axis=1)

print("There are {} possible descriptors:\n\n{}".format(X.shape[1], X.columns.values))

2) Fit a linear regression model

from sklearn.linear_model import LinearRegression

from sklearn.metrics import mean_squared_error

import numpy as np

lr = LinearRegression()

lr.fit(X, y)

# get fit statistics

print('training R2 = ' + str(round(lr.score(X, y), 3)))

print('training RMSE = %.3f' % np.sqrt(mean_squared_error(y_true=y, y_pred=lr.predict(X))))

training R2 = 0.927

training RMSE = 19.625
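For reference, the two statistics printed above can be written out by hand. The sketch below uses made-up toy values, not data from the elastic dataset, and the function names are chosen for this example. Note that scores computed on the training data measure how well the model fits, not how well it predicts unseen materials.

```python
import math


def rmse(y_true, y_pred):
    """Root-mean-square error: sqrt(mean((y - y_hat)^2))."""
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Toy values in GPa, made up for illustration only.
y_true = [100.0, 150.0, 200.0, 250.0]
y_pred = [110.0, 140.0, 205.0, 245.0]
print("RMSE = %.3f" % rmse(y_true, y_pred))    # RMSE = 7.906
print("R2 = %.3f" % r_squared(y_true, y_pred))  # R2 = 0.980
```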

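3) Cross-validate the linear regression model to check for overfitting

The basic idea of cross-validation: split the dataset into k equal parts; in each round, k-1 parts are used as the training set and the remaining part as the test set, so every part serves as the test set exactly once.

Cross-validation has two steps: first split the data into training and test sets, then evaluate the model on those splits.

Splitting the dataset: k-fold cross-validation (KFold):

KFold(n_splits, shuffle, random_state). Parameters: n_splits is the number of folds; shuffle sets whether the data are shuffled before splitting; random_state is the random seed, which only takes effect when shuffle=True.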
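The splitting step can be sketched in a few lines of pure Python. This is an illustration of the k-fold idea, not scikit-learn's implementation: the function name `k_fold_indices` is hypothetical, and for the same seed it will not reproduce the exact folds that KFold generates.

```python
import random


def k_fold_indices(n_samples, n_splits, shuffle=True, seed=1):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    if shuffle:
        random.Random(seed).shuffle(indices)
    # Spread the remainder over the first folds so fold sizes differ by at most one.
    fold_sizes = [n_samples // n_splits + (1 if i < n_samples % n_splits else 0)
                  for i in range(n_splits)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


folds = list(k_fold_indices(n_samples=10, n_splits=5))
for i, (train, test) in enumerate(folds):
    print(f"fold {i}: train={sorted(train)} test={sorted(test)}")
```

Every sample lands in exactly one test fold, which is what lets the averaged fold scores stand in for performance on unseen data.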

Cross-validating the model:

cross_val_score, cross_validate, cross_val_predict

(cross_val_predict is called in the same way as cross_val_score, but it returns the cross-validated prediction for each sample rather than a score.)
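The difference between the two functions is easiest to see from the shapes of what they return. The sketch below uses synthetic data made up for this example (the names `X_demo` and `y_demo` are not part of the elastic dataset) and assumes numpy and scikit-learn are installed.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_predict, cross_val_score

# Synthetic data (made up): y depends linearly on two features plus a little noise.
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(100, 2))
y_demo = 3.0 * X_demo[:, 0] - 2.0 * X_demo[:, 1] + rng.normal(scale=0.1, size=100)

cv = KFold(n_splits=5, shuffle=True, random_state=1)
model = LinearRegression()

# cross_val_score: one score per fold (here R2).
fold_scores = cross_val_score(model, X_demo, y_demo, scoring="r2", cv=cv)
# cross_val_predict: one out-of-fold prediction per sample.
oof_predictions = cross_val_predict(model, X_demo, y_demo, cv=cv)

print(fold_scores.shape)      # (5,)
print(oof_predictions.shape)  # (100,)
```

The per-sample predictions are what a parity plot of predicted against reference values needs, while the per-fold scores are what gets averaged into a single number.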

from sklearn.model_selection import KFold, cross_val_score

# Use 10-fold cross validation (90% training, 10% test)

crossvalidation = KFold(n_splits=10, shuffle=True, random_state=1)

scores = cross_val_score(lr, X, y, scoring='neg_mean_squared_error', cv=crossvalidation, n_jobs=1)

rmse_scores = [np.sqrt(abs(s)) for s in scores]

r2_scores = cross_val_score(lr, X, y, scoring='r2', cv=crossvalidation, n_jobs=1)

print('Cross-validation results:')

# Average the raw fold scores; wrapping R2 in abs() would hide a negative (worse-than-baseline) fold.

print('Folds: %i, mean R2: %.3f' % (len(scores), np.mean(r2_scores)))

print('Folds: %i, mean RMSE: %.3f' % (len(scores), np.mean(rmse_scores)))

Cross-validation results:

Folds: 10, mean R2: 0.902

Folds: 10, mean RMSE: 22.467
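The same cross-validation recipe carries over to a random forest. The sketch below is a hedged illustration on synthetic data, not a result for the elastic dataset: the data are deliberately nonlinear, the names `X_demo`, `y_demo` and `cv_rmse` are made up, and `n_estimators=50` is an arbitrary choice. It also shows why the scores are negated: scikit-learn scorers follow a "greater is better" convention, so the mean squared error is reported with a minus sign.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_score

# Synthetic nonlinear data (made up): y depends on the square of the first feature.
rng = np.random.default_rng(0)
X_demo = rng.uniform(-2.0, 2.0, size=(200, 2))
y_demo = X_demo[:, 0] ** 2 + rng.normal(scale=0.05, size=200)

cv = KFold(n_splits=10, shuffle=True, random_state=1)


def cv_rmse(model):
    """Mean RMSE over the folds, computed from sklearn's negated MSE scores."""
    neg_mse = cross_val_score(model, X_demo, y_demo,
                              scoring="neg_mean_squared_error", cv=cv)
    return float(np.mean(np.sqrt(-neg_mse)))


lr_rmse = cv_rmse(LinearRegression())
rf_rmse = cv_rmse(RandomForestRegressor(n_estimators=50, random_state=1))
print(f"linear regression CV RMSE: {lr_rmse:.3f}")
print(f"random forest CV RMSE:     {rf_rmse:.3f}")
```

On the real descriptors, swapping `LinearRegression()` for a `RandomForestRegressor` in the cross-validation call above is all that changes; which model does better there has to be checked on the actual data.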

5. Plotting

from figrecipes import PlotlyFig

from sklearn.model_selection import cross_val_predict

pf = PlotlyFig(x_title='DFT (MP) bulk modulus (GPa)',
               y_title='Predicted bulk modulus (GPa)',
               title='Linear regression',
               mode='notebook',
               filename="lr_regression.html")

pf.xy(xy_pairs=[(y, cross_val_predict(lr, X, y, cv=crossvalidation)), ([0, 400], [0, 400])],
      labels=df['formula'],
      modes=['markers', 'lines'],
      lines=[{}, {'color': 'black', 'dash': 'dash'}],
      showlegends=False
      )

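Summary of the machine-learning prediction workflow:

1. Use matminer to preprocess the data and generate the input features;

2. Predict with machine learning: choose a model and cross-validate it.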

References:

使用sklearn进行交叉验证 (Cross-validation with sklearn), 小舔哥, 博客园 (cnblogs.com)

K折交叉验证的使用之KFold和split函数 (Using k-fold cross-validation: KFold and the split function), 沐风大大的博客, CSDN博客

