https://creative.chat/

1. Calling AI large-model APIs

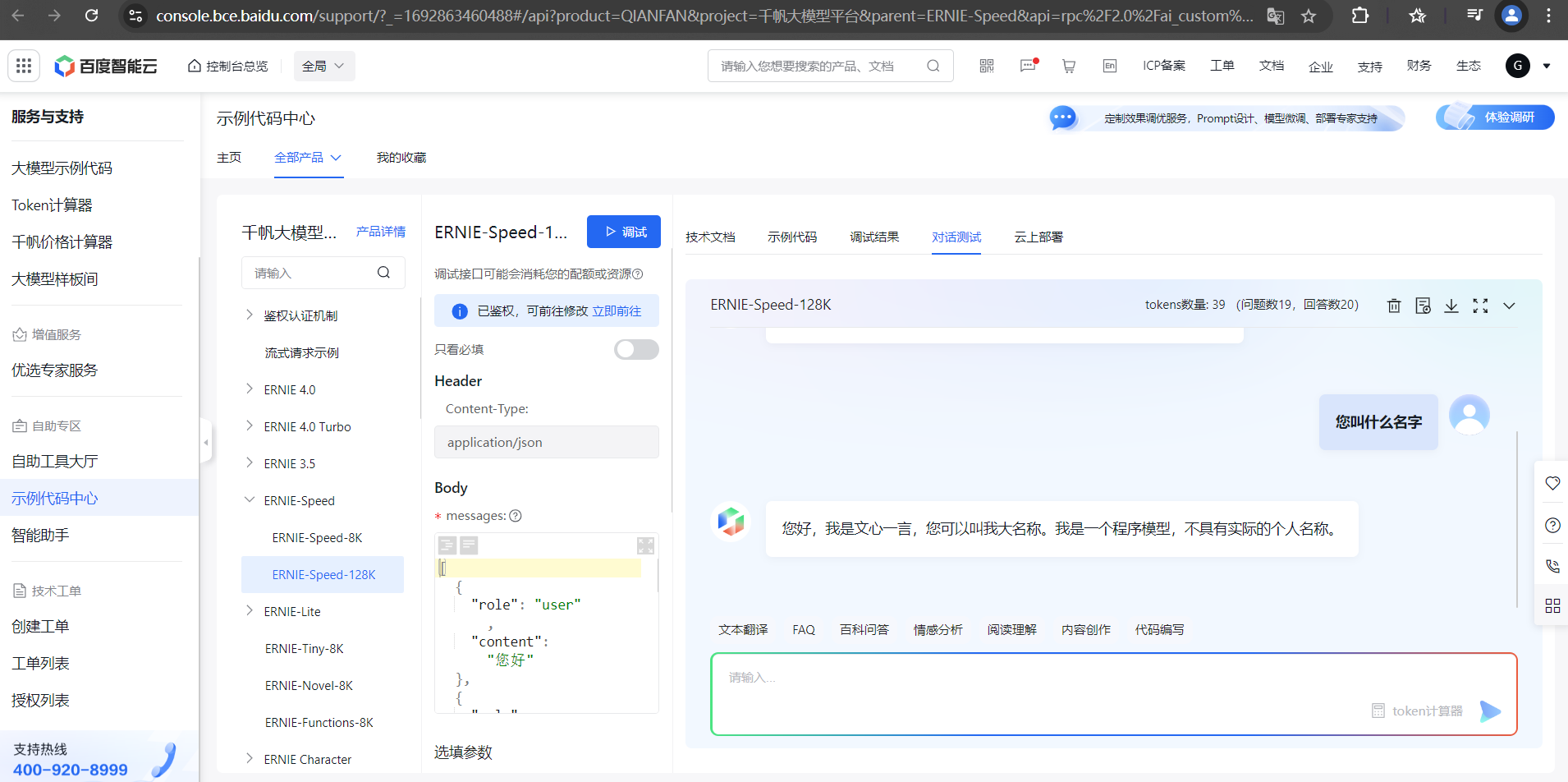

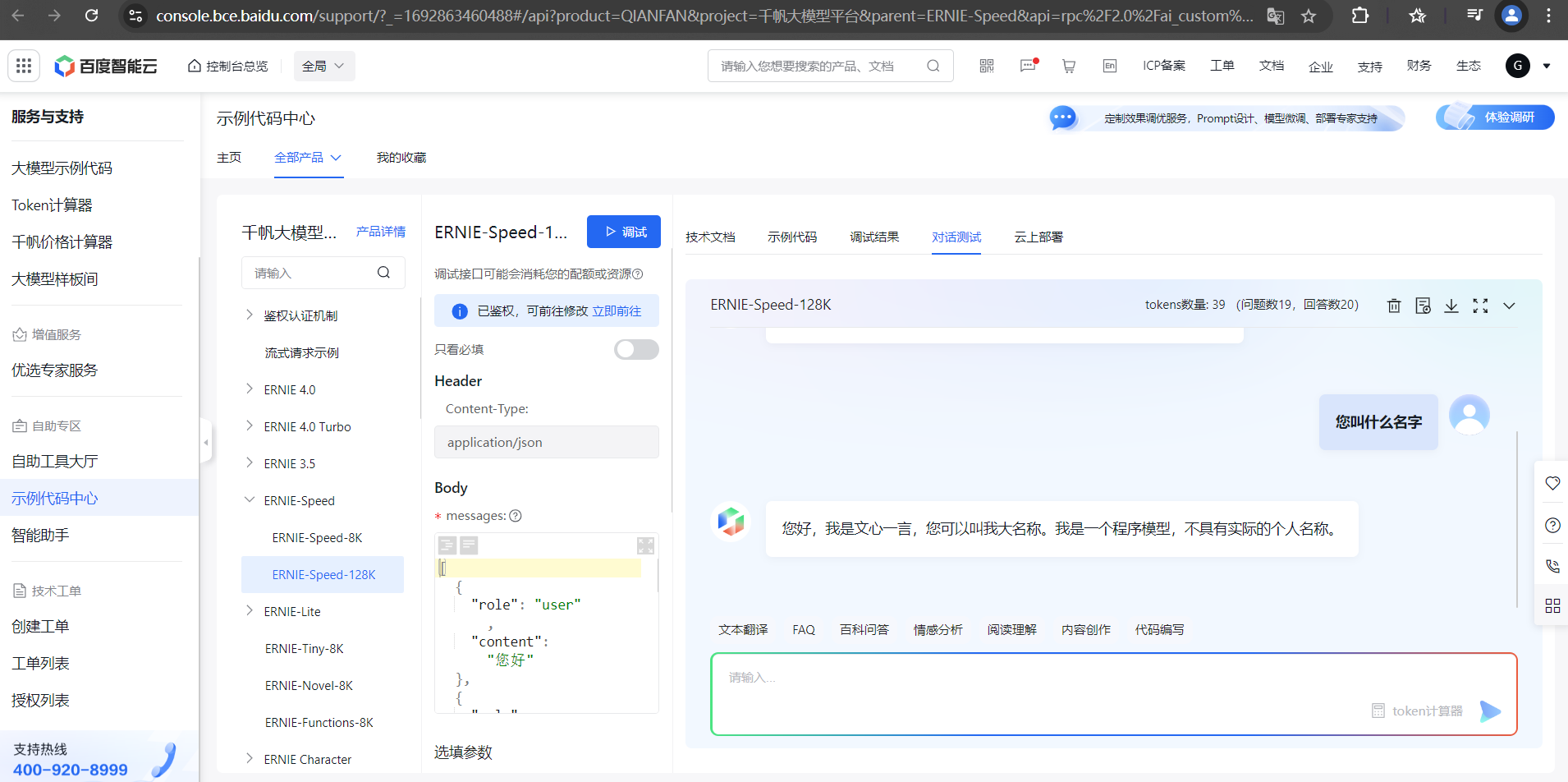

1.1 ERNIE Bot (Wenxin Yiyan)


- Create an application: https://console.bce.baidu.com/qianfan/ais/console/applicationConsole/application

- Sample code: https://console.bce.baidu.com/tools/?_=1692863460488#/api?product=QIANFAN&project=千帆大模型平台&parent=鉴权认证机制&api=oauth%2F2.0%2Ftoken&method=post

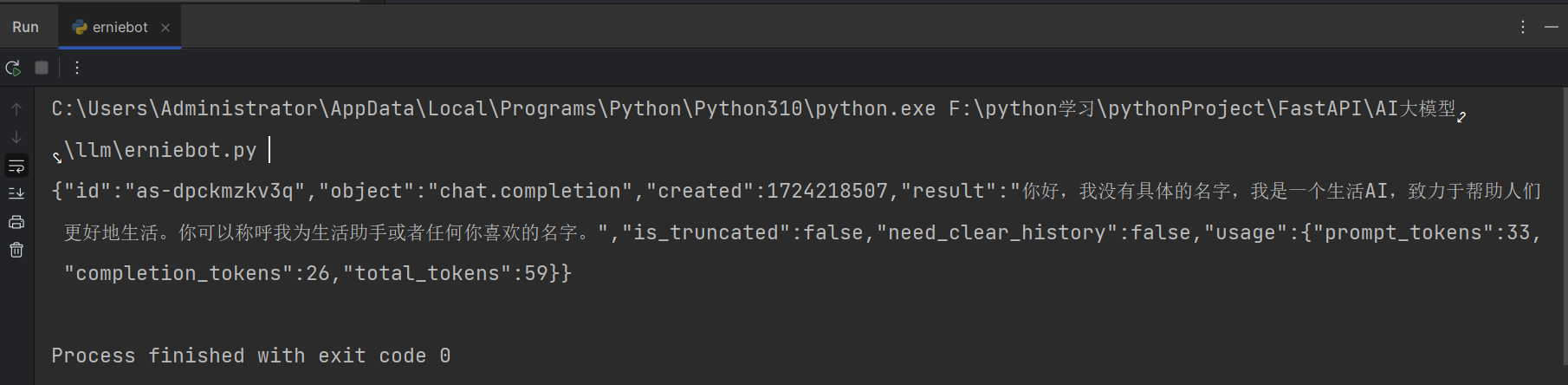

    import requests
    import json

    # Replace the placeholders with the API Key and Secret Key of your own Qianfan application.
    API_KEY = "<your-api-key>"
    SECRET_KEY = "<your-secret-key>"

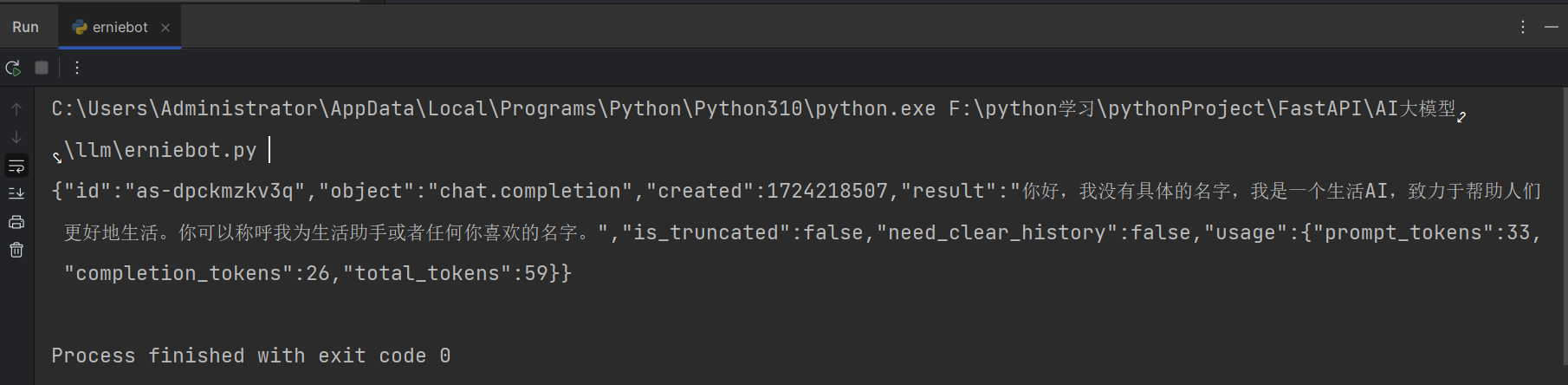

    def main():
        url = "https://aip.baidubce.com/rpc/2.0/ai_custom/v1/wenxinworkshop/chat/ernie-speed-128k?access_token=" + get_access_token()

        payload = json.dumps({
            # System prompt that sets the assistant's persona.
            "system": "You are a lifestyle AI, well versed in daily routines, leisure, work, study, entertainment, diet, exercise, health, psychology, social life, and education.",
            "messages": [
                {
                    "role": "user",
                    "content": "What is your name?"
                },
            ]
        })
        headers = {
            'Content-Type': 'application/json'
        }

        response = requests.request("POST", url, headers=headers, data=payload)
        print(response.text)


    def get_access_token():
        """
        Generate the authentication token (Access Token) from the AK and SK.
        :return: the access_token, or the string "None" if the response contains no token
        """
        url = "https://aip.baidubce.com/oauth/2.0/token"
        params = {"grant_type": "client_credentials", "client_id": API_KEY, "client_secret": SECRET_KEY}
        return str(requests.post(url, params=params).json().get("access_token"))


    if __name__ == '__main__':
        main()
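The script above requests a fresh access token on every call. A minimal sketch of caching the token until shortly before it expires is shown below. `TokenCache` and its parameters are hypothetical names, not part of any Baidu SDK, and the sketch assumes the token endpoint reports the token lifetime in seconds (the standard OAuth `expires_in` field).

```python
import time
from typing import Callable, Optional, Tuple


class TokenCache:
    """Cache an access token and refresh it shortly before it expires."""

    def __init__(self,
                 fetch: Callable[[], Tuple[str, float]],
                 margin: float = 60.0,
                 clock: Callable[[], float] = time.time):
        self._fetch = fetch      # returns (token, lifetime in seconds)
        self._margin = margin    # refresh this many seconds before expiry
        self._clock = clock      # injectable clock, useful for testing
        self._token: Optional[str] = None
        self._expires_at = 0.0

    def get(self) -> str:
        now = self._clock()
        # Fetch on first use, or when the cached token is about to expire.
        if self._token is None or now >= self._expires_at - self._margin:
            token, lifetime = self._fetch()
            self._token = token
            self._expires_at = now + lifetime
        return self._token
```

In the script, `fetch` could be a small function that posts to the token URL and returns the `access_token` and `expires_in` fields of the JSON response (field names assumed), so that `main()` calls `cache.get()` instead of `get_access_token()`.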

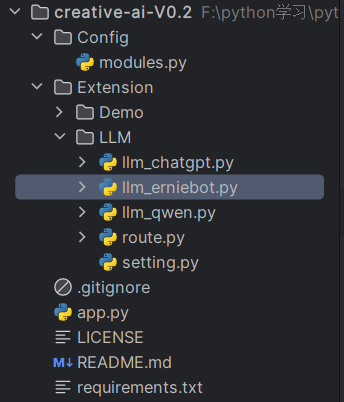

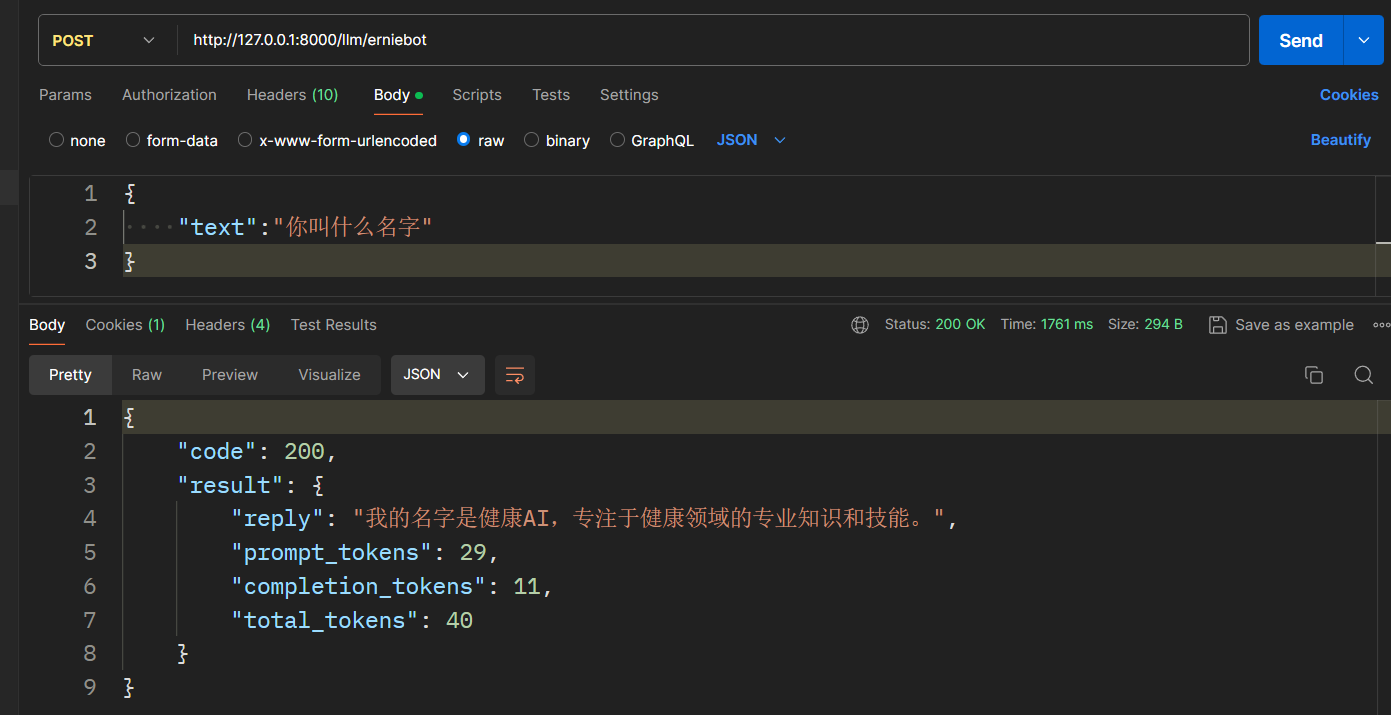

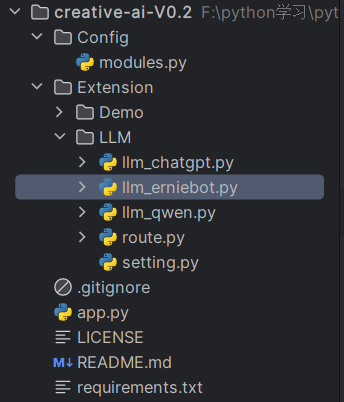

2. Deploying large models with FastAPI

2.1 ERNIE Bot (Wenxin Yiyan)

    from fastapi import FastAPI
    from uvicorn import run
    from Config.modules import modules

    app = FastAPI()

    # Automatically load each module's router
    for module_name, module_path in modules.items():
        module = __import__(module_path, fromlist=['router'])
        app.include_router(module.router)

    if __name__ == '__main__':
        run(app, host='0.0.0.0', port=1888)

    # Config/modules.py (path inferred from `from Config.modules import modules`)
    modules = {
        'Demo': 'Extension.Demo.route',
        'LLM': 'Extension.LLM.route',
    }
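The loader relies on every listed module exposing a `router` attribute. The following sketch, which uses only the standard library, shows the same lookup with a clearer error when a module lacks one. `collect_routers` and the stand-in module `fake_route` are hypothetical names used for illustration; in the real application each router would be a FastAPI `APIRouter` passed to `app.include_router`.

```python
import importlib
import sys
import types


def collect_routers(modules):
    """Import every module path in `modules` and return its `router` attribute.

    Raises a descriptive RuntimeError instead of a bare AttributeError
    when a module does not define `router`.
    """
    routers = {}
    for name, path in modules.items():
        module = importlib.import_module(path)
        router = getattr(module, "router", None)
        if router is None:
            raise RuntimeError(f"module {path!r} ({name}) defines no 'router'")
        routers[name] = router
    return routers


# Stand-in module so the sketch runs without the real project tree.
fake = types.ModuleType("fake_route")
fake.router = object()  # placeholder for a real APIRouter instance
sys.modules["fake_route"] = fake

routers = collect_routers({"Fake": "fake_route"})
```

`importlib.import_module(path)` returns the same leaf module as `__import__(path, fromlist=['router'])`, so the loop in the application could use either form.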

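    # Extension/LLM/setting.py (path inferred from `from Extension.LLM.setting import ...`)
    # Replace the placeholders with your own keys.
    Erniebot_API_KEY = "<your-api-key>"
    Erniebot_SECRET_KEY = "<your-secret-key>"
    Qwen_API_KEY = ""
    ChatGPT_API_KEY = ""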
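Keys written directly into a settings file are easy to leak when the code is shared. One common alternative, sketched here under the assumption that the keys are supplied as environment variables, reads them at import time. The helper `load_key` and the variable names (`ERNIEBOT_API_KEY` and so on) are hypothetical and only mirror the setting names used in this post.

```python
import os


def load_key(name, default=""):
    """Return the key stored in environment variable `name`, or `default`."""
    return os.environ.get(name, default).strip()


# Hypothetical environment variable names, one per setting.
Erniebot_API_KEY = load_key("ERNIEBOT_API_KEY")
Erniebot_SECRET_KEY = load_key("ERNIEBOT_SECRET_KEY")
Qwen_API_KEY = load_key("QWEN_API_KEY")
ChatGPT_API_KEY = load_key("CHATGPT_API_KEY")
```

Modules that import these names from the settings file need no changes, since the names stay the same.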

    import requests
    import json
    from Extension.LLM.setting import Erniebot_API_KEY, Erniebot_SECRET_KEY

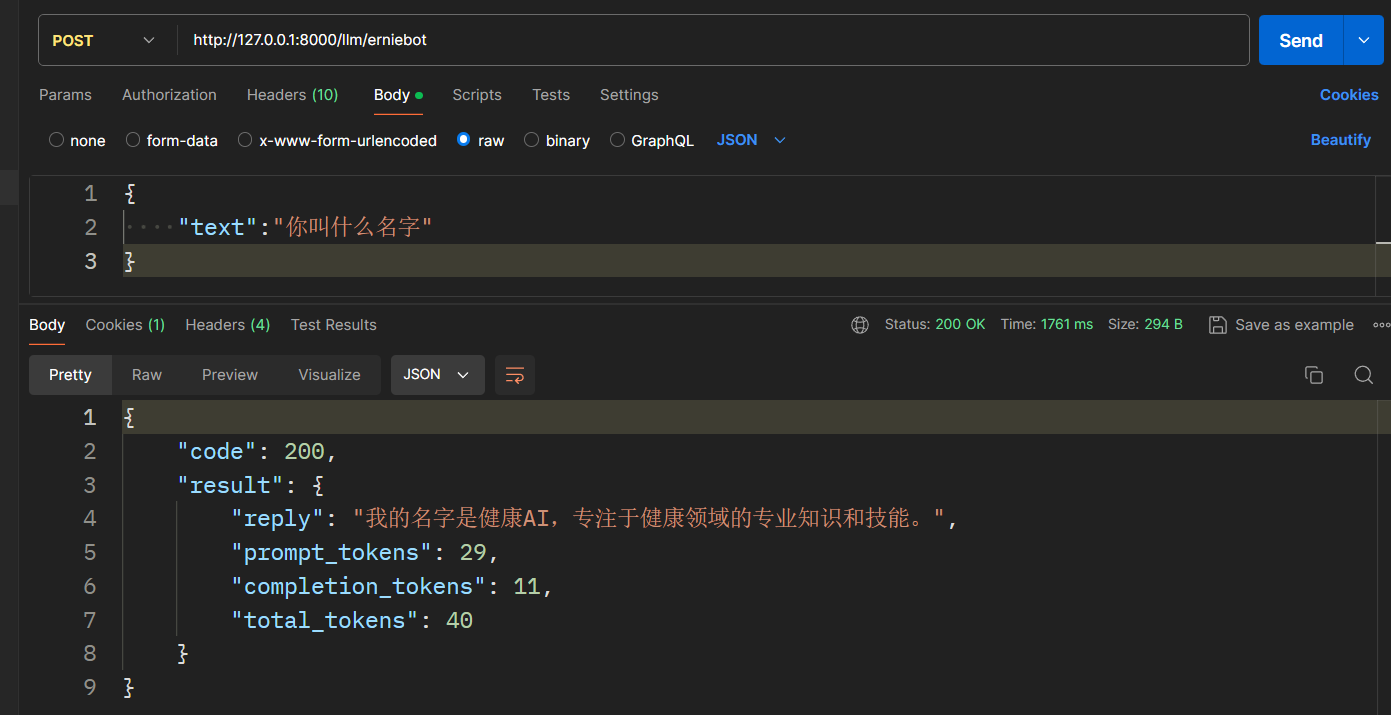

    def erniebot(text, ai_name, ai_role):
        url = "https://aip.baidubce.com/rpc/2.0/ai_custom/v1/wenxinworkshop/chat/ernie-speed-128k?access_token=" + get_access_token()

        payload = json.dumps({
            "system": ai_name + ' ' + ai_role,
            "messages": [
                {
                    "role": "user",
                    "content": text
                }
            ],
            # When False, the AI may use search to find up-to-date information or answer specific queries, giving more accurate and timely results.
            "disable_search": False,
            # When True, the AI tries to include citations (web links, book references, etc.), which helps verify the accuracy and origin of the information.
            "enable_citation": True,
        })
        headers = {
            'Content-Type': 'application/json'
        }

        response = requests.request("POST", url, headers=headers, data=payload)
        data = json.loads(response.text)
        usage = data['usage']
        # Return the reply together with the token usage statistics.
        result = {
            "reply": data['result'],
            "prompt_tokens": usage['prompt_tokens'],
            "completion_tokens": usage['completion_tokens'],
            "total_tokens": usage['total_tokens']
        }
        return result


    def get_access_token():
        """
        Generate the authentication token (Access Token) from the AK and SK.
        :return: the access_token, or the string "None" if the response contains no token
        """
        API_KEY = Erniebot_API_KEY
        SECRET_KEY = Erniebot_SECRET_KEY
        url = "https://aip.baidubce.com/oauth/2.0/token"
        params = {"grant_type": "client_credentials",
                  "client_id": API_KEY, "client_secret": SECRET_KEY}
        return str(requests.post(url, params=params).json().get("access_token"))
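`erniebot()` indexes `data['usage']` and `data['result']` directly, so an error response (for example, an invalid token) surfaces as a `KeyError`. The sketch below separates the parsing step and reports such failures explicitly. It assumes that error payloads carry `error_code` and `error_msg` fields; `ErnieError` and `parse_ernie_response` are hypothetical names introduced here.

```python
class ErnieError(RuntimeError):
    """Raised when the service returns an error payload instead of a reply."""


def parse_ernie_response(data):
    """Turn a decoded JSON response into the result dict returned by erniebot()."""
    # Assumption: failures are reported with `error_code` / `error_msg` fields.
    if "error_code" in data:
        raise ErnieError(f"{data['error_code']}: {data.get('error_msg', '')}")
    usage = data.get("usage", {})
    return {
        "reply": data["result"],
        "prompt_tokens": usage.get("prompt_tokens", 0),
        "completion_tokens": usage.get("completion_tokens", 0),
        "total_tokens": usage.get("total_tokens", 0),
    }
```

Inside `erniebot()`, the block that builds `result` could then be replaced by `return parse_ernie_response(data)`.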

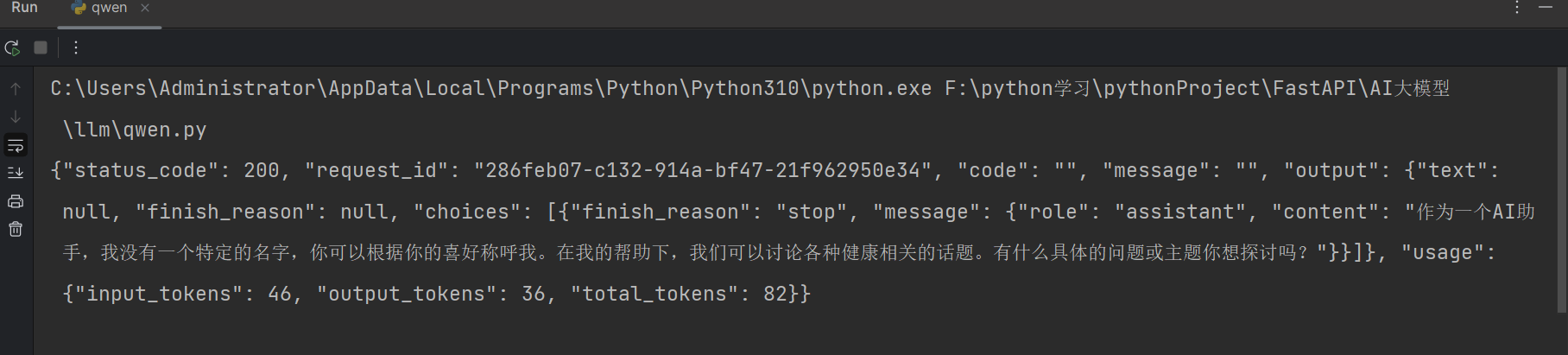

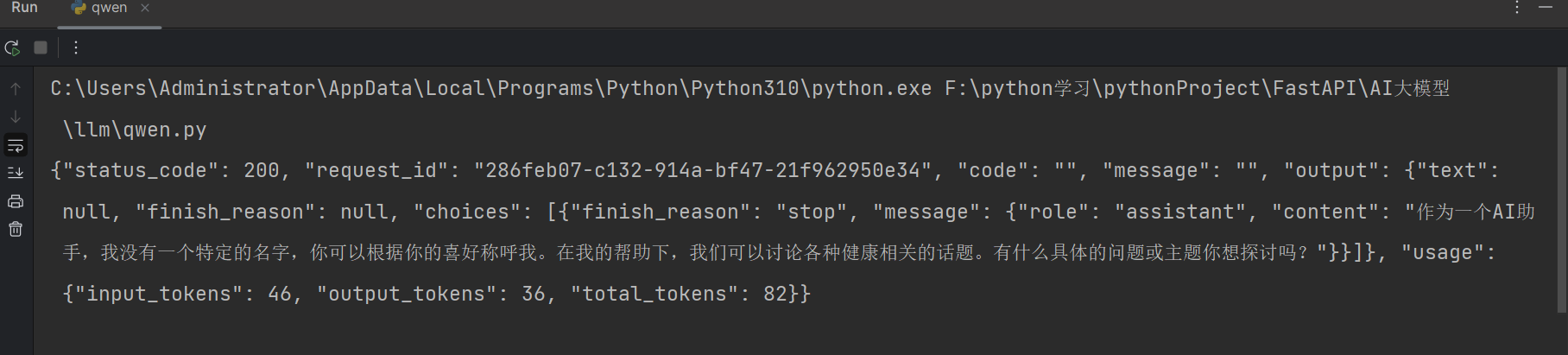

2.2 Tongyi Qianwen (Qwen)

    import random
    from http import HTTPStatus
    import dashscope

    # Replace the placeholder with your own DashScope API key.
    dashscope.api_key = "<your-dashscope-api-key>"


    def call_with_messages():
        messages = [{'role': 'system', 'content': 'You are a health AI. You are a health expert, highly proficient in daily routines, diet, fitness, nutrition, medicine, and other health topics.'},
                    {'role': 'user', 'content': 'What is your name?'}]
        response = dashscope.Generation.call(
            "qwen-turbo",
            messages=messages,
            # set the random seed, optional, default to 1234 if not set
            seed=random.randint(1, 10000),
            # set the result to be "message" format.
            result_format='message',
        )
        if response.status_code == HTTPStatus.OK:
            print(response)
        else:
            print('Request id: %s, Status code: %s, error code: %s, error message: %s' % (
                response.request_id, response.status_code,
                response.code, response.message
            ))


    if __name__ == '__main__':
        call_with_messages()

    import random
    import dashscope
    from Extension.LLM.setting import Qwen_API_KEY

    dashscope.api_key = Qwen_API_KEY


    def qwen(text, ai_name, ai_role):
        messages = [
            {'role': 'system', 'content': ai_name + ' ' + ai_role},
            {'role': 'user', 'content': text}
        ]
        response = dashscope.Generation.call(
            "qwen-turbo",
            messages=messages,
            # set the random seed, optional, default to 1234 if not set
            seed=random.randint(1, 10000),
            # set the result to be "message" format.
            result_format='message',
        )
        usage = response.usage
        data = response.output.choices
        # Same result shape as erniebot(): the reply plus token usage.
        result = {
            "reply": data[0].message.content,
            "prompt_tokens": usage.input_tokens,
            "completion_tokens": usage.output_tokens,
            "total_tokens": usage.total_tokens
        }
        return result
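Because `erniebot()` and `qwen()` take the same arguments and return the same result dict, a caller can choose between them by name. The following is a minimal sketch of such a dispatcher. `make_chat` and `echo_backend` are hypothetical names, and the stub backend stands in for the real functions so that the example runs without network access.

```python
from typing import Callable, Dict

# A backend takes (text, ai_name, ai_role) and returns the result dict.
Backend = Callable[[str, str, str], dict]


def make_chat(backends: Dict[str, Backend]) -> Callable[..., dict]:
    """Build a chat(model, text, ai_name, ai_role) function over named backends."""
    def chat(model: str, text: str, ai_name: str, ai_role: str) -> dict:
        try:
            backend = backends[model]
        except KeyError:
            raise ValueError(
                f"unknown model {model!r}; choose from {sorted(backends)}"
            ) from None
        return backend(text, ai_name, ai_role)
    return chat


# Stub with the same signature and result shape as erniebot() / qwen().
def echo_backend(text, ai_name, ai_role):
    return {"reply": f"{ai_name}: {text}", "prompt_tokens": 0,
            "completion_tokens": 0, "total_tokens": 0}


chat = make_chat({"echo": echo_backend})
```

In the project this could be wired up as `make_chat({"erniebot": erniebot, "qwen": qwen})`, so a route handler only needs the model name from the request.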

2.3 Deploying AI large models on Windows

2.3.1 Deploying Tongyi Qianwen on Windows

2.3.2 Deploying Llama 3 on Windows

2.3.3 Deploying Stable Diffusion on Windows

