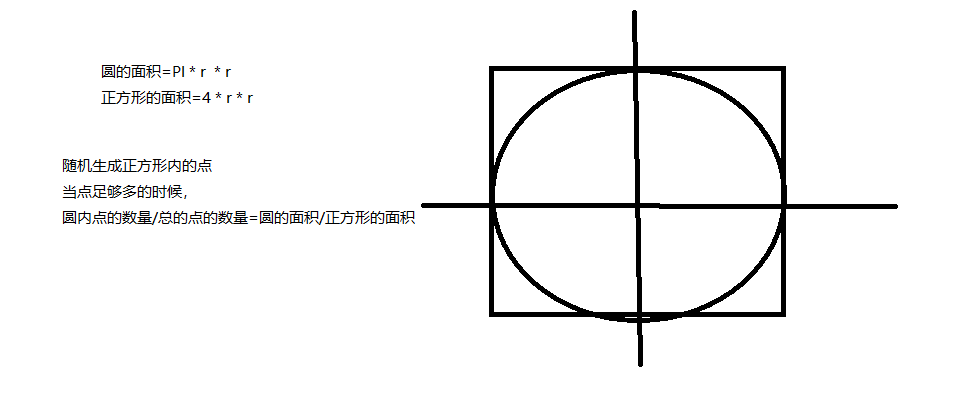

How the Spark test code estimates PI

Schematic diagram


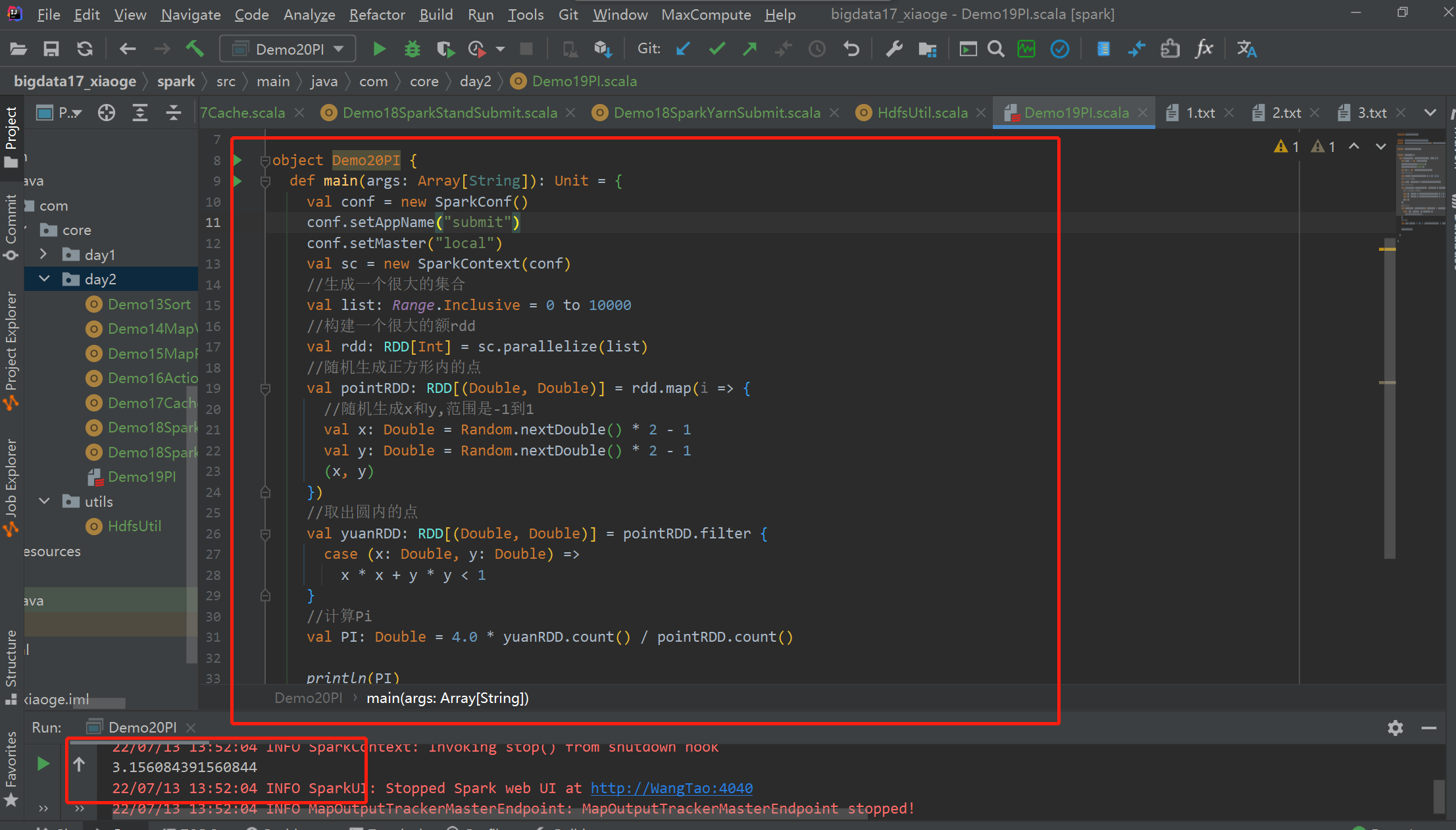
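The idea in the diagram can be written as a short derivation. This is a sketch that assumes the points are drawn uniformly from the square with side 2 (x and y between -1 and 1, as in the code) and that the circle is the unit circle inscribed in it. The symbols $N$ (total number of points) and $N_{\text{in}}$ (points that land inside the circle) are introduced here for illustration and do not appear in the original post.

$$
\frac{N_{\text{in}}}{N} \approx \frac{\text{area of circle}}{\text{area of square}} = \frac{\pi \cdot 1^2}{2 \cdot 2} = \frac{\pi}{4}
\quad\Longrightarrow\quad
\pi \approx 4 \cdot \frac{N_{\text{in}}}{N}
$$

The approximation improves as $N$ grows, which is why the code starts from a large collection.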

Code implementation

package com.core.day2

import org.apache.spark.rdd.RDD

import org.apache.spark.{SparkConf, SparkContext}

import scala.util.Random

object Demo20PI {

def main(args: Array[String]): Unit = {

val conf = new SparkConf()

conf.setAppName("submit")

conf.setMaster("local")

val sc = new SparkContext(conf)

// Generate a large collection

val list: Range.Inclusive = 0 to 10000

// Build a large RDD from the collection

val rdd: RDD[Int] = sc.parallelize(list)

// Randomly generate points inside the square

val pointRDD: RDD[(Double, Double)] = rdd.map(i => {

// Randomly generate x and y, each in the range -1 to 1

val x: Double = Random.nextDouble() * 2 - 1

val y: Double = Random.nextDouble() * 2 - 1

(x, y)

})

// Keep only the points that fall inside the circle

val yuanRDD: RDD[(Double, Double)] = pointRDD.filter {

case (x: Double, y: Double) =>

x * x + y * y < 1

}

// Compute Pi: 4 * (points inside the circle) / (all points)

val PI: Double = 4.0 * yuanRDD.count() / pointRDD.count()

println(PI)

}

}
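To check the arithmetic without starting Spark, the same sampling logic can be sketched in plain Scala. This is only an illustration, not part of the original post: the object name `LocalPiSketch`, the helper `estimatePi`, and the fixed seed are all assumptions introduced here so that repeated runs give the same number.

```scala
import scala.util.Random

object LocalPiSketch {
  // Hypothetical helper (not from the original post): estimates Pi from
  // `samples` random points with x and y each drawn from [-1, 1).
  def estimatePi(samples: Int, seed: Long): Double = {
    // Fixed seed so that repeated calls with the same arguments agree
    val rng = new Random(seed)
    // Count the points that fall strictly inside the unit circle
    val inside = Iterator.fill(samples) {
      val x = rng.nextDouble() * 2 - 1
      val y = rng.nextDouble() * 2 - 1
      x * x + y * y < 1
    }.count(identity)
    // Ratio of points inside the circle to all points, scaled by 4
    4.0 * inside / samples
  }

  def main(args: Array[String]): Unit = {
    println(estimatePi(1000000, 42L))
  }
}
```

One difference from the Spark version is worth noting: there, `pointRDD` is not cached, so `yuanRDD.count()` and `pointRDD.count()` each trigger their own computation with freshly generated random points. The estimate is still valid because the denominator is just the number of elements, but calling `pointRDD.cache()` before the two counts would avoid generating the points twice.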


